Author's personal copy

Computer Methods and Programs in Biomedicine 112 (2013) 135–145

journal homepage: www.intl.elsevierhealth.com/journals/cmpb

Privacy-preserving Kruskal–Wallis test

Suxin Guo a,∗, Sheng Zhong b, Aidong Zhang a

a Department of Computer Science and Engineering, SUNY at Buffalo, United States

b State Key Laboratory for Novel Software Technology, Nanjing University, China

Article info

Article history:
Received 4 January 2013
Received in revised form 17 May 2013
Accepted 28 May 2013

Keywords:
Data security
Statistical test
Kruskal–Wallis test

Abstract

Statistical tests are powerful tools for data analysis. The Kruskal–Wallis test is a non-parametric statistical test that evaluates whether two or more samples are drawn from the same distribution. It is commonly used in various areas. But sometimes, the use of the method is impeded by privacy issues raised in fields such as biomedical research and clinical data analysis because of the confidential information contained in the data. In this work, we give a privacy-preserving solution for the Kruskal–Wallis test which enables two or more parties to coordinately perform the test on the union of their data without compromising their data privacy. To the best of our knowledge, this is the first work that solves the privacy issues in the use of the Kruskal–Wallis test on distributed data.

© 2013 Elsevier Ireland Ltd. All rights reserved.

1. Introduction

Statistical hypothesis tests are very widely used for data

analysis. Some popular statistical tests include t-test [1],

ANOVA [2], Kruskal–Wallis test [3], and Wilcoxon rank sum

test [4]. Although these four are different tests, they serve

the same goal, which is to find out whether the samples

come from the same population. The t-test and ANOVA are

parametric tests and assume the normal distribution of data.

The non-parametric equivalents of these two tests are the Wilcoxon rank sum test, which is also known as the Mann–Whitney U test [5], and the Kruskal–Wallis test, respectively. They do not assume the data to be normally distributed. The t-test can only deal with the comparison between two samples, and the ANOVA extends it to multiple samples. Similarly, the Kruskal–Wallis test is a generalization of the Wilcoxon rank sum test from two samples to multiple samples.

As stated above, the four tests do similar things under different assumptions. The non-parametric tests perform better when the data is not normally distributed, and are especially suitable when the data size is small (<25 per sample group) [6]. Although the Kruskal–Wallis test is a helpful tool in many areas, its use is sometimes impeded by privacy concerns due to the confidential information in the data, especially in clinical and biomedical research.

For example, suppose some hospitals conduct a study and measure the INR (International Normalized Ratio) values of their patients, so that each hospital holds a set of INR values. The hospitals want to perform the Kruskal–Wallis test to check whether their values follow the same trend. In this case, the set of INR values of each hospital is treated as a sample. To conduct the Kruskal–Wallis test, all samples must be known, which means the hospitals have to share their data with each other. The problem is that it might be improper for the hospitals to share their samples because the data contains private information about patients. Currently there is no method that enables the Kruskal–Wallis test to be conducted on such distributed data under privacy concerns.

∗ Corresponding author. Tel.: +1 7165647706.

0169-2607/$ – see front matter © 2013 Elsevier Ireland Ltd. All rights reserved.

http://dx.doi.org/10.1016/j.cmpb.2013.05.023

To solve this problem, we propose a privacy-preserving algorithm that allows the Kruskal–Wallis test to be applied to samples distributed across different parties without revealing each party’s private information to the others. Due to the similarity among non-parametric tests, our method can also help the design of privacy-preserving solutions for other non-parametric tests. For example, the Wilcoxon rank sum test and the Kruskal–Wallis test are used for two samples and for two or more samples, respectively, and are essentially the same in the two-sample case [3]. So our algorithm also solves the privacy issue of the Wilcoxon rank sum test to some extent.

The rest of this paper is organized as follows: In Section 2,

we present the related work. Section 3 provides the technical preliminaries including the background knowledge about

the Kruskal–Wallis test and the cryptographic tools we need.

We propose the basic algorithm and the complete algorithm

in Sections 4 and 5, respectively. The basic algorithm shows

the procedure of conducting the Kruskal–Wallis test securely

when there is no tie in the data. The complete algorithm

follows the basic algorithm and takes the existence of ties

into consideration. In Section 6, we present the experimental

results and finally, Section 7 concludes the paper.

2. Related work

In recent years, due to the increasing awareness of privacy problems, many data analysis methods have been enhanced to be privacy-preserving, including many popular data mining and machine learning algorithms. Most of these approaches can be divided into two categories. Approaches in the first category protect data privacy with data perturbation techniques, such as randomization [7,8], rotation [9] and resampling [10]. Since the original data is changed, these

approaches usually lose some accuracy. The methods in the

second category are generally based on the Secure Multiparty

Computation (SMC) and apply cryptographic techniques to

protect data during the computations [11,12]. Such methods

usually cause no accuracy loss but have higher computational

cost. In our case, since the Kruskal–Wallis test is often used on

small sized data, we choose the second way, which is to protect privacy with cryptographic tools. It enables us to achieve

higher accuracy with an affordable computational cost.

In the cryptographic category, some SMC tools are very

commonly used, such as secure sum [13], secure comparison

[14,15], secure division [16], secure scalar product [13,16,17],

secure matrix multiplication [18–20], and secure set operations

[13].

Many data mining and machine learning algorithms have been extended with privacy solutions, such as decision tree classification [11,21], k-means clustering [22,23], and gradient descent methods [24], but only a few works study the privacy issues in statistical tests. Ref. [25] gives a privacy-preserving algorithm to compare survival curves with the logrank test. Ref. [26] presents a privacy-preserving solution to perform the permutation test securely on distributed data. No existing work studies the privacy issues of the Kruskal–Wallis test on distributed data. To the best of our knowledge, our work is the first one.

3. Technical preliminaries

3.1. The Kruskal–Wallis test

We first review the Kruskal–Wallis test in this section. The

test as proposed by Kruskal and Wallis [3] evaluates whether

two or more samples are from the same distribution. The null

hypothesis is that all the samples come from the same distribution.

Suppose we have k samples, each containing a set of values.

To perform the Kruskal–Wallis test, we need to first rank all the

values together without considering which sample the values

belong to, then compute the sum of all the ranks of values

within every sample, so that each sample has its sum of ranks.

If there is no tie in all the values, the test statistic is:

$$H = \frac{12}{N(N+1)} \sum_{i=1}^{k} \frac{R_i^2}{n_i} - 3(N+1), \qquad (1)$$

where $N$ is the total number of values in all samples, $n_i$ is the number of values contained in the $i$th sample, and $R_i$ is the sum of ranks in the $i$th sample.

After the calculation of $H$, we compare it to a value $\chi^2_{\alpha:k-1}$, which can be found in a table of the chi-squared probability distribution with $k - 1$ as the degrees of freedom and $\alpha$ as the desired significance. If $H \geq \chi^2_{\alpha:k-1}$, the null hypothesis is rejected. Otherwise, it is not rejected.

If there are ties in the values, the calculation of the test

statistic should be changed slightly. First, when ranking all

the values, the ranks of each group of tied values are given

as the average of the ranks that these tied values would have

received without ties. For example, suppose we have values {1,

3, 3, 5} with one tie of two “3”s. Without considering the tie,

their ranks should be 1, 2, 3, 4, respectively. After we change

the ranks of the tied values to the average of them, their ranks

become 1, 2.5, 2.5, 4. Then we can compute H with these new

ranks.

Besides the adjustment of ranks, we also need to divide H

by:

$$C = 1 - \frac{\sum_{i=1}^{g} (t_i^3 - t_i)}{N^3 - N}, \qquad (2)$$

where $g$ is the number of groups of tied values, and $t_i$ is the number of tied values in the $i$th group. For the above example {1, 3, 3, 5}, we have only one group of two tied values, so $g = 1$ and $t_1 = 2$, which gives $C = 1 - (2^3 - 2)/(4^3 - 4) = 0.9$.

To sum up for the case with existence of ties, we need to

adjust the ranks of tied values, and the test statistic is:

$$H_c = \frac{H}{C}. \qquad (3)$$

In fact, Eq. (3) is the general form, which holds whether or not there are ties. If there is no tie, $C = 1$ and thus $H_c = H$.
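The plaintext computation described in this section can be sketched in a few lines. This is only an illustration of Eqs. (1)–(3) on data held in one place, with no privacy protection; the function name `kruskal_wallis` and the use of exact fractions are choices made for this sketch, not part of the original method.

```python
from fractions import Fraction

def kruskal_wallis(samples):
    """Return (H, C, Hc) as exact fractions for a list of samples.

    Plaintext illustration only. If every value is tied, C is 0 and the
    final division raises ZeroDivisionError.
    """
    pooled = sorted(v for s in samples for v in s)
    n_total = len(pooled)
    avg_rank = {}    # distinct value -> average rank of its tie group
    tie_sizes = []   # t_i for every group of equal values (1 if untied)
    i = 0
    while i < n_total:
        j = i
        while j < n_total and pooled[j] == pooled[i]:
            j += 1
        # Equal values occupy ranks i+1 .. j, so their average is (i+1+j)/2.
        avg_rank[pooled[i]] = Fraction(i + 1 + j, 2)
        tie_sizes.append(j - i)
        i = j
    # Eq. (1): H from the squared rank sums of the samples.
    h = Fraction(12, n_total * (n_total + 1)) * sum(
        sum(avg_rank[v] for v in s) ** 2 / len(s) for s in samples
    ) - 3 * (n_total + 1)
    # Eq. (2): correction factor for ties (untied groups contribute 0).
    c = 1 - Fraction(sum(t**3 - t for t in tie_sizes), n_total**3 - n_total)
    # Eq. (3): corrected statistic.
    return h, c, h / c
```

For the example {1, 3, 3, 5} split into the hypothetical samples {1, 3} and {3, 5}, the sketch gives H = 27/20, C = 9/10 and Hc = 3/2.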

3.2. Privacy protection of the Kruskal–Wallis test

Like the hospital example mentioned in the introduction, we

assume that each party has a sample and they hope to conduct

a Kruskal–Wallis test jointly to find out whether their samples

follow the same distribution without revealing their data to

others. Here our solution is based on the semi-honest model,

which is widely used in the cryptographic category of privacy-preserving methods [27,11,28,29,24,13,16,30]. In this model, all

parties strictly follow the protocol, but can attempt to derive

the private information of other parties with the intermediate

results they get during the execution of protocols.

3.3. Cryptographic tools

3.3.1. Homomorphic cryptographic scheme

An additive homomorphic asymmetric cryptographic system

is used to encrypt and decrypt the data in our work. A cryptographic scheme that encrypts an integer $x$ as $E(x)$ is additive homomorphic if there are operators $\oplus$ and $\otimes$ such that for any two integers $x_1$, $x_2$ and a constant $a$, we have

$$E(x_1 + x_2) = E(x_1) \oplus E(x_2),$$

$$E(a \times x_1) = a \otimes E(x_1).$$

This means, with an additive homomorphic cryptographic

system, we can compute the encrypted sum of integers

directly from their encryptions. We do not need to decrypt the

integers and compute the sum.

In an asymmetric cryptographic system, we have a pair of

keys: a public key for encryption and a private key for decryption.

3.3.2. ElGamal cryptographic system

There are several additive homomorphic cryptographic schemes [30,31]. In this work, we apply a variant of the ElGamal scheme [32], which is semantically secure under the Diffie–Hellman assumption [33].

The ElGamal cryptographic system is a multiplicative homomorphic asymmetric cryptographic system. With this system, the encryption of a cleartext $m$ is the pair

$$E(m) = (m \times y^r, g^r),$$

where $g$ is a generator, $x$ is the private key, $y = g^x$ is the public key, and $r$ is a random integer.

We call the first part of the pair $c_1$ and the second part $c_2$, so $c_1 = m \times y^r$ and $c_2 = g^r$. To decrypt $E(m)$, we compute $s = c_2^x = g^{rx} = g^{xr} = y^r$. Then we compute $c_1 \times s^{-1} = m \times y^r \times y^{-r} = m$ and obtain the cleartext $m$.

In the variant of the ElGamal scheme we use, the cleartext $m$ is encrypted as

$$E(m) = (g^m \times y^r, g^r).$$

The only difference between the original ElGamal scheme and this variant is that $m$ in the first part is changed to $g^m$. With this change, the variant is an additive homomorphic cryptosystem such that

$$E(x_1 + x_2) = E(x_1) \times E(x_2),$$

$$E(a \times x_1) = E(x_1)^a.$$

To decrypt $E(m)$, we follow the same procedure as in the original ElGamal algorithm. But this time, after the above calculations, we obtain $g^m$ instead of $m$. To get $m$ from $g^m$, we need to perform an exhaustive search, which is to try every possible $m$ and look for the one that matches $g^m$. Please note that this exhaustive search is limited to a small range of possible plaintexts only, so the time needed is reasonable.
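The variant described above can be sketched as follows. The group parameters `P`, `Q`, `G` are toy values chosen for this illustration (far too small for real use), the function names are hypothetical, and the modular inverse via `pow(s, -1, P)` assumes Python 3.8 or later.

```python
import random

P = 2039   # toy safe prime, P = 2*Q + 1 (illustration only, not secure)
Q = 1019   # prime order of the subgroup generated by G
G = 4      # generator of the order-Q subgroup

def keygen():
    x = random.randrange(1, Q)     # private key x
    return x, pow(G, x, P)         # public key y = g^x

def encrypt(m, y):
    r = random.randrange(1, Q)     # fresh randomness for every encryption
    return (pow(G, m, P) * pow(y, r, P) % P, pow(G, r, P))   # (g^m * y^r, g^r)

def add(c, d):
    """Component-wise product of E(x1) and E(x2), which encrypts x1 + x2."""
    return (c[0] * d[0] % P, c[1] * d[1] % P)

def scale(c, a):
    """Component-wise power E(x1)^a, which encrypts a * x1."""
    return (pow(c[0], a, P), pow(c[1], a, P))

def decrypt(c, x, max_m=Q):
    s = pow(c[1], x, P)                 # s = c2^x = y^r
    gm = c[0] * pow(s, -1, P) % P       # c1 * s^-1 = g^m
    for m in range(max_m):              # exhaustive search over small plaintexts
        if pow(G, m, P) == gm:
            return m
    raise ValueError("plaintext outside search range")
```

With these toy parameters a plaintext is only recoverable modulo `Q`, which mirrors the remark that the exhaustive search is practical only for a small plaintext range.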

In our work, the private key is shared by all the parties and

no party knows the complete private key. The parties need

to coordinate with each other to do the decryptions and the

ciphertexts can be exposed to any party, because no party can

decrypt them without the help of others.

The private key is shared in this way: Suppose there are two parties, A and B. A holds one part of the private key, $x_1$, and B holds the other part, $x_2$, such that $x_1 + x_2 = x$, where $x$ is the complete private key. In the decryption, we need to compute $s = c_2^x = c_2^{x_1 + x_2} = c_2^{x_1} \times c_2^{x_2}$. Party A computes $s_1 = c_2^{x_1}$ and party B computes $s_2 = c_2^{x_2}$, so $s = s_1 \times s_2$. We need to compute $c_1 \times s^{-1} = c_1 \times (s_1 \times s_2)^{-1} = c_1 \times s_1^{-1} \times s_2^{-1}$. Party A computes $c_1 \times s_1^{-1}$ and sends it to party B. Then party B computes $c_1 \times s_1^{-1} \times s_2^{-1} = c_1 \times s^{-1} = g^m$ and sends it to A. In this way both parties can get the decrypted result. Since party B performs its decryption step last, it is the first to obtain the final result. If it does not send the result to A, the decrypted result is known only to party B. The order of the parties can be changed, so if the result should be known to only one party, that party should perform its decryption step last.
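A minimal simulation of this two-step decryption, run in a single process, might look as follows. The toy group parameters and all function names are assumptions made for the sketch. How the joint public key is formed is not described in the text; the sketch assumes each party publishes $g^{x_i}$ and the product is used as $y$.

```python
import random

P, Q, G = 2039, 1019, 4   # toy group: G has prime order Q modulo P (not secure)

def make_shares():
    """Each party picks its own share; y = g^(x1 + x2) is the joint public key."""
    x1 = random.randrange(1, Q)   # held by party A only
    x2 = random.randrange(1, Q)   # held by party B only
    y = pow(G, x1, P) * pow(G, x2, P) % P   # assumed key setup, see lead-in
    return x1, x2, y

def encrypt(m, y):
    r = random.randrange(1, Q)
    return (pow(G, m, P) * pow(y, r, P) % P, pow(G, r, P))

def strip_share(value, c2, share):
    """Multiply by (c2^share)^-1, removing one party's part of y^r."""
    return value * pow(pow(c2, share, P), -1, P) % P

def search_plaintext(gm, limit=Q):
    """Exhaustive search for m with g^m == gm."""
    for m in range(limit):
        if pow(G, m, P) == gm:
            return m
    raise ValueError("plaintext outside search range")

def joint_decrypt(ciphertext, first_share, last_share):
    c1, c2 = ciphertext
    partial = strip_share(c1, c2, first_share)   # first party: c1 * s1^-1, sent on
    gm = strip_share(partial, c2, last_share)    # last party: c1 * s^-1 = g^m
    return search_plaintext(gm)                  # the last party learns m first
```

Swapping the two share arguments changes which party acts last and therefore which party sees the plaintext first, matching the remark about the order of the parties.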

3.3.3. Secure comparison

We apply the secure comparison protocol proposed in [15] to compare two values from different parties securely. The inputs of this algorithm are two integers $a$ and $b$ which are from different parties. The output is an encryption of 1 if $a > b$, or an encryption of 0 otherwise.

The basic idea of the secure comparison algorithm is as

follows.

Let the binary representations of $a$ and $b$ be $a_l, \ldots, a_1$ and $b_l, \ldots, b_1$, where $a_1$ and $b_1$ are the least significant bits. If $a > b$, there is a “pivot bit” $i$ such that $b_i - a_i + 1 = 0$ and $a_j \text{ XOR } b_j = a_j + b_j - 2 a_j b_j = 0$ for every $i < j \leq l$. This method applies homomorphic encryption to check whether the pivot bit exists.

This method can find out whether $a > b$, but it cannot find out whether $a \geq b$ directly. So when we want to know whether $a \geq b$, we compare $2a + 1$ and $2b$ instead of $a$ and $b$. If $2a + 1 > 2b$, since both $a$ and $b$ are integers, we can derive that $a \geq b$.
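The pivot-bit condition and the $2a + 1$ versus $2b$ trick can be checked on plaintext integers as below. This sketch only illustrates the arithmetic; it is not the protocol of [15], which evaluates these expressions on encrypted bits. The function names and the default bit length are assumptions for the illustration, and inputs are assumed to be non-negative integers below $2^{\text{bits}}$.

```python
def greater_than(a, b, bits=16):
    """Return 1 if a > b, else 0, by searching for a pivot bit."""
    for i in range(bits):
        ai, bi = (a >> i) & 1, (b >> i) & 1
        # Pivot condition: b_i - a_i + 1 == 0 holds only for a_i = 1, b_i = 0.
        if bi - ai + 1 != 0:
            continue
        # Every more significant bit must agree: a_j + b_j - 2*a_j*b_j == 0.
        higher_xor = sum(
            ((a >> j) & 1) + ((b >> j) & 1) - 2 * ((a >> j) & 1) * ((b >> j) & 1)
            for j in range(i + 1, bits)
        )
        if higher_xor == 0:
            return 1
    return 0

def greater_or_equal(a, b, bits=16):
    # For integers, a >= b is equivalent to 2a + 1 > 2b; doubling needs one more bit.
    return greater_than(2 * a + 1, 2 * b, bits + 1)
```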

4. The basic algorithm of privacy-preserving Kruskal–Wallis test

In this part, we present the basic algorithm for computing the

H statistic of the Kruskal–Wallis test securely without considering the existence of ties. The complete algorithm that also

deals with ties will be discussed in the next section. To make

the presentation clear, we first give the algorithm for performing the test within two parties, then extend it to the multiparty

case.

Suppose there are two parties, A and B. Party A has sample

S1 which contains n1 values, and party B has sample S2 that

contains n2 values. The total number of values N = n1 + n2 . The

basic structure of the algorithm goes as follows:

1. For each value in each party, count how many values in its

own party (including itself) are smaller than or equal to it.

Encrypt these counts.

2. For each value in each party, compare it with all the values

in the other party using the secure comparison algorithm.

Then by adding the comparison results up, count how

many values in the other party are smaller than or equal

to it. Since the results of the secure comparison algorithm

are in cipher text, these counts are also in cipher text.

3. For each value in each party, add the above two counts

securely so we can get the total number of values in both

parties that are smaller than or equal to it, which is the

rank of it in cipher text. Then for each party, add all the

encrypted ranks of its values and this is the encrypted rank

sum of this party. Call the rank sums of the two parties R1

and R2 , respectively.

4. With the encrypted rank sums of both parties, compute the H statistic with Eq. (1). Here comes a problem: to calculate H, we need the squared rank sums of both parties, $R_1^2$ and $R_2^2$. Since we only have the encrypted rank sums of the two parties, $E(R_1)$ and $E(R_2)$, we have to compute $E(R_1^2)$ and $E(R_2^2)$ from $E(R_1)$ and $E(R_2)$. This is not easy because we are using an additive homomorphic system, which does not support the direct multiplication of two encrypted integers. So we need to develop an algorithm to solve it.

Let us explain each step in detail.

4.1. Secure computation of the rank sums

To compute the rank of one value, we just need to count how

many values in both parties are smaller than or equal to it. For

example, with values {5, 6, 7}, the rank of value 5 is 1, because

only 1 value is smaller than or equal to it, which is itself (5 ≤ 5).

The rank of 6 is 2 since there are 2 values smaller than or equal

to it (5 ≤ 6 and 6 ≤ 6). Similarly, the rank of 7 is 3.

For each value in each party, to count how many values are

smaller than or equal to it in its own party is quite simple.

We compare it with all values in its party, which can be easily

done. But to count the number of smaller or equal values in

the other party is not that straightforward. We also need to

compare the value with all values in the other party, and the

comparisons should be conducted with the secure comparison algorithm.

Suppose the values in party A are $a_1, a_2, \ldots, a_{n_1}$, and the values in party B are $b_1, b_2, \ldots, b_{n_2}$. For each value $a_i$ ($i = 1, 2, \ldots, n_1$), we need to compare it with every value in party B with the secure comparison protocol. After these $n_2$ secure comparisons, we have $n_2$ results, and each of them is an encryption of 0 or 1 ($E(0)$ or $E(1)$). For each value $b_j$ ($j = 1, 2, \ldots, n_2$), the result of the comparison between $a_i$ and $b_j$ is $E(1)$ if $a_i \geq b_j$ and $E(0)$ otherwise. Since the results are in cipher text, no party knows what they are. The sum of the $n_2$ results is the encrypted number of values in party B that are smaller than or equal to $a_i$. We call it $E(R_B(a_i))$.

The number of values that are smaller than or equal to $a_i$ in party A can be easily computed. It is named $R_A(a_i)$. We encrypt it and get $E(R_A(a_i))$. The sum of $R_A(a_i)$ and $R_B(a_i)$ is the rank of $a_i$, which is $R(a_i)$. The encryption of this rank, $E(R(a_i))$, can be computed from $E(R_A(a_i))$ and $E(R_B(a_i))$ with the additive homomorphic system that we utilize.

In this way, we can get the encryptions of the ranks of all values from both parties:

$$E(R(a_1)), E(R(a_2)), \ldots, E(R(a_{n_1})),$$

$$E(R(b_1)), E(R(b_2)), \ldots, E(R(b_{n_2})).$$

Then $E(R_1)$ and $E(R_2)$, which are the encryptions of the rank sums of parties A and B, respectively, can be computed from them because $R_1 = R(a_1) + R(a_2) + \cdots + R(a_{n_1})$ and $R_2 = R(b_1) + R(b_2) + \cdots + R(b_{n_2})$.
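The counting logic of this subsection can be sketched on plaintext data as follows. In the actual protocol the cross-party counts come from secure comparisons and stay encrypted; here everything is computed in the clear purely to show the arithmetic. The function name `rank_sums` is a hypothetical choice, and the data is assumed to contain no ties.

```python
def rank_sums(sample_a, sample_b):
    """Rank sums (R1, R2) obtained by counting smaller-or-equal values."""
    def rank(v, own, other):
        own_count = sum(1 for u in own if u <= v)      # local count, e.g. R_A(a_i)
        other_count = sum(1 for u in other if u <= v)  # cross-party count, e.g. R_B(a_i)
        return own_count + other_count                 # R(a_i)
    r1 = sum(rank(a, sample_a, sample_b) for a in sample_a)
    r2 = sum(rank(b, sample_b, sample_a) for b in sample_b)
    return r1, r2
```

For the hypothetical samples {1, 2, 4} and {3, 5, 6}, this returns the rank sums 7 and 14, which add up to N(N + 1)/2 = 21 as expected.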

4.2. Secure computation of the squared rank sums

We need to compute $E(R_1^2)$ and $E(R_2^2)$ from $E(R_1)$ and $E(R_2)$. Since the additive homomorphic cryptosystem does not support the direct multiplication of two encrypted integers, here we present an algorithm to solve it.

To compute $E(ab)$ from $E(a)$ and $E(b)$ that are known to both parties, first we need to make one of the integers additively shared by the two parties. For example, we make $a$ additively shared by the two parties such that party A holds an integer $a_A$ and party B holds an integer $a_B$ with $a_A + a_B = a$. $a_A$ and $a_B$ can be obtained from $E(a)$ in this way: Party A randomly generates an integer $a_A$ and computes $E(a_A)$. Then $E(a - a_A) = E(a_B)$ can be computed from $E(a)$ and $E(a_A)$ by party A. A sends it to party B and the two parties coordinate with each other to decrypt $E(a_B)$. During the decryption, we make sure that the decryption result $a_B$ is only known to party B. This can be achieved with the cryptographic system that we use, as explained in Section 3.

After A gets $a_A$ and B gets $a_B$, the two parties A and B can compute $E(a_A \times b)$ and $E(a_B \times b)$, respectively. This can be done with the additive homomorphic system from $a_A$, $a_B$ and $E(b)$ because $a_A$ and $a_B$ are both integers in plaintext.

What we want is $E(ab) = E((a_A + a_B) \times b) = E(a_A \times b + a_B \times b)$. Since $E(a_A \times b)$ is held by party A and $E(a_B \times b)$ is held by party B, the two parties should exchange their values so that both of them can compute the final result $E(ab)$. But exchanging the values directly may cause privacy loss. For example, if party A gives $E(a_A \times b)$ to party B, since $E(a_A \times b) = E(b)^{a_A}$ with the variant of the ElGamal system we use, and $E(b)$ is known to party B, party B can derive some information about $a_A$ from $E(a_A \times b)$. So before the two parties calculate $E(a_A \times b)$ and $E(a_B \times b)$ and exchange their values, they rerandomize their copies of $E(b)$. With the rerandomizations, the random numbers $r$ that are used in the encryptions are changed, so the encryptions are different from the original ones. To make the presentation clear, we call the rerandomized copies of $E(b)$ held by party A and party B $E'(b)$ and $E''(b)$, respectively. Then parties A and B can calculate $E'(a_A \times b) = E'(b)^{a_A}$ and $E''(a_B \times b) = E''(b)^{a_B}$, respectively, and exchange their values $E'(a_A \times b)$ and $E''(a_B \times b)$. Since the encryptions are changed, the parties cannot derive information from the values they get from each other. For example, although party B gets $E'(a_A \times b)$ from A, $E'(a_A \times b) = E'(b)^{a_A}$ and party B does not know $E'(b)$ because it is the rerandomization done by party A. So B cannot derive $a_A$.

After the exchange, party A has $E(a_A \times b)$ and $E''(a_B \times b)$, and party B has $E'(a_A \times b)$ and $E(a_B \times b)$. They can compute $E(ab) = E(a_A \times b + a_B \times b)$ by themselves. The rerandomizations do not affect the calculations of the encrypted sums. In this way, both parties can get $E(ab)$ from $E(a)$ and $E(b)$. Algorithm 1 shows the main procedure of this encrypted multiplication.

Algorithm 1. Encrypted multiplication of two integers

Input. Encryptions of integers $a$ and $b$, $E(a)$ and $E(b)$, that are known to both parties;

Output. The encryption of $a \times b$, $E(ab)$;

1: Party A generates a random integer $a_A$ and computes $E(a_A)$;
2: Party A computes $E(a - a_A)$ and sends it to party B;
3: The two parties coordinately decrypt $E(a - a_A)$ and only party B gets the result $a - a_A = a_B$;
4: Parties A and B rerandomize $E(b)$ and get $E'(b)$ and $E''(b)$, respectively;
5: Parties A and B calculate $E'(a_A \times b)$ and $E''(a_B \times b)$, respectively, and exchange the two values;
6: Parties A and B compute $E(ab) = E(a_A \times b + a_B \times b)$ by themselves;
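The six steps of Algorithm 1 can be simulated in one process as sketched below. Several simplifications are assumptions of this sketch and not part of the original protocol: a single private key `X` stands in for the key shared between the parties, `dec` stands in for the joint decryption whose result only party B sees, rerandomization is done by multiplying with a fresh encryption of 0, the share $a - a_A$ is taken modulo the toy group order `Q`, and only one combined ciphertext is returned.

```python
import random

P, Q, G = 2039, 1019, 4        # toy group, not secure
X = random.randrange(1, Q)     # private key (shared between the parties in the text)
Y = pow(G, X, P)               # public key

def enc(m):
    r = random.randrange(1, Q)
    return (pow(G, m % Q, P) * pow(Y, r, P) % P, pow(G, r, P))

def mul(c, d):                 # E(u) * E(v) encrypts u + v
    return (c[0] * d[0] % P, c[1] * d[1] % P)

def inv(c):                    # E(u)^-1 encrypts -u
    return (pow(c[0], -1, P), pow(c[1], -1, P))

def power(c, k):               # E(u)^k encrypts k * u
    return (pow(c[0], k, P), pow(c[1], k, P))

def rerandomize(c):            # same plaintext, fresh randomness r
    return mul(c, enc(0))

def dec(c):                    # stand-in for the joint decryption
    gm = c[0] * pow(pow(c[1], X, P), -1, P) % P
    return next(m for m in range(Q) if pow(G, m, P) == gm)

def encrypted_multiply(enc_a, enc_b):
    a_A = random.randrange(Q)                          # step 1: A's random share
    enc_aB = mul(enc_a, inv(enc(a_A)))                 # step 2: E(a - a_A)
    a_B = dec(enc_aB)                                  # step 3: only B learns a_B
    e1, e2 = rerandomize(enc_b), rerandomize(enc_b)    # step 4: E'(b), E''(b)
    from_A, from_B = power(e1, a_A), power(e2, a_B)    # step 5: exchanged values
    return mul(from_A, from_B)                         # step 6: E(a_A*b + a_B*b)
```

Calling `encrypted_multiply(c, c)` on an encrypted rank sum `c` yields an encryption of its square, which is how the algorithm is used in this section. With the toy parameters the product must stay below `Q` to be recovered by `dec`.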

4.3. Secure computation of H

With Algorithm 1 we can get $E(R_1^2)$ and $E(R_2^2)$ from $E(R_1)$ and $E(R_2)$. Because we assume there are two parties, the H statistic is calculated as:

$$H = \frac{12}{N(N+1)} \left( \frac{R_1^2}{n_1} + \frac{R_2^2}{n_2} \right) - 3(N+1),$$

where $N$, $n_1$ and $n_2$ are constants known to both parties. From $E(R_1^2)$ and $E(R_2^2)$, both parties can compute $E(R_1^2 \times n_2 + R_2^2 \times n_1)$. They then coordinately decrypt it and get $R_1^2 \times n_2 + R_2^2 \times n_1$. The final result is calculated as:

$$H = \frac{12}{N(N+1) n_1 n_2} \left( R_1^2 \times n_2 + R_2^2 \times n_1 \right) - 3(N+1).$$

The reason why we compute $R_1^2 \times n_2 + R_2^2 \times n_1$ and then divide it by $n_1 n_2$, instead of computing $R_1^2/n_1 + R_2^2/n_2$ directly, is that the cryptographic system we use only supports operations on non-negative integers. To avoid decimal fractions in the encryptions, we compute $R_1^2 \times n_2 + R_2^2 \times n_1$ and apply the division after the decryption.
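As a small plaintext check of the integer-only formula, consider the hypothetical samples $S_1 = \{1, 2, 4\}$ and $S_2 = \{3, 5, 6\}$, which are chosen only for illustration and contain no ties:

```latex
\begin{align*}
N &= 6,\quad n_1 = n_2 = 3,\quad R_1 = 1 + 2 + 4 = 7,\quad R_2 = 3 + 5 + 6 = 14,\\
R_1^2 \times n_2 + R_2^2 \times n_1 &= 49 \times 3 + 196 \times 3 = 735,\\
H &= \frac{12}{6 \times 7 \times 3 \times 3} \times 735 - 3 \times 7
   = \frac{70}{3} - 21 = \frac{7}{3} \approx 2.33.
\end{align*}
```

Only the integer 735 would need to be decrypted; the division by $n_1 n_2 = 9$ happens afterwards in the clear.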

4.4. The summarized algorithm

The main steps of the algorithm are summarized in Algorithm 2.

Algorithm 2. The basic algorithm of privacy-preserving Kruskal–Wallis test

Input. Party A has sample $S_1$ which contains $n_1$ values, and party B has sample $S_2$ which contains $n_2$ values. The total number of values $N = n_1 + n_2$;

Output. The statistic $H$;

1: for each value $a_i$ in party A do
2:   Calculate the encrypted rank of it $E(R(a_i))$;
3: end for
4: for each value $b_j$ in party B do
5:   Calculate the encrypted rank of it $E(R(b_j))$;
6: end for
7: Compute the encrypted rank sum of each party $E(R_1)$ and $E(R_2)$ where $R_1 = \sum_{i=1}^{n_1} R(a_i)$ and $R_2 = \sum_{j=1}^{n_2} R(b_j)$;
8: Calculate $E(R_1^2)$ and $E(R_2^2)$ from $E(R_1)$ and $E(R_2)$ with Algorithm 1;
9: Calculate $E(R_1^2 \times n_2 + R_2^2 \times n_1)$ and decrypt it;
10: Compute $H$ from $R_1^2 \times n_2 + R_2^2 \times n_1$;

4.5. Extension to multiparty

The extension of the algorithm from two-party to multiparty

is straightforward. For each value in each party, to get its rank

in the two-party case, we count the number of values that are

smaller than or equal to it in its own party and in the other

party. To count the number in the other party, we need the

secure comparison protocol. Similarly, in the multiparty case,

we also count the number of values that are smaller than or

equal to it in its own party and every other party with the help

of the secure comparison protocol.

After the computation of encrypted ranks for every value in every party, the encrypted rank sums are calculated, just like in the two-party case. Then the encrypted squared rank sums $E(R_1^2), E(R_2^2), \ldots, E(R_k^2)$ can be computed with Algorithm 1. They are known to all the parties. Just as we compute $E(R_1^2 \times n_2 + R_2^2 \times n_1)$ when there are two parties, for $k$ parties, $E(R_1^2 \times n_2 n_3 \cdots n_k + R_2^2 \times n_1 n_3 \cdots n_k + \cdots + R_k^2 \times n_1 n_2 \cdots n_{k-1})$ is computed. We decrypt it and divide the decrypted result by $n_1 n_2 \cdots n_k$ instead of $n_1 n_2$ as in the two-party case. Then the final result $H$ is calculated.
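The final plaintext step for $k$ parties can be sketched as follows. The function name `h_from_rank_sums` is hypothetical, the rank sums are passed in the clear only to illustrate the arithmetic, and `math.prod` assumes Python 3.8 or later.

```python
from fractions import Fraction
from math import prod

def h_from_rank_sums(rank_sums, sizes):
    """Compute H from rank sums R_i and sample sizes n_i of k parties."""
    n_total = sum(sizes)
    all_sizes = prod(sizes)                      # n_1 * n_2 * ... * n_k
    # Integer quantity that would be decrypted: sum_i R_i^2 * prod_{j != i} n_j.
    numerator = sum(r * r * (all_sizes // n) for r, n in zip(rank_sums, sizes))
    # The division by n_1 ... n_k is applied only after decryption.
    return Fraction(12 * numerator, n_total * (n_total + 1) * all_sizes) - 3 * (n_total + 1)
```

For example, three hypothetical parties holding {1, 2}, {3, 4} and {5, 6} have rank sums 3, 7 and 11, and the sketch returns H = 32/7.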

5. The complete algorithm of privacy-preserving Kruskal–Wallis test

In this section, we present the privacy-preserving Kruskal–Wallis test with ties taken into consideration.

5.1. Modifying the data to eliminate ties

Before we explain the complete algorithm, we give a simpler

method to deal with the tied values. This is to modify the

values slightly to eliminate the ties and then apply the basic

algorithm to the modified data. Since the data is modified a

little, this method causes slight accuracy loss.

To eliminate ties between parties, we take the following steps: If there are two parties, for every value in the first party, multiply it by 10 and then add 0 to it. For every value in the second party, multiply it by 10 and then add 1 to it. For example, suppose $a_i$ belongs to the first party and $b_j$ belongs to the second party. We set $a_i = a_i \times 10 + 0$ and $b_j = b_j \times 10 + 1$. In this way, the ties between the two parties are eliminated and the ranks of other values are not affected.

If there are more than two parties, the data is modified similarly depending on the number of parties. For example, if there are ten parties, we still multiply every value in every party by 10 and add zeros to the values in the first party, ones to the values in the second party, . . ., and nines to the values in the tenth party. If there are 100 parties, multiply every value by 100 and add 0 to 99 to the values of the first to the 100th party, respectively.

To deal with the ties within parties, we do not need to modify the data. We can ignore these ties when calculating the

ranks. For example, suppose one party has three values, {1, 1,

1}. With our algorithm, the ranks are calculated by counting

the number of smaller or equal values. For these three values,

the number of smaller or equal values in their own parties

are 3, 3 and 3. We change them to 1, 2 and 3, respectively. This

can be easily finished because every party has the information

of ties within it. After changing the local counts, the counts of

smaller or equal values from other parties are added to get the

rank. The ranks do not contain any tie because both ties within

the local party and the ties between parties are disregarded.

After the modifications, we can apply the basic algorithm that

deals with data without ties.
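Both modifications of this subsection can be sketched on plaintext data as below. The function names are hypothetical, the values are assumed to be integers, and the scale is taken as the smallest power of 10 that is at least the number of parties, which matches the examples given for 2, 10 and 100 parties.

```python
def break_ties_between_parties(samples):
    """Scale every value and append a per-party offset so no two parties tie."""
    k = len(samples)
    scale = 10
    while scale < k:          # 10 for up to ten parties, 100 for up to 100, ...
        scale *= 10
    return [[v * scale + idx for v in s] for idx, s in enumerate(samples)]

def local_counts_without_ties(sample):
    """Local smaller-or-equal counts with within-party ties given distinct values."""
    order = sorted(range(len(sample)), key=lambda i: sample[i])   # stable sort
    counts = [0] * len(sample)
    for position, i in enumerate(order, start=1):
        counts[i] = position  # tied values receive consecutive counts
    return counts
```

For the party holding {1, 1, 1}, `local_counts_without_ties` turns the counts 3, 3, 3 into 1, 2, 3 as described above.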

5.2. The complete algorithm

Here we present the complete algorithm that works for data containing ties. Similar to the previous section, the algorithm is first presented under the assumption that there are only two parties and then extended to the multiparty case.

As mentioned in Section 3, when there are ties in the data, the calculation of the statistic is changed in two aspects: the ranks of the tied values should be adjusted when computing H, and H should be divided by C. Both of them will be discussed in detail.

5.2.1. Adjustment of the ranks of tied values

The ranks of each group of tied values should be changed to

the average of the ranks that these tied values would have

received without ties. We use an example to show the basic

idea to achieve this adjustment. Suppose there are values {1,

2, 3, 4, 4, 4, 4, 4} that are distributed in two samples held by

two parties, respectively. Party A has sample S1 which contains

values {1, 2, 4, 4} and party B has sample S2 which contains

values {3, 4, 4, 4}. Without considering the tie, we know that

the ranks of the values {1, 2, 3, 4, 4, 4, 4, 4} are 1, 2, 3, 4, 5, 6,

7, 8, respectively. The five “4”s are tied and their ranks are 4,

5, 6, 7, 8. The largest rank in this tie is 8 and the smallest rank

is 4. The average of the ranks is 6 and it can be calculated by

taking the average of the largest rank 8 and the smallest rank

4. This is because the ranks of values in a tie form an arithmetic sequence, so the average of all values in the sequence is the same as the average of the smallest and the largest values. After changing the ranks of the tied values to the average of them, the ranks should be 1, 2, 3, 6, 6, 6, 6, 6. In our algorithm, since we calculate the rank of each value by counting the values that are smaller than or equal to it, the ranks are 1, 2, 3, 8, 8, 8, 8, 8 because for each 4, there are 8 values smaller than or equal to it. So with our algorithm, the rank of each value in a group of tied values is actually the largest rank in the tie. We need to add some steps to our algorithm to change the ranks from 1, 2, 3, 8, 8, 8, 8, 8 to 1, 2, 3, 6, 6, 6, 6, 6.

The basic idea is: Since the ranks of values in each tie is

the largest rank in the tie, we only need to get the smallest

rank in the tie and take the average of the largest rank and

the smallest rank. To get the smallest rank from the largest

rank, we need to know the number of values in the tie. With

the largest rank named as l, the smallest rank named as s, and

the number of values in the tie named as t, we have s = l − t + 1.

As in our example, the tie contains 5 values with the largest

rank as 8 and the smallest rank as 4. We have 8 − 5 +1 = 4. So,

to change the ranks from 1, 2, 3, 8, 8, 8, 8, 8 to 1, 2, 3, 6, 6, 6, 6, 6,

we need to get the number of values in ties, and then compute

the smallest ranks in ties, and take the average of the largest

ranks and the smallest ranks.
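Substituting s = l − t + 1 into the average gives the adjusted rank directly in terms of l and t. The short derivation below only restates the two relations above and checks them on the example tie (l = 8, t = 5).

```latex
\[
\frac{s + l}{2} \;=\; \frac{(l - t + 1) + l}{2} \;=\; l - \frac{t - 1}{2},
\qquad
l = 8,\ t = 5:\quad \frac{4 + 8}{2} \;=\; 8 - \frac{4}{2} \;=\; 6 .
\]
```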

We assume that each value is in a tie and calculate the

number of values in each value’s tie. In our example, value 1 is

in a tie that contains only 1 value, so are values 2 and 3. Each

value 4 is in a tie that contains 5 values. So for values {1, 2,

3, 4, 4, 4, 4, 4}, we have 1, 1, 1, 5, 5, 5, 5, 5 as the number of

values in each value’s tie. Then for each value, compute the

smallest rank in its tie with s = l − t + 1. For value 1, the smallest

rank is 1 − 1 +1 = 1. For value 2, the smallest rank is 2 − 1 +1 = 2.

For value 3, the smallest rank is 3 − 1 +1 = 3. For each value 4,

the smallest rank is 8 − 5 +1 = 4. So the smallest ranks for the

eight values are 1, 2, 3, 4, 4, 4, 4, 4. With the largest ranks 1,

2, 3, 8, 8, 8, 8, 8, we can get the averaged ranks 1, 2, 3, 6, 6, 6,

6, 6. We can see that for values 1, 2 and 3 that are not tied,

assuming that they are in ties containing 1 value does not

affect the calculation results of their ranks. The reason why

we make this assumption is that, although we show all the

values, ranks and tied numbers of values together in cleartext

to make it easier to understand, in the real settings, they are

encrypted or distributed and no party has the complete information about them. So no party knows whether a value is in a

tie or not. For example, party A has one value 1 and this value

is not in a tie in party A. But A does not know whether party

B has value 1 or not, and A does not know whether value 1 is

in a tie globally. So all values are assumed to be in a tie.
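The cleartext bookkeeping described above can be sketched in a few lines of Python. This is only an illustration on the pooled example values: the function name `adjusted_ranks` is ours, and in the real protocol every quantity below is encrypted or distributed between the parties rather than visible in one place.

```python
def adjusted_ranks(values):
    """Cleartext illustration: treat every value as a member of a tie."""
    # "Rank" as our algorithm computes it: number of values <= v,
    # which is the largest rank l in v's tie.
    largest = [sum(1 for w in values if w <= v) for v in values]
    # Number of values t in each value's tie (1 for untied values).
    tie_size = [sum(1 for w in values if w == v) for v in values]
    # Smallest rank in the tie: s = l - t + 1.
    smallest = [l - t + 1 for l, t in zip(largest, tie_size)]
    # Adjusted rank: average of the smallest and the largest rank.
    averaged = [(s + l) / 2 for s, l in zip(smallest, largest)]
    return largest, tie_size, smallest, averaged


largest, tie_size, smallest, averaged = adjusted_ranks([1, 2, 3, 4, 4, 4, 4, 4])
print(largest)   # [1, 2, 3, 8, 8, 8, 8, 8]
print(tie_size)  # [1, 1, 1, 5, 5, 5, 5, 5]
print(smallest)  # [1, 2, 3, 4, 4, 4, 4, 4]
print(averaged)  # [1.0, 2.0, 3.0, 6.0, 6.0, 6.0, 6.0, 6.0]
```

Untied values pass through unchanged because t = 1 gives s = l.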

After explaining the basic idea of the adjustment of ranks,

let us show the steps that the two parties do the adjustment

securely.

We follow the basic algorithm in Section 4 to get the ranks

of each value in each party. Here the “rank”s are the number

of smaller or equal values, which are the largest ranks of each

tie. To count the smaller or equal values for value ai in party A,

it is compared with both values in party A and party B. When

comparing ai with values in party A, we also count the number

of values that are equal to ai in party A and name it TA (ai ). As

mentioned in Section 4, when comparing ai with every value

in party B securely, each comparison result is an encryption of 0 or 1: if bj ≤ ai , the comparison result between bj and ai is E(1), and otherwise it is E(0). The sum of these results is the encrypted number of values smaller than or equal to ai in party B. Here we keep all the comparison results between every pair of ai and bj in an n1 × n2 table such that the element in the table on the ai th row and bj th column is the comparison result between ai and bj , which is E(1) if bj ≤ ai and E(0) otherwise. Table 1 is an example of such an n1 × n2 table.

Table 1 – An example table.

| | b1 | ... | bj | ... | bn2 |
|---|---|---|---|---|---|
| a1 | | | | | |
| ... | | | | | |
| ai | | | E(1) | | |
| ... | | | | | |
| an1 | | | | | |

Similarly, to count the smaller or equal values of value bj in

party B, we compare it with values in both party A and party

B. When comparing bj with values in party B, we also count

the number of values that are equal to bj in party B and name

it TB (bj ). When comparing bj with values in party A securely,

the comparison results are defined differently from the previous case.

Here the comparison result between bj and ai is E(1) if ai ≤ bj

and E(0) otherwise. We also keep the comparison results in an

n1 × n2 table.

The two tables storing the comparison results are not the

same. In the first table, the value in the ai th row and bj th column is E(1) if bj ≤ ai and E(0) otherwise; while in the second

table, the value in the ai th row and bj th column is E(1) if bj ≥ ai

and E(0) otherwise. Here we introduce a third n1 × n2 table in which

each element is the secure sum of the two corresponding elements in the first and second tables. For example, if the

value in the ai th row and bj th column in the first table is E(1)

and in the second table is E(0), then the value in the ai th row

and bj th column in the third table is E(1 + 0).

Each value in the third table is either E(1) or E(2). If ai < bj ,

the value in the second table is E(1) and the value in the first

table is E(0). Thus, the value in the third table is E(1). If ai > bj ,

the value in the first table is E(1) and the value in the second

table is E(0). Thus, the value in the third table is also E(1). If

ai = bj , both values in the first and second tables are E(1) and

the value in the third table is E(2). To sum up, the value in the ai th row and bj th column in the third table is E(1) if ai ≠ bj and E(2) if ai = bj .

We securely deduct 1 from every element in the third table.

Then the value in the ai th row and bj th column in the new table is E(0) if ai ≠ bj and E(1) if ai = bj . This new table contains

the information of equal values between the two parties. The

sum of all the values in the ai th row is the encrypted number of values that are equal to ai in party B which is named

as E(TB (ai )). The sum of all the values in the bj th column is

the encrypted number of values that are equal to bj in party A

which is named as E(TA (bj )). Since parties A and B have computed TA (ai ) and TB (bj ), respectively, the two numbers can be

encrypted and added to the E(TB (ai )) and E(TA (bj )), respectively

to get E(T(ai )) = E(TA (ai ) + TB (ai )) and E(T(bj )) = E(TA (bj ) + TB (bj )).
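A plaintext sketch of this table construction follows. Plain 0/1 integers stand in for the ciphertexts E(0) and E(1), ordinary addition stands in for the secure sum, and the function and variable names are illustrative assumptions rather than part of the protocol.

```python
def tie_counts(a, b):
    """Plaintext stand-in for the three comparison tables of parties A and B."""
    rows, cols = range(len(a)), range(len(b))
    # First table: 1 if b_j <= a_i (recorded while ranking A's values).
    first = [[1 if b[j] <= a[i] else 0 for j in cols] for i in rows]
    # Second table: 1 if a_i <= b_j (recorded while ranking B's values).
    second = [[1 if a[i] <= b[j] else 0 for j in cols] for i in rows]
    # Third table minus 1: 1 exactly when a_i == b_j, 0 otherwise.
    equal = [[first[i][j] + second[i][j] - 1 for j in cols] for i in rows]

    tb_of_a = [sum(equal[i]) for i in rows]                   # row sums: T_B(a_i)
    ta_of_b = [sum(equal[i][j] for i in rows) for j in cols]  # column sums: T_A(b_j)
    ta_of_a = [a.count(v) for v in a]                         # local count: T_A(a_i)
    tb_of_b = [b.count(v) for v in b]                         # local count: T_B(b_j)

    t_a = [x + y for x, y in zip(ta_of_a, tb_of_a)]           # T(a_i)
    t_b = [x + y for x, y in zip(tb_of_b, ta_of_b)]           # T(b_j)
    return equal, t_a, t_b


equal, t_a, t_b = tie_counts([1, 2, 4, 4], [3, 4, 4, 4])
print(t_a)  # [1, 1, 5, 5]
print(t_b)  # [1, 5, 5, 5]
```

In the protocol itself neither party sees `equal`, `t_a` or `t_b` in the clear; only their encryptions are manipulated.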

For each value ai (i = 1, 2, . . ., n1 ) in party A, we have E(R(ai ))

which is the encrypted largest rank in ai ’s tie and E(T(ai )) which

is the encrypted number of values in ai ’s tie, or the number of

values equal to ai in both parties. For each value bj (j = 1, 2, . . .,

n2 ) in party B, we have the similar numbers E(R(bj )) and E(T(bj )).


To get the averaged rank for each value, we need to know the

smallest rank in each value’s tie. The smallest ranks can be

calculated from the largest ranks and the numbers of values

in ties. For each value ai (i = 1, 2, . . ., n1 ) in party A, the encrypted

smallest rank E(S(ai )) in ai ’s tie is E(R(ai ) − T(ai ) + 1) and the

encrypted adjusted rank of ai is E((S(ai ) + R(ai ))/2), which is

the average between the largest and the smallest rank. To

avoid the decimal fraction in the ciphertext, we only calculate E(S(ai ) + R(ai )) and the division by 2 is applied after the

final decryption. For each value bj (j = 1, 2, . . ., n2 ) in party B, the

encrypted smallest rank E(S(bj )) in bj ’s tie is E(R(bj ) − T(bj ) + 1)

and the encrypted adjusted rank of bj is E((S(bj ) + R(bj ))/2). We

also calculate E(S(bj ) + R(bj )) and apply the division by 2 after

the final decryption.
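To illustrate why only additions and a constant are needed here, note that S + R = 2R − T + 1. The sketch below evaluates this on ciphertexts with a toy Paillier scheme, used purely as a stand-in for whichever additively homomorphic cryptosystem the protocol employs. The tiny primes, the single key holder and all function names are assumptions made for illustration and are not secure.

```python
import math
import random


def keygen(p=1009, q=1013):
    """Toy Paillier key generation with g = n + 1 (insecure key size)."""
    n = p * q
    lam = (p - 1) * (q - 1) // math.gcd(p - 1, q - 1)
    mu = pow(lam, -1, n)  # modular inverse, Python 3.8+
    return n, (lam, mu)


def encrypt(n, m):
    n2 = n * n
    r = random.randrange(1, n)
    while math.gcd(r, n) != 1:
        r = random.randrange(1, n)
    return (pow(n + 1, m, n2) * pow(r, n, n2)) % n2


def decrypt(n, sk, c):
    lam, mu = sk
    return ((pow(c, lam, n * n) - 1) // n * mu) % n


def add(n, c1, c2):
    """E(x) * E(y) = E(x + y)."""
    return (c1 * c2) % (n * n)


def scale(n, c, k):
    """E(x)^k = E(k * x)."""
    return pow(c, k, n * n)


def negate(n, c):
    """E(x)^(-1) = E(-x)."""
    return pow(c, -1, n * n)


def doubled_rank(n, enc_r, enc_t):
    """E(S + R) = E(2R - T + 1), computed without decrypting R or T."""
    return add(n, add(n, scale(n, enc_r, 2), negate(n, enc_t)), encrypt(n, 1))


n, sk = keygen()
enc_sum = doubled_rank(n, encrypt(n, 8), encrypt(n, 5))  # R = 8, T = 5
print(decrypt(n, sk, enc_sum) / 2)  # 6.0, division applied after decryption
```

Keeping the doubled value inside the ciphertext avoids fractions, which is the reason the division by 2 is deferred.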

In this way, we can adjust the ranks of every value and the

rank sums are calculated based on these new ranks. Please

notice that if a value is not tied with others, the adjustment

does not change its rank. The complete algorithm of calculating H is summarized in Algorithm 3.

Algorithm 3. The complete algorithm of privacy-preserving Kruskal–Wallis test

Input. Party A has sample S1 which contains n1 values, and party B has sample S2 which contains n2 values. The total number of values N = n1 + n2 ;

Output. The statistic H;

1: for each value ai in party A do

2:     Calculate the encrypted rank of it E(R(ai )) and record the secure comparison results;

3: end for

4: for each value bj in party B do

5:     Calculate the encrypted rank of it E(R(bj )) and record the secure comparison results;

6: end for

7: From the secure comparison results, get the information of equal values between the two parties;

8: for each value ai in party A do

9:     Calculate the encrypted number of values equal to it E(T(ai ));

10:     Calculate the encrypted smallest rank in its tie E(S(ai )) from E(T(ai )) and E(R(ai ));

11:     Calculate the encrypted averaged rank of it;

12: end for

13: for each value bj in party B do

14:     Calculate the encrypted number of values equal to it E(T(bj ));

15:     Calculate the encrypted smallest rank in its tie E(S(bj )) from E(T(bj )) and E(R(bj ));

16:     Calculate the encrypted averaged rank of it;

17: end for

18: Do the remaining calculations to compute H as in Algorithm 2 with the encrypted averaged ranks;


To extend the adjustment from two parties to multiple

parties, we just need to create a table containing the information of equal values for each pair of parties during the

computations of ranks. For each value, calculate the encrypted

number of values equal to it by collecting information from all

tables in which it is involved. Then the encrypted smallest rank in its

tie and the averaged rank can be computed and the following steps are the same as in the extension of Algorithm 2 in

Section 4.
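The multi-party collection of tie counts can be sketched in plaintext as follows. Each inner sum plays the role of a row or column sum of one pairwise equality table, and the function name and the three-party data are hypothetical.

```python
def tie_sizes_multiparty(parties):
    """Plaintext illustration: T(v) for every value of every party."""
    result = []
    for p, own in enumerate(parties):
        sizes = []
        for v in own:
            t = own.count(v)  # values equal to v inside the party itself
            for q, other in enumerate(parties):
                if q != p:
                    # Contribution of the equality table for the pair (p, q).
                    t += sum(1 for w in other if w == v)
            sizes.append(t)
        result.append(sizes)
    return result


print(tie_sizes_multiparty([[1, 4], [4, 4], [3, 4]]))
# [[1, 4], [4, 4], [1, 4]]
```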

5.2.2. Calculation of C

In most cases, dividing H by C makes little change in the final

result. If the number of tied values is not more than 1/4 of the

total values, the division does not change the result by more

than 10% for some degrees of freedom and significance [3].

To calculate C securely for two parties A and B, we need

the information of ties computed in the adjustment of ranks,

the E(T(ai )) for each value ai (i = 1, 2, . . ., n1 ) in party A and the

E(T(bj )) for each value bj (j = 1, 2, . . ., n2 ) in party B.

From Eq. (2), we have

$$C = 1 - \frac{\sum_i (t_i^3 - t_i)}{N^3 - N},$$

where ti is the number of values in the ith tie.

To compute C securely, we treat T(ai ) of each distinct ai and

T(bj ) of each distinct bj as ti . For the values that are not tied

with others, since their T values are equal to 1 and 1³ − 1 = 0,

adding them does not affect the value of C. For the tied values,

their T values should be considered just once in the calculation of C, so we consider the T’s of the distinct values in each

party. With the example we used before that party A has values {1, 2, 4, 4} and party B has values {3, 4, 4, 4}, for party A,

we only consider T(1) = 1, T(2) = 1 and T(4) = 5. For party B, we

consider T(3) = 1 and T(4) = 5. Here all the T’s are encrypted and

no party knows the exact numbers. C can be securely computed from the encryptions of the ti values. $E(t_i^3)$ is calculated from $E(t_i)$ with Algorithm 1, and then $E\left(\sum_i (t_i^3 - t_i)\right)$ can be computed.

The problem is, although only the T’s of distinct values

in each party are included in the calculation of C, there are

still duplicates. Considering only the distinct values in each

party can make sure that the ties within parties are counted

only once, but it cannot eliminate the duplicated ties between

parties. As in the above example, T(4) is counted twice because

the tie of value 4 exists in both parties.

We call the set of ties that exist only in party A TA , the set of

ties that exist only in party B TB and the set of ties that exist in both

parties TAB . We want the information about TA , TB and TAB

to be included in C just once. With the above solution, TAB is

counted twice.

If we consider only T(ai ) for each value ai (i = 1, 2, . . ., n1 ) in

party A and do not add the T(bj ) for each value bj (j = 1, 2, . . ., n2 )

in party B, TA and TAB are considered once but the information

of TB is lost. We cannot add the information of only TB without

adding TAB , because no party knows whether a tie

in it is local or global.

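We have not worked out a solution that calculates C exactly. The two solutions mentioned above either add extra tie information or lose some tie information when calculating C. Together, however, they bound C: counting the shared ties twice makes the tie sum too large and gives a lower bound of C, while leaving out the ties of the last party makes the tie sum too small and gives an upper bound of C. This range limits the loss of accuracy.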
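The two approximations can be compared on the running example with a small plaintext sketch. The function name and the dictionary keys are illustrative, and the tie sizes are read in the clear here, whereas the protocol only handles their encryptions.

```python
from collections import Counter


def c_estimates(a, b):
    """Exact C and the two approximations for two parties (plaintext)."""
    pooled = Counter(a) + Counter(b)  # global tie size T(v) of every value
    n = len(a) + len(b)
    denom = n ** 3 - n

    def tie_sum(values):
        # One term t^3 - t per distinct value held by the party.
        return sum(pooled[v] ** 3 - pooled[v] for v in set(values))

    exact = tie_sum(a + b)              # every tie counted exactly once
    both = tie_sum(a) + tie_sum(b)      # ties shared by A and B counted twice
    a_only = tie_sum(a)                 # ties that exist only in B are lost
    return {
        "exact": 1 - exact / denom,
        "lower": 1 - both / denom,
        "upper": 1 - a_only / denom,
    }


est = c_estimates([1, 2, 4, 4], [3, 4, 4, 4])
print({k: round(v, 4) for k, v in est.items()})
# {'exact': 0.7619, 'lower': 0.5238, 'upper': 0.7619}
```

In this example the only tie (value 4) is shared by both parties, so the upper bound coincides with the exact value and the double counting accounts for the whole gap.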

Table 2 – The BMI dataset.

| Asians | Indians | Malays |
|---|---|---|
| 32 (15) | 26.4 (11) | 24.9 (8) |
| 30.1 (14) | 23.1 (2) | 25.3 (9) |
| 27.6 (12) | 23.5 (4) | 23.8 (5) |
| 26.2 (10) | 24.6 (7) | 22.1 (1) |
| 28.2 (13) | 24.3 (6) | 23.4 (3) |

We use an example to show how the calculation of C extends from the two-party case to the multiparty case. Suppose there

are three parties, A, B and C. Similar to the two-party case, we

denote TA , TB and TC as the sets of ties that exist only in party A,

B and C, respectively. TAB is the set of ties in parties A and B.

TAC , TBC and TABC are defined in the same way.

For each pair of parties, we have a table storing the information of tied values between the two parties. The three tables

are named as Table(AB), Table(AC) and Table(BC), respectively.

We collect the tie information of each distinct value in party

A from all the tables that involve A, which are Table(AB) and

Table(AC). This gives us the information about all the ties

that appear in party A, which are TA , TAB , TAC and TABC . Then

we disregard party A and the tables involving A, and collect

the tie information of each distinct value in party B from all

the remaining tables that involve B, which is only Table(BC).

With this step, we can add the information about all the ties

appearing in party B but not in A, which are TB and TBC . Then

we encounter the same problem as in the two-party case: if

we stop here, the tie information of TC is lost; if we add the

tie information of each distinct value in party C from a table

involving C such as Table(AC), both TC and TAC are added, and

thus TAC is counted twice.

When there are k parties, we follow the same procedure

and get the information of all the ties that appear in the first party,

then add the information of ties in the second party, and so on.

When it comes to the last party, we either lose the information

of ties appearing only in the last party, or add duplicate information about ties appearing in both the last party and some

other party. This gives us an upper bound and a lower bound

of C.

6.

Experiments

The experimental results are presented in this section. All

the algorithms are implemented with the Crypto++ library in

the C++ language and the communications between parties

are implemented with the socket API. The experiments are conducted on a Red Hat server with 16 × 2.27 GHz CPUs and 24 GB

of memory.

We use the two datasets from [34] to test the accuracy of

our algorithms. The first dataset, as shown in Table 2, contains

3 samples with equal sizes. Note that the term “sample” in this

paper is used differently from many other papers.

Each “sample” here is the set of data held by a party and the

number of samples is the number of parties. In this dataset,

the data are simulated Body Mass Index (BMI) values for subjects of 3 different races from a suburb of San Francisco. Here

the BMI values for the subjects of each race form a sample. There is

no tie in this dataset and the rank of every value is given in

parentheses.


Table 3 – The INR dataset.

| Hospital A | Hospital B | Hospital C | Hospital D |
|---|---|---|---|
| 1.68 (1) | 1.71 (**6**) | 1.74 (**13.5**) | 1.71 (**6**) |
| 1.69 (2) | 1.73 (**10**) | 1.75 (16) | 1.71 (**6**) |
| 1.70 (**3.5**) | 1.74 (**13.5**) | 1.77 (18) | 1.74 (**13.5**) |
| 1.70 (**3.5**) | 1.74 (**13.5**) | 1.78 (**20**) | 1.79 (22) |
| 1.72 (8) | 1.78 (**20**) | 1.80 (**23.5**) | 1.81 (**26**) |
| 1.73 (**10**) | 1.78 (**20**) | 1.81 (**26**) | 1.85 (29) |
| 1.73 (**10**) | 1.80 (**23.5**) | 1.84 (28) | 1.87 (30) |
| 1.76 (17) | 1.81 (**26**) | | 1.91 (31) |

The second dataset is presented in Table 3. It contains 4

samples and the sizes of them are not all equal. Each sample is

a set of simulated International Normalized Ratio (INR) values

of patients in one hospital. The ranks are given in parentheses.

There are ties in the data and the tied ranks are bold.

Since our secure algorithms only deal with non-negative

integers, each value in dataset 1 is multiplied by 10 and each

value in dataset 2 is multiplied by 100. This step changes all the

values to non-negative integers without changing the ranks of

values, and it does not affect the result of the Kruskal–Wallis

test which is calculated from the ranks.

The accuracy of our basic algorithm for data without ties

is 100%. This is shown with dataset 1. We provide both the

H values calculated in two-party and multiparty scenarios in

Table 4. In the two-party case, we take the first two samples of

dataset 1 and calculate the H value on these two samples. In

the multiparty case, the H value is calculated on all the three

samples of dataset 1.
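The reference values for dataset 1 can be rechecked in plaintext with the standard Kruskal–Wallis statistic H = 12/(N(N + 1)) Σ Ri²/ni − 3(N + 1), where Ri is the rank sum of sample i. The sketch below is an unencrypted check, not the secure algorithm; the function name is ours, and the values are scaled by 10 as described above.

```python
def kruskal_wallis_h(samples):
    """Plaintext H statistic using averaged ranks (no tie correction C)."""
    pooled = [v for sample in samples for v in sample]
    n_total = len(pooled)

    def rank(v):
        largest = sum(1 for w in pooled if w <= v)  # largest rank in v's tie
        ties = sum(1 for w in pooled if w == v)     # number of values in the tie
        return (2 * largest - ties + 1) / 2         # (s + l) / 2

    weighted = sum(sum(rank(v) for v in s) ** 2 / len(s) for s in samples)
    return 12 / (n_total * (n_total + 1)) * weighted - 3 * (n_total + 1)


asians = [32, 30.1, 27.6, 26.2, 28.2]
indians = [26.4, 23.1, 23.5, 24.6, 24.3]
malays = [24.9, 25.3, 23.8, 22.1, 23.4]
# Multiply by 10 to obtain non-negative integers without changing the ranks.
scaled = [[round(10 * v) for v in s] for s in (asians, indians, malays)]

h_two = kruskal_wallis_h(scaled[:2])
h_three = kruskal_wallis_h(scaled)
print(round(h_two, 4), round(h_three, 2))  # 5.7709 8.72
```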

Our algorithms for data with ties cause some accuracy loss.

There are two methods to deal with tied values. The first one

is to modify the data slightly to eliminate ties and then compute H with the basic algorithm. Accuracy loss occurs because

the data is changed. The second method is to keep the data

unchanged, but adjust the ranks and divide H by C. Here the

accuracy loss comes from the calculation of C. Because we

can compute an upper bound and a lower bound for C, we can

also get an upper bound and a lower bound for the final result

Hc . We test the two methods with dataset 2 and the results

are shown in Table 5. Here we also take the first two samples

from dataset 2 to test the two-party case and all four samples

of dataset 2 to test the multiparty case.

As we can see in the result, the second method has better accuracy than the first one. In the case with two parties,

although the first two samples of dataset 2 that we use contain

a lot of ties (9 out of 16 values are in ties), the two bounds are

both very close to the accurate result. In the multiparty case,

both the upper and lower bounds are equal to the accurate

result. This is because the two bounds are calculated by either

disregarding the ties only in the last sample, or counting the

ties between the last and the first samples twice. Fortunately,

in this dataset, the last sample does not contain any tie that is

only in it, and there is no tie between the last sample and the

first sample. So with this dataset, the two bounds are equal to

the accurate result.
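The two-party bounds can be rechecked in plaintext on the first two samples of dataset 2 (values multiplied by 100). The sketch below is an unencrypted check under our own naming, not the secure protocol. It divides the uncorrected H by the exact C, by the C obtained when the shared ties are counted twice, and by the C obtained from the ties seen by the first party only.

```python
from collections import Counter

hospital_a = [168, 169, 170, 170, 172, 173, 173, 176]
hospital_b = [171, 173, 174, 174, 178, 178, 180, 181]
counts = Counter(hospital_a + hospital_b)  # global tie size of every value
n = len(hospital_a) + len(hospital_b)


def h_statistic(samples):
    """Uncorrected H computed from averaged ranks."""
    def rank(v):
        largest = sum(c for w, c in counts.items() if w <= v)
        return (2 * largest - counts[v] + 1) / 2
    weighted = sum(sum(rank(v) for v in s) ** 2 / len(s) for s in samples)
    return 12 / (n * (n + 1)) * weighted - 3 * (n + 1)


def tie_sum(values):
    """Sum of t^3 - t over the distinct values of one party."""
    return sum(counts[v] ** 3 - counts[v] for v in set(values))


h = h_statistic([hospital_a, hospital_b])
denom = n ** 3 - n
hc_exact = h / (1 - tie_sum(hospital_a + hospital_b) / denom)
hc_upper = h / (1 - (tie_sum(hospital_a) + tie_sum(hospital_b)) / denom)
hc_lower = h / (1 - tie_sum(hospital_a) / denom)
print(round(hc_exact, 3), round(hc_upper, 4), round(hc_lower, 4))
# 6.419 6.4574 6.4
```

The value 1.73 is the only tie shared by the two hospitals, so it alone separates the upper bound from the exact result.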

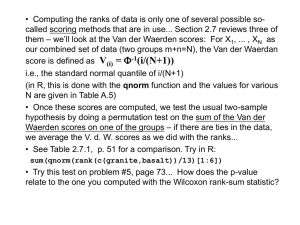

Let us show the computation overheads of the algorithms.

In Fig. 1 we present the running time comparison between the

algorithms we proposed with different sizes of data under the

two-party scenario. The running time values are in seconds.

We find that the execution times of the basic algorithm for

data without ties and the first method for data with ties are

very close. This is because in the first method of dealing with

ties, we eliminate the ties and then follow the same procedure

as the basic algorithm. The second method for data with ties

takes more time than the first one, mostly because the

adjustment of ranks takes time.

We also show the overheads in the multiparty case with

datasets 1 and 2. The execution time of the basic algorithm on

dataset 1 is:

Running time for 2 samples: 5 s

Running time for 3 samples: 17 s

The execution time of the first method for data containing ties on dataset 2 is:

Running time for 2 samples: 15 s

Running time for 3 samples: 67 s

Running time for 4 samples: 599 s

The execution time of the second method for data containing ties on dataset 2 is:

Running time for 2 samples: 26 s

Running time for 3 samples: 169 s

Running time for 4 samples: 2159 s

Table 4 – Kruskal–Wallis test result on data without ties.

| | 2 samples | 3 samples |
|---|---|---|
| H calculated by the original Kruskal–Wallis test | 5.7709 | 8.72 |
| H calculated by our basic algorithm | 5.7709 | 8.72 |

Table 5 – Kruskal–Wallis test result on data with ties.

| | 2 samples | 4 samples |
|---|---|---|
| Hc calculated by the original Kruskal–Wallis test | 6.4191 | 11.876 |
| H calculated from modified data (the first method) | 6.89338 | 12.6971 |
| The upper bound of Hc (the second method) | 6.4574 | 11.876 |
| The lower bound of Hc (the second method) | 6.4 | 11.876 |


Fig. 1 – Running time comparison of algorithms with two parties. (Plot of running time in seconds, on a 0 to 600 scale, against sample sizes from 5 to 20 for three algorithms: the basic algorithm for data without ties, the first method for data with ties, and the second method for data with ties.)

7. Conclusion

In this work, we proposed several algorithms that enable parties to conduct the Kruskal–Wallis test securely without revealing their data to others. We showed the procedure of the algorithms for data both with and without ties. We also presented an algorithm to do the multiplication of two encrypted integers under the additive homomorphic cryptosystem. Our algorithms can be extended to make other non-parametric rank based statistical tests secure, such as the Friedman test. This is our future work.

Conflict of interest

We wish to confirm that there are no known conflicts of interest associated with this publication and there has been no significant financial support for this work that could have influenced its outcome.

References

[1] W.H. Press, S.A. Teukolsky, W.T. Vetterling, B.P. Flannery, Numerical recipes in C: the art of scientific computing, Transform 1 (i) (1992) 504–510 (online). Available at: http://www.jstor.org/stable/1269484?origin=crossref.

[2] G.E.P. Box, Non-normality and tests on variances, Biometrika (1953) 318–335.

[3] W.H. Kruskal, W.A. Wallis, Use of ranks in one-criterion variance analysis, Journal of the American Statistical Association 47 (260) (1952) 583–621 (online). Available at: http://www.jstor.org/stable/2280779.

[4] F. Wilcoxon, Individual comparisons by ranking methods, Biometrics Bulletin (1945) 80–83.

[5] H.B. Mann, D.R. Whitney, On a test of whether one of two random variables is stochastically larger than the other, Annals of Mathematical Statistics 18 (1) (1947) 50–60 (online). Available at: http://www.jstor.org/stable/2236101.

[6] C.M.R. Kitchen, Nonparametric versus parametric tests of location in biomedical research, American Journal of Ophthalmology (2009) 571–572.

[7] R. Agrawal, R. Srikant, Privacy-Preserving Data Mining (2000).

[8] Z. Huang, W. Du, B. Chen, Deriving private information from randomized data, in: Proceedings of the 2005 ACM SIGMOD International Conference on Management of Data SIGMOD 05, 2005, p. 37.

[9] K. Chen, L. Liu, Privacy preserving data classification with rotation perturbation, in: Proceedings of the Fifth IEEE International Conference on Data Mining, ser. ICDM '05, IEEE Computer Society, Washington, DC, USA, 2005, pp. 589–592 (online). Available at: http://dx.doi.org/10.1109/ICDM.2005.121.

[10] G.R. Heer, A bootstrap procedure to preserve statistical confidentiality in contingency tables, in: Proceedings of the International Seminar on Statistical Confidentiality, 1993, pp. 261–271.

[11] Y. Lindell, B. Pinkas, Privacy preserving data mining, Journal of Cryptology 15 (3) (2002) 177–206.

[12] W. Du, Z. Zhan, Building decision tree classifier on private data, Reproduction (2002) 1–8.

[13] C. Clifton, M. Kantarcioglu, J. Vaidya, X. Lin, M.Y. Zhu, Tools for privacy preserving distributed data mining, ACM SIGKDD Explorations Newsletter 4 (2) (2002) 28–34.

[14] I. Damgard, M. Fitzi, E. Kiltz, J.B. Nielsen, T. Toft, Unconditionally Secure Constant-Rounds Multi-party Computation for Equality, Comparison, Bits and Exponentiation, vol. 3876, Springer, 2006, pp. 285–304.

[15] I. Damgard, M. Geisler, M. Kroigard, Homomorphic encryption and secure comparison, International Journal of Applied Cryptography 1 (2008) 22.

[16] W. Du, M. Atallah, Privacy-Preserving Cooperative Statistical Analysis, IEEE Computer Society, 2001, p. 102.

[17] B. Goethals, S. Laur, H. Lipmaa, T. Mielikäinen, On private scalar product computation for privacy-preserving data mining, Science 3506 (2004) 104–120.

[18] S. Han, W.K. Ng, Privacy-preserving linear fisher discriminant analysis, in: Proceedings of the 12th Pacific-Asia Conference on Advances in Knowledge Discovery and Data Mining, ser. PAKDD'08, Springer-Verlag, Berlin, Heidelberg, 2008, pp. 136–147.

[19] W. Du, Y.Y.S. Han, S. Chen, Privacy-Preserving Multivariate Statistical Analysis: Linear Regression and Classification, vol. 233, Lake Buena Vista, Florida, 2004.

[20] S. Han, W.K. Ng, P.S. Yu, Privacy-preserving singular value decomposition, in: 2009 IEEE 25th International Conference on Data Engineering, 2009, pp. 1267–1270.

[21] Z. Teng, W. Du, A hybrid multi-group privacy-preserving approach for building decision trees, in: Proceedings of the 11th Pacific-Asia Conference on Advances in Knowledge Discovery and Data Mining, ser. PAKDD'07, Springer-Verlag, Berlin, Heidelberg, 2007, pp. 296–307.

[22] J. Vaidya, W. Lafayette, C. Clifton, Privacy-preserving k-means clustering over vertically partitioned data, Security (2003) 206–215.

[23] G. Jagannathan, R.N. Wright, Privacy-Preserving Distributed k-Means Clustering Over Arbitrarily Partitioned Data, ACM, 2005, pp. 593–599.

[24] L. Wan, W.K. Ng, S. Han, V.C.S. Lee, Privacy-preservation for gradient descent methods, in: Proceedings of the 13th ACM SIGKDD International Conference on Knowledge Discovery and Data Mining KDD 07, 2007, p. 775.

[25] T. Chen, S. Zhong, Privacy-preserving models for comparing survival curves using the logrank test, Computer Methods and Programs in Biomedicine 104 (2) (2011) 249–253 (online). Available at: http://www.ncbi.nlm.nih.gov/pubmed/21636164.

[26] Y. Zhang, S. Zhong, Privacy preserving distributed permutation test, 2012, submitted for publication.

[27] O. Goldreich, Foundations of Cryptography, vol. 1, no. 3, Cambridge University Press, 2001.

[28] M. Kantarcioglu, C. Clifton, Privacy-preserving distributed mining of association rules on horizontally partitioned data, IEEE Transactions on Knowledge and Data Engineering 16 (9) (2004) 1026–1037.

[29] J. Vaidya, C. Clifton, Privacy-Preserving Outlier Detection, vol. 41, no. 1, IEEE, 2004, pp. 233–240.

[30] S. Zhong, Privacy-preserving algorithms for distributed mining of frequent itemsets, Information Sciences 177 (2) (2007) 490–503.

[31] P. Paillier, Public-key cryptosystems based on composite degree residuosity classes, Computer 1592 (1999) 223–238.

[32] T. ElGamal, A public key cryptosystem and a signature scheme based on discrete logarithms, IEEE Transactions on Information Theory 31 (4) (1985) 469–472.

[33] D. Boneh, The Decision Diffie-Hellman Problem, vol. 1423, Springer-Verlag, 1998, pp. 48–63.

[34] A.C. Elliott, L.S. Hynan, A sas((r)) macro implementation of a multiple comparison post hoc test for a Kruskal–Wallis analysis, Computer Methods and Programs in Biomedicine 102 (1) (2011) 75–80 (online). Available at: http://www.ncbi.nlm.nih.gov/pubmed/21146248.