Machine Recognition of Sounds in Mixtures

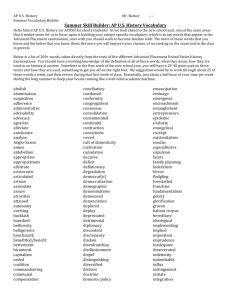


Outline

1 Computational Auditory Scene Analysis

2 Speech Recognition as Source Formation

3 Sound Fragment Decoding

4 Results & Conclusions

Dan Ellis <dpwe@ee.columbia.edu>

LabROSA, Columbia University, New York

Jon Barker <j.barker@dcs.shef.ac.uk>

SPandH, Sheffield University, UK

Ellis & Barker

Machine Recognition of Sounds in Mixtures

2003-04-29 - 1

1 Computational Auditory Scene Analysis (CASA)

• Human sound organization: Auditory Scene Analysis

[Figure: sound mixture → Object 1 percept, Object 2 percept, Object 3 percept]

- composite sound signal → separate percepts

- based on ecological constraints

- acoustic cues → perceptual grouping

• Computational ASA: doing the same thing by computer ...?


What is the goal of CASA?

[Figure: CASA → ?]

• Separate signals?

- output is unmixed waveforms

- underconstrained, very hard ...

- too hard? not required?

• Source classification?

- output is set of event-names

- listeners do more than this...

• Something in-between? Identify independent sources + characteristics

- standard task, results?


Segregation vs. Inference

• Source separation requires attribute separation

- sources are characterized by attributes (pitch, loudness, timbre + finer details)

- need to identify & gather different attributes for different sources ...

• Need representation that segregates attributes

- spectral decomposition

- periodicity decomposition

• Sometimes values can’t be separated

- e.g. unvoiced speech

- maybe infer factors from probabilistic model? $p(O, x, y) \rightarrow p(x, y \mid O)$

- or: just skip those values, infer from higher-level context
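A minimal sketch of the "infer factors from a probabilistic model" idea, going from a joint p(O, x, y) to the posterior p(x, y | O) by normalizing over the factor values. The discrete levels, the priors, and the "observation is the sum of the two factors" likelihood are all hypothetical choices for illustration; the slides do not specify a model.

```python
from itertools import product

def posterior_over_factors(prior_x, prior_y, likelihood, observed):
    """Return p(x, y | O) from the joint p(O, x, y) = p(x) p(y) p(O | x, y)."""
    joint = {
        (x, y): prior_x[x] * prior_y[y] * likelihood(observed, x, y)
        for x, y in product(prior_x, prior_y)
    }
    evidence = sum(joint.values())  # p(O), the normalizing constant
    if evidence == 0.0:
        raise ValueError("observation has zero probability under the model")
    return {xy: p / evidence for xy, p in joint.items()}

# Hypothetical toy model: two sources with discrete levels, and an
# observation equal to the sum of the two levels (an assumption).
def sum_likelihood(observed, x, y):
    return 1.0 if x + y == observed else 0.0

prior_x = {0: 0.5, 1: 0.3, 2: 0.2}
prior_y = {0: 0.2, 1: 0.5, 2: 0.3}
post = posterior_over_factors(prior_x, prior_y, sum_likelihood, observed=2)
for (x, y), p in sorted(post.items()):
    if p > 0:
        print(f"x={x} y={y} p={p:.3f}")
```

The observation alone cannot say which source contributed what, but the priors still rank the consistent explanations, which is the sense in which unseparable values can be inferred rather than measured.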


Outline

1 Computational Auditory Scene Analysis

2 Speech Recognition as Source Formation

- Standard speech recognition

- Handling mixtures

3 Sound Fragment Decoding

4 Results & Conclusions


2 Speech Recognition as Source Formation

• Automatic Speech Recognition (ASR): the most advanced sound analysis

• ASR extracts abstract information from sound

- (i.e. words)

- even in mixtures (noisy backgrounds) .. a bit

• ASR is not signal extraction: only certain signal information is recovered

- .. just the bits we care about

• Not CASA preprocessing for ASR: instead, approach ASR as an example of CASA

- words = description of source properties

- uses strong prior constraints: signal models

- but: must handle mixtures!


How ASR Represents Speech

• Markov model structure: states + transitions

[Figure: Markov models M'1 and M'2 over states S, A, T, K, E, O, with panels "State Transition Probabilities" and "State models (means)"; axes: freq / kHz, time / sec]

• Generative model

- but not a good speech generator!

- only meant for inference of p(X|M)
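To make "only meant for inference of p(X|M)" concrete, here is a small sketch of the forward algorithm, which sums over all state paths to score an observation sequence against a Markov model. It assumes scalar observations and one Gaussian per state, which is a simplification of the per-channel state models on the slide; the function names and toy parameters are illustrative only.

```python
import math

def gaussian_pdf(x, mean, var):
    """Density of a 1-D Gaussian state model."""
    return math.exp(-0.5 * (x - mean) ** 2 / var) / math.sqrt(2 * math.pi * var)

def forward_likelihood(obs, init, trans, means, variances):
    """p(X | M): sum over every state sequence via the forward recursion.

    No scaling is applied, so this underflows on long sequences;
    a practical version would work in the log domain.
    """
    n_states = len(init)
    alpha = [init[s] * gaussian_pdf(obs[0], means[s], variances[s])
             for s in range(n_states)]
    for x in obs[1:]:
        alpha = [
            sum(alpha[r] * trans[r][s] for r in range(n_states))
            * gaussian_pdf(x, means[s], variances[s])
            for s in range(n_states)
        ]
    return sum(alpha)

# Two hypothetical left-to-right models that differ only in their state means.
init = [1.0, 0.0]
trans = [[0.5, 0.5], [0.0, 1.0]]
obs = [0.1, 0.2, 0.9]
p_low = forward_likelihood(obs, init, trans, [0.0, 1.0], [1.0, 1.0])
p_high = forward_likelihood(obs, init, trans, [5.0, 6.0], [1.0, 1.0])
print("model with low means wins:", p_low > p_high)
```

The model is never asked to synthesize speech here; it is only used to compare how well competing models explain the same observations.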


Sequence Recognition

• Statistical Pattern Recognition:

$$M^* = \arg\max_M P(M \mid X) = \arg\max_M \frac{P(X \mid M) \cdot P(M)}{P(X)}$$

(M: models, X: observations)

• Markov assumption decomposes into frames:

$$P(X \mid M) = \prod_n p(x_n \mid m_n) \, p(m_n \mid m_{n-1})$$

• Solve by searching over all possible state sequences $\{m_n\}$ .. but with efficient pruning:

[Figure: pronunciation tree from root with phone-labeled arcs (b, d, iy, k, ow, oy, d, s, z, axr, uw) leading to the words DO, DECOY, DECODE, DECODES, DECODER]
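The search over state sequences can be sketched with a log-domain Viterbi recursion, which keeps only the best-scoring path into each state at each frame. This is a plain exhaustive Viterbi over a tiny state set, without the beam pruning or lexical tree mentioned above; the emission table and transition values are hypothetical.

```python
import math

NEG_INF = float("-inf")

def safe_log(p):
    """log(p) with log(0) mapped to -inf so forbidden moves stay forbidden."""
    return math.log(p) if p > 0 else NEG_INF

def viterbi(log_emit, log_init, log_trans):
    """Best state sequence for log_emit[n][s] = log p(x_n | state s)."""
    n_states = len(log_init)
    score = [log_init[s] + log_emit[0][s] for s in range(n_states)]
    backptrs = []
    for frame in log_emit[1:]:
        new_score, ptrs = [], []
        for s in range(n_states):
            # Best predecessor for state s at this frame.
            prev = max(range(n_states), key=lambda r: score[r] + log_trans[r][s])
            new_score.append(score[prev] + log_trans[prev][s] + frame[s])
            ptrs.append(prev)
        score = new_score
        backptrs.append(ptrs)
    state = max(range(n_states), key=lambda s: score[s])
    best_score = score[state]
    path = [state]
    for ptrs in reversed(backptrs):  # trace back from the final state
        state = ptrs[state]
        path.append(state)
    path.reverse()
    return path, best_score

# Hypothetical two-state left-to-right model and per-frame emission probabilities.
log_init = [safe_log(1.0), safe_log(0.0)]
log_trans = [[safe_log(0.5), safe_log(0.5)], [safe_log(0.0), safe_log(1.0)]]
emit = [[0.9, 0.1], [0.8, 0.2], [0.1, 0.9], [0.2, 0.8]]
log_emit = [[safe_log(p) for p in frame] for frame in emit]
path, best_score = viterbi(log_emit, log_init, log_trans)
print(path, math.exp(best_score))
```

Each frame costs a number of operations proportional to the square of the state count, instead of enumerating every possible sequence.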


Approaches to sound mixture recognition

• Separate signals, then recognize

- e.g. (traditional) CASA, ICA

- nice, if you can do it

• Recognize combined signal

- ‘multicondition training’

- combinatorics..

• Recognize with parallel models

- full joint-state space?

- divide signal into fragments, then use missing-data recognition


Outline

1 Computational Auditory Scene Analysis

2 Speech Recognition as Source Formation

3 Sound Fragment Decoding

- Missing Data Recognition

- Considering alternate segmentations

4 Results & Conclusions


3 Sound Fragment Decoding

• Signal separation is too hard! Instead:

- segregate features into partially-observed sources

- then classify

• Made possible by missing data recognition

- integrate over uncertainty in observations for true posterior distribution

• Goal: relate clean speech models P(X|M) to speech-plus-noise mixture observations

- .. and make it tractable


Missing Data Recognition

• Speech models p(x|m) are multidimensional...

- i.e. means, variances for every freq. channel

- need values for all dimensions to get p(•)

• But: can evaluate over a subset of dimensions $x_k$

$$p(x_k \mid m) = \int p(x_k, x_u \mid m) \, dx_u$$

[Figure: joint density p(x_k, x_u) with the marginal p(x_k) and the bounded conditional p(x_k | x_u < y)]

• Hence, missing data recognition:

$$P(x \mid q) = P(x_1 \mid q) \cdot P(x_2 \mid q) \cdot P(x_3 \mid q) \cdot P(x_4 \mid q) \cdot P(x_5 \mid q) \cdot P(x_6 \mid q)$$

[Figure: present data mask (dimension → vs. time →) applied to the per-dimension factors]

- hard part is finding the mask (segregation)
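For a diagonal-covariance Gaussian state model, integrating out the missing dimensions simply drops their factors from the product, so the marginal over the present dimensions is cheap to evaluate. The sketch below assumes exactly that model form (independent Gaussian per frequency channel); the function name and the toy values are illustrative.

```python
import math

def masked_log_likelihood(x, mask, means, variances):
    """log p(x_k | q): sum per-channel Gaussian log densities over present cells only.

    With a diagonal covariance, each missing channel integrates to 1,
    so it contributes nothing to the log likelihood.
    """
    total = 0.0
    for value, present, mean, var in zip(x, mask, means, variances):
        if present:
            total += -0.5 * (math.log(2 * math.pi * var) + (value - mean) ** 2 / var)
    return total

# Channel 1 is swamped by noise; masking it removes its influence entirely.
means, variances = [1.0, 1.0], [1.0, 1.0]
clean_like = masked_log_likelihood([1.0, 50.0], [True, False], means, variances)
full_like = masked_log_likelihood([1.0, 50.0], [True, True], means, variances)
print(clean_like, full_like)
```

Scoring the same frame with every channel marked present shows how a single corrupted channel can otherwise dominate the match.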


Missing Data Results

• Estimate static background noise level N(f)

• Cells with energy close to background are considered “missing”

[Figure: "1754" + noise → SNR mask → Missing Data Classifier → "1754"]

[Figure: digit recognition accuracy / % vs. SNR (dB) in factory noise, for a priori, missing data, and MFCC+CMN systems]

- must use spectral features!

• But: nonstationary noise → spurious mask bits

- can we try removing parts of mask?
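A rough sketch of the SNR-mask step: estimate a static per-channel noise level N(f), then mark a cell as present only when it clearly exceeds that level. Estimating N(f) by averaging the first few frames, averaging in dB, and the 6 dB margin are all assumptions made for illustration; the slides do not say how the estimate or threshold was chosen.

```python
def estimate_noise_floor(spectrogram_db, n_noise_frames):
    """Static N(f): per-channel mean level over leading frames assumed to be noise only."""
    n_channels = len(spectrogram_db[0])
    return [
        sum(frame[f] for frame in spectrogram_db[:n_noise_frames]) / n_noise_frames
        for f in range(n_channels)
    ]

def snr_mask(spectrogram_db, noise_db, margin_db=6.0):
    """True where a cell is more than margin_db above the noise floor, else 'missing'."""
    return [
        [level > noise_db[f] + margin_db for f, level in enumerate(frame)]
        for frame in spectrogram_db
    ]

# Toy spectrogram: rows are time frames, columns are frequency channels (dB).
spec = [[10.0, 12.0], [11.0, 11.0], [30.0, 12.0], [31.0, 25.0]]
noise = estimate_noise_floor(spec, n_noise_frames=2)
mask = snr_mask(spec, noise)
print(noise, mask)
```

Because N(f) is fixed, any burst of nonstationary noise that rises above the floor is marked present, which is the source of the spurious mask bits noted above.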


Comparing different segregations

• Standard classification chooses between models M to match source features X

$$M^* = \arg\max_M P(M \mid X) = \arg\max_M P(X \mid M) \cdot \frac{P(M)}{P(X)}$$

• Mixtures: observed features Y, segregation S, all related by $P(X \mid Y, S)$

[Figure: observation Y(f), source X(f) and segregation S plotted against freq]

• Joint classification of model and segregation:

$$P(M, S \mid Y) = P(M) \int P(X \mid M) \cdot \frac{P(X \mid Y, S)}{P(X)} \, dX \cdot P(S \mid Y)$$

- P(X) no longer constant


Calculating fragment matches

$$P(M, S \mid Y) = P(M) \int P(X \mid M) \cdot \frac{P(X \mid Y, S)}{P(X)} \, dX \cdot P(S \mid Y)$$

• P(X|M) - the clean-signal feature model

• P(X|Y,S)/P(X) - is X ‘visible’ given segregation?

• Integration collapses some bands...

• P(S|Y) - segregation inferred from observation

- just assume uniform, find S for most likely M

- or: use extra information in Y to distinguish S’s...

• Result:

- probabilistically-correct relation between clean-source models P(X|M) and inferred, recognized source + segregation P(M,S|Y)
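One way to picture how the integral behaves per cell is sketched below, under assumptions that are not stated on the slide: features are log energies, each state model is an independent Gaussian per cell, P(X) is treated as flat, and a cell assigned to another source only bounds the target from above (X ≤ Y). Cells assigned to the target then contribute a density at X = Y, while masked cells contribute the model's probability mass below Y.

```python
import math

def log_pdf(x, mean, var):
    """Gaussian log density, used where the segregation says X is directly observed."""
    return -0.5 * (math.log(2 * math.pi * var) + (x - mean) ** 2 / var)

def log_cdf(x, mean, var):
    """log P(X <= x) under the Gaussian, used where X is only bounded by Y."""
    cdf = 0.5 * math.erfc(-(x - mean) / math.sqrt(2 * var))
    return math.log(max(cdf, 1e-300))  # floor avoids log(0) far in the tail

def fragment_match_log_score(y, source_mask, means, variances):
    """Score one model state against observation y for a given segregation."""
    total = 0.0
    for obs, is_source, mean, var in zip(y, source_mask, means, variances):
        total += log_pdf(obs, mean, var) if is_source else log_cdf(obs, mean, var)
    return total

# Cell 0 is assigned to the target source, cell 1 is dominated by something louder.
y, seg = [0.0, 9.0], [True, False]
score_a = fragment_match_log_score(y, seg, [0.0, 0.0], [1.0, 1.0])
score_b = fragment_match_log_score(y, seg, [5.0, 5.0], [1.0, 1.0])
print(score_a, score_b)
```

The masked cell barely changes either score because almost any quiet source is consistent with a loud observation, so the comparison between models is driven by the cells the segregation marks as visible.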


Using CASA features

• P(S|Y) links acoustic information to segregation

- is this segregation worth considering?

- how likely is it?

• Opportunity for CASA-style information to contribute

- periodicity/harmonicity: these different frequency bands belong together

- onset/continuity: this time-frequency region must be whole

[Figure: grouping cues: Frequency Proximity, Common Onset, Harmonicity]

Fragment decoding

• Limiting S to whole fragments makes hypothesis search tractable:

[Figure: Fragments laid out along time (T1 to T6) and the resulting Hypotheses w1 to w8]

- choice of fragments reflects P(S|Y) · P(X|M), i.e. best combination of segregation and match to speech models

• Merging hypotheses limits space demands

- .. but erases specific history
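The tractability argument can be illustrated with a toy search: with F fragments there are 2^F source/background labellings to consider, rather than one per subset of individual cells. The sketch below enumerates them exhaustively for a single static frame and picks the best (model, labelling) pair. It is not the time-synchronous decoder with hypothesis merging described above, and the Gaussian per-cell models, the bounded score for background cells, and the uniform P(S|Y) are all assumptions.

```python
import math
from itertools import product

def log_pdf(x, mean, var):
    return -0.5 * (math.log(2 * math.pi * var) + (x - mean) ** 2 / var)

def log_cdf(x, mean, var):
    cdf = 0.5 * math.erfc(-(x - mean) / math.sqrt(2 * var))
    return math.log(max(cdf, 1e-300))

def match_score(y, source_cells, means, variances):
    """Density for cells labelled as source, bounded mass (X <= Y) for the rest."""
    return sum(
        log_pdf(v, m, s) if i in source_cells else log_cdf(v, m, s)
        for i, (v, m, s) in enumerate(zip(y, means, variances))
    )

def best_fragment_labelling(y, fragments, models):
    """Try every source/background labelling of whole fragments against every model."""
    best = None
    for labels in product([False, True], repeat=len(fragments)):
        source_cells = set()
        for keep, cells in zip(labels, fragments):
            if keep:
                source_cells.update(cells)
        for name, (means, variances) in models.items():
            score = match_score(y, source_cells, means, variances)
            if best is None or score > best[0]:
                best = (score, name, labels)
    return best

# Four cells grouped into two fragments; the second fragment is much louder.
y = [1.0, 1.1, 8.0, 8.2]
fragments = [[0, 1], [2, 3]]
models = {
    "one": ([1.0] * 4, [0.25] * 4),
    "two": ([4.0] * 4, [0.25] * 4),
}
score, name, labels = best_fragment_labelling(y, fragments, models)
print(name, labels, round(score, 3))
```

Here the best hypothesis assigns the quiet fragment to the source and leaves the loud fragment as background, which no fixed mask chosen before recognition would be guaranteed to find.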


Multi-Source Decoding

• Match multiple models at once?

[Figure: observation Y(t) divided by masks S1(t) and S2(t), matched to models M1 and M2]

- disjoint subsets of cells for each source

- each model match P(Mx|Sx,Y) is independent

- masks are mutually dependent: P(S1,S2|Y)


Outline

1 Computational Auditory Scene Analysis

2 Speech Recognition as Source Formation

3 Sound Fragment Decoding

4 Results & Conclusions

- Speech recognition

- Alarm detection


4 Speech fragment decoder results

• Simple P(S|Y) model forces contiguous regions to stay together

- big efficiency gain when searching S space

[Figure: "1754" + noise → SNR mask → Fragments → Fragment Decoder → "1754"]

[Figure: digit recognition accuracy / % vs. SNR (dB) in factory noise, for a priori, multisource, missing data, and MFCC+CMN systems]

• Clean-models-based recognition rivals trained-in-noise recognition


Alarm sound detection

• Alarm sounds have particular structure

- people ‘know them when they hear them’

- clear even at low SNRs

[Figure: spectrogram s0n6a8+20 (freq / kHz vs. time / s, level / dB) with alarms hrn01, bfr02, buz01]

• Why investigate alarm sounds?

- they’re supposed to be easy

- potential applications...

• Contrast two systems:

- standard, global features, P(X|M)

- sinusoidal model, fragments, P(M,S|Y)


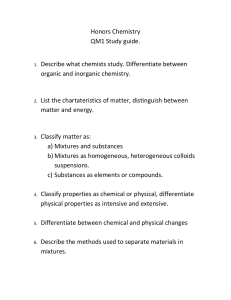

Alarms: Results

[Figure: Restaurant + alarms (snr 0 ns 6 al 8): spectrogram (freq / kHz vs. time / sec) with panels "MLP classifier output" and "Sound object classifier output"]

• Both systems commit many insertions at 0dB SNR, but in different circumstances:

| Noise | Neural net Del | Neural net Ins | Neural net Tot | Sinusoid model Del | Sinusoid model Ins | Sinusoid model Tot |
|---|---|---|---|---|---|---|
| 1 (amb) | 7 / 25 | 2 | 36% | 14 / 25 | 1 | 60% |
| 2 (bab) | 5 / 25 | 63 | 272% | 15 / 25 | 2 | 68% |
| 3 (spe) | 2 / 25 | 68 | 280% | 12 / 25 | 9 | 84% |
| 4 (mus) | 8 / 25 | 37 | 180% | 9 / 25 | 135 | 576% |
| Overall | 22 / 100 | 170 | 192% | 50 / 100 | 147 | 197% |
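The "Tot" figures in the results are consistent with counting deletions plus insertions as a percentage of the alarms present (25 per noise condition, 100 overall), which is why they can exceed 100%. The short check below recomputes them from the Del and Ins counts; the dictionary layout and function names are just for illustration.

```python
def total_error_percent(deletions, insertions, n_alarms):
    """Total error = (deletions + insertions) as a percentage of true alarms."""
    return 100.0 * (deletions + insertions) / n_alarms

# (deletions, insertions) per noise condition, 25 alarms each, from the results.
neural_net = {"amb": (7, 2), "bab": (5, 63), "spe": (2, 68), "mus": (8, 37)}
sinusoid = {"amb": (14, 1), "bab": (15, 2), "spe": (12, 9), "mus": (9, 135)}

def summarize(results, alarms_per_condition=25):
    """Per-condition and overall total error percentages, rounded to whole percent."""
    rows = {
        name: round(total_error_percent(d, i, alarms_per_condition))
        for name, (d, i) in results.items()
    }
    all_del = sum(d for d, _ in results.values())
    all_ins = sum(i for _, i in results.values())
    n_total = alarms_per_condition * len(results)
    rows["overall"] = round(total_error_percent(all_del, all_ins, n_total))
    return rows

print("neural net:", summarize(neural_net))
print("sinusoid model:", summarize(sinusoid))
```

The two systems end up with similar overall totals but for different reasons: the neural net's errors are mostly insertions in babble and speech noise, while the sinusoid model's insertions are concentrated in music.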


Summary & Conclusions

• Scene Analysis

- necessary for useful hearing

• Recognition

- a model domain for scene analysis

• Fragment decoding

- recognition with partial observations

- combines segmentation & model fitting

• Future work

- models of sources other than speech

- simultaneous ‘perception’ of multiple sources
