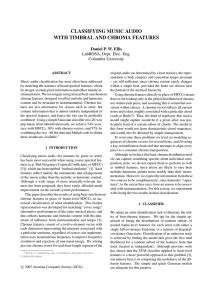

Classifying music audio with timbral and chroma features

Dan Ellis • Columbia University • dpwe@ee.columbia.edu
Laboratory for the Recognition and Organization of Speech and Audio

Summary: In addition to conventional cepstra, we model the covariance of chroma (melodic/harmonic) features and gain a small improvement in 20-way pop music artist identification.

Introduction

• “Classic” approaches to music audio classification model the covariance of spectral features (e.g. MFCCs), either as distributions [1] or discriminatively [2]. These appear to work by reflecting the instrumentation, which correlates well with genre or band [3].
• Chroma features [4] attempt to represent the pitch content (melody and harmony) while minimizing the influence of instrumentation.
• We investigate using the covariance of chroma features as a basis for music classification by artist. Although weak on their own, chroma features can improve classification when combined with spectral features.
• This suggests that artists have particular harmonic combinations or motifs that can be automatically recognized.

System Block Diagram

[Figure: system block diagram. Blocks: music audio; Mel-frequency spectral analysis (MFCCs); instantaneous-frequency chroma features; beat tracking; per-beat averaging (shown twice); key normalization; multi-time-frame feature stacking; per-artist Gaussian (mixture) models; classification.]

Key Normalization

• Similar melodic/harmonic gestures occur ‘relative’ to the different keys of individual songs.
• Key normalization attempts to transpose (rotate) the chroma features to a canonical key prior to modeling.
• We do this by:
  - building a global model (a chroma covariance matrix) from all songs
  - transposing each song to maximize its likelihood under the global model
  - re-estimating the global model from the transposed songs, and repeating.

[Figure: aligned chroma for Taxman, Eleanor Rigby, I'm Only Sleeping, Love You To, Good Day Sunshine, And Your Bird Can Sing, She Said She Said, and Yellow Submarine, shown with the aligned global model. Chroma axes are labeled by pitch class (A, C, D, F, G).]

Data - “artist20” dataset

• 1413 tracks from 120 albums (20 artists x 6 albums) [5]
• Contemporary pop music drawn from uspop2002 [6] and others
• Studio albums, chosen for chronological & stylistic consistency
• MFCCs, Chroma features, etc. available for download at http://labrosa.ee.columbia.edu/projects/artistid/

Results

| Feature | Model | T_win | Exec time | Acc |
|---|---|---|---|---|
| MFCC20 | FullCov | 1 | 127 s | 56% |
| MFCC20 | 64GMM | 1 | 563 s | 56% |
| Chroma | FullCov | 1 | 21 s | 14% |
| Chroma | FullCov | 4 | 57 s | 20% |
| Chroma | 64GMM | 1 | 337 s | 25% |
| Chroma | 64GMM | 4 | 1060 s | 29% |
| ChromaKN | FullCov | 1 | 70 s | 23% |
| ChromaKN | FullCov | 4 | 197 s | 28% |
| ChromaKN | 64GMM | 1 | 516 s | 32% |
| ChromaKN | 64GMM | 4 | 1238 s | 33% |
| MFCC + Chroma fusion | | | | 59% |

• MFCC-based classification is much more accurate (56%) than using Chroma features (33% at best), but combining the two does give further gains (to 59% correct; McNemar p < .001).
• A single, full-covariance Gaussian is adequate to model the MFCC data, but Chroma data need a 64-mix GMM.
• Key normalization (ChromaKN) is important for Chroma features.
• Stacking Chroma features from up to 4 adjacent beat-times (T_win = 4) helps a little.

[Figure: confusion matrices (true artist vs. recognized artist, shaded by count) titled “Confusion: Chroma (28.73%)” and “Confusion: MFCC+Chroma (57.40%)”, plus a difference plot titled “Confusion: fused – MFCC”. Artists on both axes: aerosmith, beatles, creedence_c_r, cure, dave_matthews_b, depeche_mode, fleetwood_mac, garth_brooks, green_day, led_zeppelin, madonna, metallica, prince, queen, radiohead, roxette, steely_dan, suzanne_vega, tori_amos, u2.]

Discussion and Conclusions

• Covariance of single beat-chroma vectors carries some information about artist, beyond that captured by MFCCs. This could relate to e.g. an artist’s preferred chords.
• Used alone, chroma features do quite well on a few artists (tori_amos, metallica) but learn almost nothing about others (madonna, beatles).
• Our best performance came from a simple weighted sum of likelihoods from separate MFCC and Chroma models. Differences were small, but the gains were on artists (beatles, led_zeppelin) other than those classified best by Chroma alone.

References

[1] J.-J. Aucouturier & F. Pachet, “Music similarity measures: What’s the use?” ISMIR, Paris, 2002.
[2] M. I. Mandel & D. P. W. Ellis, “Song-level features and support vector machines for music classification,” ISMIR, London, 2005.
[3] J. H. Jensen, M. G. Christensen, S. H. Jensen, “A framework for analysis of music similarity measures,” EUSIPCO, 2007.
[4] M. A. Bartsch & G. H. Wakefield, “To catch a chorus: Using chroma-based representations for audio thumbnailing,” WASPAA, Mohonk, 2001.
[5] D. P. W. Ellis, “Music artist identification: artist20 baseline system in Matlab,” web resource, 2007. http://labrosa.ee.columbia.edu/projects/artistid/
[6] D. P. W. Ellis, A. Berenzweig, B. Whitman, “The uspop2002 Pop Music data set,” web resource, 2003. http://labrosa.ee.columbia.edu/projects/musicsim/uspop2002.html

MATLAB code to run this system is available at: http://labrosa.ee.columbia.edu/projects/timbrechroma/

for ISMIR'07 • 2007-09-15, revised/corrected 2007-09-25
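
As an illustration of the key normalization loop (build a global chroma model, transpose each song to maximize its likelihood under that model, re-estimate, repeat), here is a minimal Python sketch. It is not the authors' MATLAB code. All function names are hypothetical, the global model is assumed to be a single full-covariance Gaussian with a mean, and the fixed iteration count and small ridge term are assumptions made to keep the example short and numerically safe.

```python
import math
import random

N_CHROMA = 12  # one chroma bin per semitone


def rotate(frames, shift):
    """Circularly shift every chroma frame up by `shift` bins (a transposition)."""
    return [[f[(i - shift) % N_CHROMA] for i in range(N_CHROMA)] for f in frames]


def cholesky(a):
    """Lower-triangular Cholesky factor of a symmetric positive-definite matrix."""
    d = len(a)
    low = [[0.0] * d for _ in range(d)]
    for i in range(d):
        for j in range(i + 1):
            s = a[i][j] - sum(low[i][k] * low[j][k] for k in range(j))
            low[i][j] = math.sqrt(s) if i == j else s / low[j][j]
    return low


def fit_gaussian(frames, ridge=1e-6):
    """Fit mean and full covariance; return (mean, Cholesky factor of covariance)."""
    n, d = len(frames), len(frames[0])
    mean = [sum(f[i] for f in frames) / n for i in range(d)]
    cov = [[sum((f[i] - mean[i]) * (f[j] - mean[j]) for f in frames) / n
            + (ridge if i == j else 0.0)  # assumed regularizer, not from the poster
            for j in range(d)] for i in range(d)]
    return mean, cholesky(cov)


def avg_loglik(frames, mean, low):
    """Average Gaussian log-likelihood per frame."""
    d = len(mean)
    log_det = 2.0 * sum(math.log(low[i][i]) for i in range(d))
    total = 0.0
    for f in frames:
        # Forward substitution solves low * z = (f - mean); sum(z^2) is the
        # squared Mahalanobis distance.
        z = []
        for i in range(d):
            acc = f[i] - mean[i] - sum(low[i][k] * z[k] for k in range(i))
            z.append(acc / low[i][i])
        total += -0.5 * (d * math.log(2 * math.pi) + log_det + sum(v * v for v in z))
    return total / len(frames)


def best_rotation(frames, model):
    """Rotation (0..11) that maximizes likelihood under the global model."""
    mean, low = model
    return max(range(N_CHROMA),
               key=lambda r: avg_loglik(rotate(frames, r), mean, low))


def key_normalize(songs, n_iter=3):
    """Alternate between fitting the global model and re-transposing each song."""
    shifts = [0] * len(songs)
    for _ in range(n_iter):
        pooled = [f for song, k in zip(songs, shifts) for f in rotate(song, k)]
        model = fit_gaussian(pooled)
        shifts = [best_rotation(song, model) for song in songs]
    return shifts


# Synthetic usage: a noisy 12-bin profile and a copy transposed by 3 semitones.
random.seed(0)
profile = [1.0, 0.1, 0.5, 0.2, 0.8, 0.3, 0.05, 0.9, 0.15, 0.6, 0.25, 0.4]
song = [[p + random.gauss(0, 0.05) for p in profile] for _ in range(200)]
reference_model = fit_gaussian(song)
print(best_rotation(rotate(song, 3), reference_model))
```

The usage lines fit a model on one synthetic "song" and ask which rotation best restores a transposed copy. With real data each song would be a list of per-beat chroma vectors, and the returned shifts would be applied with `rotate` before training the per-artist models.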