Convergence Analysis of the Gauss–Newton-Type Method for Lipschitz-Like Mappings

M. H. Rashid, S. H. Yu, C. Li & S. Y. Wu

Journal of Optimization Theory and Applications

ISSN 0022-3239

Volume 158, Number 1

J Optim Theory Appl (2013) 158:216–233

DOI 10.1007/s10957-012-0206-3



Received: 30 January 2011 / Accepted: 6 October 2012 / Published online: 20 October 2012

© Springer Science+Business Media New York 2012

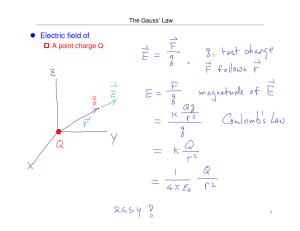

Abstract We introduce in the present paper a Gauss–Newton-type method for solving generalized equations defined by sums of differentiable mappings and set-valued mappings in Banach spaces. Semi-local convergence and local convergence of the Gauss–Newton-type method are analyzed.

Keywords Set-valued mappings · Lipschitz-like mappings · Generalized equations · Gauss–Newton-type method · Semi-local convergence

Communicated by Nguyen Don Yen.

M.H. Rashid · C. Li (corresponding author)
Department of Mathematics, Zhejiang University, Hangzhou 310027, P.R. China
e-mail: cli@zju.edu.cn

M.H. Rashid
e-mail: harun_math@ru.ac.bd

Present address:
M.H. Rashid
Department of Mathematics, University of Rajshahi, Rajshahi 6205, Bangladesh

S.H. Yu
Department of Mathematics, Zhejiang Normal University, Jinhua 321004, P.R. China
e-mail: yushaohua00@126.com

S.Y. Wu
Department of Mathematics, National Cheng Kung University, Tainan, Taiwan
e-mail: soonyi@mail.ncku.edu.tw

1 Introduction

The generalized equation problem, defined by the sum of a differentiable mapping and a set-valued mapping in Banach spaces, was introduced by Robinson [1, 2] as a general tool for describing, analyzing, and solving different problems in a unified manner. Generalized equation problems of this kind have been studied extensively. Typical examples are systems of inequalities, variational inequalities, linear and nonlinear complementarity problems, systems of nonlinear equations, equilibrium problems, etc.; see, for example, [1–3]. The classical method for finding an approximate solution is the Newton-type method (see Algorithm 3.1 in Sect. 3), which was introduced by Dontchev in [4]. Under some suitable conditions around a solution $x^*$ of the generalized equation, it was proved in [4] that there exists a neighborhood $U(x^*)$ of $x^*$ such that, for any initial point in $U(x^*)$, there exists a sequence generated by the Newton-type method that converges quadratically to the solution $x^*$.

Generally, for an initial point near a solution, the sequences generated by the Newton-type method are not uniquely defined, and not every generated sequence is convergent. The convergence result established in [4] guarantees only the existence of a convergent sequence. Therefore, from the viewpoint of practical computation, Newton-type methods of this kind are not convenient in applications. This drawback motivates us to propose a (modified) Gauss–Newton-type method (see Algorithm 3.2 in Sect. 3), which is an extension of the well-known Gauss–Newton method for solving nonlinear least squares problems and of the extended Newton method for solving convex inclusion problems; see, for example, [5–11].

Usually, interest in convergence issues for the Gauss–Newton-type method is focused on two types: local convergence, which is concerned with the convergence ball based on the information in a neighborhood of a solution, and semi-local analysis, which is concerned with the convergence criterion based on the information around an initial point. There has been fruitful work on semi-local analysis of the Gauss–Newton-type method for some special cases, such as the Gauss–Newton method for nonlinear least squares problems (cf. [6, 8, 10]) and the extended Gauss–Newton method for convex inclusion problems (cf. [12]). However, to the best of our knowledge, there is no study on semi-local analysis for the general case considered here, even for the Newton-type method.

Our purpose in the present paper is to analyze semi-local convergence of the Gauss–Newton-type method. The main tool is the Lipschitz-like property for set-valued mappings, which was introduced in [13] by Aubin in the context of nonsmooth analysis, and studied by many mathematicians; see, for example, [14–18] and the references therein. Our main results are the convergence criteria established in Sect. 3, which, based on the information around the initial point, provide some sufficient conditions ensuring the convergence to a solution of any sequence generated by the Gauss–Newton-type method. As a consequence, local convergence results for the Gauss–Newton-type method are obtained.

This paper is organized as follows. The next section contains some necessary notations and preliminary results. In Sect. 3, we introduce a Gauss–Newton-type method for solving the generalized equation, and establish existence results of solutions of the generalized equation and convergence results of the Gauss–Newton-type method for Lipschitz-like mappings. In the last section, we give a summary of the major results to close our paper.


2 Notations and Preliminary Results

Throughout the whole paper, we assume that $X$ and $Y$ are two real or complex Banach spaces. Let $x \in X$ and $r > 0$. The closed ball centered at $x$ with radius $r$ is denoted by $B_r(x)$. Let $F : X \rightrightarrows Y$ be a set-valued mapping. The domain $\operatorname{dom} F$, the inverse $F^{-1}$ and the graph $\operatorname{gph} F$ of $F$ are, respectively, defined by

$$\operatorname{dom} F := \{x \in X : F(x) \neq \emptyset\},$$

$$F^{-1}(y) := \{x \in X : y \in F(x)\} \quad \text{for each } y \in Y$$

and

$$\operatorname{gph} F := \{(x, y) \in X \times Y : y \in F(x)\}.$$

Let $A \subset X$. The distance function of $A$ is defined by

$$\operatorname{dist}(x, A) := \inf\{\|x - a\| : a \in A\} \quad \text{for each } x \in X,$$

while the excess from the set $A$ to a set $C \subseteq X$ is defined by

$$e(C, A) := \sup\{\operatorname{dist}(x, A) : x \in C\}.$$
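As a small illustration of the two definitions above, the following sketch evaluates the distance and the excess for finite subsets of the real line. It is only a toy under stated assumptions: the sets are finite, the norm is the absolute value, and the conventions for empty sets (distance $+\infty$, excess $0$) are choices made here, not taken from the paper.

```python
import math

def dist(x, A):
    """Distance from the point x to the finite set A (assumed +inf if A is empty)."""
    return min((abs(x - a) for a in A), default=math.inf)

def excess(C, A):
    """Excess e(C, A) = sup of dist(x, A) over x in C (assumed 0 if C is empty)."""
    return max((dist(x, A) for x in C), default=0.0)

C = [0.0, 1.0]
A = [0.0, 1.0, 5.0]
print(excess(C, A))  # every point of C lies in A
print(excess(A, C))  # the point 5.0 of A is far from C
```

The two calls show that the excess is not symmetric: $e(C, A) = 0$ whenever $C \subseteq A$, while $e(A, C)$ measures how far $A$ reaches beyond $C$.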

Recall from [19] the notions of pseudo-Lipschitz and Lipschitz-like set-valued mappings. These notions were introduced by Aubin in [13, 20], and have been studied extensively. In particular, connections to linear rate of openness, coderivatives and metric regularity of set-valued mappings were established by Penot and Mordukhovich; see, for example, [21, 22] and the book [19] for details.

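Definition 2.1 Let $\Gamma : Y \rightrightarrows X$ be a set-valued mapping, and let $(\bar{y}, \bar{x}) \in \operatorname{gph} \Gamma$. Let $r_{\bar{x}} > 0$, $r_{\bar{y}} > 0$ and $M > 0$. $\Gamma$ is said to be

(a) Lipschitz-like on $B_{r_{\bar{y}}}(\bar{y})$ relative to $B_{r_{\bar{x}}}(\bar{x})$ with constant $M$ iff the following inequality holds:

$$e\big(\Gamma(y_1) \cap B_{r_{\bar{x}}}(\bar{x}), \Gamma(y_2)\big) \le M\|y_1 - y_2\| \quad \text{for any } y_1, y_2 \in B_{r_{\bar{y}}}(\bar{y}).$$

(b) Pseudo-Lipschitz around $(\bar{y}, \bar{x})$ iff there exist constants $r_{\bar{y}} > 0$, $r_{\bar{x}} > 0$ and $M > 0$ such that $\Gamma$ is Lipschitz-like on $B_{r_{\bar{y}}}(\bar{y})$ relative to $B_{r_{\bar{x}}}(\bar{x})$ with constant $M$.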
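To make Definition 2.1(a) concrete, the sketch below checks the defining inequality numerically for a hypothetical example that does not appear in the paper: $\Gamma(y) = \{x \in \mathbb{R} : x^2 = y\}$ around $(\bar{y}, \bar{x}) = (1, 1)$ with $r_{\bar{y}} = r_{\bar{x}} = 1/2$. The candidate constant $M = 1/(2\sqrt{\bar{y} - r_{\bar{y}}})$ comes from the elementary bound $|\sqrt{y_1} - \sqrt{y_2}| = |y_1 - y_2| / (\sqrt{y_1} + \sqrt{y_2})$. The check is done on a finite grid only, so it is a sanity check rather than a proof.

```python
import math

def gamma(y):
    """Gamma(y) = {x in R : x*x = y}; empty for y < 0 (illustrative mapping)."""
    if y < 0:
        return []
    r = math.sqrt(y)
    return [r, -r] if r > 0 else [0.0]

def dist(x, A):
    """Distance from x to the finite set A (assumed +inf if A is empty)."""
    return min((abs(x - a) for a in A), default=math.inf)

def localized_excess(y1, y2, x_bar, r_x):
    """e(Gamma(y1) ∩ B_{r_x}(x_bar), Gamma(y2)); sup over the empty set taken as 0."""
    pts = [x for x in gamma(y1) if abs(x - x_bar) <= r_x]
    return max((dist(x, gamma(y2)) for x in pts), default=0.0)

y_bar, x_bar, r_y, r_x = 1.0, 1.0, 0.5, 0.5
M = 1.0 / (2.0 * math.sqrt(y_bar - r_y))          # candidate Lipschitz-like constant
grid = [y_bar - r_y + 2 * r_y * i / 50 for i in range(51)]
ok = all(localized_excess(y1, y2, x_bar, r_x) <= M * abs(y1 - y2) + 1e-12
         for y1 in grid for y2 in grid)
print(ok)
```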

The following lemma is useful and its proof is similar to that for [19, Theorem 1.49(i)].

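Lemma 2.1 Let $\Gamma : Y \rightrightarrows X$ be a set-valued mapping, and let $(\bar{y}, \bar{x}) \in \operatorname{gph} \Gamma$. Assume that $\Gamma$ is Lipschitz-like on $B_{r_{\bar{y}}}(\bar{y})$ relative to $B_{r_{\bar{x}}}(\bar{x})$ with constant $M$. Then

$$\operatorname{dist}\big(x, \Gamma(y)\big) \le M \operatorname{dist}\big(y, \Gamma^{-1}(x)\big) \tag{1}$$

for any $x \in B_{r_{\bar{x}}}(\bar{x})$ and $y \in B_{r_{\bar{y}}/3}(\bar{y})$ satisfying $\operatorname{dist}(y, \Gamma^{-1}(x)) \le \frac{r_{\bar{y}}}{3}$.

Proof Let $x \in B_{r_{\bar{x}}}(\bar{x})$ and $y \in B_{r_{\bar{y}}/3}(\bar{y})$ be such that $\operatorname{dist}(y, \Gamma^{-1}(x)) \le \frac{r_{\bar{y}}}{3}$. We have to show that (1) holds. To do this, let $0 < \epsilon \le \frac{r_{\bar{y}}}{3}$ and $\tilde{y} \in \Gamma^{-1}(x)$ be such that

$$\|\tilde{y} - y\| \le \operatorname{dist}\big(y, \Gamma^{-1}(x)\big) + \epsilon \le \frac{2r_{\bar{y}}}{3}. \tag{2}$$

Then $x \in \Gamma(\tilde{y})$ and $\|\tilde{y} - \bar{y}\| \le \|\tilde{y} - y\| + \|y - \bar{y}\| \le r_{\bar{y}}$, that is, $\tilde{y} \in B_{r_{\bar{y}}}(\bar{y})$. Thus, by the assumed Lipschitz-like property of $\Gamma$, we have

$$e\big(\Gamma(\tilde{y}) \cap B_{r_{\bar{x}}}(\bar{x}), \Gamma(y)\big) \le M\|\tilde{y} - y\|.$$

Since $x \in \Gamma(\tilde{y}) \cap B_{r_{\bar{x}}}(\bar{x})$, it follows from the definition of excess that

$$\operatorname{dist}\big(x, \Gamma(y)\big) \le e\big(\Gamma(\tilde{y}) \cap B_{r_{\bar{x}}}(\bar{x}), \Gamma(y)\big) \le M\|\tilde{y} - y\|.$$

This together with (2) gives $\operatorname{dist}(x, \Gamma(y)) \le M\big(\operatorname{dist}(y, \Gamma^{-1}(x)) + \epsilon\big)$, and hence

$$\operatorname{dist}\big(x, \Gamma(y)\big) \le M \operatorname{dist}\big(y, \Gamma^{-1}(x)\big)$$

as $0 < \epsilon \le \frac{r_{\bar{y}}}{3}$ is arbitrary. This completes the proof.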

We end this section with the following known lemma in [23].

Lemma 2.2 Let $\phi : X \rightrightarrows X$ be a set-valued mapping. Let $\eta_0 \in X$, $r > 0$ and $0 < \lambda < 1$ be such that

$$\operatorname{dist}\big(\eta_0, \phi(\eta_0)\big) < r(1 - \lambda) \tag{3}$$

and

$$e\big(\phi(x_1) \cap B_r(\eta_0), \phi(x_2)\big) \le \lambda\|x_1 - x_2\| \quad \text{for any } x_1, x_2 \in B_r(\eta_0). \tag{4}$$

Then $\phi$ has a fixed point in $B_r(\eta_0)$, that is, there exists $x \in B_r(\eta_0)$ such that $x \in \phi(x)$. If $\phi$ is additionally single-valued, then the fixed point of $\phi$ in $B_r(\eta_0)$ is unique.
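For intuition, the single-valued case of Lemma 2.2 can be tried on a toy map. The sketch below uses the hypothetical choice $\phi(x) = \tfrac{1}{2}\cos x$ on the real line with $\eta_0 = 0$, $r = 1.2$ and $\lambda = 0.5$ (none of these values come from the paper), checks (3) directly and (4) on a grid, and then approximates the fixed point by plain Picard iteration, which is an illustrative device here and not the argument used in [23].

```python
import math

def phi(x):
    """A single-valued map used only for illustration."""
    return 0.5 * math.cos(x)

eta0, r, lam = 0.0, 1.2, 0.5

# Condition (3): dist(eta0, phi(eta0)) < r * (1 - lam).
cond3 = abs(eta0 - phi(eta0)) < r * (1 - lam)

# Condition (4) for a single-valued map, sampled on a grid of the ball:
# |phi(a) - phi(b)| <= lam * |a - b|, which holds since |phi'| <= 0.5.
grid = [eta0 - r + 2 * r * i / 40 for i in range(41)]
cond4 = all(abs(phi(a) - phi(b)) <= lam * abs(a - b) + 1e-15
            for a in grid for b in grid)

# Picard iteration x <- phi(x) started at eta0.
x = eta0
for _ in range(100):
    x = phi(x)

print(cond3, cond4, x)
```

The computed point stays inside $B_r(\eta_0)$ and satisfies $x = \phi(x)$ up to rounding, in line with the conclusion of the lemma.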

3 Convergence Analysis of the Gauss–Newton-Type Method

Let $f : \Omega \subseteq X \to Y$ be Fréchet differentiable with its Fréchet derivative denoted by $\nabla f$, and let $F : X \rightrightarrows Y$ be a set-valued mapping with closed graph, where $\Omega$ is an open set in $X$. The generalized equation problem considered in the present paper is to find a point $x \in \Omega$ satisfying

$$0 \in f(x) + F(x). \tag{5}$$

Fixing $x \in X$, by $D(x)$ we denote the subset of $X$ defined by

$$D(x) := \{d \in X : 0 \in f(x) + \nabla f(x)d + F(x + d)\}.$$

Recall that the Newton-type method introduced in [4] is defined as follows.


Algorithm 3.1 (The Newton-type method)

Step 1. Select $x_0 \in X$ and put $k := 0$.

Step 2. If $0 \in D(x_k)$ then stop; otherwise, go to Step 3.

Step 3. Choose $d_k \in D(x_k)$ and set $x_{k+1} := x_k + d_k$.

Step 4. Replace $k$ by $k + 1$ and go to Step 2.

The Gauss–Newton-type method we propose here is given in the following:

Algorithm 3.2 (The Gauss–Newton-type method)

Step 1. Select $\eta \in [1, \infty[$, $x_0 \in X$ and put $k := 0$.

Step 2. If $0 \in D(x_k)$ then stop; otherwise, go to Step 3.

Step 3. Choose $d_k \in D(x_k)$ such that

$$\|d_k\| \le \eta \operatorname{dist}\big(0, D(x_k)\big). \tag{6}$$

Step 4. Set $x_{k+1} := x_k + d_k$.

Step 5. Replace $k$ by $k + 1$ and go to Step 2.
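The steps of Algorithm 3.2 can be sketched in a very small setting where $D(x)$ has a closed form. The following is a minimal sketch under assumptions that are not part of the paper: $X = Y = \mathbb{R}$, $F(x) \equiv [0, \infty)$, so that $0 \in f(x) + F(x)$ means $f(x) \le 0$, and $f'(x) > 0$ along the iterates, so that $D(x) = \,]-\infty, -f(x)/f'(x)]$. The function name `gauss_newton_type`, the parameter `scale` (which picks a step between the minimal-norm one and $\eta$ times it) and the stopping tolerance `tol` are hypothetical additions for illustration.

```python
def gauss_newton_type(f, df, x0, eta=2.0, scale=1.0, tol=1e-12, max_iter=50):
    """Sketch of Algorithm 3.2 for X = Y = R and F(x) = [0, inf) (a toy setting).

    Here 0 in f(x) + F(x) means f(x) <= 0 and, when df(x) > 0,
    D(x) = {d : f(x) + df(x) * d <= 0} = (-inf, -f(x)/df(x)].
    """
    assert 1.0 <= scale <= eta          # the chosen step must satisfy (6)
    x, history = x0, [x0]
    for _ in range(max_iter):
        if f(x) <= tol:                 # Step 2, relaxed by a tolerance: 0 in D(x)
            break
        assert df(x) > 0                # the closed form of D(x) needs a positive slope
        d_min = -f(x) / df(x)           # element of D(x) with the smallest norm
        d = scale * d_min               # Step 3: d in D(x), |d| <= eta * dist(0, D(x))
        x = x + d                       # Step 4
        history.append(x)
    return x, history

f = lambda x: x * x - 2.0
df = lambda x: 2.0 * x
x_star, hist = gauss_newton_type(f, df, x0=3.0)
print(x_star, len(hist))
```

With `scale=1.0` the iteration takes the minimal-norm element of $D(x_k)$ and reduces to Newton's method for $f(x) = 0$ from the right of $\sqrt{2}$; a larger `scale` within $[1, \eta]$ is also admissible under (6) and may land directly in the solution set $\{x : f(x) \le 0\}$.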

We remark that in the case where $F := 0$, Algorithm 3.2 reduces to the well-known Gauss–Newton method, an iterative technique for solving nonlinear least squares (model fitting) problems that has been studied extensively; see, for example, [5–10]. In the case where $F := C$, where $C$ is a closed convex cone, the algorithm reduces to the extended Newton method for solving convex inclusion problems, which was presented and studied by Robinson in [11]. The Gauss–Newton method for solving convex composite optimization problems was studied in [12, 24, 25] and the references therein.

3.1 Linear Convergence

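Let $x \in X$ and define the mapping $Q_x$ by

$$Q_x(\cdot) := f(x) + \nabla f(x)(\cdot - x) + F(\cdot).$$

Then

$$D(x) = \{d \in X : 0 \in Q_x(x + d)\}. \tag{7}$$

Moreover, the following equivalence is clear for any $z \in X$ and $y \in Y$:

$$z \in Q_x^{-1}(y) \iff y \in f(x) + \nabla f(x)(z - x) + F(z). \tag{8}$$

In particular,

$$\bar{x} \in Q_{\bar{x}}^{-1}(\bar{y}) \quad \text{for each } (\bar{x}, \bar{y}) \in \operatorname{gph}(f + F). \tag{9}$$

Let $(\bar{x}, \bar{y}) \in \operatorname{gph}(f + F)$ and let $r_{\bar{x}} > 0$, $r_{\bar{y}} > 0$. Throughout the whole paper, we assume that $B_{r_{\bar{x}}}(\bar{x}) \subseteq \Omega \cap \operatorname{dom} F$ and that the mapping $Q_{\bar{x}}^{-1}(\cdot)$ is Lipschitz-like on $B_{r_{\bar{y}}}(\bar{y})$ relative to $B_{r_{\bar{x}}}(\bar{x})$ with constant $M$, that is,

$$e\big(Q_{\bar{x}}^{-1}(y_1) \cap B_{r_{\bar{x}}}(\bar{x}), Q_{\bar{x}}^{-1}(y_2)\big) \le M\|y_1 - y_2\| \quad \text{for any } y_1, y_2 \in B_{r_{\bar{y}}}(\bar{y}). \tag{10}$$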


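Let $\varepsilon_0 > 0$ and write

$$\bar{r} := \min\left\{ r_{\bar{y}} - 2\varepsilon_0 r_{\bar{x}}, \; \frac{r_{\bar{x}}(1 - M\varepsilon_0)}{4M} \right\}. \tag{11}$$

Then

$$\bar{r} > 0 \iff \varepsilon_0 < \min\left\{ \frac{r_{\bar{y}}}{2r_{\bar{x}}}, \; \frac{1}{M} \right\}. \tag{12}$$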
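The equivalence (12) is easy to check numerically. The sketch below computes $\bar{r}$ from (11) and compares its sign with the right-hand side of (12); the sample values of $r_{\bar{x}}$, $r_{\bar{y}}$, $M$ and $\varepsilon_0$ are hypothetical and chosen only to exercise both sides of the equivalence.

```python
def r_bar(r_x, r_y, M, eps0):
    """Radius defined in (11)."""
    return min(r_y - 2.0 * eps0 * r_x, r_x * (1.0 - M * eps0) / (4.0 * M))

def eps0_admissible(r_x, r_y, M, eps0):
    """Right-hand side of the equivalence (12)."""
    return eps0 < min(r_y / (2.0 * r_x), 1.0 / M)

# Hypothetical sample values: r_y / (2 r_x) = 0.25 and 1 / M = 0.5.
for eps0 in (0.05, 0.2, 0.3, 0.6):
    rb = r_bar(r_x=1.0, r_y=0.5, M=2.0, eps0=eps0)
    print(eps0, rb, rb > 0, eps0_admissible(1.0, 0.5, 2.0, eps0))
```

For the first two values of $\varepsilon_0$ both tests are true, and for the last two both are false, as (12) predicts.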

The following lemma plays a crucial role in convergence analysis of the Gauss–Newton-type method. The proof is a refinement of the one for [26, Lemma 1].

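Lemma 3.1 Suppose that $Q_{\bar{x}}^{-1}(\cdot)$ is Lipschitz-like on $B_{r_{\bar{y}}}(\bar{y})$ relative to $B_{r_{\bar{x}}}(\bar{x})$ with constant $M$ and that

$$\sup_{x \in B_{r_{\bar{x}}/2}(\bar{x})} \|\nabla f(x) - \nabla f(\bar{x})\| \le \varepsilon_0 < \min\left\{ \frac{r_{\bar{y}}}{2r_{\bar{x}}}, \; \frac{1}{M} \right\}. \tag{13}$$

Let $x \in B_{r_{\bar{x}}/2}(\bar{x})$. Then $Q_x^{-1}(\cdot)$ is Lipschitz-like on $B_{\bar{r}}(\bar{y})$ relative to $B_{r_{\bar{x}}/2}(\bar{x})$ with constant $\frac{M}{1 - M\varepsilon_0}$, that is,

$$e\big(Q_x^{-1}(y_1) \cap B_{r_{\bar{x}}/2}(\bar{x}), Q_x^{-1}(y_2)\big) \le \frac{M}{1 - M\varepsilon_0}\|y_1 - y_2\| \quad \text{for any } y_1, y_2 \in B_{\bar{r}}(\bar{y}).$$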

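Proof Note that (12) and (13) imply $\bar{r} > 0$. Now let

$$y_1, y_2 \in B_{\bar{r}}(\bar{y}) \quad \text{and} \quad x' \in Q_x^{-1}(y_1) \cap B_{r_{\bar{x}}/2}(\bar{x}). \tag{14}$$

It suffices to show that there exists $x'' \in Q_x^{-1}(y_2)$ such that

$$\|x' - x''\| \le \frac{M}{1 - M\varepsilon_0}\|y_1 - y_2\|.$$

To this end, we shall verify that there exists a sequence $\{x_k\} \subset B_{r_{\bar{x}}}(\bar{x})$ such that

$$y_2 \in f(x) + \nabla f(x)(x_{k-1} - x) + \nabla f(\bar{x})(x_k - x_{k-1}) + F(x_k) \tag{15}$$

and

$$\|x_k - x_{k-1}\| \le M\|y_1 - y_2\|(M\varepsilon_0)^{k-2} \tag{16}$$

hold for each $k = 2, 3, 4, \ldots$. We proceed by induction on $k$. Write

$$z_i := y_i - f(x) - \nabla f(x)(x' - x) + f(\bar{x}) + \nabla f(\bar{x})(x' - \bar{x}) \quad \text{for each } i = 1, 2.$$

Note by (14) that $\|x - x'\| \le \|x - \bar{x}\| + \|\bar{x} - x'\| \le r_{\bar{x}}$. It follows from (14) and the relation $\bar{r} \le r_{\bar{y}} - 2\varepsilon_0 r_{\bar{x}}$ (thanks to (11)) that

$$\begin{aligned}
\|z_i - \bar{y}\| &\le \|y_i - \bar{y}\| + \|f(x) - f(\bar{x}) - \nabla f(\bar{x})(x - \bar{x})\| + \|(\nabla f(x) - \nabla f(\bar{x}))(x - x')\| \\
&\le \bar{r} + \varepsilon_0\big(\|x - \bar{x}\| + \|x - x'\|\big) \\
&\le \bar{r} + \varepsilon_0\left(\frac{r_{\bar{x}}}{2} + r_{\bar{x}}\right) \le r_{\bar{y}}.
\end{aligned}$$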

That is, $z_i \in B_{r_{\bar{y}}}(\bar{y})$ for each $i = 1, 2$. Define $x_1 := x'$. Then $x_1 \in Q_x^{-1}(y_1)$ by (14), and it follows from (8) that

$$y_1 \in f(x) + \nabla f(x)(x_1 - x) + F(x_1),$$

which can be rewritten as

$$y_1 + f(\bar{x}) + \nabla f(\bar{x})(x_1 - \bar{x}) \in f(x) + \nabla f(x)(x_1 - x) + F(x_1) + f(\bar{x}) + \nabla f(\bar{x})(x_1 - \bar{x}).$$

This, by the definition of $z_1$, means that $z_1 \in f(\bar{x}) + \nabla f(\bar{x})(x_1 - \bar{x}) + F(x_1)$. Hence $x_1 \in Q_{\bar{x}}^{-1}(z_1)$ by (8), and then $x_1 \in Q_{\bar{x}}^{-1}(z_1) \cap B_{r_{\bar{x}}}(\bar{x})$ thanks to (14). By the assumed Lipschitz-like property of $Q_{\bar{x}}^{-1}(\cdot)$ and noting that $z_1, z_2 \in B_{r_{\bar{y}}}(\bar{y})$, from (10) we infer that there exists $x_2 \in Q_{\bar{x}}^{-1}(z_2)$ with

$$\|x_2 - x_1\| \le M\|z_1 - z_2\| = M\|y_1 - y_2\|.$$

Moreover, by the definition of $z_2$ and noting $x_1 = x'$, we have

$$x_2 \in Q_{\bar{x}}^{-1}(z_2) = Q_{\bar{x}}^{-1}\big(y_2 - f(x) - \nabla f(x)(x_1 - x) + f(\bar{x}) + \nabla f(\bar{x})(x_1 - \bar{x})\big),$$

which, together with (8), implies that

$$y_2 \in f(x) + \nabla f(x)(x_1 - x) + \nabla f(\bar{x})(x_2 - x_1) + F(x_2).$$

This shows that (15) and (16) are true for the constructed points $x_1, x_2$.

We assume that $x_1, x_2, \ldots, x_n$ are constructed such that (15) and (16) are true for $k = 2, 3, \ldots, n$. We need to construct $x_{n+1}$ such that (15) and (16) are also true for $k = n + 1$. For this purpose, we write

$$z_i^n := y_2 - f(x) - \nabla f(x)(x_{n+i-1} - x) + f(\bar{x}) + \nabla f(\bar{x})(x_{n+i-1} - \bar{x}) \quad \text{for each } i = 0, 1.$$

Then, by the inductive assumption,

$$\|z_0^n - z_1^n\| = \|(\nabla f(\bar{x}) - \nabla f(x))(x_n - x_{n-1})\| \le \varepsilon_0\|x_n - x_{n-1}\| \le \|y_1 - y_2\|(M\varepsilon_0)^{n-1}. \tag{17}$$

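Since $\|x_1 - \bar{x}\| \le \frac{r_{\bar{x}}}{2}$ and $\|y_1 - y_2\| \le 2\bar{r}$ by (14), it follows from (16) that

$$\begin{aligned}
\|x_n - \bar{x}\| &\le \sum_{k=2}^{n}\|x_k - x_{k-1}\| + \|x_1 - \bar{x}\| \\
&\le 2M\bar{r}\sum_{k=2}^{n}(M\varepsilon_0)^{k-2} + \frac{r_{\bar{x}}}{2} \\
&\le \frac{2M\bar{r}}{1 - M\varepsilon_0} + \frac{r_{\bar{x}}}{2}.
\end{aligned}$$

By (11), we have $\bar{r} \le \frac{r_{\bar{x}}(1 - M\varepsilon_0)}{4M}$, and so

$$\|x_n - \bar{x}\| \le r_{\bar{x}}. \tag{18}$$

Consequently,

$$\|x_n - x\| \le \|x_n - \bar{x}\| + \|\bar{x} - x\| \le \frac{3}{2}r_{\bar{x}}. \tag{19}$$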

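Furthermore, using (14) and (19), one has, for each $i = 0, 1$,

$$\begin{aligned}
\|z_i^n - \bar{y}\| &\le \|y_2 - \bar{y}\| + \|f(x) - f(\bar{x}) - \nabla f(\bar{x})(x - \bar{x})\| + \|(\nabla f(x) - \nabla f(\bar{x}))(x - x_{n+i-1})\| \\
&\le \bar{r} + \varepsilon_0\big(\|x - \bar{x}\| + \|x - x_{n+i-1}\|\big) \\
&\le \bar{r} + \varepsilon_0\left(\frac{r_{\bar{x}}}{2} + \frac{3r_{\bar{x}}}{2}\right) = \bar{r} + 2\varepsilon_0 r_{\bar{x}}.
\end{aligned}$$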

It follows from the definition of $\bar{r}$ in (11) that $z_i^n \in B_{r_{\bar{y}}}(\bar{y})$ for each $i = 0, 1$. Since assumption (15) holds for $k = n$, we have

$$y_2 \in f(x) + \nabla f(x)(x_{n-1} - x) + \nabla f(\bar{x})(x_n - x_{n-1}) + F(x_n),$$

which can be written as

$$y_2 + f(\bar{x}) + \nabla f(\bar{x})(x_{n-1} - \bar{x}) \in f(x) + \nabla f(x)(x_{n-1} - x) + \nabla f(\bar{x})(x_n - x_{n-1}) + F(x_n) + f(\bar{x}) + \nabla f(\bar{x})(x_{n-1} - \bar{x}),$$

that is, $z_0^n \in f(\bar{x}) + \nabla f(\bar{x})(x_n - \bar{x}) + F(x_n)$ by the definition of $z_0^n$. This, together with (8) and (18), yields $x_n \in Q_{\bar{x}}^{-1}(z_0^n) \cap B_{r_{\bar{x}}}(\bar{x})$. By using (10) again, there exists an element $x_{n+1} \in Q_{\bar{x}}^{-1}(z_1^n)$ such that

$$\|x_{n+1} - x_n\| \le M\|z_0^n - z_1^n\| \le M\|y_1 - y_2\|(M\varepsilon_0)^{n-1}, \tag{20}$$

where the last inequality holds by (17). By the definition of $z_1^n$, we have

$$x_{n+1} \in Q_{\bar{x}}^{-1}(z_1^n) = Q_{\bar{x}}^{-1}\big(y_2 - f(x) - \nabla f(x)(x_n - x) + f(\bar{x}) + \nabla f(\bar{x})(x_n - \bar{x})\big),$$

which, together with (8), implies

$$y_2 \in f(x) + \nabla f(x)(x_n - x) + \nabla f(\bar{x})(x_{n+1} - x_n) + F(x_{n+1}).$$

This, together with (20), completes the induction step, and the existence of a sequence $\{x_n\}$ satisfying (15) and (16) is established.

Since $M\varepsilon_0 < 1$, we see from (16) that $\{x_k\}$ is a Cauchy sequence, and hence it is convergent. Let $x'' := \lim_{k \to \infty} x_k$. Then, taking the limit in (15) and noting that $F$ has closed graph, we get $y_2 \in f(x) + \nabla f(x)(x'' - x) + F(x'')$ and so $x'' \in Q_x^{-1}(y_2)$. Moreover,

$$\begin{aligned}
\|x' - x''\| &\le \limsup_{n \to \infty} \sum_{k=2}^{n}\|x_k - x_{k-1}\| \\
&\le \lim_{n \to \infty} \sum_{k=2}^{n}(M\varepsilon_0)^{k-2} M\|y_1 - y_2\| \\
&= \frac{M}{1 - M\varepsilon_0}\|y_1 - y_2\|.
\end{aligned}$$

This completes the proof of the lemma.

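For convenience, we define, for each $x \in X$, the mapping $Z_x : X \to Y$ by

$$Z_x(\cdot) := f(\bar{x}) + \nabla f(\bar{x})(\cdot - \bar{x}) - f(x) - \nabla f(x)(\cdot - x),$$

and the set-valued mapping $\phi_x : X \rightrightarrows X$ by

$$\phi_x(\cdot) := Q_{\bar{x}}^{-1}\big[Z_x(\cdot)\big]. \tag{21}$$

Then

$$\|Z_x(x') - Z_x(x'')\| \le \|\nabla f(\bar{x}) - \nabla f(x)\| \, \|x' - x''\| \quad \text{for any } x', x'' \in X. \tag{22}$$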

Our first main theorem, which provides some sufficient conditions ensuring the

convergence of the Gauss–Newton-type method with initial point x0 , is as follows.

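Theorem 3.1 Suppose that $\eta > 1$ and $Q_{\bar{x}}^{-1}(\cdot)$ is Lipschitz-like on $B_{r_{\bar{y}}}(\bar{y})$ relative to $B_{r_{\bar{x}}}(\bar{x})$ with constant $M$. Let

$$\varepsilon_0 \ge \sup_{x, x' \in B_{r_{\bar{x}}/2}(\bar{x})} \|\nabla f(x) - \nabla f(x')\|, \tag{23}$$

and let $\bar{r}$ be defined by (11). Let $\delta > 0$ be such that

(a) $\delta \le \min\left\{ \frac{r_{\bar{x}}}{4}, \frac{\bar{r}}{3\varepsilon_0}, \frac{r_{\bar{y}}}{5\varepsilon_0}, 1 \right\}$,

(b) $\varepsilon_0 M(1 + 2\eta) \le 1$,

(c) $\|\bar{y}\| < \varepsilon_0\delta$.

Suppose that

$$\lim_{x \to \bar{x}} \operatorname{dist}\big(\bar{y}, f(x) + F(x)\big) = 0. \tag{24}$$

Then there exists some $\hat{\delta} > 0$ such that any sequence $\{x_n\}$ generated by Algorithm 3.2 with initial point in $B_{\hat{\delta}}(\bar{x})$ converges to a solution $x^*$ of (5).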

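Proof By assumption (b), we obtain

$$q := \frac{\eta M\varepsilon_0}{1 - M\varepsilon_0} \le \frac{\frac{\eta}{1 + 2\eta}}{1 - \frac{1}{1 + 2\eta}} = \frac{1}{2}.$$

Take $0 < \hat{\delta} \le \delta$ such that

$$\operatorname{dist}\big(0, f(x_0) + F(x_0)\big) \le \varepsilon_0\delta \quad \text{for each } x_0 \in B_{\hat{\delta}}(\bar{x}) \tag{25}$$

(noting that such $\hat{\delta}$ exists by (24) and assumption (c)). Let $x_0 \in B_{\hat{\delta}}(\bar{x})$. We will proceed by induction to show that Algorithm 3.2 generates at least one sequence and any sequence $\{x_n\}$ generated by Algorithm 3.2 satisfies the following assertions:

$$\|x_n - \bar{x}\| \le 2\delta \tag{26}$$

and

$$\|x_{n+1} - x_n\| \le q^{n+1}\delta \tag{27}$$

for each $n = 0, 1, 2, \ldots$. For this purpose, we define

$$r_x := \frac{3}{2}\big(\varepsilon_0 M\|x - \bar{x}\| + M\|\bar{y}\|\big) \quad \text{for each } x \in X.$$

By assumptions (b) and (c), we see that $3M\varepsilon_0 \le 1$ and $\|\bar{y}\| < \varepsilon_0\delta$. It follows that

$$r_x < \frac{3}{2}(3M\varepsilon_0\delta) \le 2\delta \quad \text{for each } x \in B_{2\delta}(\bar{x}). \tag{28}$$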

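Note that (26) is trivial for $n = 0$. Firstly, we need to show that $x_1$ exists and (27) holds for $n = 0$. To this end, we have to prove that $D(x_0) \neq \emptyset$ by applying Lemma 2.2 to the mapping $\phi_{x_0}$ with $\eta_0 := \bar{x}$. Let us check that both assumptions (3) and (4) of Lemma 2.2 hold with $r := r_{x_0}$ and $\lambda := \frac{1}{3}$. Since $\bar{x} \in Q_{\bar{x}}^{-1}(\bar{y}) \cap B_\delta(\bar{x})$ by (9), according to the definition of the excess $e$ and the mapping $\phi_{x_0}$ in (21), we obtain

$$\begin{aligned}
\operatorname{dist}\big(\bar{x}, \phi_{x_0}(\bar{x})\big) &\le e\big(Q_{\bar{x}}^{-1}(\bar{y}) \cap B_\delta(\bar{x}), \phi_{x_0}(\bar{x})\big) \\
&\le e\big(Q_{\bar{x}}^{-1}(\bar{y}) \cap B_{r_{\bar{x}}}(\bar{x}), Q_{\bar{x}}^{-1}[Z_{x_0}(\bar{x})]\big)
\end{aligned} \tag{29}$$

(note that $B_\delta(\bar{x}) \subseteq B_{r_{\bar{x}}}(\bar{x})$). By the choice of $\varepsilon_0$, we see that

$$\begin{aligned}
\|Z_{x_0}(x) - \bar{y}\| &= \|f(\bar{x}) + \nabla f(\bar{x})(x - \bar{x}) - f(x_0) - \nabla f(x_0)(x - x_0) - \bar{y}\| \\
&\le \|f(\bar{x}) - f(x_0) - \nabla f(x_0)(\bar{x} - x_0)\| + \|\nabla f(x_0) - \nabla f(\bar{x})\| \, \|\bar{x} - x\| + \|\bar{y}\| \\
&\le \varepsilon_0\big(\|\bar{x} - x_0\| + \|\bar{x} - x\|\big) + \|\bar{y}\|.
\end{aligned} \tag{30}$$

Note that $\|x_0 - \bar{x}\| \le \hat{\delta} \le \delta$, $5\delta\varepsilon_0 \le r_{\bar{y}}$ by assumption (a) and $\|\bar{y}\| < \varepsilon_0\delta$ by assumption (c). It follows from (30) that, for each $x \in B_\delta(\bar{x})$,

$$\|Z_{x_0}(x) - \bar{y}\| \le \varepsilon_0\big(\|\bar{x} - x_0\| + \|\bar{x} - x\|\big) + \|\bar{y}\| \le 5\delta\varepsilon_0 \le r_{\bar{y}}.$$

In particular,

$$Z_{x_0}(\bar{x}) \in B_{r_{\bar{y}}}(\bar{y}) \quad \text{and} \quad \|Z_{x_0}(\bar{x}) - \bar{y}\| \le \varepsilon_0\|\bar{x} - x_0\| + \|\bar{y}\|.$$

Hence, by (29) and the assumed Lipschitz-like property, we have

$$\operatorname{dist}\big(\bar{x}, \phi_{x_0}(\bar{x})\big) \le M\|\bar{y} - Z_{x_0}(\bar{x})\| \le M\varepsilon_0\|x_0 - \bar{x}\| + M\|\bar{y}\| = \left(1 - \frac{1}{3}\right)r_{x_0} = (1 - \lambda)r,$$

that is, assumption (3) of Lemma 2.2 is checked.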

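To fulfill assumption (4) of Lemma 2.2, let $x', x'' \in B_{r_{x_0}}(\bar{x})$. Then we have $x', x'' \in B_{r_{x_0}}(\bar{x}) \subseteq B_{2\delta}(\bar{x}) \subseteq B_{r_{\bar{x}}}(\bar{x})$ by (28) and assumption (a), and $Z_{x_0}(x'), Z_{x_0}(x'') \in B_{r_{\bar{y}}}(\bar{y})$ by (30). This, together with the assumed Lipschitz-like property, implies that

$$\begin{aligned}
e\big(\phi_{x_0}(x') \cap B_{r_{x_0}}(\bar{x}), \phi_{x_0}(x'')\big) &\le e\big(\phi_{x_0}(x') \cap B_{r_{\bar{x}}}(\bar{x}), \phi_{x_0}(x'')\big) \\
&= e\big(Q_{\bar{x}}^{-1}[Z_{x_0}(x')] \cap B_{r_{\bar{x}}}(\bar{x}), Q_{\bar{x}}^{-1}[Z_{x_0}(x'')]\big) \\
&\le M\|Z_{x_0}(x') - Z_{x_0}(x'')\|.
\end{aligned}$$

Applying (22), we get

$$\|Z_{x_0}(x') - Z_{x_0}(x'')\| \le \|\nabla f(\bar{x}) - \nabla f(x_0)\| \, \|x' - x''\| \le \varepsilon_0\|x' - x''\|.$$

Combining the above two inequalities yields

$$e\big(\phi_{x_0}(x') \cap B_{r_{x_0}}(\bar{x}), \phi_{x_0}(x'')\big) \le M\varepsilon_0\|x' - x''\| \le \frac{1}{3}\|x' - x''\| = \lambda\|x' - x''\|.$$

This means that assumption (4) of Lemma 2.2 is also checked. Thus Lemma 2.2 is applicable and there exists $\hat{x}_1 \in B_{r_{x_0}}(\bar{x})$ satisfying $\hat{x}_1 \in \phi_{x_0}(\hat{x}_1)$. Hence $0 \in f(x_0) + \nabla f(x_0)(\hat{x}_1 - x_0) + F(\hat{x}_1)$ and so $D(x_0) \neq \emptyset$.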

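Below we show that (27) also holds for $n = 0$. Note by (23) that

$$\varepsilon_0 \ge \sup_{x \in B_{r_{\bar{x}}/2}(\bar{x})} \|\nabla f(x) - \nabla f(\bar{x})\|$$

and note also that $\bar{r} > 0$ by assumption (a). Therefore assumption (13) is satisfied by (12). Since $Q_{\bar{x}}^{-1}(\cdot)$ is Lipschitz-like on $B_{r_{\bar{y}}}(\bar{y})$ relative to $B_{r_{\bar{x}}}(\bar{x})$, it follows from Lemma 3.1 that the mapping $Q_x^{-1}(\cdot)$ is Lipschitz-like on $B_{\bar{r}}(\bar{y})$ relative to $B_{r_{\bar{x}}/2}(\bar{x})$ with constant $\frac{M}{1 - M\varepsilon_0}$ for each $x \in B_{r_{\bar{x}}/2}(\bar{x})$. In particular, $Q_{x_0}^{-1}(\cdot)$ is Lipschitz-like on $B_{\bar{r}}(\bar{y})$ relative to $B_{r_{\bar{x}}/2}(\bar{x})$ with constant $\frac{M}{1 - M\varepsilon_0}$ as $x_0 \in B_{\hat{\delta}}(\bar{x}) \subset B_\delta(\bar{x}) \subset B_{r_{\bar{x}}/2}(\bar{x})$ by assumption (a) and the choice of $\hat{\delta}$. Furthermore, assumptions (a) and (c) imply that

$$\|\bar{y}\| < \varepsilon_0\delta \le \frac{\bar{r}}{3}, \tag{31}$$

and (25) implies that

$$\operatorname{dist}\big(0, Q_{x_0}(x_0)\big) = \operatorname{dist}\big(0, f(x_0) + F(x_0)\big) \le \varepsilon_0\delta \le \frac{\bar{r}}{3}. \tag{32}$$

Thus Lemma 2.1 is applicable and hence by applying it we have

$$\operatorname{dist}\big(x_0, Q_{x_0}^{-1}(0)\big) \le \frac{M}{1 - M\varepsilon_0}\operatorname{dist}\big(0, Q_{x_0}(x_0)\big)$$

(noting that $x_0 \in B_{r_{\bar{x}}/2}(\bar{x})$ as observed earlier and $0 \in B_{\bar{r}/3}(\bar{y})$ by (31)). This, together with (7), yields

$$\operatorname{dist}\big(0, D(x_0)\big) = \operatorname{dist}\big(x_0, Q_{x_0}^{-1}(0)\big) \le \frac{M}{1 - M\varepsilon_0}\operatorname{dist}\big(0, Q_{x_0}(x_0)\big). \tag{33}$$

According to (6) in Algorithm 3.2 and using (32) and (33), we have

$$\|x_1 - x_0\| = \|d_0\| \le \eta\operatorname{dist}\big(0, D(x_0)\big) \le \frac{\eta M}{1 - M\varepsilon_0}\operatorname{dist}\big(0, Q_{x_0}(x_0)\big) \le \frac{\eta M\varepsilon_0\delta}{1 - M\varepsilon_0} = q\delta.$$

This shows that (27) holds for $n = 0$.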

We assume that $x_1, x_2, \ldots, x_k$ are constructed such that (26) and (27) hold for $n = 0, 1, \ldots, k - 1$. We show that there exists $x_{k+1}$ such that assertions (26) and (27) hold for $n = k$. Since (26) and (27) are true for each $n \le k - 1$, we have the following inequality:

$$\|x_k - \bar{x}\| \le \sum_{i=0}^{k-1}\|d_i\| + \|x_0 - \bar{x}\| \le \delta\sum_{i=0}^{k-1}q^{i+1} + \delta \le \frac{\delta q}{1 - q} + \delta \le 2\delta.$$

This shows that (26) holds for $n = k$. Now with almost the same argument as we did for the case where $n = 0$, we can show that assertion (27) holds for $n = k$. The proof is complete.

In particular, in the case where $\bar{x}$ is a solution of (5), that is, $\bar{y} = 0$, Theorem 3.1 reduces to the following corollary, which gives a local convergence result for the Gauss–Newton-type method.


Corollary 3.1 Suppose that $\eta > 1$, $0 \in f(\bar{x}) + F(\bar{x})$, and $Q_{\bar{x}}^{-1}(\cdot)$ is pseudo-Lipschitz around $(0, \bar{x})$. Let $\tilde{r} > 0$, and suppose that $\nabla f$ is continuous on $B_{\tilde{r}}(\bar{x})$ and

$$\lim_{x \to \bar{x}} \operatorname{dist}\big(0, f(x) + F(x)\big) = 0. \tag{34}$$

Then there exists some $\hat{\delta} > 0$ such that any sequence $\{x_n\}$ generated by Algorithm 3.2 with an initial point in $B_{\hat{\delta}}(\bar{x})$ converges to a solution $x^*$ of (5).

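Proof Since $Q_{\bar{x}}^{-1}(\cdot)$ is pseudo-Lipschitz around $(0, \bar{x})$, there exist constants $r_0$, $\hat{r}_{\bar{x}}$ and $M$ such that $Q_{\bar{x}}^{-1}(\cdot)$ is Lipschitz-like on $B_{r_0}(0)$ relative to $B_{\hat{r}_{\bar{x}}}(\bar{x})$ with constant $M$. Then, for each $0 < r \le \hat{r}_{\bar{x}}$, one has

$$e\big(Q_{\bar{x}}^{-1}(y_1) \cap B_r(\bar{x}), Q_{\bar{x}}^{-1}(y_2)\big) \le M\|y_1 - y_2\| \quad \text{for any } y_1, y_2 \in B_{r_0}(0),$$

that is, $Q_{\bar{x}}^{-1}(\cdot)$ is Lipschitz-like on $B_{r_0}(0)$ relative to $B_r(\bar{x})$ with constant $M$. Let $\varepsilon_0 \in \, ]0, 1[$ be such that $M\varepsilon_0 \le \frac{1}{1 + 2\eta}$. By the continuity of $\nabla f$, we can choose $r_{\bar{x}} \in \, ]0, \hat{r}_{\bar{x}}[$ such that $\frac{r_{\bar{x}}}{2} \le \tilde{r}$, $r_0 - 2\varepsilon_0 r_{\bar{x}} > 0$ and

$$\varepsilon_0 \ge \sup_{x, x' \in B_{r_{\bar{x}}/2}(\bar{x})} \|\nabla f(x) - \nabla f(x')\|.$$

Then

$$\bar{r} = \min\left\{ r_0 - 2\varepsilon_0 r_{\bar{x}}, \; \frac{r_{\bar{x}}(1 - M\varepsilon_0)}{4M} \right\} > 0,$$

and

$$\min\left\{ \frac{r_{\bar{x}}}{4}, \frac{\bar{r}}{3\varepsilon_0}, \frac{r_0}{5\varepsilon_0} \right\} > 0.$$

Thus we can choose $0 < \delta \le 1$ such that

$$\delta \le \min\left\{ \frac{r_{\bar{x}}}{4}, \frac{\bar{r}}{3\varepsilon_0}, \frac{r_0}{5\varepsilon_0} \right\}.$$

Now it is routine to check that inequalities (a)–(c) of Theorem 3.1 are satisfied. Thus we can apply Theorem 3.1 to complete the proof.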

3.2 Quadratic Convergence

In the following theorem we show that, if the derivative of f is Lipschitz continuous

around x̄, then any sequence generated by Algorithm 3.2, with initial point near x̄, is

quadratically convergent.

Theorem 3.2 Suppose that $Q_{\bar{x}}^{-1}(\cdot)$ is Lipschitz-like on $B_{r_{\bar{y}}}(\bar{y})$ relative to $B_{r_{\bar{x}}}(\bar{x})$ with constant $M$ and $\nabla f$ is Lipschitz continuous on $B_{r_{\bar{x}}/2}(\bar{x})$ with Lipschitz constant $L$. Let $\eta > 1$ and let

$$\bar{r} := \min\left\{ r_{\bar{y}} - 2Lr_{\bar{x}}^2, \; \frac{r_{\bar{x}}(1 - MLr_{\bar{x}})}{4M} \right\}.$$

Let $\delta > 0$ be such that

(a) $\delta \le \min\left\{ \frac{r_{\bar{x}}}{4}, \frac{r_{\bar{y}}}{11L}, 6\bar{r}, 1 \right\}$,

(b) $(M + 1)L(\eta\delta + 2r_{\bar{x}}) \le 2$,

(c) $\|\bar{y}\| < \frac{L\delta^2}{4}$.

Suppose that (24) holds. Then there exists some $\hat{\delta} > 0$ such that any sequence $\{x_n\}$ generated by Algorithm 3.2 with an initial point in $B_{\hat{\delta}}(\bar{x})$ converges quadratically to a solution $x^*$ of (5).
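The quadratic rate can be observed numerically in a toy setting. The sketch below is an illustration under assumptions that are not part of the paper: $X = Y = \mathbb{R}$, $f(x) = x^2 - 2$, $F(x) \equiv [0, \infty)$, and the minimal-norm choice $d_k = -f(x_k)/f'(x_k)$ in Step 3 of Algorithm 3.2. It prints the ratios $|x_{k+1} - x^*| / |x_k - x^*|^2$ with $x^* = \sqrt{2}$; it does not verify the hypotheses (a)–(c) of the theorem.

```python
import math

def newton_steps(x0, n):
    """Minimal-norm steps d = -f(x)/f'(x) for f(x) = x^2 - 2 with F(x) = [0, inf)."""
    xs = [x0]
    for _ in range(n):
        x = xs[-1]
        xs.append(x - (x * x - 2.0) / (2.0 * x))
    return xs

xs = newton_steps(2.0, 4)
errs = [abs(x - math.sqrt(2.0)) for x in xs]
# For a quadratically convergent sequence these ratios stay bounded.
ratios = [errs[k + 1] / errs[k] ** 2 for k in range(len(errs) - 1)]
print(ratios)
```

In this example the error satisfies $x_{k+1} - \sqrt{2} = (x_k - \sqrt{2})^2 / (2x_k)$, so the ratios equal $1/(2x_k)$ up to rounding and remain bounded, which is the behavior described as quadratic convergence.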

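Proof By assumption (b), one sees

$$q := \frac{L\eta M\delta}{2(1 - MLr_{\bar{x}})} \le 1.$$

Select a $\hat{\delta} \in \, ]0, \delta]$ with

$$\operatorname{dist}\big(0, f(x_0) + F(x_0)\big) \le \frac{L\delta^2}{4} \quad \text{for each } x_0 \in B_{\hat{\delta}}(\bar{x})$$

(noting that such $\hat{\delta}$ exists by (24) and assumption (c)). Let $x_0 \in B_{\hat{\delta}}(\bar{x})$. As in the proof for Theorem 3.1, we use induction to show that Algorithm 3.2 generates at least one sequence and any sequence $\{x_n\}$ generated by Algorithm 3.2 satisfies the following assertions:

$$\|x_n - \bar{x}\| \le 2\delta \tag{35}$$

and

$$\|d_n\| \le q\left(\frac{1}{2}\right)^{2^n}\delta \tag{36}$$

for each $n = 0, 1, 2, \ldots$. For this purpose, we define

$$r_x := \frac{9}{10}\big(ML\|x - \bar{x}\|^2 + 2M\|\bar{y}\|\big) \quad \text{for each } x \in X.$$

Due to $\eta > 1$ and $\delta \le \frac{r_{\bar{x}}}{4}$ in assumption (a), it follows from assumption (b) that

$$9(M + 1)L\delta = (M + 1)L(\delta + 8\delta) \le (M + 1)L(\eta\delta + 2r_{\bar{x}}) \le 2.$$

This gives

$$ML\delta \le \frac{2}{9} \quad \text{and} \quad L\delta \le \frac{2}{9}, \tag{37}$$

and so

$$\|\bar{y}\| < \frac{L\delta^2}{4} \le \frac{2}{9 \cdot 4} \cdot 6\bar{r} = \frac{\bar{r}}{3}$$

thanks to assumption (c). Combining the first inequality in (37) and assumption (c) implies that

$$r_x < \frac{9}{10}\left(4ML\delta^2 + \frac{1}{2}ML\delta^2\right) \le 2\delta \quad \text{for each } x \in B_{2\delta}(\bar{x}). \tag{38}$$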

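Note that (35) is trivial for $n = 0$. To show that the point $x_1$ exists and (36) holds for $n = 0$, we first prove that $D(x_0) \neq \emptyset$. We will do that by applying Lemma 2.2 to the mapping $\phi_{x_0}$ with $\eta_0 := \bar{x}$. To do this, let us check that both assumptions (3) and (4) of Lemma 2.2 hold with $r := r_{x_0}$ and $\lambda := \frac{4}{9}$. Noting that $\bar{x} \in Q_{\bar{x}}^{-1}(\bar{y}) \cap B_\delta(\bar{x})$ and by the definition of the excess $e$, we obtain

$$\begin{aligned}
\operatorname{dist}\big(\bar{x}, \phi_{x_0}(\bar{x})\big) &\le e\big(Q_{\bar{x}}^{-1}(\bar{y}) \cap B_\delta(\bar{x}), \phi_{x_0}(\bar{x})\big) \\
&= e\big(Q_{\bar{x}}^{-1}(\bar{y}) \cap B_\delta(\bar{x}), Q_{\bar{x}}^{-1}[Z_{x_0}(\bar{x})]\big).
\end{aligned} \tag{39}$$

Letting $x \in B_{2\delta}(\bar{x}) \subseteq B_{r_{\bar{x}}/2}(\bar{x})$, we conclude from the assumed Lipschitz continuity of $\nabla f$ that

$$\begin{aligned}
\|Z_{x_0}(x) - \bar{y}\| &= \|f(\bar{x}) + \nabla f(\bar{x})(x - \bar{x}) - f(x_0) - \nabla f(x_0)(x - x_0) - \bar{y}\| \\
&\le \|f(x) - f(\bar{x}) - \nabla f(\bar{x})(x - \bar{x})\| + \|f(x) - f(x_0) - \nabla f(x_0)(x - x_0)\| + \|\bar{y}\| \\
&\le \frac{L}{2}\big(\|x - \bar{x}\|^2 + \|x - x_0\|^2\big) + \|\bar{y}\|.
\end{aligned} \tag{40}$$

Therefore, we have

$$\|Z_{x_0}(\bar{x}) - \bar{y}\| \le \frac{L}{2}\|\bar{x} - x_0\|^2 + \|\bar{y}\| \le \frac{L\delta^2}{2} + \frac{L\delta^2}{4} \le r_{\bar{y}}$$

because $\|x_0 - \bar{x}\| \le \hat{\delta} \le \delta$, $11L\delta \le r_{\bar{y}}$ and $\delta \le 1$ by assumption (a) and $\|\bar{y}\| < \frac{L\delta^2}{4}$ by assumption (c). Hence, by (39) and the assumed Lipschitz-like property, we have

$$\operatorname{dist}\big(\bar{x}, \phi_{x_0}(\bar{x})\big) \le M\|\bar{y} - Z_{x_0}(\bar{x})\| \le \frac{ML}{2}\|\bar{x} - x_0\|^2 + M\|\bar{y}\| = \left(1 - \frac{4}{9}\right)r_{x_0} = (1 - \lambda)r,$$

that is, assumption (3) of Lemma 2.2 is satisfied.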

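Next, we show that assumption (4) of Lemma 2.2 is satisfied. To do this, let $x', x'' \in B_{r_{x_0}}(\bar{x})$. Then we have $x', x'' \in B_{r_{x_0}}(\bar{x}) \subseteq B_{2\delta}(\bar{x}) \subseteq B_{r_{\bar{x}}}(\bar{x})$ by (38) and $Z_{x_0}(x'), Z_{x_0}(x'') \in B_{r_{\bar{y}}}(\bar{y})$ by (40). This, together with the assumed Lipschitz-like property, implies that

$$\begin{aligned}
e\big(\phi_{x_0}(x') \cap B_{r_{x_0}}(\bar{x}), \phi_{x_0}(x'')\big) &\le e\big(\phi_{x_0}(x') \cap B_{r_{\bar{x}}}(\bar{x}), \phi_{x_0}(x'')\big) \\
&= e\big(Q_{\bar{x}}^{-1}[Z_{x_0}(x')] \cap B_{r_{\bar{x}}}(\bar{x}), Q_{\bar{x}}^{-1}[Z_{x_0}(x'')]\big) \\
&\le M\|Z_{x_0}(x') - Z_{x_0}(x'')\|.
\end{aligned}$$

By the choice of $x_0$, (22) yields

$$\|Z_{x_0}(x') - Z_{x_0}(x'')\| \le \|\nabla f(\bar{x}) - \nabla f(x_0)\| \, \|x' - x''\| \le L\|\bar{x} - x_0\| \, \|x' - x''\| \le L\delta\|x' - x''\|.$$

It follows from the bound $ML\delta \le \frac{2}{9}$ in (37) that

$$e\big(\phi_{x_0}(x') \cap B_{r_{x_0}}(\bar{x}), \phi_{x_0}(x'')\big) \le ML\delta\|x' - x''\| \le \frac{4}{9}\|x' - x''\| = \lambda\|x' - x''\|.$$

This means that assumption (4) of Lemma 2.2 is also satisfied. Thus Lemma 2.2 is applicable and gives the existence of a point $\hat{x}_1 \in B_{r_{x_0}}(\bar{x})$ such that $\hat{x}_1 \in \phi_{x_0}(\hat{x}_1)$, that is, $D(x_0) \neq \emptyset$.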

Below we show that assertion (36) holds also for $n = 0$. Since $\nabla f$ is Lipschitz continuous on $B_{r_{\bar{x}}/2}(\bar{x})$ with the Lipschitz constant $L$, we have

$$Lr_{\bar{x}} \ge \sup_{x \in B_{r_{\bar{x}}/2}(\bar{x})} \|\nabla f(x) - \nabla f(\bar{x})\|. \tag{41}$$

It is clear that $\bar{r} > 0$ by assumption (a). Therefore (12) and (41) imply that assumption (13) is satisfied with $\varepsilon_0 := Lr_{\bar{x}}$. By the choice of $\hat{\delta}$ and assumption (a), one has $x_0 \in B_{\hat{\delta}}(\bar{x}) \subset B_\delta(\bar{x}) \subset B_{r_{\bar{x}}/2}(\bar{x})$. Since $Q_{\bar{x}}^{-1}(\cdot)$ is Lipschitz-like on $B_{r_{\bar{y}}}(\bar{y})$ relative to $B_{r_{\bar{x}}}(\bar{x})$, it follows from Lemma 3.1 that $Q_{x_0}^{-1}(\cdot)$ is Lipschitz-like on $B_{\bar{r}}(\bar{y})$ relative to $B_{r_{\bar{x}}/2}(\bar{x})$ with constant $\frac{M}{1 - MLr_{\bar{x}}}$. The remainder of the proof is similar to the corresponding part of the proof of Theorem 3.1 and so we omit it.

Consider the special case where $\bar{x}$ is a solution of (5) (that is, $\bar{y} = 0$) in Theorem 3.2. The following corollary describes the local quadratic convergence of the Gauss–Newton-type method. The proof is similar to that of Corollary 3.1.

Corollary 3.2 Suppose that $\eta > 1$, $0 \in f(\bar{x}) + F(\bar{x})$, and $Q_{\bar{x}}^{-1}(\cdot)$ is pseudo-Lipschitz around $(0, \bar{x})$. Let $\tilde{r} > 0$, and suppose that $\nabla f$ is Lipschitz continuous on $B_{\tilde{r}}(\bar{x})$ with Lipschitz constant $L$ and (34) holds. Then there exists some $\hat{\delta} > 0$ such that any sequence $\{x_n\}$ generated by Algorithm 3.2 with initial point in $B_{\hat{\delta}}(\bar{x})$ converges quadratically to a solution $x^*$ of (5).

Remark 3.1 For the case where $\eta = 1$, the question of whether the results remain true for the Gauss–Newton-type method is more delicate. However, from the proofs of the main theorems, one sees that all the results in the present paper remain true provided that, for any $x \in \Omega$ with $D(x) \neq \emptyset$, there exists $\bar{d} \in D(x)$ such that $\|\bar{d}\| = \min_{d \in D(x)} \|d\|$. The following proposition provides some sufficient conditions ensuring the existence of such $\bar{d} \in D(x)$. Thus, by Proposition 3.1, Theorems 3.1 and 3.2 as well as Corollaries 3.1 and 3.2 are true for $\eta = 1$ provided that $X$ is finite dimensional, or $X$ is reflexive and the graph of $F$ is convex.

Proposition 3.1 Suppose that $X$ is finite dimensional, or $X$ is reflexive and the graph of $F$ is convex. Then, for any $x \in \Omega$ with $D(x) \neq \emptyset$, there exists $\bar{d} \in D(x)$ such that $\|\bar{d}\| = \min_{d \in D(x)} \|d\|$.

Proof Let $x \in \Omega$ be such that $D(x) \neq \emptyset$. Since $F$ has closed graph, $D(x)$ is closed. Hence the conclusion holds trivially if $X$ is finite dimensional. Below we consider the case where $X$ is reflexive and the graph of $F$ is convex. Then the set-valued mapping $T(\cdot) := f(x) + \nabla f(x)(\cdot - x) + F(\cdot)$ has closed convex graph. Hence $D(x) = T^{-1}(0) - x$ is closed and convex, and therefore weakly closed. Since $X$ is reflexive, the intersection of $D(x)$ with any closed ball is weakly compact, and the norm is weakly lower semicontinuous; hence the conclusion follows.

4 Concluding Remarks

We have established semi-local convergence and local convergence results for the Gauss–Newton-type method with $\eta > 1$ under the assumptions that $Q_{\bar{x}}^{-1}(\cdot)$ is Lipschitz-like and $\nabla f$ is continuous. In particular, if $\nabla f$ is additionally Lipschitz continuous, we further show that the Gauss–Newton-type method is quadratically convergent. As noted in Remark 3.1, our results are also true for $\eta = 1$ in some special cases. The Gauss–Newton-type method and the established convergence results seem new for the generalized equation problem (5).

Acknowledgements The authors thank the referees and the associate editor for their valuable comments

and constructive suggestions which improved the presentation of this manuscript. Research work of the

first author is fully supported by Chinese Scholarship Council, and research work of the third author is

partially supported by National Natural Science Foundation (grant 11171300) and Zhejiang Provincial

Natural Science Foundation (grant Y6110006) of China.

References

1. Robinson, S.M.: Generalized equations and their solutions, part I: basic theory. Math. Program. Stud. 10, 128–141 (1979)

2. Robinson, S.M.: Generalized equations and their solutions, part II: applications to nonlinear programming. Math. Program. Stud. 19, 200–221 (1982)

3. Ferris, M.C., Pang, J.S.: Engineering and economic applications of complementarity problems. SIAM Rev. 39, 669–713 (1997)

4. Dontchev, A.L.: Local convergence of the Newton method for generalized equation. C. R. Acad. Sci. Paris, Ser. I 322, 327–331 (1996)

5. Dedieu, J.P., Kim, M.H.: Newton’s method for analytic systems of equations with constant rank derivatives. J. Complex. 18, 187–209 (2002)

6. Dedieu, J.P., Shub, M.: Newton’s method for overdetermined systems of equations. Math. Comput. 69, 1099–1115 (2000)

7. Li, C., Zhang, W.H., Jin, X.Q.: Convergence and uniqueness properties of Gauss–Newton’s method. Comput. Math. Appl. 47, 1057–1067 (2004)

8. He, J.S., Wang, J.H., Li, C.: Newton’s method for underdetermined systems of equations under the modified γ-condition. Numer. Funct. Anal. Optim. 28, 663–679 (2007)

9. Xu, X.B., Li, C.: Convergence of Newton’s method for systems of equations with constant rank derivatives. J. Comput. Math. 25, 705–718 (2007)

10. Xu, X.B., Li, C.: Convergence criterion of Newton’s method for singular systems with constant rank derivatives. J. Math. Anal. Appl. 345, 689–701 (2008)

11. Robinson, S.M.: Extension of Newton’s method to nonlinear functions with values in a cone. Numer. Math. 19, 341–347 (1972)

12. Li, C., Ng, K.F.: Majorizing functions and convergence of the Gauss–Newton method for convex composite optimization. SIAM J. Optim. 18, 613–642 (2007)

13. Aubin, J.P.: Lipschitz behavior of solutions to convex minimization problems. Math. Oper. Res. 9, 87–111 (1984)

14. Jean-Alexis, C., Piétrus, A.: On the convergence of some methods for variational inclusions. Rev. R. Acad. Cien. Serie A. Mat. 102, 355–361 (2008)

15. Argyros, I.K., Hilout, S.: Local convergence of Newton-like methods for generalized equations. Appl. Math. Comput. 197, 507–514 (2008)

16. Dontchev, A.L.: The Graves theorem revisited. J. Convex Anal. 3, 45–53 (1996)

17. Hilout, S., Piétrus, A.: A semilocal convergence of a secant-type method for solving generalized equations. Positivity 10, 693–700 (2006)

18. Piétrus, A.: Does Newton’s method for set-valued maps converges uniformly in mild differentiability context? Rev. Colomb. Mat. 34, 49–56 (2000)

19. Mordukhovich, B.S.: Variational Analysis and Generalized Differentiation I: Basic Theory. Springer, Berlin (2006)

20. Aubin, J.P., Frankowska, H.: Set-Valued Analysis. Birkhäuser, Boston (1990)

21. Mordukhovich, B.S.: Sensitivity analysis in nonsmooth optimization. In: Field, D.A., Komkov, V. (eds.) Theoretical Aspects of Industrial Design. SIAM Proc. Appl. Math., vol. 58, pp. 32–46 (1992)

22. Penot, J.P.: Metric regularity, openness and Lipschitzian behavior of multifunctions. Nonlinear Anal. 13, 629–643 (1989)

23. Dontchev, A.L., Hager, W.W.: An inverse mapping theorem for set-valued maps. Proc. Am. Math. Soc. 121, 481–489 (1994)

24. Burke, J.V., Ferris, M.C.: A Gauss–Newton method for convex composite optimization. Math. Program. 71, 179–194 (1995)

25. Li, C., Wang, X.H.: On convergence of the Gauss–Newton method for convex composite optimization. Math. Program., Ser. A 91, 349–356 (2002)

26. Dontchev, A.L.: Uniform convergence of the Newton method for Aubin continuous maps. Serdica Math. J. 22, 385–398 (1996)