PIECEWISE LINEAR NCP FUNCTION FOR QP FREE FEASIBLE METHOD Pu Dingguo Zhou Yan


Appl. Math. J. Chinese Univ. Ser. B

2006, 21(3): 289-301


Pu Dingguo$^{1}$, Zhou Yan$^{1,2}$

Abstract. In this paper, a QP-free feasible method with piecewise NCP functions is proposed for nonlinear inequality constrained optimization problems. The new NCP functions are piecewise linear-rational and regular pseudo-smooth, and they have nice properties. The method is based on solving linear systems obtained from a reformulation of the KKT optimality conditions as a system of equations by means of the piecewise NCP functions. It is implementable and globally convergent without assuming the strict complementarity condition or the isolatedness of accumulation points. Furthermore, the gradients of the active constraints are not required to be linearly independent, and the submatrix, which may be obtained by quasi-Newton methods, is not required to be uniformly positive definite. Preliminary numerical results indicate that this new QP-free method is quite promising.

§1 Introduction

Consider the constrained nonlinear optimization problem (NLP)

$$\min_{x\in\mathbb{R}^n} f(x)\quad\text{s.t.}\quad G(x)\le 0, \tag{1}$$

where $f:\mathbb{R}^n\to\mathbb{R}$ and $G(x)=(g_1(x),g_2(x),\dots,g_m(x))^T:\mathbb{R}^n\to\mathbb{R}^m$ are Lipschitz continuously differentiable functions.

We denote by D = {x ∈ Rn |G(x) < 0} and D̄ = cl(D) the strictly feasible set and the

feasible set of Problem (NLP), respectively.

The Lagrangian function associated with Problem (NLP) is the function

$$L(x,\lambda)=f(x)+\lambda^TG(x), \tag{2}$$

where λ = (λ1 , λ2 , · · · , λm )T ∈ Rm is the multiplier vector. For simplicity, we use (x, λ) to

denote the column vector (xT , λT )T .

Received:2005-10-20.

MR Subject Classification: 90C30,65K10.

Keywords: constrained optimization, semismooth, nonlinear complementarity, convergence.

Supported by the Natural Science Foundation of China (10371089, 10571137).


A Karush-Kuhn-Tucker (KKT) point (x̄, λ̄) ∈ Rn ×Rm is a point that satisfies the necessary

optimality conditions for Problem (NLP),

$$\nabla_xL(\bar x,\bar\lambda)=0,\quad G(\bar x)\le 0,\quad \bar\lambda\ge 0,\quad \bar\lambda_ig_i(\bar x)=0, \tag{3}$$

where $1\le i\le m$. We also say that $\bar x$ is a KKT point if there exists a $\bar\lambda$ such that $(\bar x,\bar\lambda)$ satisfies (3).

Finding KKT points of Problem (NLP) can be equivalently reformulated as solving the mixed nonlinear complementarity problem (NCP) in (3). Problem (NCP) has attracted much attention because of its various applications; see [1, 2]. One approach to solving the nonlinear complementarity problem is to reformulate it as a system of nonlinear equations and apply a Newton-type method; see [3, 4].

Qi H D and Qi L Q [5] proposed a new QP-free method that ensures the strict feasibility of all iterates. Their work is based on the Fischer-Burmeister NCP function. They proved global convergence without assuming the isolatedness of accumulation points or the strict complementarity condition. They also proved superlinear convergence under mild conditions.

Stimulated by this progress, we propose in this paper a QP-free feasible method with piecewise NCP functions for minimizing a smooth function subject to smooth inequality constraints. The new NCP functions are piecewise linear-rational and regular pseudo-smooth, and they have nice properties. The method is based on solving linear systems obtained from a reformulation of the KKT optimality conditions by means of the piecewise NCP functions. It is an iterative method in which, locally, the iteration can be viewed as a perturbation of a Newton or quasi-Newton iteration on the primal variables for solving the equalities in the first-order KKT optimality conditions. The method ensures the feasibility of all iterates. We modify the method of Qi H D and Qi L Q [5] slightly to obtain local convergence under weaker conditions. In particular, the method is implementable and globally convergent without assuming the strict complementarity condition or the isolatedness of accumulation points. Furthermore, the gradients of the active constraints are not required to be linearly independent, and the submatrix, which may be obtained by quasi-Newton methods, is not required to be uniformly positive definite. We also prove that the method converges superlinearly under some mild conditions. Preliminary numerical results indicate that this QP-free feasible method is quite promising.

Definition 1.1 (NCP pair and SNCP pair). We call a pair $(a,b)\in\mathbb{R}^2$ an NCP pair if $a\ge 0$, $b\ge 0$ and $ab=0$. We call $(a,b)$ an SNCP pair if $(a,b)$ is an NCP pair and $a^2+b^2\ne 0$.

Definition 1.2 (NCP function). A function $\phi:\mathbb{R}^2\to\mathbb{R}$ is called an NCP function if $\phi(a,b)=0$ if and only if $(a,b)$ is an NCP pair.

The following definition was introduced in [5], where its properties are also discussed.

Definition 1.3 (Pseudo-smooth function). Let $\psi:\mathbb{R}^2\to\mathbb{R}$ be a strongly semismooth function. Denote by $P_\psi$ the set of points where $\psi$ vanishes, and let $E_\psi$ be the set of extreme points of $P_\psi$. We say that $\psi$ is a pseudo-smooth function if it is smooth everywhere in $\mathbb{R}^2\setminus E_\psi$.

The two best-known NCP functions are the min function and the Fischer-Burmeister NCP function [6]. However, the min function is not differentiable at infinitely many points (the whole line $a=b$), and an inexact generalized Newton direction based on it may not be a descent direction. The Fischer-Burmeister NCP function is irrational. As a result, some originally linear terms become nonlinear after reformulation, for example the Lagrangian multipliers in the KKT system of a variational inequality problem or of a constrained nonlinear programming problem; see [7].
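To make the contrast concrete, here is a small Python sketch of the two classical NCP functions mentioned above. It checks the defining property of Definition 1.2 on a few sample points and exhibits the kink of the min function on the line $a=b$. The function names are our own, chosen for illustration.

```python
import math

def phi_min(a: float, b: float) -> float:
    """min NCP function: zero exactly when a >= 0, b >= 0 and ab = 0."""
    return min(a, b)

def phi_fb(a: float, b: float) -> float:
    """Fischer-Burmeister NCP function sqrt(a^2 + b^2) - a - b (irrational)."""
    return math.hypot(a, b) - a - b

def is_ncp_pair(a: float, b: float) -> bool:
    """Definition 1.1: a >= 0, b >= 0 and ab = 0."""
    return a >= 0 and b >= 0 and a * b == 0

samples = [(0.0, 0.0), (0.0, 2.5), (3.0, 0.0),          # NCP pairs
           (1.0, 1.0), (-1.0, 0.0), (0.0, -2.0), (-1.0, -1.0)]

for a, b in samples:
    # Both functions should vanish exactly on the NCP pairs (Definition 1.2).
    print(f"({a:5.1f}, {b:5.1f})  pair={is_ncp_pair(a, b)!s:5}  "
          f"min={phi_min(a, b):6.3f}  FB={phi_fb(a, b):6.3f}")

# The min function has a kink on the line a = b: the one-sided slopes in a differ.
h = 1e-6
left = (phi_min(1.0, 1.0) - phi_min(1.0 - h, 1.0)) / h    # slope from the left
right = (phi_min(1.0 + h, 1.0) - phi_min(1.0, 1.0)) / h   # slope from the right
print("one-sided slopes of min at (1, 1):", left, right)
```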

In this paper, we present a new class of piecewise linear NCP functions $\psi$. The 3-piecewise linear NCP function is

$$\psi(a,b)=\begin{cases}3a-a^2/b, & \text{if } b\ge a>0, \text{ or } 3b>-a\ge 0,\\ 3b-b^2/a, & \text{if } a>b>0, \text{ or } 3a>-b\ge 0,\\ 9a+9b, & \text{if } 0\ge a \text{ and } -a\ge 3b, \text{ or } 0\ge b \text{ and } -b\ge 3a.\end{cases} \tag{4}$$

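If $(a,b)\ne(0,0)$, then

$$\nabla\psi(a,b)=\begin{cases}\begin{pmatrix}3-2a/b\\ a^2/b^2\end{pmatrix}, & \text{if } b\ge a>0, \text{ or } 3b>-a\ge 0,\\[2ex] \begin{pmatrix}b^2/a^2\\ 3-2b/a\end{pmatrix}, & \text{if } a>b>0, \text{ or } 3a>-b\ge 0,\\[2ex] \begin{pmatrix}9\\ 9\end{pmatrix}, & \text{if } 0\ge a \text{ and } -a\ge 3b, \text{ or } 0\ge b \text{ and } -b\ge 3a,\end{cases} \tag{5}$$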

and

$$A_\psi=\partial_B\psi(0,0)=\left\{\begin{pmatrix}3-2t\\ t^2\end{pmatrix}: -3\le t\le 1\right\}\cup\left\{\begin{pmatrix}t^2\\ 3-2t\end{pmatrix}: -3\le t\le 1\right\}. \tag{6}$$
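The 3-piecewise function (4) and its gradient (5) are easy to code. The Python sketch below is a direct transcription (the names psi3, grad_psi3 and fd_grad are ours). Its third branch is simply "all remaining points", so the three pieces cover the plane without overlap. The sketch compares (5) with central finite differences at a few points, including the boundary $a=b$, where $\psi$ is continuously differentiable.

```python
def psi3(a: float, b: float) -> float:
    """3-piecewise NCP function psi(a, b) from (4)."""
    if (b >= a > 0) or (3 * b > -a >= 0):
        return 3 * a - a * a / b
    if (a > b > 0) or (3 * a > -b >= 0):
        return 3 * b - b * b / a
    # Remaining region (it contains the origin, where psi = 0).
    return 9 * a + 9 * b

def grad_psi3(a: float, b: float) -> tuple[float, float]:
    """Gradient (5); only defined for (a, b) != (0, 0)."""
    if a == 0 and b == 0:
        raise ValueError("psi is not differentiable at the origin; see (6)")
    if (b >= a > 0) or (3 * b > -a >= 0):
        t = a / b
        return 3 - 2 * t, t * t
    if (a > b > 0) or (3 * a > -b >= 0):
        t = b / a
        return t * t, 3 - 2 * t
    return 9.0, 9.0

def fd_grad(f, a: float, b: float, h: float = 1e-6) -> tuple[float, float]:
    """Central finite-difference gradient, used only as a check."""
    return ((f(a + h, b) - f(a - h, b)) / (2 * h),
            (f(a, b + h) - f(a, b - h)) / (2 * h))

points = [(1.0, 2.0), (2.0, 1.0), (0.5, 0.5), (-1.0, 1.0), (1.0, -1.0), (-1.0, -1.0)]
for a, b in points:
    print((a, b), psi3(a, b), grad_psi3(a, b), fd_grad(psi3, a, b))
```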

The 4-piecewise linear NCP function $\psi$ is as follows: $\psi(0,0)=0$ and

$$\psi(a,b)=\begin{cases}k^2a, & \text{if } b>k|a|,\\ 2kb-b^2/a, & \text{if } a\ge |b|/k \text{ and } a>0,\\ 2k^2a+2kb+b^2/a, & \text{if } a\le -|b|/k \text{ and } a<0,\\ k^2a+4kb, & \text{if } b<-k|a|.\end{cases} \tag{7}$$

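If $(a,b)\ne(0,0)$, then

$$\nabla\psi(a,b)=\begin{cases}\begin{pmatrix}k^2\\ 0\end{pmatrix}, & \text{if } b>k|a|,\\[2ex] \begin{pmatrix}b^2/a^2\\ 2k-2b/a\end{pmatrix}, & \text{if } a\ge |b|/k \text{ and } a>0,\\[2ex] \begin{pmatrix}2k^2-b^2/a^2\\ 2k+2b/a\end{pmatrix}, & \text{if } a\le -|b|/k \text{ and } a<0,\\[2ex] \begin{pmatrix}k^2\\ 4k\end{pmatrix}, & \text{if } b<-k|a|,\end{cases} \tag{8}$$

and

$$A_\psi=\partial_B\psi(0,0)=\left\{\begin{pmatrix}k^2t^2\\ 2k-2kt\end{pmatrix}: |t|\le 1\right\}\cup\left\{\begin{pmatrix}(2-t^2)k^2\\ 2k-2kt\end{pmatrix}: |t|\le 1\right\}. \tag{9}$$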
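A similar transcription of the 4-piecewise function (7) and its gradient (8) can be used to confirm numerically that the pieces join continuously along the rays $b=\pm k|a|$. Here the parameter $k$ is passed explicitly, and $k=2$ below is an arbitrary choice. The names psi4 and grad_psi4 are ours.

```python
def psi4(a: float, b: float, k: float) -> float:
    """4-piecewise NCP function (7); psi(0, 0) = 0."""
    if a == 0 and b == 0:
        return 0.0
    if b > k * abs(a):
        return k * k * a
    if a > 0 and a >= abs(b) / k:
        return 2 * k * b - b * b / a
    if a < 0 and a <= -abs(b) / k:
        return 2 * k * k * a + 2 * k * b + b * b / a
    return k * k * a + 4 * k * b          # remaining case: b < -k|a|

def grad_psi4(a: float, b: float, k: float) -> tuple[float, float]:
    """Gradient (8); only defined for (a, b) != (0, 0)."""
    if a == 0 and b == 0:
        raise ValueError("psi is not differentiable at the origin; see (9)")
    if b > k * abs(a):
        return k * k, 0.0
    if a > 0 and a >= abs(b) / k:
        r = b / a
        return r * r, 2 * k - 2 * r
    if a < 0 and a <= -abs(b) / k:
        r = b / a
        return 2 * k * k - r * r, 2 * k + 2 * r
    return k * k, 4 * k

k = 2.0
eps = 1e-9
# Evaluate just above and just below the four boundary rays b = +-k|a| (a = +-1).
jumps = [abs(psi4(a, s * k * abs(a) + eps, k) - psi4(a, s * k * abs(a) - eps, k))
         for a in (1.0, -1.0) for s in (1.0, -1.0)]
print("largest jump across region boundaries:", max(jumps))
```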

In this paper, we use the 3-piecewise linear NCP function ψ(a, b).

It is easy to check the following proposition.

Proposition 1.1. The function $\psi$ in (4) has the following properties.

1. $\psi(a,b)=0\iff a\ge 0,\ b\ge 0,\ ab=0$;

2. $\psi^2$ is continuously differentiable;

3. $\psi$ is continuously differentiable everywhere except at the origin (and twice continuously differentiable in the interior of each piece); it is strongly semismooth at the origin and is a pseudo-smooth NCP function;

4. for any $(\alpha,\beta)\in\partial_B\psi(a,b)$, whether $(a,b)\ne(0,0)$ or $(a,b)=(0,0)$, we have $\alpha^2+\beta^2\ge 1>0$.
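Item 4 can be verified directly from (5) and (6). Write $t=a/b$ in the first piece of (5) and $t=b/a$ in the second. The defining inequalities then give $t\in(0,1]$ when $b\ge a>0$ or $a>b>0$, and $t\in(-3,0]$ in the remaining parts of those two pieces. The constant gradient $(9,9)^T$ corresponds to $t=-3$. So every $(\alpha,\beta)\in\partial_B\psi(a,b)$, at the origin or elsewhere, equals $(3-2t,t^2)$ or $(t^2,3-2t)$ for some $t\in[-3,1]$, and

$$\alpha^2+\beta^2=(3-2t)^2+t^4\ \ge\ (3-2t)^2\ \ge\ 1,\qquad\text{because } 3-2t\ge 1 \text{ for } t\le 1.$$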

To construct the semismooth equation $\Phi(x,\Lambda)=0$, we reformulate the KKT conditions (3) equivalently as follows. Let $\Phi(x,\Lambda)=(\phi_1(x,\Lambda),\dots,\phi_{n+m}(x,\Lambda))^T$, where $\Lambda=(\lambda_1,\dots,\lambda_m)^T$,

$$\phi_i(x,\Lambda)=\nabla_{x_i}L(x,\Lambda),\quad 1\le i\le n,$$

and

$$\phi_i(x,\Lambda)=\psi(-g_j(x),\lambda_j),\quad n+1\le i=n+j\le n+m.$$

If $1\le i\le n$, or if $(g_j(x),\lambda_j)\ne(0,0)$ and $n+1\le i=j+n\le n+m$, then $\phi_i(x,\Lambda)$ is continuously differentiable at $(x,\Lambda)\in\mathbb{R}^{n+m}$.

If $(g_j(x),\lambda_j)=(0,0)$ and $n+1\le i=j+n\le n+m$, then $\phi_i(x,\Lambda)$ is strongly semismooth at $(x,\Lambda)\in\mathbb{R}^{n+m}$.

Given any $s\in\mathbb{R}^{n+m}$, $s\ne 0$, there is a directional derivative $\phi_i'((x,\Lambda);s)$ of $\phi_i(x,\Lambda)$ at $(x,\Lambda)$ in the direction $s$ such that, for $\alpha>0$,

$$\phi_i((x,\Lambda)+\alpha s)=\phi_i(x,\Lambda)+\alpha\,\phi_i'((x,\Lambda);s)+o(\alpha). \tag{10}$$

If we use piecewise linear NCP functions, the generalized Newton direction $d^k$ is a descent direction of the merit function $\Theta_k=\|\Phi(z^k)\|^2$ at the current iterate $z^k=(x^k,\Lambda^k)$.
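The descent property follows from a one-line computation. This sketch assumes that $f$ and $g_i$ are twice continuously differentiable. It also assumes that $d^k$ solves the generalized Newton equation $V_kd^k=-\Phi(z^k)$ for some nonsingular $V_k\in\partial_B\Phi(z^k)$; this defining equation is our assumption, since the text does not state it explicitly. By Proposition 1.1(2), $\Theta$ is continuously differentiable. Because $\psi$ can fail to be differentiable only where it vanishes, $\nabla\Theta(z)=2V^T\Phi(z)$ for every $V\in\partial_B\Phi(z)$. Hence

$$\nabla\Theta(z^k)^Td^k=2\,\Phi(z^k)^TV_kd^k=-2\,\|\Phi(z^k)\|^2=-2\,\Theta_k<0\qquad\text{whenever }\Phi(z^k)\ne 0.$$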

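We now take $\psi$ to be the NCP function (4). In this case, for $n+1\le i=j+n\le n+m$ and $(-g_j(x),\lambda_j)\ne(0,0)$, we have

$$\nabla\phi_i=\begin{cases}\begin{pmatrix}-(3+2g_j(x)/\lambda_j)\nabla g_j(x)\\ (g_j(x)/\lambda_j)^2e_j\end{pmatrix}, & \text{if } \lambda_j\ge -g_j(x)>0, \text{ or } 3\lambda_j>g_j(x)\ge 0,\\[2ex] \begin{pmatrix}-(\lambda_j/g_j(x))^2\nabla g_j(x)\\ (3+2\lambda_j/g_j(x))e_j\end{pmatrix}, & \text{if } -g_j(x)>\lambda_j>0, \text{ or } -3g_j(x)>-\lambda_j\ge 0,\\[2ex] \begin{pmatrix}-9\nabla g_j(x)\\ 9e_j\end{pmatrix}, & \text{if } 0\ge -g_j(x) \text{ and } g_j(x)\ge 3\lambda_j, \text{ or } 0\ge\lambda_j \text{ and } -\lambda_j\ge -3g_j(x),\end{cases} \tag{11}$$

where $e_j=(0,\dots,0,1,0,\dots,0)^T\in\mathbb{R}^m$ is the $j$th column of the $m\times m$ identity matrix, i.e., its $j$th element is 1 and all other elements are 0.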

If $g_j(x)=0$, $\lambda_j=0$ and $n+1\le i=j+n\le n+m$, then $\phi_i(x,\Lambda)$ is semismooth and directionally differentiable at $(x,\Lambda)$. By (6), we have

$$\partial_B\phi_i(x,\Lambda)=\left\{\begin{pmatrix}-(3-2t)\nabla g_j(x)\\ t^2e_j\end{pmatrix}: -3\le t\le 1\right\}\cup\left\{\begin{pmatrix}-t^2\nabla g_j(x)\\ (3-2t)e_j\end{pmatrix}: -3\le t\le 1\right\}. \tag{12}$$

We define the index sets $I_0$ and $I_1$ as follows:

$$I_1(x,\Lambda)=\{j\mid (g_j(x),\lambda_j)\ne(0,0)\},\qquad I_0(x,\Lambda)=\{j\mid (g_j(x),\lambda_j)=(0,0)\}. \tag{13}$$

The paper is organized as follows. In Section 2 we propose a QP-free feasible method. In Section 3 we show that the algorithm is well defined. In Sections 4 and 5 we discuss the conditions for global convergence and for superlinear convergence of the algorithm, respectively. In Section 6 we give a brief discussion and some numerical tests.

§2 Algorithm

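Let $(\xi_j(x,\mu),\gamma_j(x,\mu))=(-1,1)$ if $j\in I_0$; otherwise let

$$(\xi_j(x,\mu),\gamma_j(x,\mu))=\left(-\frac{\partial\psi}{\partial a}(a,b),\ \frac{\partial\psi}{\partial b}(a,b)\right)\Big|_{a=-g_j(x),\,b=\mu_j},$$

that is,

$$\xi_j(x,\mu)=\begin{cases}-(3+2g_j(x)/\mu_j), & \text{if } \mu_j\ge -g_j(x)>0, \text{ or } 3\mu_j>g_j(x)\ge 0,\\ -(\mu_j/g_j(x))^2, & \text{if } -g_j(x)>\mu_j>0, \text{ or } -3g_j(x)>-\mu_j\ge 0,\\ -9, & \text{if } 0\ge -g_j(x) \text{ and } g_j(x)\ge 3\mu_j, \text{ or } 0\ge\mu_j \text{ and } -\mu_j\ge -3g_j(x),\end{cases} \tag{14}$$

and

$$\gamma_j(x,\mu)=\begin{cases}(g_j(x)/\mu_j)^2, & \text{if } \mu_j\ge -g_j(x)>0, \text{ or } 3\mu_j>g_j(x)\ge 0,\\ 3+2\mu_j/g_j(x), & \text{if } -g_j(x)>\mu_j>0, \text{ or } -3g_j(x)>-\mu_j\ge 0,\\ 9, & \text{if } 0\ge -g_j(x) \text{ and } g_j(x)\ge 3\mu_j, \text{ or } 0\ge\mu_j \text{ and } -\mu_j\ge -3g_j(x).\end{cases} \tag{15}$$

At strictly feasible points with positive multiplier estimates ($g_j(x)<0$, $\mu_j>0$), we have $\xi_j(x,\mu)<0$ and $\gamma_j(x,\mu)>0$.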

In the following algorithm, let $\xi_j^k=\xi_j(x^k,\mu^k)$, $\gamma_j^k=\gamma_j(x^k,\mu^k)$ and $\eta_j^k=\sqrt{2\gamma_j^k}$,

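$$V^k=\begin{pmatrix}V_{11}^k & V_{12}^k\\ V_{21}^k & V_{22}^k\end{pmatrix}=\begin{pmatrix}H^k+c_1^kI_n & \nabla G^k\\ \mathrm{diag}(\xi^k)(\nabla G^k)^T & \mathrm{diag}(\eta^k)\end{pmatrix}, \tag{16}$$

where $I_n$ is the identity matrix of order $n$, $c_1^k=c_1\min\{1,\|\bar\Phi^k\|^\nu\}$, $\bar\Phi^k=\Phi(x^k,\bar\lambda^k)$, $\bar\lambda^k$ is obtained in Algorithm 2.1, and $c_1\in(0,1)$. Here $\mathrm{diag}(\xi^k)$ (respectively $\mathrm{diag}(\eta^k)$) denotes the diagonal matrix whose $j$th diagonal element is $\xi_j(x^k,\mu^k)$ (respectively $\eta_j(x^k,\mu^k)$).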
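As an illustration of (14) to (16), the Python sketch below evaluates $\xi_j$ and $\gamma_j$ from $g_j(x)$ and $\mu_j$ and assembles $V^k$ with NumPy. It takes $\eta^k$ as an input. The usage example forms $\eta^k$ as sqrt(2*gamma), which is our reading of the definition of $\eta_j^k$; change that line if a different scaling is intended. The helper names (xi_gamma, assemble_V) and the random data are hypothetical.

```python
import numpy as np

def xi_gamma(g: np.ndarray, mu: np.ndarray) -> tuple[np.ndarray, np.ndarray]:
    """Evaluate xi_j and gamma_j of (14)-(15) with a = -g_j(x), b = mu_j."""
    xi = np.empty(g.shape, dtype=float)
    gamma = np.empty(g.shape, dtype=float)
    for j, (gj, mj) in enumerate(zip(g, mu)):
        a, b = -float(gj), float(mj)
        if a == 0.0 and b == 0.0:                  # j in I_0
            xi[j], gamma[j] = -1.0, 1.0
        elif (b >= a > 0) or (3 * b > -a >= 0):    # first piece of (4)
            xi[j], gamma[j] = -(3 - 2 * a / b), (a / b) ** 2
        elif (a > b > 0) or (3 * a > -b >= 0):     # second piece of (4)
            xi[j], gamma[j] = -(b / a) ** 2, 3 - 2 * b / a
        else:                                      # remaining points: third piece
            xi[j], gamma[j] = -9.0, 9.0
    return xi, gamma

def assemble_V(H, c1k, gradG, xi, eta):
    """Block matrix (16); gradG is n x m with columns grad g_j(x^k)."""
    n = H.shape[0]
    return np.block([
        [H + c1k * np.eye(n), gradG],
        [np.diag(xi) @ gradG.T, np.diag(eta)],
    ])

# Hypothetical data: n = 3 variables, m = 2 strictly feasible constraints.
rng = np.random.default_rng(1)
H = np.eye(3)
gradG = rng.standard_normal((3, 2))
g = np.array([-0.5, -2.0])          # g_j(x^k) < 0 (strict feasibility)
mu = np.array([1.0, 0.5])           # mu_j^k > 0
phi_bar_norm, c1, nu = 0.3, 0.1, 2.5
c1k = c1 * min(1.0, phi_bar_norm ** nu)
xi, gamma = xi_gamma(g, mu)
eta = np.sqrt(2.0 * gamma)          # assumed reading of eta_j^k
V = assemble_V(H, c1k, gradG, xi, eta)
print(xi, gamma, np.linalg.cond(V))
```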

Algorithm 2.1

Step 0. Initialization.

Choose an initial point $(x^0,\mu^0)$ with $x^0\in D$ and $\mu^0\ge 0$, and set $\bar\lambda^0=\mu^0$. Choose $c_1\in(0,1)$, $\tau\in(0,1)$, $\nu>1$, $\bar\mu\ge\mu^0>0$, $\kappa\in(0,1)$, $\theta\in(0,1)$, and a symmetric positive definite matrix $H^0$. Denote $\nabla G^k=\nabla G(x^k)$, $V^k=V(x^k,\mu^k)$, $f^k=f(x^k)$, and so on.

Step 1. Compute.

Compute $d^{k0}$ and $\lambda^{k0}$ by solving the following linear system in $(d,\lambda)$:

$$V^k\begin{pmatrix}d\\ \lambda\end{pmatrix}=\begin{pmatrix}-\nabla f^k\\ 0\end{pmatrix}. \tag{17}$$

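If $d^{k0}=0$, then stop. Otherwise, compute $d^{k1}$ and $\lambda^{k1}$ by solving the following linear system in $(d,\lambda)$:

$$V^k\begin{pmatrix}d\\ \lambda\end{pmatrix}=\begin{pmatrix}-\nabla f^k\\ \mathrm{diag}(\xi^k)(\lambda^{k0}_-)^3\end{pmatrix}, \tag{18}$$

where $\lambda^{k0}_-=\min\{\lambda^{k0},0\}$; the minimum and the cube are taken componentwise. Then compute $d^{k2}$ and $\lambda^{k2}$ by solving the following linear system in $(d,\lambda)$:

$$V^k\begin{pmatrix}d\\ \lambda\end{pmatrix}=\begin{pmatrix}-\nabla f^k\\ \mathrm{diag}(\xi^k)(\lambda^{k0}_-)^3+\|d^{k1}\|^\nu\,\mathrm{diag}(\xi^k)e\end{pmatrix}, \tag{19}$$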

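where $e=(1,\dots,1)^T\in\mathbb{R}^m$. Let

$$\begin{pmatrix}d^k\\ \lambda^k\end{pmatrix}=b^k\begin{pmatrix}d^{k1}\\ \lambda^{k1}\end{pmatrix}+\rho^k\begin{pmatrix}d^{k2}\\ \lambda^{k2}\end{pmatrix}, \tag{20}$$

where $b^k=1-\rho^k$ and

$$\rho^k=\frac{(\theta-1)(d^{k1})^T\nabla f^k}{1+\left|\sum_{j=1}^m\lambda_j^{k0}\right|\|d^{k1}\|^\nu}. \tag{21}$$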
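The way (17) to (21) combine into $d^k$ can be sketched as follows. This is a minimal NumPy illustration, not the authors' code. It reuses one explicit matrix $V^k$ for all three systems (a practical code would factor $V^k$ once), and minima and cubes act componentwise as in (18). The function name search_direction and the random data in the usage example are hypothetical.

```python
import numpy as np

def search_direction(V, grad_f, xi, nu=2.5, theta=0.8):
    """Return d^{k0}, lambda^{k0}, d^{k1} and the combined (d^k, lambda^k) of (20)."""
    n, m = grad_f.size, xi.size
    top = -grad_f
    # (17): right-hand side (-grad f, 0)
    sol0 = np.linalg.solve(V, np.concatenate([top, np.zeros(m)]))
    d0, lam0 = sol0[:n], sol0[n:]
    # (18): second block diag(xi) (lambda^{k0}_-)^3
    rhs1 = xi * np.minimum(lam0, 0.0) ** 3
    sol1 = np.linalg.solve(V, np.concatenate([top, rhs1]))
    d1, lam1 = sol1[:n], sol1[n:]
    # (19): add ||d^{k1}||^nu diag(xi) e
    w = np.linalg.norm(d1) ** nu
    sol2 = np.linalg.solve(V, np.concatenate([top, rhs1 + w * xi]))
    d2, lam2 = sol2[:n], sol2[n:]
    # (21), then the convex-type combination (20)
    rho = (theta - 1.0) * (d1 @ grad_f) / (1.0 + abs(lam0.sum()) * w)
    d = (1.0 - rho) * d1 + rho * d2
    lam = (1.0 - rho) * lam1 + rho * lam2
    return d0, lam0, d1, d, lam

# Hypothetical data with the sign pattern of Lemma 3.1 (xi < 0, eta > 0).
rng = np.random.default_rng(2)
n, m = 4, 3
B = rng.standard_normal((n, n))
H = B @ B.T + np.eye(n)
gradG = rng.standard_normal((n, m))
xi, eta = -rng.uniform(0.5, 2.0, m), rng.uniform(0.5, 2.0, m)
V = np.block([[H + 0.01 * np.eye(n), gradG], [np.diag(xi) @ gradG.T, np.diag(eta)]])
grad_f = rng.standard_normal(n)
d0, lam0, d1, d, lam = search_direction(V, grad_f, xi)
print(d0 @ grad_f, d1 @ grad_f, d @ grad_f)   # all negative by Lemma 3.3
```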

Compute a correction $\hat d^k$, the solution of the following least squares problem in $d$:

$$\min\ d^TH^kd\quad\text{s.t.}\quad g_i(x^k+d^k)+d^T\nabla g_i^k=-\psi^k\quad\text{for all } i\in I_k, \tag{22}$$

where $I_k=\{i\mid g_i^k\ge-\lambda_i^k\}$ and

$$\psi^k=\max\left\{\|d^k\|^\nu,\ \max_{i\in I_k}\left|\frac{\xi_i^k}{-\eta_i^k\lambda_i^k}-1\right|^\kappa\|d^k\|^2\right\}. \tag{23}$$

If (22) has no solution or if $\|\hat d^k\|\ge\|d^k\|$, set $\hat d^k=0$.

Step 2. Line search.

Let $t_k=\tau^j$, where $j$ is the smallest nonnegative integer such that

$$f(x^k+t_kd^k+t_k^2\hat d^k)\le f^k+\theta t_k(d^k)^T\nabla f^k \tag{24}$$

and

$$g_i(x^k+t_kd^k+t_k^2\hat d^k)<0,\quad 1\le i\le m. \tag{25}$$
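A minimal sketch of the backtracking rule (24) and (25) follows. It assumes the objective and constraints are available as Python callables f and G, where G returns the vector of $g_i$ values. The names and the toy problem are hypothetical. The max_iter argument only guards against an infinite loop, since Lemma 3.4 guarantees termination when $d^{k0}\ne 0$.

```python
import numpy as np

def feasible_line_search(f, G, x, d, d_hat, grad_f, theta=0.8, tau=0.5, max_iter=60):
    """Return t_k = tau^j and the point x + t d + t^2 d_hat satisfying (24)-(25)."""
    fx = f(x)
    slope = float(d @ grad_f)            # (d^k)^T grad f^k, negative by Lemma 3.3
    t = 1.0
    for _ in range(max_iter):
        trial = x + t * d + t * t * d_hat
        sufficient_decrease = f(trial) <= fx + theta * t * slope   # (24)
        strictly_feasible = bool(np.all(G(trial) < 0))             # (25)
        if sufficient_decrease and strictly_feasible:
            return t, trial
        t *= tau
    raise RuntimeError("no acceptable step size found")

# Toy problem: min (x1 - 2)^2 + x2^2  s.t.  x1 - 1 <= 0, started at the origin.
def f(x):
    return (x[0] - 2.0) ** 2 + x[1] ** 2

def G(x):
    return np.array([x[0] - 1.0])

x = np.zeros(2)
grad_f = np.array([-4.0, 0.0])
d = np.array([2.0, 0.0])                 # a descent direction at x
t, x_next = feasible_line_search(f, G, x, d, np.zeros(2), grad_f)
print(t, x_next)
```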

Step 3. Update.

Set $x^{k+1}=x^k+t_kd^k+t_k^2\hat d^k$, $\bar\lambda^{k+1}=\min\{\lambda^{k0},\bar\mu e\}$, $\bar\Phi^{k+1}=\Phi(x^{k+1},\bar\lambda^{k+1})$ and $\mu^{k+1}=\min\{\max\{\lambda^{k0},\|d^k\|e\},\bar\mu e\}$. If $\bar\Phi^{k+1}=0$ or $\Phi(x^{k+1},\mu^{k+1})=0$, then stop. Otherwise, update $H^k$ to obtain a symmetric positive definite matrix $H^{k+1}$, set $k:=k+1$ and go to Step 1.


§3 Implementation of the algorithm

In this section, we assume that the following assumptions A1-A3 hold.

A1 The strictly feasible set $D$ is nonempty, and the level set $S=\{x\mid f(x)\le f(x^0),\ x\in\bar D\}$ is bounded.

A2 $f$ and $g_i$ are Lipschitz continuously differentiable, and for all $y,z\in\mathbb{R}^{n+m}$, $\|L(y)-L(z)\|\le c_2\|y-z\|$.

A3 $H^k$ is positive definite, and there exists a positive number $m_1$ such that $0<d^TH^kd\le m_1\|d\|^2$ for all $d\in\mathbb{R}^n$, $d\ne 0$.

Lemma 3.1. If $\bar\Phi^k\ne 0$, then $V^k$ is nonsingular. Furthermore, assume that $(x^*,\mu^*)$ is an accumulation point of $\{(x^k,\mu^k)\}$ with $(x^{k(i)},\mu^{k(i)})\to(x^*,\mu^*)$, $\bar\Phi^{k(i)}\to\Phi^*$ and $V^{k(i)}\to V^*$. If $\Phi^*\ne 0$, then $\|(V^{k(i)})^{-1}\|$ is bounded and $V^*$ is nonsingular.

Proof. Suppose $V^k\begin{pmatrix}u\\ v\end{pmatrix}=0$ for some $(u,v)\in\mathbb{R}^{n+m}$, where $u=(u_1,\dots,u_n)^T$ and $v=(v_1,\dots,v_m)^T$. Then we have

$$(H^k+c_1^kI_n)u+\nabla G^kv=0, \tag{26}$$

and

$$\mathrm{diag}(\xi^k)(\nabla G^k)^Tu+\mathrm{diag}(\eta^k)v=0. \tag{27}$$

Assume $\bar\Phi^k\ne 0$. Then $c_1^k\ne 0$. By the definitions of $\xi_j^k$ and $\eta_j^k$, we have $\xi_j^k<0$ and $\eta_j^k>0$, $j=1,2,\dots,m$. Thus $\mathrm{diag}(\eta^k)$ is nonsingular, and

$$v=-(\mathrm{diag}(\eta^k))^{-1}\mathrm{diag}(\xi^k)(\nabla G^k)^Tu. \tag{28}$$

Substituting (28) into (26) and multiplying by $u^T$, we have

$$u^T(H^k+c_1^kI_n)u-u^T\nabla G^k\mathrm{diag}(\xi^k)(\mathrm{diag}(\eta^k))^{-1}(\nabla G^k)^Tu=0. \tag{29}$$

Since $H^k+c_1^kI_n$ is positive definite and $-\nabla G^k\mathrm{diag}(\xi^k)(\mathrm{diag}(\eta^k))^{-1}(\nabla G^k)^T$ is positive semidefinite, (29) implies $u=0$. Then $v=0$ by (27). This proves the first part of the lemma.

On the other hand, without loss of generality we may assume that $c_1^{k(i)}\to c_1^*\ne 0$, $\mathrm{diag}(\xi^{k(i)})\to\mathrm{diag}(\xi^*)$, $\mathrm{diag}(\eta^{k(i)})\to\mathrm{diag}(\eta^*)$ and $H^{k(i)}\to H^*$. We know $\eta_j^*>0$ for all $j=1,2,\dots,m$. Since $H^{k(i)}\to H^*$, the matrix $H^*$ is positive semidefinite, so $H^*+c_1^*I_n$ is positive definite. Replacing the index $k$ by $*$ in the above proof, it is easy to check that $V^*$ is nonsingular. Since $V^{k(i)}\to V^*$ and $V^*$ is nonsingular, $\|(V^{k(i)})^{-1}\|$ is bounded. This proves the lemma.

If $\bar\Phi^k=0$ or $\Phi(x^k,\mu^k)=0$, then $(x^k,\bar\lambda^k)$ or $(x^k,\mu^k)$, respectively, is a KKT point of Problem (NLP). Without loss of generality, in the sequel we assume that $\bar\Phi^k\ne 0$ and $\Phi(x^k,\mu^k)\ne 0$ for all $k$.

Because $V^k$ is nonsingular, each of the systems (17) to (19) has a unique solution.

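Since $V^k$ is nonsingular, $A^k=(V^k)^{-1}$ exists. Let

$$A^k=\begin{pmatrix}H^k+c_1^kI_n & \nabla G^k\\ \mathrm{diag}(\xi^k)(\nabla G^k)^T & \mathrm{diag}(\eta^k)\end{pmatrix}^{-1}=\begin{pmatrix}A_{11}^k & A_{12}^k\\ A_{21}^k & A_{22}^k\end{pmatrix}. \tag{30}$$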


By direct calculation, we have

$$\begin{aligned}A_{11}^k&=(H^k+c_1^kI_n)^{-1}+(H^k+c_1^kI_n)^{-1}\nabla G^k(Q^k)^{-1}\mathrm{diag}(\xi^k)(\nabla G^k)^T(H^k+c_1^kI_n)^{-1},\\ A_{12}^k&=-(H^k+c_1^kI_n)^{-1}\nabla G^k(Q^k)^{-1},\\ A_{21}^k&=-(Q^k)^{-1}\mathrm{diag}(\xi^k)(\nabla G^k)^T(H^k+c_1^kI_n)^{-1},\\ A_{22}^k&=(Q^k)^{-1},\end{aligned}$$

where $Q^k=\mathrm{diag}(\eta^k)-\mathrm{diag}(\xi^k)(\nabla G^k)^T(H^k+c_1^kI_n)^{-1}\nabla G^k$.
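These formulas are the standard block inverse of $V^k$, in which $Q^k$ is the Schur complement of $H^k+c_1^kI_n$. A quick numerical check with NumPy on hypothetical random data confirms them; the data use a symmetric positive definite H, $\xi<0$ and $\eta>0$. The check also confirms the relation $A_{12}^k\mathrm{diag}(\xi^k)=(A_{21}^k)^T$ used in the proof of Lemma 3.3.

```python
import numpy as np

rng = np.random.default_rng(0)
n, m = 4, 3
B = rng.standard_normal((n, n))
H = B @ B.T + np.eye(n)                 # symmetric positive definite H^k
c1k = 0.05
gradG = rng.standard_normal((n, m))     # columns: grad g_j(x^k)
xi = -rng.uniform(0.5, 2.0, m)          # xi_j^k < 0
eta = rng.uniform(0.5, 2.0, m)          # eta_j^k > 0

M = H + c1k * np.eye(n)
C = np.diag(xi) @ gradG.T
V = np.block([[M, gradG], [C, np.diag(eta)]])

# Schur complement Q^k = diag(eta) - diag(xi) gradG^T M^{-1} gradG
M_inv = np.linalg.inv(M)
Q = np.diag(eta) - C @ M_inv @ gradG
Q_inv = np.linalg.inv(Q)
A11 = M_inv + M_inv @ gradG @ Q_inv @ C @ M_inv
A12 = -M_inv @ gradG @ Q_inv
A21 = -Q_inv @ C @ M_inv
A22 = Q_inv
A = np.block([[A11, A12], [A21, A22]])

print(np.abs(A @ V - np.eye(n + m)).max())       # inverse check, close to 0
print(np.abs(A12 @ np.diag(xi) - A21.T).max())   # relation (37), close to 0
```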

Lemma 3.2. If $\bar\Phi^k\ne 0$, then $d^{k0}=0$ if and only if $\nabla f(x^k)=0$. Moreover, $d^{k0}=0$ implies $\lambda^{k0}=0$, and $(x^k,\lambda^{k0})$ is then a KKT point of Problem (NLP).

Proof. If $\nabla f(x^k)=0$, then $d^{k0}=0$ and $\lambda^{k0}=0$ by (17). If $d^{k0}=0$, then (17) implies $\nabla G^k\lambda^{k0}=-\nabla f(x^k)$ and $\mathrm{diag}(\eta^k)\lambda^{k0}=0$. So $\lambda^{k0}=0$ and $\nabla f(x^k)=0$.

Without loss of generality, we assume in the remainder of this paper that the algorithm never terminates, i.e., $d^{k0}\ne 0$ for all $k$.

Lemma 3.3. If $d^{k0}\ne 0$, then

1. $c_1^k\|d^{k0}\|^2\le(d^{k0})^T(H^k+c_1^kI_n)d^{k0}\le-(d^{k0})^T\nabla f^k$;

2. $(d^{k1})^T\nabla f^k=(d^{k0})^T\nabla f^k-\sum_{i:\lambda_i^{k0}<0}(\lambda_i^{k0})^4$;

3. $(d^k)^T\nabla f^k\le\theta(d^{k1})^T\nabla f^k$.

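Proof. (17) implies

$$(H^k+c_1^kI_n)d^{k0}+\nabla G^k\lambda^{k0}=-\nabla f^k \tag{31}$$

and

$$\mathrm{diag}(\xi^k)(\nabla G^k)^Td^{k0}+\mathrm{diag}(\eta^k)\lambda^{k0}=0. \tag{32}$$

We have

$$\lambda^{k0}=-(\mathrm{diag}(\eta^k))^{-1}\mathrm{diag}(\xi^k)(\nabla G^k)^Td^{k0}. \tag{33}$$

Substituting (33) into (31), we have

$$\begin{aligned}-(d^{k0})^T\nabla f^k&=(d^{k0})^T\big((H^k+c_1^kI_n)d^{k0}+\nabla G^k\lambda^{k0}\big)\\ &=(d^{k0})^T(H^k+c_1^kI_n)d^{k0}-(d^{k0})^T\nabla G^k(\mathrm{diag}(\eta^k))^{-1}\mathrm{diag}(\xi^k)(\nabla G^k)^Td^{k0}.\end{aligned} \tag{34}$$

Since $(d^{k0})^T\nabla G^k(\mathrm{diag}(\eta^k))^{-1}\mathrm{diag}(\xi^k)(\nabla G^k)^Td^{k0}\le 0$, this implies

$$c_1^k\|d^{k0}\|^2\le(d^{k0})^T(H^k+c_1^kI_n)d^{k0}\le-(d^{k0})^T\nabla f^k. \tag{35}$$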

This proves the first part of the lemma. (17) and (30) imply

$$d^{k0}=-A_{11}^k\nabla f^k,\qquad\lambda^{k0}=-A_{21}^k\nabla f^k. \tag{36}$$


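The structure of these matrices implies

$$\begin{aligned}(Q^k)^{-1}\mathrm{diag}(\xi^k)&=\big((\mathrm{diag}(\xi^k))^{-1}Q^k\big)^{-1}\\ &=\big\{(\mathrm{diag}(\xi^k))^{-1}[\mathrm{diag}(\eta^k)-\mathrm{diag}(\xi^k)(\nabla G^k)^T(H^k+c_1^kI_n)^{-1}\nabla G^k]\big\}^{-1}\\ &=\big\{[\mathrm{diag}(\eta^k)-(\nabla G^k)^T(H^k+c_1^kI_n)^{-1}\nabla G^k\,\mathrm{diag}(\xi^k)](\mathrm{diag}(\xi^k))^{-1}\big\}^{-1}\\ &=\big[(Q^k)^T(\mathrm{diag}(\xi^k))^{-1}\big]^{-1}=\mathrm{diag}(\xi^k)((Q^k)^T)^{-1}.\end{aligned} \tag{37}$$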

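(18), (30) and (37) imply $A_{12}^k\mathrm{diag}(\xi^k)=(A_{21}^k)^T$. Using this relation and (36), we obtain

$$\begin{aligned}(d^{k1})^T\nabla f^k&=-(A_{11}^k\nabla f^k)^T\nabla f^k+\big[A_{12}^k\mathrm{diag}(\xi^k)(\lambda^{k0}_-)^3\big]^T\nabla f^k\\ &=(d^{k0})^T\nabla f^k+\big((\lambda^{k0}_-)^3\big)^TA_{21}^k\nabla f^k\\ &=(d^{k0})^T\nabla f^k-\sum_{i:\lambda_i^{k0}<0}(\lambda_i^{k0})^4.\end{aligned} \tag{38}$$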

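This proves the second part of the lemma. Finally, (19) to (21), (36) and (38) imply

$$(d^{k2}-d^{k1})^T\nabla f^k=\|d^{k1}\|^\nu\big[A_{12}^k\mathrm{diag}(\xi^k)e\big]^T\nabla f^k=-\|d^{k1}\|^\nu\sum_{j=1}^m\lambda_j^{k0}.$$

By parts 1 and 2, $(d^{k1})^T\nabla f^k<0$, so $\rho^k\ge 0$. By (21),

$$\rho^k\|d^{k1}\|^\nu\Big|\sum_{j=1}^m\lambda_j^{k0}\Big|\le\rho^k\Big(1+\Big|\sum_{j=1}^m\lambda_j^{k0}\Big|\|d^{k1}\|^\nu\Big)=(\theta-1)(d^{k1})^T\nabla f^k.$$

Therefore

$$(d^k)^T\nabla f^k=(1-\rho^k)(d^{k1})^T\nabla f^k+\rho^k(d^{k2})^T\nabla f^k\le\theta(d^{k1})^T\nabla f^k. \tag{39}$$

This proves the lemma.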
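Part 2 of Lemma 3.3 and the expression for $(d^{k2}-d^{k1})^T\nabla f^k$ can be checked numerically. The sketch below uses NumPy, hypothetical random data and names of our own choosing. It solves (17) to (19) directly and compares both sides. It uses $m=6$ constraints so that some components of $\lambda^{k0}$ are likely to be negative, although the identities hold either way.

```python
import numpy as np

rng = np.random.default_rng(3)
n, m, nu = 5, 6, 2.5
B = rng.standard_normal((n, n))
H = B @ B.T + np.eye(n)
gradG = rng.standard_normal((n, m))
xi, eta = -rng.uniform(0.5, 2.0, m), rng.uniform(0.5, 2.0, m)
V = np.block([[H + 0.01 * np.eye(n), gradG], [np.diag(xi) @ gradG.T, np.diag(eta)]])
grad_f = rng.standard_normal(n)

def solve(second_block):
    """Solve V (d, lambda) = (-grad_f, second_block) and split the solution."""
    sol = np.linalg.solve(V, np.concatenate([-grad_f, second_block]))
    return sol[:n], sol[n:]

d0, lam0 = solve(np.zeros(m))                          # (17)
rhs1 = xi * np.minimum(lam0, 0.0) ** 3
d1, _ = solve(rhs1)                                    # (18)
w = np.linalg.norm(d1) ** nu
d2, _ = solve(rhs1 + w * xi)                           # (19)

lhs2 = d1 @ grad_f
rhs2 = d0 @ grad_f - np.sum(lam0[lam0 < 0] ** 4)       # Lemma 3.3, part 2
lhs39 = (d2 - d1) @ grad_f
rhs39 = -w * lam0.sum()                                # uses lambda^{k0} = -A21 grad f
print(lhs2 - rhs2, lhs39 - rhs39)
```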

It is easy to prove the following lemma (see [5]).

Lemma 3.4. If $d^{k0}\ne 0$, then there is a $\bar t>0$ such that (24) and (25) are satisfied for all $t\in(0,\bar t)$.

Proof. If $d^{k0}\ne 0$, then by the continuous differentiability of $f$, we have

$$f^k-f(x^k+td^k+t^2\hat d^k)=-t(d^k)^T\nabla f^k+O(t^2). \tag{40}$$

By Lemma 3.3, $(d^k)^T\nabla f^k<0$. Together with $\theta<1$, (40), $g_i^k<0$ and the continuous differentiability of $g_i$, this implies that there is a $\bar t>0$ such that (24) and (25) are satisfied for any $0<t\le\bar t$. This proves the lemma.

Lemmas 3.1 to 3.4 show that Algorithm 2.1 is well defined and can be implemented.

§4 Convergence

In this section we assume that assumptions A1-A3 hold.

Lemma 4.1. Assume $x^{k(i)}\to x^*$ and $\|\bar\Phi^{k(i)}\|>\varepsilon>0$ for some $\varepsilon$. Then the sequences $\{(d^{k(i)0},\lambda^{k(i)0})\}$, $\{(d^{k(i)1},\lambda^{k(i)1})\}$ and $\{(d^{k(i)2},\lambda^{k(i)2})\}$ are all bounded.

Proof. Under these assumptions, Lemma 3.1 shows that the matrix sequence $\{(V^{k(i)})^{-1}\}$ is uniformly bounded. Since $\{x^{k(i)}\}$ converges, $\{\nabla f(x^{k(i)})\}$ is bounded. The solvability of system (17) therefore means that $\{(d^{k(i)0},\lambda^{k(i)0})\}$ is bounded, which implies that the right-hand side of (18) is bounded. Hence $\{(d^{k(i)1},\lambda^{k(i)1})\}$ is also bounded.


Finally, the boundedness of $\{(d^{k(i)1},\lambda^{k(i)1})\}$ implies that the right-hand side of (19) is bounded. Hence $\{(d^{k(i)2},\lambda^{k(i)2})\}$ is also bounded.

Lemma 4.2. Assume $x^{k(i)}\to x^*$ and $\|\bar\Phi^{k(i)}\|>\varepsilon>0$ for some $\varepsilon$. Then there is a $c_3>0$ such that, for all $i$,

$$\|d^{k(i)}-d^{k(i)1}\|\le c_3\|d^{k(i)0}\|.$$

Proof. By Lemma 3.1, there exists a $c_3>0$ such that $c_3\ge 2m\rho^{k(i)}\|(V^{k(i)})^{-1}\|$ for all $i$. Let

$$\Delta d^{k(i)}=d^{k(i)}-d^{k(i)1},\qquad\Delta\lambda^{k(i)}=\lambda^{k(i)}-\lambda^{k(i)1}.$$

Then, by (18) to (20), $(\Delta d^{k(i)},\Delta\lambda^{k(i)})$ is the solution of

$$V^{k(i)}\begin{pmatrix}\Delta d^{k(i)}\\ \Delta\lambda^{k(i)}\end{pmatrix}=\begin{pmatrix}0\\ -\rho^{k(i)}\|d^{k(i)0}\|^\nu\mathrm{diag}(\xi^{k(i)})e\end{pmatrix}. \tag{41}$$

It is easy to see that

$$\|(\Delta d^{k(i)},\Delta\lambda^{k(i)})\|\le c_3\|d^{k(i)0}\|^\nu.$$

This proves the lemma.

Lemma 4.3. Assume $x^{k(i)}\to x^*$ and $\lambda^{k(i)}\to\lambda^*$.

1. If $d^{k(i)}\to 0$, then $\lambda_j^*\ge 0$ for any $1\le j\le m$.

2. If $d^{k(i)0}\to 0$, then $x^*$ is a KKT point of Problem (NLP).

3. If $d^{k(i)}\to 0$ and $\|\bar\Phi^{k(i)}\|>\varepsilon>0$ for some $\varepsilon$, then $x^*$ is a KKT point of Problem (NLP).

Proof. It follows from Lemma 3.3 that

$$(d^{k(i)})^T\nabla f^{k(i)}\le-c_1^{k(i)}\theta\|d^{k(i)0}\|^2-\theta\sum_{j:\lambda_j^{k(i)0}<0}(\lambda_j^{k(i)0})^4. \tag{42}$$

Hence $d^{k(i)}\to 0$ implies

$$\sum_{j:\lambda_j^{k(i)0}<0}(\lambda_j^{k(i)0})^4\to 0\quad\text{and}\quad\lambda_j^*\ge 0,\ 1\le j\le m. \tag{43}$$

This proves the first part of the lemma.

Because $\{\bar\lambda^{k(i)}\}\le\bar\mu e$ and $\{\mu^{k(i)}\}$ are bounded, $\{\bar\lambda^{k(i)}\}$ has an accumulation point $\bar\lambda^*$. Without loss of generality, we may assume that $c_1^{k(i)}\to c_1^*$, $\mu^{k(i)}\to\mu^*$ and $\bar\lambda^{k(i)}\to\bar\lambda^*$. (42) implies that $\lambda_i^*\ge 0$, $1\le i\le m$, for any accumulation point $\lambda^*$ of $\{\lambda^{k(i)}\}$. Taking limits on both sides of (17) and noting $d^{k(i)0}\to 0$, we obtain $\nabla G^*\lambda^*=-\nabla f^*$ and $\mathrm{diag}(\eta^*)\lambda^*=0$. If $-g_i^*>0$ for some $1\le i\le m$, then $\eta_i^*\ge\delta>0$ for some $\delta$, and hence $\lambda_i^*=0$. That is, $g_i^*\lambda_i^*=0$ for any $1\le i\le m$. This proves the second part of the lemma.

If $d^{k(i)}\to 0$ and $\|\bar\Phi^{k(i)}\|>\varepsilon>0$ for some $\varepsilon$, then $c_1^{k(i)}\ge c_1\min\{1,\varepsilon^\nu\}$ is bounded away from zero. So (42) implies $d^{k(i)0}\to 0$, and $x^*$ is a KKT point of Problem (NLP). This proves the lemma.

Using Lemma 4.3, the following lemma can be proved in the same way as Lemma 3.8 in [3].

Lemma 4.4. Assume $x^{k(i)}\to x^*$ and $\|\bar\Phi^{k(i)}\|>\varepsilon>0$ for some $\varepsilon$. If $d^{k(i)-1}\to 0$, then $(x^*,\lambda^*)$ is a KKT point of Problem (NLP), where $\lambda^*$ is an accumulation point of $\{\lambda^{k(i)}\}$.


The following result is the same as Lemma 3.6 of [5].

Lemma 4.5. Assume $x^{k(i)}\to x^*$ and $\|\bar\Phi^{k(i)}\|>\varepsilon>0$ for some $\varepsilon$. If $\liminf\|d^{k(i)-1}\|>0$, then $(x^*,\lambda^*)$ is a KKT point of Problem (NLP), where $\lambda^*$ is an accumulation point of $\{\lambda^{k(i)}\}$.

The following global convergence theorem holds.

Theorem 4.1. If $x^*$ is a limit point of $\{x^k\}$, then $x^*$ is a KKT point of Problem (NLP).

Proof. Assume $(x^{k(i)},\bar\lambda^{k(i)})\to(x^*,\bar\lambda^*)$. If $\bar\Phi^*=0$, then $(x^*,\bar\lambda^*)$ is a KKT point of Problem (NLP).

If $\bar\Phi^*\ne 0$, then $\{\lambda^{k(i)}\}$ is bounded by Lemma 4.1. It follows from Lemmas 4.2 to 4.5 that $(x^*,\lambda^*)$ is a KKT point, where $\lambda^*$ is an accumulation point of $\{\lambda^{k(i)}\}$. This completes the proof.

§5 Superlinear convergence

Let $I_2(x,\lambda)=\{i\mid g_i(x)=0,\ \lambda_i>0\}$ and $X(x,\lambda)=\{d\mid d^T\nabla g_i(x)\ge 0,\ i\in I_2(x,\lambda)\}$.

We need the following concept and assumptions for superlinear convergence.

Definition 5.1. A point $(x,\lambda)$ is said to satisfy the strong second-order sufficient condition for Problem (NLP) if it satisfies the first-order KKT conditions (3) and $d^TVd>0$ for all $d\in X(x,\lambda)$, $d\ne 0$, and any $V\in\partial_B\Phi(x,\lambda)$.

Notice that the strong second-order sufficient condition implies that x is a strict local minimum of Problem (NLP) (see [8]).

A4 The gradients $\{\nabla g_i(x^*)\}$, $i\in I(x^*)=\{i\mid g_i(x^*)=0\}$, are linearly independent, where $x^*$ is an accumulation point of $\{x^k\}$ and also a KKT point of Problem (NLP).

A5 $H^k$ is uniformly positive definite, i.e., there exist two positive numbers $m_1$ and $m_2$ such that $0<m_2\|d\|^2\le d^TH^kd\le m_1\|d\|^2$ for all nonzero $d\in\mathbb{R}^n$ and all $k$.

A6 The strong second-order sufficient condition for Problem (NLP) holds at each KKT point $(x^*,\lambda^*)$.

A7 The strict complementarity condition holds at each KKT point $(x^*,\mu^*)$.

A8 The sequence $\{H^k\}$ satisfies

$$\frac{\|P^k(H^k-\nabla_x^2L(x^k,\mu^k))d^{k1}\|}{\|d^{k1}\|}\to 0, \tag{44}$$

where $P^k=I-N^k((N^k)^TN^k)^{-1}(N^k)^T$ and $N^k=(\nabla g_i^k)$, $i\in I^k=\{i\mid g_i^k=0\}$.

Assumption A7 implies that Φ is continuously differentiable at each KKT point (x∗ , µ∗ ).

Arguing as in the proof of Lemma 3.1, we obtain the following lemma.

Lemma 5.1. Assume A4 and A5 hold. Then $\{\|(V^k)^{-1}\|\}$ and $\{\|(\hat V^k)^{-1}\|\}$ are bounded. Furthermore, if $V^*$ is an accumulation matrix of $\{V^k\}$, then $V^*$ is nonsingular.

Lemma 5.1 and the proof of Lemma 4.1 imply

Lemma 5.2. Assume A4 and A5 hold. The sequences of {(dk(i)0 , λk(i)0 )}, {(dk(i)1 , λk(i)1 )} and

{(dk(i)2 , λk(i)2 )} are all bounded on k = 0, 1, . . ..

A9 λk0 < µ̄ and λ̄k = λk0 for sufficiently large k.

It is easy to check that $\lim_{k\to\infty}\Phi(x^k,\bar\lambda^k)=\lim_{k\to\infty}\Phi(x^k,\lambda^{k0})=0$. Since $c_1^k\to 0$, (44) is equivalent to

$$\frac{\|P^k(H^k+c_1^kI_n-\nabla_x^2L(x^k,\mu^k))d^{k1}\|}{\|d^{k1}\|}\to 0. \tag{45}$$


Moreover, since $\nu>1$, we have $c_1^k/\|\bar\Phi^k\|\le c_1\|\bar\Phi^k\|^{\nu-1}\to 0$ as $k\to\infty$.

Theorem 5.1. Assume A1 to A3 and A6 hold. If $(x^*,\lambda^*)$ is an accumulation point of $\{(x^k,\lambda^{k0})\}$, then

1. $(x^*,\lambda^*)$ is a KKT point of Problem (NLP);

2. $(x^k,\lambda^{k0})\to(x^*,\lambda^*)$;

3. $d^{k0}\to 0$, $d^{k1}\to 0$ and $d^{k2}\to 0$.

The proof of Theorem 5.1 is the same as those of Theorem 3.7, Lemma 3.1 and Corollary 3.3 in [5].

Assume A1 and A3 to A9 hold. The proofs of the following Lemma 5.3 and Theorem 5.2 are the same as those of Lemma 4.6 and Theorem 4.9 in [5].

Lemma 5.3. For k large enough the step tk = 1 is accepted by the line search.

Theorem 5.2. Let Algorithm 2.1 generate a sequence $\{(x^k,\lambda^k)\}$, and let $(x^*,\lambda^*)$ be an accumulation point of $\{(x^k,\lambda^k)\}$. Then $(x^*,\lambda^*)$ is a KKT point of Problem (NLP), and $(x^k,\lambda^k)$ converges to $(x^*,\lambda^*)$ superlinearly.

§6 Discussion and numerical tests

In this section, we give some numerical results of Algorithm 2.1 for constrained optimization problems. The implementation details are as follows.

1. Termination criterion. We use the termination condition on $\Phi$ and $\varepsilon$. Because $\Phi(x,\mu)=0$ if and only if $(x,\mu)$ is a KKT point, the termination criterion is $\|\Phi^k\|\le 10^{-5}$.

2. Update of $H^k$. $H^k$ is updated by the BFGS method. In particular, we set

$$H^{k+1}=H^k-\frac{H^ks^k(s^k)^TH^k}{(s^k)^TH^ks^k}+\frac{y^k(y^k)^T}{(s^k)^Ty^k},$$

where

$$y^k=\begin{cases}\hat y^k, & \text{if } (s^k)^T\hat y^k\ge 0.2(s^k)^TH^ks^k,\\ \theta^k\hat y^k+(1-\theta^k)H^ks^k, & \text{otherwise},\end{cases}$$

and

$$\begin{aligned}s^k&=x^{k+1}-x^k,\\ \hat y^k&=\nabla f(x^{k+1})-\nabla f(x^k)+(\nabla G(x^{k+1})-\nabla G(x^k))\mu^k,\\ \theta^k&=0.8(s^k)^TH^ks^k/\big((s^k)^TH^ks^k-(s^k)^T\hat y^k\big).\end{aligned}$$

3. The test problems are chosen from [9].

4. The parameters are set as follows: $c_1=0.1$, $\tau=0.5$, $\nu>2$, $\bar\mu=10^5$, $\kappa=0.9$ and $\theta=0.8$.
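Item 2 is Powell's damped BFGS update. Below is a minimal NumPy sketch; it assumes $s^k$ and $\hat y^k$ have already been formed as in item 2, and the function name is ours. In the damped case, $(s^k)^Ty^k=0.2(s^k)^TH^ks^k>0$. Hence $H^{k+1}$ stays symmetric positive definite and satisfies the secant equation $H^{k+1}s^k=y^k$.

```python
import numpy as np

def damped_bfgs_update(H: np.ndarray, s: np.ndarray, y_hat: np.ndarray) -> np.ndarray:
    """Powell-damped BFGS update of item 2 (H symmetric positive definite, s != 0)."""
    Hs = H @ s
    sHs = float(s @ Hs)
    sy_hat = float(s @ y_hat)
    if sy_hat >= 0.2 * sHs:
        y = y_hat
    else:
        theta_k = 0.8 * sHs / (sHs - sy_hat)   # damping factor theta^k
        y = theta_k * y_hat + (1.0 - theta_k) * Hs
    return H - np.outer(Hs, Hs) / sHs + np.outer(y, y) / float(s @ y)

# Negative-curvature example: s^T y_hat < 0 triggers the damping branch.
H = np.eye(3)
s = np.array([1.0, 0.0, 0.0])
y_hat = np.array([-1.0, 0.0, 0.0])
H_next = damped_bfgs_update(H, s, y_hat)
print(np.linalg.eigvalsh(H_next))
```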

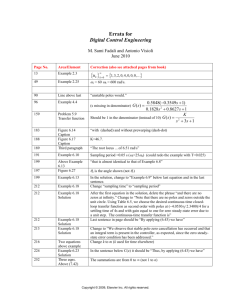

In Table 1, which presents the results of the numerical experiments, we use the following notation:

Feasible: the method in this paper,

Problem: the problem number in [9],

$x^0$: the starting vector,

It: the number of iterations,

$\|\Phi\|$: the value of $\|\Phi(\cdot)\|$ at the final iterate $(x^k,\mu^k)$,


ε: the value of ε at the final iteration (xk , µk ),

FV: the objective function value at the final iteration.

Table 1

Problem   x0                Feasible
                            It    ‖Φ‖         FV
4         (1.125, 0.125)    4     1.0e-08     2.6667e+00
5         (0, 0)            8     6.25e-06    -1.9132e+00
12        (0, 0)            21    4.25e-06    1.1132e+00
24        (1, 0.5)          26    2.25e-06    -1.1543e+00
30        (1, 1, 1)         21    9.05e-06    1.0000e+00
35        (0, 1.05, 2.9)    20    1.35e-06    1.1111e-01

The results in Table 1 indicate that this method is quite promising.

References

1 Ferris M C, Pang J S. Engineering and economic applications of complementarity problems, SIAM Review, 39 (1997), 669-713.

2 Harker P T, Pang J S. Finite-dimensional variational inequality and nonlinear complementarity problems: a survey of theory, algorithms and applications, Mathematical Programming, 48 (1990), 161-220.

3 Panier E R, Tits A L, Herskovits J N. A QP-free, globally convergent, locally superlinearly convergent method for inequality constrained optimization problems, SIAM Journal on Control and Optimization, 26 (1988), 788-811.

4 Pu D G, Zhou Y, Zhang H Y. A QP free feasible method, Journal of Computational Mathematics, 22 (2004), 651-660.

5 Qi H D, Qi L Q. A new QP-free, globally convergent, locally superlinearly convergent feasible method for the solution of inequality constrained optimization problems, SIAM Journal on Optimization, 11 (2000), 113-132.

6 Fischer A. A special Newton-type optimization method, Optimization, 24 (1992), 269-284.

7 Qi L Q, Jiang H. Semismooth Karush-Kuhn-Tucker equations and convergence analysis of Newton and quasi-Newton methods for solving these equations, Mathematics of Operations Research, 22 (1997), 301-325.

8 Qi L Q. Convergence analysis of some algorithms for solving nonsmooth equations, Mathematics of Operations Research, 18 (1993), 227-243.

9 Hock W, Schittkowski K. Test Examples for Nonlinear Programming Codes, Lecture Notes in Economics and Mathematical Systems 187, Berlin: Springer-Verlag, 1981.

1 Department of Applied Mathematics, Tongji University, Shanghai, 200092, China.

2 Department of Management Science and Engineering, Qingdao University, Qingdao, 266071, China.