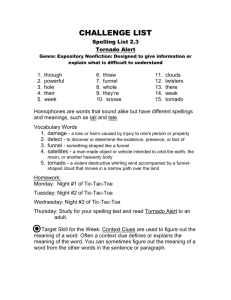


Ridin’ The Storm Out: Two Articles on Electronic Wind and Digital Water
Presented by: Chris (“CJ”) Janneck
11/12/01

Articles Discussed
- Accessing Multiple Mirror Sites in Parallel: Using Tornado Codes to Speed Up Downloads (Byers et al., 1999)
- A Digital Fountain Approach to Reliable Distribution of Bulk Data (Byers et al., 1998)
- We’ll talk about the Tornado Codes first.

Obstacles to Overcome
- Multicast is a technology to broadcast data to many users simultaneously
- We want to be able to download from multiple sites in parallel
- Presently, (good) mirroring works by selecting a lightly loaded, uncongested server, and all data is received from there
- Highly unreliable, and takes a fair amount of time
- Often, in these situations, feedback from the receiver is not available

How We Can Accomplish This
- Using a modification of “erasure codes”, a.k.a. Forward Error Correction codes
- An erasure code takes a file of k packets and encodes it into n packets such that the initial file can be reconstituted from any k-packet subset
- Tornado Codes are very similar to standard erasure codes
- They require slightly more than k packets to reconstitute the data

Inadequacy of a Basic Scheme (why this is no walk in the park)
- Ideally, a server broadcasts packets until a receiver gets k of them
- If there are problems, renegotiate
- Realistically, renegotiation is difficult
- That is, if renegotiation is even possible
- Several renegotiations may be necessary
- Different transmission rates/sources

Enter: the “Digital Fountain” Metaphor
- A constant flow of data is available, and any k packets received can be used to recreate the data
- Much like a fountain: it does not matter which drops of water are drunk to quench one’s thirst

Tornado Vocab
- “Stretch Factor”
- “Decoding Inefficiency”
- “Distinctness Inefficiency”
- “Reception Inefficiency”

Results of Tests

Figure 1 – Number of Senders
- As the number of senders increases, reception inefficiency (R.I.) and speedup/stretch increase

Figure 2 – Transmission Rate of Senders
- As the difference in transmission rates increases, R.I. and speedup decrease

Figure 3 – Transmission Rate and Number of Senders
- As the transmission rates equalize and the number of senders increases, R.I. and speedup increase

Figure 4 – Decoding Inefficiency
- The “average” decoding inefficiency is a good approximation of true behavior

That’s nice, but does it scale?
- Figure 5 – Number of Senders
- As number of senders grows exponentially from 100 to 100K, the reception inefficiency changes very little, with either 2, 3 or 4 senders

Possible Enhancements
- Synchronization of senders
- Guarantees, for some period of time, that no duplicates are sent
- Receiver requests start and end offset
- Doesn’t scale well: not good for many-to-many
- Integrate some level of renegotiation
- Reliability is not a major concern because only k*epsilon packets are needed

Conclusions
- “The variety of protocols and tunable parameters we describe demonstrates that one can develop a spectrum of solutions that trade off the necessary feedback, the bandwidth requirements, the download time, the memory requirements, and the complexity of the solution in various ways.”

Questions
- If multiple senders at the same time is the “worst-case” scenario, then why does it attain the best speedup?

The other article
A Digital Fountain Approach to Reliable Distribution of Bulk Data

Digital Fountain
- Definition (from the abstract): “A digital fountain allows any number of heterogeneous clients to acquire bulk data with optimal efficiency at times of their choosing.”

Problem Domain – What are we trying to fix? And why, if it ain’t broken?
- Distributing data to many recipients
- Multicast analogs of unicast protocols are not scalable
- They suffer from “feedback implosion”
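The “any k packets will do” property behind the fountain metaphor can be sketched with the simplest XOR-only erasure code there is: k source packets plus one parity packet, so n = k + 1. This is a toy single-parity code, not a Tornado code (Tornado codes cope with far more loss at the price of needing slightly more than k packets). The names `encode`, `decode` and `xor_bytes` are made up for this illustration, and all packets are assumed to be the same length.

```python
from __future__ import annotations

from functools import reduce


def xor_bytes(a: bytes, b: bytes) -> bytes:
    """Bytewise XOR of two equal-length packets."""
    return bytes(x ^ y for x, y in zip(a, b))


def encode(source: list[bytes]) -> list[bytes]:
    """Return the k source packets followed by one XOR parity packet (n = k + 1)."""
    return source + [reduce(xor_bytes, source)]


def decode(received: dict[int, bytes], k: int) -> list[bytes]:
    """Rebuild the k source packets from any k of the n = k + 1 encoded packets.

    `received` maps packet index (0..k, where index k is the parity) to payload.
    """
    if len(received) < k:
        raise ValueError("need at least k distinct packets")
    missing = [i for i in range(k) if i not in received]
    if missing:
        # At most one source packet can be missing, so the parity is present.
        # XOR-ing everything received cancels all but the missing packet.
        received = dict(received)
        received[missing[0]] = reduce(xor_bytes, received.values())
    return [received[i] for i in range(k)]


# Usage: lose any single packet in transit and still recover the file.
packets = [b"wind", b"rain", b"hail"]
encoded = encode(packets)
survivors = {i: p for i, p in enumerate(encoded) if i != 1}
assert decode(survivors, k=3) == packets
```

With a stretch factor this small the code tolerates only one lost packet; the point is only that the receiver never has to say which packet it lost.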
Remnants from the Other Side
- Use of a “data carousel” to ensure full reliability
- Limitation on lossy networks
- Use of “erasure codes”
- Some deficits of standard Reed-Solomon codes
- Therefore, use a digital fountain instead

Wanted: One Single, Reliable Protocol
Must be:
- Reliable: delivers stuff in its entirety
- Efficient: should not take much longer, nor require more effort, than others
- On Demand: able to handle recipients at any time, whenever they want it
- Tolerant: can take on some slower, some faster clients, and handle “swinging”

Tornado Review
- Tornado Codes use only exclusive-OR (XOR) operations
- Definitions used again:
  - “Stretch Factor”
  - Encoding/decoding times/overhead
  - Redundancy/overlap

Encoding/Decoding Times
- Tables 2 and 3 pretty much speak for themselves

Simulation/Comparison Tests
- What happens when reception efficiency is set comparable to Tornado codes?
- Table 4 shows the speedup of Tornado over interleaved codes
- What happens when decoding time is set comparable to Tornado codes?
- Figure 4 shows the reception efficiency of Tornado codes and interleaved codes

Implementation – Part I
- Used “layered” multicast techniques
- Congestion is simulated/controlled by “synchronization points” (SPs)
- Server generates periodic bursts
- Packets are sent in correspondence to the “One Level Property”
- Encoded data is sent in “rounds”

Implementation – Part II
- Server runs 2 threads:
  - Multicast transmission thread
  - UDP unicast: control information
- Client implements statistical approach – does not perform preliminary decoding before all packets arrive
- Servers/clients set up at 3 locations:
  - Berkeley
  - Carnegie Mellon University
  - Cornell University

Results
- Quite pleased with experimental results
- Efficiency under 100% at low loss levels (~13%) due to switching between subscription levels
- Distinctness efficiency could be raised by using larger stretch factors

Conclusions
- Could bring about new opportunities for reliable multicast protocols
- Other applications:
  - Enhanced mirrored data
  - Dispersity routing of data end-to-end in a packet-routing network

Questions – Round 2
- This team seems heavily concerned with “efficient” data transmission, but how does this compare (practically, time-wise) to traditional methods?
- Is the much faster decoding enough to overlook a little data duplication?
- What are some inherent concerns in widespread multicasting?
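The Results remark that larger stretch factors help with distinctness can be tried out with a small simulation. This is a rough sketch under assumptions that are mine, not the papers’: every sender transmits the same n = stretch × k encoded packets in its own random order, all senders run at the same rate, and the receiver stops once it holds (1 + overhead) × k distinct packets. Distinctness inefficiency is taken here to mean packets received divided by distinct packets received. The function name and its parameters are hypothetical.

```python
import math
import random


def distinctness_inefficiency(k, stretch, senders, decode_overhead=0.05, seed=0):
    """Estimate packets received per distinct packet for one simulated download."""
    rng = random.Random(seed)
    n = int(stretch * k)
    needed = math.ceil((1 + decode_overhead) * k)
    if needed > n:
        raise ValueError("stretch factor too small for the decoding overhead")

    # Each sender cycles through the n encoded packets in its own random order.
    orders = [rng.sample(range(n), n) for _ in range(senders)]

    seen = set()
    received = 0
    for step in range(n):
        for order in orders:  # equal rates: one packet per sender per step
            received += 1
            seen.add(order[step])
            if len(seen) >= needed:
                return received / len(seen)
    raise RuntimeError("unreachable: every packet has been sent by now")


# Usage: compare a small and a large stretch factor with four senders.
for stretch in (2, 8):
    ratio = distinctness_inefficiency(k=1000, stretch=stretch, senders=4)
    print(f"stretch {stretch}: distinctness inefficiency {ratio:.3f}")
```

With one sender there are no duplicates at all, so the ratio is exactly 1. With several senders, a larger encoding gives each sender more packets to draw from, so collisions between senders become rarer.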