
Frame-work in Learning theory

Dominique Picard

Laboratoire Probabilités et Modèles Aléatoires

Université Paris VII

Joint work with G. Kerkyacharian (LPMA)

Texas A&M, October 2007.

http://www.proba.jussieu.fr/mathdoc/preprints/index.html

Bounded regression/learning problem: model

1. $Y_i = f_\rho(X_i) + \varepsilon_i$, $i = 1, \dots, n$.

2. The $\varepsilon_i$'s are i.i.d. bounded random variables.

3. The $X_i$'s are i.i.d. random variables on a set $\mathcal{X}$, a compact domain of $\mathbb{R}^d$.

Let $\rho$ be the common (unknown) law of the vector $Z = (X, Y)$.

4. $f_\rho$ is a bounded, unknown function.

5. Two kinds of hypotheses:

(a) $f_\rho(X_i)$ is orthogonal to $\varepsilon_i$ (learning);

(b) $X_i \perp\!\!\!\perp \varepsilon_i$ (bounded regression theory).

Cucker and Smale, Poggio and Smale, ...
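A minimal simulation sketch of this model. All concrete choices are assumptions made only for illustration and do not come from the slides: $d = 1$, $\mathcal{X} = [0, 1]$, uniform $X_i$, a hypothetical $f_\rho$, and uniform noise on $[-0.5, 0.5]$. Because $X_i$ and $\varepsilon_i$ are drawn independently, hypothesis (b) holds. Since the noise is also centered, (b) implies the orthogonality in (a).

```python
import numpy as np

def f_rho(x):
    # Hypothetical bounded regression function on X = [0, 1] (illustration only).
    return np.sin(2 * np.pi * x) + 0.5 * x

def simulate(n, noise_bound=0.5, seed=0):
    """Draw (X_i, Y_i), i = 1..n, with Y_i = f_rho(X_i) + eps_i.

    X_i ~ Uniform[0, 1] and eps_i ~ Uniform[-noise_bound, noise_bound] are
    drawn independently (hypothesis (b)); centered noise then gives (a).
    """
    rng = np.random.default_rng(seed)
    X = rng.uniform(0.0, 1.0, size=n)
    eps = rng.uniform(-noise_bound, noise_bound, size=n)
    return X, f_rho(X) + eps

X, Y = simulate(1000)
# Empirical version of the orthogonality in (a): should be close to 0.
print(np.mean((Y - f_rho(X)) * f_rho(X)))
```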


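Aim of the game

1. Minimize, among 'estimators' $\hat f = \hat f(x, (X, Y)_1^n)$, the risk
$$\mathcal{E}(\hat f) := \mathcal{E}_\rho(\hat f) := \int_{\mathcal{X} \times \mathbb{R}} (\hat f(x) - y)^2 \, d\rho(x, y).$$

2. $f_\rho(x) = \int y \, d\rho(y \mid x)$.

3. $\mathcal{E}(\hat f) = \|\hat f - f_\rho\|_{\rho_X}^2 + \mathrm{err}(f_\rho)$.

4. $\mathcal{E}(\hat f) = \int_{\mathcal{X}} (\hat f(x) - f_\rho(x))^2 \, d\rho_X(x) + \int_{\mathcal{X} \times \mathbb{R}} (f_\rho(x) - y)^2 \, d\rho(x, y)$.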
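The following is a Monte Carlo sanity check of the decomposition in items 3–4. It is a sketch under assumed choices: $\rho_X$ uniform on $[0, 1]$, a hypothetical $f_\rho$, uniform noise independent of $X$, and an arbitrary candidate $\hat f$. The risk of $\hat f$ should match $\|\hat f - f_\rho\|^2_{\rho_X} + \mathrm{err}(f_\rho)$ up to Monte Carlo error, because the cross term $\int (\hat f - f_\rho)(f_\rho - y)\, d\rho$ vanishes.

```python
import numpy as np

rng = np.random.default_rng(1)
m = 200_000                                       # Monte Carlo sample size

def f_rho(x):
    return np.sin(2 * np.pi * x)                  # hypothetical regression function

def f_hat(x):
    return 0.8 * np.sin(2 * np.pi * x) + 0.1      # arbitrary candidate estimator

X = rng.uniform(0.0, 1.0, size=m)                 # rho_X = Uniform[0, 1] (assumed)
Y = f_rho(X) + rng.uniform(-0.5, 0.5, size=m)     # bounded noise, independent of X

risk_f_hat = np.mean((f_hat(X) - Y) ** 2)         # E(f_hat)
excess = np.mean((f_hat(X) - f_rho(X)) ** 2)      # ||f_hat - f_rho||^2_{rho_X}
err_f_rho = np.mean((f_rho(X) - Y) ** 2)          # err(f_rho) = E(f_rho)
print(risk_f_hat, excess + err_f_rho)
```

For these choices, the exact values are $\|\hat f - f_\rho\|^2_{\rho_X} = 0.2^2/2 + 0.1^2 = 0.03$ and $\mathrm{err}(f_\rho) = 1/12$, which is the variance of the noise.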


Measuring the risk

1. Mean square error: $\mathbb{E}_{\rho^{\otimes n}} \|\hat f((X, Y)_1^n) - f_\rho\|_{\rho_X}^2$

2. Probability bounds: $P_{\rho^{\otimes n}}\{\|\hat f((X, Y)_1^n) - f_\rho\|_{\rho_X} > \eta\}$

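Mean square errors and probability bounds

– Assume $f_\rho$ belongs to a set $\Theta$, $\rho \in M(\Theta)$, and consider the accuracy confidence function:
$$AC_n(\Theta, \eta) := \inf_{\hat f} \sup_{\rho \in M(\Theta)} P_{\rho^{\otimes n}}\{\|f_\rho - \hat f\|_{\rho_X} > \eta\}.$$

– Lower bound:
$$AC_n(\Theta, \eta) \ge C \begin{cases} e^{-cn\eta^2}, & \eta \ge \eta_n, \\ 1, & \eta \le \eta_n. \end{cases}$$

DeVore, Kerkyacharian, Picard, Temlyakov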

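• $AC_n(\Theta, \eta) \ge C e^{-cn\eta^2}$ for $\eta \ge \eta_n$, and $AC_n(\Theta, \eta) \ge C$ for $\eta \le \eta_n$,

• $\ln \bar N(\Theta, \eta_n) \sim c_2 n \eta_n^2$,

• $\bar N(\Theta, \delta) := \sup\{N : \exists f_0, f_1, \dots, f_N \in \Theta \text{ with } c_0\delta \le \|f_i - f_j\|_{L_2(\rho_X)} \le c_1\delta,\ \forall i \ne j\}$.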

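– Lower bound in probability:
$$\inf_{\hat f} \sup_{\rho \in M(\Theta)} P_{\rho^{\otimes n}}\{\|f_\rho - \hat f\| > \eta\} \ge C \begin{cases} e^{-cn\eta^2}, & \eta \ge \eta_n, \\ 1, & \eta \le \eta_n. \end{cases}$$

– $\eta_n = n^{-\frac{s}{2s+d}}$ for the Besov space $B^s_q(L_\infty(\mathbb{R}^d))$.

– In statistics, minimax results:
$$\inf_{\hat f} \sup_{\rho \in M'(B^s_q(L_\infty(\mathbb{R}^d)))} \mathbb{E}\|f_\rho - \hat f\|_{dx} \ge c\, n^{-\frac{s}{2s+d}}$$

Ibraguimov, Hasminski, Stone 80-82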

Mean square estimates

$$\hat f = \operatorname{Argmin}\Big\{\frac{1}{n}\sum_{i=1}^n (Y_i - f(X_i))^2,\ f \in H_n\Big\}$$

1. Two important problems:

(a) it is not always easy to implement;

(b) the choice of $H_n$ depends on $\Theta$: search for 'universal' estimates, working for a whole class of spaces $\Theta$.

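Oracle case

$$(P): \quad \frac{1}{n}\sum_{i=1}^n K_k(X_i)K_l(X_i) = \delta_{kl}$$

($(K_k)$ is orthonormal for the empirical measure on the $X_i$'s.)

1. $H_n^{(1)} = \{f = \sum_{j=1}^p \alpha_j K_j\}$ (linear)

2. $H_n^{(2)} = \{f = \sum_{j=1}^p \alpha_j K_j,\ \sum_{j=1}^p |\alpha_j| \le \kappa\}$ ($\ell_1$ constraint)

3. $H_n^{(3)} = \{f = \sum_{j=1}^p \alpha_j K_j,\ \#\{j : \alpha_j \ne 0\} \le \kappa\}$ (sparsity)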

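$$\hat\alpha_k = \frac{1}{n}\sum_{i=1}^n K_k(X_i)Y_i, \qquad \hat\alpha_k^{(1)} = \operatorname{sign}(\hat\alpha_k)\,(|\hat\alpha_k| - \lambda)_+, \qquad \hat\alpha_k^{(2)} = \hat\alpha_k\, I\{|\hat\alpha_k| \ge \lambda\}.$$

1. $H_n^{(1)} = \{f = \sum_{j=1}^p \alpha_j K_j\}$: $\hat f = \sum_{j=1}^p \hat\alpha_j K_j$.

2. $H_n^{(2)} = \{f = \sum_{j=1}^p \alpha_j K_j,\ \sum_{j=1}^p |\alpha_j| \le \kappa\}$: $\hat f^{(1)} = \sum_{j=1}^p \hat\alpha_j^{(1)} K_j$.

3. $H_n^{(3)} = \{f = \sum_{j=1}^p \alpha_j K_j,\ \#\{j : \alpha_j \ne 0\} \le \kappa\}$: $\hat f^{(2)} = \sum_{j=1}^p \hat\alpha_j^{(2)} K_j$.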
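The three oracle estimators can be sketched in NumPy as follows. The example uses the cosine basis evaluated at the midpoints $x_i = (i - 1/2)/n$, where (P) holds exactly (it is a discrete cosine transform). This basis is our own choice: the slides do not prescribe one, and the function names are hypothetical.

```python
import numpy as np

def empirical_coefficients(K, Y):
    """alpha_hat_k = (1/n) sum_i K_k(X_i) Y_i, with K[k, i] = K_k(X_i) (p x n)."""
    return K @ Y / K.shape[1]

def soft_threshold(a, lam):
    """alpha^(1) = sign(a) (|a| - lam)_+  (l1 constraint)."""
    return np.sign(a) * np.maximum(np.abs(a) - lam, 0.0)

def hard_threshold(a, lam):
    """alpha^(2) = a * 1{|a| >= lam}  (sparsity constraint)."""
    return a * (np.abs(a) >= lam)

n, p = 256, 16
x = (np.arange(n) + 0.5) / n                        # design points (midpoints, assumed)
k = np.arange(p)[:, None]
K = np.where(k == 0, 1.0, np.sqrt(2.0) * np.cos(np.pi * k * x))   # p x n cosine design

rng = np.random.default_rng(0)
Y = 1.5 * K[2] - 0.8 * K[7] + rng.uniform(-0.5, 0.5, size=n)      # sparse truth + noise
alpha_hat = empirical_coefficients(K, Y)
f_hat_1 = soft_threshold(alpha_hat, 0.1) @ K        # f_hat^(1) at the design points
f_hat_2 = hard_threshold(alpha_hat, 0.1) @ K        # f_hat^(2) at the design points
```

Under (P), $\hat\alpha$ coincides with the ordinary least-squares coefficients. This is why the oracle estimators need no matrix inversion.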


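Universality properties

$$\hat\alpha_k = \frac{1}{n}\sum_{i=1}^n K_k(X_i)Y_i, \qquad \hat\alpha_k^{(1)} = \operatorname{sign}(\hat\alpha_k)\,(|\hat\alpha_k| - \lambda)_+, \qquad \hat\alpha_k^{(2)} = \hat\alpha_k\, I\{|\hat\alpha_k| \ge \lambda\},$$
$$\hat f^{(1)} = \sum_{j=1}^p \hat\alpha_j^{(1)} K_j, \qquad \hat f^{(2)} = \sum_{j=1}^p \hat\alpha_j^{(2)} K_j.$$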

How to mimic the oracle?

1. Condition (P), $\frac{1}{n}\sum_{i=1}^n K_r(X_i)K_l(X_i) = \delta_{rl}$, is not realistic.

2. How can (P) be replaced by a condition $P(\delta)$ that is '$\delta$-close' to (P)?

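Consider, for instance, the sparsity penalty. We want to minimize:
$$C(\alpha) := \frac{1}{n}\sum_{i=1}^n \Big(Y_i - \sum_{j=1}^p \alpha_j K_j(X_i)\Big)^2 + \lambda\#\{\alpha_j \ne 0\}$$
$$= \frac{1}{n}\|Y - K^t\alpha\|_2^2 + \lambda\#\{\alpha_j \ne 0\}$$
$$= \frac{1}{n}\|Y - \mathrm{proj}_V(Y)\|_2^2 + \frac{1}{n}\|\mathrm{proj}_V(Y) - K^t\alpha\|_2^2 + \lambda\#\{\alpha_j \ne 0\},$$
where $V = \{(\sum_{j=1}^p b_jK_j(X_i))_{i=1}^n,\ b_j \in \mathbb{R}\}$ and $K$ is the $p \times n$ matrix $K_{ji} = K_j(X_i)$. The last equality follows from Pythagoras' theorem: $Y - \mathrm{proj}_V(Y)$ is orthogonal to $V$, which contains $K^t\alpha$.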
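Here is a numerical check of the last equality. It is a sketch in which a random $p \times n$ matrix stands in for $K_j(X_i)$; this stand-in is an assumption for illustration only. The projection onto $V$ is formed as $K^t(KK^t)^{-1}K$.

```python
import numpy as np

rng = np.random.default_rng(2)
n, p, lam = 50, 5, 0.1
K = rng.standard_normal((p, n))          # stand-in for K_ji = K_j(X_i), full rank
Y = rng.standard_normal(n)
alpha = rng.standard_normal(p)
alpha[1] = 0.0                            # so that #{alpha_j != 0} = 4

# Orthogonal projection onto V = {K^t b : b in R^p} (column space of K^t).
P_V = K.T @ np.linalg.solve(K @ K.T, K)
proj_Y = P_V @ Y

def C(a):
    """C(alpha) = (1/n) ||Y - K^t alpha||_2^2 + lam * #{alpha_j != 0}."""
    return np.sum((Y - K.T @ a) ** 2) / n + lam * np.count_nonzero(a)

decomposed = (np.sum((Y - proj_Y) ** 2) / n
              + np.sum((proj_Y - K.T @ alpha) ** 2) / n
              + lam * np.count_nonzero(alpha))
print(C(alpha), decomposed)
```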


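Case $\lambda = 0$

$$C(\alpha) = \frac{1}{n}\|Y - \mathrm{proj}_V(Y)\|_2^2 + \frac{1}{n}\|\mathrm{proj}_V(Y) - K^t\alpha\|_2^2.$$

The minimizer $\hat\alpha$ satisfies
$$K^t\hat\alpha = \mathrm{proj}_V(Y) = K^t(KK^t)^{-1}KY, \qquad \hat\alpha = (KK^t)^{-1}KY$$
(regression textbooks; this assumes $KK^t$ is invertible, i.e. $K$ has rank $p$).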
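The sketch below checks the normal-equation formula against a generic least-squares solver. $K$ is again a random stand-in design, assumed to have full rank $p$. The residual $Y - K^t\hat\alpha$ is orthogonal to every row of $K$, which means $K^t\hat\alpha = \mathrm{proj}_V(Y)$.

```python
import numpy as np

rng = np.random.default_rng(3)
n, p = 100, 8
K = rng.standard_normal((p, n))           # stand-in for K_ji = K_j(X_i)
Y = rng.standard_normal(n)

alpha_hat = np.linalg.solve(K @ K.T, K @ Y)          # (K K^t)^{-1} K Y
alpha_ls = np.linalg.lstsq(K.T, Y, rcond=None)[0]    # argmin ||Y - K^t alpha||_2
residual = Y - K.T @ alpha_hat                        # Y - proj_V(Y)
```

Solving the $p \times p$ system, rather than forming $(KK^t)^{-1}$ explicitly, is the usual numerically safer choice.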


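Case $\lambda \ne 0$

$$C(\alpha) = \frac{1}{n}\|Y - \mathrm{proj}_V(Y)\|_2^2 + \frac{1}{n}\|\mathrm{proj}_V(Y) - K^t\alpha\|_2^2 + \lambda\#\{\alpha_j \ne 0\}$$

The first term does not depend on $\alpha$, so minimizing $C(\alpha)$ is equivalent to minimizing
$$D(\alpha) = \frac{1}{n}\|\mathrm{proj}_V(Y) - K^t\alpha\|_2^2 + \lambda\#\{\alpha_j \ne 0\} = \frac{1}{n}(\alpha - \hat\alpha)^t KK^t(\alpha - \hat\alpha) + \lambda\#\{\alpha_j \ne 0\},$$
where $\hat\alpha = (KK^t)^{-1}KY$, so that $\mathrm{proj}_V(Y) = K^t\hat\alpha$.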

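Condition (P): $\frac{1}{n}\sum_{i=1}^n K_r(X_i)K_l(X_i) = \delta_{rl}$

• Then the $p \times p$ matrix
$$M_{np} = \frac{1}{n}KK^t, \qquad (M_{np})_{kl} = \frac{1}{n}\sum_{i=1}^n K_l(X_i)K_k(X_i),$$
is the identity: $\frac{1}{n}KK^t = \mathrm{Id}$.

• $D(\alpha) = \sum_{j=1}^p (\alpha_j - \hat\alpha_j)^2 + \lambda\#\{\alpha_j \ne 0\}$ has $\hat\alpha_k^{(2)} = \hat\alpha_k\, I\{|\hat\alpha_k| \ge \sqrt{\lambda}\}$ as a solution.

• Simplicity of calculation: $\hat\alpha = (KK^t)^{-1}KY = \frac{1}{n}KY$, i.e. $\hat\alpha_j = \frac{1}{n}\sum_{i=1}^n K_j(X_i)Y_i$.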
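The hard-thresholding solution can be checked by brute force on a small example. This is a sketch, and the coefficient values are made up. Under (P), $D$ separates over coordinates. Keeping $\alpha_j = \hat\alpha_j$ costs $\lambda$, while setting $\alpha_j = 0$ costs $\hat\alpha_j^2$. Coordinate $j$ is therefore kept when $\hat\alpha_j^2 > \lambda$ and dropped when $\hat\alpha_j^2 < \lambda$, so with this parametrization of the penalty the threshold is $\sqrt{\lambda}$.

```python
import itertools
import numpy as np

def D(alpha, alpha_hat, lam):
    """D(alpha) under (P): sum_j (alpha_j - alpha_hat_j)^2 + lam * #{alpha_j != 0}."""
    return np.sum((alpha - alpha_hat) ** 2) + lam * np.count_nonzero(alpha)

def brute_force_l0(alpha_hat, lam):
    # For a fixed support S the best alpha equals alpha_hat on S and 0 elsewhere,
    # so it suffices to search over all 2^p supports.
    best, best_val = None, np.inf
    for mask in itertools.product([False, True], repeat=len(alpha_hat)):
        alpha = np.where(mask, alpha_hat, 0.0)
        val = D(alpha, alpha_hat, lam)
        if val < best_val:
            best, best_val = alpha, val
    return best

alpha_hat = np.array([0.9, -0.05, 0.4, -1.2, 0.25, 0.02])   # made-up coefficients
lam = 0.09                                                   # sqrt(lam) = 0.3
hard = alpha_hat * (np.abs(alpha_hat) >= np.sqrt(lam))
print(brute_force_l0(alpha_hat, lam), hard)
```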

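$\delta$-Near Identity property

$$M_{np} = \frac{1}{n}KK^t$$

For all $x \in \mathbb{R}^p$:
$$(1 - \delta)\sum_{j=1}^p x_j^2 \le x^t M_{np} x \le (1 + \delta)\sum_{j=1}^p x_j^2,$$
$$(1 - \delta)\sup_{1 \le j \le p}|x_j| \le \sup_{1 \le j \le p}|(M_{np}x)_j| \le (1 + \delta)\sup_{1 \le j \le p}|x_j|.$$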
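For the quadratic-form inequality, the smallest admissible $\delta$ can be read off the extreme eigenvalues of the symmetric matrix $M_{np}$. The sketch below computes it for the cosine functions $1, \sqrt2\cos(\pi k x)$, $k = 1, \dots, p - 1$. It evaluates them on the regular grid $x_i = i/n$, where (P) holds only approximately, and on the midpoints $(i - 1/2)/n$, where it holds exactly. The basis and the function names are our own illustrative choices, and the sup-norm inequality is not checked here.

```python
import numpy as np

def cosine_design(x, p):
    """p x n matrix with rows 1, sqrt(2) cos(pi k x), k = 1..p-1 (orthonormal in L2[0,1])."""
    k = np.arange(p)[:, None]
    return np.where(k == 0, 1.0, np.sqrt(2.0) * np.cos(np.pi * k * x))

def near_identity_delta(K):
    """Smallest delta with (1-delta)|x|^2 <= x^t M x <= (1+delta)|x|^2, M = K K^t / n."""
    M = K @ K.T / K.shape[1]
    eig = np.linalg.eigvalsh(M)            # M is symmetric; eigenvalues in ascending order
    return max(1.0 - eig[0], eig[-1] - 1.0)

n, p = 1000, 10
delta_grid = near_identity_delta(cosine_design(np.arange(1, n + 1) / n, p))
delta_mid = near_identity_delta(cosine_design((np.arange(n) + 0.5) / n, p))
print(delta_grid, delta_mid)
```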


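Estimation procedure

$$t_n = \sqrt{\frac{\log n}{n}}, \qquad \lambda_n = T t_n, \qquad p = \Big[\Big(\frac{n}{\log n}\Big)^{1/2}\Big],$$
$$z = (z_1, \dots, z_p)^t = (KK^t)^{-1}KY, \qquad \tilde z_l = z_l\, I\{|z_l| \ge \lambda_n\},$$
$$\hat f = \sum_{l=1}^p \tilde z_l K_l(\cdot)$$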
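A sketch of this procedure in NumPy follows. Several assumptions are ours rather than the slides': $d = 1$, the cosine functions $1, \sqrt2\cos(\pi l x)$ as the $K_l$, natural logarithms, $[\cdot]$ read as the integer part, and $T = 1$ by default. $KK^t$ is assumed invertible, and $z$ is computed with a linear solve rather than an explicit inverse. The sparse test function is hypothetical.

```python
import numpy as np

def cosine_design(x, p):
    """Rows K_l(x) = 1, sqrt(2) cos(pi l x), l = 1..p-1 (assumed basis on [0, 1])."""
    k = np.arange(p)[:, None]
    return np.where(k == 0, 1.0, np.sqrt(2.0) * np.cos(np.pi * k * np.asarray(x)))

def threshold_estimator(X, Y, T=1.0):
    n = len(Y)
    t_n = np.sqrt(np.log(n) / n)
    lam_n = T * t_n
    p = int(np.sqrt(n / np.log(n)))               # p = [(n / log n)^(1/2)]
    K = cosine_design(X, p)                       # p x n, K[l, i] = K_l(X_i)
    z = np.linalg.solve(K @ K.T, K @ Y)           # z = (K K^t)^{-1} K Y
    z_tilde = z * (np.abs(z) >= lam_n)            # hard thresholding at lambda_n

    def f_hat(x):
        return z_tilde @ cosine_design(np.atleast_1d(x), p)

    return f_hat, z_tilde

rng = np.random.default_rng(0)
n = 2000
X = rng.uniform(0.0, 1.0, size=n)
truth = np.zeros(16)
truth[[0, 2, 5]] = [0.5, 1.0, -0.7]               # hypothetical sparse f_rho
Y = truth @ cosine_design(X, 16) + rng.uniform(-0.5, 0.5, size=n)
f_hat, z_tilde = threshold_estimator(X, Y)        # here p = [16.22] = 16
grid = np.linspace(0.0, 1.0, 501)
mse = np.mean((f_hat(grid) - truth @ cosine_design(grid, 16)) ** 2)
```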

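Results

1. If $f_\rho$ is sparse, i.e. $\exists\, 0 < q < 2$ such that $\forall p$, $\exists (\alpha_1, \dots, \alpha_p)$ with

(a) $\|f_\rho - \sum_{j=1}^p \alpha_j K_j\|_\infty \le Cp^{-1}$,

(b) $\forall \lambda > 0$, $\#\{l : |\alpha_l| \ge \lambda\} \le C\lambda^{-q}$,

then, with $\eta_n = \big[\frac{\log n}{n}\big]^{\frac12 - \frac q4}$,
$$\rho\{\|f_\rho - \hat f\|_{\hat\rho} > (1 - \delta)^{-1}\eta\} \le T \begin{cases} e^{-cnp^{-1}\eta^2} \wedge n^{-\gamma}, & \eta \ge D\eta_n, \\ 1, & \eta \le D\eta_n. \end{cases}$$

Quasi-optimality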

1. Our conditions depend on the family of functions $\{K_j, j \ge 1\}$.

2. If, for instance, the $K_j$'s are tensor products of wavelet bases, then for
$$s := \frac{d}{q} - \frac{d}{2},$$
$f_\rho \in B^s_r(L_\infty(\mathbb{R}^d))$ implies the conditions above, and $\eta_n = n^{-\frac{s}{2s+d}}$.
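A short check of the exponents (our own computation, not taken from the slides) shows that, with $s = d/q - d/2$, the Besov rate and the rate $\eta_n$ of the Results slide coincide up to the logarithmic factor:

$$s = \frac{d}{q} - \frac{d}{2} = \frac{d(2-q)}{2q}, \qquad 2s + d = \frac{d(2-q)}{q} + d = \frac{2d}{q},$$
$$\frac{s}{2s+d} = \frac{d(2-q)}{2q}\cdot\frac{q}{2d} = \frac{2-q}{4} = \frac12 - \frac q4, \qquad\text{so}\qquad n^{-\frac{s}{2s+d}} = n^{-(\frac12 - \frac q4)}.$$

The requirement $s > 0$ is equivalent to $q < 2$, which matches the sparsity hypothesis $0 < q < 2$.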


Near Identity property: how to make it work?

$d = 1$

1. Take $\{\phi_k, k \ge 1\}$, a smooth orthonormal basis of $L_2([0, 1], dx)$.

2. Find $H$ with $H(X_i) = \frac{i}{n}$.

3. Change the time scale: $K_k = \phi_k(H)$.

4. $P_n(k, l) = \frac{1}{n}\sum_{i=1}^n K_k(X_i)K_l(X_i) = \frac{1}{n}\sum_{i=1}^n \phi_k(\frac{i}{n})\phi_l(\frac{i}{n}) \sim \delta_{kl}$.

1.5

1.0

0.5

−0.5 0.0

−1.5

x

0

10

20

30

40

Index

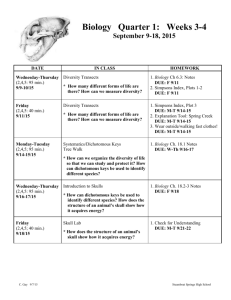

Fig. 1 – Ordering by arrival times

22

Fig. 2 – Sorting (vertical axis: s; horizontal axis: Index)

Choosing H

• Order the $X_i$'s: $(X_1, \dots, X_n) \to (X_{(1)} \le \dots \le X_{(n)})$.

• Consider $\hat G_n(x) = \frac{1}{n}\sum_{i=1}^n I\{X_i \le x\}$, so that $\hat G_n(X_{(i)}) = \frac{i}{n}$.

• $H = \hat G_n$.

• $\hat G_n$ is stable (i.e. close to $G(x) = \rho(X \le x)$).

• $\phi_l(\hat G_n) \sim \phi_l(G)$.
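The following sketches the one-dimensional time change. The assumptions are ours: the cosine functions $1, \sqrt2\cos(\pi k x)$ play the role of the $\phi_k$, the design is Beta(2, 5), and there are no ties among the $X_i$'s. Because $\hat G_n(X_i)$ is a permutation of the grid $\{1/n, \dots, n/n\}$, the matrix $P_n(k, l)$ is exactly the one computed on the regular grid, whatever the law of $X$.

```python
import numpy as np

def empirical_cdf_at_data(X):
    """G_n(X_i) = rank(X_i) / n, i.e. H(X_(i)) = i / n (assumes no ties)."""
    n = len(X)
    ranks = np.empty(n)
    ranks[np.argsort(X)] = np.arange(1, n + 1)
    return ranks / n

def time_changed_gram(X, p):
    """P_n(k, l) = (1/n) sum_i phi_k(H(X_i)) phi_l(H(X_i)) with H = G_n."""
    u = empirical_cdf_at_data(X)
    k = np.arange(p)[:, None]
    K = np.where(k == 0, 1.0, np.sqrt(2.0) * np.cos(np.pi * k * u))   # K_k = phi_k(H)
    return K @ K.T / len(X)

rng = np.random.default_rng(0)
X = rng.beta(2.0, 5.0, size=1000)                # strongly non-uniform design (assumed)
P_n = time_changed_gram(X, 10)
print(np.max(np.abs(P_n - np.eye(10))))
```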


Near Identity property

$d \ge 2$

Finding $H$ such that $H(X_i) = (\frac{i_1}{n}, \dots, \frac{i_d}{n})$, for instance in a 'stable way', is a difficult problem.

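Near Identity property

$K_1, \dots, K_p$ satisfy the NIP if there exist a measure $\mu$ and cells $C_1, \dots, C_N$ such that:
$$\Big|\int K_l(x)K_r(x)\,d\mu(x) - \delta_{lr}\Big| \le \delta_1(l, r),$$
$$\Big|\frac{1}{N}\sum_{i=1}^N K_l(\xi_i)K_r(\xi_i) - \int K_l(x)K_r(x)\,d\mu(x)\Big| \le \delta_2(l, r), \quad \forall \xi_1 \in C_1, \dots, \xi_N \in C_N,$$
$$\sum_{r=1}^p [\delta_1(l, r) + \delta_2(l, r)] \le \delta \quad \text{for all } l.$$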

Examples: tensor products of bases, uniform cells

1. $d = 1$, $\mu$ the Lebesgue measure on $[0, 1]$, $K_1, \dots, K_p$ a smooth orthonormal basis (Fourier, wavelet, ...): $\delta_1 = 0$, $\delta_2(l, r) = \frac{p}{N}$.

• $\sum_{r=1}^p \delta_2(l, r) \le \frac{p^2}{N} \le \frac{c}{\log N} := \delta$ for $p = \big[\big(\frac{N}{\log N}\big)^{1/2}\big]$ ($p \le \sqrt{\delta N}$ is enough).

2. $d > 1$, $\mu$ the Lebesgue measure on $[0, 1]^d$, $K_1, \dots, K_p$ tensor products of the previous basis, $N = m^d$, $p = \Gamma^d$, with $l = (l_1, \dots, l_d)$, $r = (r_1, \dots, r_d)$ and $H(l, r) = \sum_{i \le d} I\{l_i \ne r_i\}$:
$\delta_1 = 0$, $\delta_2(l, r) = \big[\frac{p}{N}\big]^{\sup(1, H(l, r))/d}$.

• $\sum_{r=1}^p \delta_2(l, r) \le \big[\frac{p^2}{N}\big]^{1/d} = \frac{c}{[\log N]^{1/d}} := \delta$ for $p \sim \big[\frac{N}{\log N}\big]^{1/2}$ ($p \le \sqrt{\delta^d N}$ is enough).
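Here is a numerical look at the $d = 1$ case. This is a sketch, and the cosine basis, the random choice of the $\xi_i$ and all names are our assumptions. Since $\delta_2$ is a supremum over all $\xi_i \in C_i$, sampling a few configurations only gives lower estimates of it. The comparison therefore uses the crude bound $\delta_2(l, r) \le 2\pi(l + r)/N$, which follows from the Lipschitz constant of $K_lK_r$ on cells of length $1/N$ (indices running from 0 here).

```python
import numpy as np

def cosine_design(x, p):
    """Rows 1, sqrt(2) cos(pi k x), k = 1..p-1 (assumed basis, orthonormal in L2[0,1])."""
    k = np.arange(p)[:, None]
    return np.where(k == 0, 1.0, np.sqrt(2.0) * np.cos(np.pi * k * x))

def row_sums_delta2(N, p, rng):
    """max_l sum_r |(1/N) sum_i K_l(xi_i) K_r(xi_i) - delta_lr| for one random xi_i in C_i."""
    xi = (np.arange(N) + rng.uniform(0.0, 1.0, size=N)) / N   # xi_i in C_i = [i/N, (i+1)/N)
    K = cosine_design(xi, p)
    # For this basis, int K_l K_r dx = delta_lr exactly, so delta_1 = 0.
    return np.abs(K @ K.T / N - np.eye(p)).sum(axis=1).max()

rng = np.random.default_rng(4)
N = 4096
p = int(np.sqrt(N / np.log(N)))                   # p = [(N / log N)^(1/2)]
observed = max(row_sums_delta2(N, p, rng) for _ in range(20))
l = np.arange(p)
lipschitz_bound = (2 * np.pi * (l[:, None] + l[None, :]) / N).sum(axis=1).max()
print(observed, lipschitz_bound, p ** 2 / N, 1 / np.log(N))
```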

How to relate these assumptions to the near identity condition?

What we have here:
$$\frac{1}{N}\sum_{i=1}^N K_l(\xi_i)K_r(\xi_i), \quad \xi_1 \in C_1, \dots, \xi_N \in C_N, \quad \text{'not too far from' } \delta_{lr}.$$

What we want:
$$\frac{1}{n}\sum_{i=1}^n K_l(X_i)K_r(X_i) \quad \text{'not too far from' } \delta_{lr}.$$

Fig. 3 – Typical situation (four panels with axes x and y, each ranging from −2 to 2)

Procedure

1. We choose cells $C_l$ such that each cell contains at least one of the observation points $X_i$.

2. We keep only one data point in each cell, reducing the set of observations:
$$(X_1, Y_1), \dots, (X_n, Y_n) \to (X_1, Y_1), \dots, (X_N, Y_N).$$

3. $n \to N$, $\delta \sim \frac{1}{\log N}$: near identity property.

4. If $\rho_X$ is absolutely continuous with respect to $\mu$, with density bounded from below and above, then $N \sim \big[\frac{n}{\log n}\big]$ with overwhelming probability.
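The following sketches steps 1–2 for $d = 1$ with uniform cells of length $1/N$ on $[0, 1]$. Two choices are ours and not in the slides. First, the point kept in each cell is chosen at random. Second, the constant in $N \sim n/\log n$ is set so that $N = [n / (2\log n)]$, which makes an empty cell very unlikely for a uniform design. The discarded indices are returned because the remaining data are used later.

```python
import numpy as np

def one_point_per_cell(X, Y, N, rng):
    """Keep one observation in each cell C_l = [l/N, (l+1)/N), l = 0..N-1, of [0, 1].

    Raises if a cell is empty (step 1 requires every cell to contain a point).
    Returns the reduced sample and the indices of the discarded observations.
    """
    cells = np.minimum((X * N).astype(int), N - 1)   # cell index of each X_i
    kept = np.empty(N, dtype=int)
    for l in range(N):
        members = np.flatnonzero(cells == l)
        if members.size == 0:
            raise ValueError(f"cell {l} contains no observation")
        kept[l] = rng.choice(members)                 # keep one point at random
    discarded = np.setdiff1d(np.arange(len(X)), kept)
    return X[kept], Y[kept], discarded

rng = np.random.default_rng(0)
n = 5000
X = rng.uniform(0.0, 1.0, size=n)
Y = np.sin(2 * np.pi * X) + rng.uniform(-0.5, 0.5, size=n)   # hypothetical data
N = int(n / (2 * np.log(n)))
X_red, Y_red, discarded = one_point_per_cell(X, Y, N, rng)
```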


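Estimation procedure

$$t_N = \sqrt{\frac{\log N}{N}}, \qquad \lambda_N = T t_N, \qquad p = \Big[\Big(\frac{N}{\log N}\Big)^{1/2}\Big],$$
$$z = (z_1, \dots, z_p)^t = (KK^t)^{-1}KY, \qquad \tilde z_l = z_l\, I\{|z_l| \ge \lambda_N\},$$
$$\hat f = \sum_{l=1}^p \tilde z_l K_l(\cdot)$$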

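1. If $f_\rho$ is sparse, i.e. $\exists\, 0 < q < 2$ such that $\forall p$, $\exists (\alpha_1, \dots, \alpha_p)$ with

(a) $\|f_\rho - \sum_{j=1}^p \alpha_j K_j\|_\infty \le Cp^{-1}$,

(b) $\forall \lambda > 0$, $\#\{l : |\alpha_l| \ge \lambda\} \le C\lambda^{-q}$,

then, with $\eta_N = \big[\frac{\log N}{N}\big]^{\frac12 - \frac q4}$,
$$\rho\{\|f_\rho - \hat f\| > (1 - \delta)^{-1}\eta\} \le T \begin{cases} e^{-cNp^{-1}\eta^2} \wedge N^{-\gamma}, & \eta \ge D\eta_N, \\ 1, & \eta \le D\eta_N, \end{cases}$$
where $\|f_\rho - \hat f\| = \|f_\rho - \hat f\|_{\hat\rho}$, or (if $\rho_X \ll \mu$) $\|f_\rho - \hat f\| = \|f_\rho - \hat f\|_{\rho_X}$.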

What to do with the remaining data?

Empirical Bayes (see Johnstone and Silverman)

• In practice, hard thresholding is not the best choice.

• Better choices are obtained with rules derived from Bayesian procedures using a prior of the form
$$\omega\delta_{\{0\}} + (1 - \omega)g,$$
where $g$ is a Gaussian (with large variance) or a Laplace distribution, with the associated procedure
$$z_l^* = z_l\, I\{|z_l| \ge t(\omega)\}.$$

• The parameter $\omega$ of the prior distribution can again be 'learned' from the observed data if the sample is divided into two pieces: one is used to learn this parameter, the other to run the Bayesian procedure itself with the learned parameter $\hat\omega$:
$$z_l^* = z_l\, I\{|z_l| \ge t(\hat\omega)\}.$$

• In our context, the remaining data naturally serve to choose the hyperparameter of the prior distribution.
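The sketch below illustrates the sample-splitting idea under simplifying assumptions of our own:

- the coefficients carry Gaussian noise with known variance $\sigma^2$;
- $g = N(0, \tau^2)$ with known $\tau^2$;
- $\omega$ is learned by marginal maximum likelihood on a grid, from coefficients computed on one part of the data;
- $t(\omega)$ is taken to be the level above which the posterior probability that a coefficient is non-zero reaches $1/2$.

Keeping $z$ exactly when $(1-\omega)\varphi_{\sigma^2+\tau^2}(z) \ge \omega\varphi_{\sigma^2}(z)$ gives the closed form used in `t_of_omega`. This is a simplified stand-in for illustration only, not the exact rule of Johnstone and Silverman.

```python
import numpy as np

def log_normal_pdf(z, var):
    return -0.5 * np.log(2 * np.pi * var) - z ** 2 / (2 * var)

def learn_omega(z, sigma2, tau2):
    """Grid-search marginal MLE of omega in omega*delta_0 + (1-omega)*N(0, tau2)."""
    grid = np.linspace(0.01, 0.99, 99)
    l0 = log_normal_pdf(z, sigma2)                 # log-density of z_j if theta_j = 0
    l1 = log_normal_pdf(z, sigma2 + tau2)          # log-density of z_j if theta_j ~ N(0, tau2)
    loglik = [np.sum(np.logaddexp(np.log(w) + l0, np.log1p(-w) + l1)) for w in grid]
    return grid[int(np.argmax(loglik))]

def t_of_omega(omega, sigma2, tau2):
    """|z| above which P(theta_j != 0 | z_j) >= 1/2 (an assumed hard-threshold rule)."""
    v0, v1 = sigma2, sigma2 + tau2
    num = np.log(v1 / v0) + 2 * np.log(omega / (1 - omega))
    return np.sqrt(max(num, 0.0) / (1 / v0 - 1 / v1))

rng = np.random.default_rng(5)
p, sigma2, tau2 = 2000, 0.01, 1.0
theta = np.where(rng.uniform(size=p) < 0.9, 0.0, rng.normal(0.0, np.sqrt(tau2), size=p))
z_learn = theta + rng.normal(0.0, np.sqrt(sigma2), size=p)   # from the remaining data
z_keep = theta + rng.normal(0.0, np.sqrt(sigma2), size=p)    # from the reduced sample
omega_hat = learn_omega(z_learn, sigma2, tau2)
z_star = z_keep * (np.abs(z_keep) >= t_of_omega(omega_hat, sigma2, tau2))
```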


Conditions under which the results remain valid

Learning → Regression: $Y_i = f_\rho(X_i) + \varepsilon_i$, $X_i \perp\!\!\!\perp \varepsilon_i$


Examples: wavelet frames on the sphere, Voronoi cells

Uniform cells can be replaced by Voronoi cells constructed on an $N$-net on the sphere (or on the ball), with an adapted basis (spherical harmonics, in the case of the sphere).