Topic 9. Inference on Proportions (Ch. 14) 1) Introduction.

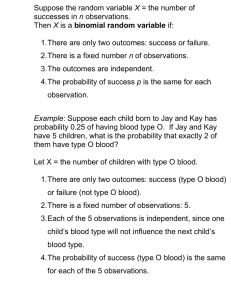

• In Chapter 7, we studied the binomial distribution. Suppose one has an experiment which 1) is repeated $n$ times, with each trial having one of two possible outcomes, success (S) or failure (F), 2) has independent trials, and 3) has constant probability of success on each trial, i.e. $P(S) = p$. Then the number of successes, $x$, has the binomial distribution, specifically

$$P(x) = \binom{n}{x} p^{x} q^{n-x} \qquad (1)$$

where $q = 1 - p$.

• Often, we would like to make statistical inferences concerning $p$ from sample data, i.e. from $x$ and $n$.

2) Normal Approximation to the Binomial.

• The binomial calculations can be tedious. In Chapter 7, we learned to approximate binomial probabilities for rare events (with large $n$ and small $p$) using the Poisson distribution. We can also use a normal approximation.

• Consider the previous problem on the number of smokers in a random sample of $n = 30$ people. If $p = 0.29$, one could find the probability of 6 or fewer smokers by calculating $P(0)$ through $P(6)$ and adding. One would find $P(X \le 6) = 0.190$.

• Let's consider using a normal approximation for this. If 1) $np \ge 5$ and 2) $n(1-p) \ge 5$, then the binomial distribution is fairly symmetric. (Do we need this condition for the Poisson approximation?) Recall that the mean and variance of the binomial are $\mu = np$ and $\sigma^2 = npq$. The logic is to approximate a binomial probability, e.g. $P(X \le x)$, by the corresponding probability from a normal distribution with the same mean and variance as the binomial. We indicated this possibility previously in Chapter 7, e.g. with Figure 7.7.

• For example, on the problem relating to the number of smokers in a sample of $n = 30$ people, one has

$$\mu = np = 8.7, \qquad \sigma^2 = npq = 6.177, \qquad \sigma = \sqrt{6.177} = 2.49.$$

Hence, using the normal approximation (which is reasonable because both $np = 8.7 > 5$ and $n(1-p) = 21.3 > 5$),

$$P(X \le 6) = P\left(z \le \frac{6 - 8.7}{2.49}\right) = P(z < -1.09) = 0.138.$$

• The above approximation isn't very accurate, because the true value is 0.190.
The principal reason is that we are approximating a discrete distribution using a continuous one. Particularly in cases with a relatively small $n$, one should use the continuity correction. Note that the binomial probability $P(X \le 6)$ corresponds better to the normal probability $P(X \le 6.5)$. Why? In this case, the normal approximation is

$$P(X \le 6.5) = P\left(z \le \frac{6.5 - 8.7}{2.49}\right) = P(z < -0.89) = 0.187,$$

which is a close approximation.

3) Sampling Distribution of a Proportion.

• Often, the parameter $p$ is unknown in a binomial experiment, and one problem of interest is statistical inference concerning $p$. Suppose one has $n$ trials with $x$ successes. One can show that the best estimate of $p$ is the observed proportion $\hat{p}$, i.e. $\hat{p} = x/n$. For example, if one sampled $n = 50$ M&M's and found $x = 6$ greens, then our best estimate of $p$, the proportion of greens in the population, is $\hat{p} = 0.12$.

• Clearly, $\hat{p}$ is a statistic, and hence has a sampling distribution (as did $\bar{x}$ before). This sampling distribution has the following properties:

1) The mean (center) is $\mu_{\hat{p}} = p$.
2) The standard error (spread) is $\sigma_{\hat{p}} = \sqrt{p(1-p)/n}$.
3) The shape of the distribution is approximately normal for large $n$.

In summary,

$$\hat{p} \sim N\left(p,\ \sqrt{p(1-p)/n}\right) \qquad (2)$$

where the second argument is the standard error. Property 3 is not surprising in light of our previous normal approximation claim. Moreover, suppose one codes the data so that S = 1 and F = 0. Then, averaging the 0's and 1's, one can show that $\hat{p} = \bar{x}$, and hence use the central limit theorem to show that $\hat{p}$ has an approximately normal distribution for large $n$.

• Consider the following example. Suppose the 5-year survival rate of lung cancer patients is thought to be $p = .1$. Suppose one has $n = 50$ patients in a year, and that 10 survive, for $\hat{p} = .20$. What is the probability of a result at least this large occurring, given $p = .1$? Note that

$$P(\hat{p} \ge .2) = P\left(z \ge \frac{.2 - .1}{\sqrt{(.1)(.9)/50}}\right) = P(z \ge 2.36) = 0.009.$$

Hence, if $p = .1$, there is less than a 1% chance of observing $\hat{p} \ge .2$.
What might you conclude based on your observed $\hat{p} = .2$? This is the essence of a hypothesis test.

4) Confidence Intervals for p.

• Recall from (2) that $\hat{p} \sim N\left(p,\ \sqrt{p(1-p)/n}\right)$. It follows that

$$z = \frac{\hat{p} - p}{\sqrt{p(1-p)/n}} \qquad (3)$$

is a standard normal random variable. Recall that

$$P(-z_{\alpha/2} < z < +z_{\alpha/2}) = 1 - \alpha. \qquad (4)$$

Substituting (3) into (4), and using a little algebra, one can show

$$P\left(\hat{p} - z_{\alpha/2}\sqrt{p(1-p)/n} < p < \hat{p} + z_{\alpha/2}\sqrt{p(1-p)/n}\right) = 1 - \alpha.$$

Unfortunately, we don't know $p$ for the upper and lower limits, so we replace it by $\hat{p}$. It has been shown that, for large $n$,

$$P\left(\hat{p} - z_{\alpha/2}\sqrt{\hat{p}(1-\hat{p})/n} < p < \hat{p} + z_{\alpha/2}\sqrt{\hat{p}(1-\hat{p})/n}\right) \approx 1 - \alpha.$$

This is a $100(1-\alpha)\%$ confidence interval for $p$. One could also find a one-sided confidence interval as before.

• For example, a national survey of $n = 1000$ males finds that $x = 80$ feel confident that they would win a dispute with an IRS agent. Hence $\hat{p} = 80/1000 = .08$, which is the best estimate of $p$. A 95% confidence interval for $p$ is

$$\hat{p} \pm z_{\alpha/2}\sqrt{\hat{p}(1-\hat{p})/n} = .08 \pm 1.96\sqrt{.08(.92)/1000} = .08 \pm .017 = (.063,\ .097).$$

5) Testing Hypotheses about p.

• Hypothesis tests follow immediately because $\hat{p}$ has an approximately normal distribution. Recall from (3) that

$$z = \frac{\hat{p} - p}{\sqrt{p(1-p)/n}}.$$

Therefore one could test a hypothesis such as $H_0: p = p_0$ using

$$z = \frac{\hat{p} - p_0}{\sqrt{p_0(1-p_0)/n}}.$$

• For example, suppose the 5-year survival rate for lung cancer patients over 40 is $p = 0.082$. We wish to test whether this same rate holds for men under 40. A sample of $n = 52$ such men yields $\hat{p} = 0.115$. Our hypothesis testing framework is

1) $H_0: p = 0.082$
2) $H_A: p \ne 0.082$
3) TS: $z = \dfrac{\hat{p} - 0.082}{\sqrt{0.082(0.918)/52}} = \dfrac{\hat{p} - 0.082}{0.038}$
4) RR: reject if $|z| > 1.96$, for $\alpha = .05$
5) With $\hat{p} = 0.115$, one has $z = \dfrac{0.115 - 0.082}{0.038} = 0.87$.

Hence, one would not reject $H_0$; indeed, the p-value is 0.384.

6) Sample Size Estimation.

• Consider a one-sided test, such as $H_0: p \le p_0$ vs. $H_A: p > p_0$. Suppose we fix $\alpha$, and want to set $\beta$ for some specific value $p = p_1$ under $H_A$.
In other words, we want to reject $H_0$ with power $1 - \beta$ when $p = p_1$. Using some algebra, the formula for $n$ is

$$n = \left[\frac{z_{\alpha}\sqrt{p_0(1-p_0)} + z_{\beta}\sqrt{p_1(1-p_1)}}{p_1 - p_0}\right]^2.$$

The corresponding formula for a two-sided test is

$$n = \left[\frac{z_{\alpha/2}\sqrt{p_0(1-p_0)} + z_{\beta}\sqrt{p_1(1-p_1)}}{p_1 - p_0}\right]^2.$$

• For example, let's reconsider our previous test in slightly revised form. Suppose we wish to test $H_0: p \le .082$ at $\alpha = .05$. Our goal is to reject $H_0$ with power 0.95 (so $\beta = .05$ and $z_\beta = 1.645$) if $p = .2$. Then

$$n = \left[\frac{1.645\sqrt{0.082(0.918)} + 1.645\sqrt{.2(.8)}}{.2 - 0.082}\right]^2 = (9.4)^2 = 88.36.$$

Hence $n = 90$ subjects would satisfy the criteria.

7) Comparing Two Populations.

• This is a very common and hence very important problem. Suppose one has two populations and takes a sample from each. The data are

| Pop | Sample Size | Successes | Proportion |
|-----|-------------|-----------|------------|
| 1   | $n_1$       | $x_1$     | $\hat{p}_1$ |
| 2   | $n_2$       | $x_2$     | $\hat{p}_2$ |

We desire to test

$$H_0: p_1 = p_2. \qquad (5)$$

Note that if $p_1 = p_2$ as specified by $H_0$, the best common estimate is

$$\hat{p} = \frac{x_1 + x_2}{n_1 + n_2},$$

a weighted average of $\hat{p}_1$ and $\hat{p}_2$. If $n_1$ and $n_2$ are large enough, i.e. $n_1\hat{p}$, $n_2\hat{p}$, $n_1(1-\hat{p})$ and $n_2(1-\hat{p})$ are all greater than 5, then $\hat{p}_1 - \hat{p}_2$ is approximately normally distributed, and the test statistic for (5) is

$$z = \frac{(\hat{p}_1 - \hat{p}_2) - (p_1 - p_2)}{\sqrt{\hat{p}(1-\hat{p})(1/n_1 + 1/n_2)}}, \qquad (6)$$

where $p_1 - p_2 = 0$ under $H_0$.

• For example, suppose one investigates seat belt effectiveness from motor vehicle accident data. A sample of $n_1 = 123$ children who were wearing seat belts had $x_1 = 3$ fatalities, whereas a sample of $n_2 = 290$ children who were not wearing a belt had $x_2 = 13$ fatalities. Clearly, $\hat{p}_1 = 0.024$ and $\hat{p}_2 = 0.045$, for a near doubling of the fatality rate. Is this sufficient evidence to conclude that seat belts reduce the fatality rate? Note

1) $H_0: p_1 = p_2$
2) $H_A: p_1 \ne p_2$
3) TS: as given in (6)
4) RR: with $\alpha = .05$, reject if $|z| > 1.96$
5) Data show $\hat{p} = \dfrac{3 + 13}{123 + 290} = 0.039$. Hence $z = \dfrac{0.024 - 0.045}{\sqrt{0.039(0.961)(1/123 + 1/290)}} = -1.01$.

Therefore, we do not have sufficient evidence to reject $H_0$; indeed, the p-value is 0.312.
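The five steps of the seat belt test can be checked numerically. The following is a minimal sketch in Python using only the standard library; the function name `two_proportion_z_test` is a hypothetical helper introduced here for illustration and is not part of the original notes. Because it works with unrounded proportions, it gives a z of about -0.98 rather than the hand-computed -1.01, and a correspondingly slightly larger p-value; the conclusion is unchanged.

```python
from math import sqrt
from statistics import NormalDist


def two_proportion_z_test(x1, n1, x2, n2):
    """Pooled two-sided z test of H0: p1 = p2 (hypothetical helper).

    Returns the z statistic from equation (6) with p1 - p2 = 0,
    and the two-sided p-value from the standard normal distribution.
    """
    p1_hat = x1 / n1
    p2_hat = x2 / n2
    # Best common estimate of p under H0
    pooled = (x1 + x2) / (n1 + n2)
    # Standard error of p1_hat - p2_hat under H0
    se = sqrt(pooled * (1 - pooled) * (1 / n1 + 1 / n2))
    z = (p1_hat - p2_hat) / se
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value


# Seat belt data: 3 of 123 belted and 13 of 290 unbelted children died
z, p_value = two_proportion_z_test(3, 123, 13, 290)
print(f"z = {z:.2f}, p-value = {p_value:.3f}")
print("reject H0" if abs(z) > 1.96 else "do not reject H0")
```

Rounding the proportions to three decimals before plugging them in, as in the hand calculation, accounts for the small difference in z.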
• One way to illustrate these results graphically would be to give separate confidence intervals for $p_1$ and $p_2$. They would be overlapping, thus illustrating visually why one does not have sufficient evidence to conclude $p_1 \ne p_2$.

• A confidence interval on the difference $(p_1 - p_2)$ is

$$(\hat{p}_1 - \hat{p}_2) \pm z_{\alpha/2}\sqrt{\frac{\hat{p}_1(1-\hat{p}_1)}{n_1} + \frac{\hat{p}_2(1-\hat{p}_2)}{n_2}}.$$

For example, using the previous data on seat belt effectiveness, a 95% confidence interval for $(p_1 - p_2)$ is

$$(0.024 - 0.045) \pm 1.96\sqrt{\frac{0.024(0.976)}{123} + \frac{0.045(0.955)}{290}} = -0.021 \pm 0.036 = (-0.057,\ 0.015).$$

In other words, we are 95% confident that the use of seat belts yields anywhere from a 5.7 percentage point reduction to a 1.5 percentage point increase in the fatality rate. Clearly, more data are needed to conclude statistically whether seat belts increase or decrease fatalities among children.
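The interval for the difference can be reproduced with a short Python sketch using only the standard library. The function name `diff_proportion_ci` and its `conf` parameter are assumptions made for this illustration. It uses the unpooled standard error from the formula above and unrounded proportions, so its upper limit comes out near 0.016 rather than the hand-computed 0.015.

```python
from math import sqrt
from statistics import NormalDist


def diff_proportion_ci(x1, n1, x2, n2, conf=0.95):
    """Large-sample confidence interval for p1 - p2 (hypothetical helper)."""
    p1_hat = x1 / n1
    p2_hat = x2 / n2
    # Critical value z_{alpha/2}, e.g. about 1.96 for conf = 0.95
    z_crit = NormalDist().inv_cdf(1 - (1 - conf) / 2)
    # Unpooled standard error: each sample contributes its own variance
    se = sqrt(p1_hat * (1 - p1_hat) / n1 + p2_hat * (1 - p2_hat) / n2)
    diff = p1_hat - p2_hat
    return diff - z_crit * se, diff + z_crit * se


# Seat belt data: 3 of 123 belted and 13 of 290 unbelted children died
lower, upper = diff_proportion_ci(3, 123, 13, 290)
print(f"95% CI for p1 - p2: ({lower:.3f}, {upper:.3f})")
```

Since the interval contains 0, it agrees with the two-sided test at $\alpha = .05$, which did not reject $H_0$.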