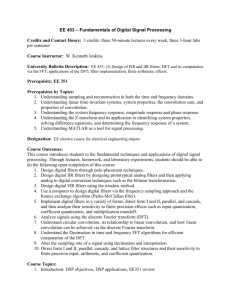

Digital Signal Processing Applications, Sanjit K. Mitra

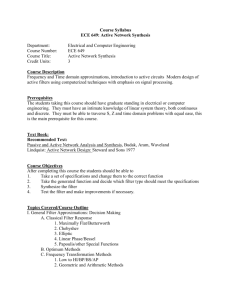
