Trimmed Means


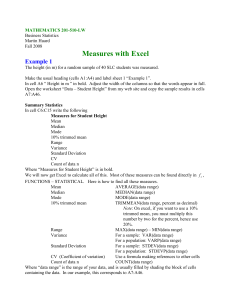

RAND R. WILCOX. Volume 4, pp. 2066–2067, in Encyclopedia of Statistics in Behavioral Science (ISBN-13: 978-0-470-86080-9; ISBN-10: 0-470-86080-4). Editors: Brian S. Everitt & David C. Howell. John Wiley & Sons, Ltd, Chichester, 2005.

A trimmed mean is computed by removing a proportion of the largest and smallest observations and averaging the values that remain. Included as special cases are the usual sample mean (no trimming) and the median.

As a simple illustration, consider the 11 values 6, 2, 10, 14, 9, 8, 22, 15, 13, 82, and 11. To compute a 10% trimmed mean, multiply the sample size by 0.1 and round the result down to the nearest integer. In the example, 0.1 × 11 = 1.1, so this yields g = 1. Then remove the g smallest values (here, 2) and the g largest (here, 82), and average the values that remain. In the illustration, this yields 12. In contrast, the sample mean is 17.45. To compute a 20% trimmed mean, proceed as before, except that g is now 0.2 times the sample size, rounded down to the nearest integer. Some researchers have considered a more general type of trimmed mean [2], but the description just given is the one most commonly used.

Why trim observations, and if one does trim, why not use the median? Consider the goal of achieving a relatively low standard error. Under normality, the optimal amount of trimming is zero; that is, use the untrimmed mean. But under very small departures from normality, the mean is no longer optimal and can perform rather poorly (e.g., [1], [3], [4], [8]). As we move toward situations in which outliers are common, the median will have a smaller standard error than the mean, but under normality, the median's standard error is relatively high. So the idea behind trimmed means is to use a compromise amount of trimming, with the goal of achieving a relatively small standard error under both normal and nonnormal distributions. (For an alternative approach, see M Estimators of Location.)
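The trimming rule described earlier (multiply the sample size by the trimming proportion, round down to obtain g, drop the g smallest and g largest values, and average the rest) can be sketched in a few lines of Python. This is a minimal illustration only: the function name `trimmed_mean` and its parameters are assumptions introduced here, not part of the article or of any particular software package.

```python
import math


def trimmed_mean(values, proportion):
    """Average the values left after dropping the g smallest and g largest.

    `proportion` is the fraction trimmed from EACH tail (0.1 for a 10%
    trimmed mean). Hypothetical helper written for illustration.
    """
    if not 0 <= proportion < 0.5:
        raise ValueError("proportion must be at least 0 and less than 0.5")
    n = len(values)
    if n == 0:
        raise ValueError("values must not be empty")
    # g = sample size times the trimming proportion, rounded down.
    g = math.floor(proportion * n)
    # Sort, then discard g observations from each end.
    kept = sorted(values)[g:n - g]
    return sum(kept) / len(kept)


# The 11 observations from the illustration in the text.
data = [6, 2, 10, 14, 9, 8, 22, 15, 13, 82, 11]

print(trimmed_mean(data, 0.1))            # 12.0  (g = 1: drops 2 and 82)
print(round(trimmed_mean(data, 0.0), 2))  # 17.45 (no trimming: the sample mean)
print(round(trimmed_mean(data, 0.2), 2))  # 11.43 (g = 2: drops 2, 6, 22, 82)
```

With a proportion of 0 the function returns the ordinary sample mean. The single extreme value 82 is what pulls the untrimmed mean up to 17.45, and removing just one observation from each tail brings the estimate down to 12.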
Trimming observations with the goal of obtaining a more accurate estimator might seem counterintuitive, but this result has been known for over two centuries. For a nontechnical explanation, see [6].

Another motivation for trimming arises when sampling from a skewed distribution and testing some hypothesis. Skewness adversely affects control over the probability of a type I error, as well as power, when using methods based on means (e.g., [5], [7]). As the amount of trimming increases, these problems are reduced, but if too much trimming is used, power can be low. So, in particular, using a median to deal with a skewed distribution might make it less likely that the null hypothesis is rejected when it is in fact false.

Testing hypotheses on the basis of trimmed means is possible, but theoretically sound methods are not immediately obvious. These issues are easily addressed, however, and easy-to-use software is available as well, some of which is free [7, 8].

References

[1] Hampel, F.R., Ronchetti, E.M., Rousseeuw, P.J., & Stahel, W.A. (1986). Robust Statistics, Wiley, New York.
[2] Hogg, R.V. (1974). Adaptive robust procedures: a partial review and some suggestions for future applications and theory, Journal of the American Statistical Association 69, 909–922.
[3] Huber, P.J. (1981). Robust Statistics, Wiley, New York.
[4] Staudte, R.G. & Sheather, S.J. (1990). Robust Estimation and Testing, Wiley, New York.
[5] Westfall, P.H. & Young, S.S. (1993). Resampling Based Multiple Testing, Wiley, New York.
[6] Wilcox, R.R. (2001). Fundamentals of Modern Statistical Methods: Substantially Increasing Power and Accuracy, Springer, New York.
[7] Wilcox, R.R. (2003). Applying Conventional Statistical Techniques, Academic Press, San Diego.
[8] Wilcox, R.R. (2004). (in press). Introduction to Robust Estimation and Hypothesis Testing, 2nd Edition, Academic Press, San Diego.

Further Reading

Tukey, J.W. (1960). A survey of sampling from contaminated normal distributions, in Contributions to Probability and Statistics, I. Olkin, S. Ghurye, W. Hoeffding, W. Madow & H. Mann, eds, Stanford University Press, Stanford.

RAND R. WILCOX