Nonparametric tests


If data do not come from a normal population (and if the sample is

not large), we cannot use a t-test. One useful approach to creating

test statistics is through the use of rank statistics.

Sign test for one population

The sign test assumes the data come from a continuous distribution with model

$Y_i = \eta + \epsilon_i$, $i = 1, \dots, n$.

• $\eta$ is the population median and the $\epsilon_i$ follow an unknown, continuous distribution (with median 0).

• Want to test $H_0: \eta = \eta_0$, where $\eta_0$ is known, versus one of the three common alternatives: $H_1: \eta < \eta_0$, $H_1: \eta \neq \eta_0$, or $H_1: \eta > \eta_0$.


• Test statistic is $B^* = \sum_{i=1}^{n} I\{y_i > \eta_0\}$, the number of $y_1, \dots, y_n$ larger than $\eta_0$.

• Under $H_0$, $B^* \sim \mathrm{bin}(n, 0.5)$.

• Reject $H_0$ if $B^*$ is “unusual” relative to this binomial distribution.
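The sign test above can be sketched in a few lines of Python (used here instead of R so the example needs only the standard library). This is a minimal sketch, not a reference implementation: the function name `sign_test`, the data, and the choice to drop observations equal to $\eta_0$ are assumptions, and the two-sided p-value uses the "double the smaller tail" convention, which is only one of several in use.

```python
from math import comb

def sign_test(y, eta0, alternative="two-sided"):
    """Exact sign test of H0: median = eta0 (illustrative sketch)."""
    # Assumption: values equal to eta0 are dropped; under a continuous
    # distribution such ties occur with probability zero.
    kept = [v for v in y if v != eta0]
    n = len(kept)
    b = sum(1 for v in kept if v > eta0)          # B* = number of y_i above eta0
    pmf = [comb(n, k) * 0.5 ** n for k in range(n + 1)]  # bin(n, 0.5) under H0
    upper = sum(pmf[b:])        # P(B >= b), evidence for eta > eta0
    lower = sum(pmf[: b + 1])   # P(B <= b), evidence for eta < eta0
    if alternative == "greater":
        p = upper
    elif alternative == "less":
        p = lower
    else:
        # Assumption: two-sided p-value doubles the smaller tail, capped at 1.
        p = min(1.0, 2 * min(upper, lower))
    return b, n, p

# Hypothetical data, not the eye relief data from the notes.
y = [12.1, 9.8, 11.4, 10.9, 13.0, 10.2, 11.7, 12.5]
b, n, p = sign_test(y, eta0=10.0, alternative="greater")
print(b, n, p)
```

Here 7 of the 8 hypothetical observations exceed 10, so the one-sided p-value is $P(B \ge 7) = 9/256$ under bin(8, 0.5).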

Example: Eye relief data.

Question: How would you form a “large sample” test statistic from $B^*$? You would not need to do that here, but this is common with more complex test statistics with non-standard distributions.
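One possible answer to the question above, sketched under the assumption that n is large enough for the normal approximation to the binomial to be reasonable: standardize $B^*$ by its null mean and variance.

```latex
% Under H_0, B^* ~ bin(n, 0.5), so E(B^*) = n/2 and Var(B^*) = n/4.
Z_0 = \frac{B^* - n/2}{\sqrt{n/4}} \;\overset{\bullet}{\sim}\; N(0, 1)
\quad \text{when } H_0 \text{ is true.}
```

Large positive values of $Z_0$ point toward $\eta > \eta_0$ and large negative values toward $\eta < \eta_0$.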


Wilcoxon signed rank test

• Again, test H0 : η = η0 . However, this method assumes a

symmetric pdf around the median η.

• Test statistic built from ranks of

{|y1 − η0 |, |y2 − η0 |, . . . , |yn − η0 |}, denoted R1 , . . . , Rn .

• The signed rank for observation i is
$R_i^+ = \begin{cases} R_i & y_i > \eta_0 \\ 0 & y_i \le \eta_0 \end{cases}$.

• The “signed rank” statistic is $W^+ = \sum_{i=1}^{n} R_i^+$.

• If $W^+$ is large, this is evidence that $\eta > \eta_0$.

• If $W^+$ is small, this is evidence that $\eta < \eta_0$.
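The construction of $W^+$ can be illustrated with a short Python sketch. The function name `signed_rank_statistic` and the data are hypothetical, and the use of average ranks (midranks) for tied absolute deviations is an assumption, since the notes do not say how ties are handled.

```python
def signed_rank_statistic(y, eta0):
    """Return (W+, ranks): ranks of |y_i - eta0| and the sum over y_i > eta0."""
    d = [v - eta0 for v in y]
    abs_d = [abs(v) for v in d]
    order = sorted(range(len(y)), key=lambda i: abs_d[i])
    ranks = [0.0] * len(y)
    pos = 0
    while pos < len(order):
        # Find the block of tied absolute deviations starting at pos.
        end = pos
        while end + 1 < len(order) and abs_d[order[end + 1]] == abs_d[order[pos]]:
            end += 1
        # Assumption: tied values share the average of their 1-based ranks.
        avg_rank = (pos + end) / 2 + 1
        for k in range(pos, end + 1):
            ranks[order[k]] = avg_rank
        pos = end + 1
    # R_i^+ equals R_i when y_i > eta0 and 0 otherwise.
    w_plus = sum(r for r, di in zip(ranks, d) if di > 0)
    return w_plus, ranks

# Hypothetical data for illustration only.
y = [11.5, 9.0, 12.0, 10.5, 13.5]
w_plus, ranks = signed_rank_statistic(y, eta0=10.0)
print(w_plus, ranks)
```

With these five values the largest possible $W^+$ is 1 + 2 + 3 + 4 + 5 = 15, so a value near 15 points toward $\eta > \eta_0$.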


• Both the sign test and the signed-rank test can be used with

paired data (e.g. we could test whether the median difference is

zero).

• When to use what? Use t-test when data are approximately

normal, or in large sample sizes. Use sign test when data are

highly skewed, multimodal, etc. Use signed rank test when data

are approximately symmetric but non-normal (e.g. heavy or

light-tailed, multimodal yet symmetric, etc.)

Example: Weather station data

Note: The sign test and signed-rank test are more flexible than the

t-test because they require less strict assumptions, but the t-test has

more power when the data are approximately normal.


Mann-Whitney-Wilcoxon nonparametric test (pp. 795–796)

The Mann-Whitney test assumes

$Y_{11}, \dots, Y_{1n_1} \overset{iid}{\sim} F_1$ independent of $Y_{21}, \dots, Y_{2n_2} \overset{iid}{\sim} F_2$,

where F1 is the cdf of data from the first group and F2 is the cdf of

data from the second group. The null hypothesis is H0 : F1 = F2 , i.e.

that the distributions of data in the two groups are identical.

The alternative is $H_1: F_1 \neq F_2$. One-sided tests can also be performed.

Although the test statistic is built assuming F1 = F2 , the alternative

is often taken to be that the population medians are unequal. This is

a fine way to report the results of the test. Additionally, a CI will

give a plausible range for ∆ in the shift model F2 (x) = F1 (x − ∆). ∆

can be the difference in medians or means.


The Mann-Whitney test is intuitive. The data are

y11 , y12 , . . . , y1n1 and y21 , y22 , . . . , y2n2 .

For each observation j in the first group, count the number $c_j$ of observations in the second group that are smaller; each tie adds 0.5 to the count.

Assuming H0 is true, on average half the observations in group 2

would be above Y1j and half would be below if they come from the

same distribution. That is E(cj ) = 0.5n2 .

The sum of these counts is $U = \sum_{j=1}^{n_1} c_j$, which has mean $E(U) = 0.5 n_1 n_2$. The variance is a bit harder to derive, but is $\mathrm{Var}(U) = n_1 n_2 (n_1 + n_2 + 1)/12$.


Something akin to the CLT tells us

$Z_0 = \dfrac{U - E(U)}{\sqrt{n_1 n_2 (n_1 + n_2 + 1)/12}} \overset{\bullet}{\sim} N(0, 1)$

when $H_0$ is true (the dot over $\sim$ means “approximately distributed as”). Seeing a U far away from what we expect under the null gives evidence that $H_0$ is false; U is then standardized as usual (subtract off the mean we expect under the null and divide by an estimate of the standard deviation of U).

A p-value can be computed as usual as well as a CI.
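The counting argument and the standardization can be sketched directly in Python. The function name `mann_whitney_u` and the two small samples are hypothetical, the variance formula is the one quoted in these notes (no tie correction), and the two-sided p-value relies on the normal approximation, which is crude for samples this small.

```python
from math import erf, sqrt

def mann_whitney_u(group1, group2):
    """Return (U, Z0, two-sided p) using the normal approximation."""
    n1, n2 = len(group1), len(group2)
    u = 0.0
    for y1 in group1:
        for y2 in group2:
            if y2 < y1:
                u += 1.0      # group-2 observation is smaller than y1
            elif y2 == y1:
                u += 0.5      # ties contribute one half
    mean_u = 0.5 * n1 * n2                    # E(U) under H0
    var_u = n1 * n2 * (n1 + n2 + 1) / 12      # Var(U) under H0, ignoring ties
    z0 = (u - mean_u) / sqrt(var_u)
    # Standard normal cdf written with erf: Phi(z) = 0.5 * (1 + erf(z / sqrt(2))).
    phi = 0.5 * (1 + erf(abs(z0) / sqrt(2)))
    p_two_sided = 2 * (1 - phi)
    return u, z0, p_two_sided

# Hypothetical samples, not the dental measurements from the notes.
g1 = [21, 24, 26, 28]
g2 = [18, 20, 22, 23, 25]
u, z0, p = mann_whitney_u(g1, g2)
print(u, z0, p)
```

For these samples the counts $c_j$ are 2, 4, 5, and 5, so U = 16, compared with a null mean of $0.5 \cdot 4 \cdot 5 = 10$.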

Example: Dental measurements.

Note: This test essentially boils down to replacing the observed

values with their ranks and carrying out a simple pooled t-test!


Regression models

Model: a mathematical approximation of the relationship among real

quantities (equation & assumptions about terms).

• We have seen several models for a single outcome variable from

either one or two populations.

• Now we’ll consider models that relate an outcome to one or more

continuous predictors.


Section 1.1: relationships between variables

• A functional relationship between two variables is

deterministic, e.g. Y = cos(2.1x) + 4.7. Although often an

approximation to reality (e.g. the solution to a differential

equation under simplifying assumptions), the relation itself is

“perfect.” (e.g. page 3)

• A statistical or stochastic relationship introduces some “error”

in seeing Y , typically a functional relationship plus noise.

(e.g. Figures 1.1, 1.2, and 1.3; pp. 4–5).

Statistical relationship: not a perfect line or curve, but a general

tendency plus slop.


Example:

x    y
1    1
2    1
3    2
4    2
5    4

Let’s obtain the plot in R: x=c(1,2,3,4,5); y=c(1,1,2,2,4); plot(x,y)

We must decide on the proper functional form for this relationship, i.e. the trend: Linear? Curved? Piecewise continuous?


Section 1.3: Simple linear regression model

For a sample of pairs $\{(x_i, Y_i)\}_{i=1}^{n}$, let

$Y_i = \beta_0 + \beta_1 x_i + \epsilon_i$, $i = 1, \dots, n$,

where

• Y1 , . . . , Yn are realizations of the response variable,

• x1 , . . . , xn are the associated predictor variables,

• β0 is the intercept of the regression line,

• β1 is the slope of the regression line, and

• $\epsilon_1, \dots, \epsilon_n$ are unobserved, uncorrelated random errors.

This model assumes that x and Y are linearly related, i.e. the mean

of Y changes linearly with x.


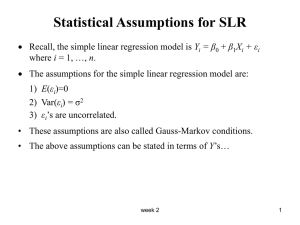

Assumptions about the random errors

We assume that $E(\epsilon_i) = 0$, $\mathrm{var}(\epsilon_i) = \sigma^2$, and $\mathrm{corr}(\epsilon_i, \epsilon_j) = 0$ for $i \neq j$: mean zero, constant variance, uncorrelated.

• β0 + β1 xi is the deterministic part of the model. It is fixed but

unknown.

• $\epsilon_i$ represents the random part of the model.

The goal of statistics is often to separate signal from noise; which is

which here?


Note that

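$E(Y_i) = E(\beta_0 + \beta_1 x_i + \epsilon_i) = \beta_0 + \beta_1 x_i + E(\epsilon_i) = \beta_0 + \beta_1 x_i$,

and similarly

$\mathrm{var}(Y_i) = \mathrm{var}(\beta_0 + \beta_1 x_i + \epsilon_i) = \mathrm{var}(\epsilon_i) = \sigma^2$.

Also, $\mathrm{corr}(Y_i, Y_j) = 0$ for $i \neq j$.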

(We will draw a picture...)


Example: Pages 10–11 (see picture). We have

E(Y ) = 9.5 + 2.1x.

When x = 45, our expected y-value is 104, but we will actually

observe a value somewhere around 104.

What does 9.5 represent here? Is it sensible/interpretable?

How is 2.1 interpreted here?

In general, β1 represents how the mean response changes when x is

increased one unit.


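Matrix form: Note the simple linear regression model can be written in matrix terms as

$\begin{bmatrix} Y_1 \\ Y_2 \\ \vdots \\ Y_n \end{bmatrix} = \begin{bmatrix} 1 & x_1 \\ 1 & x_2 \\ \vdots & \vdots \\ 1 & x_n \end{bmatrix} \begin{bmatrix} \beta_0 \\ \beta_1 \end{bmatrix} + \begin{bmatrix} \epsilon_1 \\ \epsilon_2 \\ \vdots \\ \epsilon_n \end{bmatrix}$,

or equivalently

$\mathbf{Y} = \mathbf{X}\boldsymbol{\beta} + \boldsymbol{\epsilon}$,

where

$\mathbf{Y} = \begin{bmatrix} Y_1 \\ Y_2 \\ \vdots \\ Y_n \end{bmatrix}$, $\mathbf{X} = \begin{bmatrix} 1 & x_1 \\ 1 & x_2 \\ \vdots & \vdots \\ 1 & x_n \end{bmatrix}$, $\boldsymbol{\beta} = \begin{bmatrix} \beta_0 \\ \beta_1 \end{bmatrix}$, $\boldsymbol{\epsilon} = \begin{bmatrix} \epsilon_1 \\ \epsilon_2 \\ \vdots \\ \epsilon_n \end{bmatrix}$.

This will be useful later on.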

Section 1.6: Estimation of (β0 , β1 )

β0 and β1 are unknown parameters to be estimated from the data:

(x1 , Y1 ), (x2 , Y2 ), . . . , (xn , Yn ).

They completely determine the unknown mean at each value of x:

E(Y ) = β0 + β1 x.

Since we expect the various $Y_i$ to be both above and below $\beta_0 + \beta_1 x_i$ by roughly the same amount ($E(\epsilon_i) = 0$), a good-fitting line $b_0 + b_1 x$ will go through the “heart” of the data points in a scatterplot.

The method of least-squares formalizes this idea by minimizing the

sum of the squared deviations of the observed yi from the line

b0 + b1 xi .


Least squares method for estimating $(\beta_0, \beta_1)$. The sum of squared deviations about the line is

$Q(\beta_0, \beta_1) = \sum_{i=1}^{n} [Y_i - (\beta_0 + \beta_1 x_i)]^2$.

Least squares minimizes $Q(\beta_0, \beta_1)$ over all $(\beta_0, \beta_1)$.

Calculus shows that the least squares estimators are

$b_1 = \dfrac{\sum_{i=1}^{n} (x_i - \bar{x})(Y_i - \bar{Y})}{\sum_{i=1}^{n} (x_i - \bar{x})^2}$,

$b_0 = \bar{Y} - b_1 \bar{x}$.
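As a quick check of the estimator formulas, here is a minimal Python sketch applied to the five (x, y) pairs from the earlier example. The function name `least_squares` is hypothetical; the notes themselves work in R, and Python is used here only so the example runs with the standard library.

```python
def least_squares(x, y):
    """Return (b0, b1) from the centered-sum formulas for simple linear regression."""
    n = len(x)
    xbar = sum(x) / n
    ybar = sum(y) / n
    # Numerator: sum of (x_i - xbar)(Y_i - Ybar); denominator: sum of (x_i - xbar)^2.
    sxy = sum((xi - xbar) * (yi - ybar) for xi, yi in zip(x, y))
    sxx = sum((xi - xbar) ** 2 for xi in x)
    b1 = sxy / sxx
    b0 = ybar - b1 * xbar
    return b0, b1

# Data from the small example earlier in the notes.
x = [1, 2, 3, 4, 5]
y = [1, 1, 2, 2, 4]
b0, b1 = least_squares(x, y)
print(b0, b1)

# The same slope from the uncentered ("raw sums") form of the formula.
n = len(x)
b1_raw = (n * sum(xi * yi for xi, yi in zip(x, y)) - sum(x) * sum(y)) / (
    n * sum(xi ** 2 for xi in x) - sum(x) ** 2
)
```

By hand, $\bar{x} = 3$, $\bar{Y} = 2$, the numerator is 7 and the denominator is 10, so $b_1 = 0.7$ and $b_0 = 2 - 0.7 \cdot 3 = -0.1$ (up to floating-point rounding in the code).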


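Proof:

$\dfrac{\partial Q}{\partial \beta_1} = \sum_{i=1}^{n} 2(Y_i - \beta_0 - \beta_1 x_i)(-x_i) = -2\left[\sum_{i=1}^{n} x_i Y_i - \beta_0 \sum_{i=1}^{n} x_i - \beta_1 \sum_{i=1}^{n} x_i^2\right]$.

$\dfrac{\partial Q}{\partial \beta_0} = \sum_{i=1}^{n} 2(Y_i - \beta_0 - \beta_1 x_i)(-1) = -2\left[\sum_{i=1}^{n} Y_i - n\beta_0 - \beta_1 \sum_{i=1}^{n} x_i\right]$.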

Setting these equal to zero, and dropping indexes on the summations, we have

$\sum x_i Y_i = b_0 \sum x_i + b_1 \sum x_i^2$
$\sum Y_i = n b_0 + b_1 \sum x_i$    ⇐ “normal” equations

Multiply the first by n and multiply the second by $\sum x_i$ and subtract, yielding

$n \sum x_i Y_i - \sum x_i \sum Y_i = b_1 \left[ n \sum x_i^2 - \left( \sum x_i \right)^2 \right]$.

Solving for $b_1$ we get

$b_1 = \dfrac{n \sum x_i Y_i - \sum x_i \sum Y_i}{n \sum x_i^2 - \left( \sum x_i \right)^2} = \dfrac{\sum x_i Y_i - n\bar{Y}\bar{x}}{\sum x_i^2 - n\bar{x}^2}$.


The second normal equation immediately gives

b0 = Ȳ − b1 x̄.

Our solution for $b_1$ is correct but not as aesthetically pleasing as the purported solution

$b_1 = \dfrac{\sum_{i=1}^{n} (x_i - \bar{x})(Y_i - \bar{Y})}{\sum_{i=1}^{n} (x_i - \bar{x})^2}$.

Show

$\sum (x_i - \bar{x})(Y_i - \bar{Y}) = \sum x_i Y_i - n\bar{Y}\bar{x}$

$\sum (x_i - \bar{x})^2 = \sum x_i^2 - n\bar{x}^2$
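One way to verify the two identities, sketched here as a hint rather than as the intended classroom argument, is to expand the products and use $\sum x_i = n\bar{x}$ and $\sum Y_i = n\bar{Y}$:

```latex
\begin{aligned}
\sum (x_i - \bar{x})(Y_i - \bar{Y})
  &= \sum x_i Y_i - \bar{Y} \sum x_i - \bar{x} \sum Y_i + n\bar{x}\bar{Y} \\
  &= \sum x_i Y_i - n\bar{Y}\bar{x} - n\bar{x}\bar{Y} + n\bar{x}\bar{Y}
   = \sum x_i Y_i - n\bar{Y}\bar{x}, \\[4pt]
\sum (x_i - \bar{x})^2
  &= \sum x_i^2 - 2\bar{x} \sum x_i + n\bar{x}^2
   = \sum x_i^2 - 2n\bar{x}^2 + n\bar{x}^2
   = \sum x_i^2 - n\bar{x}^2 .
\end{aligned}
```

The second identity is the first with $Y_i$ replaced by $x_i$.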


The line Ŷ = b0 + b1 x is called the least squares estimated regression

line. Why are the least squares estimates (b0 , b1 ) “good?”

1. They are unbiased: E(b0 ) = β0 and E(b1 ) = β1 .

2. Among all linear unbiased estimators, they have the smallest

variance. They are best linear unbiased estimators, BLUEs.

We will show the first property in the next class. The second property is formally called the “Gauss-Markov” theorem and is proved in a linear models course. (Look it up on Wikipedia if interested.)
