Inferences When Comparing Two Means


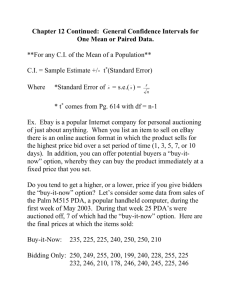

Dr. Tom Ilvento, STAT 200

Thus far…

We have made an inference from a single sample mean and proportion to a population, using:

- The sample mean (or proportion)
- The sample standard deviation
- Knowledge of the sampling distribution for the mean (proportion)

And it matters whether sigma is known or unknown.

Testing differences between two means or proportions

The same strategy will apply for testing differences between two means or proportions. These will be two independent, random samples. We will have:

- An estimate (of the difference)
- A hypothesized value
- A standard error
- Knowledge of a sampling distribution

We can make a point estimate and a hypothesis test of the difference of the two means, or a confidence interval around the difference of the two means, with a few twists. We will also need to come up with:

- An estimator of the difference of two means/proportions
- The standard error of the sampling distribution for our estimator

With two-sample problems we have two sources of variability and sampling error. We also must assume the samples are independent random samples. The twists:

- Means: is sigma known or unknown? Should we pool the variance?
- Proportions: when testing H0, we need to check whether p1 = p2.

What are independent, random samples?

Independent samples means that each sample, and the resulting variables, do not influence the other sample.

- If we sampled the same subjects at two different times, we would not have independent samples.
- If we sampled husbands and wives, they would not be independent.

However, we have a strategy to assess change over time in the same subject: the paired difference test.

Decision Tree for Two Means

| Target | Assumptions | Test statistic |
|---|---|---|
| H0: µ1 − µ2 = D0 | Independent random samples; sigma known | z, using population variances |
| H0: µ1 − µ2 = D0 | Independent random samples; sigma unknown | t, using sample variances |
| H0: µ1 − µ2 = D0 | Independent random samples; sigma unknown; equal variances can be assumed | t, using pooled variance s_p² |

Decision Tree for Two Proportions

| Testing | Assumptions | Test statistic |
|---|---|---|
| H0: p1 − p2 = 0 | Independent random samples; large sample size (n1, n2 > 30); p1 = p2 known under H0 | z, using pooled sample proportion p_p |
| H0: p1 − p2 = D0, D0 ≠ 0 | Independent random samples; large sample size | z |

Example Problem: Manufacturer of Roof Shingles

Studies have shown that weight is an important customer perception of quality, as well as a company cost consideration. The last stage of the assembly line packages the shingles before placement on wooden pallets. The data are the weights (in pounds) of pallets of the Boston and Vermont varieties of shingles.

- Open up PALLET.xls
- Run Descriptives
- Produce a Box and Whisker plot for both groups

Descriptive Statistics

| Statistic | Boston | Vermont |
|---|---|---|
| Mean | 3124.21 | 3704.04 |
| Standard Error | 1.81 | 2.57 |
| Median | 3122.00 | 3704.00 |
| Mode | 3098.00 | 3728.00 |
| Standard Deviation | 34.71 | 46.74 |
| Sample Variance | 1204.99 | 2185.03 |
| Kurtosis | 0.77 | 0.21 |
| Skewness | 0.53 | 0.29 |
| Range | 222.00 | 290.00 |
| Minimum | 3044.00 | 3566.00 |
| Maximum | 3266.00 | 3856.00 |
| Sum | 1149711.00 | 1222334.00 |
| Count | 368 | 330 |
| Confidence Level (95.0%) | 3.558 | 5.062 |

[Figure: box-and-whisker plot of pallet weight in pounds, Boston and Vermont]

Lecture Notes problem

- Class with lecture notes: n1 = 86, mean1 = 8.48, s1² = .94
- Class without lecture notes: n2 = 35, mean2 = 7.80, s2² = 2.99

Do the samples provide sufficient evidence to conclude that there is a difference in the mean responses of the two groups? Use α = .01.

Roof Shingles problem

We need to figure out the sampling distribution of (mean1 − mean2).

| Step | Roof shingles |
|---|---|
| Null hypothesis | H0: (µ1 − µ2) = ? |
| Alternative | Ha: (µ1 − µ2) ≠ ?, two-tailed test |
| Assumptions | Two independent samples, n is large |
| Test statistic | t* = ? |
| Rejection region | t(α/2 = .05/2 = .025) = ? |
| Calculation | t* = ? |
| Conclusion | t* ? t(.025), so ? |
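The blanks in the roof shingles template can be filled in from summary statistics alone. The sketch below is a minimal Python illustration using only the standard library; `welch_t` and `pooled_t` are hypothetical helper names, not functions in Excel or PHStat. It applies the standard-error formulas for two independent samples: separate sample variances (with the Welch-Satterthwaite approximation for the degrees of freedom, which appears consistent with the 603 d.f. Excel reports for the unequal-variance test) and, as the alternative, the pooled variance.

```python
import math


# Hypothetical helpers for illustration; these are not Excel or PHStat functions.
def welch_t(mean1, var1, n1, mean2, var2, n2, d0=0.0):
    """Two-sample t using separate sample variances (unequal variances)."""
    a, b = var1 / n1, var2 / n2              # squared standard errors of each mean
    se = math.sqrt(a + b)                    # standard error of (mean1 - mean2)
    t = ((mean1 - mean2) - d0) / se
    # Welch-Satterthwaite approximation to the degrees of freedom
    df = (a + b) ** 2 / (a ** 2 / (n1 - 1) + b ** 2 / (n2 - 1))
    return t, se, df


def pooled_t(mean1, var1, n1, mean2, var2, n2, d0=0.0):
    """Two-sample t using the pooled variance s_p^2 (equal variances assumed)."""
    sp2 = ((n1 - 1) * var1 + (n2 - 1) * var2) / (n1 + n2 - 2)
    se = math.sqrt(sp2 * (1 / n1 + 1 / n2))
    t = ((mean1 - mean2) - d0) / se
    return t, sp2, n1 + n2 - 2


# Pallet weight summary statistics (mean, variance, count) from the Excel t-test output
boston = (3124.215, 1204.992, 368)
vermont = (3704.042, 2185.032, 330)

t_w, se_w, df_w = welch_t(*boston, *vermont)
t_p, sp2, df_p = pooled_t(*boston, *vermont)
print(f"Unequal variances: t* = {t_w:.2f}, SE = {se_w:.3f}, d.f. = {df_w:.0f}")
print(f"Equal variances:   t* = {t_p:.2f}, s_p^2 = {sp2:.3f}, d.f. = {df_p}")
```

Up to rounding, the two statistics should agree with the t Stat, df, and Pooled Variance rows of the Excel output in these notes. The pooled version is only appropriate when the equal-variance assumption is reasonable.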
The mean of the sampling distribution of (mean1 − mean2) will equal (µ1 − µ2) = D0. We usually designate the difference expected under the null hypothesis as D0. Most often we take D0 = 0: no difference.

H0: (µ1 − µ2) = ?

Standard Error of the difference of two means

The standard error of the sampling distribution of the difference of two means is:

$$\sigma_{(\bar{x}_1 - \bar{x}_2)} = \sqrt{\frac{\sigma_1^2}{n_1} + \frac{\sigma_2^2}{n_2}}$$

By the Central Limit Theorem, the sampling distribution of (mean1 − mean2) is approximately normal for large samples.

The Standard Error for the difference of two means

It is based on two independent random samples. We typically use the sample estimates of σ1 and σ2, which are s1 and s2, and then use the t-distribution:

$$\hat{\sigma}_{(\bar{x}_1 - \bar{x}_2)} = \sqrt{\frac{s_1^2}{n_1} + \frac{s_2^2}{n_2}}$$

The Test Statistic for our problem

$$t^* = \frac{(3124.2 - 3704.0) - 0}{\sqrt{\dfrac{1204.99}{368} + \dfrac{2185.03}{330}}}$$

Comparison of two means

| Step | Roof shingles |
|---|---|
| Null hypothesis | H0: (µ1 − µ2) = 0 |
| Alternative | Ha: (µ1 − µ2) ≠ 0, two-tailed test |
| Assumptions | Two independent samples, sigma is unknown |
| Test statistic | t* = (3124.2 − 3704.0 − 0)/√[(1204.99/368) + (2185.03/330)] |
| Rejection region | t(α/2 = .05/2, 603 d.f.) = −1.964 or 1.964 |
| Calculation | t* = (−579.8 − 0)/3.146 ≈ −184.3 |
| Conclusion | t* < t(α/2, 603 d.f.): −184 < −1.964, so reject H0: (µ1 − µ2) = 0 |

What is the p-value for our statistic?

t* = −184. It is huge!
Its p-value is smaller than α/2 = .05/2 (p < .001). Therefore, we reject H0: (µ1 − µ2) = 0.

Do this with Excel Data Analysis

- Tools > Data Analysis > t-Test: Two-Sample Assuming Unequal Variances
- Pick both variables
- Note whether labels are included or not
- Tell Excel where to put the output
- Dress it up

Excel output, t-Test: Two-Sample Assuming Unequal Variances:

| | Boston | Vermont |
|---|---|---|
| Mean | 3124.215 | 3704.042 |
| Variance | 1204.992 | 2185.032 |
| Observations | 368 | 330 |
| Hypothesized Mean Difference | 0 | |
| df | 603 | |
| t Stat | -184.321 | |
| P(T<=t) one-tail | 0 | |
| t Critical one-tail | 1.647385 | |
| P(T<=t) two-tail | 0 | |
| t Critical two-tail | 1.963906 | |

95% Confidence Interval for the Difference of Two Means

With sigma known:

$$(\bar{x}_1 - \bar{x}_2) \pm z_{\alpha/2}\,\sigma_{(\bar{x}_1 - \bar{x}_2)}$$

With sigma estimated from the samples, where s is the estimated standard error:

$$(\bar{x}_1 - \bar{x}_2) \pm t_{\alpha/2}\,s_{(\bar{x}_1 - \bar{x}_2)}$$

What about if the variances are equal to each other?

There may be times when we think the difference between the two samples lies primarily in the means, while the variances are similar. In this case we ought to use information from both samples to estimate sigma. We will use a t-test and the t distribution.

Assumptions:

- The population variances are equal
- Random samples are selected independently of each other

The standard error for a small-sample difference of means

Since we assume σ1 = σ2, s1 and s2 both estimate the same σ. We should therefore pool our estimate of the standard error of the sampling distribution, using information from both sample estimates.

Step 1: Pooled estimate of the variance. The formula is a weighted average of s1² and s2²:

$$s_p^2 = \frac{(n_1 - 1)s_1^2 + (n_2 - 1)s_2^2}{(n_1 - 1) + (n_2 - 1)}$$

Note: the denominator reduces to (n1 + n2 − 2), which is the d.f. for the t distribution.

Step 2: Use the pooled estimate of the variance to calculate the estimate of the standard error:

$$\hat{\sigma}_{(\bar{x}_1 - \bar{x}_2)} = \sqrt{\frac{s_p^2}{n_1} + \frac{s_p^2}{n_2}} = \sqrt{s_p^2\left(\frac{1}{n_1} + \frac{1}{n_2}\right)}$$

or, alternatively,

$$\hat{\sigma}_{(\bar{x}_1 - \bar{x}_2)} = s_p\sqrt{\frac{1}{n_1} + \frac{1}{n_2}}$$

Do this with Excel: two sample assuming equal variances

What does pooling do for us?
- Pooling generates a weighted average as the estimate of the variance
- The weights are each sample's degrees of freedom, (n1 − 1) and (n2 − 1)
- A pooled estimate is thought to be a better estimate if we can assume the variances are equal
- Our degrees of freedom are larger: d.f. = n1 + n2 − 2
- Which means the critical t-value will be smaller

Excel output, t-Test: Two-Sample Assuming Equal Variances:

| | Boston | Vermont |
|---|---|---|
| Mean | 3124.215 | 3704.042 |
| Variance | 1204.992 | 2185.032 |
| Observations | 368 | 330 |
| Pooled Variance | 1668.258 | |
| Hypothesized Mean Difference | 0 | |
| df | 696 | |
| t Stat | -187.2495 | |
| P(T<=t) one-tail | 0 | |
| t Critical one-tail | 1.647046 | |
| P(T<=t) two-tail | 0 | |
| t Critical two-tail | 1.963378 | |

What about the difference in proportions?

It is based on large samples only, and follows the same strategy as for the mean: we calculate the difference in the two sample proportions, establish the sampling distribution for our estimator, calculate a standard error of this sampling distribution, and conduct a test.

Differences of Proportions

For the null hypothesis H0: (p1 − p2) = D0, the standard error for (ps1 − ps2) is

$$s_{(p_{s1} - p_{s2})} = \sqrt{\frac{p_{s1} q_{s1}}{n_1} + \frac{p_{s2} q_{s2}}{n_2}}$$

where q = 1 − p, and the confidence interval is

$$100(1-\alpha)\%\ \text{C.I.} = (p_{s1} - p_{s2}) \pm z_{\alpha/2}\, s_{(p_{s1} - p_{s2})}$$

For Proportions

Since this is a large-sample problem, we can use the sample estimates ps1 and ps2 to estimate the standard error for a confidence interval, as in the formula above, since there is no assumption that the variances are equal.

Special note: a hypothesis test of p1 − p2 = 0 specifies that the proportions are equal, so we should pool the information. We use a pooled average, p_p, found by adding the total number of successes and dividing by the sum of the two sample sizes:

$$p_p = \frac{x_1 + x_2}{n_1 + n_2}$$

where x = number of successes for each group. The standard error for the test is then

$$s_{(p_{s1} - p_{s2})} = \sqrt{p_p q_p \left(\frac{1}{n_1} + \frac{1}{n_2}\right)}, \qquad q_p = 1 - p_p$$

Gender Differences in Shopping for Clothing

A survey of 500 people was taken to determine various information concerning consumer behavior. One question asked, "Do you enjoy shopping for clothing?" The data by sex:

- Women: 224 out of 260 indicated yes
- Men: 136 out of 240 indicated yes

Conduct a test that a higher proportion of women favor shopping, using α = .01. Use PHStat: two-sample tests, z-test for the difference of two proportions.

Use PHStat, z-test for difference in two proportions

I conducted a test to determine if women indicated they enjoyed shopping for clothing more than men did.

Z Test for Differences in Two Proportions

| Item | Value |
|---|---|
| Data | |
| Hypothesized Difference | 0 |
| Level of Significance | 0.01 |
| Group 1 Number of Successes | 224 |
| Group 1 Sample Size | 260 |
| Group 2 Number of Successes | 136 |
| Group 2 Sample Size | 240 |
| Intermediate Calculations | |
| Group 1 Proportion | 0.862 |
| Group 2 Proportion | 0.567 |
| Difference in Two Proportions | 0.295 |
| Average Proportion | 0.7200 |
| Z Test Statistic | 7.34 |
| Upper-Tail Test | |
| Upper Critical Value | 2.326 |
| p-Value | 0.000 |
| Decision | Reject the null hypothesis |
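The pooled z-test and the unpooled confidence interval for two proportions can be checked by hand. A minimal sketch in Python, assuming only the standard library (`statistics.NormalDist` supplies the normal CDF and inverse CDF); the function names are hypothetical, not PHStat functions. The 99% level for the interval is an illustrative choice to match α = .01, not part of the original problem.

```python
import math
from statistics import NormalDist


# Hypothetical helpers for illustration; not PHStat functions.
def two_proportion_z(x1, n1, x2, n2):
    """Upper-tail z test of H0: p1 - p2 = 0 against Ha: p1 > p2, pooling under H0."""
    ps1, ps2 = x1 / n1, x2 / n2
    pp = (x1 + x2) / (n1 + n2)                      # pooled proportion p_p
    se = math.sqrt(pp * (1 - pp) * (1 / n1 + 1 / n2))
    z = (ps1 - ps2) / se
    p_value = NormalDist().cdf(-z)                  # upper-tail area beyond z
    return z, p_value


def two_proportion_ci(x1, n1, x2, n2, conf=0.99):
    """Confidence interval for p1 - p2 using the unpooled sample proportions."""
    ps1, ps2 = x1 / n1, x2 / n2
    se = math.sqrt(ps1 * (1 - ps1) / n1 + ps2 * (1 - ps2) / n2)
    z_crit = NormalDist().inv_cdf(1 - (1 - conf) / 2)   # z_{alpha/2}
    diff = ps1 - ps2
    return diff - z_crit * se, diff + z_crit * se


# Clothing survey: women (group 1) 224 of 260, men (group 2) 136 of 240
z, p = two_proportion_z(224, 260, 136, 240)
low, high = two_proportion_ci(224, 260, 136, 240, conf=0.99)
upper_critical = NormalDist().inv_cdf(1 - 0.01)     # one-tailed, alpha = .01
print(f"z = {z:.2f}, upper critical value = {upper_critical:.3f}, p = {p:.2g}")
print(f"99% C.I. for p1 - p2: ({low:.3f}, {high:.3f})")
```

For the clothing survey this should match PHStat's Z Test Statistic and Upper Critical Value to rounding, with a p-value far below .001. The test uses the pooled proportion because H0 states p1 = p2; the interval does not.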
Because the null hypothesis was that the two groups are equal, I used a pooled estimate of the proportion who enjoyed shopping for clothing. For women, the proportion was .862 and for men it was .567. The results of my test indicate a highly significant difference between women and men, p < .001. I have evidence to suggest that women enjoy shopping for clothing more than men.

Paired Difference Test

When we record a pre-test and a post-test for the same individual, we cannot treat the samples as independent. In these cases we can do a Paired Difference Test. It's called a "Matched Pairs Test" in some books, or "Mean Difference" in your book.

The strategy is relatively simple:

- We create a new variable, which is the difference of the pre-test from the post-test (post-test minus pre-test)
- This new variable can be thought of as a single random sample
- For this new variable we calculate sample estimates of the mean and standard deviation
- Then we calculate a C.I. or conduct a hypothesis test on this new variable

Often the mean difference is referred to as x̄D, and the hypothesis is often H0: µD = 0. This is no different than any single mean test, large sample or small sample.

Example of paired difference data

| Patient | Time 1 | Time 2 | Difference |
|---|---|---|---|
| 1 | 5 | 5 | 0 |
| 2 | 1 | 3 | -2 |
| 3 | 0 | 0 | 0 |
| 4 | 1 | 1 | 0 |
| 5 | 0 | 1 | -1 |
| 6 | 2 | 1 | 1 |

Alzheimer's study: Paired Difference Test

Twenty Alzheimer patients were asked to spell 24 homophone pairs given in random order. Homophones are words that have the same pronunciation as another word with a different meaning and different spelling; for example, nun and none, or doe and dough. The number of confusions was recorded. The test was repeated one year later.

The researchers posed the following question: Do Alzheimer's patients show a significant increase in mean homophone confusion over time?
Use an alpha value of .05.

Alzheimer's study: Paired Difference Test statistics

| Statistic | Time 1 | Time 2 | Difference |
|---|---|---|---|
| Mean | 4.15 | 5.80 | 1.65 |
| Standard Error | 0.78 | 0.94 | 0.72 |
| Median | 5.00 | 5.50 | 1.00 |
| Mode | 5.00 | 3.00 | 0.00 |
| Standard Deviation | 3.50 | 4.21 | 3.20 |
| Sample Variance | 12.24 | 17.75 | 10.24 |
| Kurtosis | -0.85 | 0.13 | 0.08 |
| Skewness | 0.41 | 0.64 | 0.48 |
| Range | 11 | 16 | 12 |
| Minimum | 0 | 0 | -3 |
| Maximum | 11 | 16 | 9 |
| Sum | 83 | 116 | 33 |
| Count | 20 | 20 | 20 |

Alzheimer's study: Paired Difference Test

| Step | Alzheimer's study |
|---|---|
| Null hypothesis | H0: µD = 0 |
| Alternative | Ha: µD > 0, one-tailed test, upper |
| Assumptions | Small sample, normal |
| Test statistic | t* = (1.65 − 0)/(3.2/√20) |
| Rejection region | t(α = .05, 19 d.f.) = 1.729 |
| Calculation | t* = 2.31 |
| Conclusion | t* > t(α = .05, 19 d.f.): 2.31 > 1.729, so reject H0: µD = 0 |
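The paired difference strategy, reducing two columns to one column of differences and then running a one-sample t-test, is short enough to sketch directly. A minimal Python version, assuming only the standard library; the function names are hypothetical, and the critical value 1.729 is taken from the notes rather than computed, because the standard library has no t-distribution.

```python
import math
import statistics


# Hypothetical helpers for illustration.
def paired_t_from_samples(before, after):
    """Paired difference t for H0: mu_D = 0, where D = after - before."""
    if len(before) != len(after):
        raise ValueError("paired samples must have the same length")
    diffs = [a - b for a, b in zip(after, before)]  # the new single-sample variable
    n = len(diffs)
    mean_d = statistics.mean(diffs)
    sd_d = statistics.stdev(diffs)                  # sample SD (n - 1 divisor)
    return mean_d / (sd_d / math.sqrt(n)), mean_d, sd_d, n - 1


def paired_t_from_summary(mean_d, sd_d, n, mu0=0.0):
    """The same statistic when only the mean and SD of the differences are known."""
    return (mean_d - mu0) / (sd_d / math.sqrt(n))


# Alzheimer's study: mean difference 1.65, SD of differences 3.20, n = 20
t_star = paired_t_from_summary(1.65, 3.20, 20)
t_crit = 1.729  # upper-tail t for alpha = .05 with 19 d.f., taken from the notes
print(f"t* = {t_star:.2f}; reject H0: {t_star > t_crit}")
```

With raw scores, `paired_t_from_samples(time1, time2)` forms the differences as Time 2 − Time 1 before computing the statistic; for the Alzheimer's study only the summary statistics are given, so the summary version is used.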