Improving Tests of Abnormal Returns by Bootstrapping the Multivariate Regression... Event Parameters

advertisement

Improving Tests of Abnormal Returns by Bootstrapping the Multivariate Regression Model with

Event Parameters

Scott E. Hein

Texas Tech University

Peter Westfall

Texas Tech University

April 2004

We would like to thank the editors, Rene Garcia and Eric Renault, and two anonymous referees

and an associate editor, as well as Will Ashman, Keldon Bauer, Naomi Boyd, Phil English, Lisa

Kramer, Henry Oppenheimer, Bill Maxwell, Ted Moore, Ramesh Rao, Jon Scott, Shawn

Strothers, Kate Wilkerson, Eric Walden and Zhaohui Zhang for helpful comments. We would

also like to thank Jonathan Stewart and Brad Ewing for providing data used in this analysis.

Corresponding author and requests for reprints: Scott Hein, Jerry S. Rawls College of Business,

Texas Tech University, Lubbock, TX 79409-2101, (806) 742-3433, odhen@ba.ttu.edu.

Improving Tests of Abnormal Returns by Bootstrapping the Multivariate Regression Model with

Event Parameters

Abstract

Parametric dummy variable-based tests for event studies using multivariate regression are not

robust to nonnormality of the residual, even for arbitrarily large sample sizes. Bootstrap

alternatives are described, investigated, and compared for cases where there are nonnormalities,

cross-sectional and time series dependencies. Independent bootstrapping of residual vectors

from the multivariate regression model controls type I error rates in the presence of crosssectional correlation; and, surprisingly, even in the presence of time-series dependence

structures. The proposed methods not only improve upon parametric methods, but also allow

development of new and powerful event study tests for which there is no parametric counterpart.

Key words: Event study, Event parameter estimation, Cross-sectional correlation, Bootstrap,

Significance level, Simulation.

I. Introduction

Researchers have recently extensively applied the multivariate regression model

(MVRM), using a dummy variable representing a significant event date, to test the significance

of many different events on both financial asset prices and interest rates. Binder (1985a, b) and

MacKinlay (1997) provide surveys of event studies in finance and economics. Binder (1985a, b)

argues that a main advantage of employing an MVRM over examining cumulative abnormal

returns (CAR) lies in the fact that joint linear hypothesis tests can be carried out easily under the

former. This has been especially useful in testing whether a regulatory event has a significant

effect on a sample of firms.1 The MVRM approach relies on traditional t-statistics and F-tests to

test statistical significance, especially joint hypotheses, whereas testing joint linear hypotheses is

not typically done under the CAR approach. Rather the focus under the CAR approach has been

on the impact of events on individual firms.

Much research has been devoted to distributional concerns in the CAR analysis, since it

is widely recognized that the excess returns are generally not normally distributed. This

violation typically is generally associated with fat tails, but could also be attributed to other nonnormal characteristics such as skewness. It is understood that such problems will cause

significant statistical inference problems (Brown & Warner, 1980, 1985). Corrado (1989)

suggests a non-parametric rank test for event studies in the face of distributional problems. More

recently Lyon, Barber and Tsai (1999) suggest a bootstrap version of a skewness-adjusted tstatistic to control for the skewness bias in their tests of long-run abnormal returns in a CAR

setting.

There has been less analysis of distributional violations in the dummy variable/event

parameter estimation setting. The fact that tests on event parameter coefficients in the MVRM

can be seriously biased has gone largely unappreciated in the literature. Three exceptions to this

are Chou (2001), Kramer (2001) and Hein, Westfall and Zhang (2001, HWZ hereafter). These

papers find that violations of normality assumptions in the MVRM setting with event parameter

estimation do indeed have a significant influence on the statistical analysis of event significance.

As an example to show the need for bootstrapping, we consider the event tests of Stewart

and Hein (2002), who examined the December 4, 1990 announcement that the Federal Reserve

was eliminating the reserve requirement on non-personal time deposits. There was concern as to

whether the shock continued for some days after the announcement; hence event analysis was

performed for the days after the announcement. Stewart and Hein only show the univariate tests

of the events, but it is also desirable to find a multivariate overall summary to simplify

interpretation. We used the same data to test the hypothesis that the reserve requirement

announcement significantly affected the stocks of the largest banks in the country. Using the

largest five banks (Citicorp, Bank America, Nations, Chemical, and First Chicago), the

significance level of the multivariate normality-assuming test was p=0.039, while the HWZ

bootstrap shows p=0.078 (95% Confidence interval: 0.073-0.083, based on 10,000 samples). A

researcher using traditional MVRM procedures would have concluded that the event was

significant for the largest banks in the country at the 5% level, whereas the bootstrap indicates

that this significance is exaggerated.

Chou, Kramer, and HWZ provide different bootstrapping approaches aimed at rectifying

the bias in the event study tests: Chou and HWZ are similar in that they bootstrap the raw data to

estimate the distribution of the test statistics, hence such methods are called “data-based

bootstrap methods,” hereafter. On the other hand, Kramer bootstraps the test statistics themselves

to estimate their distribution, hereafter called a “test statistic-based bootstrap method”. The

current paper continues these research efforts by: (i) offering further analytical justifications for

these bootstrap methods, (ii) comparing different these different bootstrap methods in cases of

not only non-normality, but also time series and cross-sectional dependence structures, and (iii)

developing alternative, new methods that combine elements of the various proposals. We show

that the two basic types of bootstraps work well in the absence of cross-sectional correlation,

even though the data may exhibit time-series dependencies such as AR, ARCH and GARCH

effects. However, the test-statistic based bootstrap procedure results in grossly inflated type I

error rates in the presence of cross-sectional correlation (see also Bernard, 1987, for other

problems caused by cross-sectional correlation). The point is subtle but important: test statisticbased bootstrap methods are reasonable for event studies with multiple events at independent

time points, but should not be used for clustered event studies. Kramer noted this concern in her

dissertation, (Kramer, 1998); however, this caution was inadvertently left out of the published

article (Kramer, 2001).

Another serious concern with test statistic-based bootstraps is that it cannot be used at all

when the number of firms is small. In some cases, event tests on single firms are desirable.

Studies of dividend initiation or resumption like Boehme and Sorescu (2002) would illustrate

such an event study. While Boehme and Sorescu examine CARs, the data-based bootstrap not

only offers the flexibility to accommodate cross-sectional correlation, but also the ability to test

for events when there are a small number of firms, or just a single firm, as in this case.

On the other hand, the Kramer procedure of summing t-statistics has potentially more

power than the HWZ procedure that uses the less focused multivariate test of Binder (1985b) as

its base. In the final analysis, we recommend a new procedure, where the summed t-statistics are

used in conjunction with the data-based bootstrap. This new method has both good power and

control of type I error rates under the presence of cross-sectional correlation, as well as under

time series dependence structures, and is therefore our recommended procedure.

This paper is organized as follows: in section II we show that the classical parametric test

is inconsistent, with asymptotic type I error levels depending on kurtosis of the excess returns.

In section III motivate and present the data-based and test statistic-based bootstrap tests.

Theoretical results concerning effects of cross-sectional correlation are given in section IV, and

the various methods are compared theoretically and via simulation using a variety of models for

financial data in section V. Conclusions are given in section VI.

II. The multivariate regression model and asymptotic inconsistency

a. The model and event tests

Consider the traditional single market factor model

Rt = β0 + β1Rmt + εt, t = 1, … ,T;

(1)

where Rt is the return on a specific firm or portfolio of stocks, Rmt is the return on the market,

and εt represents the excess return. Additional predictors may be included in (1), these are

excluded to simplify the exposition. In matrix form,

R = Xβ + ε.

(2)

If the event time is t0, define the indicator vector D, having all elements 0's except for time t0,

where the value is 1. The event test is based on the model

R = Xβ + Dγ + ε,

(3)

and the event test is a test of H0: γ = 0, tested using the simple t-test, as reported in any standard

software that performs OLS analysis. The numerator of the t-statistic is the OLS estimate of γ,

which is simply the deleted residual of model (1) for time t0.

The MVRM models may be expressed as the simultaneous equations,

Ri = Xβi + Dγi + εi,

for i= 1,…,g (firms or portfolios).

(4)

Observations within row t of ε = [ε1 | … | εg] are cross-sectionally correlated. Row vectors of

ε are assumed to be independent and identically distributed (i.i.d.) in the classical MVRM model,

and this is also a major assumption of the resampling method. However, we find that i.i.d.assuming bootstrap method is marginally robust to non-i.i.d. data.

The clustered event null hypothesis is the multivariate (composite) test

H0: [γ1 | … | γg] = [0 | … | 0]

(5)

Traditional methodologies for testing (5) with MVRM and SUR models are discussed by Binder

(1985a,b), Schipper and Thompson (1985), and Karafiath (1988). Cross-sectional correlations

are allowed for elements within a given row of the residual matrix; a key feature of MVRM is

that such cross-section correlations are incorporated in the test of (5). The test of (5) is computed

easily and automatically with standard statistical software packages, using exact (under

normality) F-tests.

The hypothesis (5) tested for a given event may be restated in a more general sense

amenable to semi-parametric bootstrap-based testing as follows:

H0: The distribution of the abnormal return (or vector of abnormal returns in case of

MVRM) for the event time t0 is identical to the distribution of the abnormal returns (or

vectors of abnormal returns) for a given set of times.

The researcher determines the definition of the "given set of times". It may include all

times other than the event, all times other than a collection of event times, or a collection of

times from another time horizon. Under the assumption of normally distributed abnormal

returns, Schipper and Thompson (1985) give an exact test of H0. However, as with all tests, the

true type I error level differs from the nominal (usually 0.05) level under non-normality.

b. Asymptotic inconsistency

It is a common conception that type I error rates approach the nominal α-levels (typically

0.05) with larger sample sizes, because of the central limit theorem. However, such is not the

case for dummy variable based event study tests. To see why, consider model (3). The event

test is a test of H0: γ = 0, tested using the simple t-test. The numerator of the t-statistic is the

OLS estimate of γ, which is simply the deleted residual of model (2) for time t0. The argument

for large-sample validity of a regression test, despite nonnormally distributed residuals, requires

large-sample normality of the parameter estimate. Such a statement is made using the central

limit theorem, provided that the parameter estimate can, in some sense, be viewed as an

"average," or at least as a weighted average that is not dominated by one or a few weights. In the

case of event tests under our concern, the estimated event parameter does not become normally

distributed as the sample size (T) increases, since it is just the deleted residual itself, and not an

(weighted) average of residuals, as required by the central limit theorem. The distribution of the

estimated event parameter comes closer to the distribution of the true residual at time t0, but

unless this distribution is truly normal, the distribution of the estimated event parameter will not

converge to normal.

For a more formal view of this problem, consider the development of the asymptotic

normality of the OLS vector presented in Greene (1990, 295-296). The requirement that the

parameter estimate be an appropriate type of weighted average is equivalently formulated by the

requirement that (1/T)(G'G) converges (as T tends to infinity) to a non-singular matrix, where G

= [X | D]. However, in the case of event studies, the convergence requirement fails: Since

(1/T)(D'X) = (1/T)[1 | Rmt0] tends to [0 | 0 ] and (1/T)(D'D) = (1/T)(1) tends to (0) as T tends to

infinity, the matrix (1/T)(G'G) tends to a matrix whose third row and third column (assuming a

single-factor market model as in (1)) are composed entirely of zeros, and is therefore not

invertible. Thus, the conditions needed for the asymptotic normality of the estimates do not hold

for this type of event model test, and convergence of the type I errors to their nominal levels

cannot be assumed. This failure to converge to normality greatly affects the probability of

finding a "significant" event, even for large T, as shown below.

c. Kurtosis and convergence

Suppose that the data consist of known true residuals [εt1,…,εtg], as would be the case

when T is so large that the parameters can be estimated with essentially no error. Suppose also,

without loss of generality, that the variances are identically 1.0. If they are not, simply divide

each εtj by its standard deviation, whose values can be assumed to be known for large T.

Assume also that the variables are i.i.d. Suppose t0=1 is the event day in question. In this case,

g

the parametric test reduces to the statistic S = ∑ ε1i2 , with H0 rejected when S ≥ χ g2,1−α , where

i =1

χ g2,1−α denotes the (1-α) quantile of the chi-squared distribution with g degrees of freedom. This

test is exact (i.e., the type I error probability is exactly α) when ε1i has a normal distribution.

Now, suppose we apply the normality-assuming test when ε1i has a non-normal

distribution with kurtosis κ. Then by the central limit theorem,

g −1/ 2 ( S − g ) /(κ + 2)1/ 2 →d N (0,1) and g −1/ 2 ( χ g2,1−α − g ) / 21/ 2 → Z1−α , where Z1−α = Φ −1 (1 − α )

denotes the 1-α quantile of the standard normal distribution. Then

P ( S ≥ χ g2,1−α ) = P( g −1/ 2 ( S − g ) /(κ + 2)1/ 2 ≥ g −1/ 2 ( χ g2,1−α − g ) /(κ + 2)1/ 2 )

→ P( Z ≥ Z1−α (2 /(κ + 2))1/ 2 ) = 1 − Φ ( Z1−α (2 /(κ + 2))1/ 2 )

For outlier-prone data, as are common in financial markets, κ>0, and hence

Z1-α(2/(κ+2))1/2 < Z1-α, so the true type I error level exceeds α, with greater excess for larger κ, in

our asymptotic framework. Conversely, if the error distribution is less outlier-prone than normal

so that κ<0, the usual parametric test is too conservative.

III. Alternative Bootstrap Solutions

One of the major benefits of using the bootstrap is that the researcher need not specify

any distributions at all. It would be presumptuous to suppose that a single distribution applies

equally across all securities, as minor shocks are amplified to a greater extent for some market

sectors than others, resulting in differential kurtosis across sectors. It would be equally

presumptuous to assume that there is a single distribution that applies across all historic time

regimes.

First, the data-based bootstrap of HWZ and Chou is described, and then the test statisticbased bootstrap of Kramer is described.

a. The Data-based Bootstrap

We wish to estimate quantities such as p = P( S ≥ s | FS 0 ) for event test statistics S used to

test H0, where the probability is calculated under the true null distribution function FS0 of S.

When s is an observed (fixed) value of the random variable S, p is the “true p-value” of the test.

Under the multivariate normal assumption, the distribution FS0 is simply related to the ordinary

ANOVA F distribution and is known exactly as discussed by Schipper and Thompson (1985).

However, when the distributions are non-normal, the distribution FS0 depends on non-normal

characteristics such as kurtosis, and is unknown. We follow the motivation of the bootstrap

using the “plug-in principle” (Efron and Tibshirani, 1998, p. 35), estimating p = P( S ≥ s | FS 0 ) by

pˆ = P( S ≥ s | FˆS 0 ) , for a suitable estimate of FˆS 0 of the null distribution of S.

Using notation of Schipper and Thompson (1985, equation 3), suppose the event test

statistic is given by S = Γˆ ' A '[ A{ X '(Σˆ −1 ⊗ I ) X }−1 A ']−1 AΓ̂ with covariance matrix Σ̂ estimated as

in their equation (4). Then S depends on the data Ri in (4, above) only through the least squares

estimates of the event parameters and the sample residual covariance matrix. Noting that the

sample residual covariance matrix is a function of the sample OLS residuals ei, the sample

covariance matrix is identical, no matter whether it is calculated from the data Ri or the true

residuals εi: ei = (I-G(G’G)-1G’)Ri = (I-G(G’G)-1G’)εi. Similarly, under the null hypothesis, γˆ i

is identical, no matter whether it is calculated from the data Ri or the true residuals εi:

γˆ i =(0 0 1) (G’G)-1G’Ri = γi+(0 0 1) (G’G)-1G’εi =(0 0 1) (G’G)-1G’εi under the null hypothesis.

Thus, under H0: γi=0, i=1,…,g, the test statistic is identical, no matter whether it is computed

using the Ri or the εi: S(R1,…, Rg) = S(ε1,…,εg) under H0, and the null distribution of S is

completely determined by the multivariate distribution of the row vector ε=[ε1:…: εg]. Hence

p = P( S (R 1 ,..., R g ) ≥ s | FS 0 ) = P ( S (ε 1 ,..., ε g ) ≥ s | Fε ). Applying the plug-in principle, we

estimate p = P ( S ≥ s | Fε ) as pˆ = P( S ≥ s | Fˆε ) . 2

The plug-in estimate is conveniently evaluated by using the bootstrap to estimate the

distribution Fε . Since we do not know the true residuals vectors, we estimate Fε using the

empirical distribution of the sample residual vectors (see Freedman and Peters, 1984 for the

univariate regression case; see Rocke, 1989 for the multivariate case). Below is the bootstrap

algorithm as presented in HWZ:

1. Fit model (4). Obtain the usual statistic S for testing H0 using the traditional method

(assuming normality). Obtain also the T x g sample residual matrix e = [e1| … | eg].

2. Exclude the row corresponding to D = 1 from e, leaving the (T-1) x g matrix e-.

3. Sample T row vectors, one at a time and with replacement, from e-. This gives a

T x g matrix [ R1* | … | Rg* ].

4. Fit the MVRM model Ri* = Xβi + Dγi + εi, i = 1, …,g, and obtain the test statistic S*

using the same technique used to obtain the statistic S from the original sample.

5. Repeat 3 and 4 NBOOT times. The bootstrap p-value of the test is the proportion of the

NBOOT samples yielding an S* statistic that is greater than or equal to the original S

statistic from step 1.

In steps 1 and 2, the researcher finds residual vectors that are used to estimate the

(multivariate) distribution of the abnormal returns. The residual for the event day must be

excluded because its residual is identically zero, and residuals of exactly zero are not anticipated

under ordinary circumstances.3 In steps 3 and 4 the researcher creates the bootstrap response

variable. Because the null distribution of the test statistic depends only upon the residuals, as

described above, the bootstrap response variable need not contain the Xbi component.

The bootstrap estimate of p̂ found in step 5 has a Monte Carlo standard error equal to

pˆ (1 − pˆ ) / NBOOT. Thus, choosing a sufficiently large value of NBOOT can control the Monte

Carlo error; we recommend choosing NBOOT as large as computing resources will comfortably

allow to estimate the p-values with sufficient precision. While it is possible to reduce the

number of bootstraps if a simple "reject/accept" decision is all that is required (Davidson and

MacKinnon, 2000), ultimately one should report p-values in scientific reports. So that other

researchers may verify results, it is best that reported statistics, including p-values, not contain

random noise; we therefore recommend reducing such unnecessary noise in published reports by

employing as large a number of resampled data sets, as time constraints allow. With the easy

availability of modern high-speed computing, one can easily avoid unnecessary Monte Carlo

error (see also Andrews and Buchinsky, 1998).

b. The Test Statistic-Based Bootstrap

Kramer (2001) recently proposed a different bootstrap approach for event studies,

g

considering the test statistic Z =

∑

ti/(g1/2st) as a measure of the effect of the event, where ti is

i =1

the t-statistic from the univariate dummy-variable-based regression model for firm i, and st is the

sample standard deviation of the g t-statistics t1,…,tg.. The p-value of the test is obtained by

bootstrapping as follows: (i) create a pseudo-population of t-statistics ti* = ti - t (where t = Σ

ti/g), reflecting the null hypothesis case where the true mean of the t-statistics is zero; (ii) sample

g values with replacement from the pseudo-population and compute Z* from these pseudovalues; (iii) repeat (ii) NBOOT times, obtaining Z1*, …, ZNBOOT*. The p-value for the test is

then 2×min(pU, pL), where pL is the proportion of the NBOOT bootstrap samples yielding Zi* ≤ Z

and pU is the proportion yielding Zi* ≥ Z. In other words, Kramer suggests bootstrapping the test

statistic Z under the assumption that the statistics are independent. Lyon, Barber and Tsai (1999)

also suggest bootstrapping the test statistic, but they do so in a cumulative abnormal return

setting, as opposed to the dummy variable setting.

c. Type I error rates of bootstrap procedures under independence

Since both test-statistic based and data based bootstraps are justified only asymptotically,

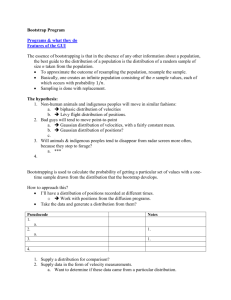

simulation is required to evaluate their finite-sample operating characteristics. Figure 1 shows

the results of a simulation to compare traditional normality-assuming F-tests, the data-based

bootstrap, and the test-statistic based bootstrap. Data were simulated from the market model,

where the distribution of the market return was chosen as normal4, and the abnormal returns were

generated independently from distributions varying from extremely heavy-tailed to normal. To

model heaviness of the tail, we used t-distributions with 1, 2, 4, and 8 degrees of freedom (T1,

T2, T4, and T8)5, to simulate extremely heavy to lighter tails; and we included the normal

distribution for completeness. The bootstrapped p-values are calculated using 999 samples, and

the number of replications of the bootstrap is 10,000 in all cases6. The simulated type I error

rates for nominal α=0.05 level tests are shown in Figure 1.

We note the following from these simulations: First, as the number of firms (portfolios),

g, increases, actual type I error rate for the traditional method becomes larger (see panels (a) and

(d)). In contrast, both the data-based bootstrap and the test-statistic based bootstrap maintain the

type I error rate better for larger g. This finding is important, as there are many examples in the

finance literature where the event is supposed to affect a large number (g) of firms. (See, for

example, Stewart and Hein (2002).) Second, the closer the underlying distribution of the

abnormal returns is to the normal distribution (i.e., the less heavy-tailed it is), the closer the

actual type I error rate is to 0.05 for the traditional method, as expected. Third, even with larger

sample sizes, the true level of the traditional test does not converge to 0.05, (compare (a) with

(d)), confirming the argument that the central limit theorem does not apply in this test setting; on

the other hand, the data-based bootstrap does appear to converge asymptotically (compare (b)

with (e)). Finally, we note that the test-statistic based bootstrap cannot be used at all when g=1,

because it is undefined. When g=2, the algorithm produces bootstrap t-statistics that are

uniformly equal to 0, hence the bootstrap critical values also are zero, meaning that the event

hypothesis is always rejected. Thus, the test-statistic based bootstrap cannot be used for g=1 or

g=2. While unusual, the case g=1 still may be desired to assess event effects on individual firms

such as dividend announcements. On the other hand, the test statistic-based bootstrap controls

type I errors even better with g ≥ 4 than does the data-based bootstrap. It should be noted that

this simulation study considers independent returns only, and it also does not consider power of

the tests, we next consider robustness to dependence structures and power.

IV. The Effect of Cross-Sectional Correlation

While the data-based bootstrap is valid under cross-sectional correlation, as occurs in

clustered studies, the test statistic-based bootstrap is not. A simple structural model will shed

light on the problem. Suppose again that the data consist only of true residuals vectors, again

appealing to the large T case, and that the residuals are dependent with εti = ξt + δij, where (ξt,

δt1,...,δtg) are independent, mean zero, normal random variables with Var(ξt) ≡ σ ξ2 and Var(δti)

≡ σ δ2 . This model is sensible if the g multivariate responses at time t share an “industry effect” ξt,

in which case there will be a commonality to their excess returns. In this model, the excess

returns {εt1,…,εtg} at time t are dependent, with variance Var (ε ti ) = σ ξ2 + σ δ2 , and with common

cross-sectional correlation ρ = Corr (ε ti , ε tj ) = σ ξ2 /(σ ξ2 + σ δ2 ) (ρ is also known as the intraclass

correlation coefficient). Assuming large T, the variances Var (ε ti ) = σ ξ2 + σ δ2 are estimated

precisely, so the t-statistics for testing the null hypothesis that day t=1 is a non-event day are ti ≅

ε1i /(σ ξ2 + σ δ2 )1/ 2 . The sample variance of the t-statistics is

g

σ δ2

1 g

1

2

2

= 1 − ρ for large g. Thus, for large

s =

(δ1i − δ1 ) ≅ 2

∑ (ti − t ) ≅ ( g − 1)(σ 2 + σ 2 ) ∑

g − 1 i =1

σ ξ + σ δ2

i =1

ξ

δ

2

t

g

g and T, Kramer’s test-statistic is approximated by Z ≅ g −1/ 2 ∑ ε1i /{(σ ξ2 + σ δ2 )(1 − ρ )}1/ 2 . Now,

i =1

the “pseudo-population” of centered statistics {ti - t }≅ { (δ1i − δ1 ) /(σ ξ2 + σ δ2 )1/ 2 } approximates a

normal distribution with mean zero and variance (1-ρ) for large g; hence, the Z* statistics

obtained by sampling from this pseudo-population will approximate a normal distribution with

mean zero and variance one (the scale factor (1-ρ) vanishes because of the standardization by st).

This implies that the critical values of the bootstrap procedure are approximately ± Z1-α / 2 , and

that one rejects the null hypothesis that day t=1 is a non-event day approximately when

Z ≥ Z1-α / 2 .

Noting that Var(Z) ≅

g

⎛

⎞ 1+(g-1)ρ

1

(eg, Johnson and

Var ⎜ g -1/2 ∑ ε1i /(σ ξ2 +σ δ2 )1/2 ⎟ =

(1 − ρ )

1− ρ

i =1

⎝

⎠

Wichern, 1998, p. 470), the method has type I error level approximately equal to α when ρ=0

since the variance of the Z score is unity in that case. However, in general the true type I error

level is approximately equal to 2(1 − Φ ( Z1−α / 2 /((1 + ( g − 1) ρ ) /(1 − ρ ))1/ 2 )) . Thus, under the

assumed model with ρ ≠0, the true type I error level of the Kramer test approaches 1.0 as g

increases. In a nutshell, the problem is that natural structural effects ξt resulting in cross-sectional

correlation are incorrectly determined to be effects of an event, which causes type I error rates to

approach 1.0 for large g.

V. Comparison of Methods

In this section we begin by developing new alternative test procedures resulting from

combining aspects of the alternative bootstrap approaches. We then report simulation results that

compare these approaches in a number of different dimensions.

a. Old and new bootstrap methods

Kramer's summed Z statistic is more powerful than the general multivariate test statistic

when the event effects are all in the same direction. However, use of the standard deviation of

the t-statistics for normalization of Z can decrease power in cases where g is small, as random

noise incurred by estimating the standard deviation is included in the test statistic. While use of

such standardization to create pivotal statistics are known to improve the convergence rate of the

type I error in i.i.d. cases (Babu and Singh, 1983), the present case differs somewhat in that (a)

the statistics ti are already standardized and hence at least partially pivotal (free of mean and

scale factors), and (b) we consider that the ti are non-i.i.d. Pesarin (2001, p.148) considers using

the statistic Σti (the Liptak test) in related multivariate contexts, and uses resampling to estimate

its distribution.

To correct for lack of type I error control, we apply the data-based bootstrap algorithm of

section III.a. to the statistic S = Z, and call this the “BK” test. We also consider the same

bootstrapping of the statistic S = Σti test suggested by Pesarin, calling this the "BT" test. It

should be noted that, unlike the HWZ test, there is no parametric equivalent to the BT and BK

tests; bootstrapping is essential. We show below that BT tends to have higher power under

consistent shifts, and that it maintains the type I error rate. In summary, we compare the

following four bootstrap methods:

•

Method HWZ: The data-based bootstrap of the traditional parametric F test (the HWZ

proposal).

•

Method BT: The data-based bootstrap of the Σti statistic (a new proposal).

•

Method BK: The data-based bootstrap of Kramer's Z statistic (a new proposal).

•

Method K: The test statistic-based bootstrap of Kramer’s Z statistic (Kramer’s proposal).

b. Simulation study: Cross-sectional correlation effects

The simulation study summarized by Figure 1 assumes zero cross-sectional correlation and

does not consider power, only level. Our purpose now is to compare the various bootstrap

methods in terms of level and power, for non-independent data. Since the methods are supposed

to work under a variety of distributions, they should work in particular for the normal

distribution, so we initially study normal models, using a variety of different correlation

structures. We consider a normal MVRM with T=100 and equicorrelated cross-sectional errors

(as would be implied, for example, by the structural model of section IV) with no event effects.

We let g = 5 and g = 30 in two separate cases. Further, we allow the cross-sectional correlation

parameter to vary as ρ=0.0, 0.1, …,0.9. Table I displays simulated type I error rates using b=999

bootstrap samples and NSIM=1000 simulations. All entries should be close to the nominal

α=0.05 level.

[ Insert Table I Here ]

It is clear from Table I that the Kramer method does not control the type I error rate when

there is cross-sectional correlation. For g=5, with ρ>0.2, the rejection rate is consistently over

twice the nominal α = 0.05 level, and the problem is worse with larger g as shown in Panel B.

The values in Panel B for the Kramer method closely agree with the theoretical

values 2(1 − Φ ( Z1−α / 2 /((1 + ( g − 1) ρ ) /(1 − ρ ))1/ 2 )) developed in section IV.

We also note that the bootstrapped HWZ method is too conservative for g = 30. The reason

for this is that g is large (30) relative to T (100). In such cases, the covariance matrix of the

bootstrapped residual vectors tends toward singularity because of the repeated vectors in the

bootstrapped samples. This creates larger-than-expected bootstrapped F statistics, which in turn

creates larger-than-expected bootstrap p-values. This problem disappears when g is small

relative to T; we found in unreported simulations that the type I error level of HWZ is

approximately correct when g=10 and T=100, (estimated value of 0.053), and only slightly

conservative when g=15 when T=100 (estimated value of 0.041). Thus, we cannot recommend

the HWZ method where the number of firms or portfolios (g) examined is large relative to the

number of time points (T) because the researcher will too frequently fail to reject the null.

On the other hand, the BT and BK methods perform well in terms of preserving type I error,

showing again that bootstrapping of the residuals is superior to bootstrapping the test statistic in

the face of cross sectional correlation in returns. There is a slight tendency toward excess type I

errors in the independence case for BT and BK. Westfall and Young (1993, p. 127) document a

similar phenomenon in a related application, and note that such effects diminish with increasing

T.

c. Simulation study: Power

To compare the various bootstrap methods in terms of power, we assume that the first

observation is the event. We model this with a common mean shift γ, ranging from 0.0 to 4.0 for

all g variables, chosen to reflect a range of power values between 0 and 1. Since the variances

are unity in this study, the mean shifts are equivalently 0 to 4 standard deviations. Again, we

consider g = 5 and g = 30, with T = 100 in both cases. To simplify matters, and to make a fair

comparison with the Kramer method, we assume zero cross sectional correlation. Table II

shows simulated power in this case.

[Insert Table II Here]

Based on the simulations above, we can recommend the data-based bootstrapped,

modified Kramer test, BT, because the BT procedure (i) maintains the type I error level and (ii)

has higher power. As noted above, the HWZ approach suffers in power when g is large relative

to T, and it can be too conservative when g is large relative to T. We should also note in fairness

that the HWZ approach could detect cases where the event effects go in opposite directions (for

example if γ increased for some firms and decreased for others), whereas the BT, BK, and K

methods are virtually powerless in such instances. The HWZ approach would be more powerful

in cases such as examining dividend announcements -- initiations and omissions -simultaneously, say. Such cases may be somewhat rare, but HWZ has greater power in such

cases.

d. The effect of time-series dependence: Analytic and simulation results

Excess returns can be serially correlated, as well as cross-correlated. Interestingly, the

data-based bootstrap we have described is robust to autocorrelation despite its use of independent

sampling. The reason for this is precisely the same reason that it is non-robust to non-normality.

Namely, the test is based on a single random observation rather than a sum. If the single

suspected residual is large relative to the estimated distribution, then the event is called

significant. All that is required is that the marginal distribution be estimated consistently in order

for marginal type I error control to be maintained asymptotically. It is well known that OLS

estimates and error variances are consistent under AR disturbances (Greene, 1993, p. 422),

suggesting that the normality-assuming dummy variable tests should become essentially a

function of the true residuals and their variances for large T. Further, the empirical distribution

of the residuals converges almost surely to the true distribution under stationarity conditions

given by Yu (1993) and Zhengyan and Chuanrong (1996, Chapter 12), implying that the

bootstrap test procedures should maintain type I error rates in large samples under these

conditions as well.

Specifically, consider again the case where the test is based on known residuals and

known variances (taken to be 1.0 without loss of generality) and consider the BT method in the

case with g=1. In this case, the test statistic is simply ε1, assuming again that t=1 denotes the

suspected event day, and the critical values for the bootstrap test procedures are the α/2 and 1-

α/2 quantiles of the distribution of ε*, where ε* is sampled with replacement from the pseudopopulation {ε2,…,εT}. In this case, the true bootstrap critical values are evaluated without

resorting to Monte Carlo sampling as F̂ε ,α / 2 and F̂ε ,1−α / 2 , respectively the (suitably interpolated)

α/2 and 1-α/2 empirical quantiles of the data {ε2,…,εT}. The empirical distribution of data from

stationary processes converges to the marginal distribution under conditions given by Yu (1993)

and Zhengyan and Chuanrong (1996, Chapter 12), in which case P(reject H0 | H0 true) = P(ε1

≤ F̂ε ,α / 2 or ε1 ≥ F̂ε ,1−α / 2 | H0 true) → P(ε1 ≤ Fε ,α / 2 or ε1 ≥ Fε ,1−α / 2 | H0 true) = α, for continuous

Fε. This result may seem surprising because it is generally thought that independence-based

bootstrapping fails in the case of non-i.i.d. data (Politis, 2003); however, the difference in the

present case is that we are bootstrapping the distribution of a single observation at a single point

in time, not of an average or some other combined statistic over the entire history.

We note that this asymptotic control of type I error rates is only in the marginal sense;

that is, the control is not conditional upon the recent history but rather unconditional, averaging

over all histories. Conditioning upon the recent histories, the independence-assuming bootstrap

test will have type I error rates that are sometimes greater, sometimes less, than the nominal

level. On average, over all histories, the type I error level is controlled. Marginal control of type

I error rates is a good property, and one that any procedure should possess. In particular, it is

instructive to compare marginal type I error rates of different procedures; those that do not

control marginal type I error rates are clearly inferior. On the other hand, the practical

implementation of a procedure that controls the type I error only marginally requires that recent

history be ignored. This is clearly an undesirable feature; therefore, despite the robustness of the

independence-assuming bootstrap to time series dependencies, it can only be recommended

when the data series is “reasonably close” to i.i.d. (although arbitrary cross-sectional correlation

is allowable in any case, even if there are perfect cross-sectional dependencies in the case of BT

and BK). Further research is needed to determine how significant the time series dependence

must be for the conditionality issue to become problematic for the i.i.d. bootstrap.

Table III shows results of simulations to estimate marginal type I error rates when T=100;

g=5 as a function of the serial autocorrelation parameter φ when there is no cross-sectional

correlation.

[Insert Table III Here]

Table III shows that all bootstrap tests control the type I error rates reasonably well, even

under substantial autocorrelation. However, the Kramer method remains not valid when there is

cross-sectional correlation (simulation results for cross-sectional correlation and time-series

dependence are not shown but available from the authors). We conclude that autocorrelation

itself is not a serious problem for marginal type I error rates of bootstrap-based dummy-variable

event tests, despite the fact that the model assumes i.i.d. residuals.

e. Simulation from observed populations

So far, the simulations have been somewhat contrived, and the connection between the

simulation models and real return data are not well established. It is well known that observed

excess returns frequently exhibit cross-sectional correlations, non-normalities, autoregressive

effects, and conditional heteroscedasticity. Rather than develop a simulation model that

incorporates all of these characteristics, we instead utilize existing financial data for our

simulation model. We used a data set consisting of daily returns on the S&P 500 as well as the

five insurance sub-indexes created by Standard and Poors, from January 3, 1990 to November 6,

2001 (3086 consecutive trading days). The excess returns are fairly highly correlated cross-

sectionally, with (min, ave, max) = (.41, .56, .79). There are also significant ARCH(1),

GARCH(1), and AR(1) effects in the univariate models.

In our simulation model, we randomly sampled the 6-dimensional vectors in 10 blocks of

20 consecutive time points to create simulated samples of length T=200 that preserve time-series

dependencies, cross-sectional dependencies, and non-normalities of the original data. The

starting point for the 20 consecutive time points was chosen at random from the possible starting

points 1,…,(3086-20+1), thus the selected series possibly overlap, and data occurring earlier in

the original “population” can occur later in the simulated sample. Künsch (1989), pioneered this

type of block resampling for time series data, and Hall et al. (1995) provide recommendations for

block sizes. Samples from the population exhibited similar time-series (autoregressive and

conditional heteroscedastic) characteristics, cross-sectional dependencies, and distributional

characteristics as found in the population, albeit with generally less statistical significance due to

the smaller sample size, and with somewhat smaller time series effect sizes due to local

independence induced by block resampling.

The event day was chosen at random from the simulated series of 200 observations. We

would expect that the various procedures should control the type I error rates in this analysis,

since there is nothing special about the chosen day. Results are shown in Table IV, panel A,

comparing the methods HWZ, BT, BK, and K described above, in addition to the classical

parametric F test with no bootstrapping (called BINDER in the Table). The simulation from

observed financial data confirms the essential results from the previous simulations. Namely, the

simulation confirms that: (1) the normality-assuming test fails to control the type I error rate, as

the Binder statistic too frequently rejects the null hypothesis, (2) the Kramer test statistic-based

method fails to control the type I error rate in the presence of cross-sectional correlation, and (3)

the data-bootstrap methods do control the type I error rate. Again, as in the previous section, we

reiterate that these are unconditional type I error levels, and that, while we would rather control

conditional type I error levels, unconditional control remains desirable as a basis for comparison

of the various procedures.

For all simulations, there were 10,000 samples of size T=200 as described above, and for

all bootstrap methods, B=999 simulations were used.

[ Insert Table IV Here ]

To evaluate power, we added common effects γ=.01,…,.05 to the excess returns for all

five indexes, allowing for a range of power from 0 to 1. The results are shown in Table IV,

panel B. Among the bootstrap tests that control the type I error levels, the BT test is preferred

for γ>.01, presumably because the normalization in the BK procedure adds excess variability.

On the other hand, the common shift alternative is meant to favor both BT and BK over HWZ; if

the alternative were that the variance was increased and not the mean, resulting in large positive,

as well as negative, excess returns, then the HWZ test would be preferred. Since the type I errors

of the Binder and Kramer tests are uncontrolled, it is inappropriate to compare their power with

the other procedures without a size adjustment. However, since these tests are anti-conservative,

a size adjustment must make their power smaller, and we can therefore conclude that the sizeadjusted Kramer test must be less powerful than the BT test for γ>.03, and that the size-adjusted

binder test must be less powerful than the BT test for γ>0.

VI. Conclusion

In this paper, we examine the popular test statistics used for event studies in MVRM

models. We show that when excess returns come from non-normal distributions, these traditional

test statistics are misspecified. The traditionally calculated p-values are biased downward

dramatically when the number of firms (or portfolios) is large and the residual distribution is

heavy-tailed, causing the researcher to conclude an event is significant to financial markets too

frequently. Importantly, this bias does not diminish as the sample size increases. Bootstrap

methods correctly and automatically adapt to non-normal characteristics of the data, reducing

this misspecification significantly and providing more accurate p-values.

Further analysis shows that Kramer's (2001) bootstrap method is preferred in terms of the

form of summed-t test statistic, which has good power, but is not preferred when there is crosssectional correlation, in which case the test has grossly inflated type I error probability. We

recommend a modification of the Kramer method using data-based bootstrapping, which

provides good power and maintains the type I error control. We also note that this type of

bootstrap is marginally robust in the face of time series dependence, despite its resampling of

independent residual vectors.

It is standard practice to use the t-distribution rather than the z-distribution for analysis of

mean values when the variance is unknown, simply because the procedures based on the tdistribution are generally more accurate. We argue for general use of the bootstrap rather than

the traditional method of MVRM tests of key events, for precisely the same reasons. Since the

bootstrap method performs better over a range of possible cases, as we have shown in numerous

simulations, and since its performance is not noticeably inferior in the case of normal

distributions, we feel it is generally much more prudent to use the bootstrap p-values than to use

the traditional p-values, regardless of the form of the underlying distribution. At the very least,

traditional analysis of event studies should be supplemented with a bootstrap analysis, so

investigators can evaluate the robustness of their inferences.

Limitations of the research are the restriction to MVRM models with a common event

time, and the lack of control of conditional error rates under non-i.i.d. time series data. Since the

BT method works well under cross-correlated data, extensions to separate, independent event

times, as considered by Kramer, should also work well, but we leave this for future study.

Further research is also needed to evaluate the conditionality issues described in section V.

Software to perform these analyses is available freely from the authors.

Footnotes

1

Smirlock and Kaufold (1987), Karafiath and Glascock (1989), Cornett and Tehranian

(1989 and 1990), De Jong and Thompson (1990), Eyssell and Arshadi (1990), Demirguc-Kunt

and Huizinga (1993), Madura et al. (1993), Unal, Demirguc-Kunt and Leung (1993), Clark and

Perfect (1996), Cornett, Davidson, and Rangan (1996), Johnson and Sarkar (1996), Bin and Chen

(1998), Cosimano and McDonald (1998), Sinkey and Carter (1999), and Stewart and Hein

(2002) are examples of empirical studies that use such an approach in examining the significance

of a wide variety of events.

2

All calculations are conditional on the observed matrix G; see Westfall and Young, 1993,

p. 123, for further discussion; see also the description of the SAS/STAT software PROC

MULTTEST, SAS Institute (1999), which uses an identical method to estimate p-values from

tests in multivariate linear models.

3

A technical distinction between the bootstrap approach suggested by Chou and HWZ is

that Chou fits null restricted models and does not suggest removing the residual from the

bootstrap sample. In our formulation, fitting null restricted models is not needed, and the

residuals that are identically zero should be removed. In cases where multiple event parameters

are modeled, one should also exclude any other sample residuals that are forced to be zero; the

program that is freely available from the authors allows this.

4

The distribution of the test statistic depends mainly on the distribution of the residuals; the

distribution chosen for the market return has relatively little effect.

5

Student t distributions are used for fitting financial models with heavy tails; see e.g. the

SAS/ETS procedure PROC AUTOREG.

6

While a large bootstrap sample size is recommended for reporting significance from an analysis

of a data set to ensure replicability, it is not as essential that the bootstrap sample size be so large

for simulation studies, in which case the major contributor to variability is the outer loop; see

Westfall and Young (1993, pp. 38-41).

Table I. Simulated type I error rates as a function of cross-sectional correlation.

Panel A: g = 5

ρ=0 ρ=0.1 ρ=0.2 ρ=0.3 ρ=0.4 ρ=0.5 ρ=0.6 ρ=0.7 ρ=0.8 ρ=0.9

HWZ 0.059 0.059 0.059 0.059 0.059 0.059 0.059 0.059 0.059 0.059

BT 0.055 0.057 0.055 0.057 0.056 0.058 0.056 0.057 0.060 0.056

BK 0.053 0.056 0.049 0.053 0.050 0.042 0.041 0.045 0.050 0.049

K

0.057 0.078 0.113 0.168 0.220 0.275 0.335 0.418 0.500 0.624

Panel B: g = 30

ρ=0 ρ=0.1 ρ=0.2 ρ=0.3 ρ=0.4 ρ=0.5 ρ=0.6 ρ=0.7 ρ=0.8 ρ=0.9

HWZ 0.002 0.002 0.002 0.002 0.002 0.002 0.002 0.002 0.002 0.002

BT 0.070 0.057 0.056 0.059 0.062 0.064 0.051 0.058 0.056 0.049

BK 0.073 0.057 0.051 0.055 0.056 0.053 0.055 0.058 0.053 0.050

K

0.056 0.366 0.516 0.590 0.652 0.702 0.718 0.813 0.851 0.885

Table II. Simulated power as a function of event effect γ.

Panel A: g = 5

γ=0 γ=0.2 γ=0.5 γ=0.75 γ=1.0 γ=1.5 γ=2.0 γ=3.0 γ=4.0

HWZ 0.05 0.04 0.09 0.19 0.28 0.74 0.95

1

1

BT 0.02 0.09 0.2 0.42 0.6 0.92 0.99

1

1

BK 0.05 0.07 0.17 0.34 0.58 0.78 0.92 0.97

1

K

0.06 0.08 0.16 0.27 0.42 0.65 0.75 0.88 0.93

Panel B: g = 30

γ=0 γ=0.1 γ=0.2 γ=0.3 γ=0.4 γ=0.5 γ=0.6 γ=0.7 γ=0.8 γ=0.9 γ=1.0

HWZ 0.002 0.002 0.003 0.007 0.011 0.013 0.042 0.072 0.130 0.1970.291

BT 0.070 0.092 0.206 0.363 0.573 0.751 0.871 0.949 0.988 0.9991.000

BK 0.073 0.090 0.193 0.335 0.539 0.697 0.844 0.930 0.975 0.9921.000

K

0.056 0.087 0.185 0.345 0.535 0.703 0.853 0.933 0.977 0.9960.999

Table III. Simulated type I error rates as a function of serial correlation φ.

φ=0 φ=0.1 φ=0.2 φ=0.3 φ=0.4 φ=0.5 φ=0.6 φ=0.7 φ=0.8 φ=0.9

HWZ 0.052 0.054 0.056 0.057 0.057 0.059 0.072 0.085 0.113 0.176

BT 0.062 0.062 0.067 0.066 0.068 0.07 0.067 0.071 0.079 0.093

BK 0.062 0.06 0.064 0.065 0.067 0.064 0.062 0.067 0.067 0.061

K

0.053 0.052 0.047 0.048 0.045 0.048 0.046 0.046 0.052 0.045

Table IV. Simulated type I error rates and power for the various procedures under block

sampling from a financial data series, where the samples exhibit ARCH, GARCH,

autoregressive, and cross-sectional correlation effects.

Panel A: Type I error rates

Binder

HWZ

BT

BK

K

Nominal type I error rate

α=0.01 α=0.05 α=0.10

0.058

0.105

0.137

0.014

0.055

0.104

0.015

0.054

0.103

0.014

0.053

0.105

0.097

0.275

0.358

Panel B: Power for the nominal α=0.05 tests.

Binder

HWZ

BT

BK

K

γ=0.01 γ=0.02 γ=0.03 γ=0.04 γ=0.05

0.139 0.346 0.712 0.935 0.987

0.071 0.183 0.465 0.784 0.933

0.148 0.543 0.858 0.970 0.993

0.193 0.463 0.674 0.820 0.885

0.542 0.776 0.880 0.925 0.946

0.2

0.15

T1

Type I error

T2

T4

0.1

T8

T2

T4

0.1

T8

Z

Z

0.05

2

4

8

1

Number of Firms (or Portfolios)

a)

2

4

1

0.2

Type I error

Type I error

T8

T2

T4

0.1

T8

T2

T4

0.1

T8

Z

0.05

0.05

0

0

4

T1

Z

0.05

2

8

0.15

T1

Z

1

4

0.2

0.15

T1

T4

2

(c)

0.2

0.1

Z

Number of Firms (or Portfolios)

Number of Firms (or Portfolios)

T2

T4

T8

8

(b)

0.15

T2

0.1

0

0

1

T1

0.05

0.05

0

0

1

8

2

4

1

8

(e)

2

4

8

Number of Firms (or Portfolios)

Number of Firms (or Portfolios)

Number of Firms (or Portfolios)

(d)

0.15

T1

Type I error

Type I error

0.15

0.2

Type I error

0.2

(f)

Figure 1: True type I error rates for nominal α=0.05 event tests with normal and nonnormal distributions. (a) traditional F test,

(b) data bootstrap F test (HWZ), (c) test statistic bootstrap (Kramer), all for T=200; (d), (e), (f) repeat (a), (b), (c) when T=50. True

type I error rates are 0% when g=1 for test statistic bootstrap, 100% when g=2.

Figure 1: True type I error rates for nominal α=0.05 event tests with normal and nonnormal distributions. (a) traditional F test,

(b) data bootstrap F test (HWZ), (c) test statistic bootstrap (Kramer), all for T=200; (d), (e), (f) repeat (a), (b), (c) when T=50.

True type I error rates are 0% when g=1 for test statistic bootstrap, 100% when g=2.

References

Andrews, D.W.K. and M. Buchinsky. (1998). “On the number of bootstrap repetitions for

bootstrap standard errors, confidence intervals, confidence regions, and tests.” Cowles

Foundation Discussion Paper No. 1141R, Yale University, revised.

Babu, G.J. and K. Singh. (1983). “Inference on means using the bootstrap.” Annals of Statistics

11, 999-1003.

Bernard, V. L.. (1987). “Cross-sectional dependence and problems in inference in market-based

accounting research.” Journal of Accounting Research 25 (1), 1-48.

Bin, F. and D. Chen. (1998). “Casino legislation debates and gaming stock returns: a United

States empirical study.” International Journal of Management 15 (4), 397-406.

Binder, J. J. (1985a). “Measuring the effects of regulation with stock price data.” Rand Journal of

Economics 16, 167-183.

Binder, J. J. (1985b) “On the use of multivariate regression models in event studies.” Journal of

Accounting Research 23, 370-383.

Binder, J. J. (1998). “The Event Study Methodology Since 1969.” Review of Quantitative

Finance and Accounting 11, 111-137.

Boehme, R.D. and S.M. Sorescu. (2002). “The Long-run performance following divided

Imitations and resumptions: under reaction or product of chance?” Journal of Finance 57,

871-900.

Chou, P.H. (2001). "Bootstrap Tests For Multivariate Event Studies", Advances in Investment

Analysis and Portfolio Management.

Clark, J.A. and S.B. Perfect. (1996). “The economic effects of client losses on OTC bank

derivative dealers: Evidence from the capital market.” Journal of Money, Credit, and

Banking 28, 527-545.

Cornett, M.M., W.N. Davidson, and N. Rangan. (1996). “Deregulation in investment banking:

Industry concentration following Rule 415.” Journal of Banking and Finance 20, 85-113.

Cornett, M.M. and H. Tehranian. (1989). “Stock market reaction to the depository institutions

deregulation and monetary control act of 1980.” Journal of Banking and Finance 13, 81100.

Cornett, M.M. and H. Tehranian. (1990). “An examination of the impact of the Garn-St.

Germain depository institutions act of 1982 on commercial banks and savings and loans.”

Journal of Finance 45, 95-111.

Cosimano, T.F. and B. McDonald. (1998). “What's different among banks?” Journal of

Monetary Economics 41, 57-70.

Davidson, R. and J.G. MacKinnon. (2000). “Bootstrap tests: How many bootstraps?”

Econometrics Reviews 19, 55-68.

De Jong, P. and R. Thompson. (1990). “Testing linear hypothesis in the SUR framework with

identical explanatory variables.” Research in Finance 8, 59-76.

Demirguc-Kunt, A. and H. Huizinga. (1993). “Official credits to developing countries: implicit

transfers to the banks.” Journal of Money, Credit, and Banking 25, 76-89.

Efron, B. and R.Tibshirani. (1998). “An Introduction to the Bootstrap.” CRC Press LLC.

Eyssell, T.H. and N. Arshadi. (1990). “The wealth effects of risk-based capital requirements in

banking.” Journal of Banking and Finance 14, 179-197.

Fama, Eugene. (1998). “Market Efficiency, long-term returns, and behavioral finance.” Journal

of Financial Economics 49, 283-306.

Freedman, D.A., and S.C. Peters. (1984). “Bootstrapping an Econometric Model: Some

Empirical Results.” Journal of Business and Economic Statistics 2, 150-158.

Greene, W.H. (1993). Econometric Analysis, Second Edition. Prentice Hall: New Jersey.

Hall, P., Horowitz, J.L., and B.Y. Jing. (1995). “On blocking rules for the bootstrap with

dependent data.” Biometrika 82, 561-574.

Hein, S.E., P. Westfall, and Z. Zhang. (2001). “Improvements on Event Study Tests:

Bootstrapping the Multivariate Regression Model.” Texas Tech University, Working

Paper.

Horowitz, J. L. (2001). “The bootstrap and hypothesis tests in econometrics.” Journal of

Econometrics 100, 37-40.

Johnson, R.A. and D.W. Wichern. (1998). Applied Multivariate Statistical Analysis, 4th ed,

Prentice-Hall.

Johnson, S.A. and S.K. Sarkar. (1996). “The valuation effects of the 1977 community

reinvestment act and its enforcement.” Journal of Banking and Finance 20, 783-803.

Karafiath, I. (1988) “Using dummy variables in the event study methodology.” Financial Review

23, 351-357.

Karafiath, I. and J. Glascock. (1989). “Intra-industry effects of a regulatory shift: Capital market

evidence from Penn Square.” Financial Review 24, 123-134.

Kramer, L.A. (1998). "Banking on Event Studies: Statistical Problems, a New Bootstrap Solution

and an Application to Failed Bank Acquisitions." Ph.D. Dissertation, University of

British Columbia.

Kramer, L.A (2001). “Alternative Methods for Robust Analysis in Event Study Applications.”

Advances in Investment Analysis and Portfolio Management 8, 109-132.

Künsch, H.R. (1989). “The jackknife and bootstrap for general stationary observations.” Annals

of Statistics 17, 1217-1241.

Lyon, J.D., B.M. Barber, and C.L. Tsai. (1999). “Improved Methods for Tests of Long-Run

Abnormal Stock Returns.” Journal of Finance 54, 165-201.

Madura, J., A.J. Tucker, and E. Zarrick. (1992). “Reaction of bank share prices to the ThirdWorld debt reduction plan.” Journal of Banking and Finance 16, 853-868.

MacKinlay, A.C. (1997). “Event Studies in Economics and Finance.” Journal of Economic

Literature 35, 13-39.

Mitchell, M. and E. Stafford. (2000). “Managerial decisions and long-term stock price

performance.” Journal of Business 73, 287-329.

MacKinnon, J.G. (1999). “Bootstrap Testing in Econometrics.” Presented at the May 29. 1999

CEA Annual Meeting.

Pesarin, F. (2001). Multivariate Permutation Tests. Wiley, New York.

Politis, D.N. (2003). “The impact of bootstrap methods on time series analyis.” Statistical

Science 18, 219-230.

Rau, P.R. and T. Vermaelen. (1998). “Glamour, value, and the post-acquisition performance of

acquiring firms.” Journal of Financial Economics 49, 223-253.

Rocke, D. M. (1989). “Bootstrap Bartlett adjustment in seemingly unrelated regression.” Journal

of the American Statistical Association 84, 598-601.

SAS Institute, Inc. (1990). SAS Guide to Macro Processing, Version 6, Second Edition. SAS

Institute Inc., Cary, NC.

SAS Institute, Inc. (1999). SAS/STAT User's Guide, Version 8. SAS Institute Inc., Cary, NC.

Schipper, K. and R. Thompson. (1985). “The impact of merger-related regulations using exact

distributions of test statistics.” Journal of Accounting Research 23, 408-415.

Sefcik, S. E. and R. Thompson. (1986). “An approach to statistical inference in cross-sectional

models with security abnormal returns as the dependent variable.” Journal of Accounting

Research 24, 316-334.

Sinkey, J.F. and D.A. Carter. (1999). “The reaction of bank stock prices to news of derivatives

losses by corporate clients.” Journal of Banking and Finance 23, 1725-1743.

Smirlock, M. and H. Kaufold. (1987). “Bank foreign lending, mandatory disclosure rules, and

the reaction of bank stock prices to the Mexican debt crisis.” Journal of Business 60, 347364.

Stewart, J.D. and S.E. Hein. (2002). “An investigation of the Impact of the 1990 reserve

requirement change on financial asset prices.” Journal of Financial Research 25, 367-382.

Unal, H., A. Demirguc-Kunt and K. Leung. (1993). “The Brady Plan, 1989 Mexican DebtReduction Agreement, and Bank Stock Returns in United States and Japan.” Journal of

Money, Credit, and Banking 25, 410-429.

Westfall, P.H. and Young, S.S. (1993). Resampling-Based Multiple Testing: Examples and

Methods for P-Value Adjustment. Wiley: New York.

Yu, H. (1993). “A Glivenko-Cantelli lemma and weak convergence for empirical processes of

associated sequences.” Probability Theory and Related Fields 95, 357-370.

Zellner, A. (1962). “An efficient method of estimating seemingly unrelated regressions and tests

for aggregation bias.” Journal of the American Statistical Association 57, 348-368.

Zhengyan, L. and L. Chuanrong. (1996). Limit Theory for Mixing Dependent Random

Variables. Science Press, New York.