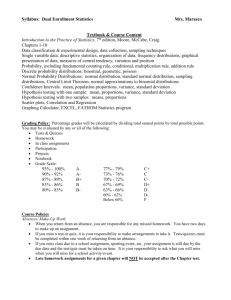

Keeping the Symbols Straight: What are all those Sigmas and s’s?


We use many kinds of variances, standard deviations, and standard errors in statistics, and often the only way to distinguish between them is by their superscripts or subscripts. Here are some helpful hints for reading and interpreting these symbols.

Let’s start by reviewing the three kinds of distributions we work with and the general system used to write the symbols associated with each.

Population distributions are real distributions of real variables, but their parameters (values) are usually unknown to the researcher, because we cannot study the entire population. Greek symbols such as $\sigma$ and $\sigma^2$ are used to represent these real but unknown values. We test hypotheses and make estimates about populations.

Sample distributions are real and contain the measurements collected from the cases in the sample. The sample statistics (sample values) are known to the researcher and are usually represented by Roman letters such as $s$ and $s^2$.

Sampling distributions are theoretical distributions of the results of some calculation performed on all possible samples of the same size taken from the same population. We have dealt with sampling distributions of a mean, a proportion, a difference between two means, and a difference between two proportions. The properties of sampling distributions are theoretical, but they may be known to the researcher through the Central Limit Theorem. The theoretical standard errors of sampling distributions may be represented with Greek symbols (e.g. $\sigma_{\bar{X}}$ or $\sigma_{\bar{X}_1-\bar{X}_2}$), but in practice we usually have to estimate the standard error from the sample data. In that case, we use Roman letters to represent the estimated standard error (e.g. $s_{\bar{X}}$ or $s_{\bar{X}_1-\bar{X}_2}$).

No matter what kind of distribution we are working with, the definitions of standard deviation, variance, and standard error are the same. A standard deviation is a measure of the variability of a distribution.
It is based on the sum of the squared deviations of the values from the mean (for a sample, $s$ is the square root of that sum divided by $N-1$). A variance is simply the square of a standard deviation. If we want to describe the variability of a distribution, it is easier to use the standard deviation, since it is in the same units of measurement as the variable being measured. The variance is used more as an intermediate step in calculating something else, such as the standard error of a difference. (In some cases we may want to test hypotheses about variances, but we have not dealt with that topic yet.)

A standard error is the standard deviation of a sampling distribution. It tells us how narrowly or widely the possible sample values are distributed around the population parameter: for example, what proportion of all possible sample means will fall within a given distance of the true population mean. It is used to estimate sampling error. In contrast to the standard deviation and variance of population and sample distributions (which are real), the standard error is theoretical and is based on probability theory and assumptions.
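To make the difference between a standard deviation and a standard error concrete, here is a small Python simulation sketch. It assumes a hypothetical normal population (the values of `MU`, `SIGMA`, `N`, `NUM_SAMPLES` and the seed are made up for illustration) and uses 10,000 random samples as a stand-in for "all possible samples". It computes $s$, $s^2$ and $s_{\bar{X}}$ for one sample, then approximates the sampling distribution of the mean; the standard deviation of the simulated means should come out close to the theoretical $\sigma_{\bar{X}} = \sigma/\sqrt{N}$.

```python
import math
import random
import statistics

# Hypothetical population and sampling settings (assumptions for illustration only).
MU = 50.0             # population mean
SIGMA = 10.0          # population standard deviation (sigma)
N = 25                # size of each sample
NUM_SAMPLES = 10_000  # many random samples, approximating "all possible samples"


def draw_sample(rng, n):
    """Draw one sample of size n from a normal population with mean MU and sd SIGMA."""
    return [rng.gauss(MU, SIGMA) for _ in range(n)]


def simulate_sampling_distribution(seed=1):
    rng = random.Random(seed)

    # Sample distribution: the actual measurements in one sample.
    sample = draw_sample(rng, N)
    s = statistics.stdev(sample)      # sample standard deviation (N - 1 divisor)
    s2 = statistics.variance(sample)  # sample variance, equal to s squared
    s_xbar = s / math.sqrt(N)         # estimated standard error of the mean

    # Sampling distribution of the mean, approximated by repeated sampling.
    means = [statistics.fmean(draw_sample(rng, N)) for _ in range(NUM_SAMPLES)]

    return {
        "s": s,
        "s2": s2,
        "s_xbar": s_xbar,
        "sigma_xbar_theoretical": SIGMA / math.sqrt(N),
        "sd_of_simulated_means": statistics.pstdev(means),
    }


if __name__ == "__main__":
    for name, value in simulate_sampling_distribution().items():
        print(f"{name:>24}: {value:.3f}")
```

With these settings, `s` should land somewhere near 10 while `sd_of_simulated_means` should be close to 2.0 (that is, $10/\sqrt{25}$): the standard error describes how much sample means vary from sample to sample, not how much individual values vary.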
With the above in mind, here is a list of the symbols we have used to date, along with their definitions:

- $\sigma$ = the population standard deviation (real, but unknown)
- $\sigma^2$ = the population variance (real, but unknown)
- $s$ = the sample standard deviation (real, and can be calculated from the sample data): $s = \sqrt{\sum (X-\bar{X})^2/(N-1)}$
- $s^2$ = the sample variance (real, and can be calculated from the sample data)
- $\sigma_{\bar{X}}$ = the theoretical standard error of the mean (the standard deviation of the distribution of all the means calculated from all possible samples; theoretical, but can be estimated)
- $s_{\bar{X}}$ = the estimated standard error of the mean, calculated from the sample data: $s_{\bar{X}} = s/\sqrt{N}$
- $\sigma_p$ = the theoretical standard error of the proportion (the standard deviation of the distribution of all the proportions calculated from all possible samples; theoretical, but can be estimated)
- $s_p$ = the estimated standard error of the proportion, calculated from the sample data: $s_p = \sqrt{p(1-p)/N}$
- $\sigma_{\bar{X}_1-\bar{X}_2}$ = the theoretical standard error of the difference between means (the standard deviation of the distribution of all the differences $\bar{X}_1-\bar{X}_2$ calculated from all possible samples; theoretical, but can be estimated)
- $s_{\bar{X}_1-\bar{X}_2}$ = the estimated standard error of the difference between means, calculated from the sample data in one of two ways:
  - $\sqrt{s_1^2/N_1 + s_2^2/N_2}$ (large samples, or equal $N$’s)
  - $\sqrt{s_p^2\,(1/N_1 + 1/N_2)}$ (small samples and/or very unequal $N$’s), where $s_p^2$ is the pooled variance estimate defined next
- $s_p^2$ = the pooled variance estimate: an estimate of the population variance made by calculating a weighted average of the two sample variances: $s_p^2 = [(N_1-1)s_1^2 + (N_2-1)s_2^2]/(N_1+N_2-2)$. Do not confuse this with the standard error of the proportion, $s_p$! The context should allow you to determine which it is.
- $\sigma_{p_1-p_2}$ = the theoretical standard error of the difference between proportions (the standard deviation of the distribution of all the differences $p_1-p_2$ calculated from all possible samples; theoretical, but can be estimated)
- $s_{p_1-p_2}$ = the estimated standard error of the difference between proportions, calculated from the sample data: $s_{p_1-p_2} = \sqrt{p_c(1-p_c)(1/N_1 + 1/N_2)}$, where $p_c = (N_1p_1 + N_2p_2)/(N_1+N_2)$ is the combined sample proportion
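The estimated standard errors in the list above translate directly into short functions. This is a minimal Python sketch using only the standard library; the function names are hypothetical, and the data in the usage section are made up. `statistics.stdev` and `statistics.variance` divide by $N-1$, matching the formula for $s$, so each sample needs at least two values.

```python
import math
from statistics import stdev, variance


def se_mean(sample):
    """Estimated standard error of the mean: s / sqrt(N)."""
    return stdev(sample) / math.sqrt(len(sample))


def se_proportion(p, n):
    """Estimated standard error of a proportion: sqrt(p(1 - p) / N)."""
    return math.sqrt(p * (1 - p) / n)


def se_diff_means_unpooled(sample1, sample2):
    """sqrt(s1^2/N1 + s2^2/N2): large samples, or equal N's."""
    n1, n2 = len(sample1), len(sample2)
    return math.sqrt(variance(sample1) / n1 + variance(sample2) / n2)


def pooled_variance(sample1, sample2):
    """s_p^2: average of the two sample variances, weighted by N - 1."""
    n1, n2 = len(sample1), len(sample2)
    return ((n1 - 1) * variance(sample1) + (n2 - 1) * variance(sample2)) / (n1 + n2 - 2)


def se_diff_means_pooled(sample1, sample2):
    """sqrt(s_p^2 (1/N1 + 1/N2)): small samples and/or very unequal N's."""
    n1, n2 = len(sample1), len(sample2)
    return math.sqrt(pooled_variance(sample1, sample2) * (1 / n1 + 1 / n2))


def se_diff_proportions(p1, n1, p2, n2):
    """sqrt(pc(1 - pc)(1/N1 + 1/N2)), where pc is the combined sample proportion."""
    pc = (n1 * p1 + n2 * p2) / (n1 + n2)
    return math.sqrt(pc * (1 - pc) * (1 / n1 + 1 / n2))


if __name__ == "__main__":
    # Made-up data, for illustration only.
    group_a = [12, 15, 11, 14, 13]
    group_b = [10, 9, 12, 11, 8, 10]
    print("s_Xbar (group A):", round(se_mean(group_a), 3))
    print("standard error of p = 0.40, N = 200:", round(se_proportion(0.40, 200), 4))
    print("unpooled s_Xbar1-Xbar2:", round(se_diff_means_unpooled(group_a, group_b), 3))
    print("pooled s_Xbar1-Xbar2:", round(se_diff_means_pooled(group_a, group_b), 3))
    print("s_p1-p2:", round(se_diff_proportions(0.40, 200, 0.32, 150), 4))
```

One quick check on the two formulas for $s_{\bar{X}_1-\bar{X}_2}$: when $N_1 = N_2$, the pooled variance is just the average of $s_1^2$ and $s_2^2$, and the two formulas give exactly the same answer. That fits the guidance above that the unpooled formula is adequate when the $N$’s are equal.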