
One Way ANOVA and Multiple Comparisons

Statistics

Analysis of Variance: Comparing More Than 2 Means

Multivariate Studies

Observational Study: the conditions to which subjects are exposed are not controlled by the investigator (no attempt is made to control or influence the variables of interest).

Experimental Study: the conditions to which subjects are exposed are controlled by the investigator (treatments are applied in order to observe the response).

Experiment

1. Investigator controls one or more independent variables
   - Called treatment variables or factors
   - Contain two or more levels (subcategories)
2. Observes effect on dependent variable
   - Response to levels of independent variable
3. Experimental design: plan used to test hypotheses

Examples of Experiments

1. Thirty locations are randomly assigned 1 of 4 (levels) health promotion banners (independent variable) to see the effect on using stairs (dependent variable).
2. Two hundred consumers are randomly assigned 1 of 3 (levels) brands of juice (independent variable) to study reaction (dependent variable).

Experimental Designs

Figure: tree of experimental designs and the analysis used for each.

- Completely Randomized: One-Way ANOVA
- Randomized Block: Randomized Block F Test
- Factorial: Two-Way ANOVA


Completely Randomized Design

1. Experimental units (subjects) are assigned randomly to treatments
   - Subjects are assumed homogeneous
2. One factor or independent variable
   - 2 or more treatment levels or classifications
3. Analyzed by one-way ANOVA

Randomized Design Example

Figure: experimental units (subjects) are randomly assigned to the factor levels (treatments) Level 1, Level 2 and Level 3 of the factor Training Method. The dependent variable (response) is time in hours: 21, 17, 31, 27, 25, 28, 29, 20 and 22 hrs.


One-Way ANOVA F-Test

1. Tests the equality of 2 or more (t) population means (µ1 = µ2 = … = µt)
2. Variables
   - One nominal-scaled independent variable with 2 or more (t) treatment levels or classifications
   - One interval- or ratio-scaled dependent variable
3. Used to analyze completely randomized experimental designs

One-Way ANOVA F-Test Assumptions

1. Randomness and independence of errors
   - Independent random samples are drawn
2. Normality
   - Populations are normally distributed
3. Homogeneity of variance (σ1 = σ2 = … = σt)
   - Populations have equal variances

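One-Way ANOVA F-Test Hypotheses

- H0: µ1 = µ2 = µ3 = ... = µt
  - All population means are equal
  - No treatment effect
- Ha: Not all µj are equal
  - At least 1 population mean is different
  - Treatment effect
  - µ1 ≠ µ2 ≠ ... ≠ µt is wrong: Ha does not require every mean to differ from every other mean

Figure: population distributions f(y) of the response y. Under H0 the curves coincide (µ1 = µ2 = µ3); under Ha at least one is shifted (for example, µ1 = µ2 ≠ µ3).

Why Variances?

Example: hourly wage for three ethnic groups, five observations per group.

| Observation | Case I, Group 1 | Case I, Group 2 | Case I, Group 3 | Case II, Group 1 | Case II, Group 2 | Case II, Group 3 |
|---|---|---|---|---|---|---|
| 1 | 5.90 | 5.51 | 5.01 | 5.90 | 6.31 | 4.52 |
| 2 | 5.92 | 5.50 | 5.00 | 4.42 | 3.54 | 6.93 |
| 3 | 5.89 | 5.50 | 4.99 | 7.51 | 4.73 | 4.48 |
| 4 | 5.91 | 5.49 | 4.98 | 7.89 | 7.20 | 5.55 |
| 5 | 5.88 | 5.50 | 5.02 | 3.78 | 5.72 | 3.52 |
| Average | 5.90 | 5.50 | 5.00 | 5.90 | 5.50 | 5.00 |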
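To see why the comparison is based on variances, the sketch below computes F = MSB/MSW for both sets of wage data in the table above, reading each case as three groups of five. It is a minimal plain-Python illustration; the helper `one_way_f` and the variable names are ours, not part of the source.

```python
def one_way_f(groups):
    """Return the one-way ANOVA F statistic MSB / MSW for a list of samples."""
    n_total = sum(len(g) for g in groups)
    t = len(groups)
    grand_mean = sum(sum(g) for g in groups) / n_total
    means = [sum(g) / len(g) for g in groups]
    # Between-groups (treatment) and within-groups (error) sums of squares
    ssb = sum(len(g) * (m - grand_mean) ** 2 for g, m in zip(groups, means))
    ssw = sum((y - m) ** 2 for g, m in zip(groups, means) for y in g)
    return (ssb / (t - 1)) / (ssw / (n_total - t))


# Same group means (5.90, 5.50, 5.00), very different within-group spread.
low_spread = [
    [5.90, 5.92, 5.89, 5.91, 5.88],
    [5.51, 5.50, 5.50, 5.49, 5.50],
    [5.01, 5.00, 4.99, 4.98, 5.02],
]
high_spread = [
    [5.90, 4.42, 7.51, 7.89, 3.78],
    [6.31, 3.54, 4.73, 7.20, 5.72],
    [4.52, 6.93, 4.48, 5.55, 3.52],
]

f_low = one_way_f(low_spread)
f_high = one_way_f(high_spread)
print(f"small within-group spread: F = {f_low:.1f}")
print(f"large within-group spread: F = {f_high:.3f}")
```

Both data sets have the same group means, so MSB is identical. F runs into the thousands when the within-group spread is tiny and falls below 1 when the spread is large.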

Why Variances?

Figure: dot plots of the Case I and Case II wage data by group (GROUPID). The two cases have the same treatment (between-group) variation but different random (within-group) variation, so the variances WITHIN the groups differ. With the larger within-group spread, it is possible to conclude that the means are equal!

One-Way ANOVA Basic Idea

1. Compares 2 types of variation to test equality of means
2. Comparison basis is ratio of variances
3. If treatment variation is significantly greater than random variation, then means are not equal
4. Variation measures are obtained by 'partitioning' total variation

Why Variances?

Figure: two panels comparing populations Pop 1 to Pop 6.

- Panel A: same treatment variation, different random variation. The variances WITHIN differ, and it is possible to conclude the means are equal!
- Panel B: different treatment variation, same random variation. The variances AMONG the groups differ.

One-Way ANOVA Partitions Total Variation

Total variation = Variation due to treatment + Variation due to random sampling

- Variation due to treatment: Sum of Squares Among, Sum of Squares Between, or Sum of Squares Treatment (Among Groups Variation)
- Variation due to random sampling: Sum of Squares Within or Sum of Squares Error (Within Groups Variation)

Notations

- $y_{ij}$: the j-th element from the i-th treatment
- $\bar{y}_{i\cdot}$: the i-th treatment mean
- $\bar{y}_{\cdot\cdot}$: the overall sample mean
- $n_T$: the total sample size ($n_1 + n_2 + \cdots + n_t$)

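Total Variation

$$TSS = (y_{11} - \bar{y}_{\cdot\cdot})^2 + (y_{12} - \bar{y}_{\cdot\cdot})^2 + \cdots + (y_{t n_t} - \bar{y}_{\cdot\cdot})^2 = \sum_{i=1}^{t} \sum_{j=1}^{n_i} (y_{ij} - \bar{y}_{\cdot\cdot})^2$$

Figure: response y for Groups 1, 2 and 3, showing each observation's deviation from the overall mean $\bar{y}_{\cdot\cdot}$.

Treatment Variation

$$SSB = n_1(\bar{y}_{1\cdot} - \bar{y}_{\cdot\cdot})^2 + n_2(\bar{y}_{2\cdot} - \bar{y}_{\cdot\cdot})^2 + \cdots + n_t(\bar{y}_{t\cdot} - \bar{y}_{\cdot\cdot})^2 = \sum_{i=1}^{t} n_i (\bar{y}_{i\cdot} - \bar{y}_{\cdot\cdot})^2$$

Figure: response y for Groups 1, 2 and 3, showing each group mean $\bar{y}_{1\cdot}, \bar{y}_{2\cdot}, \bar{y}_{3\cdot}$ relative to the overall mean $\bar{y}_{\cdot\cdot}$.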
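The partition TSS = SSB + SSW can be checked numerically. This is a plain-Python sketch (the function name `sums_of_squares` is hypothetical), applied to the filling-machine data used in the example later in this section. It computes each sum directly from the definitions above.

```python
def sums_of_squares(groups):
    """Return (TSS, SSB, SSW) for a one-way layout given as a list of samples."""
    all_y = [y for g in groups for y in g]
    grand_mean = sum(all_y) / len(all_y)        # overall mean, y-bar dot dot
    means = [sum(g) / len(g) for g in groups]   # treatment means, y-bar i dot
    tss = sum((y - grand_mean) ** 2 for y in all_y)
    ssb = sum(len(g) * (m - grand_mean) ** 2 for g, m in zip(groups, means))
    ssw = sum((y - m) ** 2 for g, m in zip(groups, means) for y in g)
    return tss, ssb, ssw


# Filling times for the three machines in the one-way ANOVA example.
machines = [
    [25.40, 26.31, 24.10, 23.74, 25.10],
    [23.40, 21.80, 23.50, 22.75, 21.60],
    [20.00, 22.20, 19.75, 20.60, 20.40],
]
tss, ssb, ssw = sums_of_squares(machines)
print(f"TSS = {tss:.4f}, SSB = {ssb:.4f}, SSW = {ssw:.4f}")
print(f"SSB + SSW = {ssb + ssw:.4f}")  # equals TSS up to floating-point rounding
```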

Random (Error) Variation

$$SSW = (y_{11} - \bar{y}_{1\cdot})^2 + (y_{12} - \bar{y}_{1\cdot})^2 + \cdots + (y_{t n_t} - \bar{y}_{t\cdot})^2 = \sum_{i=1}^{t} \sum_{j=1}^{n_i} (y_{ij} - \bar{y}_{i\cdot})^2 = \sum_{i=1}^{t} (n_i - 1) s_i^2$$

Figure: response y for Groups 1, 2 and 3, showing each observation's deviation from its own group mean $\bar{y}_{1\cdot}, \bar{y}_{2\cdot}, \bar{y}_{3\cdot}$.

One-Way ANOVA F-Test Test Statistic

1. Test statistic
   - F = MSB / MSW
   - MSB is the mean square for treatment
   - MSW is the mean square for error
2. Degrees of freedom
   - ν1 = t − 1
   - ν2 = nT − t
   - t = # populations, groups, or levels
   - nT = total sample size

One-Way ANOVA Summary Table

| Source of Variation | Degrees of Freedom | Sum of Squares | Mean Square (Variance) | F |
|---|---|---|---|---|
| Treatment (between samples) | t − 1 | SSB | MSB = SSB/(t − 1) | MSB/MSW |
| Error (within samples) | nT − t | SSW | MSW = SSW/(nT − t) | |
| Total | nT − 1 | TSS = SSB + SSW | | |

One-Way ANOVA F-Test Critical Value

If the means are equal, F = MSB / MSW ≈ 1. Only reject for large F! Reject H0 at level α when F > Fα(t − 1, nT − t); otherwise, do not reject H0. The test is always one-tailed (upper tail)!

Figure: F distribution with a rejection region of area α to the right of Fα(t − 1, nT − t). (Clip art © 1984-1994 T/Maker Co.)
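The critical value Fα(t − 1, nT − t) and the upper-tail p-value can be looked up in software instead of a table. Below is a minimal sketch, assuming SciPy is available: `scipy.stats.f.ppf` gives the quantile and `scipy.stats.f.sf` gives the right-tail area. The wrapper `f_test_decision` is a hypothetical helper, not from the source.

```python
from scipy import stats


def f_test_decision(f_stat, t, n_total, alpha=0.05):
    """Upper-tail F test: compare F = MSB/MSW with F_alpha(t - 1, nT - t)."""
    df1, df2 = t - 1, n_total - t
    critical = stats.f.ppf(1 - alpha, df1, df2)  # F_alpha(df1, df2)
    p_value = stats.f.sf(f_stat, df1, df2)       # area to the right of F
    return critical, p_value, f_stat > critical


# Filling-machine example: t = 3 machines, nT = 15 observations, F = 25.6.
crit, p, reject = f_test_decision(25.6, t=3, n_total=15)
print(f"F_.05(2, 12) = {crit:.2f}, p-value = {p:.5f}, reject H0: {reject}")
```

Because the rejection region is only in the upper tail, the sketch compares F with the (1 − α) quantile and never with a lower one.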

One-Way ANOVA F-Test Example

As production manager, you want to see if 3 filling machines have different mean filling times. You assign 15 similarly trained and experienced workers, 5 per machine, to the machines. At the .05 level, is there a difference in mean filling times?

| Mach1 | Mach2 | Mach3 |
|---|---|---|
| 25.40 | 23.40 | 20.00 |
| 26.31 | 21.80 | 22.20 |
| 24.10 | 23.50 | 19.75 |
| 23.74 | 22.75 | 20.60 |
| 25.10 | 21.60 | 20.40 |

One-Way ANOVA F-Test Solution

- H0: µ1 = µ2 = µ3
- Ha: Not all equal
- α = .05
- ν1 = 2, ν2 = 12
- Critical value: F.05(2, 12) = 3.89 (rejection region of area α = .05 to the right)
- Test statistic: F = MSB / MSW = 23.5820 / .9211 = 25.6
- Decision: Reject at α = .05
- Conclusion: There is evidence that the population means are different.
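The same analysis can be reproduced with a library routine. This sketch assumes SciPy, whose `scipy.stats.f_oneway` performs the one-way ANOVA F test on the three samples. The result should agree with the hand computation F ≈ 25.6.

```python
from scipy import stats

# Filling times (one list per machine) from the example above.
mach1 = [25.40, 26.31, 24.10, 23.74, 25.10]
mach2 = [23.40, 21.80, 23.50, 22.75, 21.60]
mach3 = [20.00, 22.20, 19.75, 20.60, 20.40]

result = stats.f_oneway(mach1, mach2, mach3)
print(f"F = {result.statistic:.2f}, p-value = {result.pvalue:.2e}")
```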

Summary Table Solution (from computer)

| Source of Variation | Degrees of Freedom | Sum of Squares | Mean Square (Variance) | F |
|---|---|---|---|---|
| Treatment (Machines) | 3 − 1 = 2 | 47.1640 | 23.5820 | 25.60 |
| Error | 15 − 3 = 12 | 11.0532 | .9211 | |
| Total | 15 − 1 = 14 | 58.2172 | | |

One-Way ANOVA F-Test Thinking Challenge

You're a trainer for Microsoft Corp. Is there a difference in mean learning times of 12 people using 4 different training methods (α = .05)?

| M1 | M2 | M3 | M4 |
|---|---|---|---|
| 10 | 11 | 13 | 18 |
| 9 | 16 | 8 | 23 |
| 5 | 9 | 9 | 25 |

Use the following table.

Summary Table (Partially Completed)

| Source of Variation | Degrees of Freedom | Sum of Squares | Mean Square (Variance) | F |
|---|---|---|---|---|
| Treatment (Methods) | | 348 | | |
| Error | | 80 | | |
| Total | | | | |

One-Way ANOVA F-Test Solution*

- H0: µ1 = µ2 = µ3 = µ4
- Ha: Not all equal
- α = .05

Summary Table Solution*

| Source of Variation | Degrees of Freedom | Sum of Squares | Mean Square (Variance) | F |
|---|---|---|---|---|
| Treatment (Methods) | 4 − 1 = 3 | 348 | 116 | 11.6 |
| Error | 12 − 4 = 8 | 80 | 10 | |
| Total | 12 − 1 = 11 | 428 | | |

One-Way ANOVA F-Test Solution*

- H0: µ1 = µ2 = µ3 = µ4
- Ha: Not all equal
- α = .05
- ν1 = 3, ν2 = 8
- Critical value: F.05(3, 8) = 4.07
- Test statistic: F = MSB / MSW = 116 / 10 = 11.6 (p-value = .003)
- Decision: Reject at α = .05
- Conclusion: There is evidence that the population means are different.
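The partially completed table can be finished from the raw learning times. This plain-Python sketch rests on one assumption: the Thinking Challenge data are read as three learners per method (M1: 10, 9, 5; M2: 11, 16, 9; M3: 13, 8, 9; M4: 18, 23, 25). That layout reproduces the sums of squares 348 and 80 shown in the table.

```python
# Learning times per training method (assumed column layout, 3 people each).
methods = {
    "M1": [10, 9, 5],
    "M2": [11, 16, 9],
    "M3": [13, 8, 9],
    "M4": [18, 23, 25],
}
groups = list(methods.values())
t = len(groups)
n_total = sum(len(g) for g in groups)
grand_mean = sum(sum(g) for g in groups) / n_total
means = [sum(g) / len(g) for g in groups]

ssb = sum(len(g) * (m - grand_mean) ** 2 for g, m in zip(groups, means))
ssw = sum((y - m) ** 2 for g, m in zip(groups, means) for y in g)
msb = ssb / (t - 1)        # SSB / (t - 1)
msw = ssw / (n_total - t)  # SSW / (nT - t)
f_stat = msb / msw

print(f"SSB = {ssb:g}, SSW = {ssw:g}, TSS = {ssb + ssw:g}")
print(f"MSB = {msb:g}, MSW = {msw:g}, F = {f_stat:g}")
```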

Side-by-side Boxplot

Figure: side-by-side boxplots of SCORE by METHOD (1 to 4), N = 3 per method.

Linear Model for CRD

Let $y_{ij}$ be the j-th sample observation from population i:

$$y_{ij} = \mu + \alpha_i + \varepsilon_{ij}$$

- µ: overall mean
- αi: i-th treatment effect
- εij: error term, the random variation of yij about µi, where µi = µ + αi
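Under this model, µ is estimated by the overall mean, µi by the group mean, αi by their difference, and εij by the residual (observation minus its group mean). The plain-Python sketch below uses the training-method data, read under the assumption stated in the code. Variable names are illustrative.

```python
# Training-method data, assumed layout: 3 learners per method (M1..M4).
groups = [[10, 9, 5], [11, 16, 9], [13, 8, 9], [18, 23, 25]]

n_total = sum(len(g) for g in groups)
mu_hat = sum(sum(g) for g in groups) / n_total               # estimate of mu
group_means = [sum(g) / len(g) for g in groups]              # estimates of mu_i
alpha_hat = [m - mu_hat for m in group_means]                # estimates of alpha_i
residuals = [[y - m for y in g] for g, m in zip(groups, group_means)]  # e_ij

print("mu_hat =", mu_hat)
print("alpha_hat =", alpha_hat)
print("residuals =", residuals)
# The size-weighted effect estimates sum to zero: sum_i n_i * alpha_hat_i = 0
print("weighted sum of effects =", sum(len(g) * a for g, a in zip(groups, alpha_hat)))
```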

One-Way ANOVA F-Test Hypotheses

H0: µ1 = µ2 = µ3 = ... = µt

- All population means are equal
- No treatment effect

is equivalent to

H0: α1 = α2 = α3 = ... = αt (that is, all αi = 0 when the treatment effects are constrained to sum to zero)

Error Term Assumptions

For the parametric F test, the εij's are independent and normally distributed with constant variance σε². The normality assumption can be checked by using the estimates of the errors (the residuals)

$$e_{ij} = y_{ij} - \bar{y}_{i\cdot}$$

Test the normality of the residuals.
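One way to test the normality of the residuals is sketched below. The source does not name a specific normality test. The Shapiro-Wilk test (`scipy.stats.shapiro`, assuming SciPy is installed) is our choice here, applied to the residuals of the filling-machine data.

```python
from scipy import stats

machines = [
    [25.40, 26.31, 24.10, 23.74, 25.10],
    [23.40, 21.80, 23.50, 22.75, 21.60],
    [20.00, 22.20, 19.75, 20.60, 20.40],
]
# Residuals e_ij = y_ij - ybar_i: each observation minus its own group mean.
residuals = []
for g in machines:
    mean = sum(g) / len(g)
    residuals.extend(y - mean for y in g)

stat, p_value = stats.shapiro(residuals)
print(f"Shapiro-Wilk W = {stat:.3f}, p-value = {p_value:.3f}")
# A small p-value (e.g. below .05) would be evidence against normal errors.
```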

Error Term Assumptions

The equal variances assumption can be verified by using Hartley's test (very sensitive to departures from normality) or Levene's test. Levene's test can be done by applying ANOVA to

$$d_{ij} = |e_{ij} - \tilde{e}_{i\cdot}|$$

where $\tilde{e}_{i\cdot}$ is the sample median of the residuals of the i-th sample.

What if the assumptions are not satisfied?

Try a nonparametric method: the Kruskal-Wallis test.
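Both checks on this slide can be sketched in plain Python. Levene's test is run as an ordinary one-way ANOVA on the absolute deviations from the group medians (this median-centered form is also known as the Brown-Forsythe variant). The Kruskal-Wallis H statistic is computed on pooled ranks. This is an illustrative sketch on the filling-machine data. The helper names are hypothetical, and the closed-form p-value exp(−H/2) holds only for 3 groups (a chi-square with 2 df).

```python
import math
from statistics import median


def one_way_f(groups):
    """One-way ANOVA F = MSB / MSW."""
    n_total = sum(len(g) for g in groups)
    t = len(groups)
    grand = sum(sum(g) for g in groups) / n_total
    means = [sum(g) / len(g) for g in groups]
    ssb = sum(len(g) * (m - grand) ** 2 for g, m in zip(groups, means))
    ssw = sum((y - m) ** 2 for g, m in zip(groups, means) for y in g)
    return (ssb / (t - 1)) / (ssw / (n_total - t))


def levene_median(groups):
    """ANOVA on d_ij = |e_ij - median(e_i)|, which equals |y_ij - median(y_i)|."""
    d = [[abs(y - median(g)) for y in g] for g in groups]
    return one_way_f(d)


def kruskal_wallis(groups):
    """Kruskal-Wallis H using average ranks for ties, with tie correction."""
    pooled = sorted(y for g in groups for y in g)
    n = len(pooled)
    ranks, i = {}, 0
    while i < n:
        j = i
        while j + 1 < n and pooled[j + 1] == pooled[i]:
            j += 1
        ranks[pooled[i]] = (i + j) / 2 + 1  # average of ranks i+1 .. j+1
        i = j + 1
    h = 12 / (n * (n + 1)) * sum(
        sum(ranks[y] for y in g) ** 2 / len(g) for g in groups
    ) - 3 * (n + 1)
    tie_sizes = [pooled.count(v) for v in set(pooled)]
    correction = 1 - sum(s ** 3 - s for s in tie_sizes) / (n ** 3 - n)
    return h / correction


machines = [
    [25.40, 26.31, 24.10, 23.74, 25.10],
    [23.40, 21.80, 23.50, 22.75, 21.60],
    [20.00, 22.20, 19.75, 20.60, 20.40],
]
w = levene_median(machines)
h = kruskal_wallis(machines)
p_kw = math.exp(-h / 2)  # chi-square(2 df) upper tail; valid for 3 groups only
print(f"Levene (median) F = {w:.3f}")
print(f"Kruskal-Wallis H = {h:.2f}, p-value = {p_kw:.4f}")
```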

Birth Weight Example

Example: The birth weight of an infant has been hypothesized to be associated with the smoking status of the mother during the first trimester of pregnancy. The mothers are divided into four groups according to smoking habit, and the sample of birth weights (in pounds) within each group is given as follows:

- Group 1 (mother is a nonsmoker): 7.5 6.9 7.4 9.2 8.3 7.6
- Group 2 (mother is an ex-smoker but did not smoke during the pregnancy): 5.8 7.1 8.2 7.1 7.8
- Group 3 (mother is a current smoker and smokes less than 1 pack per day): 5.9 6.2 5.8 4.7 7.2 6.2
- Group 4 (mother is a current smoker and smokes more than 1 pack per day): 6.8 5.7 4.9 6.2 5.8 5.4

Only six Group 4 values are listed, although n4 = 7 in the analysis; the reported Group 4 mean (5.8571) and standard deviation (.6161) are consistent with a seventh value of 6.2.

Mean Squares for F Test

Hypothesis:

- H0: µ1 = µ2 = µ3 = µ4
- Ha: µi ≠ µj for at least one pair (i, j), i, j = 1, 2, 3, 4.

Summary statistics:

| Group | Sample size | Mean | Standard deviation |
|---|---|---|---|
| 1 | n1 = 6 | x̄1 = 7.8167 | s1 = .8134 |
| 2 | n2 = 5 | x̄2 = 7.2 | s2 = .9138 |
| 3 | n3 = 6 | x̄3 = 6.0 | s3 = .8075 |
| 4 | n4 = 7 | x̄4 = 5.8571 | s4 = .6161 |

Overall mean: x̄ = 6.6625, where n = 6 + 5 + 6 + 7 = 24.

Test statistic:

Between-groups variability:

$$s_B^2 = \frac{6(\bar{x}_1 - \bar{x})^2 + 5(\bar{x}_2 - \bar{x})^2 + 6(\bar{x}_3 - \bar{x})^2 + 7(\bar{x}_4 - \bar{x})^2}{4 - 1} = 5.537$$

Within-groups variability:

$$s_W^2 = \frac{(6-1)s_1^2 + (5-1)s_2^2 + (6-1)s_3^2 + (7-1)s_4^2}{24 - 4} = .609$$

$s_W^2$ is a good estimate of σ².

$$F = \frac{s_B^2}{s_W^2} = \frac{5.537}{.609} = 9.088$$

(9.088 is computed from the unrounded mean squares.) Under H0, the F statistic follows an F distribution, F(df1, df2), with degrees of freedom df1 = 4 − 1 = 3 and df2 = 24 − 4 = 20. (Table 5, page A-11.)

Decision Rule & Conclusion

Decision rule: if F > F.05,3,20 = 3.10, the null hypothesis is rejected. Here the difference between the group means is significant, and the p-value is less than .001, since F.001,3,20 = 8.10 < 9.088.

Conclusion: the test statistic F = 9.088 > F.05,3,20 = 3.10 and the p-value < .05, so the null hypothesis is rejected. There is a significant difference between the group means.

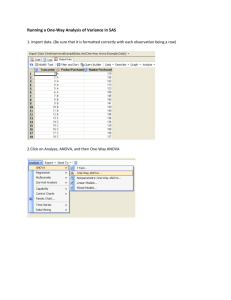

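Error Bar Chart

Figure: SPSS error bar chart of the 95% CI for mean birth weight by smoker category (Non smoker, N = 6; Ex-smoker, N = 5; Smoker <1, N = 6; Smoker >1, N = 7).

SPSS output:

ANOVA (dependent variable BWEIGHT)

| Source | Sum of Squares | df | Mean Square | F | Sig. |
|---|---|---|---|---|---|
| Between Groups | 16.611 | 3 | 5.537 ($s_B^2$) | 9.088 | .001 |
| Within Groups | 12.185 | 20 | .609 ($s_W^2$) | | |
| Total | 28.796 | 23 | | | |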
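The hand computation and the SPSS table can be reproduced from the raw data. This plain-Python sketch carries one assumption, flagged in the code: Group 4 is listed with only six values although n4 = 7, so the seventh value is taken as 6.2, the value consistent with the reported Group 4 mean and standard deviation. Under that assumption, the sums of squares match the SPSS output.

```python
# Birth weights (pounds) by maternal smoking status.
# ASSUMPTION: the listing shows only six "Smoker >1" values but n4 = 7;
# the seventh value is taken as 6.2, consistent with mean 5.8571 and s = .6161.
groups = {
    "Non smoker": [7.5, 6.9, 7.4, 9.2, 8.3, 7.6],
    "Ex-smoker": [5.8, 7.1, 8.2, 7.1, 7.8],
    "Smoker <1": [5.9, 6.2, 5.8, 4.7, 7.2, 6.2],
    "Smoker >1": [6.8, 5.7, 4.9, 6.2, 5.8, 5.4, 6.2],
}
samples = list(groups.values())
t = len(samples)
n_total = sum(len(g) for g in samples)
grand_mean = sum(sum(g) for g in samples) / n_total
means = [sum(g) / len(g) for g in samples]

ssb = sum(len(g) * (m - grand_mean) ** 2 for g, m in zip(samples, means))
ssw = sum((y - m) ** 2 for g, m in zip(samples, means) for y in g)
s2_b = ssb / (t - 1)        # between-groups mean square, s_B^2
s2_w = ssw / (n_total - t)  # within-groups mean square, s_W^2
f_stat = s2_b / s2_w

print(f"overall mean = {grand_mean:.4f}")
print(f"SSB = {ssb:.3f}, SSW = {ssw:.3f}, TSS = {ssb + ssw:.3f}")
print(f"s_B^2 = {s2_b:.3f}, s_W^2 = {s2_w:.3f}, F = {f_stat:.3f}")
```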

Post Hoc Analysis

The following output tables are from the Bonferroni and Tukey's-b options.

Multiple Comparisons (Dependent Variable: BWEIGHT; Bonferroni)

| (I) SMOKEST | (J) SMOKEST | Mean Difference (I−J) | Std. Error | Sig. | 95% CI Lower Bound | 95% CI Upper Bound |
|---|---|---|---|---|---|---|
| Non smoker | Ex-smoker | .6167 | .4727 | 1.000 | −.7668 | 2.0002 |
| Non smoker | Smoker <1 | 1.8167* | .4507 | .004 | .4975 | 3.1358 |
| Non smoker | Smoker >1 | 1.9595* | .4343 | .001 | .6884 | 3.2307 |
| Ex-smoker | Non smoker | −.6167 | .4727 | 1.000 | −2.0002 | .7668 |
| Ex-smoker | Smoker <1 | 1.2000 | .4727 | .117 | −.1835 | 2.5835 |
| Ex-smoker | Smoker >1 | 1.3429* | .4570 | .049 | .0050 | 2.6807 |
| Smoker <1 | Non smoker | −1.8167* | .4507 | .004 | −3.1358 | −.4975 |
| Smoker <1 | Ex-smoker | −1.2000 | .4727 | .117 | −2.5835 | .1835 |
| Smoker <1 | Smoker >1 | .1429 | .4343 | 1.000 | −1.1283 | 1.4140 |
| Smoker >1 | Non smoker | −1.9595* | .4343 | .001 | −3.2307 | −.6884 |
| Smoker >1 | Ex-smoker | −1.3429* | .4570 | .049 | −2.6807 | −.0050 |
| Smoker >1 | Smoker <1 | −.1429 | .4343 | 1.000 | −1.4140 | 1.1283 |

*. The mean difference is significant at the .05 level.

Tukey's B

BWEIGHT, Tukey B (a, b): subsets for alpha = .05

| SMOKEST | N | Subset 1 | Subset 2 |
|---|---|---|---|
| Smoker >1 | 7 | 5.8571 | |
| Smoker <1 | 6 | 6.0000 | |
| Ex-smoker | 5 | | 7.2000 |
| Non smoker | 6 | | 7.8167 |

Means for groups in homogeneous subsets are displayed.

a. Uses Harmonic Mean Sample Size = 5.915.

b. The group sizes are unequal. The harmonic mean of the group sizes is used. Type I error levels are not guaranteed.
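The Bonferroni Sig. column can be approximated from the summary statistics. Each pair gets an ordinary two-sample t statistic using the pooled variance sw² = .609 on 20 df. Its two-sided p-value is then multiplied by the number of pairs and capped at 1. This sketch assumes SciPy for the t distribution (`scipy.stats.t.sf`). Because it starts from rounded means, the last digit can differ slightly from the SPSS output.

```python
from itertools import combinations
from math import sqrt

from scipy import stats

# Rounded group means and sizes from the birth-weight example; s_w^2 from ANOVA.
means = {"Non smoker": 7.8167, "Ex-smoker": 7.2, "Smoker <1": 6.0, "Smoker >1": 5.8571}
sizes = {"Non smoker": 6, "Ex-smoker": 5, "Smoker <1": 6, "Smoker >1": 7}
s2_w, df_error = 0.609, 20
pairs = list(combinations(means, 2))
c = len(pairs)  # number of pairwise comparisons, C(4, 2) = 6

adjusted = {}
for a, b in pairs:
    diff = means[a] - means[b]
    se = sqrt(s2_w / sizes[a] + s2_w / sizes[b])
    p_raw = 2 * stats.t.sf(abs(diff) / se, df_error)  # two-sided p-value
    adjusted[(a, b)] = min(1.0, c * p_raw)            # Bonferroni adjustment
    print(f"{a} vs {b}: diff = {diff:.4f}, SE = {se:.4f}, "
          f"Bonferroni p = {adjusted[(a, b)]:.3f}")
```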

Bonferroni Adjustment Method

Multiple Comparisons using Confidence Interval

Confidence interval estimate for the difference of two means, with $s_w^2$ as the pooled estimate of the common variance for multiple comparisons and with the Bonferroni correction (α* is the corrected level of significance):

$$\bar{x}_1 - \bar{x}_2 \pm t_{\alpha^*/2} \sqrt{\frac{s_w^2}{n_1} + \frac{s_w^2}{n_2}}, \qquad \alpha^* = \frac{\alpha}{c}$$

Here c is the number of pairs to be compared, and 1 − α* is the corrected confidence level [since $1 - (1 - \alpha^*)^c \le c\alpha^* = \alpha$]. With k groups, the number of pairs to be compared is

$$c = \binom{k}{2} = \frac{k!}{2!\,(k-2)!}$$

Example (from the previous problem about smoking mothers): for comparing "Smoke < 1" and "Smoke > 1",

- α = 0.05, α* = α/c = 0.05/{4!/(2!2!)} = .05/6 ≈ .0083
- $t_{\alpha^*/2} = t_{.0042} \approx 2.927$ (degrees of freedom for $s_w^2$ = 24 − 4 = 20)
- $s_w^2 = .609$

$$5.8571 - 6.000 \pm 2.927\sqrt{\frac{.609}{6} + \frac{.609}{7}} = (-1.414,\ 1.128)$$

This interval contains zero, which implies that the difference between the means of the "Smoke < 1" and "Smoke > 1" groups is not significant. (It agrees with the Bonferroni interval for this pair in the SPSS output.)
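The interval calculation above can be sketched as follows, assuming SciPy for the t quantile (`scipy.stats.t.ppf`). It uses the rounded summary values from the example.

```python
from math import comb, sqrt

from scipy import stats

alpha, k = 0.05, 4              # overall level, number of groups
c = comb(k, 2)                  # number of pairs: 4!/(2!2!) = 6
alpha_star = alpha / c          # Bonferroni-corrected level
s2_w, df_error = 0.609, 24 - 4  # pooled variance and its degrees of freedom

# "Smoke > 1" (mean 5.8571, n = 7) minus "Smoke < 1" (mean 6.000, n = 6)
diff = 5.8571 - 6.000
t_crit = stats.t.ppf(1 - alpha_star / 2, df_error)  # t_{alpha*/2}
half_width = t_crit * sqrt(s2_w / 6 + s2_w / 7)
lower, upper = diff - half_width, diff + half_width
print(f"t = {t_crit:.3f}, interval = ({lower:.3f}, {upper:.3f})")
print("contains zero:", lower < 0 < upper)
```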

Multiple Comparisons of Means

- Additional tests to find out where the differences lie, using an experiment-wise error rate α.
- Perform a series of two-sample t-tests. With p groups there are

$$\frac{p(p-1)}{2} = \binom{p}{2}$$

pairs to compare.

- Bonferroni correction: for an overall level of significance α, the level of significance for each test is

$$\alpha^* = \frac{\alpha}{\binom{p}{2}}$$

Multiple Comparison Procedures

- Planned comparisons
- Unplanned comparisons
- Data snooping

Planned Multiple Comparison Procedures

- Planned orthogonal comparisons
- Planned non-orthogonal comparisons
  - Pairwise versus control group (Dunnett's)

Post-hoc Multiple Comparison Procedures

- All pairwise (Fisher-Hayter, Tukey's HSD)
- Pairwise versus control group (Dunnett's)
- Non-pairwise (Scheffé's)
- Bonferroni method

Error in Multiple Comparison Procedures

- Individual error rate: the probability that a contrast (or a comparison) will be falsely declared significant in an experiment (αI).
- Experiment-wise error rate: the probability that at least one contrast will be falsely declared significant in an experiment; for m independent contrasts this is 1 − (1 − αI)^m.
- Family-wise error rate: the probability that at least one contrast will be falsely declared significant in a family of k contrasts; for k independent contrasts this is 1 − (1 − αI)^k. (Similar to the confidence level in multiple comparison tests.)
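To see how quickly the experiment-wise error rate grows, the sketch below evaluates 1 − (1 − αI)^m for all pairwise comparisons among several numbers of groups. It then repeats the calculation after the Bonferroni correction αI = α/m. Like the formula, it assumes independent comparisons; the function name is hypothetical.

```python
from math import comb


def experimentwise_error(alpha_i, m):
    """P(at least one false rejection) for m independent tests at level alpha_i."""
    return 1 - (1 - alpha_i) ** m


alpha = 0.05
for n_groups in (3, 4, 6, 10):
    m = comb(n_groups, 2)  # number of pairwise comparisons
    unadjusted = experimentwise_error(alpha, m)
    bonferroni = experimentwise_error(alpha / m, m)
    print(f"{n_groups} groups, {m} pairs: unadjusted {unadjusted:.3f}, "
          f"Bonferroni {bonferroni:.3f}")
```

With 4 groups (6 pairs), testing each pair at .05 gives roughly a 26% chance of at least one false rejection. The Bonferroni-corrected level keeps that chance at or below .05.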

Multiple Comparison Procedures

Strongly protected family-wise error

- Fisher-Hayter
- Tukey's HSD
- Bonferroni's procedure
- Scheffé's procedure

Weakly protected family-wise error

- Fisher's Least Significant Difference procedure
- Student-Newman-Keuls procedure (Mod. Tukey's)
- Duncan's procedure (Multiple range)

Multiple Comparison Procedures in SPSS

The Bonferroni and Tukey's honestly significant difference tests are commonly used multiple comparison tests. The Bonferroni test, based on Student's t statistic, adjusts the observed significance level for the fact that multiple comparisons are made. Sidak's t test also adjusts the significance level and provides tighter bounds than the Bonferroni test. Tukey's honestly significant difference test uses the Studentized range statistic to make all pairwise comparisons between groups and sets the experimentwise error rate to the error rate for the collection for all pairwise comparisons. When testing a large number of pairs of means, Tukey's honestly significant difference test is more powerful than the Bonferroni test. For a small number of pairs, Bonferroni is more powerful.

(From SPSS Help)
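For comparison with the SPSS output, Tukey's HSD is available as `scipy.stats.tukey_hsd` in SciPy 1.8 and later. This sketch does not implement Tukey's B. It assumes that SciPy version is installed and takes the seventh "Smoker >1" value, which the data listing omits, as 6.2 (the value implied by the reported group mean of 5.8571).

```python
from scipy import stats

# Birth-weight samples by smoking status.
# ASSUMPTION: seventh "Smoker >1" value taken as 6.2 (not listed in the text).
non_smoker = [7.5, 6.9, 7.4, 9.2, 8.3, 7.6]
ex_smoker = [5.8, 7.1, 8.2, 7.1, 7.8]
smoker_lt1 = [5.9, 6.2, 5.8, 4.7, 7.2, 6.2]
smoker_gt1 = [6.8, 5.7, 4.9, 6.2, 5.8, 5.4, 6.2]

res = stats.tukey_hsd(non_smoker, ex_smoker, smoker_lt1, smoker_gt1)
print(res)  # pairwise mean differences, p-values and confidence intervals
```

Like the Bonferroni table, this should flag "Non smoker" vs "Smoker >1" and leave "Smoker <1" vs "Smoker >1" non-significant. Exact p-values differ because the two procedures control the family-wise error differently.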