Improving Technical Writing


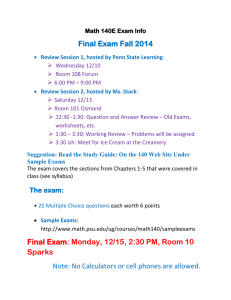

The Luddite Exam: Not Using Technology to Gauge Student Writing Development

John Brocato
June 2007

Summary

This presentation discusses a case-study-based writing exam, written by hand during a three-hour exam period, that gauges the writing development of engineering students.

Research Questions

1. How can we reliably gauge student writing development over time?
2. How can we do so in a controlled, fair setting?

Why Answer These Questions?

• To Measure Student Performance
• To Ensure Graduate Proficiency
• To Improve Communication Pedagogy
• To Gauge Student Progress
• To Assess Program Effectiveness

Why not use technology?

• Plagiarism (wireless access)
• Reliance on software
• Security of exam materials

All are necessary evils for routine writing.

[1]

What does Luddite mean?

Luddite describes a “[m]ember of organized groups of early 19th-century English craftsmen who surreptitiously destroyed the textile machinery that was replacing them…. The term Luddite was later used to describe anyone opposed to technological change” [2].

Luddites Smashing a Loom [3]

DISCLAIMER: Our program contains no Luddites (that we’re aware of). We simply think the name is apt and attention-getting. We embrace technology!

Approach

• Application – Allow students to apply what they have learned.
• Purity – Ensure their work is untainted.
• Reuse – Restrict exam materials for reuse and purity.
• Fairness – Weight “capstone” documents fairly.

Methods

1. We prepared students by explicitly describing the exam requirements and using case studies prior to the exam.
2. We allowed students to use their own copies of required textbooks (but nothing else).
3. We controlled content by providing the case study (see provided sample) at the exam.
4. We required students to write their exams by hand.
5. We monitored students closely, assisting when necessary.
6. We required students to turn the case study back in with their exams.
7. We recorded the times students submitted their exams.
8. We graded exams with the same type of rubric we use for routine writing (see provided sample).

Findings

Grades increased on the Luddite Exam compared to documents written in unrestricted settings.

| Semester | Students | Paper Averages* | Exam Averages | Difference |
|---|---|---|---|---|
| Fall 2005 | 43 | 80.82 | 80.89 | +0.07 |
| Spring 2006 | 51 | 83.63 | 85.1 | +1.47 |
| Summer 2006 | 37 | 85.36 | 88.01 | +2.65 |
| Fall 2006 | 25 | 82.43 | 86.01 | +3.58 |
| Spring 2007 | 29 | 86.15 | 91.9 | +5.75 |

*Scores are out of 100 possible points divided according to standard letter-grade breakdowns: A = 100-90, B = 89-80, C = 79-70, D = 69-60, F = 59-0.

Conclusions

The Luddite Exam is an effective way to gauge student writing development over time.

The consistent increase in student performance likely stems from enhanced student ability as well as increased instructor skill in preparing students for the exam.

Future Work

• Implement the Luddite Exam programmatically/outside the specialized course (already begun).
• Formally survey student perceptions on the exam’s usefulness.
• Enable computer use on the exam without affecting the major objectives.

References

[1] G. Larson and S. Martin, “Wait! Wait! … Cancel that. I guess it says ‘helf,’” in The Complete Far Side 1980–1994 (2 volumes), Andrews & McMeel, Kansas City, MO, 2003, p. 205.

[2] “Luddite,” in Britannica Concise Encyclopedia, June 19, 2007. [Online]. Available: http://www.britannica.com/ebc/article-9370681

[3] “Luddites Smashing a Loom,” in “Smash the (Bad) Machines!,” June 19, 2007. [Online]. Available: http://www.stephaniesyjuco.com/antifactory/blog/2005_07_01_archive.html
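The arithmetic behind the Findings figures can be re-derived with a short script. What follows is only a sketch: the averages are copied from the Findings data, while the names `AVERAGES`, `difference`, and `letter_grade` are hypothetical and not part of the presentation. The grade cutoffs follow the stated breakdown, with the assumption that fractional scores (for example 89.5) fall into the lower band.

```python
# Semester averages copied from the Findings data: (paper average, exam average).
AVERAGES = {
    "Fall 2005": (80.82, 80.89),
    "Spring 2006": (83.63, 85.1),
    "Summer 2006": (85.36, 88.01),
    "Fall 2006": (82.43, 86.01),
    "Spring 2007": (86.15, 91.9),
}


def difference(paper: float, exam: float) -> float:
    """Exam average minus paper average, rounded to two decimals."""
    return round(exam - paper, 2)


def letter_grade(score: float) -> str:
    """Map a 0-100 score to a letter grade.

    Assumption: fractional scores between bands (e.g. 89.5) take the lower grade.
    """
    if score >= 90:
        return "A"
    if score >= 80:
        return "B"
    if score >= 70:
        return "C"
    if score >= 60:
        return "D"
    return "F"


if __name__ == "__main__":
    for term, (paper, exam) in AVERAGES.items():
        print(
            f"{term}: {difference(paper, exam):+.2f} "
            f"(paper {letter_grade(paper)}, exam {letter_grade(exam)})"
        )
```

Run as a script, this prints one line per semester with the signed difference and the letter grade of each average, which makes it easy to see whether an increase also crosses a grade boundary.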