B+-tree and Hashing

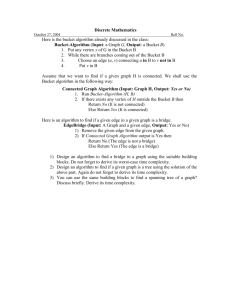

Advanced Data Structures, NTUA, Spring 2007

B+-trees and External Memory Hashing

Model of Computation

• Data is stored on disk(s).
• Minimum transfer unit: a page (or block) of b bytes, holding B records.
• N records occupy n = N/B pages.
• I/O complexity is measured in the number of pages transferred.

[Figure: CPU, memory, and disk.]

I/O complexity

• An ideal index has space $O(N/B)$, update overhead $O(1)$ or $O(\log_B(N/B))$, and search complexity $O(a/B)$ or $O(\log_B(N/B) + a/B)$, where a is the number of records in the answer.
• Sometimes CPU performance is also important: minimize cache misses so that CPU cycles are not wasted.

B+-tree

http://en.wikipedia.org/wiki/B-tree

• Records must be ordered over an attribute (SSN, Name, etc.).
• Queries: exact-match and range queries over the indexed attribute, e.g. “find the name of the student with ID=087-34-7892” or “find all students with gpa between 3.00 and 3.5”.

B+-tree: properties

• Insert/delete at $\log_F(N/B)$ cost; keep the tree height-balanced (F = fanout).
• Minimum 50% occupancy (except for the root). Each node contains d <= m <= 2d entries.
• Two types of nodes: index (non-leaf) nodes and data (leaf) nodes; each node is stored in one page (disk-based method).

[BM72] Rudolf Bayer and E. M. McCreight. Organization and Maintenance of Large Ordered Indexes.
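Acta Informatica 1, 173-189, 1972.

Example

[Figure: example B+-tree. Root: 100. Index nodes: (30) and (120, 150, 180). Leaves, left to right: (3, 5, 11), (30, 35), (100, 101, 110), (120, 130), (150, 156, 179), (180, 200).]

[Figure: index node with keys 57, 81, 95. Its four pointers lead to keys k < 57, 57 <= k < 81, 81 <= k < 95, and k >= 95.]

[Figure: data node with keys 57, 81, 95, reached from a non-leaf node. Each key has a pointer to the record with that key; the last pointer leads to the next leaf in sequence.]

B+tree rules (tree of order n)

(1) All leaves are at the same lowest level (balanced tree).
(2) Pointers in leaves point to records, except for the “sequence pointer”.
(3) Number of pointers/keys for a B+tree:

| Node type | Max ptrs | Max keys | Min ptrs | Min keys |
|---|---|---|---|---|
| Non-leaf (non-root) | n | n-1 | ⌈n/2⌉ | ⌈n/2⌉-1 |
| Leaf (non-root) | n | n-1 | ⌈(n-1)/2⌉ | ⌈(n-1)/2⌉ |
| Root | n | n-1 | 2 | 1 |

Insert into B+tree

(a) simple case: space available in leaf
(b) leaf overflow
(c) non-leaf overflow
(d) new root

[Figure (a): insert key 32, n = 4. Parent keys: 30, 100. The leaf (30, 31) has space and becomes (30, 31, 32).]

[Figure (b): insert key 7, n = 4. Parent keys: 30, 100. The leaf (3, 5, 11) overflows and splits into (3, 5) and (7, 11); key 7 is copied up into the parent.]

[Figure (c): insert key 160, n = 4. The leaf (150, 156, 179) splits into (150, 156) and (160, 179). The index node (120, 150, 180) cannot absorb key 160, so it splits into (120, 150) and (180), and 160 is pushed up next to 100 in the node above.]

[Figure (d): insert key 45, n = 4. Root: (10, 20, 30). Leaves: (1, 2, 3), (10, 12), (20, 25), (30, 32, 40). The last leaf splits into (30, 32) and (40, 45); the root overflows and splits into (10, 20) and (40), with 30 pushed up into a new root.]

Insertion

• Find the correct leaf L.
• Put the data entry onto L.
  – If L has enough space, done!
  – Else, must split L (into L and a new node L2):
    redistribute entries evenly and copy up the middle key;
    insert an index entry pointing to L2 into the parent of L.
• This can happen recursively. To split an index node, redistribute entries evenly, but push up the middle key. (Contrast with leaf splits.)
• Splits “grow” the tree; a root split increases the height. Tree growth: the tree gets wider or one level taller at the top.

Deletion from B+tree

(a) Simple case
(b) Coalesce with neighbor (sibling)
(c) Re-distribute keys
(d) Cases (b) or (c) at non-leaf

[Figure (a): simple case, n = 5. Parent keys: 10, 40, 100. Leaves: (10, 20, 30) and (40, 50). Delete 30; the leaf becomes (10, 20) and nothing else changes.]

[Figure (b): coalesce with sibling, n = 5. Parent keys: 10, 40, 100. Leaves: (10, 20, 30) and (40, 50). Delete 50; the leaf (40) underflows and is merged into its sibling, giving (10, 20, 30, 40), and key 40 is removed from the parent.]

[Figure (c): redistribute keys, n = 5. Parent keys: 10, 40, 100. Leaves: (10, 20, 30, 35) and (40, 50). Delete 50; key 35 is borrowed from the sibling, giving (10, 20, 30) and (35, 40), and the parent key 40 is replaced by 35.]

[Figure (d): non-leaf coalesce, n = 5. Root: 25. Index nodes: (10, 20) and (30, 40). Leaves: (1, 3), (10, 14), (20, 22), (25, 26), (30, 37), (40, 45). Delete 37; the leaf underflows and is coalesced with its sibling, the index node above it then underflows and is coalesced with its sibling index node, and the merged node becomes the new root.]

Deletion

• Start at the root, find the leaf L where the entry belongs.
• Remove the entry.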
• If L is at least half-full, done!
• If L has only d-1 entries:
  – Try to re-distribute, borrowing from a sibling (adjacent node with the same parent as L).
  – If re-distribution fails, merge L and the sibling.
• If a merge occurred, must delete the entry (pointing to L or the sibling) from the parent of L.
• A merge could propagate to the root, decreasing the height.

Complexity

Optimal method for 1-d range queries:

• Tree height: $\log_d(N/d)$
• Space: $O(N/d)$
• Updates: $O(\log_d(N/d))$
• Query: $O(\log_d(N/d) + a/d)$
• d = B/2

Example: Range[32, 160]

[Figure: B+-tree with root 100, index nodes (30) and (120, 150, 180), and leaves (3, 5, 11), (30, 35), (100, 101, 110), (120, 130), (150, 156, 179), (180, 200).] The query descends from the root to the leaf that would contain 32 and then follows the leaf sequence pointers until it reaches a key greater than 160.

Other issues

• Internal node architecture [L01]: reduce the overhead of tree traversal.
  – Prefix compression: in index nodes, store only the prefix that differentiates consecutive sub-trees. Fanout is increased.
  – Cache-sensitive B+-tree: place keys in a way that reduces the cache faults during the binary search in each node. Eliminate pointers so that a cache line contains more keys for comparison.

References

[BM72] Rudolf Bayer and E. M. McCreight. Organization and Maintenance of Large Ordered Indexes. Acta Informatica 1, 173-189, 1972.
[L01] David B. Lomet: The Evolution of Effective B-tree: Page Organization and Techniques: A Personal Account. SIGMOD Record 30(3): 64-69 (2001). http://www.acm.org/sigmod/record/issues/0109/a1-lomet.pdf
[B-Y95] Ricardo A. Baeza-Yates: Fringe Analysis Revisited. ACM Comput. Surv. 27(1): 111-119 (1995).

Selection Queries

• The B+-tree is perfect, but to answer a selection query (ssn=10) it needs to traverse a full root-to-leaf path. In practice, that is 3-4 block accesses (depending on the height of the tree and on buffering).
• Any better approach? Yes! Hashing:
  – static hashing
  – dynamic hashing

Hashing

• Hash-based indexes are best for equality selections. They cannot support range searches.
• Static and dynamic hashing techniques exist; the trade-offs are similar to ISAM vs. B+ trees.

Static Hashing

• The number of primary pages is fixed; they are allocated sequentially and never deallocated; overflow pages are used if needed.
• h(k) MOD N = bucket to which the data entry with key k belongs (N = # of buckets).

[Figure: a key is mapped by h(key) mod N to one of the primary bucket pages 0, 1, ..., N-1; overflow pages are chained to the primary pages.]

Static Hashing (Contd.)

• Buckets contain data entries.
• The hash function works on the search key field of record r. Use its value MOD N to distribute values over the range 0 ... N-1.
  – h(key) = (a * key + b) usually works well.
  – a and b are constants; more later.
• Long overflow chains can develop and degrade performance.
  – Extensible and Linear Hashing: dynamic techniques to fix this problem.

Extensible Hashing

• Situation: a bucket (primary page) becomes full. Why not re-organize the file by doubling the number of buckets?
  – Reading and writing all pages is expensive!
• Idea: use a directory of pointers to buckets; double the number of buckets by doubling the directory, splitting just the bucket that overflowed!
  – The directory is much smaller than the file, so doubling it is much cheaper. Only one page of data entries is split. No overflow page!
  – The trick lies in how the hash function is adjusted!

Example

• The directory is an array of size 4.
• The bucket for record r has the directory entry whose index is the “global depth” least significant bits of h(r):
  – If h(r) = 5 = binary 101, it is in the bucket pointed to by 01.
  – If h(r) = 7 = binary 111, it is in the bucket pointed to by 11.
• In the figure, we denote r by h(r).

[Figure: directory with global depth 2 and entries 00, 01, 10, 11. Bucket A (local depth 2): 4*, 12*, 32*, 16*. Bucket B (local depth 1): 1*, 5*, 7*, 13*. Bucket C (local depth 2): 10*.]

Handling Inserts

• Find the bucket where the record belongs.
• If there is room, put it there.
• Else, if the bucket is full, split it:
  – increment the local depth of the original page
  – allocate a new page with the new local depth
  – re-distribute the records from the original page
  – add an entry for the new page to the directory

Example: Insert 21, then 19, 15

21 = 10101, 19 = 10011, 15 = 01111.

[Figure: directory (global depth 2, entries 00, 01, 10, 11) and data pages after the inserts. Bucket A (local depth 2): 4*, 12*, 32*, 16*. Bucket B (local depth 2): 1*, 5*, 21*, 13*. Bucket C (local depth 2): 10*. Bucket D (local depth 2): 7*, 19*, 15*.]

Insert h(r)=20 (Causes Doubling)

20 = 10100.

[Figure, before: global depth 2; Bucket A (local depth 2) holds 32*, 16*, 4*, 12* and is full; Bucket B: 1*, 5*, 21*, 13*; Bucket C: 10*; Bucket D: 15*, 7*, 19*.]

[Figure, after: global depth 3, directory entries 000 to 111. Bucket A (local depth 3): 32*, 16*. Bucket A2, the “split image” of Bucket A (local depth 3): 4*, 12*, 20*. Bucket B (local depth 2): 1*, 5*, 21*, 13*. Bucket C (local depth 2): 10*. Bucket D (local depth 2): 15*, 7*, 19*.]

Points to Note

• 20 = binary 10100. The last 2 bits (00) tell us r belongs in either A or A2. The last 3 bits are needed to tell which.
  – Global depth of the directory: max # of bits needed to tell which bucket an entry belongs to.
  – Local depth of a bucket: # of bits used to determine if an entry belongs to this bucket.
• When does a bucket split cause directory doubling?
  – Before the insert, local depth of the bucket = global depth. The insert causes the local depth to become > global depth; the directory is doubled by copying it over and “fixing” the pointer to the split image page.

Linear Hashing

• This is another dynamic hashing scheme, an alternative to Extensible Hashing.
• Motivation: Extensible Hashing uses a directory that grows by doubling. Can we do better (smoother growth)?
• LH: split buckets from left to right, regardless of which one overflowed (simple, but it works!!).

Linear Hashing (Contd.)

• The directory is avoided in LH by using overflow pages (chaining approach).
• Splitting proceeds in “rounds”. A round ends when all $N_R$ initial (for round R) buckets are split.
• The current round number is Level.
• Search: to find the bucket for data entry r, find $h_{Level}(r)$:
  – If $h_{Level}(r)$ is in the range “Next to $N_R$”, r belongs here.
  – Else, r could belong to bucket $h_{Level}(r)$ or bucket $h_{Level}(r) + N_R$; must apply $h_{Level+1}(r)$ to find out.
• Family of hash functions: $h_0, h_1, h_2, h_3, \ldots$
  – $h_{i+1}(k) = h_i(k)$ or $h_{i+1}(k) = h_i(k) + 2^{i} N_0$, where $h_i(k) = k \bmod (2^{i} N_0)$ and $N_0$ is the initial number of buckets.

Linear Hashing: Example

Initially h(x) = x mod N (N = 4 here). Assume 3 records/bucket.

• Initial buckets: 0: (4, 8); 1: (5, 9, 13); 2: (6); 3: (7, 11).
• Insert 17: 17 mod 4 = 1, but bucket 1 is full, so 17 goes to an overflow page for bucket 1. Split bucket 0, anyway!!
• To split bucket 0, use another function h1(x): h0(x) = x mod N, h1(x) = x mod (2*N). Key 8 stays in bucket 0 (8 mod 8 = 0) and key 4 moves to the new bucket 4 (4 mod 8 = 4). The split pointer advances to bucket 1.
• Buckets after the split: 0: (8); 1: (5, 9, 13), overflow (17); 2: (6); 3: (7, 11); 4: (4).
• Insert 15 and 3: both map to bucket 3. Key 15 fills the bucket and key 3 goes to an overflow page, so the bucket at the split pointer (bucket 1) is split with h1: 9 and 17 stay in bucket 1, while 5 and 13 move to the new bucket 5.
• Final buckets: 0: (8); 1: (17, 9); 2: (6); 3: (15, 7, 11), overflow (3); 4: (4); 5: (13, 5).

Linear Hashing: Search

h0(x) = x mod N (for the un-split buckets)
h1(x) = x mod (2*N) (for the split ones)

Algorithm for Search:

    Search(k)
        b = h0(k)
        if b < split-pointer then
            b = h1(k)
        read bucket b and search there

References

[Litwin80] Witold Litwin: Linear Hashing: A New Tool for File and Table Addressing. VLDB 1980: 212-223. http://www.cs.bu.edu/faculty/gkollios/ada01/Papers/linear-hashing.PDF
[B-YS-P98] Ricardo A. Baeza-Yates, Hector Soza-Pollman: Analysis of Linear Hashing Revisited. Nord. J. Comput. 5(1): (1998).
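
The linear hashing search and split steps described above can be sketched in a few lines of Python. This is a minimal in-memory illustration, not the slides' implementation: the class name `LinearHashFile`, its attribute names, and the policy "split whenever an insert leaves a bucket with more than `capacity` records" are assumptions made for the example. Overflow pages are not modeled separately; a bucket is one list that may simply grow beyond `capacity`.

```python
class LinearHashFile:
    """Toy linear hash file (hypothetical names; overflow pages are implicit)."""

    def __init__(self, n0=4, capacity=3):
        self.n0 = n0                # N: number of buckets when round 0 starts
        self.capacity = capacity    # records per primary page (assumed)
        self.level = 0              # current round number
        self.split_pointer = 0      # next bucket to be split
        self.buckets = [[] for _ in range(n0)]

    def _h(self, i, key):
        # h_i(key) = key mod (2^i * N)
        return key % (self.n0 * 2 ** i)

    def _bucket_of(self, key):
        # Search(k): use h_level, and h_(level+1) if that bucket was already split.
        b = self._h(self.level, key)
        if b < self.split_pointer:
            b = self._h(self.level + 1, key)
        return b

    def search(self, key):
        return key in self.buckets[self._bucket_of(key)]

    def insert(self, key):
        bucket = self.buckets[self._bucket_of(key)]
        bucket.append(key)
        if len(bucket) > self.capacity:
            # Any overflow triggers a split of the bucket at the split pointer,
            # regardless of which bucket overflowed.
            self._split()

    def _split(self):
        old = self.buckets[self.split_pointer]
        self.buckets[self.split_pointer] = []
        self.buckets.append([])     # image bucket: split_pointer + 2^level * N
        for k in old:
            self.buckets[self._h(self.level + 1, k)].append(k)
        self.split_pointer += 1
        if self.split_pointer == self.n0 * 2 ** self.level:
            # Every bucket of this round has been split: start the next round.
            self.level += 1
            self.split_pointer = 0


# Replay the example: N = 4, 3 records per bucket.
f = LinearHashFile(n0=4, capacity=3)
for key in [4, 8, 5, 9, 13, 6, 7, 11, 17, 15, 3]:
    f.insert(key)
print(f.buckets)
```

Replaying the keys of the example leaves the split pointer at bucket 2 and yields the same final distribution: bucket 1 holds 9 and 17, bucket 5 holds 5 and 13, and bucket 3 holds 7, 11, 15 together with the overflow record 3.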