Chapter 7 Editable Lecture Notecards


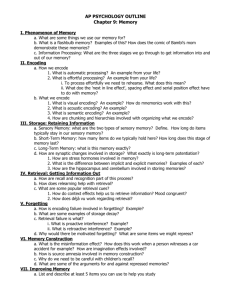

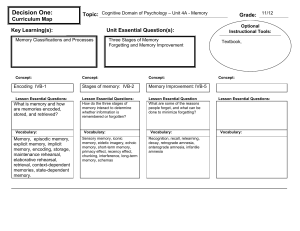

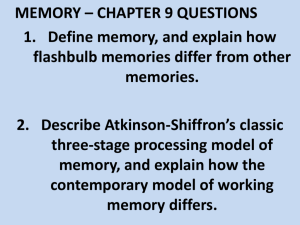

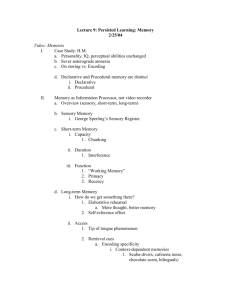

:: Slide 1 :: :: Slide 2 :: Memory is a fascinating process that has intrigued psychologists for decades. Over the past century, psychologists have realized that memory is an extremely complex process, which sometimes acts in mysterious ways. For example, we all remember the location, the time, our age, and the partner with whom we shared our first kiss. But what about more frequent memories, such as identifying a U.S. penny? We have all handled thousands of pennies, yet most people cannot identify the correct one. To understand this strange case and others, we have to understand the three basic processes of memory.
:: Slide 3 :: :: Slide 4 :: Three enduring questions that psychologists ask about memory correspond to these three key processes. These processes, and memory itself, are often viewed as analogous to a modern-day computer. The first question is, how does information get into memory? Like a scanner or copier, we have to encode stimuli into a language our brain understands. The second question is, how is that information stored or maintained in memory? As on a computer’s hard drive, information is organized and stored for later use. The third question is, how is the stored information retrieved, or pulled back out of memory? Like a computer monitor retrieving information to display, we too call up information and “display” it in various forms (e.g., talking, thinking, moving). Although technology serves as a nice analogy, the inner workings of our memory are far more complex. We will begin with encoding, which involves forming a memory code from some stimulus. There are multiple levels of processing, which we will get to soon, but we first have to address a vital aspect of memory: attention, which involves focusing awareness on a narrowed range of stimuli. It’s probably not a shock to you that attention is crucial to memory. For example, no matter how many hours you listen to me talk about memory, if you’re not paying attention, you will forget most of it.
Memory is negatively affected by inattention, especially when we try to multitask, as in driving while talking on a cell phone. One study investigated this and found that talking on a cell phone seriously impaired participants’ braking skills. Those talking on the cell phone ran more red lights and took longer to brake.
:: Slide 5 :: :: Slide 6 :: While attention is necessary, there are qualitative differences in how you attend to something. If you’re talking on a cell phone in a car with music in the background, you’re likely attending to the conversation more than the music, the music more than your foot on the gas pedal, and so on. These differences in attending affect how well we remember things and form the basis of levels-of-processing theory. In this theory, the most basic type is structural encoding. Structural encoding is shallow processing that emphasizes the physical structure of the stimulus, as in encoding the shapes that form letters in the alphabet. The next level of encoding is phonemic, which emphasizes what a word sounds like, as in reading aloud or to oneself. The last and deepest level of encoding is semantic, which emphasizes the meaning of verbal input. This level of encoding requires thinking about the content and actions the words represent, as in understanding the meaning of an argument in an article. Elaboration is a process by which a stimulus is linked to other information at the time of encoding. For example, you are studying phobias for your psychology test, and you apply this information to your own fear of spiders. Elaboration often consists of thinking of examples, and self-generated examples seem to work best. Visual imagery involves the creation of visual images to represent the words to be remembered, and concrete words are much easier to create images of (for example, juggler is easier to visualize than truth). Dual-coding theory holds that memory is enhanced by forming both semantic and visual codes, since either can lead to recall.
Self-referent encoding involves deciding how or whether information is personally relevant; information that is personally meaningful is more memorable.
:: Slide 7 :: :: Slide 8 :: Now that we understand more about the process of encoding and its importance, we turn to the next step in memory: what is done with all the information that, thanks to encoding, is now in a language our brain can understand. How do we organize and store information over time? This and related questions were addressed by two researchers, Atkinson and Shiffrin. They proposed that memory is made up of three memory stores: sensory memory, short-term memory, and long-term memory. It is important to realize that, like the computer example of memory, information processing is a metaphor: the three stores do not refer to tiny “lockers” in the brain where information actually sits. Sensory memory is one of two temporary storage buffers that information must pass through before reaching long-term storage. As the name implies, sensory memory preserves information in its original sensory form (e.g., if you see something, the sensory memory is visual, not in any other sense). What’s great about sensory memory is that it allows us to experience a visual pattern, sound, or touch even after the event has come and gone. In doing this, sensory memory gives us additional time to recognize things and memorize them. But the extra time isn’t much: for vision and audition, sensory memories last only about 0.25 seconds. You can see this characteristic of sensory memory, called an afterimage, when a flashlight or sparkler is moved about quickly, creating what appears to be a continuous figure.
:: Slide 9 :: :: Slide 10 :: After its brief stay in sensory memory, information moves to what Atkinson and Shiffrin called short-term memory. Short-term memory is the second buffer, after sensory memory, before information is stored long-term.
Short-term memory gets its name because it is a limited-capacity store that can maintain information for only about 20 seconds without rehearsal. Several studies have shown that when asked to recall basic information, such as consonants, participants perform poorly. Researchers attribute this poor performance to time-related decay of memory, but also to interference, as when other information gets in the way of what’s being stored. To counteract time effects, many people use rehearsal, the process of repetitively verbalizing or thinking about information. In doing this, they recycle the information back into short-term memory. We all do this when we have to remember bits of information; we repeat telephone numbers, e-mail addresses, web addresses, etc. In addition to time decay and interference, short-term memory, like an iPod or hard drive, has a limited capacity: it can store only so much information. This was first discovered by George Miller in the 1950s, when he found that most participants could only remember 7 plus or minus 2 digits. When we need to memorize more than this amount, the information already stored in our short-term memory is displaced. But we have ways of overcoming the 7 plus or minus 2 rule. Rather than viewing information as broken into bits, we can store multiple bits as chunks, a process known as chunking. For example, take the letters FBINBCCIAIBM. Few could recall these from short-term memory, but by using chunking, we can put these letters into meaningful, easy-to-memorize units, such as FBI-NBC-CIA-IBM. In this demonstration, we went from working with 12 pieces down to 4 pieces to remember.
:: Slide 11 :: :: Slide 12 :: Decades after Miller discovered the magic number 7 rule, Alan Baddeley proposed a more complex model of short-term memory that he called “working memory”. His working memory theory divided short-term memory into four components. The first component is the phonological loop [click to enlarge]. This component is nothing new; it is present in earlier models of short-term memory. This component is at work when we recite or think of information to keep it in our memory. The second component is the visuospatial sketchpad [click to enlarge]. This allows people to temporarily hold and manipulate visual images. For example, if you needed to rearrange the clothes, shoes, and other junk in your closet, the visuospatial sketchpad would be at work. The third component is the central executive system [click to enlarge]. This system is in charge of directing and dividing focus and attention. If you’re listening to the TV, talking on the phone, and trying to read for this class, your central executive system is trying to manage and divide your attention among these tasks. The final component is called the episodic buffer [click to enlarge]. In the episodic buffer, all the information in short-term memory comes together to be integrated in ways that allow it to be passed on to long-term memory more easily. The final stop for memory storage is our long-term memory. Long-term memory is an unlimited-capacity store that can hold information over long periods of time. One question about the nature of long-term memory is, after information is passed on from short-term memory, can the memories stay forever or do they fade out with time? Think of long-term storage as a barrel infinite in size, full of memories represented by marbles. When we forget things, is it because we can’t find the “right” marble, or is there a hole somewhere in our barrel where memories can disappear?
:: Slide 13 :: :: Slide 14 :: Some claim that flashbulb memories, which are unusually vivid and detailed recollections of momentous events, provide evidence that what gets to long-term memory stays there. Researchers have found, however, that although some memories stick around forever, they are often inaccurate, and people feel overly confident in their reports of them. This information is extremely relevant when considering eyewitness testimony. The general consensus among psychologists is that memories are formed in various ways depending on many factors, such as the type of information (e.g., fact vs. event). One way in which we organize information is through our schemas. Schemas are organized clusters of knowledge about an object or event abstracted from previous experience. For example, a schema of a classroom might include desks, students, chalkboards, teacher, etc. When confronted with a novel classroom, we will more likely remember things that are consistent with our schemas. However, we also may remember things that violate our schema expectations. So if you walk into a classroom and see an animal, you’ll likely remember that, since most classroom schemas do not contain animals. Another way we organize information is through semantic networks. Semantic networks consist of nodes representing concepts, joined together by pathways that link related concepts. For example, the phrase “fire truck” may be organized in a network of related words, such as truck, fire, and red. In this network, words closer to one another are more strongly related. A final, much more complex explanation of how we store knowledge is found in parallel distributed processing, in which cognitive processes depend on patterns of activation in highly interconnected networks. This more recent theory is being integrated with what we are learning about neural networks in the brain.
:: Slide 15 :: :: Slide 16 :: This video shows how psychologists use high-tech brain imaging procedures and computer simulations to better understand learning and memory. The journey into long-term memory is complex and takes considerable effort, but reaching long-term memory is only beneficial if you’re able to retrieve that information later.
When you are not able to retrieve information that feels as if it’s just out of your reach, you are experiencing the tip-of-the-tongue phenomenon. Though this shows a failure in retrieval, researchers have shown that retrieval succeeds more often when retrieval cues are present. Retrieval cues are stimuli that help gain access to memories. Other cues, called context cues, can also aid our retrieval of memories. Working with context cues involves putting yourself in the context in which a memory occurred. For example, you may forget what you were looking for when going from your bedroom to the kitchen, but once you return to the bedroom you might remember, “Oh yeah, I wanted a glass of water”. This is because the context cues in your bedroom help you retrieve the memory.
:: Slide 17 :: :: Slide 18 :: When we retrieve information, it’s never an exact replay of the past. Instead, we pull up reconstructions of the past that can be distorted and include inaccurate information. This tendency has been extensively studied; one well-known example is the misinformation effect, which occurs when an individual’s recall of an event is altered by misleading post-event information. A great example was shown by Loftus and Palmer, in this video [click to play]. Other research has consistently found that people introduce inaccuracies in the simple storytelling we do every day. These findings have helped psychologists understand that memory is not a perfect process and that it’s more malleable than once thought. Another explanation for why we sometimes fail to retrieve memories accurately involves source monitoring. Source monitoring is the process of making inferences about the origins of memories. Johnson and colleagues argue that when we store memories, we don’t label where or from whom we got them. Instead, they believe this attribution is made when we retrieve the memories. For example, you may hear a blurb on CNN but later say your friend told you about it.
This is an example of a source-monitoring error, in which a memory derived from one source is misattributed to another source. Source monitoring can help us understand why some people “recall” something that was only verbally suggested to them or confuse their sources of information.
:: Slide 19 :: :: Slide 20 :: Although we normally think of forgetting as a “bad thing”, it’s actually quite adaptive. Just imagine the clutter we would have if we remembered everything. But even with its adaptive function, forgetting can be problematic, like when we forget the definition of a term for a test, or where our keys are, or worse. Psychologists are interested in how and why we forget. One of the first to research forgetting was Hermann Ebbinghaus. Ebbinghaus used nonsense syllables (random strings of vowels and consonants) as a means of investigating memory. Interestingly, he himself was the subject of his experiments, and he laboriously memorized nonsense syllables to test his recall after various amounts of time had passed; he plotted the results to show a forgetting curve. In doing this, he noticed that he forgot a lot of the syllables shortly after memorizing them. Though we now know that meaningful material is forgotten less quickly than the nonsense syllables in Ebbinghaus’ experiments, his research spurred psychologists to consider how we measure forgetting. There are three general ways of measuring forgetting, but psychologists prefer to use the term retention, focusing on the proportion of what is remembered, rather than what is forgotten. First is a recall measure, which requires a person to reproduce information on their own without any cues. If you are asked to memorize 10 words and then say them out loud, this is a recall test. Second is a recognition measure, which requires a person to select previously learned information from an array of options. All students take part in this process when completing multiple-choice or true-false questions on exams.
Third is a relearning measure, which requires a person to memorize information a second time to determine how much time or effort is saved by having learned it before. For example, if it took you 5 minutes to memorize a list of words and then only 2 minutes the second time, your relearning savings would be 3 minutes. In other words, you saved 3 of the original 5 minutes, so you retained 60% of what you memorized to begin with.
:: Slide 21 :: :: Slide 22 :: The difficulty of a recognition test can vary greatly, depending on the number, similarity, and plausibility of the options provided as possible answers. Many students find the question shown to be difficult. What if the answer choices were Jimmy Carter, John F. Kennedy, Harry Truman, and James Madison? [Click to continue] Three of these choices are readily dismissed, rendering the question easier to answer. Measuring forgetting is only half the battle. To truly understand what’s going on, we have to explore the possible causes of forgetting: factors that affect encoding, storage, and retrieval. One explanation may be that we think we are forgetting something, but in fact we never learned it to begin with. The penny example from earlier demonstrated this type of pseudoforgetting. The problem at work here is actually ineffective encoding due to lack of attention, rather than any storage or retrieval errors. Another explanation, known as decay theory, is that memory traces fade away over time. While this explanation has some merit, much of our forgetting is actually better understood through interference theory.
:: Slide 23 :: :: Slide 24 :: Interference theory, which proposes that people forget information because of competition from other material, is well documented and can account for at least some of our forgetting. One study by McGeoch and McDonald found that the amount of interference depended on the similarity between materials. [Click to continue] For example, when the interference task involved synonyms of the test material, high interference occurred, but with numbers that were not associated with the test material, little interference occurred. This type of interference, in which new information impairs previously learned information, is called retroactive interference. Proactive interference is just the opposite: old information interferes with new information, as when your old phone number interferes with your recalling the new number. Forgetting can also result from failures in information retrieval. For example, why is it that we can remember a piece of information in one setting but not in another? One explanation has to do with the similarity between the environment or way in which we learned something and the setting in which we try to retrieve it. Let’s pretend we learned a definition of psychology in Spanish while in Mexico. We would probably be more likely to recall that definition in Mexico or maybe in Spanish class, where retrieval cues are more similar to the context in which it was learned. This example highlights the encoding specificity principle, which says that the value of a retrieval cue depends on how well it corresponds to the memory code. This explanation of forgetting, like the others that have been mentioned, seems to occur automatically without our control, but there is another theory of why we forget, one in which we are motivated to do so.
:: Slide 25 :: :: Slide 26 :: Over a century ago, Freud offered a new explanation for retrieval failures: that we keep distressing thoughts and feelings buried in the unconscious. We repress memories, or we are motivated to forget. This theory of forgetting is a hot and controversial topic in present-day psychology, primarily due to a recent surge in lawsuits in which a potential victim claims that he/she was sexually abused, but just recently recalled the repressed memory. Many psychologists and psychiatrists believe that repressed memories exist, as seen in many of their patients, especially in abuse and other traumatic experiences, but still others deny their accuracy. Many psychologists, especially memory researchers, are skeptical of recovered memories of abuse; however, they do not imply that people reporting these memories are lying or have bad intentions. Instead, they point to findings on the misinformation effect, source monitoring, suggestibility, and leading questions as contributors to the “uncovering” of repressed memories. Skeptics also highlight cases in which the truth about abuse has been found and the memories proven false. Of course, therapists rebut that repression is a natural response to trauma, and they dismiss much of the laboratory work on implanting false memories. To say that all recovered memories are false would certainly be incorrect, but great caution must be taken in interpreting these claims. Regardless of one’s standpoint on this issue, recovered memories have generated large amounts of research and will continue to be a focus for memory researchers.
:: Slide 27 :: :: Slide 28 :: For decades, researchers have tried to trace the physiological processes of memories and even to pinpoint specific memories. As unfortunate as serious head injuries are, they are a rich source of information regarding the anatomy of memory. After serious trauma, some individuals develop amnesia, or extensive memory loss. Similar to how interference is categorized, amnesia can be either retrograde or anterograde. Retrograde amnesia results in loss of memories for events that occurred prior to the injury, whereas anterograde amnesia results in loss of memories for events that occur after the injury. By examining the brain structure and functioning of individuals with serious brain injury, scientists have identified some areas that may be important in the consolidation of memories. Consolidation refers to a hypothetical process involving gradual conversion of information into durable memory codes for storage in long-term memory. The areas in question are those around the hippocampus, which together make up the medial temporal lobe. Other areas, such as the cortex, are involved in memory, but the search for the anatomy of memory is in its infancy.
:: Slide 29 :: :: Slide 30 :: More detailed accounts of memory processes at the cellular level over the past 20 years have led to some intriguing theories about how we encode, store, and retrieve memories. One theory, developed by Thompson, suggests that memories depend on localized neural circuits. That is, memories may create unique, reusable pathways along which signals flow. Future research in this area may someday allow us to trace the pathways of specific memories. Another line of research suggests that memories form because of alterations in synaptic activity at specific sites in the brain. Memories may also depend on a process called long-term potentiation, which is a long-lasting increase in neural excitability at synapses along a specific neural pathway. A final process called neurogenesis, or the formation of new neurons, may also contribute to the shaping of neural circuits that may underlie memories. Newly formed neurons appear to be more excitable than older ones, and this may mean they can more quickly be used to form new memories. Some memory theorists propose different systems of memory. The most basic distinction is made between declarative and nondeclarative memory. Declarative memory handles factual information, whereas nondeclarative memory houses memory for actions, skills, conditioned responses, and emotional memories. If you know that a bike has two wheels, pedals, handlebars, etc., you are using declarative memory.
However, if you know how to ride a bike, this is nondeclarative memory. These two memory systems differ in interesting ways. For example, writing out the notes to a song (declarative) is effortful and can be easily forgotten, but the process of playing that song (nondeclarative) probably involves less cognitive effort and is less likely to be forgotten.
:: Slide 31 :: :: Slide 32 :: If the medial temporal area of the brain is destroyed, due to injury or disease, people often develop amnesia. Learn how habit memory, a second robust but unconscious memory system, plays a role in helping people with amnesia to learn a declarative task. Endel Tulving has further divided declarative memory into two separate systems: episodic and semantic. Episodic memory is made up of chronological, or temporally dated, recollections of personal experiences. Episodic memory includes all memories in which a “time stamp” is made. That is, you remember the event with which the information is associated. Semantic memory contains general knowledge that is not tied to the time when it was learned. For example, that January 1st marks the new year and that dogs have four legs are pieces of information held in semantic memory. An appropriate analogy is to think of both as books: episodic memory is an autobiography, whereas semantic memory is an encyclopedia.
:: Slide 33 :: Researchers have broken down memories not only into systems, but also into two specific types: prospective and retrospective. Prospective memory involves remembering to perform actions in the future. For example, remembering to walk the dog or take out the trash involves prospective memory. Retrospective memory involves remembering events from the past or previously learned information. For example, in retrospective memory you may try to remember who won the Super Bowl last year or what last week’s lecture covered.
Research is under way to determine whether these proposed systems correlate with actual neural processes.