On-the-Fly Pipeline Parallelism


SPAA 2013

I-Ting Angelina Lee*, Charles E. Leiserson*, Tao B. Schardl*, Jim Sukha†, and Zhunping Zhang*

MIT CSAIL*

Intel Corporation†

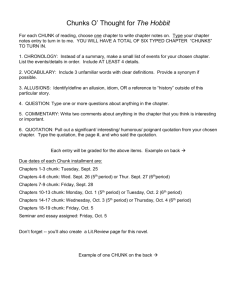

Dedup PARSEC Benchmark [BKS08]

Dedup compresses a stream of data by compressing unique elements and removing duplicates.

int fd_out = open_output_file();
bool done = false;
while(!done) {
  chunk_t *chunk = get_next_chunk();
  if(chunk == NULL) { done = true; }
  else {
    chunk->is_dup = deduplicate(chunk);
    if(!chunk->is_dup) compress(chunk);
    write_to_file(fd_out, chunk);
  }
}

Stage 0: While there is more data, read the next chunk from the stream.
Stage 1: Check for duplicates.
Stage 2: Compress first-seen chunk.
Stage 3: Write to output file.

Parallelism in Dedup

while(!done) {
  // Stage 0
  chunk_t *chunk = get_next_chunk();
  if(chunk == NULL) { done = true; }
  else {
    // Stage 1
    chunk->is_dup = deduplicate(chunk);
    // Stage 2
    if(!chunk->is_dup) compress(chunk);
    // Stage 3
    write_to_file(fd_out, chunk);
  }
}

[Figure: pipeline dag with one column per iteration (i0 to i5, ...) and one row per stage (Stage 0 to Stage 3); the legend marks cross edges.]

Let’s model Dedup’s execution as a pipeline dag. A node denotes the execution of a stage in an iteration. Edges denote dependencies between nodes. Dedup exhibits pipeline parallelism.

Pipeline Parallelism

We can measure parallelism in terms of work and span [CLRS09].

Example: [Figure: a pipeline dag whose nodes have weight 1 or weight 8.]

Work T1: The sum of the weights of the nodes in the dag. T1 = 75

Span T∞: The length of a longest path in the dag. T∞ = 20

Parallelism T1 / T∞: The maximum possible speedup. T1 / T∞ = 3.75
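The work and span definitions can be made concrete with a small sketch. The dag below is a made-up example, not the one from the slide figure: the `PipelineDag` type, the `cross` flags, and the weights are all assumptions chosen for illustration, and every iteration is assumed to have the same number of stages.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical description of a regular pipeline dag (not from the slides).
// weights[i][j] is the weight of stage j in iteration i.
// cross[j] is true when stage j waits on stage j of the previous iteration.
struct PipelineDag {
    std::vector<std::vector<long>> weights;
    std::vector<bool> cross;
};

// Work T1: the sum of all node weights.
long work(const PipelineDag& dag) {
    long total = 0;
    for (const auto& iteration : dag.weights)
        for (long w : iteration) total += w;
    return total;
}

// Span T-infinity: the weight of a heaviest path, found by a longest-path
// sweep in iteration order (a valid topological order for this dag shape).
long span(const PipelineDag& dag) {
    std::vector<long> prev;  // finish times of the previous iteration's stages
    long longest = 0;
    for (std::size_t i = 0; i < dag.weights.size(); ++i) {
        const auto& w = dag.weights[i];
        std::vector<long> cur(w.size(), 0);
        for (std::size_t j = 0; j < w.size(); ++j) {
            long start = (j > 0) ? cur[j - 1] : 0;  // edge from the previous stage
            if (i > 0 && dag.cross[j]) start = std::max(start, prev[j]);  // cross edge
            cur[j] = start + w[j];
        }
        if (!cur.empty()) longest = std::max(longest, cur.back());
        prev = cur;
    }
    return longest;
}

// Three iterations of a three-stage pipeline: serial stages of weight 1
// around a parallel stage of weight 8 (illustrative numbers only).
PipelineDag example_dag() {
    return PipelineDag{{{1, 8, 1}, {1, 8, 1}, {1, 8, 1}}, {true, false, true}};
}
```

For this made-up dag, `work(example_dag())` is 30 and `span(example_dag())` is 12, so the parallelism is 30 / 12 = 2.5.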


Executing a Parallel Pipeline

To execute Dedup in parallel, we must answer two questions.

How do we encode the parallelism in Dedup?

How do we assign work to parallel processors to execute this computation efficiently?

[Figure: the Dedup loop annotated with Stages 0 to 3, next to its pipeline dag over iterations i0 to i5, as on the previous slide.]

SPAA, July 24, 2013

Construct-and-Run Pipelining

tbb::pipeline pipeline;

GetChunk_Filter filter1(SERIAL, item);

Deduplicate_Filter filter2(SERIAL);

Compress_Filter filter3(PARALLEL);

WriteToFile_Filter filter4(SERIAL, out_item);

pipeline.add_filter(filter1);

pipeline.add_filter(filter2);

pipeline.add_filter(filter3);

pipeline.add_filter(filter4);

pipeline.run(pipeline_depth);

A construct-and-run pipeline specifies the stages and their dependencies a priori, before execution.

Ex: TBB [MRR12], StreamIt [GTA06], GRAMPS [SLY+11]

On-the-Fly Pipelining of X264

[Figure: pipeline dag for x264 over a sequence of frames labeled I, P, P, P, I, P, P, I, P, P, P.]

Not easily expressible using TBB's pipeline construct [RCJ11].

An on-the-fly pipeline is constructed dynamically as the program executes.

On-the-Fly Pipeline Parallelism in Cilk-P

We have incorporated on-the-fly pipeline parallelism into a Cilk-based work-stealing runtime system, named Cilk-P, which features:

  simple linguistics for specifying on-the-fly pipeline parallelism that are composable with Cilk's existing fork-join primitives; and

  PIPER, a theoretically sound randomized work-stealing scheduler that handles both pipeline and fork-join parallelism.

We hand-compiled three applications with pipeline parallelism (ferret, dedup, and x264 from PARSEC [BKS08]) to run on Cilk-P.

Empirical results indicate that Cilk-P exhibits low serial overhead and good scalability.

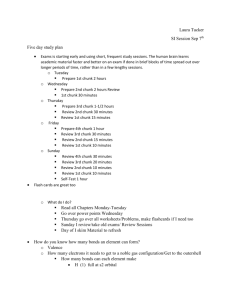

Outline

On-the-Fly Pipeline Parallelism

The Pipeline Linguistics in Cilk-P

The PIPER Scheduler

Empirical Evaluation

Concluding Remarks



The Pipeline Linguistics in Cilk-P

int fd_out = open_output_file();
bool done = false;
pipe_while(!done) {
  chunk_t *chunk = get_next_chunk();
  if(chunk == NULL) { done = true; }
  else {
    pipe_wait(1);
    chunk->is_dup = deduplicate(chunk);
    pipe_continue(2);
    if(!chunk->is_dup) compress(chunk);
    pipe_wait(3);
    write_to_file(fd_out, chunk);
  }
}

pipe_while: Loop iterations may execute in parallel in a pipelined fashion, where stage 0 executes serially.

pipe_wait(1): End the current stage, advance to stage 1, and wait for the previous iteration to finish stage 1.

pipe_continue(2): End the current stage and advance to stage 2.

These keywords denote the logical parallelism of the computation.

[Figure: pipeline dag for the pipe_while loop, with rows Stage 0 to Stage 3; the legend marks cross edges.]

The pipe_while enforces that stage 0 executes serially.

The pipe_wait(1) enforces cross edges across stage 1.

The pipe_wait(3) enforces cross edges across stage 3.

These keywords have serial semantics: when they are elided or replaced with their serial counterparts, a legal serial code results, whose semantics is one of the legal interpretations of the parallel code [FLR98].
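One way to picture serial semantics is a "serial elision" done with preprocessor macros. The sketch below is only an illustration: the three macros are hypothetical stand-ins rather than Cilk-P's actual definitions, and `toy_dedup` is a made-up miniature of the Dedup loop that doubles first-seen values and writes -1 for duplicates.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical serial elision: these macros are illustrative stand-ins,
// not the definitions used by Cilk-P.
#define pipe_while(cond) while (cond)
#define pipe_wait(stage) ((void)0)
#define pipe_continue(stage) ((void)0)

// Toy stand-in for Dedup: a first-seen value is "compressed" by doubling it,
// and a duplicate is written as the marker -1.
std::vector<int> toy_dedup(const std::vector<int>& input) {
    std::vector<int> seen, output;
    std::size_t next = 0;
    bool done = false;
    pipe_while(!done) {
        if (next == input.size()) { done = true; }
        else {
            int chunk = input[next++];                      // stage 0: read
            pipe_wait(1);
            bool is_dup =                                   // stage 1: deduplicate
                std::find(seen.begin(), seen.end(), chunk) != seen.end();
            if (!is_dup) seen.push_back(chunk);
            pipe_continue(2);
            int payload = is_dup ? -1 : chunk * 2;          // stage 2: compress
            pipe_wait(3);
            output.push_back(payload);                      // stage 3: write
        }
    }
    return output;
}
```

With the macros in place the loop is an ordinary `while` loop, so the input 3, 5, 3, 7, 5 yields 6, 10, -1, 14, -1 in order, which is one legal outcome of the pipelined version.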


On-the-Fly Pipelining of X264

The program controls the execution of pipe_wait and pipe_continue statements, thus supporting on-the-fly pipeline parallelism.

[Figure: pipeline dag for x264 over frames labeled I, P, P, P, I, P, P, I, P, P, P.]

Program control can thus:
  Skip stages;
  Make cross edges data dependent; and
  Vary the number of stages across iterations.

We can pipeline the x264 video encoder using Cilk-P.

Pipelining X264 with Pthreads

The scheduling logic is embedded in the application code.

[Figure: x264 frames labeled I, P, P, P, I, P, P, I, P, P, P.]

The main control thread:
  pthread_create
  pthread_join

Signaling a dependency:
  pthread_mutex_lock
  update my_var
  pthread_cond_broadcast
  pthread_mutex_unlock

Waiting on a dependency:
  pthread_mutex_lock
  while(my_var < value) {
    pthread_cond_wait
  }
  pthread_mutex_unlock

The cross-edge dependencies are enforced via data synchronization with locks and condition variables.

X264 Performance Comparison

[Figure: speedup over serial execution (0 to 16) versus number of processors P (0 to 16), for Pthreads and Cilk-P.]

Cilk-P achieves comparable performance to Pthreads on x264 without explicit data synchronization.

Outline

On-the-Fly Pipeline Overview

The Pipeline Linguistics in Cilk-P

The PIPER Scheduler

  A Work-Stealing Scheduler

  Handling Runaway Pipeline

  Avoiding Synchronization Overhead

Concluding Remarks

Guarantees of a Standard Work-Stealing Scheduler [BL99,ABP01]

Definition.
  TP: execution time on P processors
  T1: work
  T∞: span
  T1 / T∞: parallelism
  SP: stack space on P processors
  S1: stack space of a serial execution

Given a computation dag with fork-join parallelism, it achieves:

Time bound: TP ≤ T1 / P + O(T∞ + lg P) expected time, which gives linear speedup when P ≪ T1 / T∞

Space bound: SP ≤ P · S1

The Work-First Principle [FLR98]. Minimize the scheduling overhead borne by the work path (T1) and amortize it against the steal path (T∞).

A Work-Stealing Scheduler

(Based on [BL99,ABP01])

[Figure: pipeline dag with iterations i0 to i5 as columns and four stages as rows; nodes are numbered 1 to 24 column by column (i0 holds nodes 1 to 4, i1 holds 5 to 8, and so on). Workers are drawn as P, and the legend distinguishes done, not done, ready, and executing nodes. Successive frames are labeled "Execute 17", "Execute 18", and "Steal!".]

Each worker maintains its own set of ready nodes.

If executing a node enables:
  a. two nodes: mark one ready and execute the other one;
  b. one node: execute the enabled node;
  c. zero nodes: execute a node in its ready set.

If the ready set is empty, steal from a randomly chosen worker.

A node has at most two outgoing edges, so a standard work-stealing scheduler just works ... well, almost.
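The three rules and the steal step can be tried out in a small round-based simulation. This is a single-threaded sketch under stated assumptions, not PIPER or any Cilk runtime: the function name `simulate_work_stealing` is made up, every stage is assumed to have a cross edge, a worker continues with the node in its own iteration when two nodes are enabled, and a thief takes the oldest entry of the victim's ready set.

```cpp
#include <cassert>
#include <deque>
#include <random>
#include <vector>

// Simulates the rules on an n-by-s grid dag in which node (i, j) has edges to
// (i, j+1) and (i+1, j). Returns how many times each node was executed.
std::vector<int> simulate_work_stealing(int n, int s, int workers, unsigned seed) {
    const int total = n * s;
    std::vector<int> pending(total, 0);  // unfinished predecessors per node
    for (int i = 0; i < n; ++i)
        for (int j = 0; j < s; ++j)
            pending[i * s + j] = (i > 0) + (j > 0);

    std::vector<int> executed(total, 0);
    std::vector<std::deque<int>> ready(workers);  // each worker's ready set
    std::vector<int> current(workers, -1);        // -1 means the worker is idle
    current[0] = 0;                               // worker 0 starts at node (0, 0)
    std::mt19937 rng(seed);
    std::uniform_int_distribution<int> pick(0, workers - 1);

    int remaining = total;
    while (remaining > 0) {
        for (int w = 0; w < workers; ++w) {
            if (current[w] < 0) {
                // Empty ready set: try to steal from a randomly chosen worker.
                int victim = pick(rng);
                if (victim != w && !ready[victim].empty()) {
                    current[w] = ready[victim].front();
                    ready[victim].pop_front();
                }
                continue;  // a stolen node runs in the next round
            }
            int node = current[w];
            ++executed[node];
            --remaining;
            int i = node / s, j = node % s;
            int enabled[2];
            int count = 0;
            if (j + 1 < s && --pending[node + 1] == 0) enabled[count++] = node + 1;
            if (i + 1 < n && --pending[node + s] == 0) enabled[count++] = node + s;
            if (count == 2) {               // rule a
                ready[w].push_back(enabled[1]);
                current[w] = enabled[0];
            } else if (count == 1) {        // rule b
                current[w] = enabled[0];
            } else if (!ready[w].empty()) { // rule c
                current[w] = ready[w].back();
                ready[w].pop_back();
            } else {
                current[w] = -1;            // nothing left locally
            }
        }
    }
    return executed;
}
```

Calling `simulate_work_stealing(6, 4, 3, 42u)` mirrors the 24-node dag in the figure with three workers; whatever the steal pattern, each node should be executed exactly once.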


Runaway Pipeline

[Figure: pipeline dag with iterations i0 to i5 as columns and nodes numbered 1 to 24, executed by two workers (P); the second frame adds a "Steal!" and a throttling edge for K=4.]

A runaway pipeline is one where the scheduler allows many new iterations to be started before finishing old ones.

Problem: Unbounded space usage!

Cilk-P automatically throttles pipelines by inserting a throttling edge between iteration i and iteration i+K, where K is the throttling limit.
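The effect of a throttling edge on space can be sketched with a toy model. The function below is an assumption-laden illustration, not Cilk-P's implementation: it models one greedy worker that always prefers to start a new iteration, treats the throttling edge as "iteration i+K may not start until iteration i has finished", and uses the made-up name `peak_live_iterations`.

```cpp
#include <algorithm>
#include <cassert>

// Returns the peak number of iterations that have started but not finished
// when a single greedy worker always prefers to start a new iteration.
// K <= 0 means "no throttling" in this sketch.
int peak_live_iterations(int n, int K) {
    int started = 0, finished = 0, peak = 0;
    while (finished < n) {
        // Throttling edge: iteration `started` needs iteration `started - K`
        // to have finished, i.e. started - K < finished.
        bool throttle_ok = (K <= 0) || (started < finished + K);
        if (started < n && throttle_ok) {
            ++started;    // start the next iteration
        } else {
            ++finished;   // otherwise finish the oldest live iteration
        }
        peak = std::max(peak, started - finished);
    }
    return peak;
}
```

In this model, 100 iterations without throttling reach a peak of 100 live iterations, while a throttling limit of K = 4 caps the peak at 4.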


Synchronization Overhead

[Figure: pipeline dag with iterations i0 to i5 as columns and nodes numbered 1 to 24; two workers (P), labeled "check right!" and "check left!".]

If two predecessors of a node are executed by different workers, synchronization is necessary: whoever finishes last enables the node.

At pipe_wait(j), iteration i must check left to see if stage j is done in iteration i-1.

At the end of a stage, iteration i must check right to see if it enabled a node in iteration i+1.

Cilk-P implements “lazy enabling” to mitigate the check-right overhead and “dependency folding” to mitigate the check-left overhead.
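The check-left test, and one way folding could reduce its cost, can be sketched as follows. The slides do not spell out the mechanism, so everything here is an assumption: the `Iteration` record, the convention that a stage counter greater than j means stage j is finished, and the idea of caching the last value read from the left neighbor so that later checks can skip the shared read.

```cpp
#include <atomic>
#include <cassert>

// Hypothetical per-iteration record (names are illustrative only).
struct Iteration {
    std::atomic<long> stage_counter{0};  // stage this iteration is currently in
    long cached_left = 0;                // last counter value read from iteration i-1
    long shared_reads = 0;               // how often the neighbor's counter was read
};

// Check left at pipe_wait(j): returns true when the left neighbor has moved
// past stage j. The cached value is consulted first, so a check that the cache
// already satisfies does not touch the shared counter.
bool check_left(Iteration& self, const Iteration& left, long j) {
    if (self.cached_left > j) return true;  // folded: answered from the cache
    self.cached_left = left.stage_counter.load(std::memory_order_acquire);
    ++self.shared_reads;
    return self.cached_left > j;
}
```

If the left neighbor's counter is 5, a check for stage 1 reads the shared counter once, a following check for stage 3 is answered from the cache, and a check for stage 5 re-reads the counter and reports that the dependency is not yet satisfied.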


Lazy Enabling

[Figure: pipeline dag with iterations i0 to i5 as columns and nodes numbered 1 to 24; three workers (P), with the labels "check i2?" and "Steal!".]

Idea: Be really, really lazy about the check-right operation.

Punt the responsibility of checking right onto a thief that steals, or defer it until the worker runs out of nodes to execute in its iteration.

Lazy enabling is in accordance with the work-first principle [FLR98].

PIPER's Guarantees

Definition.
  TP: execution time on P processors
  T1: work
  T∞: span of the throttled dag
  T1 / T∞: parallelism
  SP: stack space on P processors
  S1: stack space of a serial execution
  K: throttling limit
  f: maximum frame size
  D: depth of nested pipelines

Time bound: TP ≤ T1 / P + O(T∞ + lg P) expected time, which gives linear speedup when P ≪ T1 / T∞

Space bound: SP ≤ P(S1 + fDK)


Experimental Setup

All experiments were run on an AMD Opteron system with 4 quad-core 2GHz CPUs having a total of 8 GBytes of memory.

Code was compiled using GCC (or G++ for TBB) 4.4.5 with -O3 optimization (except for x264, which uses -O4 by default).

The Pthreaded implementations of ferret and dedup employ the oversubscription method that creates more than one thread per pipeline stage. We limit the number of cores used by the Pthreaded implementations using taskset but experimented to find the best configuration.

All benchmarks are throttled similarly.

Each data point shown is the average of 10 runs, typically with standard deviation less than a few percent.

Ferret Performance Comparison

[Figure: speedup over serial execution (0 to 16) versus number of processors P (0 to 16), for Pthreads, TBB, and Cilk-P.]

Throttling limit = 10P

No performance penalty incurred for using the more general on-the-fly pipeline instead of a construct-and-run pipeline.

Dedup Performance Comparison

[Figure: speedup over serial execution (0 to 16) versus number of processors P (0 to 16), for Pthreads, TBB, and Cilk-P.]

Throttling limit = 4P

Measured parallelism for the Cilk-P (and TBB) pipeline is merely 7.4.

The Pthreaded implementation has more parallelism due to unordered stages.

X264 Performance Comparison

[Figure: speedup over serial execution (0 to 16) versus number of processors P (0 to 16), for Pthreads and Cilk-P.]

Cilk-P achieves comparable performance to Pthreads on x264 without explicit data synchronization.

On-the-Fly Pipeline Parallelism in Cilk-P

We have incorporated on-the-fly pipeline parallelism into a Cilk-based work-stealing runtime system, named Cilk-P, which features:

simple linguistics that:
  specify on-the-fly pipelines;
  are composable with Cilk's fork-join primitives;
  have serial semantics; and
  allow users to synchronize via control constructs.

and the PIPER scheduler that:
  supports both pipeline and fork-join parallelism;
  is asymptotically efficient;
  uses bounded space; and
  empirically demonstrates low serial overhead and good scalability.

Intel has created an experimental branch of its Cilk Plus runtime with support for on-the-fly pipelines based on Cilk-P:

https://intelcilkruntime@bitbucket.org/intelcilkruntime/intel-cilk-runtime.git

Impact of Throttling

We automatically throttle to save space, but the user shouldn’t worry about throttling affecting performance.

How does throttling a pipeline computation affect its performance?

If the dag is regular, then the work and span of the throttled dag asymptotically match those of the unthrottled dag.

[Figure: an irregular pipeline dag whose parts are labeled $T_1^{1/3} + 1$, $T_1^{1/3} + 1$, and $(T_1^{2/3} + T_1^{1/3})/2$.]

If the dag is irregular, then there are pipelines where no throttling scheduler can achieve speedup.

Dedup Performance Comparison

[Figure: speedup over serial execution (0 to 16) versus number of processors P (0 to 16), for Pthreads, TBB, Cilk-P, and Modified Cilk-P.]

Throttling limit = 4P

Modified Cilk-P uses a single worker thread for writing out output, like the Pthreaded implementation, which helps performance as well.

The Cilk Programming Model

int fib(int n) {
  if(n < 2) { return n; }
  int x = cilk_spawn fib(n-1);
  int y = fib(n-2);
  cilk_sync;
  return (x + y);
}

cilk_spawn: The named child function may execute in parallel with the parent caller.

cilk_sync: Control cannot pass this point until all spawned children have returned.

Cilk keywords grant permission for parallel execution. They do not command parallel execution.

Pipelining with TBB

1. Create a pipeline object.
2. Execute.

…
tbb::parallel_pipeline(
  INNER_PIPELINE_NUM_TOKENS,
  tbb::make_filter< void, one_chunk* > (
    tbb::filter::serial, get_next_chunk) &
  tbb::make_filter< one_chunk*, one_procd_chunk* > (
    tbb::filter::parallel, deduplicate) &
  tbb::make_filter< one_procd_chunk*, one_procd_chunk* > (
    tbb::filter::parallel, compress) &
  tbb::make_filter< one_procd_chunk*, void > (
    tbb::filter::serial, write_to_file)
);
…

Pipelining with Pthreads

1. Encode each stage in its own thread.
2. Assign threads to workers.
3. Execute.

void *Fragment(void *targs) {
  …
  chunk = get_next_chunk();
  buf_insert(&send_buf, chunk);
  …
}

void *Deduplicate(void *targs) {
  …
  chunk = buf_remove(&recv_buf);
  is_dup = deduplicate(chunk);
  if (!is_dup)
    buf_insert(&send_buf_compress, chunk);
  else
    buf_insert(&send_buf_reorder, chunk);
  …
}

void *Compress(void *targs) {
  …
  chunk = buf_remove(&recv_buf);
  compress(chunk);
  buf_insert(&send_buf, chunk);
  …
}

void *Reorder(void *targs) {
  …
  chunk = buf_remove(&recv_buf);
  write_or_enqueue(chunk);
  …
}

Pipelining X264 with Pthreads

The cross-edge dependencies are enforced via data synchronization with locks and condition variables.

[Figure: x264 frames labeled I, P, P, P, I, P, P, I, P, P, P.]

Encoding a video with 512 frames on 16 processors, the total number of invocations in application code is:
  pthread_mutex_lock: 202776
  pthread_cond_broadcast: 34816
  pthread_cond_wait: 10068