Slides for Lecture 11, Part 1

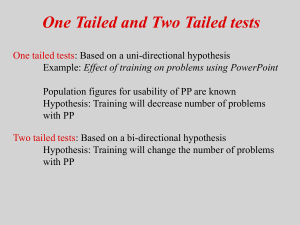

If you think you made a lot of mistakes in the survey project...

Think of how much you accomplished and the mistakes you did not make:
• Went from not knowing much about surveys to having designed, deployed, and completed one in 1 ½ months
• Actually got people to respond!
• Did not end up with 100 open-ended responses that you had to content analyze!

One-Tailed and Two-Tailed Tests

One-tailed tests: based on a uni-directional hypothesis
  • Example: effect of training on problems using PowerPoint (PP)
  • Population figures for usability of PP are known
  • Hypothesis: training will decrease the number of problems with PP

Two-tailed tests: based on a bi-directional hypothesis
  • Hypothesis: training will change the number of problems with PP

If we know the population mean

[Figure: Sampling distribution, population for usability of PowerPoint. x-axis: Mean Usability Index; y-axis: Frequency. Mean = 5.65, Std. Dev = .45, N = 10,000. Identify the rejection region for the unidirectional hypothesis (.05 level) and for the bidirectional hypothesis (.05 level).]

• What does it mean if our significance level is .05?
  • For a uni-directional hypothesis
  • For a bi-directional hypothesis

PowerPoint example:
• Unidirectional: if we set the significance level at .05,
  • 5% of the time we will find a higher mean by chance alone when there is no real difference
  • 95% of the time chance alone will not produce such a higher mean
• Bidirectional: if we set the significance level at .05,
  • 2.5% of the time we will find a higher mean by chance alone when there is no real difference
  • 2.5% of the time we will find a lower mean by chance alone
  • 95% of the time chance alone will not produce such a difference

Changing significance levels
• What happens if we relax our significance level from .01 to .05?
  • Probability of finding differences that don’t exist goes up (criterion becomes more lenient)
• What happens if we tighten our significance level from .01 to .001?
  • Probability of not finding differences that exist goes up (criterion becomes more conservative)
• PowerPoint example:
  • If we set the significance level at .05: 5% of the time we will find a difference by chance alone when none exists; 95% of the time chance alone will not produce one
  • If we set the significance level at .01: 1% of the time we will find a difference by chance alone when none exists; 99% of the time chance alone will not produce one
• For usability, if you set out to find problems, setting lenient criteria might work better (you will identify more problems)
• Effect of relaxing the significance level from .01 to .05
  • Probability of finding differences that don’t exist goes up (criterion becomes more lenient)
  • Also called Type I error (alpha)
• Effect of tightening the significance level from .01 to .001
  • Probability of not finding differences that exist goes up (criterion becomes more conservative)
  • Also called Type II error (beta)

Degrees of Freedom
• The number of independent pieces of information remaining after estimating one or more parameters
• Example: List = 1, 2, 3, 4; Average = 2.5
• For the average to remain the same, three of the numbers can be anything you want; the fourth is fixed
• New List = 1, 5, 2.5, __; Average = 2.5

Major Points
• t tests: are differences significant?
• One-sample t test: comparing one mean to a population mean
• Within-subjects test: comparing the mean in condition 1 to the mean in condition 2 for the same participants
• Between-subjects test: comparing the mean in condition 1 to the mean in condition 2 for two independent groups

Effect of training on PowerPoint use
• Does training lead to fewer problems with PP?
• 9 subjects were trained on the use of PP.
• They then designed a presentation with PP. The number of problems they had was the dependent variable (DV).

PowerPoint study data
• Number of problems per subject: 21, 24, 21, 26, 32, 27, 21, 25, 18
• Mean = 23.89
• SD = 4.20

Results of PowerPoint study
• Mean number of problems = 23.89
• Assume we know that without training the mean would be 30, but not the standard deviation
  • Population mean = 30
• Is 23.89 enough smaller than 30 to conclude that training affected results?

One sample t test (cont.)
• Assume the mean of the population is known, but the standard deviation (SD) is not
• Substitute the sample SD for the population SD when computing the standard error
• This gives you the t statistic
• Compare t to tabled values, which show critical values of t

t Test for One Mean
• Get the mean difference between the sample and population mean
• Use the sample SD as the variability metric: s = 4.20

$$t = \frac{\bar{X} - \mu}{s / \sqrt{n}} = \frac{23.89 - 30}{4.20 / \sqrt{9}} = \frac{-6.11}{1.40} = -4.36$$

Degrees of Freedom
• Skewness of the sampling distribution of the variance decreases as n increases
• t will differ from z less as sample size increases
• Therefore need to adjust t accordingly
• df = n - 1
• t based on df

Looking up critical t (Table E.6)

Two-Tailed Significance Level

| df | .10 | .05 | .02 | .01 |
|----|-------|-------|-------|-------|
| 4 | 2.132 | 2.776 | 3.747 | 4.604 |
| 5 | 2.015 | 2.571 | 3.365 | 4.032 |
| 6 | 1.943 | 2.447 | 3.143 | 3.707 |
| 7 | 1.895 | 2.365 | 2.998 | 3.499 |
| 8 | 1.860 | 2.306 | 2.896 | 3.355 |
| 9 | 1.833 | 2.262 | 2.821 | 3.250 |

Conclusions
• With n = 9, df = 8 and the critical t.05 = 2.306 (two-tailed significance)
• If |t| > 2.306, reject H0
• Here |t| = 4.36 > 2.306, so conclude that training leads to fewer problems

Factors Affecting t
• Difference between sample and population means
• Magnitude of sample variance
• Sample size

Factors Affecting Decision
• Significance level α
• One-tailed versus two-tailed test

Sampling Distribution of the Mean
• We need to know what kinds of sample means to expect if training has no effect.
  • i.e., what kinds of sample means if population mean = 23.89
  • Recall the sampling distribution of the mean.

Sampling Distribution of the Mean (cont.)
• The sampling distribution of the mean depends on
  • Mean of sampled population
  • St. dev. of sampled population
  • Size of sample

[Figure: Sampling distribution of the mean number of problems with PowerPoint use. x-axis: Mean Number of Problems; y-axis: Frequency. Mean = 23.89, Std. Dev = .45, N = 10,000.]

Sampling Distribution of the Mean (cont.)
• Shape of the sampled population
  • Sampling distribution approaches normal
  • Rate of approach depends on sample size
  • Also depends on the shape of the population distribution

Implications of the Central Limit Theorem
• Given a population with mean = $\mu$ and standard deviation = $\sigma$, the sampling distribution of the mean (the distribution of sample means) has a mean = $\mu$ and a standard deviation = $\sigma / \sqrt{n}$.
• The distribution approaches normal as n, the sample size, increases.

Demonstration
• Let the population be very skewed
• Draw samples of 3 and calculate means
• Draw samples of 10 and calculate means
• Plot means
• Note changes in means, standard deviations, and shapes

[Figure: Parent population (skewed). x-axis: X; y-axis: Frequency. Mean = 3.0, Std. Dev = 2.43, N = 10,000.]

[Figure: Sampling distribution, sample size n = 3. x-axis: Sample Mean; y-axis: Frequency. Mean = 2.99, Std. Dev = 1.40, N = 10,000.]

[Figure: Sampling distribution, sample size n = 10. x-axis: Sample Mean; y-axis: Frequency. Mean = 2.99, Std. Dev = .77, N = 10,000.]

Demonstration (cont.)
• Means have stayed at 3.00 throughout, except for minor sampling error
• Standard deviations have decreased appropriately
• Shapes have become more normal (see superimposed normal distribution for reference)

Within subjects t tests
• Related samples
• Difference scores
• t tests on difference scores
• Advantages and disadvantages

Related Samples
• The same participants give us data on two measures
  • e.g., before and after treatment
  • Usability problems before training on PP and after training
• With related samples, someone high on one measure is probably high on the other (individual variability).

Related Samples (cont.)
• Correlation between before and after scores
  • Causes a change in the statistic we can use
• Sometimes called matched samples or repeated measures

Difference Scores
• Calculate the difference between first and second score
  • e.g., Difference = Before - After
• Base subsequent analysis on difference scores
  • Ignoring Before and After data

Effect of training

| | Before | After | Diff. |
|----------|-------|-------|------|
| | 21 | 15 | 6 |
| | 24 | 15 | 9 |
| | 21 | 17 | 4 |
| | 26 | 20 | 6 |
| | 32 | 17 | 15 |
| | 27 | 20 | 7 |
| | 21 | 8 | 13 |
| | 25 | 19 | 6 |
| | 18 | 10 | 8 |
| Mean | 23.89 | 15.67 | 8.22 |
| St. Dev. | 4.20 | 4.24 | 3.60 |

Results
• The training decreased the number of problems with PowerPoint
• Was this enough of a change to be significant?
• Before and After scores are not independent.
  • See raw data
  • r = .64

Results (cont.)
• If no change, the mean of the differences should be zero
  • So, test the obtained mean of difference scores against $\mu$ = 0.
  • Use the same test as in the one sample test

t test
• $\bar{D}$ and $s_D$ = mean and standard deviation of differences.

$$t = \frac{\bar{D} - 0}{s_D / \sqrt{n}} = \frac{8.22}{3.6 / \sqrt{9}} = \frac{8.22}{1.2} = 6.85$$

• df = n - 1 = 9 - 1 = 8

t test (cont.)
• With 8 df, t.025 = ±2.306 (Table E.6)
• We calculated t = 6.85
• Since 6.85 > 2.306, reject H0
• Conclude that the mean number of problems after training was less than the mean number before training

Advantages of Related Samples
• Eliminate subject-to-subject variability
• Control for extraneous variables
• Need fewer subjects

Disadvantages of Related Samples
• Order effects
• Carry-over effects
• Subjects no longer naïve
• Change may just be a function of time
• Sometimes not logically possible

Between subjects t test
• Distribution of differences between means
• Heterogeneity of variance
• Nonnormality

PowerPoint training again
• Effect of training on problems using PowerPoint
  • Same study as before, almost
  • Now we have two independent groups
  • Trained versus untrained users
  • We want to compare the mean number of problems between groups

Effect of training

| | Before | After | Diff. |
|----------|-------|-------|------|
| | 21 | 15 | 6 |
| | 24 | 15 | 9 |
| | 21 | 17 | 4 |
| | 26 | 20 | 6 |
| | 32 | 17 | 15 |
| | 27 | 20 | 7 |
| | 21 | 8 | 13 |
| | 25 | 19 | 6 |
| | 18 | 10 | 8 |
| Mean | 23.89 | 15.67 | 8.22 |
| St. Dev. | 4.20 | 4.24 | 3.60 |

Differences from within subjects test
• Cannot compute pairwise differences, since we cannot compare two random people
• We want to test differences between the two sample means (not between a sample and population)

Analysis
• How are sample means distributed if H0 is true?
• Need the sampling distribution of differences between means
  • Same idea as before, except the statistic is $(\bar{X}_1 - \bar{X}_2)$ (mean 1 - mean 2)

Sampling Distribution of Mean Differences
• Mean of sampling distribution = $\mu_1 - \mu_2$
• Standard deviation of sampling distribution (standard error of mean differences):

$$s_{\bar{X}_1 - \bar{X}_2} = \sqrt{\frac{s_1^2}{n_1} + \frac{s_2^2}{n_2}}$$

Sampling Distribution (cont.)
• Distribution approaches normal as n increases.
• Later we will modify this to “pool” variances.

Analysis (cont.)
• Same basic formula as before, but with accommodation to 2 groups.

$$t = \frac{\bar{X}_1 - \bar{X}_2}{s_{\bar{X}_1 - \bar{X}_2}} = \frac{\bar{X}_1 - \bar{X}_2}{\sqrt{\dfrac{s_1^2}{n_1} + \dfrac{s_2^2}{n_2}}}$$

• Note parallels with earlier t

Degrees of Freedom
• Each group has 9 subjects.
• Each group has n - 1 = 9 - 1 = 8 df
• Total df = n1 - 1 + n2 - 1 = n1 + n2 - 2
  • 9 + 9 - 2 = 16 df
• t.025(16) = ±2.12 (approx.)

Conclusions
• t = 4.13
• Critical t = 2.12
• Since 4.13 > 2.12, reject H0.
• Conclude that those who get training have fewer problems than those without training

Assumptions
• Two major assumptions
  • Both groups are sampled from populations with the same variance ("homogeneity of variance")
  • Both groups are sampled from normal populations (assumption of normality)
  • The normality assumption is frequently violated with little harm.

Heterogeneous Variances
• Refers to the case of unequal population variances.
• We don’t pool the sample variances.
• We adjust df and look t up in tables for the adjusted df.
• Minimum df = smaller n - 1. Most software calculates optimal df.
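
The between-subjects calculation and the df choices above can be checked with a short sketch. It uses only the Python standard library and the scores from the slides, treated as two independent groups. The function name `independent_t` and the variable names are illustrative, not part of the lecture. The Welch-Satterthwaite formula for the adjusted df is an assumption: it is one common approximation that software reports, and the slides do not say which adjustment they have in mind.

```python
import math
import statistics

# Scores from the slides, treated here as two independent groups.
untrained = [21, 24, 21, 26, 32, 27, 21, 25, 18]
trained = [15, 15, 17, 20, 17, 20, 8, 19, 10]


def independent_t(group1, group2):
    """Return t and three candidate df values for two independent groups.

    t uses the unpooled standard error sqrt(s1^2/n1 + s2^2/n2).
    """
    n1, n2 = len(group1), len(group2)
    v1 = statistics.variance(group1)  # sample variance, n - 1 denominator
    v2 = statistics.variance(group2)
    se = math.sqrt(v1 / n1 + v2 / n2)
    t = (statistics.mean(group1) - statistics.mean(group2)) / se

    df_equal_var = n1 + n2 - 2    # when homogeneity of variance holds
    df_minimum = min(n1, n2) - 1  # conservative lower bound: smaller n - 1
    # Assumption: Welch-Satterthwaite approximation for the adjusted df.
    df_welch = (v1 / n1 + v2 / n2) ** 2 / (
        (v1 / n1) ** 2 / (n1 - 1) + (v2 / n2) ** 2 / (n2 - 1)
    )
    return t, df_equal_var, df_minimum, df_welch


t, df_equal_var, df_minimum, df_welch = independent_t(untrained, trained)
print(f"t = {t:.2f}")
print(f"df (equal variances) = {df_equal_var}")
print(f"df (minimum) = {df_minimum}")
print(f"df (Welch approximation) = {df_welch:.2f}")
```

Because the two sample variances here are nearly equal and the groups are the same size, the adjusted df comes out very close to n1 + n2 - 2, so the conclusion does not depend on which df is used. With clearly unequal variances or group sizes, the adjusted df falls somewhere between the minimum and n1 + n2 - 2.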