Evolutionary Computation II: General Methods and Theory

advertisement

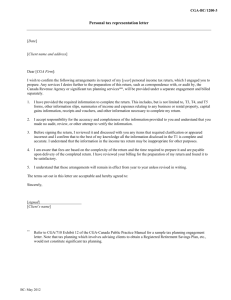

Slides for Introduction to Stochastic Search and Optimization (ISSO) by J. C. Spall
CHAPTER 10
EVOLUTIONARY COMPUTATION II: GENERAL METHODS AND THEORY

• Organization of chapter in ISSO
  – Introduction
  – Evolution strategy and evolutionary programming; comparisons with GAs
  – Schema theory for GAs
  – What makes a problem hard?
  – Convergence theory
  – No free lunch theorems

Methods of EC
• Genetic algorithms (GAs), evolution strategy (ES), and evolutionary programming (EP) are the most common EC methods
• Many modern EC implementations borrow aspects from one or more EC methods
• Generally, ES is used for function optimization and EP for AI applications such as automatic programming

ES Algorithm with Noise-Free Loss Measurements
Step 0 (initialization) Randomly or deterministically generate an initial population of N values of $\theta$ and evaluate L for each of the values.
Step 1 (offspring) Generate $\lambda$ offspring from the current population of N candidate values such that all $\lambda$ values satisfy direct or indirect constraints on $\theta$.
Step 2 (selection) For $(N + \lambda)$-ES, select the N best values from the combined population of N original values plus $\lambda$ offspring; for $(N, \lambda)$-ES, select the N best values from the population of $\lambda > N$ offspring only.
Step 3 (repeat or terminate) Repeat Steps 1 and 2 or terminate.

Schema Theory for GAs
• Key innovation in Holland (1975) is a form of theoretical foundation for GAs based on schemas
  – Represents the first attempt at serious theoretical analysis
  – But not entirely successful, as a “leap of faith” is required to relate schema theory to actual convergence of the GA
• “GAs work by discovering, emphasizing, and recombining good ‘building blocks’ of solutions in a highly parallel fashion.” (Melanie Mitchell, An Introduction to Genetic Algorithms [p. 27], 1996, paraphrasing John Holland)
  – Statement above is more intuitive than formal
  – Notion of building block is characterized via schemas
  – Schemas are propagated or destroyed according to the laws of probability

Schema Theory for GAs (cont’d)
• A schema is a template for chromosomes in GAs
• Example: [* 1 0 * * * * 1], where the * symbol represents a don’t care (or free) element
  – [1 1 0 0 1 1 0 1] is a specific instance of this schema
• Schemas are sometimes called the building blocks of GAs
• Two fundamental results: the schema theorem and implicit parallelism
• Schema theorem says that better templates dominate the population as generations proceed
• Implicit parallelism says that a GA processes $\gg N$ schemas at each iteration
• Schema theory is controversial
  – Not connected to algorithm performance in the same direct way as the usual convergence theory for the iterates of an algorithm

Convergence Theory via Markov Chains
• Schema theory inadequate
  – Mathematics behind schema theory not fully rigorous
  – Unjustified claims about implications of schema theory
• More rigorous convergence theory exists
  – Pertains to noise-free loss (fitness) measurements
  – Pertains to finite representation (e.g., bit coding or floating-point representation on a digital computer)
• Convergence theory relies on Markov chains
• Each state in the chain represents a possible population
• Markov transition matrix P contains all information for Markov chain analysis

GA Markov Chain Model
• GAs with binary bit coding can be modeled as (discrete-state) Markov chains
• Recall that states in the chain represent possible populations
• The ith element of probability vector $p_k$ represents the probability of achieving the ith population at iteration k
• Transition matrix: the (i, j) element of P represents the probability of population i producing population j through the selection, crossover, and mutation operations
  – Depends on loss (fitness) function, selection method, and reproduction and mutation parameters
• Given transition matrix P, it is known that
  $p_{k+1}^T = p_k^T P$

Rudolph (1994) and Markov Chain Analysis for Canonical GA
• Rudolph (1994, IEEE Trans. Neural Nets.) uses Markov chain analysis to study the “canonical GA” (CGA)
• CGA includes binary bit coding, crossover, mutation, and “roulette wheel” selection
  – CGA is the focus of the seminal book, Holland (1975)
• CGA does not include elitism; the lack of elitism is a critical aspect of the theoretical analysis
• CGA assumes mutation probability $0 < P_m < 1$ and single-point crossover probability $0 \le P_c \le 1$
• Key preliminary result: CGA is an ergodic Markov chain:
  – There exists a unique limiting distribution for the states of the chain
  – Nonzero probability of being in any state regardless of initial condition

Rudolph (1994) and Markov Chain Analysis for CGA (cont’d)
• Ergodicity for CGA provides a negative result on convergence in Rudolph (1994)
• Let $\hat L_{\min,k}$ denote the lowest of the N (= population size) loss values within the population at iteration k
  – $\hat L_{\min,k}$ represents the loss value for the $\theta$ in population k that has the maximum fitness value
• Main theorem: CGA satisfies
  $\lim_{k \to \infty} P\big(\hat L_{\min,k} = L(\theta^*)\big) < 1$
  (the limit on the left-hand side exists by ergodicity)
• Implies CGA does not converge to the global optimum

Rudolph (1994) and Markov Chain Analysis for CGA (cont’d)
• Fundamental problem with CGA is that optimal solutions are found but then lost
• CGA has no mechanism for retaining the optimal solution
• Rudolph discusses a modification to CGA yielding positive convergence results
• Appends a “super individual” to each population
  – Super individual represents the best chromosome so far
  – Not eligible for GA operations (selection, crossover, mutation)
  – Not the same as elitism
• CGA with added super individual converges in probability

Contrast of Suzuki (1995) and Rudolph (1994) in Markov Chain Analysis for GA
• Suzuki (1995, IEEE Trans. Systems, Man, and Cyber.) uses Markov chain analysis to study a GA with elitism
  – Same as CGA of Rudolph (1994) except for elitism
• Suzuki (1995) only considers unique states (populations)
  – Rudolph (1994) includes redundant states
• With N = population size and B = no. of bits/chromosome:
  $\binom{N + 2^B - 1}{N} = \frac{(N + 2^B - 1)!}{(2^B - 1)!\,N!}$ unique states in Suzuki (1995),
  $2^{NB}$ states in Rudolph (1994) (much larger than the number of unique states above)
• Above affects bookkeeping; does not fundamentally change the relative results of Suzuki (1995) and Rudolph (1994)

Convergence Under Elitism
• In both the CGA case (Rudolph, 1994) and the case with elitism (Suzuki, 1995) the limit $\bar p$ exists:
  $\bar p^T = \lim_{k \to \infty} p_0^T P^k$
  (dimension of $\bar p$ differs according to the definition of states, unique or nonunique, as in the state counts of Suzuki (1995) and Rudolph (1994) above)
• Suzuki (1995) assumes each population includes one elite element and that crossover probability $P_c = 1$
• Let $\bar p_j$ represent the jth element of $\bar p$, and let J represent the indices j where population j includes a chromosome achieving $L(\theta^*)$
• Then from Suzuki (1995): $\sum_{j \in J} \bar p_j = 1$
• Implies GA with elitism converges in probability to the set of optima

Calculation of Stationary Distribution
• Markov chain theory provides a useful conceptual device
• Practical calculation is difficult due to explosive growth in the number of possible populations (states)
• Growth is in terms of factorials of N and bit string length (B)
• Practical calculation of $p_k$ is usually impossible due to the difficulty in getting P
• Transition matrix can be very large in practice
  – E.g., if N = B = 6, P is a $10^8 \times 10^8$ matrix!
  – Real problems have N and B much larger than 6
• Ongoing work attempts to reduce the dimension drastically by limiting states to only the most important ones (e.g., Spears, 1999; Moey and Rowe, 2004)

Example 10.2 from ISSO: Markov Chain Calculations for Small-Scale Implementation
• Consider L(θ) = … sin …, Θ = [0, 15]
• Function has a local and a global minimum; plot on next slide
• Several GA implementations with very small population sizes (N) and numbers of bits (B)
• Small-scale implementations imply Markov transition matrices are computable
  – But still not trivial, as matrix dimensions range from approximately 2000 × 2000 to 4000 × 4000

Loss Function for Example 10.2 in ISSO
[Figure: plot of the loss function for Example 10.2]
Markov chain theory provides the probability of finding the solution (θ = 15) in a given number of iterations

Example 10.2 (cont’d): Probability Calculations for Very Small-Scale GAs
Probability that GA with elitism produces a population containing the optimal solution
(Pc = crossover probability, Pm = mutation probability, N = population size, B = bit length)

GA iteration                       0     5     10    20    30    40    50    100   150
Pc = 1.0, Pm = 0.05, N = 2, B = 6  0.03  0.08  0.15  0.32  0.48  0.62  0.74  0.97  1.00
Pc = 1.0, Pm = 0.05, N = 4, B = 4  0.21  0.51  0.69  0.92  1.00  --    --    --    --
Pc = 1.0, Pm = 0.05, N = 2, B = 4  0.12  0.23  0.34  0.55  0.75  0.93  1.00  --    --

Summary of GA Convergence Theory
• Schema theory (Holland, 1975) was the most popular method for theoretical analysis until approximately the mid-1990s
  – Schema theory not fully rigorous and not fully connected to actual algorithm performance
• Markov chain theory provides a more formal means of convergence (and convergence rate) analysis
• Rudolph (1994) used Markov chains to provide a largely negative result on convergence for canonical GAs
  – Canonical GA does not converge to the optimum
• Suzuki (1995) considered GAs with elitism; unlike in Rudolph (1994), the GA is now convergent
• Challenges exist in the practical calculation of the Markov transition matrix

No Free Lunch Theorems (Reprise, Chap. 1)
• No free lunch (NFL) theorems apply to EC algorithms
  – Theorems imply there can be no universally efficient EC algorithm
  – Performance of one algorithm when averaged over all problems is identical to that of any other algorithm
• Suppose EC algorithm A is applied to loss L
  – Let $\hat L_n$ denote the lowest loss value from the most recent N population elements after $n \ge N$ unique function evaluations
• Consider the probability that $\hat L_n = \ell$, for a given loss value $\ell$, after n unique evaluations of the loss:
  $P(\hat L_n = \ell \mid L, A)$
• NFL theorems state that the sum of the above probabilities over all loss functions is independent of A

Comparison of Algorithms for Stochastic Optimization in Chaps. 2–10 of ISSO
• Table on next slide is a rough summary of the relative merits of several algorithms for stochastic optimization
  – Comparisons based on semi-subjective impressions from numerical experience (author and others) and theoretical or analytical evidence
  – NFL theorems are not generally relevant, as only “typical” problems of interest are considered, not all possible problems
• Table does not consider root-finding per se
• Table is for “basic” implementation forms of algorithms
• Ratings are L (low), ML (medium-low), M (medium), MH (medium-high), and H (high)
  – These scales are for the stochastic optimization setting and have no meaning relative to classical deterministic methods

Comparison of Algorithms
Rand. search RLS Stoch SPSA grad. (basic) Ease of implementation H MH M Efficiency in high dimen. M (algs. H H ASP SAN GA M M M ML MH MH MH M Highly variable L M MH M MH MH H N/A ML MH ML MH MH ML MH H H M ML ML B&C) Generality of loss fn. Global optimization Handles noise in loss/gradient Real-time applications Theoretical foundation L H H MH M ML L MH H H H H MH MH Sexiness L M M M M MH H
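The ES steps listed under “ES Algorithm with Noise-Free Loss Measurements” (Steps 0 to 3) can be illustrated with a minimal Python sketch for a scalar θ. Everything beyond those steps is an assumption of this sketch, not part of the slides: Gaussian mutation with a fixed step size `sigma`, parents chosen uniformly at random, clipping to `[lower, upper]` as the constraint on θ, a fixed iteration budget as the stopping rule, and the hypothetical function name `evolution_strategy`.

```python
import random
from typing import Callable, List


def evolution_strategy(loss: Callable[[float], float], n: int, lam: int,
                       plus: bool, sigma: float, lower: float, upper: float,
                       iterations: int, seed: int = 0) -> List[float]:
    """Sketch of (N + lambda)-ES (plus=True) or (N, lambda)-ES (plus=False)
    for a scalar theta constrained to [lower, upper].

    Assumptions (not from the slides): Gaussian mutation with fixed step
    size sigma, uniform parent choice, clipping to enforce the constraint,
    and a fixed iteration budget as the stopping rule.
    """
    if not plus and lam <= n:
        raise ValueError("(N, lambda)-ES requires lambda > N")
    rng = random.Random(seed)
    # Step 0: random initial population of N values of theta.
    population = [rng.uniform(lower, upper) for _ in range(n)]
    for _ in range(iterations):
        # Step 1: lambda offspring, each a mutated copy of a random parent,
        # clipped so every offspring satisfies the constraint on theta.
        offspring = [
            min(upper, max(lower, rng.choice(population) + rng.gauss(0.0, sigma)))
            for _ in range(lam)
        ]
        # Step 2: (N + lambda) keeps the N best of parents plus offspring;
        # (N, lambda) keeps the N best of the offspring only.
        pool = population + offspring if plus else offspring
        population = sorted(pool, key=loss)[:n]
    # Step 3: terminate after the fixed budget; the best value comes first.
    return population


if __name__ == "__main__":
    def quadratic(t: float) -> float:  # hypothetical test loss, minimum at 2
        return (t - 2.0) ** 2

    for plus in (True, False):
        best = evolution_strategy(quadratic, n=5, lam=20, plus=plus, sigma=0.5,
                                  lower=-10.0, upper=10.0, iterations=100)[0]
        print("(N + lambda)" if plus else "(N, lambda)", round(best, 3))
```

The only difference between the two variants is the selection pool in Step 2: the plus form can never lose its best value, while the comma form discards all parents every iteration.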
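The schema example under “Schema Theory for GAs” can be checked mechanically. The Python sketch below (function names are hypothetical) tests whether a bit string is an instance of a schema and lists every schema a chromosome belongs to. Each position either keeps the chromosome’s bit or becomes `*`, so a B-bit chromosome is an instance of 2^B schemas, and a population of N chromosomes samples between 2^B and N·2^B distinct schemas. That count is the intuition behind the implicit-parallelism claim that a GA processes ≫ N schemas per iteration.

```python
from itertools import product
from typing import List


def is_instance(schema: str, chromosome: str) -> bool:
    """True if the bit string matches the schema; '*' is a don't-care element."""
    return len(schema) == len(chromosome) and all(
        s == "*" or s == c for s, c in zip(schema, chromosome)
    )


def schemas_containing(chromosome: str) -> List[str]:
    """Every schema the chromosome is an instance of: each position either
    keeps the chromosome's bit or becomes '*', giving 2**B schemas."""
    return ["".join(p) for p in product(*[(bit, "*") for bit in chromosome])]


if __name__ == "__main__":
    print(is_instance("*10****1", "11001101"))   # True (slide example)
    print(len(schemas_containing("11001101")))   # 256 = 2**8
```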
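The recursion $p_{k+1}^T = p_k^T P$ and the limit $\bar p^T = \lim_{k \to \infty} p_0^T P^k$ from the Markov chain slides can be made concrete on a toy chain. The 3-state matrices below are hypothetical, chosen only to mimic the two cases: state 2 stands for “population contains an optimum.” In `P_ergodic` every entry is positive, so (as for the CGA) the limit is unique and leaves positive probability outside state 2. In `P_elitist` state 2 is absorbing, as when elitism keeps the best chromosome, so the limiting probability of state 2 is 1. This is a pure-Python sketch; real GA chains are far too large to handle this way.

```python
from typing import List

Matrix = List[List[float]]


def step(p: List[float], P: Matrix) -> List[float]:
    """One iteration of p_{k+1}^T = p_k^T P (P is row-stochastic)."""
    n = len(p)
    return [sum(p[i] * P[i][j] for i in range(n)) for j in range(n)]


def propagate(p0: List[float], P: Matrix, k: int) -> List[float]:
    """Return p_k^T = p_0^T P^k by applying `step` k times."""
    p = list(p0)
    for _ in range(k):
        p = step(p, P)
    return p


# Hypothetical 3-state chains; state 2 means "population contains an optimum".
# All entries positive -> ergodic, like the CGA: optimum can be found and lost.
P_ergodic = [[0.5, 0.4, 0.1],
             [0.3, 0.5, 0.2],
             [0.2, 0.3, 0.5]]
# State 2 is absorbing: once the optimum is in the population it is kept.
P_elitist = [[0.5, 0.4, 0.1],
             [0.3, 0.5, 0.2],
             [0.0, 0.0, 1.0]]

if __name__ == "__main__":
    for name, P in (("ergodic", P_ergodic), ("elitist", P_elitist)):
        p_bar = propagate([1.0, 0.0, 0.0], P, 200)
        print(name, [round(x, 4) for x in p_bar])
```

For the ergodic chain, starting from any initial distribution gives the same limit, which is the “regardless of initial condition” property used by Rudolph (1994).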
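The state counts on the Suzuki/Rudolph slide can be evaluated directly with `math.comb` (Python 3.8+); the helper names are hypothetical. For N = B = 6 the unique-state count is 119,877,472, roughly $10^8$, matching the “$10^8 \times 10^8$” transition matrix quoted above, while $2^{NB} = 2^{36}$ is about $6.9 \times 10^{10}$. As an added assumption of this sketch (not stated on the slides), if the initial population is drawn uniformly over the unique states, the chance that it already contains one particular chromosome simplifies to $N/(N + 2^B - 1)$. For the three Example 10.2 settings this gives 0.03, 0.21, and 0.12 to two decimals, the same as the iteration-0 column of the table.

```python
from math import comb


def unique_states(n: int, b: int) -> int:
    """Distinct populations of size N over 2**B chromosomes (multisets),
    i.e. the unique-state count attributed to Suzuki (1995)."""
    return comb(n + 2 ** b - 1, n)


def ordered_states(n: int, b: int) -> int:
    """States when populations are ordered N-tuples of B-bit strings,
    i.e. the 2**(N*B) count attributed to Rudolph (1994)."""
    return 2 ** (n * b)


def p0_contains(n: int, b: int) -> float:
    """Probability that a population drawn uniformly over the unique states
    contains one particular chromosome; equals N / (N + 2**B - 1).
    The uniform initial distribution is an assumption of this sketch."""
    return comb(n - 1 + 2 ** b - 1, n - 1) / unique_states(n, b)


if __name__ == "__main__":
    print(unique_states(6, 6), ordered_states(6, 6))  # about 1.2e8 vs 6.9e10
    for n, b in ((2, 6), (4, 4), (2, 4)):
        print(n, b, unique_states(n, b), round(p0_contains(n, b), 2))
```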
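The contrast between Rudolph’s CGA (optimal solutions “found but then lost”) and Suzuki’s GA with elitism can be seen in a small simulation. The sketch below is a hypothetical illustration, not code from either paper: roulette-wheel selection, single-point crossover with probability `pc`, and bitwise mutation with probability `pm`, plus an optional single elite element copied into the next population (mirroring Suzuki’s one-elite assumption; `pc=1.0` in the usage matches his $P_c = 1$). It is plain elitism, not Rudolph’s super individual, which is kept outside the GA operations. Fitness is maximized and must be positive for roulette-wheel selection; the fitness function in the usage is hypothetical.

```python
import random
from typing import Callable, List

Chromosome = List[int]


def run_ga(fitness: Callable[[Chromosome], float], b: int, n: int, pc: float,
           pm: float, generations: int, elitism: bool, seed: int = 0) -> List[float]:
    """Canonical-style GA sketch; returns the best fitness of each new population.
    With elitism=True the previous best chromosome replaces one child."""
    rng = random.Random(seed)
    pop = [[rng.randint(0, 1) for _ in range(b)] for _ in range(n)]
    history = []
    for _ in range(generations):
        fits = [fitness(c) for c in pop]
        elite = list(pop[max(range(n), key=fits.__getitem__)])
        parents = rng.choices(pop, weights=fits, k=n)  # roulette wheel
        children = []
        for i in range(0, n, 2):
            x, y = list(parents[i]), list(parents[(i + 1) % n])
            if rng.random() < pc:  # single-point crossover
                cut = rng.randint(1, b - 1)
                x[cut:], y[cut:] = y[cut:], x[cut:]
            children += [x, y]
        children = children[:n]
        for child in children:  # bitwise mutation
            for j in range(b):
                if rng.random() < pm:
                    child[j] ^= 1
        if elitism:
            children[0] = elite  # one elite element survives unchanged
        pop = children
        history.append(max(fitness(c) for c in pop))
    return history


if __name__ == "__main__":
    def ones_plus_one(c: Chromosome) -> float:  # hypothetical fitness, always > 0
        return 1.0 + sum(c)

    for elitism in (False, True):
        h = run_ga(ones_plus_one, b=8, n=4, pc=1.0, pm=0.05,
                   generations=30, elitism=elitism)
        print("elitism" if elitism else "CGA", h)
```

With elitism the recorded best fitness can never decrease; without it, the best fitness may drop from one generation to the next, which is the behavior behind Rudolph’s negative result.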