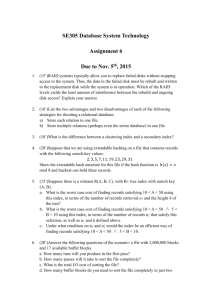

20141001-CONS5773-OOWstorage-04 - Indico


Oracle OpenWorld 2014, October 1st 2014
Eric Grancher, head of database services group, CERN-IT
Video screen capture at https://indico.cern.ch/event/344531/
CERN DB blog: http://cern.ch/db-blog/

Storage, why does it matter?
• Database Concepts 12c Release 1 (12.1): “An essential task of a relational database is data storage.”
• Performance (even if the DB were entirely in memory, commits are synchronous operations)
• Availability: many (most?) recoveries are due to failing IO subsystems
• Most of the architecture can fail, storage must not!
• Fantastic evolution in recent years, more to come!

Outlook
• Oracle IOs
• Technologies
• Planning
• Lessons from experience / failure
• Capturing information

Understand DB IO patterns (1/2)
• You do not know what you cannot measure (24x7!)
• Oracle DB does different types of IO
• Many OS tools help to understand them
• Oracle DB provides timing and statistics information about the IO operations (as seen from the DB)

Understand DB IO patterns (2/2)
• snapper (Tanel Poder)
• strace (Linux) / truss (Solaris)
• perf / gdb (see Frits Hoogland’s blog)

Overload at CPU level (1/2)
• Observed many times: “the storage is slow” (while storage administrators/specialists say “storage is fine / not loaded”)
• Typically, the IO wait times observed from the Oracle RDBMS point of view are long when the CPU load is high
• Instrumentation / on-off CPU

Overload at CPU level (2/2)
[Figure: Oracle and OS timelines of an IO between t1 and t2, at acceptable load and at high load; at high load, off-CPU time is added to the IO time seen by Oracle]

Technology
• Rotating disk
• Flash based devices
• Local and shared storage
• Functionality

Rotating disk (1/2)
• Highest capacity / cost
[Figure credits: (WLCG) Computing Model update; Wikipedia]

Rotating disk (2/2)
• Well known technology… but complex
[Figure credit: Computer Architecture: A Quantitative Approach]

Flash memory / NAND (1/2)
• Block erasure (128kB)
• Memory wear
[Figure credits: Intel (2x); Wikipedia]

Flash memory / NAND (2/2)
• Multi-level (MLC) and single-level (SLC) cells, even TLC
• Low latency, 4kB: read in 0.02ms, write in 0.2ms
• Complex algorithms to improve write performance, use of over-provisioning
• Enormous differences between devices

Local versus shared (1/2)
• Locally attached storage
• Remotely attached storage (NFS, FC)
• Real Application Cluster
[Figure credits: Broadberry; Oracle]

Local versus shared (2/2)
• Serial ATA (SATA):
  • Current: SATA 3.0, 6 Gb/s, half duplex, 550 MB/s
  • SATA Express: 2 PCIe 3.0 lanes -> 1969 MB/s
• PCI Express: 1.5 GB/s (PCI-Express 2.0 x4)
• Serial Attached SCSI (SAS): 6/12 Gb/s, multiple initiators, full duplex
• NFS: 10GbE
• FC: 16 Gb/s: 1.6GB/s (+ duplex)
• Infiniband: QDR 4x 40Gb/s

Bandwidth (1/2)
• How many disks are needed to fill a 16GFC channel?
  • Each spinning disk (15k RPM) at 170 MB/s: 1.6GB/s / 170 MB/s ~= 9.4
  • Each SATA 3.0 flash disk at 550 MB/s: 1.6GB/s / 550 MB/s ~= 2.9
  • Link aggregation!
[References / credits: Tom's Hardware; Wikipedia]

Bandwidth (2/2)
• Only a few disks saturate storage networking, so use
  • either many independent sources and ports on the server,
  • or (FC) multipathing,
  • or Direct NFS link aggregation
• PCI Express (local)
• Balanced systems in terms of storage networking require good planning

Queuing / higher load
[Figure]

Writeback / memory caching
• Major gain (x ms to <1ms), but it requires solid execution
• 9 August 2010
  • Power stop
  • No Battery Backup Unit and write-back enabled
  • Database corruption, 2 minutes data loss
• 18 August 2010
  • Disk failure
  • Double controller failures (write-back)
  • Database corruption
• 1 September 2010
  • Planned intervention, clean database stop
  • Power stop (write-back data has not been flushed)
  • Database corruption including backup on disk

Functionality
• Thin provisioning (ex: db1 5TB in a 15TB volume)
• Snapshot at the storage level, restore at the storage level (ex: 10TB in 15s)
• Cloning (*) at the storage level
(*) Integration with Oracle Multitenant

ASM, (cluster/network) file system
• [754305.1] 11.2 DBCA does not support raw devices anymore
• [12.1 doc] Raw devices have been deprecated and desupported in 12.1
• Local filesystem; cluster filesystem and NFS for RAC
• ASM

Filesystem
• FILESYSTEMIO_OPTIONS=SETALL enables direct IO and asynchronous IO
• NFS with Direct NFS
• Cluster filesystem (example: ACFS)
• Advanced features like snapshots
• Important to do regular de-fragmentation if there is some sort of copy-on-write
• Simplicity

ASM
• ASMLib (/dev/oracleasm): controversial (ASMLib in RHEL6)
• ASM filter driver -> AFD:* (/dev/oracleafd/)
• ACFS: interesting, many use cases (logs in databases, for example)
• Setup has evolved at CERN:
  • PowerPath / EMC
  • RHEL3: Qlogic driver
  • RHEL4: dm + chmod
  • RHEL5/6: udev (permissions) and dm (multipathing)

Planning
• If random IOs are crucial: “IOPS for sale, capacity for free” (Jeffrey Steiner)
• Read: for IOPS, for bandwidth
• Write: log/redo and DB writer
• Identify capacity, latency, bandwidth and functionality needs
• Validate with reference workloads (SLOB, fio) and with your own workload (Real Application Testing)

Real Application Testing
[Figure: Capture on the original system, Replay on the target system]

Disks (1/5)
• Disks are not reliable (any vendor, enterprise or not...): “there are only two types of disk drives in the industry. Drives that have failed, and drives that are about to fail” (Jeff Bonwick)
• RAID 4/5 gives a false sense of reliability (see next slide)
• Experience: datafile not reachable anymore, disk array double error, (smart) move to local disk, 3rd disk failure, major recovery, bandwidth issue, 4th disk failure...
Post-mortem

Disks (2/5)
[Figure]

Disks (3/5), lessons
• Monitoring storage is crucial
• Regular media checks are very important (will data that is not read on a regular basis be readable when needed?)
  • Parity drive / parity blocks
  • ASM partner extent: Note:416046.1 “A corruption in the secondary extent will normally only be seen if the block in the primary extent is also corrupt. ASM fixes corrupt blocks in secondary extent automatically at next write of the block.” (if it is over-written before it is needed); >=11.1 preferred read failure group before RMAN full backup; >=12.1 ASM diskgroup scrubbing (ALTER DISKGROUP data SCRUB)
• Double parity / triple mirroring
[Figure: extents 1 to 6 and their mirror copies on two disks (1* – 3 – 5* / 2 – 4* – 6 and 1 – 3* – 5 / 2* – 4 – 6*); * = primary extent]

Disks (4/5)
• Disks are larger and larger:
  • speed stays ~constant -> issue with speed
  • bit error rate stays constant (10^-14 to 10^-16) -> increasing issue with availability
• With x as the size and α the “bit error rate” [formula shown as an image on the slide]

Disks, redundancy comparison (5/5)
(n = number of data disks)

| Drive | Bit error rate | RAID 1 | RAID 5 (n+1), n = 5 / 14 / 28 | ~RAID 6 (n+2), n = 5 / 14 / 28 | ~Triple mirror |
|---|---|---|---|---|---|
| 1 TB SATA desktop | 10^-14 | 7.68E-02 | 3.29E-01 / 6.73E-01 / 8.93E-01 | 1.60E-14 / 1.46E-13 / 6.05E-13 | 8.00E-16 |
| 1TB SATA enterprise | 10^-15 | 7.96E-03 | 3.92E-02 / 1.06E-01 / 2.01E-01 | 1.60E-16 / 1.46E-15 / 6.05E-15 | 8.00E-18 |
| 450GB FC | 10^-16 | 4.00E-04 | 2.00E-03 / 5.58E-03 / 1.11E-02 | 7.20E-19 / 6.55E-18 / 2.72E-17 | 3.60E-20 |
| 10TB SATA enterprise | 10^-15 | 7.68E-02 | 3.29E-01 / 6.73E-01 / 8.93E-01 | 1.60E-15 / 1.46E-14 / 6.05E-14 | 8.00E-17 |

Upgrades (1/3)
• “If it is working, you should not change it!”…
• But changes often come in through the back door (disk replacement, IPv6 enablement on the network, “just a new volume”, etc.)
• Example:

Upgrades (2/3)
• SunCluster, new storage (3510) introduced, 1 LUN ok
• Second LUN introduced (April), all fine
• Standard maintenance operation (July), cluster does not restart
• Multipathing: DMP / MPxIO, cluster SCSI reservations on the shared disks

Upgrades (3/3)
• 23 hours downtime in a critical path to LHC magnets testing
• Some production with reduced HW availability
• Important stress...
• An up-to-date multipathing layer would have avoided it (or no change)

Measure
• “You can't manage what you don't measure”
• The Oracle DB has wait information (v$session, v$event_histogram)
• AWR / statspack: set the retention
• It has additional information, ready to be used…

AWR history
[Figure: AWR history]

Slow IO is different from IO outliers
• IO tests and planning: SLOB, fio, etc. help to size
• Latency variation is the user experience (C. Millsap)
  • 1, 19, 0, 20, 10: average 10
  • 9, 11, 10, 9.5, 10.5: average 10
  • 10.01, 9.99, 10, 10.1, 9.9: average 10
  • 10.01, 9.99, 10.01, 9.99, 500, 10.01, 9.99: average 80, median ~10
• Ex: web page: 5 SQL statements, 10 IOs each
  • 50 IOs at 0.2 ms = 10 ms
  • 50 IOs at 300 ms = 15000 ms = 15 s

Pathological cases, latency
• Spinning disk latency O(10ms), Flash O(0.1ms)
• Is it always in this order of magnitude? If not, you should know, with detailed and timed(*) information.
• Reasons include bugs, disk failures, temporary or global overload, etc.
(*) correlation with other sources (OS logs, storage sub-system logs, ASM logs, etc.)
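The “Slow IO is different from IO outliers” slide argues that an average hides what the user experiences. A minimal Python sketch makes this concrete by summarizing the slide's series with spread, median and worst case instead of the mean alone. The names `SERIES`, `percentile`, `summarize` and `meets_targets` are hypothetical, the slide gives no unit (milliseconds are assumed), and the nearest-rank percentile is one of several common definitions. `meets_targets` expresses the “99% below X, 99.99% below Y, 100% below Z” style of target from the “Low latency computing” slide.

```python
import math
import statistics

# Latency series from the "Slow IO is different from IO outliers" slide.
# The slide gives no unit; milliseconds are an assumption.
SERIES = {
    "A": [1, 19, 0, 20, 10],
    "B": [9, 11, 10, 9.5, 10.5],
    "C": [10.01, 9.99, 10, 10.1, 9.9],
    "D": [10.01, 9.99, 10.01, 9.99, 500, 10.01, 9.99],
}


def percentile(values, pct):
    """Nearest-rank percentile: smallest sample with at least pct% of samples <= it."""
    ordered = sorted(values)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]


def summarize(values):
    """Report spread, median and worst case alongside the mean."""
    return {
        "mean": statistics.mean(values),
        "stdev": statistics.pstdev(values),
        "p50": percentile(values, 50),
        "max": max(values),
    }


def meets_targets(values, targets):
    """targets maps a percentile to a latency bound, e.g. {99: X, 99.99: Y, 100: Z}."""
    return all(percentile(values, pct) <= bound for pct, bound in targets.items())


if __name__ == "__main__":
    for name, values in SERIES.items():
        summary = summarize(values)
        print(name, {key: round(val, 2) for key, val in summary.items()})

    # Web page example: 5 SQL statements x 10 IOs each = 50 synchronous IOs.
    ios = 5 * 10
    print(f"{ios * 0.2:.1f} ms at 0.2 ms per IO, {ios * 300 / 1000:.1f} s at 300 ms per IO")
```

Series A, B and C all average 10 but differ widely in standard deviation; series D, as written, averages 80 while its median is 10.01, and a single outlier is enough to break a 99th-percentile target.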
Complementing AWR for IO
• AWR captures histogram information, not individual IOs
• AWR does not capture information on ADG (Active Data Guard)
• In addition, it is desirable to extract information:
  • over the longer term (across migrations, upgrades, capacity planning)
  • with timing: slow IO operations from ASH

Capture Active Session History long IO
[Figure: ASH samples over time for sessions 1 to 5 and lgwr, in states such as direct path read, db file sequential read, log file parallel write and ON CPU; relative wait times are indicative only]

ASH long IO repository
• Stores information about long(*) IO operations
• Query it to identify major issues
• Correlate with histograms and total IO operations
(*) longer than expected: >1s, >100ms, >10ms

“Low latency computing” (from Kevin Closson)
• Needed: specify storage with latency targets:
  • with a specified workload,
  • 99% of IO operations take less than X
  • 99.99% of IO operations take less than Y
  • 100% of IO operations take less than Z

Conclusion
• Critical: availability, performance and functionality
• Performance:
  • Spinning disk: IOPS matter, capacity “for free”
  • Flash: “low latency computing”, bandwidth, especially in the storage network
  • By the way, absorbing a lot of IO requires CPU
  • Planning: SLOB, fio, Real Application Testing
• High availability:
  • “HA is low technology” (Carel-Jan Engel) = complexity is the enemy of high availability
  • Mirror / triple mirror / triple parity
  • Some sort of scrubbing is essential
  • (N)FS and ASM are both solid and capable; the choice depends on what is simpler and best known in your organisation
  • RAC adds a requirement for shared storage; be careful that the storage interconnect is not a bottleneck
• Latency is complex: measure and keep long-term statistics (AWR retention, data extraction, data visualization); it is a key differentiator between Flash solutions
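The formula behind the “Disks, redundancy comparison (5/5)” table, which supports the mirror / triple mirror / triple parity recommendation, did not survive extraction. The Python sketch below is a reconstruction, not the slide's original formula. With x the drive size in bits and α the bit error rate, it computes the probability of hitting an unreadable bit while rebuilding after one disk failure: 1 - (1 - α)^x for RAID 1 and 1 - (1 - α)^(n·x) for RAID 5 (n+1). The RAID 6 and triple mirror expressions, n(n-1)·x·α² and x·α², are fits that reproduce the table's values rather than derivations from the talk. The function names, `BITS_PER_TB` (1 TB = 10^12 bytes) and `CASES` are hypothetical.

```python
import math

BITS_PER_TB = 8e12  # assumption: 1 TB = 10**12 bytes = 8 * 10**12 bits


def p_raid1(x, alpha):
    """RAID 1 rebuild reads the single surviving copy: 1 - (1 - alpha)**x."""
    # expm1/log1p keep precision when alpha is as small as 1e-16.
    return -math.expm1(x * math.log1p(-alpha))


def p_raid5(n, x, alpha):
    """RAID 5 (n+1) rebuild reads the n surviving disks: 1 - (1 - alpha)**(n * x)."""
    return -math.expm1(n * x * math.log1p(-alpha))


def p_raid6_approx(n, x, alpha):
    """~RAID 6 (n+2): n * (n - 1) * x * alpha**2, a fit to the slide's values."""
    return n * (n - 1) * x * alpha ** 2


def p_triple_mirror_approx(x, alpha):
    """~Triple mirror: x * alpha**2, independent of n (fit to the slide's values)."""
    return x * alpha ** 2


CASES = [
    ("1 TB SATA desktop", 1.0, 1e-14),
    ("1TB SATA enterprise", 1.0, 1e-15),
    ("450GB FC", 0.45, 1e-16),
    ("10TB SATA enterprise", 10.0, 1e-15),
]

if __name__ == "__main__":
    for label, size_tb, alpha in CASES:
        x = size_tb * BITS_PER_TB
        print(f"{label} (alpha={alpha:.0e}): RAID 1 {p_raid1(x, alpha):.2E}, "
              f"triple mirror {p_triple_mirror_approx(x, alpha):.2E}")
        for n in (5, 14, 28):
            print(f"  n={n:2d}: RAID 5 {p_raid5(n, x, alpha):.2E}, "
                  f"RAID 6 {p_raid6_approx(n, x, alpha):.2E}")
```

For the 1 TB and 10 TB drives this matches the table to within the last digit (the slide values look truncated rather than rounded). For the 450GB FC drive, the RAID 6 and triple mirror entries match x = 450 GB, while the RAID 1 and RAID 5 entries on the slide match 500 GB instead.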
References
• Kyle Hailey, R scripts: https://github.com/khailey/fio_scripts
• Kevin Closson, SLOB: http://kevinclosson.net/slob/
• Frits Hoogland, gdb/strace: http://fritshoogland.wordpress.com/tag/oracle-io-performance-gdb-debuginternal-internals/
• fio: http://freecode.com/projects/fio
• Luca Canali, OraLatencyMap: http://db-blog.web.cern.ch/blog/luca-canali/2014-06-recent-updatesoralatencymap-and-pylatencymap
• My Oracle Support, White Paper: ASMLIB Installation & Configuration On MultiPath Mapper Devices (Step by Step Demo) On RAC Or Standalone Configurations (Doc ID 1594584.1)
• Computer Architecture: A Quantitative Approach
• CERN DB blog: http://cern.ch/db-blog/