Machine Learning and Neural Networks


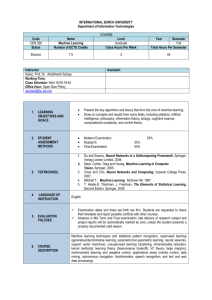

Department of Computer Science and Information Technologies
CEN 559 Machine Learning, 2011-2012 Fall Term
Dr. Abdülhamit Subaşı (asubasi@ibu.edu.ba)
Office Hours: Open-door policy
Class Schedule: Monday 17:00-19:45

Course Objectives
• Present the key algorithms and theory that form the core of machine learning.
• Draw on concepts and results from many fields, including statistics, artificial intelligence, philosophy, information theory, biology, cognitive science, computational complexity, and control theory.

Textbooks
1. Du and Swamy, Neural Networks in a Softcomputing Framework, Springer-Verlag London Limited, 2006.
2. Sebe, Cohen, Garg and Huang, Machine Learning in Computer Vision, Springer, 2005.
3. Chow and Cho, Neural Networks and Computing, Imperial College Press, 2007.
4. T. Mitchell, Machine Learning, McGraw Hill, 1997.
5. T. Hastie, R. Tibshirani and J. Friedman, The Elements of Statistical Learning, Second Edition, Springer, 2008.

Brief Contents
• Introduction
• Concept Learning
• Decision Tree Learning
• Artificial Neural Networks
• Evaluating Hypotheses
• Bayesian Learning
• Computational Learning Theory
• Reinforcement Learning

Grading
• Midterm Examination: 25%
• Research & Presentation: 25% (a Word document of at least 15 pages, with related PPT slides and a presentation)
• Final Examination: 50%

Research Topics (RefN = textbook N above)
• Linear Methods for Classification: Linear Regression, Logistic Regression, Linear Discriminant Analysis, Perceptron
• Kernel Smoothing Methods - Ref5: Kernel Density Estimation and Classification (Naive Bayes); Mixture Models for Density Estimation and Classification
• Radial Basis Function Networks - Ref1
• Basis Function Networks for Classification - Ref3
• Advanced Radial Basis Function Networks - Ref3
• Fundamentals of Machine Learning and Softcomputing - Ref1
• Neural Networks - Ref5
• Multilayer Perceptrons - Ref1
• Hopfield Networks and Boltzmann Machines - Ref1
• SVM - Ref5
• KNN - Ref5
• Competitive Learning and Clustering - Ref1
• Unsupervised Learning (k-means) - Ref5
• Self-Organizing Maps - Ref3
• Principal Component Analysis Networks (PCA, ICA) - Ref1
• Fuzzy Logic and Neurofuzzy Systems - Ref1
• Evolutionary Algorithms and Evolving Neural Networks (PSO) - Ref1
• Discussion and Outlook (SVM, CNN, WNN) - Ref1
• Decision Tree Learning - Duda & Hart
• Random Forest - Ref5
• Probabilistic Classifiers - Ref2
• Semi-Supervised Learning - Ref2
• Maximum Likelihood Minimum Entropy HMM - Ref2
• Margin Distribution Optimization - Ref2
• Learning the Structure of Bayesian Network Classifiers - Ref2
• Office Activity Recognition - Ref2
• Model Assessment and Selection - Ref5: Cross-Validation, Bootstrap Methods
• Performance: ROC, statistics
• WEKA Machine Learning Tool
• TANAGRA Machine Learning Tool
• ORANGE Machine Learning Tool
• NETICA Machine Learning Tool
• RapidMiner Machine Learning Tool

What is Machine Learning?
Machine learning is the process in which a machine changes its structure, program, or data in response to external information in such a way that its expected future performance improves. Some such changes, like adding records to a database, overlap with much simpler processes. Others are clear examples of what is called “learning,” such as a speech recognition program improving after hearing samples of a person’s speech.

Components of a Learning Agent
• Curiosity Element: the problem generator; knows what the agent wants to achieve and takes risks (poses problems) to learn from
• Learning Element: changes the agent’s future actions (the performance element) according to the results from the performance analyzer
• Performance Element: chooses actions based on percepts
• Performance Analyzer: judges the effectiveness of each action and passes that information to the learning element

Why is machine learning important?
In other words, why not just program a computer to know everything it needs to know in advance? Many programs and computer-controlled robots must be prepared to deal with things their creators could not know about, such as game-playing programs, speech programs, electronic “learning” pets, and robotic explorers.
In these settings they face unpredictable information, so they benefit from being able to draw conclusions independently.

Relevance to AI
• Helps programs handle new situations based on the inputs and outputs of earlier ones
• Programs designed to adapt to humans learn how to interact with them better
• Could save bulky programming and repeated attempts to make a program “foolproof”
• Makes nearly all programs more dynamic and more powerful while improving the efficiency of programming

Approaches to Machine Learning
• Boolean logic and resolution
• Evolutionary machine learning: many algorithms or neural networks are generated to solve a problem, and the best ones survive
• Statistical learning
• Unsupervised learning: an algorithm that models the inputs alone, with no knowledge of the expected results
• Supervised learning: an algorithm that learns to map inputs to outputs from examples of inputs paired with their expected outputs
• Reinforcement learning: an algorithm that learns which outputs (actions) to produce from observations and the feedback, such as rewards, that its actions receive

Current Machine Learning Research
Almost every area of AI is developing machine learning, since it makes programs dynamic.
Examples:
• Facial recognition: machines learn, through many trials, which objects are and are not faces
• Language processing: machines learn the rules of English from examples; some AI chatterbots start with little linguistic knowledge but can be taught almost any language through extensive conversation with humans

Future of Machine Learning
• Gaming: opponents will be able to learn from the player’s strategies and adapt to counter them
• Personalized gadgets: devices that adapt to their owners as the owners change (get older, acquire different tastes, change their modes)
• Exploration: machines will be able to explore environments unsuitable for humans and quickly adapt to unfamiliar properties

Problems in Machine Learning
• Learning by example
  - Noise in example classification
  - Correct knowledge representation
• Heuristic learning
  - Incomplete knowledge base
  - Continuous situations in which there is no absolute answer
• Case-based reasoning
  - Translating human knowledge into a computer representation
• Grammar-meaning pairs: new rules must be relearned a number of times to gain “strength”
• Conceptual clustering
  - Definitions can be very complicated
  - Not much predictive power

Successes in Research
Aspects of daily life that use machine learning:
• Optical character recognition
• Handwriting recognition
• Speech recognition
• Automated steering
• Credit card risk assessment
• Filtering news articles
• Refining information retrieval
• Data mining
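The four components of a learning agent listed earlier in these notes can be illustrated with a small sketch. It assumes a toy setting that is not part of the original: a "multi-armed bandit" whose actions pay a reward of 1 with fixed, hidden probabilities. The class, method, and helper names (`LearningAgent`, `make_bandit`, and so on) are hypothetical. The epsilon-greedy rule standing in for the curiosity element is one common choice, not the course's prescribed method.

```python
import random
from typing import Callable, List, Optional


class LearningAgent:
    """Hypothetical learning agent for a k-armed bandit.

    The four components from the notes are mapped onto an epsilon-greedy
    strategy; this mapping is an illustrative assumption.
    """

    def __init__(self, n_actions: int, epsilon: float = 0.1, seed: int = 0) -> None:
        self.rng = random.Random(seed)
        self.epsilon = epsilon
        self.counts: List[int] = [0] * n_actions      # times each action was tried
        self.values: List[float] = [0.0] * n_actions  # learned payoff estimate per action

    def curiosity_element(self) -> Optional[int]:
        # Problem generator: with probability epsilon, propose a random
        # (possibly bad) action purely to learn from its outcome.
        if self.rng.random() < self.epsilon:
            return self.rng.randrange(len(self.values))
        return None

    def performance_element(self) -> int:
        # Choose the action whose estimated payoff is currently highest.
        return max(range(len(self.values)), key=lambda a: self.values[a])

    def performance_analyzer(self, reward: float) -> float:
        # Judge the action; in this toy setting the reward itself is the verdict.
        return reward

    def learning_element(self, action: int, feedback: float) -> None:
        # Update the running mean for the chosen action, changing future choices.
        self.counts[action] += 1
        self.values[action] += (feedback - self.values[action]) / self.counts[action]

    def step(self, environment: Callable[[int], float]) -> float:
        action = self.curiosity_element()
        if action is None:
            action = self.performance_element()
        reward = environment(action)
        self.learning_element(action, self.performance_analyzer(reward))
        return reward


def make_bandit(probabilities: List[float], seed: int = 1) -> Callable[[int], float]:
    # Assumed toy environment: action i pays 1.0 with hidden probability probabilities[i].
    rng = random.Random(seed)

    def pull(action: int) -> float:
        return 1.0 if rng.random() < probabilities[action] else 0.0

    return pull


if __name__ == "__main__":
    env = make_bandit([0.2, 0.5, 0.8])
    agent = LearningAgent(n_actions=3, epsilon=0.1)
    rewards = [agent.step(env) for _ in range(2000)]
    print("mean reward, first 200 steps:", sum(rewards[:200]) / 200)
    print("mean reward, last 200 steps:", sum(rewards[-200:]) / 200)
    print("action the agent now prefers:", agent.performance_element())
```

With these assumed payout rates, the agent usually ends up preferring action 2 as its estimates improve. This matches the definition of learning given earlier: the agent changes its data so that its expected future performance improves.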
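The sketch below makes the difference between the supervised and unsupervised approaches listed earlier concrete. It trains a perceptron, which is supervised and sees inputs together with their expected outputs, and runs k-means, which is unsupervised and sees the inputs only. Both algorithms appear on the research-topic list. The toy data, function names, and parameter values are assumptions chosen for illustration, and the implementations are minimal rather than reference versions.

```python
from typing import List, Sequence, Tuple

Point = Tuple[float, float]


def train_perceptron(xs: Sequence[Point], ys: Sequence[int],
                     epochs: int = 20, lr: float = 1.0) -> Tuple[List[float], float]:
    """Supervised: learns from inputs xs AND their expected labels ys (+1 or -1)."""
    w = [0.0, 0.0]
    b = 0.0
    for _ in range(epochs):
        for (x1, x2), y in zip(xs, ys):
            # Perceptron rule: update only when the prediction disagrees with the label.
            if y * (w[0] * x1 + w[1] * x2 + b) <= 0:
                w[0] += lr * y * x1
                w[1] += lr * y * x2
                b += lr * y
    return w, b


def predict(w: List[float], b: float, p: Point) -> int:
    return 1 if w[0] * p[0] + w[1] * p[1] + b > 0 else -1


def k_means(xs: Sequence[Point], k: int, iterations: int = 10) -> List[int]:
    """Unsupervised: groups the inputs xs without ever seeing a label."""
    centers = list(xs[:k])  # naive initialisation: the first k points
    assignment = [0] * len(xs)
    for _ in range(iterations):
        # Assignment step: each point joins its nearest center (squared distance).
        assignment = [
            min(range(k), key=lambda c: (p[0] - centers[c][0]) ** 2
                + (p[1] - centers[c][1]) ** 2)
            for p in xs
        ]
        # Update step: move each center to the mean of its members.
        for c in range(k):
            members = [p for p, a in zip(xs, assignment) if a == c]
            if members:
                centers[c] = (sum(p[0] for p in members) / len(members),
                              sum(p[1] for p in members) / len(members))
    return assignment


if __name__ == "__main__":
    # Assumed toy data: two well-separated groups of 2-D points.
    points = [(0.0, 0.0), (0.5, 0.2), (0.2, 0.6), (4.0, 4.0), (4.5, 3.8), (3.9, 4.6)]
    labels = [-1, -1, -1, 1, 1, 1]  # only the supervised learner sees these

    w, b = train_perceptron(points, labels)
    print("perceptron predicts", predict(w, b, (0.3, 0.3)), predict(w, b, (4.2, 4.1)))
    print("k-means groups:", k_means(points, k=2))
```

Both learners separate the two groups. Only the perceptron can name them as the classes -1 and +1, because only it was given the expected outputs. k-means returns arbitrary group indices.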
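The evolutionary approach listed earlier ("many algorithms ... are generated to solve a problem, the best ones survive") can be sketched as a simple selection-and-mutation loop. Several elements here are assumptions made for illustration: the problem being solved (recovering the line y = 2x + 1 from sample points), the population size, and the mutation width. This is one basic scheme (truncation selection with Gaussian mutation), not the specific algorithm of any course text.

```python
import random
from typing import List, Tuple

# Assumed toy problem: recover the line y = 2x + 1 from sample points.
DATA: List[Tuple[float, float]] = [(float(x), 2.0 * x + 1.0) for x in range(-5, 6)]

Candidate = Tuple[float, float]  # a candidate model (a, b) meaning y = a * x + b


def error(c: Candidate) -> float:
    # Fitness measure: mean squared error on DATA (lower is better).
    a, b = c
    return sum((a * x + b - y) ** 2 for x, y in DATA) / len(DATA)


def evolve(generations: int = 200, population_size: int = 20,
           sigma: float = 0.3, seed: int = 0) -> Candidate:
    rng = random.Random(seed)
    # Generate an initial population of random candidate solutions.
    population: List[Candidate] = [
        (rng.uniform(-5.0, 5.0), rng.uniform(-5.0, 5.0)) for _ in range(population_size)
    ]
    for _ in range(generations):
        # Selection: only the better half (lowest error) survives.
        population.sort(key=error)
        survivors = population[: population_size // 2]
        # Variation: each survivor produces one Gaussian-mutated offspring.
        offspring = [(a + rng.gauss(0.0, sigma), b + rng.gauss(0.0, sigma))
                     for a, b in survivors]
        population = survivors + offspring
    return min(population, key=error)


if __name__ == "__main__":
    best = evolve()
    print("best candidate (a, b):", best, "error:", error(best))
```

Survivors are carried over unchanged, so the best error can never get worse from one generation to the next. The mutations supply the new "algorithms" that compete for survival. With an even `population_size`, the population size stays constant.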