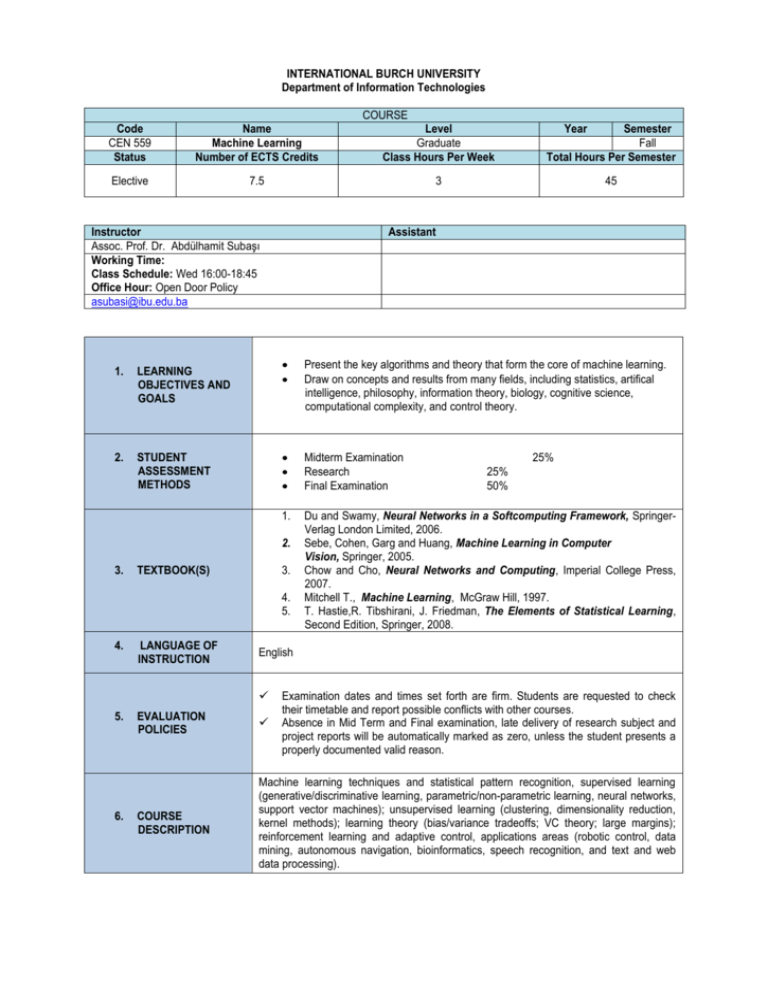

Present the key algorithms and theory that form the core of machine

advertisement

INTERNATIONAL BURCH UNIVERSITY
Department of Information Technologies

COURSE
Code: CEN 559
Name: Machine Learning
Level: Graduate
Semester: Fall
Status: Elective
Number of ECTS Credits: 7.5
Class Hours Per Week: 3
Total Hours Per Semester: 45

Instructor: Assoc. Prof. Dr. Abdülhamit Subaşı (asubasi@ibu.edu.ba)
Working Time: Class Schedule: Wed 16:00-18:45; Office Hour: Open Door Policy

1. LEARNING OBJECTIVES AND GOALS
Present the key algorithms and theory that form the core of machine learning. Draw on concepts and results from many fields, including statistics, artificial intelligence, philosophy, information theory, biology, cognitive science, computational complexity, and control theory.

2. STUDENT ASSESSMENT METHODS
Midterm Examination: 25%
Research: 25%
Final Examination: 50%

3. TEXTBOOK(S)
1. Du and Swamy, Neural Networks in a Softcomputing Framework, Springer-Verlag London Limited, 2006.
2. Sebe, Cohen, Garg and Huang, Machine Learning in Computer Vision, Springer, 2005.
3. Chow and Cho, Neural Networks and Computing, Imperial College Press, 2007.
4. T. Mitchell, Machine Learning, McGraw Hill, 1997.
5. T. Hastie, R. Tibshirani and J. Friedman, The Elements of Statistical Learning, Second Edition, Springer, 2008.

4. LANGUAGE OF INSTRUCTION
English

5. EVALUATION POLICIES
Examination dates and times set forth are firm. Students are requested to check their timetable and report possible conflicts with other courses. Absence from the midterm or final examination, and late delivery of the research subject and project reports, will automatically be marked as zero unless the student presents a properly documented valid reason.

6. COURSE DESCRIPTION
Machine learning techniques and statistical pattern recognition; supervised learning (generative/discriminative learning, parametric/non-parametric learning, neural networks, support vector machines); unsupervised learning (clustering, dimensionality reduction, kernel methods); learning theory (bias/variance tradeoffs; VC theory; large margins); reinforcement learning and adaptive control; and application areas (robotic control, data mining, autonomous navigation, bioinformatics, speech recognition, and text and web data processing).

7. INTENDED LEARNING OUTCOMES
- Demonstrate a systematic and critical understanding of the theories, principles and practices of computing;
- Creatively apply contemporary theories, processes and tools in the development and evaluation of solutions to problems in machine learning;
- Actively participate in, reflect upon, and take responsibility for personal learning and development, within a framework of lifelong learning and continued professional development;
- Present issues and solutions in appropriate form to communicate effectively with peers and clients from specialist and non-specialist backgrounds;
- Work with minimum supervision, both individually and as part of a team, demonstrating the interpersonal, organisational and problem-solving skills, supported by related attitudes, necessary to undertake employment.

8. LEARNING STRATEGY
1. Interactive lectures and communication with students
2. Discussions and group work
3. Presentations

9. SCHEDULE OF LECTURES AND READINGS
The course runs over Weeks 1–15 with 3 class hours per week. Teaching methods are lectures, with two weeks given to student presentations.
Topics:
- Concept of Learning
- Bayesian Learning, Computational Learning Theory
- Machine learning techniques and statistical pattern recognition
- Supervised learning (generative/discriminative learning, parametric/non-parametric learning, neural networks)
- Supervised learning (support vector machines)
- Unsupervised learning (clustering, dimensionality reduction, kernel methods)
- Learning theory (bias/variance tradeoffs; VC theory; large margins)
- Midterm
- Reinforcement learning and adaptive control
- Application areas (robotic control, data mining, autonomous navigation, bioinformatics, speech recognition, and text and web data processing)
- Evaluating Hypotheses
- Decision Tree Learning
- Presentation
- Presentation

Plagiarism Notice: Plagiarism is a serious academic offense. Plagiarism is a form of cheating in which a student tries to pass off someone else's work, or part of it, as his or her own. It usually takes the form of presenting thoughts, terms, phrases or passages from the work of others as one's own. When it occurs, it is usually found in essays, research papers or term papers. Typically, passages or ideas are 'lifted' from a source without proper credit being given to the source and its author. To avoid suspicion of plagiarism, you should use appropriate references and footnotes. If you have any doubt as to what constitutes plagiarism, you should consult your instructor.
You should be aware that there are now internet tools that allow each submitted paper to be checked for plagiarism. Remember that plagiarism is serious and may result in a reduced or failing grade or other disciplinary actions.

Cheating: Cheating in any form whatsoever is unacceptable and will subject you to IBU disciplinary procedures. Cheating includes signing in others for attendance, exams or anything else; using prohibited electronic and paper aids; having others do your work; copying from others or allowing others to copy from you, etc. Please do not cheat in any way! Please consult me if you have any questions.

Presentation Research Topics:
- Linear Methods for Classification: Linear Regression, Logistic Regression, Linear Discriminant Analysis, Perceptron
- Kernel Smoothing Methods (Ref5)
- Kernel Density Estimation and Classification (Naive Bayes)
- Mixture Models for Density Estimation and Classification
- Radial Basis Function Networks (Ref1)
- Basis Function Networks for Classification (Ref3)
- Advanced Radial Basis Function Networks (Ref3)
- Fundamentals of Machine Learning and Softcomputing (Ref1)
- Neural Networks (Ref5)
- Multilayer Perceptrons (Ref1)
- Hopfield Networks and Boltzmann Machines (Ref1)
- SVM (Ref5)
- KNN (Ref5)
- Competitive Learning and Clustering (Ref1)
- Unsupervised Learning: k-means (Ref5)
- Self-Organizing Maps (Ref3)
- Principal Component Analysis Networks: PCA, ICA (Ref1)
- Fuzzy Logic and Neurofuzzy Systems (Ref1)
- Evolutionary Algorithms and Evolving Neural Networks: PSO (Ref1)
- Discussion and Outlook: SVM, CNN, WNN (Ref1)
- Decision Tree Learning (Duda & Hart)
- Random Forest (Ref5)
- Probabilistic Classifiers (Ref2)
- Semi-Supervised Learning (Ref2)
- Maximum Likelihood Minimum Entropy HMM (Ref2)
- Margin Distribution Optimization (Ref2)
- Learning the Structure of Bayesian Network Classifiers (Ref2)
- Office Activity Recognition (Ref2)
- Model Assessment and Selection (Ref5)
- Cross-Validation
- Bootstrap Methods
- Performance (ROC, statistic)
- WEKA Machine Learning Tool
- TANAGRA Machine Learning Tool
- Orange Machine Learning Tool
- Netica Machine Learning Tool
- RapidMiner Machine Learning Tool

Presenters: Adnan Hodzic, Rijad Halilovic, Sabehata Dogic, Zerina Masetic, Vahidin, Jasmin Kurti, Hacer Konakli, Sevde, Mihret Sarac, Samed Jukic, Samila, Zeynep Kara, Nafia, Suleyman, Armin Spahic, Olcay.

Deliverables for each topic: a Word document of at least 15 pages, the related PPT slides, and a presentation.