ucla-talk-renewal-op.. - University of Southern California

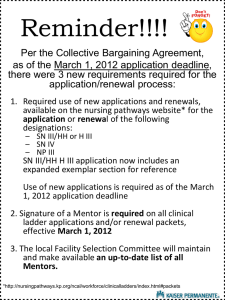

advertisement

Dynamic Optimization and Learning for Renewal Systems, with Applications to Wireless Networks and Peer-to-Peer Networks

[Figure: a network coordinator dispatching Task 1, Task 2, Task 3 to a network of T/R nodes, above a time axis t divided into frames T[0], T[1], T[2]]

Michael J. Neely, University of Southern California

Outline:

• Optimization of Renewal Systems

• Application 1: Task Processing in Wireless Networks

Quality-of-Information (ARL CTA project)

Task “deluge” problem

• Application 2: Peer-to-Peer Networks

Social networks (ARL CTA project)

Internet and wireless

References:

General Theory and Application 1:

• M. J. Neely, Stochastic Network Optimization with Application to Communication and Queueing Systems, Morgan & Claypool, 2010.

• M. J. Neely, “Dynamic Optimization and Learning for Renewal Systems,” Proc. Asilomar Conf. on Signals, Systems, and Computers, Nov. 2010.

Application 2 (Peer-to-Peer):

• M. J. Neely and L. Golubchik, “Utility Optimization for Dynamic Peer-to-Peer Networks with Tit-for-Tat Constraints,” Proc. IEEE INFOCOM, 2011.

These works are available on: http://www-bcf.usc.edu/~mjneely/

A General Renewal System

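[Figure: time axis t divided into renewal frames of durations T[0], T[1], T[2], with penalty vectors y[0], y[1], y[2] incurred over the respective frames]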

•Renewal Frames r in {0, 1, 2, …}.

•π[r] = Policy chosen on frame r.

•P = Abstract policy space (π[r] in P for all r).

•Policy π[r] affects frame size and penalty vector on frame r.

These are random functions of π[r] (distribution depends on π[r]):

•y[r] = [y0(π[r]), y1(π[r]), …, yL(π[r])]

•T[r] = T(π[r]) = Frame Duration

Sample realizations of the penalty vector and frame duration under the chosen policy π[r]:

•y[r] = [1.2, 1.8, …, 0.4], T[r] = 8.1

•y[r] = [0.0, 3.8, …, -2.0], T[r] = 12.3

•y[r] = [1.7, 2.2, …, 0.9], T[r] = 5.6

Example 1: Opportunistic Scheduling

•All Frames = 1 Slot

•S[r] = (S1[r], S2[r], S3[r]) = Channel States for Slot r

•Policy π[r]: On frame r, first observe S[r], then choose a channel to serve (i.e., one of {1, 2, 3}).

•Example Objectives: throughput, energy, fairness, etc.

Example 2: Convex Programs (Deterministic Problems)

Minimize: f(x1, x2, …, xN)

Subject to: gk(x1, x2, …, xN) ≤ 0 for all k in {1,…, K}

(x1, x2, …, xN) in A

Equivalent to:

Minimize: $\overline{f(x_1[r], x_2[r], \ldots, x_N[r])}$

Subject to: $\overline{g_k(x_1[r], x_2[r], \ldots, x_N[r])} \le 0$ for all k in {1,…, K}

(x1[r], x2[r], …, xN[r]) in A for all frames r

•All Frames = 1 Slot.

•Policy π[r] = (x1[r], x2[r], …, xN[r]) in A.

•Time average: $\overline{f(x[r])} = \lim_{R\to\infty} \frac{1}{R}\sum_{r=0}^{R-1} f(x[r])$

Jensen’s Inequality: The time average of the dynamic solution (x1[r], x2[r], …, xN[r]) solves the original convex program!
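The Jensen step can be written out as follows. This is a sketch that assumes f and each g_k are convex and that the set A is convex and closed (the slide says only that the program is convex); the symbol $\bar{x}_R$ for the running average is notation introduced here.

```latex
\bar{x}_R = \frac{1}{R}\sum_{r=0}^{R-1} x[r] \in A,
\qquad
f(\bar{x}_R) \le \frac{1}{R}\sum_{r=0}^{R-1} f(x[r]),
\qquad
g_k(\bar{x}_R) \le \frac{1}{R}\sum_{r=0}^{R-1} g_k(x[r])
\quad \forall k \in \{1,\ldots,K\}.
```

So if the time averages on the right-hand sides approach the optimal value and satisfy the constraints, the averaged vector $\bar{x}_R$ does at least as well in the original deterministic program as R grows.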

Example 3: Markov Decision Problems

[Figure: Markov chain with states 1, 2, 3, 4]

•M(t) = Recurrent Markov Chain (continuous or discrete)

•Renewals are defined as recurrences to state 1.

•T[r] = random inter-renewal frame size (frame r).

•y[r] = penalties incurred over frame r.

•π[r] = policy that affects transition probs over frame r.

•Objective: Minimize time average of one penalty subject to time average constraints on the others.

Example 4: Task Processing over Networks

[Figure: a network coordinator dispatching Task 1, Task 2, Task 3 to a network of T/R nodes]

•Infinite Sequence of Tasks.

•E.g.: Query sensors and/or perform computations.

•Renewal Frame r = Processing Time for Task r.

•Policy Types:

•Low Level: {Specify Transmission Decisions over Net}

•High Level: {Backpressure1, Backpressure2, Shortest Path}

•Example Objective: Maximize quality of information per unit time subject to per-node power constraints.


Quick Review of Renewal-Reward Theory

(Pop Quiz Next Slide!)

Define the frame-average for y0[r]:

$$\overline{y}_0 = \lim_{R\to\infty} \frac{1}{R}\sum_{r=1}^{R} y_0[r]$$

The time-average for y0[r] is then:

$$\text{Time Avg.} = \lim_{R\to\infty} \frac{\sum_{r=1}^{R} y_0[r]}{\sum_{r=1}^{R} T[r]} = \lim_{R\to\infty} \frac{\frac{1}{R}\sum_{r=1}^{R} y_0[r]}{\frac{1}{R}\sum_{r=1}^{R} T[r]} = \frac{\overline{y}_0}{\overline{T}}$$

*If i.i.d. over frames, by LLN this is the same as E{y0}/E{T}.

Pop Quiz: (10 points)

•Let y0[r] = Energy Expended on frame r.

•Time avg. power = (Total Energy Use)/(Total Time)

•Suppose (for simplicity) behavior is i.i.d. over frames.

To minimize time average power, which one should we minimize?

(a) $E\left\{ \dfrac{y_0[r]}{T[r]} \right\}$

(b) $\dfrac{E\{y_0[r]\}}{E\{T[r]\}}$
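A small numerical check can make the quiz concrete. The sketch below uses two hypothetical policies, POLICY_A and POLICY_B, whose (probability, energy, duration) outcomes are invented for illustration. It computes quantities (a) and (b) exactly and compares them with a simulated value of (Total Energy Use)/(Total Time) over i.i.d. frames.

```python
import random

# Each hypothetical policy is a list of (probability, energy y0, duration T)
# outcomes, drawn i.i.d. on every frame. The numbers are illustrative only.
POLICY_A = [(0.5, 1.0, 1.0), (0.5, 30.0, 10.0)]
POLICY_B = [(0.5, 5.0, 1.0), (0.5, 10.0, 10.0)]


def expected_ratio(outcomes):
    """Quantity (a): E{ y0 / T }."""
    return sum(p * y / t for p, y, t in outcomes)


def ratio_of_expectations(outcomes):
    """Quantity (b): E{ y0 } / E{ T }."""
    expected_y = sum(p * y for p, y, _ in outcomes)
    expected_t = sum(p * t for p, _, t in outcomes)
    return expected_y / expected_t


def simulated_power(outcomes, frames, seed=0):
    """(Total energy) / (total time) over a run of i.i.d. frames."""
    rng = random.Random(seed)
    weights = [p for p, _, _ in outcomes]
    total_y = 0.0
    total_t = 0.0
    for _ in range(frames):
        _, y, t = rng.choices(outcomes, weights=weights)[0]
        total_y += y
        total_t += t
    return total_y / total_t


if __name__ == "__main__":
    for name, pol in (("A", POLICY_A), ("B", POLICY_B)):
        print(name, expected_ratio(pol), ratio_of_expectations(pol),
              simulated_power(pol, 20000))
```

With these made-up numbers, policy A has the smaller value of (a) but the larger simulated power, while (b) tracks the simulated power for both policies. This agrees with the renewal-reward result that, for i.i.d. frames, the time average equals E{y0}/E{T}.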

Two General Problem Types:

1) Minimize time average subject to time average constraints:

Minimize: $\overline{y}_0 / \overline{T}$

Subject to: (1) $\overline{y}_l / \overline{T} \le c_l \;\; \forall l \in \{1, \ldots, L\}$

(2) $\pi[r] \in P \;\; \forall r \in \{0, 1, 2, \ldots\}$

2) Maximize concave function φ(x1, …, xL) of time average:

Maximize: $\phi(\overline{y}_1/\overline{T}, \overline{y}_2/\overline{T}, \ldots, \overline{y}_L/\overline{T})$

Subject to: (1) $\overline{y}_l / \overline{T} \le c_l \;\; \forall l \in \{1, \ldots, L\}$

(2) $\pi[r] \in P \;\; \forall r \in \{0, 1, 2, \ldots\}$

Solving the Problem (Type 1):

Minimize: $\overline{y}_0 / \overline{T}$

Subject to: (1) $\overline{y}_l / \overline{T} \le c_l \;\; \forall l \in \{1, \ldots, L\}$

(2) $\pi[r] \in P \;\; \forall r \in \{0, 1, 2, \ldots\}$

Define a “Virtual Queue” for each inequality constraint:

[Figure: virtual queue Zl[r] with arrivals yl[r] and service clT[r]]

Zl[r+1] = max[Zl[r] – clT[r] + yl[r], 0]

Queue Stable iff $\overline{y}_l \le c_l \overline{T}$ iff $\overline{y}_l / \overline{T} \le c_l$
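The virtual queue update is a one-line recursion. The sketch below is a minimal illustration with invented frame data; the names `update_virtual_queue` and `run_queue` are hypothetical. It also checks the sample-path fact behind the stability argument: starting from Z = 0, the final backlog upper-bounds the accumulated constraint violation, so a small backlog relative to total time means the constraint is nearly met.

```python
def update_virtual_queue(z, y, t, c):
    """One step of Z[r+1] = max(Z[r] - c*T[r] + y[r], 0)."""
    return max(z - c * t + y, 0.0)


def run_queue(frames, c, z0=0.0):
    """Apply the update over a list of (y[r], T[r]) pairs; return final Z."""
    z = z0
    for y, t in frames:
        z = update_virtual_queue(z, y, t, c)
    return z


if __name__ == "__main__":
    # Illustrative frames: (penalty y_l[r], duration T[r]); c_l = 0.25.
    frames = [(1.0, 4.0), (3.0, 4.0), (0.5, 4.0)]
    c = 0.25
    z_final = run_queue(frames, c)
    total_y = sum(y for y, _ in frames)
    total_t = sum(t for _, t in frames)
    # Since Z[r+1] >= Z[r] - c*T[r] + y[r], summing over frames gives
    # Z[R] >= sum(y) - c*sum(T) when Z[0] = 0, hence
    # sum(y)/sum(T) <= c + Z[R]/sum(T).
    print(z_final, total_y / total_t, c + z_final / total_t)
```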

Lyapunov Function and “Drift-Plus-Penalty Ratio”:

Minimize: $\overline{y}_0 / \overline{T}$

Subject to: (1) $\overline{y}_l / \overline{T} \le c_l \;\; \forall l \in \{1, \ldots, L\}$

(2) $\pi[r] \in P \;\; \forall r \in \{0, 1, 2, \ldots\}$

[Figure: virtual queues Z1(t), Z2(t)]

•Scalar measure of queue sizes:

$L[r] = Z_1[r]^2 + Z_2[r]^2 + \cdots + Z_L[r]^2$

Δ(Z[r]) = E{L[r+1] – L[r] | Z[r]} = “Frame-Based Lyap. Drift”

•Algorithm Technique: Every frame r, observe Z1[r], …, ZL[r]. Then choose a policy π[r] in P to minimize:

$$\text{“Drift-Plus-Penalty Ratio”} = \frac{\Delta(Z[r]) + V\,E\{y_0[r] \mid Z[r]\}}{E\{T[r] \mid Z[r]\}}$$

The Algorithm Becomes:

Minimize: $\overline{y}_0 / \overline{T}$

Subject to: (1) $\overline{y}_l / \overline{T} \le c_l \;\; \forall l \in \{1, \ldots, L\}$

(2) $\pi[r] \in P \;\; \forall r \in \{0, 1, 2, \ldots\}$

•Observe Z[r] = (Z1[r], …, ZL[r]). Choose π[r] in P to minimize:

$$\frac{E\left\{ V\hat{y}_0(\pi[r]) + \sum_{l=1}^{L} Z_l[r]\,\hat{y}_l(\pi[r]) \,\middle|\, Z[r] \right\}}{E\left\{ \hat{T}(\pi[r]) \,\middle|\, Z[r] \right\}}$$

•Then update virtual queues:

Zl[r+1] = max[Zl[r] – clT[r] + yl[r], 0]
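To illustrate the two steps above, here is a minimal sketch for a toy setting that is an assumption of this example, not part of the talk: the policy space is a finite list, and each policy has known, deterministic penalties and frame duration, so the conditional expectations reduce to plain numbers. The `Policy` class, the two example policies, and the parameter values are all hypothetical.

```python
from dataclasses import dataclass
from typing import List, Sequence, Tuple


@dataclass(frozen=True)
class Policy:
    name: str
    y0: float              # penalty to be minimized (per frame)
    y: Tuple[float, ...]   # constrained penalties y_1..y_L (per frame)
    T: float               # frame duration, must be > 0


def dpp_ratio(p: Policy, Z: Sequence[float], V: float) -> float:
    """(V*y0 + sum_l Z_l*y_l) / T for one candidate policy."""
    numerator = V * p.y0 + sum(z * yl for z, yl in zip(Z, p.y))
    return numerator / p.T


def run(policies: List[Policy], c: Sequence[float], V: float, frames: int):
    """Run the ratio-minimizing rule; return (y0 rate, [y_l rates])."""
    Z = [0.0] * len(c)
    total_y0 = 0.0
    total_T = 0.0
    total_y = [0.0] * len(c)
    for _ in range(frames):
        # Step 1: observe Z[r], pick the policy with the smallest ratio.
        pi = min(policies, key=lambda p: dpp_ratio(p, Z, V))
        # Step 2: update each virtual queue with this frame's outcome.
        Z = [max(z - cl * pi.T + yl, 0.0) for z, cl, yl in zip(Z, c, pi.y)]
        total_y0 += pi.y0
        total_T += pi.T
        total_y = [s + yl for s, yl in zip(total_y, pi.y)]
    return total_y0 / total_T, [s / total_T for s in total_y]


# Policy A: cost rate 1, power rate 1. Policy B: cost rate 2, power rate 0.
POLICIES = [Policy("A", 2.0, (2.0,), 2.0), Policy("B", 2.0, (0.0,), 1.0)]
cost_rate, power_rates = run(POLICIES, c=[0.5], V=10.0, frames=10000)

if __name__ == "__main__":
    print(cost_rate, power_rates)
```

In this toy instance the best time-sharing between A and B under a power limit of 0.5 has cost rate 1.5, and the run should land close to that while keeping the power rate near the limit. Larger V tightens the cost gap at the price of larger virtual queues.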

DPP Ratio: $\dfrac{\Delta(Z[r]) + V\,E\{y_0[r] \mid Z[r]\}}{E\{T[r] \mid Z[r]\}}$

Theorem: Assume the constraints are feasible. Then under this algorithm, we achieve:

(a) $$\limsup_{R\to\infty} \frac{\frac{1}{R}\sum_{r=1}^{R} y_l[r]}{\frac{1}{R}\sum_{r=1}^{R} T[r]} \le c_l \;\; \forall l \in \{1, \ldots, L\} \;\; \text{(w.p.1)}$$

(b) $$\frac{\frac{1}{R}\sum_{r=1}^{R} E\{y_0[r]\}}{\frac{1}{R}\sum_{r=1}^{R} E\{T[r]\}} \le ratio^{opt} + O(1/V)$$

for all R in {1, 2, 3, …}.

Application 1 – Task Processing:

[Figure: a network coordinator dispatching Task 1, Task 2, Task 3 to a network of T/R nodes; frame r consists of Setup, Transmit, and Idle I[r] phases]

•Every Task reveals random task parameters η[r]:

η[r] = [(qual1[r], T1[r]), (qual2[r], T2[r]), …, (qual5[r], T5[r])]

•Choose π[r] = [which node to transmit, how much idle] in {1,2,3,4,5} × [0, Imax]

•Transmissions incur power

•We use a quality distribution that tends to be better for higher-numbered nodes.

•Maximize quality/time subject to pav ≤ 0.25 for all nodes.

Minimizing the Drift-Plus-Penalty Ratio:

•Minimizing a pure expectation, rather than a ratio, is typically easier (see Bertsekas, Tsitsiklis, Neuro-DP).

•Define:

$$\theta^* = \inf_{\pi \in P} \frac{E\{a(\pi, \eta)\}}{E\{b(\pi, \eta)\}}$$

•“Bisection Lemma”:

$$\inf_{\pi \in P} E\{a(\pi, \eta) - \theta^* b(\pi, \eta)\} = 0$$

$$\inf_{\pi \in P} E\{a(\pi, \eta) - \theta b(\pi, \eta)\} < 0 \;\text{ if }\; \theta > \theta^*$$

$$\inf_{\pi \in P} E\{a(\pi, \eta) - \theta b(\pi, \eta)\} > 0 \;\text{ if }\; \theta < \theta^*$$
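The lemma suggests a bisection search on θ: the sign of the inner minimization says which side of θ* the current guess is on. The sketch below assumes a finite policy set where each policy is summarized by a hypothetical pair (E{a}, E{b}) with E{b} > 0, and assumes the caller supplies bounds lo ≤ θ* ≤ hi; both are illustrative simplifications.

```python
def val(theta, policies):
    """min over policies of E{a} - theta * E{b}."""
    return min(a - theta * b for a, b in policies)


def bisect_theta(policies, lo, hi, tol=1e-9):
    """Locate theta* = min E{a}/E{b}, assuming lo <= theta* <= hi."""
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if val(mid, policies) < 0:
            hi = mid   # val < 0 means mid > theta*
        else:
            lo = mid   # val >= 0 means mid <= theta*
    return 0.5 * (lo + hi)


if __name__ == "__main__":
    # Illustrative (E{a}, E{b}) pairs; their ratios are 1.5, 1.0, 1.25.
    policies = [(3.0, 2.0), (1.0, 1.0), (5.0, 4.0)]
    theta_star = bisect_theta(policies, lo=0.0, hi=10.0)
    print(theta_star, val(theta_star, policies))
```

Each bisection step only needs a minimization of a pure expectation, which is the point of the slide: no ratio has to be minimized directly.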

Learning via Sampling from the past:

•Suppose randomness characterized by:

{η1, η2, ..., ηW} (past random samples)

•Want to compute (over unknown random distribution of η):

$$val(\theta) = \inf_{\pi \in P} E\{a(\pi, \eta) - \theta b(\pi, \eta)\}$$

•Approximate this via W samples from the past:

$$\widetilde{val}(\theta) = \frac{1}{W}\sum_{w=1}^{W} \inf_{\pi \in P} \left[ a(\pi, \eta_w) - \theta b(\pi, \eta_w) \right]$$
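A sample-based estimate of val(θ) might look like the sketch below. It assumes the decision is made after η is observed, so the minimization is taken separately for each past sample. The action set, the functions `a` and `b`, and the sample format (a list of (quality, time) pairs, loosely modeled on the task-processing example) are all hypothetical.

```python
def val_tilde(theta, samples, actions, a, b):
    """Average over past samples of min over actions of a - theta*b."""
    total = 0.0
    for eta in samples:
        total += min(a(k, eta) - theta * b(k, eta) for k in actions)
    return total / len(samples)


def a(k, eta):
    """Penalty numerator: negative quality of the chosen node k."""
    return -eta[k][0]


def b(k, eta):
    """Denominator term: time used if node k is chosen (must be > 0)."""
    return eta[k][1]


# Two illustrative past samples; each lists (quality, time) per node.
SAMPLES = [
    [(1.0, 1.0), (3.0, 2.0)],
    [(2.0, 1.0), (2.0, 4.0)],
]
ACTIONS = [0, 1]

if __name__ == "__main__":
    for theta in (-2.0, -1.5, -1.0):
        print(theta, val_tilde(theta, SAMPLES, ACTIONS, a, b))
```

Because b is positive, the estimate is non-increasing in θ, so it can be plugged into a bisection search in place of the exact expectation.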

Simulation:

[Figure: Quality of Information / Unit Time versus Sample Size W, comparing “Drift-Plus-Penalty Ratio Alg. With Bisection” and “Alternative Alg. With Time Averaging”]

Concluding Sims (values for W=10):

y1 / T = 0.182335 ≤ 0.25

y2 / T = 0.249547 ≤ 0.25

y3 / T = 0.250018 ≤ 0.25

y4 / T = 0.250032 ≤ 0.25

y5 / T = 0.250046 ≤ 0.25

q.o.i / T = 0.852950, Idle = 1.421260

“Application 2” – Peer-to-Peer Wireless Networking:

[Figure: nodes 1 through 5 connected through a network cloud]

• N nodes.

• Each node n has download social group Gn.

• Gn is a subset of {1, …, N}.

• Each file f is in some subset of nodes Nf.

• Each node n can request download of a file f from any node in Gn ∩ Nf.

• Transmission rates (µab(t)) between nodes are chosen in some (possibly time-varying) set G(t).

“Internet Cloud” Example 1:

[Figure: nodes 1 through 5 connected through a network cloud; node 1 is labeled with its uplink capacity $C_1^{uplink}$]

• G(t) = Constant (no variation).

• $\sum_b \mu_{nb}(t) \le C_n^{uplink}$ for all nodes n.

This example assumes uplink capacity is the bottleneck.

“Internet Cloud” Example 2:

[Figure: nodes 1 through 5 connected through a network cloud]

• G(t) specifies a single supportable (µab(t)).

No “transmission rate decisions.” The allowable rates (µab(t)) are given to the peer-to-peer system from some underlying transport and routing protocol.

“Wireless Basestation” Example 3:

[Figure: a base station and surrounding wireless devices]

• Wireless device-to-device transmission increases capacity.

• (µab(t)) chosen in G(t).

• Transmissions coordinated by base station.

“Commodities” for Request Allocation

• Multiple file downloads can be active.

• Each file corresponds to a subset of nodes.

• Queueing files according to subsets would result in O(2^N) queues (complexity explosion!).

Instead, without loss of optimality, we use the following alternative commodity structure…

“Commodities” for Request Allocation

[Figure: a request (An(t), Nn(t)) arrives at node n; nodes j, k, m are shown with the set Gn ∩ Nn(t); node m holds queue Qmn(t)]

• Use subset info to determine the decision set.

• Choose which node will help download.

• That node queues the request:

Qmn(t+1) = max[Qmn(t) + Rmn(t) - µmn(t), 0]

• Subset info can now be thrown away.

Stochastic Network Optimization Problem:

Maximize:

∑n gn(∑a ran)

(gn = concave utility function; ∑a ran = time average request rate)

Subject to:

(1) Qmn < infinity

(Queue Stability Constraint)

(2) α ∑a ran ≤ β + ∑b rnb for all n

(Tit-for-Tat Constraint: left side is α x Download rate, right side is β + Upload rate)

Solution Technique for Infocom paper

• Use “Drift-Plus-Penalty” framework in a new “Universal Scheduling” scenario.

• We make no statistical assumptions on the stochastic processes [S(t); (An(t), Nn(t))].

Resulting Algorithm:

• (Auxiliary Variables) For each n, choose an aux. variable γn(t) in interval [0, Amax] to maximize:

Vgn(γn(t)) – Hn(t)γn(t)

• (Request Allocation) For each n, observe the following value for all m in Gn ∩ Nn(t):

-Qmn(t) + Hn(t) + (Fm(t) – αFn(t))

Give An(t) to queue m with largest non-neg value. Drop An(t) if all above values are negative.

• (Scheduling) Choose (µab(t)) in G(t) to maximize:

∑nb µnb(t)Qnb(t)
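The request allocation step can be sketched as a small function. The function name `allocate_request`, the dictionary-based state, and the numbers in the usage example are hypothetical; the sketch only covers the choice of helper node for one requester n in one slot, assuming the candidate set has already been computed.

```python
def allocate_request(n, candidates, Q, H, F, alpha):
    """Pick the helper m for node n's request, or None to drop it.

    candidates: nodes in the decision set for n in this slot
    Q: dict mapping (m, n) -> backlog Qmn(t)
    H: dict mapping n -> auxiliary queue Hn(t)
    F: dict mapping node -> reputation queue F(t) (low value = good)
    """
    best_m = None
    best_value = None
    for m in candidates:
        value = -Q[(m, n)] + H[n] + (F[m] - alpha * F[n])
        # Only non-negative values are eligible; keep the largest one.
        if value >= 0 and (best_value is None or value > best_value):
            best_m, best_value = m, value
    return best_m


if __name__ == "__main__":
    Q = {(2, 1): 5.0, (3, 1): 1.0}
    F = {1: 1.0, 2: 4.0, 3: 0.5}
    print(allocate_request(1, [2, 3], Q, {1: 2.0}, F, alpha=1.0))
    print(allocate_request(1, [2, 3], Q, {1: 0.0}, F, alpha=1.0))
```

In the first call both candidates have non-negative values and the larger one wins; in the second call every value is negative, so the request is dropped.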

How the Incentives Work for node n:

Node n can only request downloads from others if it finds a node m with a non-negative value of:

-Qmn(t) + Hn(t) + (Fm(t) – αFn(t))

The term -Qmn(t) + Hn(t) is bounded; the term (Fm(t) – αFn(t)) compares reputations!

Fn(t) = “Node n Reputation”

(Good reputation = Low value)

[Figure: virtual queue Fn(t) with input α x Receive Help(t) and output β + Help Others(t)]

Concluding Theorem:

For any arbitrary [S(t); (An(t), Nn(t))] sample path, we guarantee:

a) Qmn(t) ≤ Qmax = O(V) for all t, all (m,n).

b) All Tit-for-Tat constraints are satisfied.

c) For any T>0:

$$\liminf_{K\to\infty} \, [\text{Achieved Utility}(KT)] \ge \liminf_{K\to\infty} \frac{1}{K}\sum_{i=1}^{K} [\text{“T-Slot-Lookahead-Utility”}[i]] - BT/V$$

[Figure: timeline divided into Frame 1, Frame 2, Frame 3 with boundaries at 0, T, 2T, 3T]

Conclusions for Peer-to-Peer Problem:

• Framework for posing peer-to-peer networking as stochastic network optimization problems.

• Can compute optimal solution in polynomial time.

Conclusions overall:

• Renewal Optimization Framework can be viewed as “Generalized Linear Programming”

• Variable Length Scheduling Modes

• Many applications (task processing, peer-to-peer networks, Markov decision problems, linear programs, convex programs, stock market, smart grid, energy harvesting, and many more)

Solving the Problem (Type 2):

Maximize:

φ(y1/ T, y2/ T, . . . , yL / T)

Subject to: (1) yl / T ≤ cl ∀l ∈ { 1, . . . , L }

(2) π[r ] ∈ P ∀r ∈ { 0, 1, 2, . . .}

We reduce it to a problem with the structure of Type 1 via:

•Auxiliary Variables γ[r] = (γ1[r], …, γL[r]).

•The following variation on Jensen’s Inequality:

For any concave function φ(x1, …, xL) and any (arbitrarily correlated) vector of random variables (X1, X2, …, XL, T), where T>0, we have:

$$\frac{E\{T\phi(X_1, \ldots, X_L)\}}{E\{T\}} \le \phi\!\left( \frac{E\{T(X_1, \ldots, X_L)\}}{E\{T\}} \right)$$
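One way to see why this variation holds, offered as a sketch rather than the argument used in the talk, is to reweight the probability measure by T. The measure Q, the event B, and the notation $E_Q$ are introduced here for illustration; the sketch assumes 0 < E{T} < ∞ and that all expectations shown exist.

```latex
\begin{align*}
Q(B) &= \frac{E\{T\,\mathbf{1}_B\}}{E\{T\}}
  \quad\Longrightarrow\quad
  E_Q\{h\} = \frac{E\{T\,h\}}{E\{T\}} \ \text{ for integrable } h, \\
\frac{E\{T\,\phi(X)\}}{E\{T\}}
  &= E_Q\{\phi(X)\}
  \;\le\; \phi\big(E_Q\{X\}\big)
  \;=\; \phi\!\left(\frac{E\{T\,X\}}{E\{T\}}\right),
  \qquad X = (X_1, \ldots, X_L).
\end{align*}
```

The middle step is the ordinary Jensen inequality for the concave function φ, applied under Q, which is a probability measure because T > 0.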

The Algorithm (Type 2) Becomes:

•On frame r, observe Z[r] = (Z1[r], …, ZL[r]).

•(Auxiliary Variables) Choose γ1[r], …, γL[r] to solve the below deterministic problem:

Maximize: $V\phi(\gamma_1[r], \ldots, \gamma_L[r]) - \sum_{l=1}^{L} G_l[r]\,\gamma_l[r]$

Subject to: $\gamma_{min} \le \gamma_l[r] \le \gamma_{max} \;\; \forall l \in \{1, \ldots, L\}$

•(Policy Selection) Choose π[r] in P to minimize:

$$\frac{E\left\{ \sum_{l=1}^{L} Z_l[r]\,\hat{y}_l(\pi[r]) - \sum_{l=1}^{L} G_l[r]\,\hat{y}_l(\pi[r]) \,\middle|\, Z[r] \right\}}{E\left\{ \hat{T}(\pi[r]) \,\middle|\, Z[r] \right\}}$$

•Then update virtual queues:

Zl[r+1] = max[Zl[r] – clT[r] + yl[r], 0], Gl[r+1] = max[Gl[r] + γl[r]T[r] - yl[r], 0]