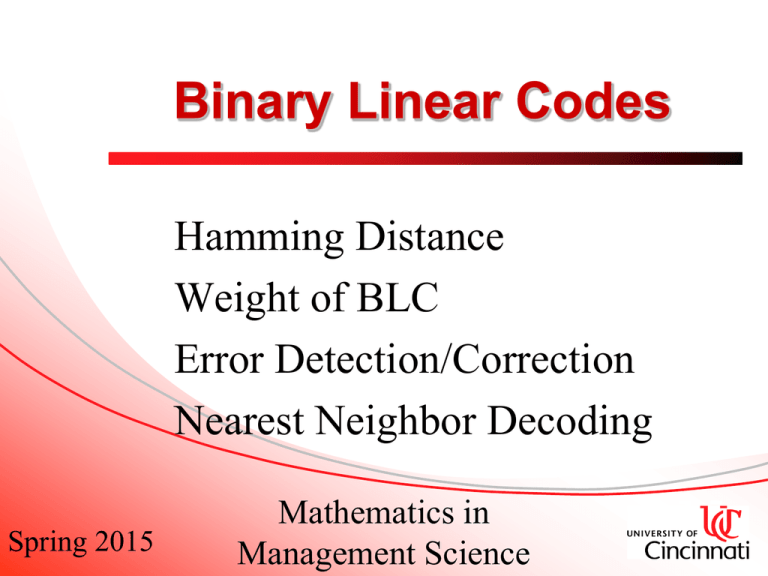

Binary Linear Codes

Hamming Distance

Weight of BLC

Error Detection/Correction

Nearest Neighbor Decoding

Spring 2015

Mathematics in

Management Science

Repetition Code

Can increase accuracy via repetition. E.g., can repeat

each bit of the message 3 times:

1011 → 111000111111

This can correct 1 (isolated) error out of three

consecutive bits sent. However this method is not

practical:

transmission rate is only 33.33%

most errors are not isolated but in a burst,

normally (we hope) the channel is not so noisy

that we need to give up so much efficiency.
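
The repetition scheme above can be sketched in a few lines of Python. This is only an illustration: the function names `encode_repetition` and `decode_repetition` are made up for this example, and decoding is assumed to be a majority vote within each block of three bits.

```python
def encode_repetition(message: str, n: int = 3) -> str:
    """Repeat every bit n times, e.g. '1011' -> '111000111111' for n = 3."""
    return "".join(bit * n for bit in message)


def decode_repetition(received: str, n: int = 3) -> str:
    """Decode by majority vote inside each block of n consecutive bits."""
    blocks = [received[i:i + n] for i in range(0, len(received), n)]
    return "".join("1" if block.count("1") > n // 2 else "0" for block in blocks)


sent = encode_repetition("1011")
print(sent)                                # 111000111111

# One isolated error in the first block and one in the last block.
corrupted = "110000111101"
print(decode_repetition(corrupted))        # 1011
```

Two errors inside the same block of three would flip the majority, which is why only isolated errors are corrected.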

Error Correcting Codes

Want a coding scheme with high

transmission rate (efficient) and error

correction rate not too small.

This requires mathematical knowledge

and some ingenuity.

Hamming Codes

Earliest (and simplest) solution given by Richard W.

Hamming; born in Chicago on Feb 11, 1915; graduated

from U of Chicago with a B.S. in mathematics. In 1939,

he received an M.A. degree in mathematics from U of

Nebraska, and in 1942, a Ph.D. in math from U Illinois.

During the latter part of WWII, Hamming was at Los

Alamos, where he was involved in computing atomic

bomb designs. In 1946, he joined Bell Labs, where he

worked in math, computing, engineering, and science.

Hamming Codes

Input was entered into a machine via a punched paper tape,

which had two holes per row. Each row was read as a unit. The

sensing relays would prevent further computation if more or less

than two holes were detected in a given row. Similar checks were

used in nearly every step of a computation.

If such a check failed when the operating personnel were not

present, the problem had to be rerun. This inefficiency led

Hamming to investigate the possibility of automatic error

correction. Many years later he said to an interviewer:

Two weekends in a row I came in and found that all my stuff had

been dumped and nothing was done. I was really aroused and

annoyed because I wanted those answers and two weekends had

been lost. And so I said, “Damn it, if the machine can detect an

error, why can’t it locate the position and correct it?”

Hamming Codes

In 1950, Hamming published his famous paper

on error-correcting codes, resulting in the use of

Hamming codes in modern computers and a

new branch of information theory. Hamming

died at the age of 82 in 1998.

Source: Adapted from T. Thompson,

From Error Correcting Codes Through

Sphere Packing to Simple Groups.

Hamming Codes

The Hamming [7,4,3]-code is a binary linear code

where:

Every codeword has length 7

First 4 digits give original signal

(remaining 3 are check digits)

Can correct only one error within the codeword

or can detect up to 2 errors.

Transmission rate is

4/7 ≈ 57% and

error correction rate

is 1/7 ≈ 14%

Also have Hamming [15,11,3]-codes

and [31,26,3]-codes etc. (3 means

that any two different codewords are at

least 3 bits apart)

Reed-Muller Codes

Used by Mariner 9 to transmit b&w photos of Mars in 1972.

It is a [32,6,16] linear code.

Every codeword has length 32 bits

Only 6 bits are original information

Can correct up to 7 bits of error in a codeword.

Since only 6 bits are useful, it can only describe 2^6 = 64

different shades of gray.

Transmission rate is 6/32 ≈ 18.8%,

& error correction rate is 7/32 ≈ 22%

Golay Codes

From 1979 through 1981, Voyager took color photos of

Jupiter and Saturn. Each pixel required a 12-bit string to

represent the 4096 possible shades of color. The source

information was encoded using a binary [24,12,8]-code,

known as the Golay code (introduced in 1948).

Each codeword has 24 bits, with 12 bits of information,

and can correct up to 3 errors in each codeword.

Transmission rate is 12/24 = 50% with

error correction rate 3/24 = 12.5%

Golay Codes

Golay codes are linear block-based error

correcting codes with a wide range of

applications such as:

• Real-time audio & video communications

• Packet data communications

• Mobile and personal communications

• Radio communications.

Binary Codewords

A binary codeword is a string of binary digits:

e.g. 00110111 is an 8-bit codeword

A binary code is a collection of codewords all with

the same length.

A binary code C of length n is a collection of

binary codewords all of length n and it is called

linear if it is a subspace of {0,1}^n.

In other words, 0…0 is in C and the sum of two

codewords is also a codeword.

Binary Arithmetic

Binary digits are 0 and 1.

Addition:

0 + 0 = 0, 0 + 1 = 1, 1 + 1 = 0

Subtraction: same as addition (i.e. a - b = a + b)

Multiplication: 0×0 = 0, 0×1 = 0, 1×1 = 1
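
A small Python sketch of this arithmetic, applied bit by bit to whole words. The helper names `add_words` and `is_linear` are hypothetical, and the two tiny codes at the bottom are made-up examples, not codes from these slides.

```python
def add_words(u: str, v: str) -> str:
    """Add two binary words of equal length, bit by bit, mod 2."""
    if len(u) != len(v):
        raise ValueError("words must have the same length")
    return "".join(str((int(x) + int(y)) % 2) for x, y in zip(u, v))


def is_linear(code: set) -> bool:
    """A code is linear if it contains 0...0 and is closed under addition."""
    n = len(next(iter(code)))
    if "0" * n not in code:
        return False
    return all(add_words(u, v) in code for u in code for v in code)


print(add_words("0011", "0101"))                      # 0110
# Subtraction is the same as addition: adding v twice gives back u.
print(add_words(add_words("0011", "0101"), "0101"))   # 0011

print(is_linear({"000", "011", "101", "110"}))        # True
print(is_linear({"000", "011", "101"}))               # False (011 + 101 = 110 is missing)
```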

Advantages of Linear Codes

Easy to encode and decode.

Lotsa space to accommodate errors.

The encoding process

Nearest Neighbor Decoding

Suppose parity-check sums detect an error.

Compute distances between received

word and all codewords.

The codeword that differs in fewest bits

is used in place of received word.

Thus get automatic error correction by

choosing “closest” permissible answer.

Hamming Distance

The Hamming distance between two

binary strings is the number of bits in

which the two strings differ.

(Figure: the distance between the two pictured words is 3.)
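
The definition translates directly into code. A minimal sketch, with `hamming_distance` as an illustrative name and binary strings represented as Python `str` values:

```python
def hamming_distance(u: str, v: str) -> int:
    """Number of positions in which two equal-length binary strings differ."""
    if len(u) != len(v):
        raise ValueError("strings must have the same length")
    return sum(x != y for x, y in zip(u, v))


print(hamming_distance("1010110", "1000110"))   # 1 (only the third bit differs)
print(hamming_distance("0000000", "0001011"))   # 3
```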

Hamming Distance

The Hamming distances between the

string v=1010110 and all the

Hamming (7,4) code words:
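
These distances can be recomputed with a short Python sketch. The sixteen codewords below are taken from the Extended Hamming (7,4) table in these slides with the appended parity bit dropped; the names `HAMMING_7_4` and `hamming_distance` are illustrative only.

```python
HAMMING_7_4 = [
    "0000000", "0001011", "0010111", "0100101",
    "1000110", "1100011", "1010001", "1001101",
    "0110010", "0101110", "0011100", "1110100",
    "1101000", "1011010", "0111001", "1111111",
]


def hamming_distance(u: str, v: str) -> int:
    """Number of positions in which two equal-length binary strings differ."""
    return sum(x != y for x, y in zip(u, v))


v = "1010110"
distances = {w: hamming_distance(v, w) for w in HAMMING_7_4}

# Print the table, closest codewords first.
for w, d in sorted(distances.items(), key=lambda item: item[1]):
    print(w, d)
```

Exactly one codeword, 1000110, ends up at distance 1 from v; every other codeword is at distance 2 or more.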

Nearest Neighbor Decoding

From above see that the nearest

codeword to

v=1010110

is

w=1000110

So, anytime that v is received, we

replace it with w .

Why NND Works

With 7 bits have 2^7 = 128 possible

words, but only 16 = 2^4 are legitimate

codewords. Lotsa 7-bit words, but few

are codewords.

Suppose we receive v, but it is not a codeword. If

there is a codeword w at distance 1 from v,

then w is the only such codeword.

But could have two codewords at

distance 2 from v.
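
The uniqueness claim can be checked with the triangle inequality for the Hamming distance d. The sketch below assumes, as for the Hamming (7,4) code, that any two different codewords are at least 3 bits apart; the symbol w' for a second codeword is introduced only for this argument.

```latex
% Suppose two different codewords w and w' were both at distance 1 from v.
\begin{align*}
d(w, w') &\le d(w, v) + d(v, w') = 1 + 1 = 2 ,\\
d(w, w') &\ge 3 \quad \text{(different codewords are at least 3 bits apart)} .
\end{align*}
% The two lines contradict each other, so at most one codeword lies at distance 1 from v.
% At distance 2 the same bound only gives d(w, w') \le 2 + 2 = 4, which is allowed.
```

For instance, v = 1010110 is at distance 2 from each of the codewords 0010111, 1110100 and 1011010.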

Error Correction

When errors occur, cannot tell how

many errors—all we know is that

something is wrong.

Highest probability is that the received

(corrupted) data should really be the

closest code word.

Error Correction

Nearest Neighbor Decoding says to

use the nearest codeword (one which

agrees with the received string in the

most positions), provided there is only

one such code word.
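
A minimal Python sketch of this rule, including the "only one such code word" condition. The function name `nearest_neighbor_decode` and the choice to return `None` on a tie are assumptions made for illustration; the eight codewords are those of the code C listed in the closing example of these slides.

```python
from typing import Iterable, Optional

CODE_C = [
    "0000000", "0010111", "0101101", "0111010",
    "1001011", "1011100", "1100110", "1110001",
]


def hamming_distance(u: str, v: str) -> int:
    """Number of positions in which two equal-length binary strings differ."""
    return sum(x != y for x, y in zip(u, v))


def nearest_neighbor_decode(received: str, code: Iterable[str]) -> Optional[str]:
    """Return the unique closest codeword, or None if the closest is not unique."""
    ranked = sorted(code, key=lambda w: hamming_distance(received, w))
    best = ranked[0]
    tie = (len(ranked) > 1 and
           hamming_distance(received, ranked[1]) == hamming_distance(received, best))
    return None if tie else best


print(nearest_neighbor_decode("0010110", CODE_C))   # 0010111 (one bit flipped)
print(nearest_neighbor_decode("0000011", CODE_C))   # None (several codewords at distance 2)
```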

Detecting & Correcting Errors

Valid code words must satisfy parity

check-sums; if not, have an error.

But, if bunch of errors, a code word

could get transformed to some other

code word.

How many 1-bit errors does it take to

change a legal code word into a

different legal code word?

Weight of a Binary Code

Suppose the weight of some binary

code is t; so, it takes (at least) t 1-bit

changes to convert any codeword into

another codeword.

Therefore, we can detect up to t-1

single bit errors.

The Hamming (7,4) code has weight

t=3. Thus using it we can detect 1 or 2

single bit errors.

Weight of a Binary Code

Suppose the weight of some binary

linear code is t.

Then, can detect up to t-1 single bit

errors,

or, we can correct up to

(t-1)/2 errors (if t is odd),

(t-2)/2 errors (if t is even).

Cannot do both.
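
Both cases of the correction formula collapse into one integer division, since (t-1)//2 equals (t-1)/2 for odd t and (t-2)/2 for even t. A small sketch, with hypothetical function names, evaluated for the weights quoted earlier in these slides (3 for Hamming, 8 for Golay, 16 for Reed-Muller) and for weight 4:

```python
def detection_capacity(t: int) -> int:
    """Largest number of errors guaranteed to be detected by a code of weight t."""
    return t - 1


def correction_capacity(t: int) -> int:
    """Largest number of errors guaranteed to be corrected by a code of weight t."""
    return (t - 1) // 2


for t in (3, 4, 8, 16):
    print(t, detection_capacity(t), correction_capacity(t))
# 3 2 1
# 4 3 1
# 8 7 3
# 16 15 7
```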

Example

Above code created using check-sums

c1 = (a1 + a2 + a3) mod 2

c2 = (a1 + a3) mod 2

c3 = (a2 + a3) mod 2

Example

Above code has weight 3, so can correct single

bit error or detect 2 errors.

Received word v=110001 decodes as

w=110011.
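
The whole example can be reproduced with a short Python sketch. It assumes each codeword is the three data bits a1 a2 a3 followed by c1 c2 c3, which agrees with the decoded word w=110011 above; the names `encode` and `distance` are illustrative.

```python
from itertools import product


def encode(a1: int, a2: int, a3: int) -> str:
    """Append the three check-sums to the data bits (assumed layout: a1 a2 a3 c1 c2 c3)."""
    c1 = (a1 + a2 + a3) % 2
    c2 = (a1 + a3) % 2
    c3 = (a2 + a3) % 2
    return f"{a1}{a2}{a3}{c1}{c2}{c3}"


def distance(u: str, v: str) -> int:
    """Hamming distance between two equal-length binary strings."""
    return sum(x != y for x, y in zip(u, v))


code = [encode(*bits) for bits in product((0, 1), repeat=3)]
print(code)
# ['000000', '001111', '010101', '011010', '100110', '101001', '110011', '111100']

# For a linear code the weight is the fewest 1s in any nonzero codeword.
weight = min(w.count("1") for w in code if "1" in w)
print(weight)                                   # 3

received = "110001"
decoded = min(code, key=lambda w: distance(received, w))
print(decoded)                                  # 110011
```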

Extended Hamming (7,4)

Append parity bit to end of each codeword

so that new codeword has even parity.

Extended Hamming (7,4)

Get weight t=4, so can detect 3 (or fewer)

errors, or can correct (t-2)/2=2/2=1 error.

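| Message | CodeWord | Message | CodeWord |
|---------|----------|---------|----------|
| 0000 | 00000000 | 0110 | 01100101 |
| 0001 | 00010111 | 0101 | 01011100 |
| 0010 | 00101110 | 0011 | 00111001 |
| 0100 | 01001011 | 1110 | 11101000 |
| 1000 | 10001101 | 1101 | 11010001 |
| 1100 | 11000110 | 1011 | 10110100 |
| 1010 | 10100011 | 0111 | 01110010 |
| 1001 | 10011010 | 1111 | 11111111 |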
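
The parity-bit extension is easy to sketch in Python. The 7-bit words below are the codewords from the table with the last bit dropped; `extend` and `weight` are hypothetical helper names.

```python
HAMMING_7_4 = [
    "0000000", "0001011", "0010111", "0100101",
    "1000110", "1100011", "1010001", "1001101",
    "0110010", "0101110", "0011100", "1110100",
    "1101000", "1011010", "0111001", "1111111",
]


def extend(word: str) -> str:
    """Append one bit so that the total number of 1s is even."""
    return word + str(word.count("1") % 2)


def weight(code) -> int:
    """Fewest 1s in any nonzero codeword (the weight of a linear code)."""
    return min(w.count("1") for w in code if "1" in w)


extended = [extend(w) for w in HAMMING_7_4]
print(extend("1000110"))      # 10001101
print(weight(HAMMING_7_4))    # 3
print(weight(extended))       # 4
```

Every weight-3 codeword gains a 1 from the parity bit, which is why the weight rises from 3 to 4.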

Summary

For a binary linear code of weight t, we

can choose to either detect errors or to

correct errors, but cannot do both.

Summary

Error-detection capability:

The code is guaranteed to detect the

presence of errors provided the total

number of errors is strictly fewer than t

(i.e., t −1 or fewer), but cannot tell how

many errors occur.

Summary

Error-correction capability:

NND is guaranteed to recover the

correct codeword if there are strictly

fewer than t/2 errors, so

(t − 1)/2 or fewer for odd t

(t − 2)/2 or fewer for even t.

Example

Consider the following binary linear code C:

Messages consist of 3 data bits.

Codewords are 7 bits obtained by

appending 4 parity bits:

• c1 = (a1 + a2) mod 2

• c2 = (a2 + a3) mod 2

• c3 = (a1 + a3) mod 2

• c4 = (a1 + a2 + a3) mod 2

Example

How many code words are there in C?

(2^3 = 8)

C is what percent of all 7-bit binary

numbers?

((2^3/2^7) × 100 = 6.25%)

List the code words in C.

0000000, 0010111, 0101101, 0111010,

1001011, 1011100, 1100110, 1110001

Example

What is the weight of C?

(t = 4)
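
The answers in this example can be checked with a brief Python sketch. It assumes each codeword is the data bits a1 a2 a3 followed by c1 c2 c3 c4, matching the listed code words; the function name `encode` is illustrative.

```python
from itertools import product


def encode(a1: int, a2: int, a3: int) -> str:
    """Append the four parity bits to the three data bits."""
    c1 = (a1 + a2) % 2
    c2 = (a2 + a3) % 2
    c3 = (a1 + a3) % 2
    c4 = (a1 + a2 + a3) % 2
    return f"{a1}{a2}{a3}{c1}{c2}{c3}{c4}"


code = [encode(*bits) for bits in product((0, 1), repeat=3)]
print(code)
# ['0000000', '0010111', '0101101', '0111010',
#  '1001011', '1011100', '1100110', '1110001']

print(len(code))                        # 8
print(100 * len(code) / 2 ** 7)         # 6.25

# Weight of a linear code: fewest 1s in any nonzero codeword.
print(min(w.count("1") for w in code if "1" in w))   # 4
```

With weight 4, C can detect up to 3 errors or correct 1.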
