Research Methodology: An Introduction


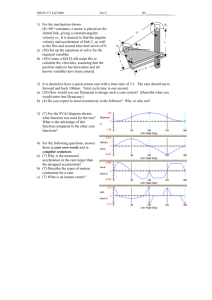

Research Methodology, Lecture #3

Roadmap
- Understand what research is
- Define the research problem
- How to solve it?
  - Research methods
  - Experiment design
- How to write and publish an IT paper?

How to solve the problem?
- Understanding the problem: distinguishing the unknown, the data and the condition
- Devising a plan: connecting the data to the unknown, finding related problems, relying on previous findings
- Carrying out the plan: validating each step and, if possible, proving correctness
- Looking back: checking the results, contemplating alternative solutions, exploring further potential of the result/method

Research Methods vs. Methodology
Research methods are the methods by which you conduct research into a subject or a topic. They involve conducting experiments, tests, surveys, and so on.
Research methodology is the way in which research problems are solved systematically. It is the science of studying how research is conducted scientifically, and it involves learning the various techniques that can be used in the conduct of research and in the conduct of tests, experiments, surveys and critical studies.
http://www.differencebetween.com/difference-between-research-methods-and-vs-researchmethodology/

Research Approaches
- Quantitative approach: uses experimental, inferential and simulation approaches to research
- Qualitative approach: uses techniques such as in-depth interviews and focus group interviews
Shashikant S Kulkarni, Research Methodology: An Introduction

Types of Research in General
Descriptive, analytical, applied, fundamental, quantitative, qualitative, conceptual, empirical, and other types.

Descriptive vs. Analytical
In descriptive research, the researcher only has to report what is happening or what has happened.
In analytical research, the researcher has to use facts or information already available and analyse them to make a critical evaluation of the subject.

Applied vs. Fundamental
Applied research is an attempt to find a solution to an immediate problem encountered by a firm, an industry, a business organization, or society.
Fundamental research, gathering knowledge for knowledge’s sake, is also called ‘pure’ or ‘basic’ research.

Quantitative vs. Qualitative
Quantitative research involves the measurement of quantity or amount (e.g., economic and statistical methods).
Qualitative research is concerned with aspects related to or involving quality or kind (e.g., motivational research involving the behavioural sciences).

Conceptual vs. Empirical
Conceptual research is research related to some abstract idea or theory (e.g., philosophers and thinkers use it to develop new concepts).
Empirical research relies on observation or experience with hardly any regard for theory and system.

Other Types of Research
- One-time or longitudinal research (on the basis of time)
- Laboratory, field-setting or simulational research (on the basis of environment)
- Historical research

Research Method Classification in Computer Science
- Scientific: understanding nature
- Engineering: providing solutions
- Empirical: data-centric models
- Analytical: theoretical formalism
- Computing: hybrid of methods
From W.R. Adrion, Research Methodology in Software Engineering, ACM SE Notes, Jan. 1993

Scientist vs. Engineer
A scientist sees a phenomenon, asks “why?” and proceeds to research the answer to the question.
An engineer sees a practical problem and wants to know “how” to solve it and “how” to implement that solution, or “how” to do it better if a solution exists. A scientist builds in order to learn, but an engineer learns in order to build.

The Scientific Method
- Observe the real world
- Propose a model or theory of some real-world phenomena
- Measure and analyze the above
- Validate the hypotheses of the model or theory
- If possible, repeat

The Engineering Method
- Observe existing solutions
- Propose better solutions
- Build or develop the better solution
- Measure, analyze, and evaluate
- Repeat until no further improvements are possible

The Empirical Method
- Propose a model
- Develop a statistical or other basis for the model
- Apply to case studies
- Measure and analyze
- Validate and then repeat

The Analytical Method
- Propose a formal theory or set of axioms
- Develop a theory
- Derive results
- If possible, compare with empirical observations
- Refine the theory if necessary

The Computing Method
[diagram not reproduced]

Empirical Method Example (1)
Do algorithm animations assist learning? An empirical study and analysis
Algorithm animations are dynamic graphical illustrations of computer algorithms, and they are used as teaching aids to help explain how the algorithms work. Although many people believe that algorithm animations are useful this way, no empirical evidence has ever been presented supporting this belief. We have conducted an empirical study of a priority queue algorithm animation, and the study's results indicate that the animation only slightly assisted student understanding. In this article, we analyze those results and hypothesize why algorithm animations may not be as helpful as was initially hoped. We also develop guidelines for making algorithm animations more useful in the future.
Empirical Method Example (2)
An empirical study of FORTRAN programs
A sample of programs, written in FORTRAN by a wide variety of people for a wide variety of applications, was chosen ‘at random’ in an attempt to discover quantitatively ‘what programmers really do’. Statistical results of this survey are presented here, together with some of their apparent implications for future work in compiler design. The principal conclusion which may be drawn is the importance of a program ‘profile’, namely a table of frequency counts which record how often each statement is performed in a typical run; there are strong indications that profile-keeping should become a standard practice in all computer systems, for casual users as well as system programmers. This paper is the report of a three month study undertaken by the author and about a dozen students and representatives of the software industry during the summer of 1970. It is hoped that a reader who studies this report will obtain a fairly clear conception of how FORTRAN is being used, and what compilers can do about it.

Research Phases
- Informational: gathering information through reflection, literature, and surveys of people
- Propositional: proposing/formulating a hypothesis, method, algorithm, theory or solution
- Analytical: analyzing and exploring the proposition, leading to a formulation, principle or theory
- Evaluative: evaluating the proposal
R.L. Glass, A structure-based critique of contemporary computing research, Journal of Systems and Software, Jan. 1995

Method-Phase Matrix
- Scientific. Informational: observe the world. Propositional: propose a model or theory of behavior. Analytical: measure and analyze. Evaluative: validate hypotheses of the model or theory; if possible, repeat.
- Engineering. Informational: observe existing solutions. Propositional: propose better solutions; build or develop. Analytical: measure and analyze. Evaluative: measure and analyze; repeat until no further improvements are possible.
- Empirical. Propositional: propose a model; develop statistical or other methods. Analytical: apply to case studies; measure and analyze. Evaluative: measure and analyze; validate the model; repeat.
- Analytical. Propositional: propose a formal theory or set of axioms. Analytical: develop a theory; derive results. Evaluative: derive results; compare with empirical observations if possible.

Experimenting: Experiment Design

Scientific method in one minute
1. Use experience and observations to gain insight about a phenomenon
2. Construct a hypothesis
3. Use the hypothesis to predict outcomes
4. Test the hypothesis by experimenting
5. Analyze the outcome of the experiment
6. Go back to step 1
http://www.cl.cam.ac.uk/teaching/0910/C00/L10/

Typical computer science scenario
- A particular task needs to be solved by a software system
- This task is currently solved by an existing system (a baseline)
- You propose a new system that, in your opinion, is better
- You argue why your proposed system is better than the baseline
- You support your arguments by providing evidence that your system is better than the baseline

Running example in this lecture
Text entry on a Tablet PC:
A. Handwriting recognition
B. Software keyboard

Why experiments?
- Substantiate claims: a research paper needs to provide evidence to convince other researchers of the paper’s main points
- Strengthen or falsify hypotheses: “My system/technique/algorithm is [in some aspect] better than previously published systems/techniques/algorithms”
- Evaluate and improve/revise/reject models: “The published model predicts users will type at 80 wpm on average after 40 minutes of practice with a thumb keyboard. In our experiment no one surpassed 25 wpm after several hours of practice.”
- Gain further insights, stimulate thinking and creativity

Experiments in Computer Science
[figures not reproduced]

Experiment example
[figure not reproduced]
http://win.ua.ac.be/~sdemey/Tutorial_ResearchMethods/

Research Method: Feasibility Study
Metaphor: Christopher Columbus and the western route to India
1. Is it possible to solve a specific kind of problem effectively?
   - computer science perspective (Turing test, …)
   - engineering perspective (build efficiently; fast, small)
   - economic perspective (cost-effective; profitable)
2. Is the technique new / novel / innovative?
   - compare against alternatives
   - see literature survey; comparative study
3. Proof by construction
   - build a prototype
   - often by applying it on a “CASE”
4. Conclusions
   - primarily qualitative: “lessons learned”
   - quantitative: economic perspective (cost-benefit); engineering perspective (speed, memory footprint)

Feasibility Study Example: A feasibility study for power management in LAN switches
We examine the feasibility of introducing power management schemes in network devices in the LAN. Specifically, we investigate the possibility of putting various components on LAN switches to sleep during periods of low traffic activity. Traffic collected in our LAN indicates that there are significant periods of inactivity on specific switch interfaces.
Using an abstract sleep model devised for LAN switches, we examine the potential energy savings possible for different times of day and different interfaces (e.g., interfaces connecting hosts to switches, interfaces connecting switches, or interfaces connecting switches and routers). Algorithms developed for sleeping, based on periodic protocol behavior as well as traffic estimation, are shown to be capable of conserving significant amounts of energy. Our results show that sleeping is indeed feasible in the LAN and, in some cases, has very little impact on other protocols. However, we note that in order to maximize energy savings while minimizing sleep-related losses, we need hardware that supports sleeping.
http://ieeexplore.ieee.org/xpls/abs_all.jsp?arnumber=1348125&tag=1

Pilot Case
Metaphor: Portugal (Amerigo Vespucci) explores the western route
Here is an idea that has proven valuable; does it work for us?
- Proven valuable:
  - accepted merits (e.g., “lessons learned” from a feasibility study)
  - there is some (implicit) theory explaining why the idea has merit
- Does it work for us: context is very important
- Demonstrated on a simple yet representative “CASE”
- A “pilot case” is not the same as a “pilot study”
- Proof by construction: build a prototype and apply it on a “case”

Pilot Case Example: Code quality analysis in open source software development
Abstract: Proponents of open source style software development claim that better software is produced using this model compared with the traditional closed model. However, there is little empirical evidence in support of these claims. In this paper, we present the results of a pilot case study aiming: (a) to understand the implications of structural quality; and (b) to figure out the benefits of structural quality analysis of the code delivered by open source style development.
To this end, we have measured quality characteristics of 100 applications written for Linux, using a software measurement tool, and compared the results with the industrial standard that is proposed by the tool. Another target of this case study was to investigate the issue of modularity in open source, as this characteristic is considered crucial by the proponents of open source for this type of software development. We have empirically assessed the relationship between the size of the application components and the delivered quality measured through user satisfaction. We have determined that, up to a certain extent, the average component size of an application is negatively related to the user satisfaction for this application.
http://citeseerx.ist.psu.edu/viewdoc/summary?doi=10.1.1.102.7392

Comparative Study
Here are two techniques: which one is better for a given purpose?
- Where are the differences? What are the tradeoffs?
- Criteria checklist, both qualitative and quantitative:
  - qualitative: how to remain unbiased?
  - quantitative: do the measures represent what you want to know?
- Often by applying the technique on a “CASE”
- Comparison typically in the form of a table

Comparative Study Example: A comparative study of fuzzy rough sets
[abstract not reproduced]
http://www.sciencedirect.com/science/article/pii/S016501140100032X

Observational Study
Understand phenomena through observations
Metaphor: Dian Fossey, “Gorillas in the Mist”
- Systematic collection of data derived from direct observation of everyday life
- Phenomena are best understood in the fullest possible context
- Observation and participation
- Interviews and questionnaires

Observational Study Example: Action Research
Action research is carried out by people who usually recognize a problem or limitation in their workplace situation and, together, devise a plan to counteract the problem, implement the plan, observe what happens, reflect on these outcomes, revise the plan, implement it, reflect, revise and so on.
Conclusions are primarily qualitative: classifications, observations, …

Literature Survey
What is known? What questions are still open?
Systematic and comprehensive:
- a precise research question is a prerequisite
- a defined search strategy (rigor, completeness, replication)
- a clearly defined scope
- criteria for inclusion and exclusion
- specify the information to be obtained
- the “CASES” are the selected papers

Formal Model
How can we understand/explain the world?
- Make a mathematical abstraction of a certain problem: analytical model, stochastic model, logical model, rewrite system, …
- Prove some important characteristics
Example: A Formal Model of Crash Recovery in a Distributed System
Abstract: A formal model for atomic commit protocols for a distributed database system is introduced. The model is used to prove existence results about resilient protocols for site failures that do not partition the network and then for partitioned networks.
For site failures, a pessimistic recovery technique, called independent recovery, is introduced and the class of failures for which resilient protocols exist is identified. For partitioned networks, two cases are studied: the pessimistic case in which messages are lost, and the optimistic case in which no messages are lost. In all cases, fundamental limitations on the resiliency of protocols are derived.

Simulation
What would happen if …?
- Study the circumstances of phenomena in detail
- Simulate because the real world is too expensive, too slow or impossible to study directly
- Make prognoses about what can happen in certain situations
- Test using real observations, typically obtained via a “CASE”
Examples:
- distributed systems (grid) and network protocols: too expensive or too slow to test in real life
- embedded systems: simulating hardware platforms (impossible to observe at real clock speed)

Back to our example
Why this experiment?
- Despite decades of research there is no empirical data on the text entry performance of handwriting recognition
- An inappropriate study of handwriting (without recognition) from 1967 keeps getting cited in the literature, often through secondary or tertiary sources (handbooks, etc.)
- Based on these numerous citations in research papers, handwriting recognition is perceived to be rather slow
- However, there is no empirical evidence that supports this claim

Controlled experiments and hypotheses
- A controlled experiment tests the validity of one or more hypotheses
- Here we will consider the simplest case: one method vs. another method
- Each method is referred to as a condition
- The null hypothesis H0 states that there is no difference between the conditions
- Our hypothesis H1 states that there is a difference between the conditions
- To show a statistically significant difference, the null hypothesis H0 needs to be rejected

Choice of baseline
- A baseline needs to be accepted by your readers as a suitable baseline
- Preferably the baseline is the best method that is currently available
- In practice a baseline is often a standard method that is well understood but often not representative of the state of the art

Example
Our example has two conditions: the software keyboard and handwriting recognition.

Why this baseline?
- The software keyboard is well understood:
  - many empirical studies of its performance exist
  - expert computational performance models also exist
- The software keyboard is the de facto standard text entry method on tablets
- The literature compares the text entry performance of handwriting recognition against measures of the software keyboard

Aim of a controlled experiment
- To measure the effects of the different conditions
- To control for all other confounding factors
- To be internally valid
- To be externally valid
- To be reproducible

Experimental design
- Dependent and independent variables
- Within-subjects vs. between-subjects
- Mixed designs
- Single session vs. longitudinal experiments

Dependent and independent variables
- Dependent variable: what is measured; typical examples in CS: time, accuracy, memory usage
- Independent variable: what is manipulated; typical examples in CS: the system used by participants, feedback to the participant (e.g., a beep versus a visual flash)

Deciding what to manipulate and what to measure
This is a key issue in research, and it boils down to your hypothesis:
- What do you believe?
- How can you substantiate your claim by making measurements?
- What can you measure?
- Is it possible to protect internal validity without sacrificing external validity?

Our example
We let participants write phrases using either:
- a software keyboard (baseline), or
- handwriting recognition.
That is, we manipulate the input method. We measure:
- entry rate in words per minute
- error rate in number of written characters that do not match the stimulus

Between-subjects design
Each participant is exposed to only one condition. For example, in a study examining the effect of Bayer aspirin vs. Tylenol on headaches, we can have two groups (those getting Bayer and those getting Tylenol). Participants get either Bayer or Tylenol, but not both.
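In a between-subjects design like the two-group example above, the question is whether the observed difference between the groups is large enough to reject H0 (no difference between the conditions). The lecture does not prescribe a statistical test; as a minimal sketch, this uses an exact two-sample permutation test on the difference in group means, written with the Python standard library only. The function name `permutation_test` and the scores below are hypothetical, for illustration only.

```python
from itertools import combinations
from statistics import mean


def permutation_test(group_a, group_b):
    """Exact two-sided permutation test for a difference in means.

    Under H0 the condition labels are exchangeable, so every way of
    splitting the pooled scores into groups of the original sizes is
    equally likely. The p-value is the fraction of splits whose absolute
    mean difference is at least as large as the observed one.
    """
    pooled = list(group_a) + list(group_b)
    n_a = len(group_a)
    observed = abs(mean(group_a) - mean(group_b))
    extreme = 0
    total = 0
    for chosen in combinations(range(len(pooled)), n_a):
        chosen_set = set(chosen)
        a = [pooled[i] for i in chosen_set]
        b = [pooled[i] for i in range(len(pooled)) if i not in chosen_set]
        total += 1
        # Small tolerance so float rounding does not drop ties.
        if abs(mean(a) - mean(b)) >= observed - 1e-12:
            extreme += 1
    return observed, extreme / total


# Hypothetical scores (not real data): each participant is in one group only.
condition_a = [6, 7, 5, 8, 7, 6]
condition_b = [4, 5, 3, 5, 4, 6]

diff, p = permutation_test(condition_a, condition_b)
print(f"mean difference = {diff:.2f}, exact p = {p:.4f}")
```

With six participants per group there are only C(12, 6) = 924 ways to relabel the pooled scores, so exact enumeration is cheap; for larger samples one would typically draw a random subset of relabellings instead.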
- One of the simplest experimental designs
- Advantages: no risk of confusion or skill transfer from one condition to the other; therefore no need for counterbalancing or for checking asymmetrical skill-transfer effects
- Disadvantages: variance is not controlled within the participant; therefore it demands more participants than a within-subjects design to show a statistically significant difference

Within-subjects design
- Each participant is exposed to all conditions
- One of the most common experimental designs in practice
- Advantages: variance is controlled within the participant; therefore it requires fewer participants than a between-subjects design
- Disadvantages: more involved; requires counterbalancing of the starting condition to avoid transfer effects; risk of asymmetrical skill transfer

Mixed designs
- It is also possible to combine within- and between-subjects experimental designs; such designs are called mixed designs
- They are difficult to design because they are more difficult to control
- A mixed design can be a symptom of having no clear set of hypotheses, or of an inability to prioritise among them
- Often a mixed design can be broken down into smaller studies that examine isolated phenomena separately

Single session vs. longitudinal
- Do you believe participants will improve significantly over time?
- If so, how much will they improve?
- How are previous related studies in the literature set up?

Method
- Participants
- Apparatus
- Procedure

Participants
- How many?
- How is the sample constructed? Is it representative of the population we believe will use the interface?
- Are potentially problematic confounds taken care of?
- Did participants receive any compensation?
- Was the study approved by the university ethics committee? [if applicable]

Our example
We recruited 12 volunteers from the university campus. We intentionally wanted a rather broad sample and recruited participants from many different departments with many different backgrounds. Six were men and six were women. Their ages ranged between 22 and 37 (mean = 27, sd = 4). Participants were screened for dyslexia and repetitive strain injury (RSI). Seven participants were native English speakers and five had English as their second language. No participant had used a handwriting recognition interface before. One participant had used a software keyboard before, but no participant had used one regularly. Participants were compensated £10 per session.

Apparatus
- Which equipment and which software?
- Needs to be described in sufficient detail to enable other researchers to replicate your experiment
- Typical information: physical and logical screen size, sensor device characteristics, CPU clock speed, computer brand/model
- Choices that are not obvious need to be motivated

Apparatus, our example
We used a Dell Latitude XT Tablet PC running Windows Vista Service Pack 1. The 12.1" color touch-screen had a resolution of 1280 × 800 pixels and a physical screen size of 261 × 163 mm. Participants used a capacitance-based pen to write directly onto the screen in both conditions. Both the handwriting recognizer and the software keyboard were docked to the lower part of the screen. The software keyboard measured 1266 × 244 pixels (257 × 50 mm), and the handwriting recognizer's writing area measured 1266 × 264 pixels (257 × 55 mm).
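Reporting both pixel and millimetre dimensions lets readers derive pixel density, which is what they need to reproduce on-screen sizes on different hardware. A minimal sketch that cross-checks the numbers reported above; the helper names `px_per_mm` and `diagonal_inches` are illustrative, not from the original study.

```python
import math

MM_PER_INCH = 25.4

# Dimensions as reported in the apparatus description (width, height).
screen_px = (1280, 800)
screen_mm = (261, 163)
keyboard_px = (1266, 244)
keyboard_mm = (257, 50)


def px_per_mm(px, mm):
    """Horizontal and vertical pixel density in pixels per millimetre."""
    return px[0] / mm[0], px[1] / mm[1]


def diagonal_inches(mm):
    """Physical diagonal of a rectangle given in millimetres, in inches."""
    return math.hypot(mm[0], mm[1]) / MM_PER_INCH


h, v = px_per_mm(screen_px, screen_mm)
print(f"screen: {h:.2f} x {v:.2f} px/mm (about {h * MM_PER_INCH:.0f} dpi)")
print(f"screen diagonal: {diagonal_inches(screen_mm):.1f} in")
kh, kv = px_per_mm(keyboard_px, keyboard_mm)
print(f"keyboard: {kh:.2f} x {kv:.2f} px/mm")
```

Both the screen and the keyboard come out at roughly 4.9 px/mm (about 125 dpi), and the computed diagonal of about 12.1 inches agrees with the stated screen size, so the reported figures are consistent within rounding.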
Procedure
- Describes how the experiment was carried out
- Needs to be described in sufficient detail for other researchers to be able to replicate your experiment
- Again, choices need to be motivated

Procedure, our example
The experiment consisted of one introductory session and ten testing sessions. In the introductory session the experimental procedure was explained to the participants. Participants were shown how to use the software keyboard and the handwriting recognizer, including demonstrations of how to correct errors. Each testing session lasted slightly less than one hour. Testing sessions were spaced at least 4 hours apart, and consecutive testing sessions were at most two days apart. In each testing session participants did both conditions (software keyboard and handwriting recognition). The order of the conditions alternated between sessions and the starting condition was balanced across participants. Each condition lasted 25 minutes, with a brief break between conditions. Participants were also instructed that they could rest at any time after completing an individual phrase.

Procedure, our example (continued)
In each condition participants were shown a phrase drawn from the phrase set provided by MacKenzie and Soukoreff [8]. Each participant had their own randomized copy of the phrase set. Participants were instructed to quickly and accurately write the presented phrase using either the software keyboard or the handwriting recognizer, and to correct any mistakes they spotted in their text. In the handwriting condition we instructed participants to write using their preferred style of handwriting (e.g., printed, cursive or a mixture of both). After they had written the phrase they pressed a Submit button and the next phrase was displayed.
The Submit button was a rectangular button measuring 248 × 16 mm. It was placed 9 mm above the keyboard and handwriting recognizer writing area.

After the experiment
- Results
- Limitations and implications

Our example
[results figure not reproduced]

Summary
- A well-designed controlled experiment provides empirical evidence that your new method is better [in some aspects] than a previous method in the literature (a baseline)
- It is important to consider the experimental design early:
  - within vs. between
  - dependent and independent variables
  - internal and external validity
- A pilot study is often a good idea (perhaps your method has a fatal flaw)
- It is important to point out limitations and implications
- Experiments must be reproducible
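For the running example, the two dependent variables (entry rate in words per minute and error rate) are computed for each submitted phrase. The lecture does not give formulas, so this is a sketch assuming conventions common in text-entry research: entry rate as (|T| − 1) / S × 60 / 5, where T is the transcribed text, S the time in seconds from the first to the last input, and a "word" is five characters; and error rate as the minimum string distance (Levenshtein distance) between the presented and transcribed phrases, as a percentage of the longer string. The function names are hypothetical.

```python
def words_per_minute(transcribed: str, seconds: float) -> float:
    """Entry rate, counting five characters as one word.

    One character is subtracted because timing starts at the first
    character, so entering the first character takes no measured time.
    """
    if seconds <= 0:
        raise ValueError("seconds must be positive")
    return (len(transcribed) - 1) / seconds * 60 / 5


def min_string_distance(a: str, b: str) -> int:
    """Levenshtein distance: fewest insertions, deletions, substitutions."""
    prev = list(range(len(b) + 1))
    for i, ca in enumerate(a, start=1):
        curr = [i]
        for j, cb in enumerate(b, start=1):
            curr.append(min(prev[j] + 1,               # delete ca
                            curr[j - 1] + 1,           # insert cb
                            prev[j - 1] + (ca != cb)))  # substitute or match
        prev = curr
    return prev[-1]


def error_rate(presented: str, transcribed: str) -> float:
    """Minimum-string-distance error rate in percent of the longer string."""
    longest = max(len(presented), len(transcribed))
    if longest == 0:
        return 0.0
    return 100 * min_string_distance(presented, transcribed) / longest


# Hypothetical phrase and timing, for illustration only.
presented = "the quick brown fox"
transcribed = "the quick brwn fox"
print(words_per_minute(transcribed, 10.0))
print(round(error_rate(presented, transcribed), 2))
```

Per-phrase values like these would then be averaged per participant and condition before comparing the two conditions.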