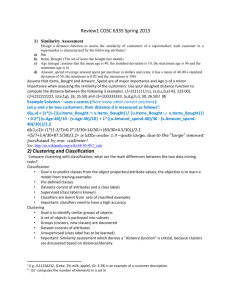

2014 Midterm Exam with Solution Sketches

COSC 6335 Data Mining (Dr. Eick)

Solution Sketches

Midterm Exam

November 6, 2014

Your Name:

Your Student id:

Problem 1 --- K-Means/PAM [18]

Problem 2 --- DBSCAN [9]:

Problem 3 --- Similarity Assessment [11]

Problem 4 --- R-Programming [11]

Problem 5 --- Decision/Regression Trees [9]

Problem 6 --- APRIORI [7]:

Problem 7 --- Exploratory Data Analysis [5]:

Grade:

The exam is “open books” and you have 85 minutes to complete the exam. The exam is slightly too long and you are expected to solve 90% of the problems in the exam. The exam will count approx. 26% towards the course grade.

1) K-Means and K-Medoids/PAM [18]

a) K-means is a very efficient clustering algorithm; why is this the case, i.e. which of K-means’ properties contribute to its efficiency? [3]

The loop—which assigns objects to clusters and then recomputes centroids until

there is no more change—only takes a few iterations and the complexity of each

iteration is only O(k*n), where k is the number of clusters and n is the number

of objects in the dataset. [2]

K-means minimizes the mean-squared objective function without ever

computing the mean-squared error (implicit objective function)[1]
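The loop described above can be sketched in a few lines of R. This is only a minimal illustration, not the implementation behind R's kmeans(): the function name simple_kmeans and the toy data are assumptions made for this example, and the initial centroids are passed in explicitly. Each iteration computes n*k squared distances, and the SSE itself is never evaluated.

```r
# Minimal K-means loop (illustrative sketch; simple_kmeans is a hypothetical name).
# X: numeric matrix with n rows; centroids: numeric matrix with k rows.
simple_kmeans <- function(X, centroids, max_iter = 100) {
  n <- nrow(X)
  k <- nrow(centroids)
  assignment <- integer(n)   # 0 means "not yet assigned"
  iterations <- 0
  for (iter in seq_len(max_iter)) {
    iterations <- iter
    # Assignment step: n * k squared Euclidean distances
    d2 <- matrix(sapply(seq_len(k), function(j) {
      rowSums(sweep(X, 2, centroids[j, ])^2)
    }), nrow = n)
    new_assignment <- max.col(-d2, ties.method = "first")  # nearest centroid
    if (all(new_assignment == assignment)) break           # no more change
    assignment <- new_assignment
    # Update step: each centroid becomes the mean of its objects
    for (j in seq_len(k)) {
      members <- X[assignment == j, , drop = FALSE]
      if (nrow(members) > 0) centroids[j, ] <- colMeans(members)
    }
  }
  list(cluster = assignment, centers = centroids, iterations = iterations)
}

# Toy data: two well-separated groups, first and third object as initial centroids
X <- rbind(c(0, 0), c(0, 1), c(10, 10), c(10, 11))
res <- simple_kmeans(X, X[c(1, 3), ])
res$cluster     # 1 1 2 2
res$iterations  # 2 (the second pass only confirms that nothing changed)
```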

b) Assuming a Euclidean distance function is used, the shapes of clusters K-means can discover are limited to convex polygons; what does this mean? What are the implications of this statement? [3]

K-means cannot discover clusters whose shape cannot be represented as a convex polygon, such as clusters that have the shapes of the letters ‘T’, ‘V’ or ‘O’. Consequently, such clusters cannot be found directly with K-means and the best we can do is to approximate those clusters using unions of K-means clusters.

c) However, in the “Top 10 Data Mining Algorithms” article, the authors say: “So, K-means will falter whenever there are non-convex shaped clusters in the data. This problem may be alleviated by rescaling the data to “whiten” it before clustering, or by using a different distance measure that is more appropriate for the dataset”. Explain what the authors have in mind to enable K-means to find non-convex clusters.¹ [3]

The authors suggest alleviating the problem by modifying the distance function. This can be done in two ways: either by transforming the dataset using a scaling function (or a kernel function) and then applying the original distance function (e.g. Euclidean distance) to the transformed data, which indirectly changes the distance measure, or by directly changing the distance measure. The key idea is that convex-shaped clusters in the modified distance space frequently correspond to non-convex clusters in the original space, enabling K-means to find non-convex-shaped clusters.

d) K-means has difficulty clustering datasets that contain categorical attributes. What is this difficulty? [2]

Categorical attributes have no ordering; consequently, it is difficult to define the mean (or even the median) of a set of values of a categorical attribute; e.g. what is the mean of {yellow, blue, blue, red, red}?

¹ Hint: Recall the approach we used in Task7 of Project2 to enable K-means to find different clusters!

e) Assume we cluster a dataset with 5 objects with PAM/K-medoids for k=2 and this is the distance matrix for the 5 objects (e.g. the entry 4 in the distance matrix indicates that the distance between objects 1 and 3 is 4):

      1  2  3  4  5
  1   0  2  4  5  6
  2      0  2  3  3
  3         0  5  5
  4            0  2
  5               0

PAM’s initial set of representatives is R={1,5}. What is the initial clustering PAM computes from R and what is its fitness (squared error)? [2]

Initial clustering: {1,2,3} and {4,5}

Fitness/Sum of Squared Distances from Cluster Objects to their Representative:

2^2 + 4^2 + 2^2 = 24

What computations does PAM perform in the next iteration? [2]

Each representative is, in turn, replaced with each non-representative object while the other representatives are kept in place, and the SSE of the resulting clustering is computed. The clustering with the lowest SSE is chosen in the next iteration.

What is the next clustering PAM obtains and what is its fitness? [3]

Representative Set    SSE
{2,5}                 2^2 + 2^2 + 2^2 = 12
{3,5}                 4^2 + 2^2 + 2^2 = 24
{4,5}                 5^2 + 3^2 + 5^2 = 59
{1,2}                 2^2 + 3^2 + 3^2 = 22
{1,3}                 2^2 + 5^2 + 5^2 = 54
{1,4}                 2^2 + 4^2 + 2^2 = 24

Next clustering: {1,2,3} and {4,5}. Representatives are {2,5}

Fitness: 2^2 + 2^2 + 2^2 = 12
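The swap evaluation above can be reproduced with a short R sketch. It is not a full PAM implementation: pam_sse is a hypothetical helper name, D is the distance matrix from the question entered as a full symmetric matrix, and only a single swap iteration is evaluated.

```r
# Distance matrix from the question, written out symmetrically
D <- rbind(c(0, 2, 4, 5, 6),
           c(2, 0, 2, 3, 3),
           c(4, 2, 0, 5, 5),
           c(5, 3, 5, 0, 2),
           c(6, 3, 5, 2, 0))

# SSE of the clustering induced by a set of representatives:
# every object contributes its squared distance to the nearest representative
pam_sse <- function(D, reps) {
  nearest <- apply(D[, reps, drop = FALSE], 1, min)
  sum(nearest^2)
}

current <- c(1, 5)
pam_sse(D, current)   # 24

# One PAM iteration: try every (representative, non-representative) swap
candidates <- list()
for (r in current) {
  for (o in setdiff(1:5, current)) {
    candidates[[length(candidates) + 1]] <- sort(c(setdiff(current, r), o))
  }
}
sse <- sapply(candidates, function(reps) pam_sse(D, reps))
sse                                    # 12 24 59 22 54 24
best <- candidates[[which.min(sse)]]
best                                   # 2 5
```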

2) DBSCAN [9]

a) Assume you run DBSCAN with MinPoints=6 and epsilon=0.1 for a dataset and you obtain 4 clusters, and 20% of the objects in the dataset are classified as outliers. Now you run DBSCAN with MinPoints=5 and epsilon=0.1. How do you expect the clustering results to change? [4]

Fewer outliers [1]; clusters may increase in size [1]; some clusters may merge to form larger clusters [1]; more core points and border points [1].

b) What is a noise point/outlier for DBSCAN? [2]

A noise point is any point that is not a core point [1] and does not lie within the epsilon radius of a core point [1].
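The definition above can be illustrated with a small R sketch that labels each point as core, border or noise. It does not form clusters and is not the fpc implementation: classify_points is a hypothetical helper, and it assumes that a point counts as a member of its own epsilon-neighborhood.

```r
# Label points as "core", "border" or "noise" (illustrative sketch only).
# Assumption: the eps-neighborhood of a point includes the point itself.
classify_points <- function(X, eps, min_pts) {
  D <- as.matrix(dist(X))                 # pairwise Euclidean distances
  neighbors <- D <= eps
  core <- rowSums(neighbors) >= min_pts   # enough points within eps
  # Non-core points with at least one core point within eps are border points
  near_core <- rowSums(neighbors[, core, drop = FALSE]) > 0
  unname(ifelse(core, "core", ifelse(near_core, "border", "noise")))
}

# Toy 1-D dataset: a chain of four points plus one isolated point
X <- matrix(c(0, 0.1, 0.2, 0.3, 5), ncol = 1)
labels <- classify_points(X, eps = 0.15, min_pts = 3)
labels   # "border" "core" "core" "border" "noise"
```

Lowering min_pts to 2 in this toy example turns the two border points into core points, while the isolated point remains noise.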

c) Assume you run DBSCAN in R and you obtain the following clustering results for a

dataset containing 180 objects.

          0    1
border   90    2
seed      0   88
total    90   90

What does the displayed result mean with respect to number of clusters, outliers, border

points and core points?[3]

There is 1 cluster with 88 core points and 2 border points. There are 90 outliers.

3) Similarity Assessment [11]

Design a distance function to assess the similarity of customers of a supermarket; each customer in a supermarket is characterized by the following attributes²:

a) Ssn

b) Items_Bought (the set of items the customer bought last month)

c) Amount_spend (average amount spent per purchase in dollars and cents; it has a mean of 50.00 and a standard deviation of 40, the minimum is 0.05 and the maximum is 600)

d) Age (an ordinal attribute taking 5 values: child, young, medium, old, very_old)

Assume that Items_Bought and Amount_spend are of major importance and Age is of minor importance when assessing the similarity of the customers. [8]

MANY POSSIBLE ANSWERS!

One possible answer:

Ignore Ssn, as it is not relevant for assessing similarity.

Items_Bought: use the Jaccard distance 1 - Jaccard(u.Items_Bought, v.Items_Bought), with Jaccard(A,B) = |A∩B|/|A∪B|.

Amount_spend: normalize using the Z-score, (x - 50)/40, and find the distance using the L1 norm.

Age: map the 5 values to integers, child = 0, young = 1, medium = 2, old = 3, very_old = 4, and find the distance by taking the L1 norm and dividing by the range, i.e. 4.

Assign weights 0.4 to Items_Bought, 0.4 to Amount_spend and 0.2 to Age.

So the distance between two customers u and v is:

d(u,v) = 0.4*(1 - Jaccard(u.Items_Bought, v.Items_Bought)) + 0.4*|(u.Amount_spend - 50)/40 - (v.Amount_spend - 50)/40| + 0.2*|u.Age - v.Age|/4

Compute the distance of the following 2 customers

c1=(111111111, {A,B,C,D}, 40.00, ‘old’) and

c2=(222222222, {D,E,F}, 100.00, ‘young’)

with your distance function[3]:

d(c1,c2) = 0.4*(1 – 1/6) + 0.4*|(40-50)/40 – (100-50)/40| + 0.2*|3-1|/4

=0.33 + 0.6 + 0.1

=1.03
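The computation above can be checked with a short R sketch of this particular answer. The function name customer_distance, the list-based customer representation and the field names are assumptions made for this illustration; the weights, the standard deviation of 40 and the age range of 4 are taken from the solution sketch.

```r
# Ordinal encoding of Age: child = 0, ..., very_old = 4
age_levels <- c("child", "young", "medium", "old", "very_old")

# Weighted distance from the solution sketch (customer_distance is a hypothetical name).
# Note: two customers with empty item sets would give 0/0 for the Jaccard index.
customer_distance <- function(u, v, w = c(items = 0.4, amount = 0.4, age = 0.2)) {
  jaccard  <- length(intersect(u$items, v$items)) / length(union(u$items, v$items))
  d_items  <- 1 - jaccard
  d_amount <- abs(u$amount - v$amount) / 40   # equals the difference of the z-scores
  d_age    <- abs(match(u$age, age_levels) - match(v$age, age_levels)) / 4
  unname(w["items"] * d_items + w["amount"] * d_amount + w["age"] * d_age)
}

# Ssn is carried along but ignored by the distance function
c1 <- list(ssn = "111111111", items = c("A", "B", "C", "D"), amount = 40,  age = "old")
c2 <- list(ssn = "222222222", items = c("D", "E", "F"),      amount = 100, age = "young")
d12 <- customer_distance(c1, c2)
round(d12, 2)   # 1.03
```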

² E.g. (111234232, {Coke, 2%-milk, apple}, 42.42, ‘medium’) is an example of a customer description.

4) R-Programming [11]

Write a generic function highest_percentage(x,y,n,class) which will return the number of

the cluster with highest percentage of samples from class in clustering x, where n is the

highest cluster number in the clustering x. The actual classes of the samples are in y.

For reference, you can use the iris dataset.

     sepal length   sepal width   petal length   petal width   class
1    5.1            3.5           1.4            0.2           Setosa
2    4.4            2.9           1.4            0.2           Setosa
3    7.0            3.2           4.7            1.4           Versicolor
7    …              …             …              …             …

For example, if the function is called for a clustering result for the Iris Flower dataset

such that percentage of Setosa in Cluster 1 is 20%, percentage of Setosa in cluster 2 is

25% and percentage of Setosa in cluster 3 is 22%, a value of 2 will be assigned in the

code below to the variable Z:

Y<-kmeans(iris[1:4],3)

Z<-highest_percentage(Y$cluster,iris[,5],3,'setosa')

Your function should work for any dataset and any clustering result and should not

consider cluster 0 in case the clustering result supports outliers.

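highest_percentage <- function(x, y, n, class) {
  # Cluster 0 (outliers), if present, is the first row of the table and is skipped
  outliers <- 0 %in% x
  if (outliers) {   # decide start and end rows depending on outliers
    start <- 2
    end <- n + 1
  } else {
    start <- 1
    end <- n
  }
  t <- table(x, y)          # rows: clusters, columns: classes
  tot <- apply(t, 1, sum)   # number of objects in each cluster
  for (i in start:end) {
    t[i, ] <- t[i, ] * 100 / tot[i]   # class percentages within cluster i
  }
  # Position within rows start:end equals the cluster number
  return(which.max(t[start:end, class]))
}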

You can test this using the following:

km <- kmeans(iris[,1:4],3)

require(fpc)

dbs <- dbscan(iris[,1:4],eps=0.4,MinPts=5)

highest_percentage(km$cluster,iris[,5],3,'versicolor')

highest_percentage(dbs$cluster,iris[,5],4,'setosa')

If you are not aware of which.max() you can write a few lines of code to find the

index of maximum percentage.

5) Decision Trees/Regression Trees [9]

a) What are the characteristics of overfitting when learning decision trees? Assume you

observe overfitting, what could be done to learn a “better” decision tree? [5]

Training error is low [1] but testing error is high/not optimal [1]. The model is too

complex [1] and has probably learnt noise [1].

We can use pruning [1] – post-pruning [0.5] or pre-pruning [0.5]

b) What interestingness function does the regression tree induction algorithm minimize

and why do you believe this interestingness function was chosen? [4]

Variance or SSE [2]

If variance/SSE is low, prediction error is low and we get a regression tree which

better fits the data. [2]
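As a small illustration of this criterion, the following R sketch picks the split threshold of a single numeric attribute that minimizes the summed SSE of the two resulting nodes. It is not the algorithm of any particular package: sse and best_split are hypothetical helper names, the data are made up, and the attribute is assumed to have at least two distinct values.

```r
# SSE of a node that predicts the mean of its target values
sse <- function(y) sum((y - mean(y))^2)

# Try every midpoint between consecutive distinct x values and keep the
# threshold with the lowest total SSE of the two child nodes
best_split <- function(x, y) {
  xs <- sort(unique(x))
  thresholds <- (xs[-length(xs)] + xs[-1]) / 2
  total <- sapply(thresholds, function(t) sse(y[x <= t]) + sse(y[x > t]))
  list(threshold = thresholds[which.min(total)], sse = min(total))
}

x <- c(1, 2, 3, 10, 11, 12)
y <- c(5, 6, 7, 20, 21, 22)
sse(y)                # 341.5 before splitting
split <- best_split(x, y)
split$threshold       # 6.5
split$sse             # 4 after splitting
```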

6) APRIORI [7]

a) What is the APRIORI property? Where is it used in the APRIORI algorithm? [3]

Let i be an interestingness measure and X and Y be two itemsets; then X ⊆ Y ⇒ i(X) ≥ i(Y) [1]. This property is used when creating candidate (k+1)-itemsets from frequent k-itemsets [1] and for subset pruning of the candidate (k+1)-itemsets [1].

b) Assume the APRIORI algorithm identified the following five 4-itemsets that satisfy a user-given support threshold: abcd, abce, abcf, bcde, and bcdf; what initial candidate 5-itemsets are created by the APRIORI algorithm, and which of those survive subset pruning? [4]

Initial candidate 5-itemsets: abcde, abcdf, abcef, bcdef

abcde pruned as acde is not frequent

abcdf pruned as acdf is not frequent

abcef pruned as acef is not frequent

bcdef pruned as bdef is not frequent

Consequently, no candidate 5-itemset survives subset pruning.
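The join and subset-pruning steps for this example can be sketched in R as follows. The sketch assumes single-character items that are kept in alphabetical order inside each itemset, and k_subsets is a hypothetical helper name; it covers only candidate generation, not support counting.

```r
frequent <- c("abcd", "abce", "abcf", "bcde", "bcdf")   # frequent 4-itemsets
k <- 4

# Join step: combine two frequent k-itemsets that share their first k-1 items
candidates <- character(0)
for (i in seq_along(frequent)) {
  for (j in seq_along(frequent)) {
    a <- frequent[i]
    b <- frequent[j]
    if (i < j && substr(a, 1, k - 1) == substr(b, 1, k - 1)) {
      candidates <- c(candidates, paste0(a, substr(b, k, k)))
    }
  }
}
candidates   # "abcde" "abcdf" "abcef" "bcdef"

# All k-item subsets of a (k+1)-itemset, obtained by dropping one item at a time
k_subsets <- function(itemset) {
  items <- strsplit(itemset, "")[[1]]
  sapply(seq_along(items), function(d) paste(items[-d], collapse = ""))
}

# Prune step: a candidate survives only if all of its k-item subsets are frequent
survives <- sapply(candidates, function(cand) all(k_subsets(cand) %in% frequent))
survivors <- candidates[survives]
length(survivors)   # 0
```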

7) Exploratory Data Analysis [5]

a) What is the purpose of exploratory data analysis? [3]

create background knowledge about the task at hand [1], assess difficulty [1],

provide knowledge to help select appropriate tools for the task[1], assess quality of

data [1], validate data [1], help form hypothesis [1], find issues, patterns and errors

in data [1]

At most 3 points + 1 bonus

b) What does the box in a box plot describe? [2]

The box depicts the range from the 25th percentile to the 75th percentile of the distribution of the attribute; the horizontal line inside the box marks the median, i.e. the 50th percentile. The size of the box measures the ‘spread’ of the data. Using the word ‘variance’ is not appropriate here!
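For a concrete picture, the quantities that define the box can be computed directly in R. The data vector below is made up for this illustration; note that boxplot() draws the box from Tukey's hinges (as returned by fivenum()), which coincide with the quartiles in this example but may differ slightly for other sample sizes.

```r
x <- c(1, 2, 3, 4, 5, 6, 7, 8, 9)

q <- quantile(x, c(0.25, 0.5, 0.75))   # lower edge, median line, upper edge
q                                      # 3 5 7
IQR(x)                                 # 4, the height of the box
fivenum(x)                             # 1 3 5 7 9 (min, hinges, median, max)
```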
