
GroZi: a Grocery Shopping Assistant for the Blind

Carolina Galleguillos and Serge Belongie

Department of Computer Science and Engineering, UCSD

{cgallegu,sjb}@cs.ucsd.edu

Abstract

Grocery shopping is a common activity that people all over the world perform on a regular basis. Unfortunately, grocery stores and supermarkets are still largely inaccessible to people with visual impairments, as they are generally viewed as "high cost" customers.

We propose to develop a computer-vision-based grocery shopping assistant, built on a handheld device with haptic feedback, that can detect different products inside a store, thereby increasing the autonomy of blind (or low vision) people in grocery shopping.

Our solution uses new computer vision techniques for the visual recognition of specific products inside a store, as specified in advance on a shopping list. These techniques can draw on complementary resources such as RFID, barcode scanning, and sighted guides.

We also present a challenging new dataset of images covering different categories of grocery products, which can be used for object recognition studies.

Use of the System

Create a Shopping List

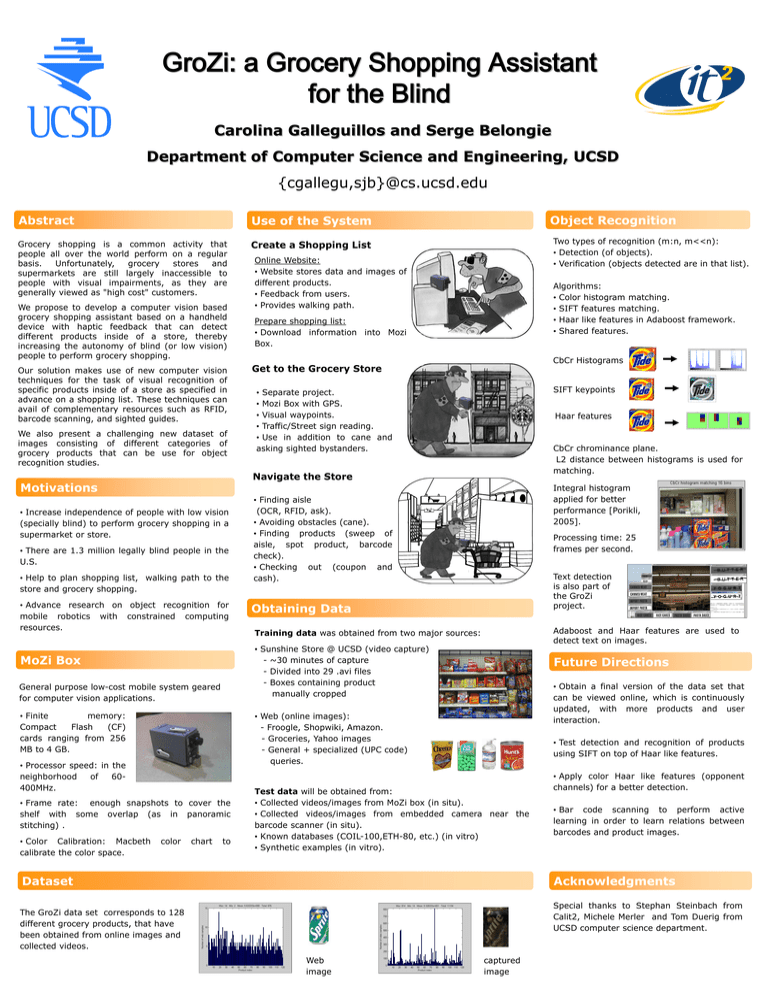

Two types of recognition (m:n, m ≪ n):

• Detection (of objects).

• Verification (checking that the detected objects are on the shopping list).
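Under our interpretation of the m:n notation (m items on the shopping list, n products in the store), the verification step only has to check detections against the m listed items rather than all n products. A minimal sketch under that assumption; the `Detection` record, the product labels and the 0.5 confidence threshold are hypothetical and not taken from GroZi:

```python
from dataclasses import dataclass


@dataclass
class Detection:
    label: str    # hypothetical product identifier emitted by some detector
    score: float  # detector confidence in [0, 1]


def verify(detections, shopping_list, threshold=0.5):
    """Return (items confirmed by a confident detection, items still missing).

    Detections of products that are not on the list are ignored, so only the
    m listed items are ever checked, whatever the number n of store products.
    """
    wanted = set(shopping_list)
    found = {d.label for d in detections
             if d.label in wanted and d.score >= threshold}
    missing = [item for item in shopping_list if item not in found]
    return sorted(found), missing
```

For example, with detections of "cheerios" (0.9), "coke" (0.8) and "milk" (0.3), verifying the list ["cheerios", "milk"] confirms "cheerios" and reports "milk" as still missing, because its score is below the threshold.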

Online Website:

• Stores data and images of different products.

• Collects feedback from users.

• Provides a walking path.

Algorithms:

• Color histogram matching.

• SIFT features matching.

• Haar-like features in an AdaBoost framework.

• Shared features.
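Haar-like features such as those listed above are usually evaluated with an integral image (summed-area table), which returns the sum of any rectangle in constant time; in an AdaBoost framework each weak learner thresholds a single such feature. The sketch below is a generic Viola-Jones-style illustration in pure Python. The function names and the single two-rectangle feature type are our assumptions, not the GroZi implementation:

```python
def integral_image(img):
    """Summed-area table with a zero border: ii[y][x] = sum of img[r][c] for r < y, c < x."""
    h, w = len(img), len(img[0])
    ii = [[0] * (w + 1) for _ in range(h + 1)]
    for y in range(h):
        row_sum = 0
        for x in range(w):
            row_sum += img[y][x]
            ii[y + 1][x + 1] = ii[y][x + 1] + row_sum
    return ii


def rect_sum(ii, x, y, w, h):
    """Sum of the w x h rectangle whose top-left pixel is (x, y), using four lookups."""
    return ii[y + h][x + w] - ii[y][x + w] - ii[y + h][x] + ii[y][x]


def haar_left_right(ii, x, y, w, h):
    """Two-rectangle Haar-like feature: left half minus right half (w should be even)."""
    half = w // 2
    return rect_sum(ii, x, y, half, h) - rect_sum(ii, x + half, y, half, h)


def stump(feature_value, threshold, polarity=1):
    """Decision-stump weak classifier of the kind AdaBoost combines into a strong one."""
    return 1 if polarity * feature_value < polarity * threshold else -1
```

AdaBoost would select many (feature, threshold, polarity) triples and weight their votes; only the per-feature evaluation is shown here.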

Prepare the shopping list:

• Download the information into the MoZi Box.

Get to the Grocery Store

• Separate project.

• MoZi Box with GPS.

• Visual waypoints.

• Traffic/street sign reading.

• Used in addition to a cane and to asking sighted bystanders.

[Figures: CbCr histograms; SIFT keypoints; Haar features]

Color histograms are computed in the CbCr chrominance plane, and the L2 distance between histograms is used for matching.
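A minimal sketch of CbCr histogram matching with the L2 distance, assuming RGB input pixels, the full-range JFIF (ITU-R BT.601) conversion to Cb and Cr, and an 8 × 8 bin grid; the poster does not specify the conversion, the bin count or the function names used here. Dropping the luma channel Y is what makes this a chrominance histogram, and it reduces sensitivity to overall brightness changes:

```python
import math


def rgb_to_cbcr(r, g, b):
    """Full-range (JFIF / BT.601) chroma components; luma Y is deliberately discarded."""
    cb = 128 - 0.168736 * r - 0.331264 * g + 0.5 * b
    cr = 128 + 0.5 * r - 0.418688 * g - 0.081312 * b
    return cb, cr


def cbcr_histogram(pixels, bins=8):
    """Normalized 2-D histogram over the CbCr plane, flattened to bins * bins values."""
    hist = [0.0] * (bins * bins)
    for r, g, b in pixels:
        cb, cr = rgb_to_cbcr(r, g, b)
        # Map [0, 256) onto bin indices; clamping guards against values just outside the range.
        i = min(bins - 1, max(0, int(cb * bins / 256)))
        j = min(bins - 1, max(0, int(cr * bins / 256)))
        hist[i * bins + j] += 1
    total = sum(hist)
    return [v / total for v in hist] if total else hist


def l2_distance(h1, h2):
    """Euclidean (L2) distance between two histograms of equal length."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(h1, h2)))


def best_match(query_pixels, references, bins=8):
    """Name of the reference product whose CbCr histogram is closest to the query in L2."""
    q = cbcr_histogram(query_pixels, bins)
    return min(references,
               key=lambda name: l2_distance(q, cbcr_histogram(references[name], bins)))
```

Here `references` maps a (hypothetical) product name to a list of its RGB pixels; in practice the reference histograms would be computed once and cached rather than rebuilt on every query.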

Motivations

• Increase the independence of people with low vision (especially blind people) when grocery shopping in a supermarket or store.

• There are 1.3 million legally blind people in the U.S.

• Help plan the shopping list, the walking path to the store, and the grocery shopping itself.

• Advance research on object recognition for mobile robotics with constrained computing resources.

Navigate the Store

• Finding the aisle (OCR, RFID, asking).

• Avoiding obstacles (cane).

• Finding products (sweeping the aisle, spotting the product, barcode check).

• Checking out (coupons and cash).

An integral histogram is applied for better performance [Porikli, 2005].
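The integral histogram [Porikli, 2005] generalizes the integral image to one summed-area table per histogram bin, so the histogram of any rectangular window costs a fixed number of lookups per bin regardless of the window size, which suits sliding-window search. A pure-Python sketch, assuming each pixel has already been quantized to a bin index (for example a quantized CbCr value); the function names are hypothetical:

```python
def integral_histogram(bin_map, bins):
    """Per-bin summed-area tables: H[b][y][x] counts pixels of bin b in rows < y, cols < x."""
    h, w = len(bin_map), len(bin_map[0])
    H = [[[0] * (w + 1) for _ in range(h + 1)] for _ in range(bins)]
    for y in range(h):
        for x in range(w):
            for b in range(bins):
                hit = 1 if bin_map[y][x] == b else 0
                # Standard summed-area recurrence, applied independently to each bin.
                H[b][y + 1][x + 1] = H[b][y][x + 1] + H[b][y + 1][x] - H[b][y][x] + hit
    return H


def region_histogram(H, x, y, w, h):
    """Histogram of the w x h window at (x, y): four lookups per bin, independent of w and h."""
    return [Hb[y + h][x + w] - Hb[y][x + w] - Hb[y + h][x] + Hb[y][x] for Hb in H]
```

Building the tables is a single O(bins × pixels) pass; after that, every window histogram costs O(bins) instead of O(window area), which is where the speedup for exhaustive window search comes from.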

Processing speed: 25 frames per second.

Text detection is also part of the GroZi project: AdaBoost and Haar-like features are used to detect text in images.

Obtaining Data

Training data was obtained from two major sources:

• Sunshine Store @ UCSD (video capture)

- ~30 minutes of capture

- Divided into 29 .avi files

- Boxes containing products manually cropped

• Web (online images):

- Froogle, Shopwiki, Amazon.

- Groceries, Yahoo images

- General and specialized (UPC code) queries.

MoZi Box

A general-purpose, low-cost mobile system geared toward computer vision applications.

• Finite memory: CompactFlash (CF) cards ranging from 256 MB to 4 GB.

• Processor speed: in the neighborhood of 60400MHz.

• Frame rate: enough snapshots to cover the shelf with some overlap (as in panoramic stitching).

• Color calibration: a Macbeth color chart is used to calibrate the color space.

Future Directions

• Obtain a final version of the dataset that can be viewed online and is continuously updated, with more products and user interaction.

Object Recognition

• Test detection and recognition of products using SIFT on top of Haar-like features.

Test data will be obtained from:

• Collected videos/images from the MoZi Box (in situ).

• Collected videos/images from an embedded camera near the barcode scanner (in situ).

• Known databases (COIL-100, ETH-80, etc.) (in vitro).

• Synthetic examples (in vitro).
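One possible way to run SIFT on top of Haar-like detections is to verify each candidate window by matching its SIFT descriptors against reference descriptors of the listed product. The sketch below assumes descriptors have already been extracted (for example with OpenCV's SIFT) and are given as plain lists of floats; it uses Lowe's nearest/second-nearest ratio test with his suggested 0.8 ratio, while `verify_candidate` and its `min_matches=10` acceptance rule are hypothetical choices, not part of GroZi:

```python
import math


def euclidean(a, b):
    """L2 distance between two descriptors (SIFT descriptors are 128-D)."""
    return math.sqrt(sum((p - q) ** 2 for p, q in zip(a, b)))


def ratio_test_matches(query, reference, ratio=0.8):
    """Pair each query descriptor with its nearest reference descriptor, keeping the
    pair only if the nearest is clearly closer than the second nearest (Lowe's test)."""
    matches = []
    if len(reference) < 2:
        return matches  # the ratio test needs at least two candidates
    for qi, d in enumerate(query):
        dists = sorted((euclidean(d, r), ri) for ri, r in enumerate(reference))
        (d1, best), (d2, _) = dists[0], dists[1]
        if d1 < ratio * d2:
            matches.append((qi, best))
    return matches


def verify_candidate(query, reference, min_matches=10, ratio=0.8):
    """Hypothetical acceptance rule: enough distinctive matches confirms the product."""
    return len(ratio_test_matches(query, reference, ratio)) >= min_matches
```

The ratio test rejects ambiguous descriptors, such as repeated texture on packaging, whose two best matches are almost equally close; a brute-force nearest-neighbor search is used here for clarity, whereas a real system would likely use an approximate index.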

Dataset

• Apply color Haar-like features (opponent channels) for better detection.

• Use barcode scanning to perform active learning of the relations between barcodes and product images.

Acknowledgments

Special thanks to Stephan Steinbach from Calit2, and to Michele Merler and Tom Duerig from the UCSD computer science department.

The GroZi dataset contains 128 different grocery products, with images obtained from online sources and from collected videos.

[Figure panels: Web image; captured image]