Online Training Module - UNC School of Medicine

The Basics: Evidence-Based Practice for Physical Therapists and Physical Therapist Assistants

Online Training Module

Jessica Lambeth, MPT jessicamlambeth@yahoo.com

Module Purpose

The purpose of this online training module is to share the basics of evidence-based practice (EBP). This module focuses on general concepts of EBP, with clinical scenarios related to school-based physical therapy.

Module Purpose, continued

The main emphasis of this module is not to promote particular treatments, tests, or measures, or to “tell you” what the recent literature reveals about pediatric physical therapy practices. As will be discussed in the coming slides, the “research answer” alone is not the correct answer. In addition, EBP emphasizes the necessity of learning to perform searches and evaluate the material independently, in light of clinicians’ and patients’ current circumstances. While this is initially time-consuming, the results are worthwhile.

Module Purpose, continued

For those without a recent EBP background or training, this module will not immediately produce efficiency in literature searches, statistical interpretation, and similar skills. Hopefully, however, it will encourage more questions and greater motivation to practice EBP.

It will also enable the NC DPI to focus educational sessions on meeting needs in the area of EBP and to gauge the current knowledge of school-based PTs and PTAs. Your feedback and comments will help to plan future learning opportunities and resources.

Pretest

Please stop here and complete the pretest if you have not already done so. Please also keep track of your time, as directed in the instructions.

Also, “I don’t know” answers have been included to ensure that we receive appropriate feedback. If you really do not know the answer, in the pretest or the posttest, please indicate that in your response. This will greatly assist in providing necessary learning opportunities in the future, as well as help us to evaluate the effectiveness of the online module format and material.


References:

The content of this EBP module is largely based on curriculum from courses within the transitional Doctor of Physical Therapy program at Rocky Mountain University of Health Professions in Provo, Utah (web address: www.rmuohp.edu).

References, continued:

Much of the material within this online training module can be found in the following texts:

Guyatt G, Rennie D. Users' Guides to the Medical Literature: Essentials of Evidence-Based Clinical Practice. Chicago: AMA Press; 2002.

Jaeschke R, Singer J, Guyatt GH. Measurement of health status: ascertaining the minimal clinically important difference. Control Clin Trials. 1989;10:407-415.

Portney LG, Watkins MP. Foundations of Clinical Research: Applications to Practice. 2nd ed. Upper Saddle River, NJ: Prentice-Hall Inc; 2000.

Rothstein JM. Autonomous practice or autonomous ignorance? [Editorial]. Phys Ther. 2001;81(10).

References, continued:


Straus SE, Richardson WS, Glasziou P, Haynes RB. Evidence-Based Medicine: How to Practice and Teach EBM. 3rd ed. Edinburgh: Churchill Livingstone; 2005.

All clipart came from Microsoft Office: http://office.microsoft.com/en-us/clipart/default.aspx. Accessed 05/09/2009.

Module Outline

1) What is EBP?

2) Statistics Review: Basic Research

3) How to Search for EBP

4) How to Interpret Research Related to EBP

Section 1 Outline

What is Evidence-Based Practice?

• Evidence-Based Practice: General Introduction

• Survey Results (NC school-based PTs’ views of EBP)

• Guiding Steps to Practice EBP

• Two Fundamental Principles of EBP

• Best Research Evidence

Evidence-Based Practice:

General Introduction

EBP is the integration of the best research evidence, clinical expertise, and the patient’s values and circumstances

• Best Research Evidence: valid and clinically relevant research with a focus on patient-centered clinical research

• Clinical Expertise: use of clinical skills and experiences

• Patient’s Values and Circumstances: the patient’s unique preferences, concerns, and expectations in his or her setting


(Straus et al, 2005)

Evidence-Based Practice:

General Introduction

EBP is Not:

• Focused only on research studies

• Only to be used or understood by professionals who routinely participate in research studies

• A discouragement from trying new treatments

There may be little or no research on a particular topic, or studies with small sample sizes may have lacked the power to demonstrate statistical significance (as explained later in the statistics section).

Evidence-Based Practice:

General Introduction

“Because RCTs are so difficult, we will always have areas that lack evidence, we will need to find other credible research approaches to supply evidence.

Keep in mind that an absence of evidence is different from negative evidence. An absence of evidence is not an excuse to ignore the growing body of data available to guide practice.”

(RCT = randomized clinical or controlled trial)

(Rothstein, 2001)

Survey Results

Survey responses from North Carolina school-based PTs/PTAs about EBP:

• Survey distributed at the NC Exceptional Children’s Conference, Nov 2008

• 41 respondents

(See www.med.unc.edu/ahs/physical/schoolbasedpt for detailed results.)

• 73% had participated in a conference or course on the use of EBP in the last 5 years

• Among those who participated in a course, responses suggest that the course changed their view of EBP, but changed their use and practice of EBP to a lesser degree.

Survey Results, Continued…

• Most frequent response as to why we should use EBP: positive impact on our clinical practice

• 39 of 41 respondents agreed that EBP is relevant and necessary for PTs in the school system (2 left that question blank)

Most frequent responses as to why it is relevant and necessary: a focus on EBP results in improved clinical practice and provides validation and justification for our role as school-based PTs/PTAs

Survey Results, Continued…

• When respondents were asked if they were comfortable searching for and using EBP:

• Yes: 17 (41%)

• No: 18 (44%)

• No Response: 6 (15%)

• The Internet was the most frequently used source for searching for EBP, but continuing education courses ranked highest in preference.

Survey Results, Continued…

• A majority of the respondents were comfortable:

Determining the level of evidence and interpreting the authors’ conclusions

• A majority of the respondents were not comfortable:

Interpreting statistics, even though statistical knowledge is often helpful to evaluate conclusions drawn by the author


Survey Results, Continued…

The primary barriers to searching for and using EBP, as listed by NC school PTs/PTAs:

1. Time

2. Access

3. Uncertainty about how to search for EBP

Factors that enhance the search for and use of EBP, as listed by NC school PTs/PTAs:

1. Additional time

2. Education on the appropriate use of EBP

3. Education in how to access EBP resources

Survey Results, Continued…

Questions generated from the survey:

• How can the knowledge and practice of EBP by NC school PTs/PTAs be increased efficiently and effectively?

• How can barriers to using and searching for EBP be addressed?

• How can the use of and access to EBP in school-based practice be enhanced?

Guiding Steps to Practice EBP

1. Analyze what we know and what we do not know, in relation to improving our clinical practice. Form answerable questions to address any gaps in our knowledge.

2. Search for and find the best research evidence to address our questions.

3. Critically appraise the information, based on its validity, impact or size of effect, and applicability.


(Straus et al, 2005)

Guiding Steps to Practice EBP

4. Integrate information gathered from the best research evidence with clinical expertise and the patient’s values and circumstances

5. Evaluate the effectiveness of any intervention taken based on steps 1-4, and the effectiveness and efficiency of the process


(Straus et al, 2005)

Two Fundamental Principles of EBP

1. “Evidence alone is never sufficient to make a clinical decision” (page 8)

Consider risks and benefits, costs, inconvenience, alternative treatment strategies, and patient preferences, values, and circumstances.

2. “EBM posits a hierarchy of evidence to guide clinical decision making” (page 8)

Not all research is equal in terms of relevance and statistical support; however, that does not mean lower-level evidence is not worthwhile.

(Guyatt and Rennie use the term Evidence-Based Medicine, EBM)

(Guyatt and Rennie, 2002)

Best Research Evidence

• The three sections that follow will focus on the “best research evidence” branch of Straus’ three components of EBM (Straus et al, 2005).

• Best research evidence is not more important than the other two branches; it is prominent in this module because knowledge concerning clinical expertise and patient values and expectations will vary from situation to situation.

• Section 2 (Statistics Review, Basic Research) will provide the background information necessary to perform effective searches and interpret the best research evidence (Sections 3 and 4).

23

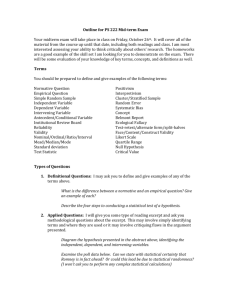

Section 2 Outline

Statistics Review, Basic Research

• Types of Research

• Hierarchy of Evidence

• Variables

• Measurement Validity

  • Types

  • Statistics

    • Sensitivity and Specificity

    • Positive and Negative Predictive Value

    • Positive and Negative Likelihood Ratios

    • Receiver Operating Characteristic (ROC) Curve

    • Responsiveness to Change

    • Effect Size versus MCID

Section 2 Outline, continued…

Statistics Review, Basic Research, continued

• Measurement Reliability

• Descriptive Statistics

  • Frequency and Shape of Distribution

  • Central Tendency Measures

  • Measures of Variability

• Inferential Statistics

  • Probability

  • Sampling Error

  • Confidence Intervals

  • Hypothesis Testing

  • Power

Types of Research

Nonexperimental (Observational)

• Descriptive or Exploratory

• No control or manipulation of variables

• Examines populations and relationships

Experimental

• Researcher manipulates/controls variable(s)

• Comparison of interventions or groups, examines cause and effect


(Portney and Watkins, 2000)

Types of Research

Portney and Watkins suggest viewing various designs as a continuum of research with a descriptive, exploratory, or experimental purpose.

Certain designs may include elements of more than one classification.


(Portney and Watkins,2000)

Descriptive Research

Examples:

• Case Study: Description of one or more patients, may document unusual conditions or response to intervention

• Developmental Research: Examines patterns of growth and change, or documents natural history of a disease or condition

• Normative Research: Establishes typical values for specific variables


(Portney and Watkins, 2000)

Descriptive Research, continued…

Examples:

• Qualitative Research: Collection of subjective, narrative information (rather than quantitative, numerical data) in an effort to better understand experiences

• Evaluation Research: Assessment of a program or policy, often by the collection of descriptive information through surveys or questionnaires


(Portney and Watkins, 2000)

Exploratory Research

Examples:

• Correlational Methods: Examines relationships between variables of interest; may be used to predict or suggest relationships, but cannot establish cause and effect

• Cohort and Case-Control Studies: Used often in epidemiological research to describe and predict risks for certain conditions

• Methodological Studies: Used to evaluate the validity and reliability of measuring instruments

• Historical Research: Use of archives or other records to reconstruct the past to generate questions or suggest relationships of historical interest


(Portney and Watkins, 2000)

Experimental Research

Example

• Randomized Clinical or Controlled Trial (RCT):

In general, a clinical treatment (the experimental condition) is compared with a control condition, often a placebo but in some cases an alternative treatment, and subjects are randomly assigned to groups.

(Portney and Watkins, 2000)

Experimental Research, continued…

Examples:

• Single-Subject Design: Variation of RCT, study of an individual over time with repeated measurement and determined design phases

(Portney and Watkins, 2000)

In an N=1 RCT, a single individual receives alternating treatment and placebo or alternative treatment, with the patient and the assessor blinded to intervention allocation. Objective or subjective measures are then recorded during the allocation periods. (Guyatt and Rennie, 2002)


Experimental Research, continued…

Examples:

• Sequential Clinical Trial: Variation of RCT, technique that allows for the continuous analysis of data as it becomes available, does not require a fixed sample

• Quasi-Experimental Research: Comparative research in which subjects cannot be randomly assigned to groups, or control groups cannot be used. Considered a lower level of evidence than RCTs.

(Portney and Watkins 2000)

Experimental Research, continued

Examples:

• Systematic Review: Combination of several studies with the same or similar variables, in which the studies are summarized and analyzed (Guyatt and Rennie, 2002)

• Meta-analysis: Statistical combination of the data from several studies with the same or similar variables, to determine an overall outcome (Portney and Watkins, 2000; Guyatt and Rennie, 2002)


Hierarchy of Evidence for Treatment Decisions:

Greatest (Top) to Least (Bottom)

1. N of 1 randomized controlled trial

2. Systematic review of randomized trials*

3. Single randomized trial

4. Systematic review of observational studies addressing patient-important outcomes

5. Single observational study addressing patient-important outcomes

6. Physiological studies (studies of blood pressure, cardiac output, exercise capacity, bone density, and so forth)

7. Unsystematic clinical observations

*A meta-analysis is often considered higher than a systematic review (Guyatt and Rennie, 2002)

Hierarchy of Evidence

Ideally, evidence from individual studies would be compiled or synthesized into systematic reviews, and that information would be succinctly consolidated into synopses that are easy and quick to read. All relevant information would be integrated and linked to a specific patient’s circumstances. The medical literature is still far from this ideal but is working toward it; efforts include clinical prediction guidelines and APTA’s emphasis on EBP.

(Straus et al, 2005)

Variables

Variable: A characteristic that can be manipulated or observed

• Types of Variables

• Independent or Dependent

• Measurement Scales/Levels

Classification is useful for communication, so that readers are aware of the author’s hypothesis of what situation or intervention (independent variable) will predict or cause a given outcome (dependent variable).

(Portney and Watkins, 2000)

Variables: Independent or Dependent

• Independent Variable: A variable that is manipulated or controlled by the researcher, presumed to cause or determine another (dependent) variable

• Dependent Variable: A response variable that is assumed to depend on or be caused by another (independent) variable

(Portney and Watkins, 2000)

Variables: Measurement Scales

• Useful to convey information to the reader about the type of variables observed

• Necessary to determine what statistical analysis approach should be used to examine relationships between variables

• From lowest to highest level of measurement, the scales are nominal, ordinal, interval, and ratio


(Portney and Watkins, 2000)

Variables: Measurement Scales

Nominal Scales (Classification Scale)

• Data, which have no quantitative value, are organized into categories

• Categories are based on some criterion

• Categories are mutually exclusive and exhaustive (each piece of data is assigned to one, and only one, category)

• The only permissible mathematical operation is counting (such as the number of items within each category)

• Examples: Gender, Blood Type, Side of Hemiplegic Involvement

(Portney and Watkins, 2000)

Variables: Measurement Scales

Ordinal Scales

• Data are organized into categories, which are rank-ordered on the basis of a defined characteristic or property

• Categories exhibit a “greater than-less than” relationship with each other; intervals between categories may not be consistent and may not be known

(Portney and Watkins, 2000)

Variables: Measurement Scales

Ordinal Scales, continued

• If categories are labeled with a numerical value, the number does not represent a quantity, but only a relative position within a distribution (for example, manual muscle test grades of 0-5)

• Not appropriate to use arithmetic operations

• Examples: Pain Scales, Reported Sensation, Military Rank, Amount of Assistance Required (Independent, Minimal…)

(Portney and Watkins, 2000)

Variables: Measurement Scales

Interval Scales

• Data are organized into categories, which are rank-ordered with known and equal intervals between units of measurement

• No true zero point

• Data can be added or subtracted, but actual quantities and ratios cannot be interpreted, due to the lack of a true zero

• Examples: Intelligence test scores, temperature in degrees Celsius (centigrade) or Fahrenheit, calendar years in AD or BC

(Portney and Watkins, 2000)
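A short worked example may help show why ratios are not meaningful on an interval scale. The sketch below converts two arbitrary, illustrative Celsius temperatures to Fahrenheit: because neither scale has a true zero, the ratio between the readings changes with the unit, while the difference converts consistently. The helper name `celsius_to_fahrenheit` is our own.

```python
def celsius_to_fahrenheit(celsius: float) -> float:
    """Convert a temperature from degrees Celsius to degrees Fahrenheit."""
    return celsius * 9 / 5 + 32


# Two arbitrary illustrative temperatures
cool_c, warm_c = 10.0, 20.0
cool_f = celsius_to_fahrenheit(cool_c)  # 50.0
warm_f = celsius_to_fahrenheit(warm_c)  # 68.0

# The ratio depends on the unit, so "twice as warm" is not meaningful
print(f"Ratio in Celsius:    {warm_c / cool_c:.2f}")  # 2.00
print(f"Ratio in Fahrenheit: {warm_f / cool_f:.2f}")  # 1.36

# Differences convert consistently: a 10-degree C change is an 18-degree F change
print(f"Difference: {warm_c - cool_c} C = {warm_f - cool_f} F")  # 10.0 C = 18.0 F
```

On a ratio scale such as distance, by contrast, a value twice as large stays twice as large after any unit conversion.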

Variables: Measurement Scales

Ratio Scales

• An interval scale with an absolute zero point (so negative values are not possible)

• All mathematical and statistical operations are permissible

• Examples: time, distance, age, weight

(Portney and Watkins, 2000)

Variables: Clinical Example

A study investigates how a strengthening program impacts a child’s ability to walk independently. In this case, the strengthening program is the independent variable and the ability to walk independently is the dependent variable. Amount of assistance required (if ranked as maximal, moderate, minimal, or independent, rather than based on weight put on a crutch or other quantitative testing) would be an example of ordinal data.

Studies often have more than one independent or dependent variable.

Measurement Validity

• Measurement Validity examines the “extent to which an instrument measures what it is intended to measure”

(Portney and Watkins, 2000)

• For example, how accurate is a test or instrument at discriminating, evaluating, or predicting certain items?


Measurement Validity

Validity of Diagnostic Tests

• Based on the ability of a test to accurately determine the presence or absence of a condition

• Compare the test’s results to known results, such as a gold standard.

• For example, a test determining balance difficulties likely to result in falls could be compared against the number of falls an individual actually experiences within a certain time frame. A clinical test for a torn ACL could be compared against an MRI.

(Portney and Watkins, 2000)

Measurement Validity: Types

• Face Validity: Examines if an instrument appears to measure what it is supposed to measure (weakest form of measurement validity)

• Content Validity: Examines if the items within an instrument adequately comprise the entire content of a given domain reported to be measured by the instrument

• Construct Validity: Examines if an instrument can measure an abstract concept


(Portney and Watkins, 2000)

Measurement Validity: Types

• Criterion-related Validity: Examines if the outcomes of the instrument can be used as a substitute measure for an established gold standard test.

Concurrent Validity: Examination of criterion-related validity when the instrument being examined and the gold standard are compared at the same time

Predictive Validity: Examination of criterion-related validity when the outcome of the instrument being examined can be used to predict a future outcome determined by a gold standard

(Portney and Watkins, 2000)

Measurement Validity: Statistics

Ways to Evaluate the Usefulness of Clinical Screening or Diagnostic Tools

• Sensitivity and Specificity

• Positive and Negative Predictive Value

• Positive and Negative Likelihood Ratios

• Receiver Operating Characteristic (ROC) Curve

The statistical procedures above are often used when researchers are introducing (and validating) a test. Ideally, the values from these procedures can be found in the tool’s testing manual or in articles evaluating the tool’s validity within certain populations or settings.

Measurement Validity: Statistics

Diagnostic Reference Table

                           Condition Present      Condition Absent
Test Result: Positive      True Positive (a)      False Positive (b)
Test Result: Negative      False Negative (c)     True Negative (d)

(Guyatt and Rennie, 2002; Portney and Watkins, 2000; Straus et al, 2005)

Measurement Validity: Statistics

Table Labels:

(a) True Positive: The subject has the condition, and the test accurately identifies the presence of the condition

(d) True Negative: The subject does not have the condition, and the test accurately identifies the absence of the condition

(b) False Positive: The subject does not have the condition, and the test incorrectly identifies the presence of the condition

(c) False Negative: The subject has the condition, and the test incorrectly identifies the absence of the condition

(Portney and Watkins, 2000)

Measurement Validity: Statistics

• Positive test result = the test identifies the condition as being present

• Negative test result = the test identifies the condition as being absent

(This may or may not be accurate when compared to the gold standard.)

The test’s sensitivity and specificity provide information about the accuracy of the test.

(Portney and Watkins, 2000)

Measurement Validity: Statistics

Sensitivity

• The ability of the test to obtain a positive result when the condition is present; the ability to detect a true positive (a)

• a/(a + c): the proportion of those with the condition who test positive

(Portney and Watkins, 2000)
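The sensitivity formula can be computed directly from two cells of the diagnostic reference table. This is a minimal sketch; the counts (45 true positives, 5 false negatives) are hypothetical values chosen only for illustration, and the function name is our own.

```python
def sensitivity(a: int, c: int) -> float:
    """Proportion of people WITH the condition who test positive: a / (a + c)."""
    if a + c == 0:
        raise ValueError("no subjects with the condition (a + c == 0)")
    return a / (a + c)


# Hypothetical counts: 45 true positives (a), 5 false negatives (c)
print(f"Sensitivity = {sensitivity(45, 5):.0%}")  # Sensitivity = 90%
```

Note that only the "condition present" column of the table is used; false positives do not affect sensitivity at all.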

Measurement Validity: Statistics

Specificity

• The ability of the test to obtain a negative result when the condition is absent; the ability to detect a true negative (d)

• d/(b + d): the proportion of those without the condition who test negative

Sensitivity and specificity are often reported as percentages, from 0% (low) to 100% (high).

(Portney and Watkins, 2000)
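Specificity uses the other column of the table. As a minimal sketch with hypothetical counts (20 false positives, 80 true negatives) and a helper name of our own:

```python
def specificity(b: int, d: int) -> float:
    """Proportion of people WITHOUT the condition who test negative: d / (b + d)."""
    if b + d == 0:
        raise ValueError("no subjects without the condition (b + d == 0)")
    return d / (b + d)


# Hypothetical counts: 20 false positives (b), 80 true negatives (d)
print(f"Specificity = {specificity(20, 80):.0%}")  # Specificity = 80%
```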

Measurement Validity: Statistics

Helpful Hints to Remember

Sensitivity and Specificity

• Sensitivity: SnNout: When a test has a high sensitivity (Sn), a negative result (N) rules out (out) the diagnosis.

• Specificity: SpPin: When a test has a high specificity (Sp), a positive result (P) rules in (in) the diagnosis.

(Straus et al, 2005)

Measurement Validity: Statistics

Clinical Example

Example:

You’re choosing between two tests that screen whether a student’s physical abilities affect his or her participation in school.

A positive result means that the student’s physical abilities impact his or her participation.

Measurement Validity: Statistics

Clinical Example

One test has a high sensitivity but a low specificity. A high sensitivity means that a negative result effectively rules out the condition: students who test negative are unlikely to have physical abilities that impact their participation.

However, with a low specificity, there may be many false positives, meaning students may test “positive” for the condition when, in fact, their abilities do not impact participation.

Measurement Validity: Statistics

Clinical Example

Example:

You’re choosing between two tests that evaluate whether a student’s physical abilities affect his or her participation in school.

A positive result means that the student’s physical abilities impact his or her participation.

Measurement Validity: Statistics

Clinical Example

The other test has a low sensitivity but a high specificity. A high specificity means that a positive result effectively rules in the condition.

However, with a low sensitivity, there may be many false negatives, meaning that students may test “negative” for the condition when, in fact, their abilities do impact their participation.

Measurement Validity: Statistics

Predictive Values

• Provided in terms of percentages, 0% to 100%, low to high

• Positive Predictive Value (PV+)

• Probability that a person with a positive test actually has the condition

• a/(a + b)

• A high PV+ is desirable for screening tools, to prevent excessive, unnecessary follow-up testing

• Negative Predictive Value (PV-)

• Probability that a person with a negative test does not have the condition

• d/(c + d)

(Portney and Watkins, 2000)
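Predictive values are read across the rows of the diagnostic reference table rather than down the columns. The sketch below uses a hypothetical table (a = 45, b = 20, c = 5, d = 80) and a helper name of our own. Because the rows mix people with and without the condition, predictive values (unlike sensitivity and specificity) depend on how common the condition is in the group tested.

```python
def predictive_values(a: int, b: int, c: int, d: int) -> tuple[float, float]:
    """Return (PV+, PV-) from the four cells of the diagnostic reference table."""
    pv_pos = a / (a + b)  # true positives among all positive results
    pv_neg = d / (c + d)  # true negatives among all negative results
    return pv_pos, pv_neg


# Hypothetical table: a = 45, b = 20, c = 5, d = 80
pv_pos, pv_neg = predictive_values(45, 20, 5, 80)
print(f"PV+ = {pv_pos:.0%}, PV- = {pv_neg:.0%}")  # PV+ = 69%, PV- = 94%
```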

Measurement Validity: Statistics

Likelihood Ratios

• Calculated from the Diagnostic Reference Table

• Applying them requires an estimate of the pretest probability of the condition in question

• Easy to use once familiar with the concept; the posttest probability is typically read from a probability guide chart or a nomogram (a chart that contains pretest probabilities and likelihood ratios, with a ruler connecting those two points to determine posttest probabilities)

(Guyatt and Rennie, 2002)

Measurement Validity: Statistics

Likelihood Ratios, continued

• Positive and negative likelihood ratios are calculated

• Used to determine the usefulness of a diagnostic test.

If a positive or negative result will change the posttest probability of having a condition enough to alter the clinician’s and patient’s course of action, the test will be useful. If the likelihood ratios of the test do not result in a substantial change in knowledge, the test most likely will not be useful.

(Guyatt and Rennie, 2002)
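A nomogram has an arithmetic equivalent: convert the pretest probability to odds, multiply by the likelihood ratio, and convert the result back to a probability. The sketch below uses that odds method rather than a chart, with the standard formulas LR+ = sensitivity / (1 - specificity) and LR- = (1 - sensitivity) / specificity. The sensitivity (0.90), specificity (0.80), and pretest probability (30%) are hypothetical, and the function names are our own.

```python
def likelihood_ratios(sens: float, spec: float) -> tuple[float, float]:
    """Return (LR+, LR-): LR+ = sens / (1 - spec), LR- = (1 - sens) / spec."""
    return sens / (1 - spec), (1 - sens) / spec


def posttest_probability(pretest_prob: float, lr: float) -> float:
    """Probability -> odds, multiply by the likelihood ratio, odds -> probability."""
    pretest_odds = pretest_prob / (1 - pretest_prob)
    posttest_odds = pretest_odds * lr
    return posttest_odds / (1 + posttest_odds)


lr_pos, lr_neg = likelihood_ratios(sens=0.90, spec=0.80)
pretest = 0.30  # hypothetical clinician estimate of pretest probability

print(f"LR+ = {lr_pos:.2f}, LR- = {lr_neg:.3f}")  # LR+ = 4.50, LR- = 0.125
print(f"After a positive result: {posttest_probability(pretest, lr_pos):.0%}")  # 66%
print(f"After a negative result: {posttest_probability(pretest, lr_neg):.0%}")  # 5%
```

In this illustration, a positive result moves the estimate from 30% to about 66% and a negative result moves it to about 5%; whether either shift would change the course of action is the clinical judgment described above.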

Measurement Validity: Statistics

Receiver Operating Characteristic (ROC) Curve

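• Uses sensitivity and specificity across the possible cutoff scores of a test (plotting sensitivity against 1 - specificity); the area under the curve represents the probability of correctly distinguishing between a person with the condition and a person without it

For example, for a test with an area under the ROC curve of 0.80, if one person with the condition and one person without it are chosen at random, the person with the condition would have the more abnormal test score 80% of the time.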

(Portney and Watkins, 2000)
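The "probability of correctly choosing" interpretation of the area under the ROC curve can be computed directly by comparing every person with the condition against every person without it. This is a minimal sketch, assuming higher scores indicate the condition; the scores are hypothetical, and ties are counted as half a correct choice (a common convention).

```python
from itertools import product


def auc(scores_with: list[float], scores_without: list[float]) -> float:
    """Area under the ROC curve as the probability that a randomly chosen person
    WITH the condition scores higher than one WITHOUT it (ties count as half)."""
    wins = 0.0
    for pos, neg in product(scores_with, scores_without):
        if pos > neg:
            wins += 1.0
        elif pos == neg:
            wins += 0.5
    return wins / (len(scores_with) * len(scores_without))


# Hypothetical test scores (higher = more likely to have the condition)
with_condition = [7, 8, 9, 6, 9]
without_condition = [3, 5, 6, 4, 8]
print(f"AUC = {auc(with_condition, without_condition):.2f}")  # AUC = 0.88
```

Here 22 of the 25 possible pairs are ranked correctly (counting the two ties as half each), giving 0.88.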

Measurement Validity: Statistics

Responsiveness to change statistics evaluate a measurement tool’s ability to detect change over time

• For example, will administration of the test before and after intervention reflect a change in status, if one actually occurred?

• Evaluated by examining the change in scores in a pretest-posttest design, or by using effect size

(Portney and Watkins, 2000)

Measurement Validity: Statistics

Effect Size

• Effect size (ES) is a measure of the magnitude of a difference.

For example, experimental group A increased their score on a coordination measure by an average of 15 points, while experimental group B increased their score by an average of 8 points. The ES between groups would be 7 points, assuming the groups were similar at baseline.

(Portney and Watkins, 2000)

Measurement Validity: Statistics

Effect Size, continued…

• An effect size index is a converted effect size: a standardized measure of change, so that change scores across different outcome measures can be compared.

• ES indices are often expressed as a standardized mean difference (such as Cohen’s d) or as a correlation coefficient, r

Portney and Watkins note that interpretations of ES vary based on the interpreting clinician, but review Cohen’s suggestions for d of about 0.2 as small (treatment had a small effect), 0.5 as moderate, and 0.8 as large

(Portney and Watkins, 2000)
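The coordination example can be extended to show the difference between a raw effect size and a standardized index. The sketch below computes Cohen's d with a pooled sample standard deviation, which is one common way to standardize a difference between two group means. The individual gain scores are hypothetical, chosen so the group means match the 15-point and 8-point averages in the example; the helper name is our own.

```python
import math
import statistics


def cohens_d(group_a: list[float], group_b: list[float]) -> float:
    """Standardized mean difference: (mean A - mean B) / pooled sample SD."""
    n_a, n_b = len(group_a), len(group_b)
    pooled_var = ((n_a - 1) * statistics.variance(group_a)
                  + (n_b - 1) * statistics.variance(group_b)) / (n_a + n_b - 2)
    return (statistics.mean(group_a) - statistics.mean(group_b)) / math.sqrt(pooled_var)


# Hypothetical coordination-score gains (points) for two groups
group_a = [5, 25, 15, 10, 20]  # mean gain = 15
group_b = [0, 16, 8, 4, 12]    # mean gain = 8

raw_difference = statistics.mean(group_a) - statistics.mean(group_b)
print(f"Raw effect size: {raw_difference} points")     # Raw effect size: 7 points
print(f"Cohen's d: {cohens_d(group_a, group_b):.2f}")  # Cohen's d: 0.98
```

The same 7-point raw difference would give a much smaller d if the scores within each group were more spread out, which is why the standardized index allows comparison across different outcome measures.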

Measurement Validity: Statistics

Effect Size versus Minimal Clinically Important Difference

• In addition to the numerical results and their statistical significance, the clinician should also consider what amount of change is clinically meaningful; for example, how great a gain in strength or endurance will result in a change in function?

This is often referred to as the minimal clinically important difference (MCID).

(Jaeschke et al, 1989)

Measurement Reliability: Statistics

Reliability examines a measurement’s consistency and freedom from error

• Can be thought of as reproducibility or dependability

• Estimate of how observed scores vary from the true scores

(Portney and Watkins, 2000)

Measurement Reliability: Statistics

Reliability Coefficient

• Expressed as a ratio, generally of true-score variance to total observed variance (many different types exist, with various symbols)

• Ranges from 0.00 to 1.00

• 0.00 = no reliability

• 1.00 = perfect reliability

• Reflects variance, a measure of the differences among scores within a sample

(Portney and Watkins, 2000)

Measurement Reliability: Statistics

Correlation

• Comparison of the degree of association between two variables or sets of data

• Used as a basis for many reliability coefficients


(Portney and Watkins, 2000)

Measurement Reliability: Statistics

Test-Retest Reliability

Examines the consistency of results across repeated test administrations

• Traditional Analysis

• Pearson product-moment coefficient of correlation (for interval or ratio data)

• Spearman rho (for ordinal data)

• Current, sometimes preferred, Analysis

• Intraclass correlation coefficient (ICC)

(Portney and Watkins, 2000)
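Test-retest correlation can be illustrated with a plain implementation of the Pearson product-moment formula (not any particular statistics package); the scores are hypothetical. The second comparison illustrates one reason often given for preferring the ICC: Pearson's r measures association rather than agreement, so a retest that is systematically 5 points higher still yields a perfect r.

```python
import math


def pearson_r(x: list[float], y: list[float]) -> float:
    """Pearson product-moment correlation between two paired sets of scores."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two paired lists of equal length (n >= 2)")
    mean_x, mean_y = sum(x) / len(x), sum(y) / len(y)
    dx = [xi - mean_x for xi in x]
    dy = [yi - mean_y for yi in y]
    num = sum(a * b for a, b in zip(dx, dy))
    den = math.sqrt(sum(a * a for a in dx) * sum(b * b for b in dy))
    return num / den


# Hypothetical scores from two administrations of the same test
trial_1 = [10, 12, 15, 18, 20]
trial_2 = [11, 12, 14, 19, 21]
print(f"r = {pearson_r(trial_1, trial_2):.2f}")  # r = 0.98

# Every retest score is 5 points higher, yet r is still perfect
shifted = [score + 5 for score in trial_1]
print(f"r = {pearson_r(trial_1, shifted):.2f}")  # r = 1.00
```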

Measurement Reliability: Statistics

Rater Reliability

• Intrarater reliability

• Reliability of data collection from one individual over two or more trials

• Interrater reliability

• Reliability of data collection between two or more raters

(Portney and Watkins, 2000)

Descriptive Statistics

Descriptive statistics are used to describe sample characteristics.

• A sample is a subset of a population chosen for study. Since data often cannot be collected from an entire population, the data collected from a selected sample are intended to be representative of, or an estimate of, the population data.

• Distribution: The total set of scores (from a sample) for a particular variable; the number of scores is given the symbol n

(Portney and Watkins, 2000)

Descriptive Statistics

Frequency and Shapes of Distribution

• Frequency distribution: The number of times each value of the variable occurred

• Drawing a graph of a frequency distribution can result in shapes that characterize the distribution

• Some graphs are asymmetrical; others are symmetrical

• A symmetrical graph with a bell-shaped distribution is referred to as a normal distribution.

• A skewed distribution is asymmetrical

(Portney and Watkins, 2000)

Descriptive Statistics

Normal distributions, when graphed according to frequency, are bell-shaped, with the majority of scores falling in the middle and progressively fewer scores at either end. The normal distribution has special properties in statistics.

(Portney and Watkins, 2000)

Descriptive Statistics:

Central Tendency Measures

Used to quantitatively summarize a group’s characteristics.

• Mode: The score that occurs most frequently

• Median: The middle score in a numerically ordered group of data. If there is an even number of scores, the median is the midpoint between the two middle scores

• Mean: The sum of a set of scores divided by the number of scores, n. Often referred to as the “average” of a data set.

(Portney and Watkins, 2000)
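The three measures of central tendency can be computed with Python's standard-library `statistics` module. The six scores below are hypothetical; an even number of scores is used so the median falls between the two middle values.

```python
import statistics

# Hypothetical scores from six students (an even number of scores)
scores = [3, 5, 5, 6, 8, 9]

print("Mode:  ", statistics.mode(scores))    # 5 (occurs most often)
print("Median:", statistics.median(scores))  # 5.5 (midpoint of 5 and 6)
print("Mean:  ", statistics.mean(scores))    # 6 (36 / 6)
```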

Descriptive Statistics:

Measures of Variability

Variability is the dispersion of scores.

Variability is quantified by five measures:

• Range

• Percentiles

• Variance

• Standard deviation

• Coefficient of variation

(Portney and Watkins, 2000)

Descriptive Statistics:

Measures of Variability

Variability, continued…

Range: Difference between the highest and lowest scores in a distribution

Percentiles: Used to describe a score’s position within a distribution; distribution data are often divided into quartiles, or four equal parts

Variance: Reflects the variation within a set of scores, in squared units (its statistical derivation is beyond the scope of this module). Symbolized in sample data by s².

(Portney and Watkins, 2000)

Descriptive Statistics:

Measures of Variability

Variability, continued

Standard Deviation: Representative of the variability of scores surrounding the mean, in original units of measurement. Square root of variance.

• The larger the standard deviation, the more spread out the variable’s scores are around the mean.

• For example, data set A (8, 9, 10, 11, 12) and data set B (4, 5, 10, 15, 16) both have a mean of 10. However, the standard deviation of set A is 1.58, while the standard deviation of set B is 5.52.

• Symbolized in sample data by s.
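The sketch below applies Python's statistics module to the two data sets from this slide. It reports the range, quartiles, variance, and standard deviation for each set. It uses the sample (n − 1) formulas, which reproduce the 1.58 and 5.52 above. Quartile conventions differ between programs, so other software may report slightly different quartiles.

```python
import statistics

# The two data sets from the slide; both have a mean of 10.
set_a = [8, 9, 10, 11, 12]
set_b = [4, 5, 10, 15, 16]

def variability(data):
    """Summarize the spread of a sample of scores."""
    return {
        "range": max(data) - min(data),
        # Quartile cut points. statistics.quantiles uses the "exclusive"
        # method by default; other software may use another convention.
        "quartiles": statistics.quantiles(data, n=4),
        "variance": statistics.variance(data),  # sample variance s^2 (divides by n - 1)
        "sd": statistics.stdev(data),           # sample SD s = square root of s^2
    }

for name, data in (("A", set_a), ("B", set_b)):
    v = variability(data)
    print(f"Set {name}: range={v['range']}, quartiles={v['quartiles']}, "
          f"s^2={v['variance']:.2f}, s={v['sd']:.2f}")
```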

80

(Portney and Watkins, 2000)

Descriptive Statistics:

Measures of Variability

Variability, continued…

Coefficient of Variation: The ratio of the standard deviation to the mean.
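The coefficient of variation is often expressed as a percentage. The minimal sketch below computes it for data sets A and B from the previous slide. It also shows that the CV has no units: converting a set of hypothetical long-jump distances from inches to centimeters leaves the CV unchanged. The CV is generally only meaningful for ratio-scale data with a positive mean.

```python
import statistics

def coefficient_of_variation(data):
    """Sample SD divided by the mean, expressed as a percentage."""
    return statistics.stdev(data) / statistics.mean(data) * 100

set_a = [8, 9, 10, 11, 12]
set_b = [4, 5, 10, 15, 16]
print(f"CV of set A: {coefficient_of_variation(set_a):.1f}%")
print(f"CV of set B: {coefficient_of_variation(set_b):.1f}%")

# Hypothetical long jumps, measured in inches and then converted to cm.
# Both SD and mean scale by 2.54, so their ratio (the CV) does not change.
jumps_in = [31, 35, 39]
jumps_cm = [x * 2.54 for x in jumps_in]
print(f"CV in inches: {coefficient_of_variation(jumps_in):.2f}%, "
      f"CV in cm: {coefficient_of_variation(jumps_cm):.2f}%")
```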

81

(Portney and Watkins, 2000)

Descriptive Statistics:

Distributions

Normal Versus Skewed (Non-normal) Distribution

• These theoretical shapes of distribution help determine what statistical formulas or measures should be used

• The characteristics of normally distributed data are constant and predictable. For statistical purposes, the area under the normal curve is often divided into sections, each one standard deviation wide.

• Data should be examined for “goodness-of-fit” to see if the sample approximates the normal distribution.

82

(Portney and Watkins, 2000)

Descriptive Statistics:

Distributions

Normal Distribution Statistics

• The area from the average to the 1st standard deviation, on either side, contains 34.13% of the data

• A total of 68.27% of the data will be between +1 and -1 standard deviation [34.13 times 2]

• The area between the 1st and 2nd standard deviation contains 13.59% of the data

• A total of 95.45% of the data will be between +2 and -2 standard deviations [(13.59 times 2) + (34.13 times 2)]

83

(Portney and Watkins, 2000)

Descriptive Statistics:

Distributions

Normal Distribution Statistics, continued

• The area between the 2nd and 3rd standard deviation contains 2.14% of the data

• A total of 99.73% of the data will be between +3 and -3 standard deviations [(13.59 times 2) + (34.13 times 2) + (2.14 times 2)]
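The percentages on these two slides come from the standard normal curve. The sketch below recomputes them with Python's statistics.NormalDist, which has a mean of 0 and an SD of 1 by default. Computed directly, the totals are 68.27%, 95.45%, and 99.73%. Adding up the rounded band values (34.13, 13.59, 2.14) can come out 0.01 lower, which is only a rounding difference.

```python
from statistics import NormalDist

std_normal = NormalDist()  # standard normal curve: mean 0, standard deviation 1

def percent_between(lo_sd, hi_sd):
    """Percentage of a normal distribution lying between two SD marks."""
    return (std_normal.cdf(hi_sd) - std_normal.cdf(lo_sd)) * 100

# Area in each one-SD band on one side of the mean.
bands = {k: percent_between(k - 1, k) for k in (1, 2, 3)}
# Total area within +/- k standard deviations of the mean.
within = {k: percent_between(-k, k) for k in (1, 2, 3)}

for k in (1, 2, 3):
    print(f"band {k - 1}-{k} SD: {bands[k]:.2f}%   within +/-{k} SD: {within[k]:.2f}%")
```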

84

(Portney and Watkins, 2000)

Inferential Statistics

Estimate population characteristics from sample data

• Used often when testing theories about the effects of experimental treatments

• Requires that assumptions are made about how well the sample represents the larger population

• Assumptions are based on the statistical concepts of probability and sampling error

• It is important the sample be representative of the population, so that the results of interventions on samples can be applied to the entire population of individuals with those characteristics.

85

(Portney and Watkins, 2000)

Inferential Statistics

Probability

Probability

• The likelihood that an event will occur, given all the possible outcomes. Used often in prediction.

Given the symbol p

• Probability may range from p = 1 (certain the event will occur, 100% probability) to p = 0 (certain that the event will not occur, 0% probability)

86

(Portney and Watkins, 2000)

Inferential Statistics

Probability

Probability, continued…

Reflects what should happen in the long run, not necessarily what will happen on a given trial.

For example, if a treatment has a 60% chance of success, then about 60% of the people receiving it will likely be successfully treated. That does not mean the treatment will be 60% successful in an individual; for any one individual, it will most likely be either successful or unsuccessful.

87

(Portney and Watkins, 2000)

Inferential Statistics: Probability

Clinical Example

Probability statistics can be applied to the distribution of scores

Example, for a normally distributed data set:

Average long jump for a certain group of children is 35 inches with a standard deviation of 4 inches.

Suppose you want to know the probability that a child will jump within one standard deviation of the mean (from 31 to 39 inches). You know that about 68.27% of the data fall within one standard deviation on either side of the mean, so that is the probability that a child will jump within one standard deviation of the mean.

88

Inferential Statistics: Probability

Clinical Example

Example, continued…

If you wanted to know the probability that a child will jump more than one standard deviation above the mean (greater than 39 inches), you can refer to the normal distribution data. Half of the scores (50%) fall above the mean, and 34.13% fall between the mean and +1 standard deviation, so 50% − 34.13% = 15.87%.

Charts and graphs are available to calculate the data in between the standard deviations.
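Instead of a printed chart, the normal curve can be evaluated directly. This sketch uses the long jump example's mean of 35 inches and SD of 4 inches and assumes the jumps are normally distributed, as the slide does. The 37-inch value is a hypothetical addition that shows how to handle a score that falls between the standard deviation marks.

```python
from statistics import NormalDist

# Long jump example from the slides: mean 35 in, SD 4 in, assumed normal.
jump = NormalDist(mu=35, sigma=4)

p_31_to_39 = jump.cdf(39) - jump.cdf(31)  # within 1 SD of the mean
p_over_39 = 1 - jump.cdf(39)              # more than 1 SD above the mean

# Hypothetical value between the SD marks: 37 in is half an SD above the mean.
z_37 = jump.zscore(37)
p_over_37 = 1 - jump.cdf(37)

print(f"P(31 to 39 in) = {p_31_to_39:.4f}")
print(f"P(> 39 in)     = {p_over_39:.4f}")
print(f"z for 37 in = {z_37}, P(> 37 in) = {p_over_37:.4f}")
```

NormalDist.zscore requires Python 3.9 or later.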

89

Inferential Statistics:

Sampling Error

Sampling Error

The difference between sample values and population values

• The lower the sampling error, the greater the confidence that the sample values are similar to the population values.

• To estimate sampling error, the standard error of the mean statistic is used (too complex to explain statistical basis in this format)

• The larger the sample size, n , the smaller the standard error of the mean
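The usual formula for the standard error of the mean is the sample standard deviation divided by the square root of n (SEM = s / √n). The minimal sketch below uses a hypothetical standard deviation of 4 to show the relationship stated above: quadrupling the sample size halves the standard error.

```python
import math

def standard_error_of_mean(sd, n):
    """SEM = s / sqrt(n): the estimated spread of sample means around the population mean."""
    return sd / math.sqrt(n)

# Hypothetical: scores with a standard deviation of 4, at several sample sizes.
for n in (4, 16, 64):
    print(f"n = {n:>2}: SEM = {standard_error_of_mean(4, n):.2f}")
```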

90

(Portney and Watkins, 2000)

Inferential Statistics

Confidence Intervals

Confidence Interval (CI)

• Range of scores with specific boundaries, or confidence limits, that should contain the population mean

• The boundaries of CIs are based on the sample mean and its standard error

• Degree of confidence is expressed as a percentage

• Often, researchers use 95% as a boundary, which corresponds to approximately 1.96 standard errors on either side of the sample mean
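A minimal sketch of a normal-approximation confidence interval: the sample mean ± z × SEM, where z is about 1.96 for 95%. The inputs are hypothetical (mean 8 extra steps, SD 6.45 steps, n = 40). The SD was chosen so that the result matches the ±2 steps in the clinical example that follows. With small samples, a t critical value (slightly larger than 1.96) is usually used instead. Python's standard library does not provide the t distribution.

```python
import math
from statistics import NormalDist

def confidence_interval(mean, sd, n, level=0.95):
    """Normal-approximation CI for a population mean: mean +/- z * SEM."""
    z = NormalDist().inv_cdf(0.5 + level / 2)  # about 1.96 when level = 0.95
    sem = sd / math.sqrt(n)
    return mean - z * sem, mean + z * sem

# Hypothetical inputs: mean 8 extra steps, SD 6.45 steps, n = 40 children.
low, high = confidence_interval(8, 6.45, 40)
print(f"95% CI: {low:.1f} to {high:.1f} steps")
```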

91

(Portney and Watkins, 2000)

Inferential Statistics, Confidence Interval

Clinical Example

A physical therapy treatment program enabled 40 children with a certain disorder to walk an additional 8 independent steps, on average, within a certain set of parameters.

The 95% CI for this data was ± 2 steps.

Therefore, we can be 95% confident that the population mean, or average for all children with this disorder, is between 6 and 10 additional independent steps.

Said another way, if the study were repeated 100 times, each time with a new sample of children with the same condition, about 95 of the resulting confidence intervals would be expected to contain the true population mean.
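The "repeated studies" meaning of a 95% CI can be checked by simulation. This sketch invents a population whose true mean is known (8 extra steps, SD 6.45; both hypothetical). It repeatedly draws samples of 40, builds a 95% CI from each sample, and counts how often the interval contains the true mean. The result should be close to 95%. Because the sketch uses z = 1.96 rather than a t value, the result typically comes out slightly below 95%.

```python
import math
import random
import statistics

def interval_covers(true_mean, true_sd, n, rng, z=1.96):
    """Draw one sample, build a 95% CI, and report whether it contains the true mean."""
    sample = [rng.gauss(true_mean, true_sd) for _ in range(n)]
    m = statistics.mean(sample)
    sem = statistics.stdev(sample) / math.sqrt(n)
    return m - z * sem <= true_mean <= m + z * sem

def coverage(reps=2000, true_mean=8.0, true_sd=6.45, n=40, seed=1):
    """Fraction of repeated (simulated) studies whose 95% CI contains the true mean."""
    rng = random.Random(seed)
    hits = sum(interval_covers(true_mean, true_sd, n, rng) for _ in range(reps))
    return hits / reps

print(f"Share of intervals containing the true mean: {coverage():.3f}")
```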

92

Inferential Statistics

Hypothesis Testing

Hypothesis Testing

Used to decide if effects from an intervention are due to chance or the result of the intervention

Results in one of two decisions: to reject or not reject the null hypothesis

93

(Portney and Watkins, 2000)

Inferential Statistics

Hypothesis Testing

Hypothesis Testing, continued…

Statistical Hypothesis (also known as the null hypothesis):

Any observed difference (as in pretreatment to posttreatment or treatment compared to a placebo) is due to chance.

When the null hypothesis is rejected, the researcher concludes that the effect of treatment is not likely due to chance.

94

(Portney and Watkins, 2000)

Inferential Statistics

Hypothesis Testing

Hypothesis Testing, continued…

Alternative Hypothesis: Any observed difference is not due to chance.

Often the researcher is trying to support the alternative hypothesis, as when trying to prove that one particular treatment is better.

Sometimes, however, the researcher may be trying to prove that certain interventions are equal.

95

(Portney and Watkins, 2000)

Inferential Statistics

Hypothesis Testing, Errors

Errors in Hypothesis Testing

• The decision to reject or not reject the null hypothesis is based on the results of statistical procedures applied to data collected from samples.

• Because decisions are based on sample data, it is possible that the results obtained do not accurately reflect the population.

• There is a chance for error: the researcher may incorrectly reject, or incorrectly fail to reject, the null hypothesis

96

(Portney and Watkins, 2000)

Inferential Statistics

Hypothesis Testing, Errors

Type I error (α): Rejecting the null hypothesis when it is true (for example, deciding that the difference seen between a treatment group and a control group is due to the effect of the treatment, when, in fact, the difference is due to chance).

• A commonly used standard is α = 0.05, meaning the researchers accept a 5% chance of making a Type I error

Statistical tests completed with the sample data are used to calculate p, the probability of observing a difference at least as large as the one found if the null hypothesis were true (that is, if the difference were due to chance alone).

97

(Portney and Watkins, 2000)

Inferential Statistics

Hypothesis Testing, p and α

Hypothesis Testing: Relationship between p and α

If p is greater than the chosen α, then the researchers do not reject the null hypothesis

For example, in a placebo versus treatment study, the researchers cannot conclude that the experimental treatment had a different effect than the placebo.

98

(Portney and Watkins, 2000)

Inferential Statistics

Hypothesis Testing, p and α

Hypothesis Testing: Relationship between p and α, continued…

If p is less than the chosen α, then the researchers reject the null hypothesis

For example, in a placebo versus treatment study, the researchers conclude that the experimental treatment had a different effect than the placebo.
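The module does not name a specific statistical test. To show the p versus α decision in action, this sketch swaps in a permutation test, a standard and assumption-light technique that is not necessarily what any particular study would use. The test shuffles the group labels many times and estimates p as the share of shuffles that produce a mean difference at least as large as the observed one. The change scores are hypothetical. A second call with two identical groups shows the opposite decision.

```python
import random
import statistics

def permutation_p_value(group_a, group_b, n_perm=5000, seed=0):
    """Two-sided permutation test for a difference in group means.

    Estimates how often randomly relabeling the pooled scores gives a mean
    difference at least as large as the observed one, i.e. how surprising
    the result would be if group membership did not matter (the null hypothesis).
    """
    rng = random.Random(seed)
    observed = abs(statistics.mean(group_a) - statistics.mean(group_b))
    pooled = list(group_a) + list(group_b)
    n_a = len(group_a)
    as_extreme = 0
    for _ in range(n_perm):
        rng.shuffle(pooled)
        diff = abs(statistics.mean(pooled[:n_a]) - statistics.mean(pooled[n_a:]))
        if diff >= observed:
            as_extreme += 1
    # Adding 1 to both counts keeps the estimate from ever being exactly 0.
    return (as_extreme + 1) / (n_perm + 1)

def decide(p, alpha=0.05):
    """Apply the rule from the slides: reject the null hypothesis only if p < alpha."""
    return "reject H0" if p < alpha else "do not reject H0"

# Hypothetical change scores (posttest minus pretest), invented for illustration.
treatment = [9, 10, 8, 11, 9, 10, 12, 8]
placebo = [0, 1, -1, 2, 0, 1, 0, 1]

p = permutation_p_value(treatment, placebo)
print(f"p = {p:.4f} -> {decide(p)}")

same = [1, 2, 3, 4]
p_same = permutation_p_value(same, same)
print(f"identical groups: p = {p_same:.4f} -> {decide(p_same)}")
```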

99

(Portney and Watkins, 2000)

Inferential Statistics

Hypothesis Testing, p and α

Hypothesis Testing: Relationship between p and α, continued…

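Confidence intervals can also be calculated around the estimated effects (for example, each group’s average change, or the difference between groups); hopefully these are included in the data analysis section of the research study.

When the 95% CIs of two groups do not overlap, the difference between the groups is statistically significant (at α = 0.05). However, overlapping CIs do not by themselves rule out a significant difference, so also check the reported p value or the CI for the difference between groups.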

100

(Portney and Watkins, 2000)

Inferential Statistics

Hypothesis, CIs versus MCID

Hypothesis Testing: CIs (slide 90) versus MCID (slide 67)

When the null hypothesis is rejected, the researchers conclude that the experimental treatment (versus a placebo, for example) had a statistically significant effect.

The clinician should examine the effect size and the MCID to ensure that the change is clinically and functionally relevant.

101

(Guyatt and Rennie, 2002)

Inferential Statistics

Hypothesis, CIs versus MCID

When a null hypothesis is not rejected, the researchers may conclude that the experimental treatment did not have an effect.

However, the researchers should pay close attention to the confidence intervals.

• If the CI does not include the MCID, then the trial is most likely negative.

• If the CI includes the MCID, then the possibility that the experimental treatment may have a positive effect cannot be ruled out. The researchers may wish to run a power analysis, explained in later slides.

102

(Guyatt and Rennie, 2002)

Inferential Statistics, Hypothesis Testing

Clinical Example 1

Children with similar abilities/diagnoses (a homogeneous sample) are randomly assigned to two different groups:

• Group A receives a physical therapy program designed to improve gross motor skills, and

• Group B completes typical daily activities (but don’t worry: due to ethical concerns, children in Group B will receive the same treatment as those in Group A at the end of the study period).

103

Inferential Statistics, Hypothesis Testing

Clinical Example 1, continued…

The outcome measure will be a tool that tests gross motor activities with a final, numerical outcome score.

The authors hypothesize that the experimental group will show statistically significant gains (experimental hypothesis) and that the null hypothesis (there is no difference between groups at the end of the intervention period) will be rejected.

104

Inferential Statistics, Hypothesis Testing

Clinical Example 1, continued…

Initially, group A and B have similar average pre-test scores (if not, that can be statistically corrected).

Now suppose that Group A (experimental group, receiving additional PT services) increases an average of 9 points from pretest to posttest, with a CI of ±1, 8 to 10.

Group B increases an average of 0.5 points, with a CI of ±2, -1.5 to 3.5.

105

Inferential Statistics, Hypothesis Testing

Clinical Example 1, continued…

These confidence intervals do not overlap, which is consistent with the statistical analysis showing p < 0.05 (the predetermined α); there is a statistically significant difference between groups.

The null hypothesis is rejected.

106

Inferential Statistics, Hypothesis Testing

Clinical Example 1, continued…

Prior to the experiment, the authors agreed that the MCID was 6.

Both the statistically determined (p < 0.05) and clinically determined criteria in support of the experimental group’s treatment were met (the ES of the experimental group, 9, was greater than the MCID, 6).

107

Inferential Statistics, Hypothesis Testing

Clinical Example 2

Initially, group A and B have similar average pre-test scores (if not, that can be statistically corrected).

Now, change the scenario from previous slides.

Suppose that Group A (experimental group, receiving additional PT services) increases an average of 5 points from pretest to posttest, with a CI of ±2, 3 to 7.

Group B increases an average of 0.5 points, CI ±2, -1.5 to 3.5.

108

Inferential Statistics, Hypothesis Testing

Clinical Example 2, continued…

These confidence intervals overlap, and the statistical analysis performed revealed that p > 0.05 (the predetermined α). There is not a statistically significant difference between groups.

The null hypothesis is not rejected.

109

Inferential Statistics, Hypothesis Testing

Clinical Example 2, continued…

However, prior to the experiment, the authors agreed that the MCID was 6.

Since the CI range of Group A includes that MCID, the beneficial effects of the treatment from Group A over Group B cannot be ruled out.

The researchers may want to run a statistical power analysis to determine whether the study was underpowered, and therefore unlikely to detect a difference due to the treatment.
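The reasoning in Clinical Examples 1 and 2 can be written as a small decision helper. This is a hypothetical sketch of the slides' rules of thumb, not a formal method. A significant result is checked against the MCID. A non-significant result is checked for whether its CI includes the MCID. The third call uses invented numbers to show the "most likely negative" case.

```python
def interpret(effect, ci_low, ci_high, mcid, significant):
    """Rough reading of a result using the rules on the preceding slides.

    effect: observed improvement in the experimental group (the slides' ES)
    ci_low, ci_high: confidence interval limits for that improvement
    mcid: minimal clinically important difference agreed on in advance
    significant: True if p < alpha (null hypothesis rejected)
    """
    if significant:
        if effect >= mcid:
            return "statistically significant and clinically important"
        return "statistically significant, but below the MCID"
    if ci_low <= mcid <= ci_high:
        return "not significant; benefit cannot be ruled out (consider a power analysis)"
    return "not significant; CI excludes the MCID, trial most likely negative"

print(interpret(9, 8, 10, mcid=6, significant=True))   # Clinical Example 1
print(interpret(5, 3, 7, mcid=6, significant=False))   # Clinical Example 2
print(interpret(2, 0, 4, mcid=6, significant=False))   # hypothetical third case
```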

110

Inferential Statistics

Hypothesis Testing, Errors

Errors in Hypothesis Testing, Type II

Type II error (β): Not rejecting the null hypothesis when it is false (for example, determining that differences are due to chance, when they are, in fact, due to the experimental treatment)

111

(Portney and Watkins, 2000)

Inferential Statistics

Hypothesis Testing, Errors

Errors in Hypothesis Testing, Type II

The complement of Type II error is the statistical power of the test (1 − β)

• Power: the probability that a test will lead to rejection of the null hypothesis, or the probability of obtaining statistical significance if the differences are not due to chance

• Many researchers use a standard of β=0.20, or a power of 80%, as reasonable protection against Type II error

112

(Portney and Watkins, 2000)

Inferential Statistics: Power

Determinants of Statistical Power

Failing to reject the null hypothesis does not always mean that an experimental treatment is not effective.

The power of the study may have been too low to detect a significant difference.

113

(Guyatt and Rennie, 2002; Portney and Watkins, 2000)

Inferential Statistics: Power

Determinants of Statistical Power

• Levels set for α and β

• Variance

• Sample Size (n)

• Effect Size (Difference between two treatments or variables, Treatments with large changes or correlations are more likely to produce significant outcomes)

Increasing the effect size, sample size, or α increases power, as does decreasing the variance.
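The determinants above can be seen in a simple power approximation. This sketch assumes a two-sided comparison of two independent groups of equal size and a normal approximation. It expresses the effect as a standardized difference d (mean difference divided by SD), so a larger variance means a smaller d and lower power. The second function estimates the sample size per group needed for the common standard of 80% power (β = 0.20). Dedicated power software, which typically uses the t distribution, may give slightly different numbers.

```python
import math
from statistics import NormalDist

STD_NORMAL = NormalDist()

def approx_power(effect_size, n_per_group, alpha=0.05):
    """Approximate power of a two-sided, two-group comparison of means.

    effect_size is the standardized difference d = (mean difference) / SD.
    Normal approximation; the negligible opposite tail is ignored.
    """
    z_crit = STD_NORMAL.inv_cdf(1 - alpha / 2)
    shift = effect_size * math.sqrt(n_per_group / 2)
    return STD_NORMAL.cdf(shift - z_crit)

def approx_n_per_group(effect_size, alpha=0.05, power=0.80):
    """Approximate sample size per group needed to reach the target power."""
    z_alpha = STD_NORMAL.inv_cdf(1 - alpha / 2)
    z_beta = STD_NORMAL.inv_cdf(power)
    return math.ceil(2 * ((z_alpha + z_beta) / effect_size) ** 2)

print(f"d = 0.5, n = 32 per group: power = {approx_power(0.5, 32):.2f}")
print(f"d = 0.5, n = 64 per group: power = {approx_power(0.5, 64):.2f}")
print(f"d = 0.8, n = 64 per group: power = {approx_power(0.8, 64):.2f}")
print(f"n per group for d = 0.5, alpha = 0.05, power = 0.80: {approx_n_per_group(0.5)}")
```

Doubling the sample size, raising the effect size, or loosening α each raises the computed power, matching the list above.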

114

(Portney and Watkins, 2000)

Inferential Statistics: Tests

Parametric Vs. Nonparametric Tests

Parametric statistics are statistics used to estimate population parameters

• Primary assumptions of parametric tests:

• Samples randomly drawn from populations with normal distributions

• Variances in samples are roughly equal

• Data are measured on interval or ratio scales

115

(Portney and Watkins, 2000)

Inferential Statistics

Tests

Parametric Vs. Nonparametric Tests

Nonparametric tests are generally not as powerful, so researchers may choose to use parametric tests despite not meeting all generally held assumptions (such as use of a parametric test in a study with ordinal data)

116

(Portney and Watkins, 2000)

Section 3 Outline

How to Search for EBP

• Formation of Clinical Questions used to search for EBP, 117-121

• Evidence Search: Sources of Information, 122-138

• Internet/World Wide Web

• Textbooks

• Specific Journal Subscriptions

• Internet Sources for Medical Information

• The Guide

• Search Strategies, 139-152

117

Formation of Clinical Questions

Used to Search for EBP

Background Questions: general knowledge about a condition/area of interest

Foreground Questions: specific knowledge used to inform clinical decisions or actions. Often what researchers use when investigating a particular treatment, prognosis, outcome measure, etc.

• Clinicians require the use of background and foreground knowledge, with proportions that may vary over time and depend on knowledge of a particular situation.

118

(Straus et al, 2005)

Formation of Clinical Questions

Used to Search for EBP

Background Questions

Usually consist of two components:

• A question root (who, what, where, when, why, how) with a verb

• A condition, test, treatment, or other health care concern

Example: “What is juvenile rheumatoid arthritis?” or “What causes cerebral palsy?”

119

(Straus et al, 2005)

Formation of Clinical Questions

Used to Search for EBP

Foreground Questions

Usually consist of four components (PICO)

• P = Patient and/or problem

• I = Intervention (or exposure)

• C = Comparison, if relevant

• O = Clinical Outcomes, including time if relevant

120

(Straus et al, 2005)

Formation of Clinical Questions

Used to Search for EBP

Foreground Questions, continued…

• Example, “For teenage girls with juvenile rheumatoid arthritis, is treatment X or treatment Y more effective in decreasing pain in joints of the hand and wrist?” or “For children with spastic diplegic cerebral palsy age 3-6 years old, which outcome measure (can compare A or B or search in general) most accurately assesses functional mobility?”

121

Formation of Clinical Questions

Used to Search for EBP

Question Type: Categorization is useful for writing the question and statistical analysis

• Therapy: Evaluates the effects of various treatments or interventions

• Harm (not frequently investigated in PT literature):

Evaluates the effects of various treatments or modalities on function, morbidity, mortality

• Diagnosis: Evaluates a tool or test’s ability to distinguish among certain conditions

• Prognosis: Evaluates the course of a certain condition

122

(Guyatt and Rennie, 2002)

Evidence Search: Sources of Information

Where to find the research evidence, once the foreground question is developed?

• Internet/World Wide Web

• Textbooks

• Specific Journal Subscriptions

• Internet Sources for Medical Information

123

Evidence Search: Sources of Information

Internet/World Wide Web:

This category is related to general search engines, not specific medical information journal search sources or health-related texts and journals available on the web.

• Provides a rapid and abundant source of information

• Be careful, not all sites and sources of information meet EBP guidelines

124

(Guyatt and Rennie, 2002)

Evidence Search: Sources of Information

Internet/World Wide Web, continued…

Consider the reputability of the source and the information found.

Examples:

• MD Consult (fee)

• Google Scholar (free for the search)

• Some articles found may be free; others may have a fee.

• I personally have found Google Scholar very helpful. Its search page is displayed when you enter “Google Scholar” into Google.

125

(Guyatt and Rennie, 2002)

Evidence Search: Sources of Information

Textbooks:

• Often more useful for answering general background questions

• Books in print (hard-copy) may exclude valuable recent information, due to the time taken to compile, edit, and publish texts

• If utilizing a text, use one that is updated frequently and well referenced, so you can access more details if necessary

126

(Guyatt and Rennie, 2002)

Evidence Search: Sources of Information

Textbooks, continued…

Many texts that are internet-based are becoming more evidence-based and may be an increasing source of information for foreground questions in the future.

Examples of internet-based texts (Guyatt and Rennie recommend UpToDate and Clinical Evidence as becoming more evidence-based):

• emedicine (free)

• UpToDate (fee)

• Clinical Evidence (fee)

127

(Guyatt and Rennie, 2002)

Evidence Search: Sources of Information

Specific Journal Subscriptions:

• Browsing a specific journal may be beneficial if specific to your field of practice

• However, it most likely will leave out many applicable articles published in other journals

• Browsing through full text journals to find a specific article with appropriate quality and relevance often takes considerable time

128

(Straus et al, 2005)

Evidence Search: Sources of Information

Commonly Used Internet Sources for Medical Information: Prefiltered Resources, Medline, PEDro, EBSCOhost/CINAHL

These internet-accessible search engines allow you to enter search terms to find applicable research, most often related to published journals.

Most sites will provide an abstract (general summary) of any article found for free, but often a fee is charged for full-text access unless you have access to university subscriptions. Many PT programs offer access to their resources for their Clinical Instructors.

129

Evidence Search: Sources of Information

Prefiltered Resources:

Contain only those studies, most often systematic reviews, considered high methodological quality.

• Benefits: a small, easy-to-search database of high-quality studies. Drawbacks: not comprehensive

• Examples: Best Evidence, Cochrane Library (both require a subscription fee)

130

(Guyatt and Rennie, 2002)

Evidence Search: Sources of Information

Medline:

• Online search engine with comprehensive coverage of medical journals, can be intimidating due to large size and knowledge necessary to perform an efficient search.

• Unfiltered, containing information from prefiltered sources (such as the Cochrane Library) plus additional expansive coverage from a wide variety of types of studies and journal publications

131

(Guyatt and Rennie, 2002)

Evidence Search: Sources of Information

Medline, continued …

• Medline, the search engine (not necessarily the articles within Medline), is available free to the public through PubMed (www.ncbi.nlm.nih.gov/PubMed).

• Commercial vendor access (most often through university or health science center subscription):

OVID, Knowledge Finder, Silver Platter

• Articles through PubMed Central, http://www.pubmedcentral.nih.gov, are free.

132

(Guyatt and Rennie, 2002)

Evidence Search: Sources of Information

PEDro (Physiotherapy Evidence Database):

Per the website, “[PEDro] has been developed to give rapid access to bibliographic details and abstracts of randomized controlled trials, systematic reviews and evidence-based clinical practice guidelines in physiotherapy.” (http://www.pedro.org.au/index.html)

• Not-for-profit organization supported by many national physiotherapy associations, including the APTA

133

Evidence Search: Sources of Information

PEDro (Physiotherapy Evidence Database):

• Helpful Aspects of PEDro: systematic reviews are listed first, followed by articles ranked according to the EBM hierarchy (with the scores explained based on each study’s methodological characteristics)

• Free access at http://www.pedro.org.au/index.html. No full-text articles are hosted directly; when applicable, the website provides potential links to full-text articles

134

Evidence Search: Sources of Information

EBSCOhost:

• Provider of CINAHL® (Cumulative Index to Nursing and Allied Health Literature); CINAHL® is also available through several other sources

• Fee for use (Often as a university or health science subscription): http://www.ebscohost.com

• Similar search strategies to other medical information sources

135

Evidence Search: Sources of Information

APTA’s Open Door:

• Available with APTA membership (log in to www.apta.org, look under areas of interest, research subheading)

• Access to research journal collections including ProQuest, Medline, Cochrane Library, CINAHL® (mostly contains bibliographic records, not full-text journals)

136

Evidence Search: Sources of Information

APTA’s Open Door, continued…

• Suggestions, with links, for free full text (including BioMed Central, Directory of Open Access Journals, Public Library of Science, PubMed Central, and more)

• Tutorials and searching tips for EBP, along with guidelines for finding full-text articles

• Information on PT journals and samplings of current research in specific fields

137

Evidence Search: Sources of Information

APTA’s Hooked on Evidence:

• Available with APTA membership (log in to www.apta.org, look under areas of interest, research subheading)

• Database of article extractions relevant to physical therapists: peer-reviewed information about the methodological quality and level of evidence of the included articles

138

Evidence Search: Sources of Information

The Guide to Physical Therapist Practice (Text):

• Provides PTs with comprehensive descriptions of scope of practice

• Details preferred practice patterns

• Indications for specific tests and measures and interventions

APTA. Guide to Physical Therapist Practice. 2nd ed. American Physical Therapy Association; 2001.

139

Search Strategies

Searching for the Evidence within

Medical Information Sources:

It is beyond the scope of this module to provide in-depth training on searching for literature.

There are many online training courses, courses offered at health science center libraries, and thick packets of information on such searches.

140

Search Strategies

Searching for the Evidence within Medical

Information Sources, continued…

This material is not included in the time estimate or quiz information, but if you are interested in performing more searches and would like greater instruction, consider a course or an online tutorial. Many on the internet are free, such as the one by PubMed, http://www.nlm.nih.gov/bsd/disted/pubmed.html

Efficiency in searching requires time spent learning, experimenting, and practicing!

141

Search Strategies

PubMed Search

( http://www.ncbi.nlm.nih.gov/sites/entrez )

As a brief, non-inclusive overview, the following slides provide some basic information on searches.

PubMed Database was chosen as an example since it is a free and comprehensive site. This is not intended to result in the ability to perform efficient searches, but rather to give an example, the very tip of the iceberg, of the variety of functions that can be performed on such a database.

142

PubMed Search:

Search Strategies

The first step in searching is to form your foreground question and consider which information is most important to search

• Consider the specificity of your search. There is less information on pediatrics than adults, less information on rare disorders than common ones, etc.

• If you have too many results, try to be more specific. If too few, be more general.

143

Search Strategies

PubMed Search

• Go to the PubMed website (http://www.ncbi.nlm.nih.gov/sites/entrez) and try entering a basic, general term of interest into the open field: Search, PubMed for (open field – enter term here)

• Consider the results of your search: Was there too little or too much information, was it relevant to your interest, do any of the articles appear helpful to you?

144

PubMed Search

Search Strategies

• Limits: You can limit the responses in several categories. On the results page or on the search page, there is a row of tabs close to the top of the page. Click on “Limits,” the tab farthest to the left.

This allows the searcher to limit the results in certain categories such as English language, for studies specifically on children, type of study, etc.

145

PubMed Search

Search Strategies

Basic operators commonly included in search terms:

• * (truncation symbol): Retrieves various word endings; Example: the term strength* will retrieve articles related to strength, strengthening, strengthen, etc.

• OR: Expands retrieval of various topics; Example: Spina Bifida OR Meningocele will return articles listed under either heading

146

PubMed Search

Search Strategies

Basic operators commonly included in search terms, continued…

• AND: Limits search; Example: Cerebral Palsy AND Strength* will only return articles that are related to both terms (for example, many of those retrieved articles will have a major or minor focus on strengthening programs in individuals with CP)

147

PubMed Search

Search Strategies

Basic operators commonly included in search terms, continued…

• [tw] (text word): Retrieves articles with a specific word in the title, abstract, or other text fields; the tag is placed after the term. Example: orthotic[tw] will retrieve articles with orthotic in the title or possibly the abstract. Be careful though, it might leave out articles with terms such as AFOs, DAFOs, orthoses, etc. That is when MeSH headings (slide 149) are useful.

148

PubMed Search

Search Strategies

Basic operators commonly included in search terms, continued…

There are many additional terms/commands that can be added to a basic search, which again is beyond the scope of this module. This is only to make you aware that terms can be added to designate a type of study (such as treatment, prognosis, diagnosis, etc), methodological qualities of the study (specificity, sensitivity, validity, reliability, responsiveness to change, etc), and many more.
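A foreground question usually breaks into a few concepts. Synonyms for the same concept are joined with OR, and the separate concepts are joined with AND. The sketch below builds such a query string from the terms used on the preceding slides. The helper names any_of and build_query are hypothetical. The final lines show how the same string could be passed as the term parameter of NCBI's E-utilities esearch service for PubMed. Nothing is sent here; check NCBI's E-utilities documentation and usage guidelines before making real requests.

```python
from urllib.parse import urlencode

def any_of(terms):
    """Join synonyms for one concept with OR (broadens the search)."""
    return "(" + " OR ".join(terms) + ")"

def build_query(*concepts):
    """Join the concept blocks with AND (narrows the search)."""
    return " AND ".join(any_of(terms) for terms in concepts)

# Terms from the slides' examples; field tags such as [tw] follow the term.
cp_query = build_query(["Cerebral Palsy"], ["strength*"])
sb_query = build_query(["Spina Bifida", "Meningocele"], ["orthotic[tw]", "orthoses[tw]"])
print(cp_query)
print(sb_query)

# Not sent anywhere: only shows the query URL-encoded for the esearch service.
url = ("https://eutils.ncbi.nlm.nih.gov/entrez/eutils/esearch.fcgi?"
       + urlencode({"db": "pubmed", "term": sb_query}))
print(url)
```

The same query strings can also be typed directly into the PubMed search box.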

149

Search Strategies

PubMed Search, MeSH

MeSH (Medical Subject Heading) terms provide a way to search for a specific topic that may use different terminology for the same concept. Tutorials are available on PubMed.

• MeSH database: Try this search! On the main PubMed page, look at the blue column on the left. Under “PubMed Services” click on “MeSH Database”

150

Search Strategies

PubMed Search, MeSH

At the top of the page, try a search in the open field: “Search MeSH for” (open field – enter topic of your choice)

• Checking the numbered box with your subheading will allow the search to include all related “Entry Terms” listed below the topic.

• To search for this in PubMed, click on the tab towards the top of the page next to “Display”, currently showing “Summary” or “Full” in the field.

• Scroll down. “PubMed Links” will include a general, more comprehensive search, while “PubMed – Major Topic Links” will include articles more specific to your topic.

151

Search Strategies

PubMed Search, Clinical Queries

Clinical Queries database: Try this search! On the main PubMed page, look at the blue column on the left. Under “PubMed Services” click on “Clinical Queries” Database.

This page provides specialized searches for clinicians, the search can be performed for a specific topic, related to a type of study. For example, the searcher could investigate cerebral palsy, related to etiology, diagnosis, therapy, prognosis, or clinical prediction guides.

152

Search Strategies

PubMed Search, Clinical Queries

Clinical Queries database, continued…

It is also a place to search for systematic reviews (Don’t be disappointed if you cannot find very many for rare conditions, especially in pediatrics. More studies are being done, but not as many have been performed yet in those fields.)

You can perform a narrow, specific search or a broad, sensitive search.

Pick out a topic, category, and scope, and try one!

153

Section 4 Outline

How to Interpret Research Related to EBP

• Interpretation of Evidence, 154

• For therapy/intervention, 155-166

• For diagnosis, 167-174

154

Interpretation of Evidence

• Consider the type of study and where the study falls on the hierarchy of evidence.

• When evaluating the study, ask three questions: Are the results valid? What are the results? How can I apply the results to patient care?

• Don’t forget to incorporate clinical expertise and patient values and preferences after evaluating any research material.

• As examples, the following slides will detail interpretation of evidence for therapy and diagnosis studies.

155

(Guyatt and Rennie, 2002)

Interpretation of Evidence

For Therapy/Intervention: Validity

Are the results valid? (slides 155-163)

• Did the experimental (or treatment) groups begin the study with a similar prognosis?

If not, the end results may reflect differences between the groups rather than the effect of the intervention.

• Were patients randomized into the groups?

If the participants were not randomly assigned to a group, this decreases the validity of the study, as differences in one group’s characteristics may have affected the outcomes.

156

(Guyatt and Rennie, 2002)

Interpretation of Evidence

For Therapy/Intervention: Validity

Was randomization concealed (blinded or masked)?

If randomization was not concealed, this decreases the validity of the study. Without concealment, those enrolling the participants may, intentionally or unintentionally, steer certain participants into certain groups.
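Concealment is about who can see the allocation sequence before a participant is enrolled. The Python sketch below is a hypothetical model, not a trial tool: a permuted-block sequence (an assumed design choice, used here to keep the groups balanced) is generated in advance, and the only way to see an assignment is to enroll someone, much like opening the next sealed, numbered envelope.

```python
import random
from typing import List, Optional, Tuple


class ConcealedAllocator:
    """Hypothetical sketch of concealed, block-randomized allocation.

    The whole sequence is generated before enrollment starts and kept private.
    Enrolling staff only learn an assignment by enrolling a participant, so
    they cannot look ahead and steer particular participants into groups.
    """

    def __init__(self, n_blocks: int, block_size: int = 4, seed: Optional[int] = None):
        if block_size <= 0 or block_size % 2:
            raise ValueError("block_size must be a positive even number")
        rng = random.Random(seed)
        self._sequence: List[str] = []
        for _ in range(n_blocks):
            block = ["treatment", "control"] * (block_size // 2)
            rng.shuffle(block)  # random order, but each block stays balanced
            self._sequence.extend(block)
        self._log: List[Tuple[str, str]] = []  # (participant, group) in enrollment order

    def enroll(self, participant_id: str) -> str:
        """Log the participant with the next assignment, then reveal only that one."""
        if any(pid == participant_id for pid, _ in self._log):
            raise ValueError(f"{participant_id} is already enrolled")
        if len(self._log) == len(self._sequence):
            raise RuntimeError("allocation sequence is used up")
        group = self._sequence[len(self._log)]
        self._log.append((participant_id, group))
        return group


alloc = ConcealedAllocator(n_blocks=2, block_size=4, seed=1)
for pid in ["P01", "P02", "P03", "P04"]:
    print(pid, alloc.enroll(pid))
```

In practice, concealment is organizational (for example, a central randomization service or sealed opaque envelopes); the underscore-prefixed attributes here only stand in for that idea.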

157

(Guyatt and Rennie, 2002)

Interpretation of Evidence

For Therapy/Intervention: Validity

Were patients analyzed in the groups to which they were randomized?

It may be tempting to move patients between groups.

For example, if a patient in an experimental group did not participate in the strengthening activity or take the medication prescribed for that group, it may be tempting to move that person to the control group.

However, any cross-over or dropping out decreases the validity of the results.

158

(Guyatt and Rennie, 2002)

Interpretation of Evidence

For Therapy/Intervention: Validity

Were patients analyzed in the groups to which they were randomized?

Reasons for non-compliance or failure to finish treatment may be related to prognosis, and switching groups negates the unbiased comparison that randomization provides.

Researchers are encouraged to follow an “intention-to-treat” analysis, in which all participants are analyzed in the groups to which they were originally assigned. If drop-outs occurred, statistical methods are available to compensate.
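The effect of moving non-adherent participants can be seen with a few made-up numbers. In the hypothetical data below, the four treatment participants who did not adhere also did not improve; reclassifying them as controls (an “as-treated” analysis) widens the apparent difference compared with the intention-to-treat analysis.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass(frozen=True)
class Participant:
    assigned: str   # group from randomization: "treatment" or "control"
    adhered: bool   # completed the assigned program?
    improved: bool  # outcome at the end of the study


def improvement_rate(people: List[Participant]) -> float:
    return sum(p.improved for p in people) / len(people)


def intention_to_treat(people: List[Participant]) -> Dict[str, float]:
    """Analyze everyone in the group they were randomized to."""
    return {g: improvement_rate([p for p in people if p.assigned == g])
            for g in ("treatment", "control")}


def as_treated(people: List[Participant]) -> Dict[str, float]:
    """The tempting alternative: move non-adherent treatment participants to control."""
    def actual_group(p: Participant) -> str:
        return "treatment" if p.assigned == "treatment" and p.adhered else "control"
    return {g: improvement_rate([p for p in people if actual_group(p) == g])
            for g in ("treatment", "control")}


# Made-up counts; the 4 non-adherent treatment participants did not improve.
data = ([Participant("treatment", True, True)] * 5
        + [Participant("treatment", True, False)] * 1
        + [Participant("treatment", False, False)] * 4
        + [Participant("control", True, True)] * 3
        + [Participant("control", True, False)] * 7)

print({g: round(r, 2) for g, r in intention_to_treat(data).items()})  # {'treatment': 0.5, 'control': 0.3}
print({g: round(r, 2) for g, r in as_treated(data).items()})          # {'treatment': 0.83, 'control': 0.21}
```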

159

(Guyatt and Rennie, 2002)

Interpretation of Evidence

For Therapy/Intervention: Validity

Were patients in the treatment group and control groups similar with respect to known prognostic factors?

If not, this decreases the validity of the study, as groups’ prognostic differences may impact the outcome.

Statistical testing can determine if groups are similar, and can also compensate for potential differences in final analyses. The magnitude of initial differences should be examined.
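One way to examine the magnitude of a baseline difference, rather than only whether it is statistically significant, is a standardized difference: the gap between group means divided by a pooled standard deviation. The sketch below uses made-up scores, and the 0.1 cutoff is a convention some researchers use to flag imbalance, assumed here rather than taken from this module.

```python
from math import sqrt
from statistics import mean, stdev
from typing import Sequence


def standardized_difference(group_a: Sequence[float], group_b: Sequence[float]) -> float:
    """Difference in baseline means expressed in pooled standard deviation units."""
    pooled_sd = sqrt((stdev(group_a) ** 2 + stdev(group_b) ** 2) / 2)
    return (mean(group_a) - mean(group_b)) / pooled_sd


# Hypothetical baseline gross motor scores for the two groups.
treatment_baseline = [60, 64, 70, 58, 66]
control_baseline = [62, 59, 61, 65, 60]

d = standardized_difference(treatment_baseline, control_baseline)
print(f"standardized difference: {d:.2f}")  # standardized difference: 0.59
# Assumed convention: an absolute value above about 0.1 suggests a meaningful imbalance.
print("examine further" if abs(d) > 0.1 else "groups look similar")
```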

160

(Guyatt and Rennie, 2002)

Interpretation of Evidence

For Therapy/Intervention: Validity

Did experimental and control groups retain a similar prognosis after the study started?

Were patients aware of group allocation? If so, this decreases the validity of the study. Often in PT research, it is difficult to blind a participant to the fact that they received a certain treatment.

However, patients’ perceptions of received treatment may impact results and responses.

161

(Guyatt and Rennie, 2002)

Interpretation of Evidence

For Therapy/Intervention: Validity

Were clinicians aware of group allocation?

If so, this decreases the validity of the study. The researchers may treat patients in different groups differently apart from the treatment itself, such as spending more time with certain participants.

This may bias the results.

162

(Guyatt and Rennie, 2002)

Interpretation of Evidence

For Therapy/Intervention: Validity

Were outcome assessors aware of group allocation?

If so, this decreases the validity of the study.

If the assessor knows the group assignment of an enrolled participant, this knowledge may unintentionally influence the results, especially if the outcome measures are more subjective.

163

(Guyatt and Rennie, 2002)

Interpretation of Evidence

For Therapy/Intervention: Validity

Was follow-up complete?

If not, this decreases the validity of the study.

When there is loss to follow-up, the researcher may consider a “worst-case” scenario: for example, assuming that participants lost from the experimental group (whose treatment was supposed to improve function) actually lost function, while those lost from the control group improved.

Re-running the statistical analysis under this assumption shows whether the conclusions would still hold.
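The worst-case check can be done with simple arithmetic. The hypothetical function below recomputes the difference in improvement rates twice, once assuming every lost treatment participant declined and every lost control participant improved, and once assuming the reverse; the counts are made up.

```python
from typing import Tuple


def worst_and_best_case(tx_improved: int, tx_followed: int, tx_lost: int,
                        ctl_improved: int, ctl_followed: int, ctl_lost: int) -> Tuple[float, float]:
    """Difference in improvement rates (treatment minus control) under two extremes.

    tx_improved and ctl_improved count improvers among those actually followed up;
    denominators include everyone randomized (followed + lost).
    """
    tx_n, ctl_n = tx_followed + tx_lost, ctl_followed + ctl_lost
    # Worst case for the treatment: every treatment drop-out declined,
    # every control drop-out improved.
    worst = tx_improved / tx_n - (ctl_improved + ctl_lost) / ctl_n
    # Best case for the treatment: the reverse.
    best = (tx_improved + tx_lost) / tx_n - ctl_improved / ctl_n
    return worst, best


# Hypothetical trial: 20 randomized per group, 2 lost from treatment, 1 from control.
worst, best = worst_and_best_case(12, 18, 2, 6, 19, 1)
print(f"worst case: {worst:.2f}, best case: {best:.2f}")  # worst case: 0.25, best case: 0.40
```

Because the treatment still looks better in the worst case, losses of this size would not overturn the conclusion in this example. If the worst-case difference had dropped to zero or below, the results would deserve more caution.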

164

(Guyatt and Rennie, 2002)

Interpretation of Evidence

For Therapy/Intervention: Results

What are the results?

• How large was the treatment effect? This usually involves examining the statistical analysis. Was there a statistically significant difference? How large was that difference, or effect size?

• How precise was the estimate of the treatment effect? What do the statistics, such as confidence intervals and minimal clinically important differences, reveal about the treatment effect?

(Refer to statistics knowledge for these questions)
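As a refresher on those statistics, the sketch below computes a mean difference, an approximate 95% confidence interval, and Cohen's d (one common effect size measure), then checks the interval against a minimal clinically important difference (MCID). The scores and the 3-point MCID are made up, and the function names are hypothetical.

```python
from math import sqrt
from statistics import mean, stdev
from typing import Dict, Sequence, Tuple


def treatment_effect(treated: Sequence[float], control: Sequence[float],
                     z: float = 1.96) -> Dict[str, object]:
    """Mean difference, approximate 95% confidence interval, and Cohen's d."""
    n1, n2 = len(treated), len(control)
    s1, s2 = stdev(treated), stdev(control)
    diff = mean(treated) - mean(control)
    se = sqrt(s1 ** 2 / n1 + s2 ** 2 / n2)  # standard error of the difference
    pooled_sd = sqrt(((n1 - 1) * s1 ** 2 + (n2 - 1) * s2 ** 2) / (n1 + n2 - 2))
    return {"difference": diff,
            "ci": (diff - z * se, diff + z * se),
            "cohens_d": diff / pooled_sd}


def compare_to_mcid(ci: Tuple[float, float], mcid: float) -> str:
    low, high = ci
    if low >= mcid:
        return "whole interval at or above the MCID"
    if high < mcid:
        return "whole interval below the MCID"
    return "interval spans the MCID: clinical importance uncertain"


# Hypothetical change scores; the MCID of 3 points is also made up.
result = treatment_effect([6, 8, 5, 9, 7, 6, 8, 7], [3, 4, 2, 5, 3, 4, 3, 4])
print(result)
print(compare_to_mcid(result["ci"], mcid=3))  # interval spans the MCID: clinical importance uncertain
```

The default z = 1.96 is a large-sample normal approximation; with groups this small, a t-based interval would be somewhat wider. Here the interval excludes zero, but because it also includes values below the MCID, the study alone does not show that the benefit is clinically important.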

165

(Guyatt and Rennie, 2002)

Interpretation of Evidence

For Therapy/Intervention: Application

How can I apply the results to patient care?

• Were the study patients similar to my patient?

Would your patient have qualified to be included in the study? Can you extrapolate any useful information if the patient would not have qualified?

• Were all clinically important outcomes considered? Are the outcomes of interest areas of importance in your practice?

166

(Guyatt and Rennie, 2002)

Interpretation of Evidence

For Therapy/Intervention: Application

Are the likely treatment benefits worth the potential harm and cost?

The clinician and patient would need to contemplate the impact of any potentially adverse effects, as well as the amount of time, effort, and additional costs of the treatment.

167

(Guyatt and Rennie, 2002)

Interpretation of Evidence

For Diagnosis

This information does not apply only to medical diagnoses; it can also be used with physical therapy tests and measures that evaluate a multitude of conditions.

Examples include tools that evaluate risk for falls, developmentally appropriate gross motor skills, or the impact of sensory processing abilities on performance.

168

Interpretation of Evidence

For Diagnosis: Validity

Are the Results Valid? (slides 168-171)

Did clinicians face diagnostic uncertainty? If not, this decreases the validity of the results. The tool is only helpful if it can distinguish among individuals whose diagnosis is uncertain.

For example, if testing a tool determining fall risk, it would not be beneficial to study the tool with young adults with no history of falls and no risks for falls.

The tool would need to be investigated in individuals with the potential to be at risk for falls.

169

(Guyatt and Rennie, 2002)

Interpretation of Evidence

For Diagnosis: Validity

Was there a blind comparison with an independent gold standard applied similarly to the treatment group and the control group?

If not, this decreases the validity of the results.

When the comparison is not blinded, there is potential for bias, especially if the tools contain more subjective material. The gold standard, also known as a reference standard, should be separate from the test being evaluated.

170

(Guyatt and Rennie, 2002)

Interpretation of Evidence

For Diagnosis: Validity

Was there a blind comparison with an independent gold standard applied similarly to the treatment group and the control group? continued…

If two different test batteries are being compared, for example, they should not contain the same items.

Finally, it is important to consider the credibility of the gold standard itself. In some cases, there might not be a universally accepted gold standard for comparison.

The clinician should consider if the reference standard is acceptable.

171

(Guyatt and Rennie, 2002)

Interpretation of Evidence

For Diagnosis: Validity

Did the results of the test being evaluated influence the decision to perform the reference standard?

If so, this decreases the validity of the results. The reference standard should be applied in every case, regardless of the results of the test being evaluated.
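Applying the reference standard mainly when the test is positive (often called verification or work-up bias) distorts the accuracy estimates. The arithmetic below is a sketch with made-up counts: every positive screen is verified, but only one in five negative screens is.

```python
def sensitivity(tp: float, fn: float) -> float:
    return tp / (tp + fn)


def specificity(tn: float, fp: float) -> float:
    return tn / (tn + fp)


# Hypothetical counts if every child had received the reference standard.
TP, FN, FP, TN = 40, 20, 30, 110

# Suppose all positive screens are verified but only 1 in 5 negative screens is:
# 4 of the 20 false negatives and 22 of the 110 true negatives get the reference standard.
FN_VERIFIED, TN_VERIFIED = FN // 5, TN // 5

print(f"all verified:     sens {sensitivity(TP, FN):.2f}, spec {specificity(TN, FP):.2f}")
print(f"partial verified: sens {sensitivity(TP, FN_VERIFIED):.2f}, spec {specificity(TN_VERIFIED, FP):.2f}")
# all verified:     sens 0.67, spec 0.79
# partial verified: sens 0.91, spec 0.42
```

In this example the test appears more sensitive and less specific than it really is, because most negative screens, including most of the missed cases, were never checked against the reference standard.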

172

(Guyatt and Rennie, 2002)

Interpretation of Evidence

For Diagnosis: Results

What are the results?

Consider the statistical and clinical implications

What likelihood ratios were associated with the range of possible test results?

The researcher could also examine other related statistics, such as sensitivity and specificity, predictive values, and the receiver operating characteristic (ROC) curve. The researcher can also evaluate the psychometric properties of the diagnostic test: its validity, reliability, and responsiveness to change (as discussed in previous slides).

173
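For a test compared against a reference standard in a 2x2 table, these statistics follow from a few ratios, and for tests with several result levels a likelihood ratio can be calculated for each level. The Python sketch below uses made-up counts for a hypothetical fall-risk screen and assumes no zero cells; the post-test calculation converts probability to odds, multiplies by the likelihood ratio, and converts back.

```python
from typing import Dict


def diagnostic_stats(tp: int, fp: int, fn: int, tn: int) -> Dict[str, float]:
    """Accuracy statistics from a 2x2 table of test result vs. reference standard."""
    sens = tp / (tp + fn)
    spec = tn / (tn + fp)
    return {
        "sensitivity": sens,
        "specificity": spec,
        "ppv": tp / (tp + fp),             # positive predictive value
        "npv": tn / (tn + fn),             # negative predictive value
        "lr_positive": sens / (1 - spec),  # how much a positive result raises the odds
        "lr_negative": (1 - sens) / spec,  # how much a negative result lowers the odds
    }


def level_likelihood_ratios(with_condition: Dict[str, int],
                            without_condition: Dict[str, int]) -> Dict[str, float]:
    """LR for each result level: P(level | condition) / P(level | no condition)."""
    n_with, n_without = sum(with_condition.values()), sum(without_condition.values())
    return {level: (with_condition[level] / n_with) / (without_condition[level] / n_without)
            for level in with_condition}


def post_test_probability(pre_test: float, lr: float) -> float:
    """Convert a pre-test probability to a post-test probability through odds."""
    odds = pre_test / (1 - pre_test) * lr
    return odds / (1 + odds)


# Hypothetical fall-risk screening results checked against a reference standard.
stats = diagnostic_stats(tp=45, fp=15, fn=5, tn=35)
print({k: round(v, 2) for k, v in stats.items()})
print(level_likelihood_ratios({"high": 30, "medium": 15, "low": 5},
                              {"high": 5, "medium": 15, "low": 30}))
print(round(post_test_probability(0.20, stats["lr_positive"]), 2))
```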

(Guyatt and Rennie, 2002)

Interpretation of Evidence

For Diagnosis: Application

How can I apply the results to patient care?

Will the reproducibility of the test result and its interpretation be satisfactory in my clinical setting? It is important that the test produce reliable results that can be effectively interpreted.
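Reproducibility between clinicians is often summarized with an agreement statistic. The sketch below uses Cohen's kappa, chosen here as one common example rather than a statistic this module prescribes, on made-up ratings of the same ten children by two therapists.

```python
from collections import Counter
from typing import Hashable, Sequence


def cohens_kappa(rater_a: Sequence[Hashable], rater_b: Sequence[Hashable]) -> float:
    """Agreement between two raters, corrected for agreement expected by chance."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("need two non-empty rating lists of equal length")
    n = len(rater_a)
    observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    count_a, count_b = Counter(rater_a), Counter(rater_b)
    # Chance agreement: probability both raters pick the same category independently.
    expected = sum(count_a[c] * count_b[c] for c in count_a.keys() | count_b.keys()) / n ** 2
    if expected == 1:
        raise ValueError("kappa is undefined when both raters use one identical category")
    return (observed - expected) / (1 - expected)


# Hypothetical fall-risk ratings ("Y" = at risk) of the same 10 children by two therapists.
pt_1 = ["Y", "Y", "N", "N", "Y", "N", "N", "N", "Y", "N"]
pt_2 = ["Y", "N", "N", "N", "Y", "N", "Y", "N", "Y", "N"]
print(f"kappa = {cohens_kappa(pt_1, pt_2):.2f}")  # kappa = 0.58
```

A kappa of 1 means perfect agreement and 0 means agreement no better than chance; what counts as satisfactory depends on how the test result will be used in your setting.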