State Space Search Part 3


State Space 3

Chapter 4

Heuristic Search

Backtrack

Depth First

Breadth First

All work if we have well-defined:

Goal state

Start state

State transition rules

But could take a long time

Three Algorithms

An informed guess that guides search

through a state space

Can result in a suboptimal solution

Heuristic

Start (top to bottom): A, B, C, D

Goal (top to bottom): D, C, B, A

Two rules:

clear(X) -> on(X, table)

clear(X) ^ clear(Y) -> on(X, Y)

Generate part of the search space breadth-first.

We’ll find the answer, but it could take a long time.

Blocks World: A Stack of Blocks

1. For each block that is resting where it should, subtract 1

2. For each block that is not resting where it should, add 1

Heuristic 1
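A minimal Python sketch of Heuristic 1, assuming a state is a list of stacks and each stack is listed bottom to top. The representation and the names `resting_on` and `local_h` are illustrative assumptions, not something the slides define.

```python
def resting_on(stacks):
    """Map each block to what it rests on: another block or 'table'."""
    below = {}
    for stack in stacks:
        for i, block in enumerate(stack):
            below[block] = "table" if i == 0 else stack[i - 1]
    return below


def local_h(state, goal):
    """Heuristic 1: -1 per block resting where it should, +1 otherwise."""
    goal_below = resting_on(goal)
    return sum(
        -1 if under == goal_below[block] else 1
        for block, under in resting_on(state).items()
    )


# Goal from the slides: D on C on B on A, with A on the table.
GOAL = [["A", "B", "C", "D"]]
print(local_h([["D", "A", "B", "C"]], GOAL))      # single stack C, B, A, D -> 0
print(local_h([["D", "C"], ["A", "B"]], GOAL))    # C on D, B on A -> 0
```

Both structures score 0, so this local measure cannot tell them apart.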

1. At every level, generate all children

2. Continue down the path with the lowest score

Define three functions:

f(n) = g(n) + h(n)

Where:

h(n) is the heuristic estimate for n; it guides the search

g(n) is the path length from start to the current node; it ensures that we choose the node closest to the root when more than one has the same h value

Hill Climbing
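The two hill-climbing steps can be sketched in Python as follows. This is a sketch under stated assumptions: `is_goal`, `children`, and `score` are hypothetical caller-supplied functions, and `max_steps` is an added safety limit, since pure hill climbing never backs up and can wander forever.

```python
def hill_climb(start, is_goal, children, score, max_steps=100):
    """Follow the lowest-scoring child at every level; never back up."""
    path = [start]
    current = start
    for _ in range(max_steps):
        if is_goal(current):
            return path
        kids = children(current)          # step 1: generate all children
        if not kids:
            return None                   # dead end: no way to continue
        current = min(kids, key=score)    # step 2: keep only the best child
        path.append(current)
    return None                           # gave up after max_steps


# Toy usage: walk along the integers toward 0, scoring by distance from 0.
print(hill_climb(3, lambda n: n == 0, lambda n: [n - 1, n + 1], abs))
# [3, 2, 1, 0]
```

All children of one node share the same g value, so choosing the lowest f among them is the same as choosing the lowest h.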

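Given two structures at level n:

Structure 1: a single stack C, B, A, D (top to bottom)

Structure 2: two stacks, C on D and B on A

The f(n) of each structure is the same:

f(n) = g(n) + (1 + 1 - 1 - 1) = g(n)

But which is actually better?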

Problem: heuristic is local

Single stack C, B, A, D (top to bottom):

Must be entirely undone

Goal requires 6 moves

Two stacks, C on D and B on A:

Goal requires only 2 moves

Problem with local heuristics

Takes the entire structure into account

1. For each block that has the correct support structure, subtract 1 for every block in that support structure

2. For each block that has an incorrect support structure, add 1 for every block in that support structure

Global Heuristic

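Goal (top to bottom): D, C, B, A

Structure 1, a single stack C, B, A, D (top to bottom):

f(n) = g(n) + (3 + 2 + 1 + 0) = g(n) + 6

Structure 2, two stacks, C on D and B on A:

f(n) = g(n) + (1 + 0 - 1 + 0) = g(n)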

So the heuristic correctly chose the second structure
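The two scores above can be reproduced with a short Python sketch. It assumes that each block contributes one point per block in its support structure (the blocks beneath it), which is the reading that yields 3 + 2 + 1 + 0. The list-of-stacks representation (bottom to top) and the names `support` and `global_h` are illustrative assumptions.

```python
def support(stacks):
    """Map each block to the tuple of blocks beneath it, bottom first."""
    beneath = {}
    for stack in stacks:
        for i, block in enumerate(stack):
            beneath[block] = tuple(stack[:i])
    return beneath


def global_h(state, goal):
    """Add the size of each incorrect support structure, subtract each correct one."""
    goal_support = support(goal)
    total = 0
    for block, under in support(state).items():
        if under == goal_support[block]:
            total -= len(under)
        else:
            total += len(under)
    return total


GOAL = [["A", "B", "C", "D"]]                      # D on C on B on A
print(global_h([["D", "A", "B", "C"]], GOAL))     # 6
print(global_h([["D", "C"], ["A", "B"]], GOAL))   # 0
```

Unlike the local measure, this one separates the two structures and prefers the one that is only two moves from the goal.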

Seems to Work

The Road Not Taken

Open contains current fringe of the search

Open: priority queue ordered by f(n)

Closed: queue of states already visited

Nodes could contain backward pointers so

that path back to root can be recovered

Best First

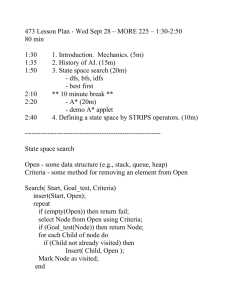

path best_first(start)
{
    open = [start]; closed = [];
    while (!open.isEmpty())
    {
        cs = open.serve();      // remove the state with the lowest f value
        if (cs == goal)
            return path;        // follow the backward pointers from cs to start
        generate children of cs;
        for each child
        {
            case:
            {
                child is on open:
                    if (g(child) < g(child on open))
                        // node has been reached by a shorter path
                        g(child on open) = g(child);
                    break;
                child is on closed:
                    if (g(child) < g(child on closed))
                    {
                        // node has been reached by a shorter path and is more attractive
                        remove state from closed;
                        open.enqueue(child);
                    }
                    break;
                default:
                {
                    // child has not been examined yet
                    f(child) = g(child) + h(child);
                    open.enqueue(child);
                }
            }
        }
        closed.enqueue(cs);     // all of cs's children have been examined
        open.reorder();         // reorder queue because the case statement may have affected ordering
    }
    return [];                  // failure
}
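For comparison, here is a runnable Python sketch of the same best-first idea. It is one possible realization, not the slides' own code: it assumes `successors(state)` yields `(child, step_cost)` pairs, keeps open as a `heapq` priority queue, and replaces the explicit reorder step with lazy deletion (outdated heap entries are skipped when popped). All function and parameter names are hypothetical.

```python
import heapq
from itertools import count


def best_first(start, is_goal, successors, h):
    """Return the list of states from start to a goal, or [] on failure."""
    tie = count()                        # tie-breaker so states are never compared
    g = {start: 0}                       # best known path cost to each state
    parent = {start: None}               # backward pointers for path recovery
    open_heap = [(h(start), next(tie), start)]
    closed = set()
    while open_heap:
        _, _, cs = heapq.heappop(open_heap)
        if cs in closed:
            continue                     # outdated entry: already expanded
        if is_goal(cs):
            path = []
            while cs is not None:        # walk the backward pointers to the root
                path.append(cs)
                cs = parent[cs]
            return path[::-1]
        closed.add(cs)
        for child, cost in successors(cs):
            new_g = g[cs] + cost
            if child not in g or new_g < g[child]:
                # new state, or reached again by a shorter path
                g[child] = new_g
                parent[child] = cs
                closed.discard(child)    # reopen if it was on closed
                heapq.heappush(open_heap, (new_g + h(child), next(tie), child))
    return []


# Toy graph (made up for illustration): the cheapest route is S, A, B, G.
graph = {"S": [("A", 1), ("B", 4)], "A": [("B", 1), ("G", 5)], "B": [("G", 1)], "G": []}
print(best_first("S", lambda s: s == "G", lambda s: graph[s], lambda s: 0))
# ['S', 'A', 'B', 'G']
```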

Admissible Search Algorithms

◦ Find the optimal path to the goal if a path to

the goal exists

Breadth-First: Admissible

Depth-First: Not admissible

Admissibility

Uses best-first search with f(n) = g(n) + h(n)

Algorithm A

f*(n) = g*(n) + h*(n)

Where

g*(n) is the cost of the shortest path from

start to n

h*(n) is the cost of the shortest path from n

to goal

So, f*(n) is the actual cost of the optimal path

Can we know f*(n)?

f*

Not without having exhaustively searched

the graph

Goal: Approximate f*(n)

The Oracle

g(n) – actual cost to n

g*(n) – shortest path from start to n

So g(n) >= g*(n)

When g*(n) = g(n), the search has

discovered the optimal path to n

Consider g*(n)

Often we can know whether h(n) is bounded above by h*(n)

◦ (This sometimes means finding a function h2(n) such that h(n) <= h2(n) <= h*(n))

Meaning: the optimal path is at least as expensive as the one suggested by the heuristic

Turns out this is a good thing (within

bounds)

Consider h*(n)

Start:      Goal:
2 8 3       1 2 3
1 6 4  ->   8 _ 4
7 _ 5       7 6 5

(_ marks the blank, B)

Invent two heuristics: h1 and h2

h1(n): number of tiles not in goal position

h1(n) = 5 (1,2,6,8,B)

h2(n): number of moves required to move out-of-place

tiles to goal

T1 = 1, T2 = 1, T6 = 1, T8 = 2, TB = 1

h2(n) = 6

h1(n) <= h2(n) ?
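The two counts can be checked with a small Python sketch. It assumes a board is a 9-tuple read row by row with 0 standing for the blank, and it follows the slide's convention of counting the blank as a tile (many textbook versions leave the blank out). The names `START`, `GOAL`, `h1`, and `h2` as Python functions are illustrative.

```python
START = (2, 8, 3,
         1, 6, 4,
         7, 0, 5)
GOAL = (1, 2, 3,
        8, 0, 4,
        7, 6, 5)


def h1(state, goal=GOAL):
    """Number of tiles (blank included) not in their goal position."""
    return sum(1 for s, g in zip(state, goal) if s != g)


def h2(state, goal=GOAL):
    """Sum over tiles of the row plus column distance to the goal position."""
    total = 0
    for idx, tile in enumerate(state):
        goal_idx = goal.index(tile)
        total += abs(idx // 3 - goal_idx // 3) + abs(idx % 3 - goal_idx % 3)
    return total


print(h1(START))  # 5
print(h2(START))  # 6
```

For this state h1(n) <= h2(n), since every out-of-place tile adds exactly 1 to h1 and at least 1 to h2.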

An 8-puzzle Intuition

h2(n) <= h*(n)

Each out-of-place tile has to be moved a

certain distance to reach goal

h1(n) <= h2(n)

h2(n) requires moving out-of-place tiles

at least as far as h1(n)

So, h1(n) <= h2(n) <=h*(n)

h1(n) is bounded above by h*(n)

A*

If algorithm A uses a heuristic that returns

a value h(n) <= h*(n) for all n, then it is

called A*

What does this property mean?

Leads to a Definition

It means that the heuristic estimate chosen never thinks a path is worse (more costly) than it actually is

What goes wrong without it, with the goal in the right subtree (rst):

Suppose: h(rst) = 96

• But we know it is really 1 (i.e. h*(rst) = 1) because we’re the oracle

Suppose: h(lst) = 42

• The overestimate for rst makes the left branch seem better than the right one, so the search is drawn away from the optimal path
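On a graph small enough to play the oracle, the property h(n) <= h*(n) can be tested directly. The Python sketch below computes h* with Dijkstra's algorithm run backward from the goal and then compares it with a table of estimates. The graph, its edge costs, and the names `true_costs_to_goal` and `is_admissible` are made up for illustration; only the values 96, 42, and 1 come from the example above.

```python
import heapq


def true_costs_to_goal(graph, goal):
    """h*: cheapest cost from every node to goal, via Dijkstra on reversed edges."""
    reverse = {}
    for node, edges in graph.items():
        for nxt, cost in edges:
            reverse.setdefault(nxt, []).append((node, cost))
    dist = {goal: 0}
    heap = [(0, goal)]
    while heap:
        d, node = heapq.heappop(heap)
        if d > dist[node]:
            continue                      # outdated heap entry
        for prev, cost in reverse.get(node, []):
            if d + cost < dist.get(prev, float("inf")):
                dist[prev] = d + cost
                heapq.heappush(heap, (d + cost, prev))
    return dist


def is_admissible(h, h_star):
    """True when the estimate never exceeds the true cost at any node."""
    return all(h[n] <= h_star[n] for n in h_star)


# Hypothetical tree: the goal is one step from rst and far from lst.
graph = {"root": [("lst", 1), ("rst", 1)], "lst": [("goal", 50)],
         "rst": [("goal", 1)], "goal": []}
h_star = true_costs_to_goal(graph, "goal")
bad_h = {"root": 0, "lst": 42, "rst": 96, "goal": 0}
good_h = {"root": 0, "lst": 42, "rst": 1, "goal": 0}
print(is_admissible(bad_h, h_star))   # False: 96 > h*(rst) = 1
print(is_admissible(good_h, h_star))  # True
```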

Suppose:

1. h(n) = 0 and so <= h*(n)

Search will be controlled by g(n)

If g(n) = 0 as well, search will be random: given enough time, we’ll find a path to the goal, though not necessarily an optimal one

If g(n) is the actual cost to n, the search becomes breadth-first because the sole reason for examining a node is its distance from start.

We already know that this terminates in an

optimal solution

Claim: All A* Algorithms are

admissible

2.

h(n) = h*(n)

Then the algorithm will go directly to the goal since h*(n)

computes the shortest path to the goal

Therefore, if our heuristic is between these two extremes, our search will always result in an optimal solution

Write h’(n) for the heuristic h(n) = 0

So, for any h such that h’(n) <= h(n) <= h*(n) we will always find an optimal solution

The closer our heuristic is to h’(n), the more extraneous nodes we’ll have to examine along the way

For any two A* heuristics ha and hb:

If ha(n) <= hb(n) for all n, hb is more informed.

Informedness

Comparison of two solutions that discover the optimal path to the goal state:

1. Breadth-first: h(n) = 0

2. h(n) = number of tiles out of place

The better-informed solution examines fewer extraneous states on its path to the goal

Comparison
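The comparison can be run on the 8-puzzle instance used earlier (2 8 3 / 1 6 4 / 7 _ 5). The Python sketch below counts how many states each heuristic expands before the goal is reached. It is an illustration under assumptions: boards are 9-tuples with 0 for the blank, every move costs 1, and the tiles-out-of-place count here leaves the blank out so that the estimate never exceeds the true cost. All names are hypothetical.

```python
import heapq
from itertools import count

START = (2, 8, 3, 1, 6, 4, 7, 0, 5)
GOAL = (1, 2, 3, 8, 0, 4, 7, 6, 5)


def neighbors(state):
    """Yield every board reachable by sliding the blank one square."""
    i = state.index(0)
    r, c = divmod(i, 3)
    for dr, dc in ((-1, 0), (1, 0), (0, -1), (0, 1)):
        nr, nc = r + dr, c + dc
        if 0 <= nr < 3 and 0 <= nc < 3:
            j = nr * 3 + nc
            board = list(state)
            board[i], board[j] = board[j], board[i]
            yield tuple(board)


def misplaced(state):
    """Tiles out of place, not counting the blank."""
    return sum(1 for s, g in zip(state, GOAL) if s != 0 and s != g)


def a_star(start, h):
    """Return (solution length in moves, number of states expanded)."""
    tie = count()
    g = {start: 0}
    heap = [(h(start), next(tie), start)]
    closed = set()
    while heap:
        _, _, state = heapq.heappop(heap)
        if state in closed:
            continue                       # outdated heap entry
        if state == GOAL:
            return g[state], len(closed)
        closed.add(state)
        for child in neighbors(state):
            new_g = g[state] + 1
            if new_g < g.get(child, float("inf")):
                g[child] = new_g
                closed.discard(child)
                heapq.heappush(heap, (new_g + h(child), next(tie), child))
    return None, len(closed)


moves_bf, expanded_bf = a_star(START, lambda s: 0)   # h(n) = 0: breadth-first
moves_h1, expanded_h1 = a_star(START, misplaced)     # tiles out of place
print(moves_bf, moves_h1)          # both find a 5-move solution
print(expanded_bf, expanded_h1)    # the informed search expands no more states
```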