Advanced Operating Systems, CSci555

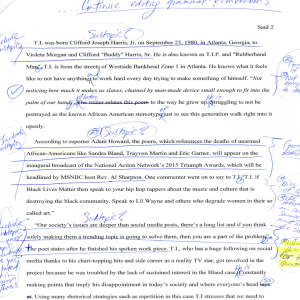

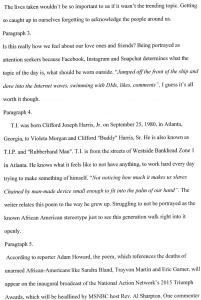
