Computer Architecture: Network Connected Multi’s


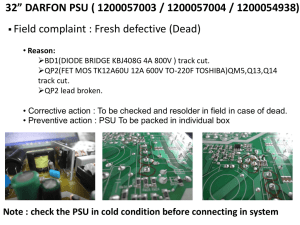

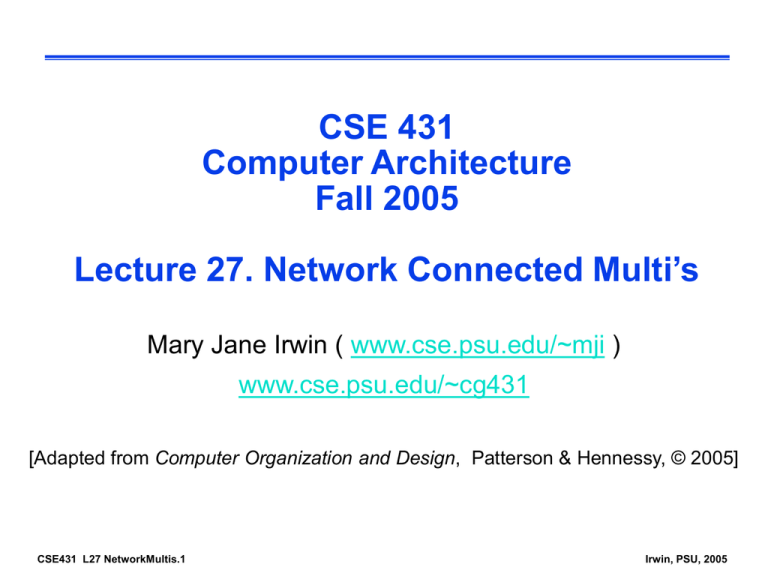

CSE 431 Computer Architecture, Fall 2005

Lecture 27. Network Connected Multi’s

Mary Jane Irwin ( www.cse.psu.edu/~mji )

www.cse.psu.edu/~cg431

[Adapted from Computer Organization and Design, Patterson & Hennessy, © 2005]

CSE431 L27 NetworkMultis.1 Irwin, PSU, 2005

Review: Bus Connected SMPs (UMAs)

[Figure: four processors, each with its own cache, attached to a single bus shared with memory and I/O]

- Caches are used to reduce latency and to lower bus traffic
- Must provide hardware for cache coherence and process synchronization
- Bus traffic and bandwidth limit scalability (<~ 36 processors)

Review: Multiprocessor Basics

- Q1 – How do they share data?
- Q2 – How do they coordinate?
- Q3 – How scalable is the architecture? How many processors?

| | | # of Proc |
|---|---|---|
| Communication model | Message passing | 8 to 2048 |
| | Shared address: NUMA | 8 to 256 |
| | Shared address: UMA | 2 to 64 |
| Physical connection | Network | 8 to 256 |
| | Bus | 2 to 36 |

Network Connected Multiprocessors

[Figure: three processor nodes, each with a cache and a local memory, connected by an interconnection network (IN)]

- Either a single address space (NUMA and ccNUMA) with implicit processor communication via loads and stores, or multiple private memories with message passing communication via sends and receives
- The interconnection network supports interprocessor communication

Summing 100,000 Numbers on 100 Processors

Start by distributing 1000 elements of vector A to each of the local memories and summing each subset in parallel:

    sum = 0;
    for (i = 0; i < 1000; i = i + 1)
      sum = sum + A1[i];                 /* sum local array subset */

The processors then coordinate in adding together the sub sums (Pn is the number of the processor, send(x,y) sends value y to processor x, and receive() receives a value):

    half = 100; limit = 100;
    repeat
      half = (half+1)/2;                 /* dividing line (integer division) */
      if (Pn >= half && Pn < limit) send(Pn-half, sum);   /* upper part sends */
      if (Pn < (limit/2)) sum = sum + receive();          /* lower part adds */
      limit = half;
    until (half == 1);                   /* final sum in P0's sum */

An Example with 10 Processors

[Figure: processors P0 through P9, each holding a local sum; half = 10]

Communication in Network Connected Multi’s

- Implicit communication via loads and stores
  - hardware designers have to provide coherent caches and process synchronization primitives
  - lower communication overhead
  - harder to overlap computation with communication
  - more efficient to use an address to remote data when demanded rather than to send for it in case it might be used (such a machine has distributed shared memory (DSM))
- Explicit communication via sends and receives
  - simplest solution for hardware designers
  - higher communication overhead
  - easier to overlap computation with communication
  - easier for the programmer to optimize communication

Cache Coherency in NUMAs

- For performance reasons we want to allow the shared data to be stored in caches
- Once again we have multiple copies of the same data with the same address in different processors
  - bus snooping won’t work, since there is no single bus on which all memory references are broadcast
- Directory-based protocols
  - keep a directory that is a repository for the state of every block in main memory (which caches have copies, whether it is dirty, etc.)
  - directory entries can be distributed (sharing status of a block always in a single known location) to reduce contention
  - directory controller sends explicit commands over the IN to each processor that has a copy of the data

IN Performance Metrics

- Network cost
  - number of switches
  - number of (bidirectional) links on a switch to connect to the network (plus one link to connect to the processor)
  - width in bits per link, length of link
- Network bandwidth (NB) – represents the best case
  - bandwidth of each link * number of links
- Bisection bandwidth (BB) – represents the worst case
  - divide the machine in two parts, each with half the nodes, and sum the bandwidth of the links that cross the dividing line
- Other IN performance issues
  - latency on an unloaded network to send and receive messages
  - throughput – maximum # of messages transmitted per unit time
  - # routing hops in the worst case, congestion control and delay

Bus IN

[Figure legend: bidirectional network switch; processor node]

- N processors, 1 switch, 1 link (the bus)
- Only 1 transfer at a time
  - NB = link (bus) bandwidth * 1
  - BB = link (bus) bandwidth * 1

Ring IN

- N processors, N switches, 2 links/switch, N links
- N simultaneous transfers
  - NB = link bandwidth * N
  - BB = link bandwidth * 2
- If a link is as fast as a bus, the ring is only twice as fast as a bus in the worst case, but is N times faster in the best case

Fully Connected IN

- N processors, N switches, N-1 links/switch, (N*(N-1))/2 links
- N simultaneous transfers
  - NB = link bandwidth * (N*(N-1))/2
  - BB = link bandwidth * $(N/2)^2$

Crossbar (Xbar) Connected IN

- N processors, $N^2$ switches (unidirectional), 2 links/switch, $N^2$ links
- N simultaneous transfers
  - NB = link bandwidth * N
  - BB = link bandwidth * N/2

Hypercube (Binary N-cube) Connected IN

[Figure: a 2-cube and a 3-cube]

- N processors, N switches, $\log_2 N$ links/switch, $(N \log_2 N)/2$ links
- N simultaneous transfers
  - NB = link bandwidth * $(N \log_2 N)/2$
  - BB = link bandwidth * N/2

2D and 3D Mesh/Torus Connected IN

- N processors, N switches, 2, 3, 4 (2D torus) or 6 (3D torus) links/switch, 4N/2 links or 6N/2 links
- N simultaneous transfers
  - NB = link bandwidth * 4N or link bandwidth * 6N
  - BB = link bandwidth * $2N^{1/2}$ or link bandwidth * $2N^{2/3}$

Fat Tree

- N processors, log(N-1)*logN switches, 2 up + 4 down = 6 links/switch, N*logN links
- N simultaneous transfers
  - NB = link bandwidth * NlogN
  - BB = link bandwidth * 4

Fat Tree

[Figure: a tree network with leaf nodes A, B, C and D]

- Trees are good structures. People in CS use them all the time. Suppose we wanted to make a tree network.
- Any time A wants to send to C, it ties up the upper links, so that B can't send to D.
  - The bisection bandwidth on a tree is horrible: 1 link, at all times
- The solution is to 'thicken' the upper links.
  - Adding links as the tree gets thicker increases the bisection bandwidth
- Rather than design a bunch of N-port switches, use pairs

SGI NUMAlink Fat Tree

www.embedded-computing.com/articles/woodacre

IN Comparison

For a 64 processor system (only the Bus column is filled in on the slide):

| | Bus | Ring | Torus | 6-cube | Fully connected |
|---|---|---|---|---|---|
| Network bandwidth | 1 | | | | |
| Bisection bandwidth | 1 | | | | |
| Total # of switches | 1 | | | | |
| Links per switch | | | | | |
| Total # of links | 1 | | | | |

Network Connected Multiprocessors

| | Proc | Proc Speed | # Proc | IN Topology | BW/link (MB/sec) |
|---|---|---|---|---|---|
| SGI Origin | R16000 | | 128 | fat tree | 800 |
| Cray T3E | Alpha 21164 | 300MHz | 2,048 | 3D torus | 600 |
| Intel ASCI Red | Intel | 333MHz | 9,632 | mesh | 800 |
| IBM ASCI White | Power3 | 375MHz | 8,192 | multistage Omega | 500 |
| NEC ES | SX-5 | 500MHz | 640*8 | 640-xbar | 16000 |
| NASA Columbia | Intel Itanium2 | 1.5GHz | 512*20 | fat tree, Infiniband | |
| IBM BG/L | Power PC 440 | 0.7GHz | 65,536*2 | 3D torus, fat tree, barrier | |

IBM BlueGene

| | 512-node proto | BlueGene/L |
|---|---|---|
| Peak Perf | 1.0 / 2.0 TFlops/s | 180 / 360 TFlops/s |
| Memory Size | 128 GByte | 16 / 32 TByte |
| Foot Print | 9 sq feet | 2500 sq feet |
| Total Power | 9 KW | 1.5 MW |
| # Processors | 512 dual proc | 65,536 dual proc |
| Networks | 3D Torus, Tree, Barrier | 3D Torus, Tree, Barrier |
| Torus BW | 3 B/cycle | 3 B/cycle |

A BlueGene/L Chip

[Figure: BlueGene/L chip block diagram. Labels recoverable from the figure: two 700 MHz PowerPC 440 CPUs, each with a double FPU, 32K/32K L1 caches and a 2KB L2; a 16KB multiport SRAM buffer; a 4MB L3 of ECC eDRAM (128B line, 8-way assoc); Gbit ethernet; 3D torus interface (6 in, 6 out, 1.6GHz, 1.4Gb/s link); fat tree interface (3 in, 3 out, 350MHz, 2.8Gb/s link); barrier network (4 global barriers); DDR control (144b DDR, 256MB, 5.5GB/s). Internal paths are labeled 128 and 256 bits wide, at 5.5 GB/s and 11 GB/s.]

Networks of Workstations (NOWs) Clusters

- Clusters of off-the-shelf, whole computers with multiple private address spaces
- Clusters are connected using the I/O bus of the computers
  - lower bandwidth than multiprocessors that use the memory bus
  - lower speed network links
  - more conflicts with I/O traffic
- Clusters of N processors have N copies of the OS, limiting the memory available for applications
- Improved system availability and expandability
  - easier to replace a machine without bringing down the whole system
  - allows rapid, incremental expandability
- Economy-of-scale advantages with respect to costs

Commercial (NOW) Clusters

| | Proc | Proc Speed | # Proc | Network |
|---|---|---|---|---|
| Dell PowerEdge | P4 Xeon | 3.06GHz | 2,500 | Myrinet |
| IBM eServer SP | Power4 | 1.7GHz | 2,944 | |
| VPI BigMac | Apple G5 | 2.3GHz | 2,200 | Mellanox Infiniband |
| HP ASCI Q | Alpha 21264 | 1.25GHz | 8,192 | Quadrics |
| LLNL Thunder | Intel Itanium2 | 1.4GHz | 1,024*4 | Quadrics |
| Barcelona | PowerPC 970 | 2.2GHz | 4,536 | Myrinet |

Summary

- Flynn’s classification of processors: SISD, SIMD, MIMD
  - Q1 – How do processors share data?
  - Q2 – How do processors coordinate their activity?
  - Q3 – How scalable is the architecture (what is the maximum number of processors)?
- Shared address multis – UMAs and NUMAs
  - Scalability of bus connected UMAs is limited (< ~ 36 processors)
  - Network connected NUMAs are more scalable
  - Interconnection Networks (INs)
    - fully connected, xbar
    - ring
    - mesh
    - n-cube, fat tree
- Message passing multis
- Cluster connected (NOWs) multis

Next Lecture and Reminders

- Next lecture
  - Reading assignment – PH 9.7
- Reminders
  - HW5 (and last) due Dec 6th (Part 1)
  - Check grade posting on-line (by your midterm exam number) for correctness
  - Final exam (tentatively) scheduled for Tuesday, December 13th, 2:30-4:20, 22 Deike
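Worked example for the "Summing 100,000 Numbers on 100 Processors" slide. The sketch below simulates the half/limit reduction on a single machine, so the pairing of senders and receivers can be checked for any processor count. It is an illustration only, not code from the lecture: the function name `tree_reduce` and the `inbox` dictionary (which stands in for send and receive) are assumptions made for this example.

```python
def tree_reduce(local_sums):
    """Simulate the slide's half/limit reduction.

    local_sums[pn] plays the role of processor Pn's local `sum`.
    Returns (final sum held by P0, number of send/receive rounds).
    """
    sums = list(local_sums)
    half = limit = len(sums)
    rounds = 0
    while True:                       # repeat ... until (half == 1)
        half = (half + 1) // 2        # dividing line
        # Hypothetical stand-in for send(): Pn in [half, limit) sends to Pn - half.
        inbox = {pn - half: sums[pn] for pn in range(half, limit)}
        # Stand-in for receive(): Pn below limit // 2 adds the value sent to it.
        for pn in range(limit // 2):
            sums[pn] += inbox[pn]
        limit = half
        rounds += 1
        if half == 1:
            break
    return sums[0], rounds


# 100 processors, each summing its own 1000-element slice of A first.
a = list(range(100_000))
local = [sum(a[p * 1000:(p + 1) * 1000]) for p in range(100)]
total, rounds = tree_reduce(local)
assert total == sum(a)
```

With 100 processors the loop runs 7 rounds (half takes the values 50, 25, 13, 7, 4, 2, 1). When limit is odd, the middle processor neither sends nor receives in that round, which is why the receive test uses limit/2 with integer division while the send test uses half.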
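Worked example for the "IN Comparison" slide. The sketch below evaluates the formulas from the individual topology slides for a given N, in units of one link's bandwidth. It is a hedged illustration, not the lecture's answer key: the function name `in_metrics` and the tuple layout are assumptions, N is assumed to be both a power of two and a perfect square (as 64 is), link counts exclude the extra link from each switch to its processor, and the 2D torus network bandwidth uses 4N exactly as written on the mesh/torus slide.

```python
import math


def in_metrics(n):
    """Return {topology: (NB, BB, switches, links_per_switch, links)}.

    NB and BB are multiples of a single link's bandwidth.
    Assumes n is a power of two and a perfect square (e.g. 64).
    """
    log_n = int(math.log2(n))         # hypercube dimension
    side = math.isqrt(n)              # nodes per side of the 2D torus
    full = n * (n - 1) // 2           # links in a fully connected network
    cube = n * log_n // 2             # links in a hypercube
    return {
        # The slide leaves links/switch blank for the bus, hence None.
        "bus": (1, 1, 1, None, 1),
        "ring": (n, 2, n, 2, n),
        "2D torus": (4 * n, 2 * side, n, 4, 4 * n // 2),
        "hypercube": (cube, n // 2, n, log_n, cube),
        "fully connected": (full, (n // 2) ** 2, n, n - 1, full),
    }


for name, row in in_metrics(64).items():
    print(f"{name:16s} NB={row[0]:5d} BB={row[1]:5d} "
          f"switches={row[2]:3d} links/switch={row[3]} links={row[4]}")
```

For N = 64 the hypercube is a 6-cube, matching the column heading on the comparison slide.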