Kanji Storyteller


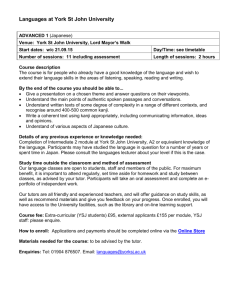

Kanji Storyteller: A Sketch-Based Multimedia Authoring and Feedback Application to Reinforce Japanese Language Learning

Ross Peterson, Sung Kim, Ke Wang, Gabriel Dzodom, Francisco Vides, Jimmy Ho, Hong-Hoe Kim

Department of Computer Science and Engineering, Texas A&M University – College Station

ABSTRACT

We present Kanji Storyteller, a kanji sketch recognition system that educates and entertains students as they construct a storyboard space. The current kanji set includes symbols representing concrete objects that can be included as actors, sprites, or objects within the story space. The story space can be annotated so that the author can write a story about the space they created. When using the story space, the author selects a spot to enter kanji and is presented with a kanji insertion popup window. The user confirms their entry manually so that the system can attempt to recognize the written kanji and present corresponding images, or “stickers,” that represent the kanji’s actual meaning. We implement recognition of sketched kanji and kana with three different template matching methods: a new method for online kanji recognition that we call “Stroke by Stroke,” the $p recognizer for kanji, and a modified $1 algorithm for kana recognition. Kanji Storyteller’s storyboard space is a medium for educating users on the meaning of traditional kanji as well as their transcription and stroke order.

Keywords

sketch recognition, educational software, new media, Japanese kanji

1. INTRODUCTION

Novice students of the Japanese language must not only learn and understand two separate syllabaries; many must also struggle to remember the pronunciation, stroke order, radicals, and form of the Chinese characters used in Japanese, called “kanji.” Traditional study methods emphasize mnemonics, discipline, and repetition to master kanji use. When these traditional methods fail, many students stop studying altogether.
As such, we have focused on creating a fun and engaging system that allows students to construct a kanji study space, create their own stories and mnemonics, and immerse themselves in an engaging set of visuals. By mapping kanji sounds and visuals to the writing of the kanji, we hope to improve the student’s ability to recall the kanji. This application also enables students to study and, at the same time, express their own artistic visions within their new understanding of the character set. The interface also features a jukugo (compound kanji words) and radical (commonly recurring parts of kanji) recognition system so that the student can explore those facets of kanji study. Kanji Storyteller is also planned to feature a social panel that will act as a common discussion space, giving students opportunities to reinforce their learning by discussing mnemonics or the annotations and stories attached to a given canvas.

2. RELATED WORK

Many educational applications support sketch recognition across a range of fields.

Sketch Recognition Laboratory Educational Applications

Mechanix is an educational system in which engineering students draw structure sketches on sketch pads [Field11]. Students and instructors can draw trusses and free-body diagrams, along with other shapes. The benefit of Mechanix is that the system provides feedback to students when they want to check whether their sketches are correct. Additionally, Mechanix automatically scores the students' assignments. The system's recognizer uses a geometrical approach similar to LADDER [Hammond05]. Most shapes used in engineering classes are composed of several lines. For example, an arrow has one horizontal line and two small lines. This approach allows Mechanix to recognize many kinds of composite or complex shapes successfully.

iCanDraw is an application that teaches users how to draw a human face [Dixon10].
The application shows the steps for drawing a face and tells users which shapes they need to draw. Feedback in iCanDraw is delivered step by step, and additional feedback is provided when the system detects that a face sketch is complete.

Application to teach how to draw characters

Taele introduced an application that teaches how to draw East Asian characters: Korean, Chinese, and Japanese [Taele10]. The recognizer combines a low-level recognizer, PaleoSketch, which analyzes the primitives in shapes [Paulson08], with a high-level recognizer that encodes rules over those primitives to recognize the shapes. To teach how to draw the characters, the application shows the steps that users need to draw. After the users finish their drawings, the application summarizes the scores of their drawings.

Developers of educational software use a wide assortment of multimedia to provide entertainment value as a way of capturing the interest of students. Genres of educational software that blend educational elements with entertainment are often labeled edutainment or learn to play [Rap06]. Research studies have identified the core attributes that make edutainment software well suited to educational purposes [Khi08]. Among those positive contributions are the capabilities of entertaining software to keep the attention of learners, retain their flow of concentration, and promote good learning by stimulating creativity (learn by doing).

While there are many kanji sketch recognition applications, dictionaries, and Japanese language study tools [Ren09] [TNJ12], our survey of prior work has not revealed any software tools that feature the kanji-to-image and kanji-to-audio transformations that our system provides, nor have we found any applications that support the authoring of stories based on kanji study.

Figure 0. Screenshot of “Writing Test” from Renshuu Application

Renshuu [Ren09], a simple and free sketch-based study application, drills its users in writing a target kanji by giving them a fill-in-the-blank prompt and a sketch area. The user sketches the target kanji and then chooses to reveal the actual target kanji by pressing a reveal button. The revealed kanji features the stroke count and stroke order of the kanji, along with the onyomi (“on” pronunciation used in compound kanji words) in katakana syllabary script, the kunyomi (“kun” pronunciation used in single kanji words) in hiragana syllabary script, and a few common jukugo in which the kanji is found. The application performs no actual sketch recognition; instead, the user marks whether they wrote the kanji correctly or incorrectly. The system records the self-reported hit and miss rate at the bottom of the application. Renshuu also lets the student search kanji or English words by their JLPT level.

Tagai ni Jisho (“With Each Other Dictionary”) [TNJ12] is a free, open-source Japanese dictionary and kanji lookup tool that provides detailed information, including nanori (pronunciation of kanji used in names), dictionary lookup codes such as Heisig’s, 4 corner, and SKIP, and component radicals for kanji and words of the Japanese language. Tagai ni Jisho is particularly noteworthy for its completeness, its speed, and a feature that allows students to mark which kanji they have studied so as to limit repetition.

In scholarly publications on kanji study and sketch recognition, Taele and Hammond [Taele09] note the difficulty that students of Japanese have in remembering how to write and read kanji, due to shape collisions and the non-phonetic properties, respectively, of the logographic script. Their kanji sketch recognition system, Hashigo, aimed to improve student recall by acting as an interactive instructor that provided feedback on the sketch provided by the student.
Our system takes a different approach to the difficulty of remembering how to write kanji: it maps the written kanji, however poorly written, to an image analogue that represents the kanji, and it provides the option to see the kanji as a part of the original image. This reduces the effect of shape collisions and similarity between kanji through disambiguation and association with the idea that the kanji represents. Furthermore, because the image is placed on a permanent canvas, the user is exposed to the kanji for longer periods of time than in kanji (flash card) drills, where the kanji is seen for only a few seconds.

Lin et al.’s research is perhaps the most relevant to our system in that they constructed a collaborative, story-based kanji learning system for tabletop computers, where learners congregate around the tabletop, sketch kanji and annotations, and arrange computer-recognized radical cards that combine into larger kanji [Lin09]. Their collaborative story construction tabletop system inspired us to create a networked desktop/laptop analogue where students could share their stories as well as search for and construct kanji by selecting radical components. Given the current adoption rate of tabletop systems, the fact that our system caters to owners of laptops and desktops means that it can reach a much larger audience. Furthermore, our system does not necessarily require touch screens, cards, or camera trackers, which lowers the hardware cost compared to Lin’s system. The Kanji Storyteller system also features a dictionary that allows in-depth study of the kanji, which Lin’s system does not provide.

3. IMPLEMENTATION

The graphical user interface for Kanji Storyteller is divided into two views with 3 main panels:

- Drawing Panel / Canvas (Right)
- Dictionary Panel (Left) – Compose View Only
- Story Panel (Bottom Center)
- Social Panel (Right) – Explore View Only

Figure 1. Kanji Storyteller GUI

The drawing panel is the canvas where students can construct their scene with the kanji and their associated static images. Instead of only drawing pictures on the canvas directly, students click on any point on the drawing panel to create a popup with an area where they can draw a kanji. If the kanji they draw is contained in our content database and recognized by our system, an associated sticker image can be chosen from the recognition popup menu and placed on the screen. For example, if the user draws the kanji for dog (犬), the user can select from several kinds of dogs and place that dog on the screen at the point where the kanji was drawn. In this way, users are rewarded for correctly drawing a kanji by having the desired sticker appear on the canvas.

The dictionary panel allows the student to see an overview of all kanji in the language and the range of possibilities that they can draw from to create their story space. The dictionary allows the student to look up the details of a particular kanji of interest so that they can master it. The dictionary panel also allows students to sort the fields and study the kanji along a field of interest. For example, in preparation for the Japanese Language Proficiency Test (JLPT), a student may want to sort the JLPT level field and study all JLPT kanji starting from level 4 up to 1. Finally, the dictionary panel has a search form that allows the student to find details on a particular kanji directly rather than searching manually through the table. This panel is visible in compose view.

The story panel is the section of the user interface that enables the user to create annotations to supplement the graphics on the canvas. We believe the companion annotations further the user's understanding of the context of the kanji they have studied. On the user interface, the story panel is directly below the drawing panel; it supports basic formatting features like most standard editors.
The whole system is planned to be part of a social network where users exchange feedback on stories and handwriting shared by their peers. The social panel is designed to support this interaction. Located at the right side of the drawing panel, the social panel comprises two major components: the display component, which renders all feedback connected to the story in context, and the entry component, which enables the user to write and post feedback.

The interface also features a control panel that acts as the main menu for the interface. From left to right, the control panel contains a file command button group, a sticker depth position button group, a radical, jukugo, and kana-to-word ‘grouped search’ button group, clear stickers and clear background buttons, a help button, and a view selection dropdown menu. The file command button group consists of a new button, a load button, and a save button. The sticker depth position button group features a forward button, a backward button, a to-front button, and a to-back button.

Database

Since one of our major goals was to provide a complete study suite for kanji, it was important that Kanji Storyteller have a database that includes at least the most commonly used kanji, which describe a lower limit for fluency in Japanese. However, even this lower limit may be too restrictive for students who are passionate about studying kanji. Therefore, we thought it would be ideal to include close to all of the kanji in the Japanese language. In line with this thinking, we chose to start with the KANJIDIC database [KDIC], which is maintained by the Electronic Dictionary Research and Development Group at Monash University and contains 6355 kanji. From this original database, we populated a Microsoft Excel spreadsheet that is read into Kanji Storyteller’s dictionary panel through the Java Excel API [Jaxl].
We chose an Excel document as our storage structure, accessed through the Java Excel API, because our kanji database is mostly static (i.e., kanji are not frequently added to the language) and the Java Excel API was well documented and provided easy access to the Excel document. The database file itself contains only the information most relevant to students of Japanese: meanings, pronunciations, and a few statistics and indicators of the difficulty and frequency of use of the kanji.

While the KanjiDictionary spreadsheet is useful for storing text-based information, it is less than ideal for storing the images and audio that we plan to include for kanji that the recognizer can transform. As such, we have constructed several separate property text files that specify the relative links to a local first-level cache of the associated audio and sticker/kanji images. Since only a relatively small number of kanji are recognized by the recognizer, the cost of maintaining and updating these separate associative property files and the image cache is relatively small. The property files also have the advantage of pointing to a small cache of easy-to-find and frequently used images. A similar approach was taken for specifying the audio and images for the jukugo and radical recognition data storage. The layout and operation of the property files are discussed in more detail later.

A second level of caching has also been built by using the Google Web Search API search interface to retrieve images for all 6355 kanji and all katakana and hiragana. In total, this cache is about 15 gigabytes in size. There were a few kanji for which the Google-based image caching failed to find images. So when the system attempts to retrieve an image for a kanji at runtime, it first checks the first- and second-level caches for an image using the kanji itself as the search key.
If the lookup fails, the system uses the Google Web Search API to retrieve an image dynamically at runtime. Because our long-term goal of expanding the recognizer to all kanji has not yet been reached, and because we want to support learner creativity, any kanji that is not yet sketch recognizable can still be used to create sticker images by going to the kanji’s dictionary entry and pressing a “get images from internet” button. The button dynamically retrieves a set of images using the Google Web Search API.

Since many results can be returned on any given radical, jukugo, or kanji lookup, the caching method above becomes all the more important. With many results, the recognition menu must populate and scale many images. However, since the user is actively waiting for the results from their sketch, the time to populate the recognition menu had to be kept to a minimum. Therefore, we implemented a ‘sliding window’ feature for the recognition menu in which only the first 5 images are returned for all the kanji that are found. The remaining kanji info is sent to the recognition menu, where it can be used to perform the image cache lookups and populate the remaining kanji images if the user requests those results and their associated images.

Figure 2. ‘Sliding Window’ Recognition Menu that feeds the user more images on demand.

Drawing Panel

At the center of our interface is the drawing panel, where the main interaction takes place. It is a blank area, or canvas, where the user authors a scene by setting a background and placing stickers on it. In this sense, it is no different from PowerPoint or similar slide-presentation authoring tools. However, the way stickers are put on screen is novel in that it is designed as a teaching tool for people who are learning kanji. The input method for converting written kanji to stickers is explained below.

Figure 3. Compose view interface for Kanji Storyteller with sketch input popup and recognition menu.
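The image lookup order described in the Database section (first-level cache, then second-level cache, then a runtime web search) and the five-image ‘sliding window’ can be illustrated with a minimal Python sketch. This is not the authors' Java implementation: the dictionary-based caches, the `web_search` callable, and the function names `find_image` and `image_pages` are all hypothetical stand-ins chosen for illustration.

```python
from typing import Callable, Dict, Iterator, List, Optional, Tuple

WINDOW_SIZE = 5  # the text states that only the first 5 images are returned up front


def find_image(
    kanji: str,
    level1: Dict[str, str],
    level2: Dict[str, str],
    web_search: Callable[[str], Optional[str]],
) -> Optional[str]:
    """Check the 1st- and 2nd-level caches (keyed by the kanji itself),
    then fall back to a runtime web search (hypothetical callable)."""
    for cache in (level1, level2):
        if kanji in cache:
            return cache[kanji]
    return web_search(kanji)


def image_pages(
    candidates: List[str],
    lookup: Callable[[str], Optional[str]],
    size: int = WINDOW_SIZE,
) -> Iterator[List[Tuple[str, Optional[str]]]]:
    """Lazily yield (kanji, image) pairs `size` at a time, so images for later
    candidates are only looked up if the user asks for more results."""
    for start in range(0, len(candidates), size):
        yield [(k, lookup(k)) for k in candidates[start:start + size]]


if __name__ == "__main__":
    level1 = {"犬": "stickers/dog.png"}          # hypothetical cache contents
    level2 = {"猫": "cache2/cat.png"}
    lookup = lambda k: find_image(k, level1, level2, lambda q: f"web:{q}")
    pages = image_pages(["犬", "猫", "木", "川", "土", "本", "山"], lookup)
    print(next(pages))  # only the first five candidates are resolved here
```

Using a generator keeps the first response small: the remaining candidates travel along as plain strings, and their lookups run only when the next page is requested.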
Sketch Window

Perhaps the most intuitive way of using the white canvas would be to simply draw on it and have the system automatically interpret the drawings. But this poses several complications, such as determining whether the user has stopped drawing to signal the end of a kanji or has simply paused. In what spatial scope should the recognizer work? How would the recognizer determine when characters are part of a multi-kanji jukugo? These are research problems in their own right. In order to keep the implementation feasible within the time scope, we opted to give the user the power to explicitly tell the system when they are done drawing. As soon as the user clicks on any point on the drawing canvas, the sketch window popup shows a small area where the user can write the kanji using a stylus or mouse. Each of the user’s input strokes is processed by the Stroke by Stroke recognizer to give the user possible kanji as the user writes. More details on the Stroke by Stroke recognizer are given in the Sketch Recognition section. If the user does not see their desired result in the list given by the Stroke by Stroke recognizer after they are done sketching, they can click either the ‘recognize kanji’ or the ‘recognize kana’ button to have their sketch recognized all at once by the $p or the modified $1 recognizer, respectively. The result stickers are shown in a list next to the window, ordered with the most likely symbols first, from which the user can pick the one they intended.

Dictionary Panel

The dictionary panel is populated from our KanjiDictionary Excel spreadsheet. Entries can be selected and the details for that kanji can be viewed. The table shows which kanji can be recognized through sketch input and displayed by the recognizer. The table also allows the student to look up the details of a particular kanji of interest and sort the database along a parameter of interest.
If, for example, in preparation for the Japanese Language Proficiency Test, a student wants to study all JLPT level 1 kanji, the student can sort the JLPT level field by clicking on the associated header to sort in descending order. Finally, the dictionary panel also has a search form that allows the student to find details on a particular kanji directly rather than searching manually through the table. Searching works by finding a matching word within each cell in a row. If the input matches a cell in a row, the row is selected and the details panel opens, replacing the dictionary panel. If multiple kanji are found in the search, then multiple dictionary entries are returned in order of their frequency of use. The user can cycle through the kanji with left and right navigation buttons. Searching with both Japanese and English characters is supported.

Story Panel

The story panel includes the formatting controls and a text area for writing the story. First, the formatting controls, located at the upper right of the panel, allow users to change the font, font color, and font size as well as to bold, italicize, and underline text. Second, the text area occupies most of the story panel and sits below the formatting controls. In this text area, the user can enter their annotations or story.

Figure 1. The Kanji Dictionary Panel.

Details Panel

The details panel (Figure 3) shows several important fields. It displays the kanji being studied in a large and easy-to-read KaiTi font that mimics good kanji penmanship. Below the kanji is the English meaning for that kanji. Also below the kanji are boolean checkmark images that indicate whether the kanji is a kokuji, whether the kanji has local sticker content available, and whether the sketch recognizer can recognize the kanji. To the right of the kanji is a group of statistical information for the kanji that includes the stroke count, JLPT level, grade level, and frequency of use ranking.
In the middle of the details panel is the readings group, which shows the kunyomi, onyomi, and nanori pronunciations. Below the readings group is the jukugo section, where the compound words that include the kanji are provided for kanji case studies. At the bottom right corner of the details panel is a “Place on Canvas” button that brings up a popup window where the user can choose an associated image to place on the canvas. As previously mentioned, the details panel also has a “Get Online Images” button to retrieve images dynamically. The learner can also view the stroke order (also retrieved dynamically) by pressing the “View Stroke Order” button. Finally, there is a “Return to Dictionary” button that hides the details panel and returns the user to the dictionary panel.

Figure 4. Annotation Panel

Comments Panel

The current interface of the comments panel is a proof-of-concept/placeholder GUI that behaves like a feed reader, where the feed is the stream of comments related to the story in context. The panel renders the stream as a vertical list of comment cells sorted by date in descending order. Each comment cell contains the commenter's username and the comment's posted date at the top. The rest of the comment cell displays the content of the comment. This is shown in Figure 4.

Figure 5. Comments Panel showing comments related to the current story.

Kanji and Kana Sketch Recognition

Sketch recognition can generally be divided into three categories: gesture-based recognition [Rubine91][Jacob07], vision-based recognition [Kara05], and geometric-based recognition [Hammond05]. We chose a vision-based recognition approach for our recognizers, which include $1, $p, and our proposed Stroke by Stroke recognizer.

Figure 2. Details Panel showing important information of selected kanji.

It was initially planned that a single recognizer would be used as a general-purpose recognizer for both kana and kanji.
As more symbols and training samples were added, however, several problems became apparent:

1) Adding more symbols makes it less likely that the recognizer can distinguish between those classes.
2) Many symbols share the same parts or look alike. Ex: 刀 (blade), 力 (power), カ (‘KA’). This observation makes sense given that the kana were originally created by making them look similar to kanji that have the same pronunciation.
3) More training samples increase the cost of computation.

Figure 6. Similarity of kana to parent kanji. [KanaWiki13]

Given the high degree of visual collisions between kana and kanji, the increased time and accuracy cost of having a single input symbol recognized between two distinct classes of symbols, and the preexisting interface affordance for all-at-once, user-initiated recognition, it was decided that the recognition of kanji and kana should be separate in the interface.

Much research has been dedicated to the recognition of multi-stroke symbols, and it echoes our third observation. For example, Wobbrock’s $N recognizer [Anthony10] translates multi-stroke symbols into one-stroke symbols. Unfortunately, the $N recognizer has an exponential time complexity. In fact, the recognizer needs O(n * S! * 2^S * T) time, where S is the number of strokes, T is the number of templates, and n is the number of sampled points. This time complexity is problematic for Japanese characters, which commonly consist of half a dozen to a dozen strokes or, in the most extreme case, 84 strokes (when properly written).

Given that the computational cost can be prohibitive for a large number of template classes, up to 6355 classes for kanji alone, we decided to use template-based recognizers that have low time complexity in execution. The most reasonable approach would be to eliminate computation where possible and reduce the set of templates that have to be traversed in order to produce the kanji that the user desires.
This is the main motivation for our Stroke by Stroke recognizer. It uses online recognition to progressively reduce the set of kanji as each stroke is given to it by the user.

Figure 7. CJK Strokes and Classification Groups (Adapted From [CJKWiki13])

The user’s strokes are monitored for ‘Mouse Up’ events, which mark the end of a single stroke. Each individual stroke is sent to the $p recognizer to be classified as one of the 37 unique CJK (Chinese, Japanese, Korean) calligraphic strokes. Once the stroke is classified, the CJK stroke’s classification group is determined, which is in turn used to determine which kanji contain that kind of stroke at the current stroke number. Any kanji that does not have that stroke appearing in the correct order is eliminated from the candidate list, and the remaining kanji are returned as results for the user to choose from. For example, if the user draws a horizontal stroke as their first stroke, the Stroke by Stroke recognizer returns kanji containing a horizontal stroke as their first stroke, which would include kanji like 土, 木, and 本. Any further strokes made by the user progressively thin the result list. If the user enters more strokes than exist in a given kanji, it is removed from the list.

Many of these CJK strokes were grouped together into the same classification groups due to their visual similarity to the untrained eye. For example, any CJK stroke that is a repeat of a previous stroke with a ‘G’ (stylistic tail) attached was grouped with that stroke. Other CJK groups were formed from their visual similarity as determined by the $1 and $p recognizers. Group 13 is one such group and consists of CJK strokes N, P, D, and T. When written by users within Kanji Storyteller’s small input sketch window, the relative size differences between strokes N, P and D, T were nonexistent, which made distinguishing between them difficult. A total of 11 distinct groups were made.
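The candidate-thinning step described above can be sketched in a few lines of Python. This is an illustration under stated assumptions, not the authors' code: the table `STROKE_GROUPS` and the labels "H" (horizontal) and "S" (vertical) are hypothetical, "G13" stands in for the Group 13 mentioned in the text, and the sketch assumes each drawn stroke has already been classified into its group by the stroke classifier.

```python
from typing import Dict, List

# Hypothetical table: kanji -> classification group of each stroke, in stroke order.
# "H" and "S" are made-up labels; "G13" mirrors Group 13 (strokes N, P, D, T).
STROKE_GROUPS: Dict[str, List[str]] = {
    "土": ["H", "S", "H"],
    "木": ["H", "S", "G13", "G13"],
    "本": ["H", "S", "G13", "G13", "H"],
    "川": ["G13", "S", "S"],
}


def filter_candidates(table: Dict[str, List[str]], drawn: List[str]) -> List[str]:
    """Keep kanji whose first len(drawn) stroke groups match the drawn groups.

    Kanji with fewer strokes than the user has drawn are dropped, matching the
    rule that extra strokes remove a kanji from the list.
    """
    n = len(drawn)
    return [
        kanji
        for kanji, groups in table.items()
        if len(groups) >= n and groups[:n] == drawn
    ]


if __name__ == "__main__":
    drawn: List[str] = []
    for group in ["H", "S", "G13", "G13", "H"]:  # one entry per 'Mouse Up' event
        drawn.append(group)
        print(len(drawn), filter_candidates(STROKE_GROUPS, drawn))
```

Because only a prefix comparison is needed per candidate, each new stroke costs one pass over the remaining kanji rather than a full template match against every class.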
It is important to note that the grouping system we implemented reduces our ability to distinguish between similar kanji. As a result of our inclusive grouping of CJK strokes, the Stroke by Stroke recognizer returns more kanji than it would if each stroke were a different class. At the interface level, this means that the user may have to scroll through more results in the recognition window to find their desired kanji. This problem is minimal compared to the problems encountered in other recognizers, however, since any other recognizer would have to classify between 6355 classes of kanji rather than just 27 unique strokes and would be more likely to return false positives as the top results. When the stroke order, number of strokes, and classification groups are used in concert, the Stroke by Stroke recognizer was able to reliably and uniquely distinguish all of the given kanji.

The $p recognizer was chosen as the recognizer for CJK strokes through a grounded approach in which the $p and $1 recognizers were evaluated for their “hit” accuracy on two CJK stroke datasets. The same grounded approach was taken for determining which recognizers would be used for the kanji and kana data sets. The details of this analysis are given in the evaluation section.

As stated before, our goal was to minimize computation time for symbol recognition while using a visual approach. As such, $1 and $p were obvious choices for their strong run time performances of O(n * T * R) and O(n^2.5 * T), respectively. Note that the R term for $1 is the number of iterations used in Golden Section Search for rotation invariance. Given that kanji and kana are only ‘valid’ in a single orientation, we were able to eliminate this rotational term by implementing a modified $1 that removes the rotation-based scoring. $p did not need to be modified since it performs no rotation search and is therefore already sensitive to orientation.
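To make the growth terms quoted in this section concrete, the following Python sketch evaluates each expression for sample values. These are order-of-growth expressions with constants dropped, so the numbers are only rough relative indicators; the function names and the sample values of n, S, and R are assumptions for illustration, while T = 6355 is the kanji count from the text.

```python
import math


def cost_dollar_n(n: int, s: int, t: int) -> int:
    """O(n * S! * 2^S * T): the $N bound quoted in the text."""
    return n * math.factorial(s) * 2 ** s * t


def cost_dollar_1(n: int, t: int, r: int) -> int:
    """O(n * T * R): $1 with R Golden Section Search iterations."""
    return n * t * r


def cost_modified_dollar_1(n: int, t: int) -> int:
    """O(n * T): $1 with the rotation search removed."""
    return n * t


def cost_dollar_p(n: int, t: int) -> float:
    """O(n^2.5 * T): the $p bound quoted in the text."""
    return n ** 2.5 * t


if __name__ == "__main__":
    T = 6355          # kanji classes, one template each (simplifying assumption)
    S, R = 12, 10     # a dozen strokes; R = 10 is an assumed iteration count
    print(f"$N          : {cost_dollar_n(64, S, T):.3e}")
    print(f"$1          : {cost_dollar_1(64, T, R):.3e}")
    print(f"modified $1 : {cost_modified_dollar_1(64, T):.3e}")
    print(f"$p          : {cost_dollar_p(32, T):.3e}")
```

Even for a twelve-stroke character the S! * 2^S factor dominates every other term by many orders of magnitude, which is the reason given in the text for avoiding $N.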
The modified $1 recognizer finds the Euclidean distance between the input and the template after they have been preprocessed, i.e., resampled to 64 points, scaled to a 500 by 500 square, and translated so that their centroids lie at the origin. Since kana are often multi-stroke symbols, and since $1 is meant to recognize single-stroke symbols, the modified $1 algorithm simply concatenates the strokes to represent the symbol as a single stroke. This does mean that the user must draw the kana in the right stroke order for it to be recognized. We felt that this was a reasonable restriction given that one of Kanji Storyteller’s goals is to promote good handwriting. The resulting time complexity of the modified $1 algorithm is O(n * T), which is linear in the number of input points, whereas $p grows as n^2.5. The $p preprocessing is slightly different: it resamples to 32 points, scales the points of a given sketch to the size of its bounding box (which preserves the kanji’s shape), and translates the center to the origin. Details for the $1 and $p algorithms are provided in [Jacob07] and [Vatavu12].

Figure 7. Examples of symbol templates.

Rejection Thresholds

For rejection, a set of empirically determined thresholds was put in place for each recognizer. If the average confidence of the highest scoring class does not pass the threshold, Kanji Storyteller does not return any results, buzzes the user, and sets the status label to inform the user that recognition failed. The thresholds for each recognizer were chosen by finding the unacceptable misses that had the highest confidences. The rejection threshold values are as follows:

$p for kanji and CJK strokes: 1.5
$1 for kana: 0.6

It should be noted that $p scores are 0 or greater, where 0 is a perfect match, while $1 scores range from 0 to 1, where 1 is a perfect match. No rejection is done for the results given by the Stroke by Stroke recognizer.
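As an illustration of the modified $1 pipeline and its kana threshold, here is a minimal Python sketch assuming the steps stated above: concatenate strokes, resample to 64 points, scale to a 500 by 500 square, move the centroid to the origin, and score by mean point-to-point distance with no rotation search. The score normalization follows the published $1 formula; the function names, the template dictionary, and the guard for zero-width shapes are assumptions, and the $p threshold of 1.5 is not modeled here.

```python
import math
from typing import Dict, List, Optional, Tuple

Point = Tuple[float, float]
N_POINTS = 64          # resample count given in the text
SQUARE = 500.0         # side of the scaling square given in the text
KANA_THRESHOLD = 0.6   # $1 rejection threshold for kana given in the text


def resample(pts: List[Point], n: int = N_POINTS) -> List[Point]:
    """Resample a polyline to n points evenly spaced along its path."""
    total = sum(math.dist(pts[i - 1], pts[i]) for i in range(1, len(pts)))
    if total == 0:
        return [pts[0]] * n
    interval, acc, out, pts, i = total / (n - 1), 0.0, [pts[0]], list(pts), 1
    while i < len(pts):
        d = math.dist(pts[i - 1], pts[i])
        if d > 0 and acc + d >= interval:
            t = (interval - acc) / d
            q = (pts[i - 1][0] + t * (pts[i][0] - pts[i - 1][0]),
                 pts[i - 1][1] + t * (pts[i][1] - pts[i - 1][1]))
            out.append(q)
            pts.insert(i, q)   # q becomes the start of the next segment
            acc = 0.0
        else:
            acc += d
        i += 1
    while len(out) < n:        # guard against floating-point shortfall
        out.append(pts[-1])
    return out[:n]


def normalize(strokes: List[List[Point]]) -> List[Point]:
    """Concatenate strokes in drawn order, resample, scale, and center."""
    pts = resample([p for stroke in strokes for p in stroke])
    xs, ys = zip(*pts)
    w = (max(xs) - min(xs)) or 1.0   # assumption: avoid dividing by zero
    h = (max(ys) - min(ys)) or 1.0
    pts = [(x * SQUARE / w, y * SQUARE / h) for x, y in pts]
    cx = sum(x for x, _ in pts) / len(pts)
    cy = sum(y for _, y in pts) / len(pts)
    return [(x - cx, y - cy) for x, y in pts]


def score(a: List[Point], b: List[Point]) -> float:
    """$1-style score in [0, 1]; 1 is a perfect match. No rotation search."""
    d = sum(math.dist(p, q) for p, q in zip(a, b)) / len(a)
    return 1.0 - d / (0.5 * math.sqrt(2.0) * SQUARE)


def recognize(strokes: List[List[Point]], templates: Dict[str, List[Point]],
              threshold: float = KANA_THRESHOLD) -> Tuple[Optional[str], float]:
    """Return (best class, score), or (None, score) when the best is rejected."""
    cand = normalize(strokes)
    best = max(templates, key=lambda name: score(cand, templates[name]))
    s = score(cand, templates[best])
    return (best, s) if s >= threshold else (None, s)
```

A caller would build `templates` once by running `normalize` on each training example, then call `recognize` on the strokes collected from the sketch window; a `None` result corresponds to the buzz-and-status-label path described above.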
We left this step out to provide a more inclusive set of results and to minimize the amount of time the user spends waiting on the recognition menu. However, this step could easily be implemented by running $p on the examples for each kanji returned by Stroke by Stroke and then rejecting any class (kanji) that did not get a score higher than an empirically determined threshold.

Kanji Storyteller formerly used the Tanimoto coefficient and the modified Hausdorff distance to score and classify kanji. However, after much testing we decided to use the $p recognizer for kanji recognition and the modified $1 for kana recognition. The results and justification for this switch are provided in the evaluation and discussion section.

One benefit of visual-based recognition of kanji, as opposed to some gesture-based recognition methods, is that the recognition is not rotation invariant; rotation invariance would otherwise cause confusion between some kanji like “three” (三) and “river” (川). Another benefit is that most visual recognizers are stroke-order invariant. Stroke-order invariance allows the student to practice kanji and seamlessly create scenes without being distracted by the finer details of learning kanji. However, it may be beneficial to enforce correct stroke order to promote penmanship.

Unfortunately, visual recognition methods tend to be sensitive to differences between training data and sketch input data. In situations where there is high variability between acceptable instances of a given kanji, recognition rates can falter unless the training data adequately represents those differences in style. With respect to kanji, there can be a high degree of variation. Kanji can be written in different aspect ratios and with differences in the ends of strokes that come from different brushes, brush orientations, and writing pressure. Kanji can also be written in “cursive” stylistic forms or in more formal, blocky forms.
Kanji Database

KANJIDIC

This file contains the 6355 kanji that were included in the JIS X 0208-1990 2-byte character set as specified by the Japanese Industrial Standard [JISWiki][JISUni][JISN]. KANJIDIC’s file structure lists each kanji and its information on a separate line with a mixture of English and Japanese characters encoded in ASCII [ASCIIWiki][ASCIIToV] and EUC [EUCWiki], respectively. For our purposes, however, much of this information was superfluous since it included fields for over a dozen different dictionary indexes among multiple other classification codes. Furthermore, the fields in KANJIDIC were not listed in any particular order on each line.

Parser

To reduce the information to a reasonable working set for Kanji Storyteller, and to have a file in an easy-to-work-with format, we developed a parser in Java that outputs the desired fields, delimited by spaces, into a separate file that could then be imported into an Excel document called “KanjiDictionary.xls”. The parser also removed the indicators in front of each field entry and consolidated the kunyomi, onyomi, nanori, and English meanings into their own fields so that they would be inserted into the appropriate column when imported into the Excel spreadsheet.

The KanjiDictionary contains the following fields:

| Field Name | Excel Cell Format | KanjiDictionary Java Type | Description |
|---|---|---|---|
| ID | Number | Double | Key value for searching |
| Kanji | General | String | Actual symbol |
| Kunyomi | General | String | Native Japanese reading; used mostly for nouns and adjectives |
| Onyomi | General | String | Japanese interpretation of the original Chinese pronunciation; used mostly for jukugo (words made of multiple kanji) |
| Nanori | General | String | Pronunciation when used in names |
| Stroke Count | Number | Double | Number of strokes to write the kanji |
| JLPT Level | Number | Double | The Japanese Language Proficiency Test level of the kanji |
| Grade Level | Number | Double | The "grade" of the kanji.* |
| Frequency | Number | Double | Frequency of Use ranking for the 2501 most used symbols |
| Kokuji** | Boolean | Boolean | Whether the kanji was originally made in Japan rather than China |
| Image | Boolean | Boolean | Whether there is image content available for the given kanji |
| Recognition | Boolean | Boolean | Whether the recognizer can recognize and convert the drawn kanji into an image |
| Name Key | General | String | A string identifier that links the kanji to the sticker images, audio, and kanjiImg content in their respective properties files |

* On Grade Level (adapted from [1]): G1 to G6 indicate the grade level as specified by the Japanese Ministry of Education for kanji that are to be taught in elementary school (1006 kanji). These are sometimes called the "Kyouiku" (education) kanji and are part of the set of Jouyou (daily use) kanji. G8 indicates the remaining Jouyou kanji that are to be taught in secondary school (an additional 1130 kanji). G9 and G10 indicate Jinmeiyou ("for use in names") kanji, which in addition to the Jouyou kanji are approved for use in family name registers and other official documents. G9 (774 kanji, of which 628 are in KANJIDIC) indicates the kanji is a "regular" name kanji, and G10 (209 kanji, of which 128 are in KANJIDIC) indicates the kanji is a variant of a Jouyou kanji.

** On Kokuji: This information was extracted from the KANJIDIC set of English meanings fields.

Image, Audio, and Formula Property Files

The Kanji Storyteller application must regularly access image and audio media files in order to populate recognition popup menus where the user can select which kanji and associated images to place as stickers or set as the background.
The system knows which images and audio to use by looking in property files that act as hash maps, where the kanji’s name key field acts as the lookup key. The following is the list of property files used and their specifications.

kanjiAudio: Specifies the file path to the .wav audio file that is played when the kanji is pasted on the drawing panel.

kanjiImages: Specifies the file paths to the associated images for the kanji. The first file path specified is to the image of the kanji itself in the resources/content/kanjiImg directory. The following file paths are the images with the kanji placed as a visible tag in the bottom right hand corner of the image. Finally, the file paths of the same images without the kanji tag are included.

radical: Specifies the kanji (there could be many) in which this kanji is included as a radical. Each entry consists of formulas in which the left hand side lists the other radicals, or kanji used as radicals, that compose the target kanji, and the right hand side is the target kanji. The left hand side and right hand side of a formula are separated by an underscore, while the component radicals and kanji (as radicals) on the left hand side are separated by plus signs. An example entry for the kanji ‘ricefield’ would appear as follows: ricefield=dog+grass_cat,power_man,fire_farm,…

jukugo: Specifies the jukugo in which this kanji is the first kanji. Each entry consists of formulas in which the left hand side lists the kanji that follow it, in the order that they appear in the jukugo, and the right hand side is the target word itself. The left hand side and right hand side of the formula are separated by an underscore, while the kanji on the left hand side are separated by plus signs.
An example entry for the word ‘queen’ would appear as follows: woman=king_queen

jukugoAudio: Specifies the file path to the .wav audio file that is played when the jukugo is pasted on the drawing panel.

jukugoImages: Specifies the file paths to the associated images for the jukugo. The first file path specified is to the image of the jukugo itself in the resources/content/jukugoImg directory. The following file paths are the images with the jukugo placed as a visible tag in the bottom right hand corner of the image. Finally, the file paths of the same images without the jukugo tag are included.

Radical Group Search

The Kanji Storyteller system allows students to group selections of kanji that have been placed on the drawing panel and use them as radicals (kanji parts) to search for related kanji. As an example, the kanji for ‘fire’ is used as a radical in kanji like ‘torch’, ‘farm’, ‘dry/parched’, and ‘flames/blaze’. In order to find kanji based on their component radicals, the user middle-clicks to group the kanji to be included in the radical search.

In the previous version of Kanji Storyteller, the first grouped radical was used as a key to look up formulas in the properties map file, and the following grouped kanji were used to reductively narrow down the possible kanji that could be returned. This was done by removing a formula if a selected kanji was not used in it. If that kanji was used, however, it was removed from the composing radicals in the formula so that it would not be counted twice. This approach dramatically reduced the time it takes to conduct a radical search. The target kanji of any formulas that remained were then used as keys to populate the popup selection menu where the user could make their selection.

As an example of how this process worked, imagine that the user groups two kanji, each of which is the kanji for ‘tree’ (木), and executes a radical recognition/search. The first tree radical is used as the lookup key, which returns the following formulas: table_desk, genius_lumber, tree_woods, tree+tree_forest. The radical recognition/search algorithm then uses the second tree that was selected to eliminate the first two formulas and trim the remaining results, which narrows the results to _woods, +tree_forest. Since there are no more selected kanji, the algorithm passes the two target kanji, ‘woods’ and ‘forest’, from their formulas to be used as keys to look up their associated images and audio.

While this properties-file lookup approach was fast and could identify the number of each kind of radical in a given kanji, it had the disadvantage that the system could only recognize kanji radicals from formulas that were explicitly written in the file itself. Given the sheer number of kanji (6355) and the number of radicals that each could contain, this method was entirely too restrictive. As such, Kanji Storyteller now takes advantage of information retrieval methods to query the online WWWJDIC dictionary’s backdoor API for multiple radical searches. While this approach is slower, it enables the user to look up the radicals for any kanji. In either method, because the radical search is reductive, the more kanji the user includes in the search, the narrower the results are.

Figure 8. Radical Group Search with 木+火

Jukugo Group Search

The Kanji Storyteller system also allows students to use the same grouping mechanism, activated by the middle mouse button, to select kanji to be used in a jukugo search. With jukugo, the order of the kanji matters. For example, ‘sun’ (日) and ‘evening’ (夕) make ‘nightfall’ (日夕), which is pronounced ‘nisseki’, while ‘evening’ plus ‘day’ makes ‘setting sun’ (夕日), which is pronounced ‘yuuhi’. The jukugo search mechanism reflects this so that students must select kanji in the correct order.
To provide feedback to the user, the grouping selection adds n-1 red boxes on the border of the sticker to indicate that sticker’s order in the selection. The jukugo recognition/search algorithm works by using the first kanji selected as the lookup key for the jukugo formulas. Then each formula is checked to see that the following kanji were selected in the right order and are the kanji that compose the left hand side of the formula. If there is a match, the target jukugo word is passed on to be used as the key to look up the associated audio and images and populate the recognition selection popup menu.

Figure 9. Jukugo Group Search with 夕+日

Kana Group Search

Kana group searching works slightly differently from the radical and jukugo group searches. In this case, the local kanji dictionary is searched for entries that contain the same kunyomi, onyomi, or nanori pronunciation as the grouped kana.

Figure 10. Kana Group Search with や+す+む

Save, Load, and New

In any authoring system, it is of utmost importance that authors can save and share their works so that the works can be viewed by others and so that self-expression is not restricted. As such, we have used simpl [Shahzad11] to serialize the sticker, canvas, and author data into XML. Currently, files can be saved to and loaded from any directory. However, this may change in future implementations should the system be exported to a networked setting. In that case, the simpl serialization tool should prove beneficial as it affords portability and interoperability between languages and varying platforms.

4. EVALUATION AND DISCUSSION

Quantitative Kanji Sketch Recognizer Evaluation

A quantitative evaluation has been conducted to compare the recognition rates of Kanji Storyteller’s Tanimoto & Modified Hausdorff (TaniHaus) recognizer, the $P recognizer, and the modified $1 recognizer.
Given that the interface returns the top three examples for the user to choose from, we define an “acceptable accuracy” metric as the ratio of input examples for which the correct kanji appears in the top three results. We also define a “hit” as an input example that was correctly classified as the intended kanji in the top result. Consequently, an “acceptable miss” is when the correct kanji is returned as the 2nd or 3rd most confident result, and an “unacceptable miss” is when the correct kanji is not in the top 3 results. The correct kanji’s position in the results list is described as its “rank”, where a rank of 0 corresponds to a “hit”.

In the first part of this evaluation, the recognizers distinguished between a mixed kana and kanji set that consisted of 23 different kanji and 11 different hiragana: those that end with the vowel “A”, including “A” (あ) itself, along with “N” (ん). For this mixed kana and kanji set, two users contributed 5 examples each for a total of 10 examples of each symbol, which we will mark as S1. For the other dataset, S2, a different user contributed 5 examples. Both contributors had previous experience with writing kanji; the contributor of the training data was a Chinese language student while the other contributor was a Japanese language student. The results are provided in Table R1.

In the second part of the evaluation, the recognizers distinguished between an all-kanji set that consisted of 51 different kanji, most of which were JLPT level 1 kanji. The contributors for S1 were a Chinese language student and a Japanese language student. The contributor for S2 was a Japanese language student. Each contributor provided 5 examples for each kanji. The results are provided in Table R2.

In the third part of the evaluation, the recognizers classified examples from all hiragana without dakuten and handakuten (diacritic marks indicating that the kana should use the voiced or ‘p’ plosive sound, respectively).
For S1, one user contributed 5 examples of each hiragana while another user drew 5 examples of each hiragana for S2. Both contributors had previous experience with writing kanji, but the contributor of S1 was a Chinese language student who had no previous experience sketching hiragana. Conversely, the other contributor was a Japanese language student with much experience in writing kana. The results are provided in Table R3.

For all of the above evaluations, the contributors were told to write all the kanji and kana in the correct stroke order.

In the fourth part of the evaluation, the recognizers distinguished between the 11 CJK stroke groups. For the CJK stroke evaluation, we defined a “hit” as a correct classification (true positive) for the most confident result. For the first CJK dataset, S1, we had one contributor, a Japanese student, give 5 examples of each CJK stroke. For the second CJK dataset, S2, a different Japanese student also provided 5 examples of each CJK stroke. The results are provided in Table R4.

| Kana (11) and Kanji (23) | $P (m=32) | Mod $1 (m=64) | TaniHaus (m=64) |
|---|---|---|---|
| Acceptable Ratio | 0.84, 0.88 | 0.90, 0.85 | 0.78, 0.80 |
| Hit Ratio | 0.74, 0.75 | 0.86, 0.78 | 0.68, 0.66 |
| Acceptable Miss Ratio | 0.10, 0.14 | 0.04, 0.07 | 0.10, 0.15 |
| Unacceptable Miss Ratio | 0.16, 0.12 | 0.10, 0.15 | 0.22, 0.20 |

Table R1: Mixed Kana and Kanji Results.
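The four ratios reported in the results tables follow directly from the rank definitions given for this evaluation (rank 0 is a hit, ranks 1 and 2 are acceptable misses, anything else is an unacceptable miss). A minimal Python sketch of that bookkeeping is shown here; the function name `summarize` and the sample ranks are hypothetical and are not taken from the authors' evaluation code or data.

```python
def summarize(ranks, top_k=3):
    """Compute the evaluation ratios from per-example ranks.

    ranks: 0-based position of the correct symbol in each result list,
           or None when the correct symbol was not returned at all.
    top_k: number of results shown to the user (three in the text).
    """
    n = len(ranks)
    hits = sum(1 for r in ranks if r == 0)
    acceptable_misses = sum(1 for r in ranks if r is not None and 1 <= r < top_k)
    unacceptable_misses = n - hits - acceptable_misses
    return {
        "acceptable": (hits + acceptable_misses) / n,
        "hit": hits / n,
        "acceptable_miss": acceptable_misses / n,
        "unacceptable_miss": unacceptable_misses / n,
    }

# Hypothetical ranks for ten input examples.
example = summarize([0, 0, 0, 0, 0, 0, 1, 2, 5, None])
```

By construction the acceptable ratio equals the hit ratio plus the acceptable miss ratio, and the acceptable and unacceptable miss ratios together account for every example that was not a hit.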
| Kanji (51) | $P (m=32) | Mod $1 (m=64) | TaniHaus (m=64) |
|---|---|---|---|
| Acceptable Ratio | 0.83, 0.88 | 0.83, 0.82 | 0.77, 0.82 |
| Hit Ratio | 0.68, 0.75 | 0.78, 0.78 | 0.63, 0.63 |
| Acceptable Miss Ratio | 0.14, 0.13 | 0.05, 0.05 | 0.13, 0.19 |
| Unacceptable Miss Ratio | 0.17, 0.12 | 0.17, 0.18 | 0.23, 0.18 |

Table R2: Kanji Results

| Hiragana (48), No Dakuten or Handakuten | $P (m=32) | Mod $1 (m=64) | TaniHaus (m=64) |
|---|---|---|---|
| Acceptable Ratio | 0.60, 0.68 | 0.72, 0.79 | 0.50, 0.45 |
| Hit Ratio | 0.44, 0.49 | 0.57, 0.68 | 0.34, 0.28 |
| Acceptable Miss Ratio | 0.16, 0.18 | 0.14, 0.11 | 0.16, 0.18 |
| Unacceptable Miss Ratio | 0.40, 0.32 | 0.28, 0.21 | 0.50, 0.55 |

Table R3: Hiragana Results

| CJK Stroke Groups | $P (m=32) | Mod $1 (m=64) | TaniHaus (m=64) |
|---|---|---|---|
| Acceptable Ratio | 0.96, 0.96 | 0.90, 0.92 | X |
| Hit Ratio | 0.92, 0.91 | 0.77, 0.80 | X |
| Acceptable Miss Ratio | 0.04, 0.04 | 0.12, 0.12 | X |
| Unacceptable Miss Ratio | 0.04, 0.04 | 0.10, 0.08 | X |

Table R4: CJK Stroke Results

Note: The results given in the tables are rounded to the second decimal place. The variable ‘m’ is the number of resampling points used in preprocessing. The result on the left in each cell is where S1 was the training data. The result on the right in each cell is where S2 was the training data.

Discussion of Quantitative Results

It is interesting to note that while the modified $1 does produce a higher “hit” accuracy than the $p recognizer for the kanji study, it did not perform as well with respect to the overall “acceptable” accuracy. The results seem to imply a tradeoff between hit accuracy and acceptable accuracy. The reason for this perceived tradeoff is most likely differences in stroke order between the input and training samples. If the stroke order matches, then $1 will likely give a good result. However, in cases where the stroke order doesn’t match, the more robust $p can compensate since it treats the points as “clouds” rather than as a single consecutive set of points. Essentially, the direction invariance of $p makes it more robust. Even though $1 had a higher hit ratio, we decided to use the $p recognizer instead since the desired result was likely to be included as a lower ranked result in the recognition menu. In other words, if the result is not on the top, then the user just has to scroll down to what they were actually intending to draw. To make a more grounded decision, it would be beneficial to perform a user study evaluating users’ qualitative responses to having their intended symbol be the top result as compared to their result being further down the recognition menu. It may be the case that a slightly more selective ‘all-or-nothing’ recognizer that returns the top result more often would satisfy users more.

The $1 recognizer had the highest hit rate for classifying hiragana among the 3 recognizers that we have implemented (TaniHaus, $1, $p). We believe that the simple nature of hiragana has allowed the $1 recognizer to be very effective in this case. The stroke order for kana is easy to remember and the shape and style of kana tend to vary less from person to person, which means that there was probably less error introduced by the sketch contributors. All of the recognizers performed relatively badly, with the highest overall accuracy not even breaking 80% and with the worst recognizer, the modified Tanimoto Hausdorff, returning an unacceptable miss rate of 50%. We suspect that the reason for the bad results was that one of the contributors had never written hiragana before that point, resulting in irregular looking characters, even if they had been drawn in the correct stroke order.

For the classification of CJK strokes, $p had the best recognition rate with a 91% hit rate. The $1 algorithm provided an 83-85% hit rate in comparison. Since the Stroke by Stroke algorithm only takes the top stroke result to return a list of applicable kanji, the acceptable ratio was not relevant and we only looked at the hit ratio.
The $p recognizer most likely outperformed our modified $1 because $p is stroke-direction invariant: it processes points as a cloud without any temporal information. $1, on the other hand, progresses through strokes in order. Therefore, any strokes written in the wrong direction would increase the probability of a stroke miss. We also chose $p for recognition of CJK strokes since it performs well on single-stroke gestures and is resistant to variations in sketches between different users.

We also conducted tests to see if the program was providing us with all of the different possible kanji based on the already entered strokes. Although we did not test in an exhaustive manner, the program did appear to provide all the relevant kanji choices for a large test set.

Several iterations of accuracy evaluation were run for the CJK stroke classification to find the best groupings. The results provided here are the results for the final grouping.

For all the quantitative evaluations, it was found that the modified Tanimoto Hausdorff recognizer was taking the longest, sometimes by a factor of 2 compared to the $1. Combined with the fact that TaniHaus was returning the worst recognition results, it was easy to eliminate the recognizer from later evaluations.

Interface Evaluation

Before conducting an interface evaluation, we met with a long-time Japanese professor at Texas A&M University. In this meeting, we gave a demonstration of Kanji Storyteller and subsequently interviewed the professor to obtain a professional opinion on the quality of the software as a Japanese language teaching tool. The professor claimed little experience in using any kind of educational software for Japanese but did mention that current students were using tablet and smart-phone applications to provide on-the-fly lookups of kanji information. When asked what kind of study methods were recommended to students for learning kanji, the professor told us that repetition in writing kanji was stressed. The professor gave positive feedback on the completeness of the kanji dictionary and jukugo/radical lookup features. When the professor was asked how he would try to use Kanji Storyteller with his students, he in return asked how we intended Kanji Storyteller to be used as a study tool. We replied that we intended for the software to be a supplemental study aid to reinforce learning by providing visuals. We also explained to the professor that the students could save and share their canvases as .xml files. After some discussion, the conclusion was made that it would be preferable to share the xml files on a class server that the students could access remotely. Overall, the professor agreed that Kanji Storyteller would be a beneficial tool for learning kanji but stressed that porting the software to a mobile platform would be desirable. The professor also requested a way to drill and test the students’ knowledge of the kanji that they had studied from within the application.

In order to test the effectiveness of our program we created a user interface evaluation for our project. Our test sample population came from students taking Japanese 101, 102, and 201 at Texas A&M University. The evaluation can be broken down into three different sections: pre-questionnaire, user study, and post-questionnaire. The pre-questionnaire consisted of several Likert scale questions about the amount of time the user spends studying Japanese as well as his/her interest in learning Japanese. It also asked the user which aspect of learning Japanese is most difficult for them. The format and questions used for the questionnaires can be seen in the appendix. The user study portion consisted of a set of tasks that the user undertook while using the program. The tasks included writing and searching for specific kanji and kana. The last portion of the study was the post-questionnaire. This part of the study gauged how satisfied the users were with our program itself. Half of the questions were open ended and asked for comments on various aspects of the program, while the other half were Likert scale questions about the user’s satisfaction with the software and their experience.

Results and Discussion of Interface Evaluation

The professor’s feedback that repetition in writing was the preferred study method for learning kanji was substantiated by reports from the students that participated in our user study, and it would appear that traditional rote repetition based study methods remain prevalent. This partially validates Kanji Storyteller’s claim to novelty as a kanji study tool. Kanji Storyteller’s novelty, however, also presented unique challenges for the users. Many users were unsure of how they would use the annotation feature to add to their learning experience. Using the stickers to make scenes, while reported to be “cool”, entertaining, and memorable, was often seen as a superfluous feature for studying.

The professor’s request to add a quizzing mechanism to Kanji Storyteller was particularly significant, and we have come to the conclusion that such a mechanism would structure the user’s learning and provide a set of short term goals that the user could aim for. We have also decided that a quizzing feature would benefit from a user progress tracking feature so that learners could identify kanji that they have encountered and know which parts of their knowledge need to be reinforced. This information would further structure their studies and provide a set of long term goals for learning Japanese kanji. Based on the feedback we received from the professor, we added a question to the post-questionnaire that asked whether a quiz feature would be useful. The responses to this question were overwhelmingly positive.
Our user study had 4 volunteer participants; 3 students took the study in an observed laboratory setting while the other participant took the study remotely and without supervision. 3 of the participants were Japanese language students at Texas A&M University while the other was a native Chinese speaker with interest in beginning to study Japanese. Although our sample size was small, the users all reported being satisfied with our program, with one exception; this exception was a user who performed the study remotely and most likely did not see the last two questions, which were on a different page. For the pre-questionnaire, the average Japanese study time was about 2-4 hours per week while the average kanji study time was about 1-1.75 hours per week, which is about 1/3 to 1/2 of their study time. For the post-questionnaire, the average Likert scale score for kanji and hiragana recognition satisfaction was 4 (usually recognized correctly), while the ease of finding information in the dictionary had an average Likert scale score of 4.5 (easy to find info). The overall satisfaction rating had an average of 5 (very satisfied). Based on the Likert results, we can safely claim that our program was successful in giving the users a novel and pleasurable experience for studying kanji. Some interesting quotes from the free responses included the following:

• “Associating Kanji with images really helps learning.”
• “Great way to discover new kanji”
• “Good way to find kanji just by drawing”
• “I like Kanji Storyteller because if I draw it incorrectly, it doesn’t read it or reads a different kanji.”

The above quotes help substantiate our claims that Kanji Storyteller is a beneficial studying tool that helps by reinforcing kanji through visuals, through unique interactions, and through handwriting feedback.
On the negative side of the results, students were frustrated with being forced to use a mouse for sketching the kanji and suspected that any misclassifications by the recognizers were due to their inability to adequately draw the kanji with a mouse. As such, we would like to add pen/stylus support to our application. This commonly cited complaint also aligns well with the professor’s recommendation to port Kanji Storyteller to a tablet or smart phone, since those devices natively support touch based interaction.

It was also observed that the users wanted to drag-and-drop kanji directly from the dictionary panel onto the drawing panel. Also, 2 of the users tried to use the backspace key instead of the delete key to remove stickers from the canvas. Another user reported that they wanted a ‘clear’ button (which had not been implemented at the time). These requested features were surprising from the implementers’ point of view but were also simple to add to the interface. This set of observations provided a significant lesson on the value of performing an interface evaluation.

Another major observation is that the grouping features were largely ignored by or unknown to the users. 2 of the users did middle-click the stickers but did not press any of the buttons to initiate recognition. Another user requested that we “add a tutorial or some info bubbles that pop up over the different buttons and functions.” Based on these observations and feedback, we implemented the help window, status panels, and ‘first time’ popups to inform the user on how Kanji Storyteller can be used without the need for intervention from a trained observer. We also plan to add ‘popup buttons’ beneath the stickers when they have been grouped in order to make a visual connection between the action of grouping and the available jukugo, radicals, and kana lookup functions.

Proposed Long Term User Learning and Creativity Evaluation

Our system’s intended purpose is two-fold.
It serves both as an educational tool and as a creative authoring tool for visual mnemonics. One major idea behind Kanji Storyteller is that the user can learn and practice Japanese kanji while creating meaningful scenes and annotations they can share with the community. Our system aims not only to enhance the writing skills of the learner, but also to be a fun tool that can keep the learner engaged at all times.

The proposed user learning evaluation we envision would involve examining a set of Japanese students who are at the stage of learning many new kanji characters in their writing development. The evaluation would attempt to answer the following research questions:

• Do the learner’s writing and reading skills for Japanese kanji improve after use of our system, and to what degree with respect to the time invested?
• Does the system provide an intuitive and easy to use authoring tool for visual stories?
• Does the user find the system entertaining enough to remain engaged in creating new stories at the same time that they are learning?
• Do students retain more with sounds?
• Do students retain more with conversion to images or visual representations?
• Do students retain more by constructing a canvas, scene, or story?

In order to accurately evaluate the system, it is important that we define what it means to “learn” kanji. Considering that not all kanji are used or encountered as frequently as others, it follows that less complete knowledge is required for kanji that are used less. Consequently, our definition of “learned kanji” varies with the JLPT level of the kanji.
Therefore, the student has learned a kanji when the student knows:

JLPT 4 and Jouyou (common use) kanji:
• at least 90% of readings
• all English meanings
• how to write

JLPT 3:
• at least 1 kunyomi (if applicable)
• at least 1 onyomi (if applicable)
• all English meanings
• how to write

JLPT 2:
• at least 1 reading (onyomi/kunyomi)
• at least 1 English meaning
• how to write

JLPT 1 (or above):
• at least 1 English meaning
• how to write

It is also important to define what it means to say that students learn kanji “better” with Kanji Storyteller than with more traditional methods. As such, we provide several comparative aspects of kanji learning, with each aspect framed as a positive hypothesis for the performance of our system. Each aspect is followed by a possible means of measurement:

Difficulty: Students learn more difficult kanji on average
• Record the JLPT level and grade level of the kanji encountered, sketched, and posted by the users.

Speed: Students can write kanji faster
• Record the average time to recall and write a kanji
• Record the average time to complete a drill set of N kanji

Mastery: Students can recall more information for a given set of kanji
• Record the % recalled of: onyomi, kunyomi, English meaning, jukugo

Breadth of Knowledge: Students know more kanji
• Record the distribution of exploration vs difficulty of kanji explored
  - % encountered versus mastered for a grade/JLPT level
  - Raw frequency of kanji encountered and mastered

Readability: Students have better handwriting
• Record and compare before and after samples of handwriting

Motivation: Students are more motivated to learn kanji and related subjects
• Questionnaire and surveys to get responses on:
  - Fun factor
  - What they got out of using it
  - Whether they want to study more

The actual evaluation we plan to implement would first involve gathering a set of representative users for the system consisting primarily of beginning Japanese students.
The first stage of the evaluation would involve the observation and analysis of users’ qualitative experiences with free form use of the system. The feedback from this first stage would allow us to refine the look, feel, and operation of our program as well as give us an understanding of how users approach and attempt to use the system. The second stage of the evaluation would entail a more rigorous quantitative analysis of user knowledge of kanji and skill in reproduction after set periods of Kanji Storyteller usage. A third stage of evaluation should also be conducted to evaluate user learning over a more representative use period, such as the course of a semester. This could be accomplished by measuring student knowledge of kanji based on the above definitions of learning. The study participants should ideally be members of the same section of a Japanese course, where one half of the students are placed in an experimental group who study using Kanji Storyteller and the other half are placed in a control group that only uses traditional study methods.

5. FUTURE WORK

There are a number of features and improvements that could vastly change the experience with Kanji Storyteller. In order to ease editing of the drawing panel, it would be beneficial to be able to translate groups of stickers simultaneously and to move stickers relative to the background when the size of the drawing panel is changed. It would also be beneficial to include freeform drawing pens, brushes, and other direct manipulation editing tools, much like many image editing systems provide, to allow authors to paint their own canvas background or stickers and add annotations directly to the screen. Taking this idea further, these stickers could also be dynamically associated with a particular kanji, should the user wish it.
The feedback from the user studies and our goal of making Kanji Storyteller an effective learning tool obligate us to implement a quiz and testing feature to drill users on their knowledge. Extending this idea, we plan to implement a learning tracking method that is integrated with the quizzing and testing feature. We also plan to implement a “hint” feature that gives tips to users when they first open Kanji Storyteller, informing them of available features and suggesting effective ways of studying and making mnemonics.

One particular goal of our interface has not been accomplished: social network connection and network deployment. This was a major piece of our interface that would have eased the sharing of canvases and promoted communication, expression, collaboration, and constructive learning. Users are, however, currently able to save and share their works by sharing the XML save files. Porting Kanji Storyteller to mobile devices would also match reported usage patterns of kanji studying tools.

Finally, the system could benefit from the inclusion of more content in the form of more kanji for sketch recognition, more audio, and more flexibility in user choice of image content. Dynamic online retrieval of audio files is difficult given the relative dearth of free sound effects sites. More disconcerting, however, is the disconnect between the metadata and terms used to describe audio files and the terms that Kanji Storyteller would be able to provide automatically. For example, automatically retrieving sound clips for “fire” would be more successful if the search term were instead “crackling”; however, Kanji Storyteller does not have the information needed to know to use the search term “crackling” for “fire”. Sounds are usually tagged with a rich set of adjectives, while images are usually easy to find with nouns.
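One possible way to bridge this gap, sketched below under stated assumptions, is a small hand-curated table that maps a kanji's noun meaning to the descriptive terms under which sounds tend to be tagged. This is only an illustration and not a feature of Kanji Storyteller: the names SOUND_TERMS and audio_search_terms are hypothetical, only the "fire" to "crackling" pair comes from the example above, and the "water" entry is an invented placeholder.

```python
# Hypothetical lookup table from a kanji's English meaning (a noun) to
# descriptive words that sound clips are more likely to be tagged with.
SOUND_TERMS = {
    "fire": ["crackling"],   # pairing taken from the example in the text
    "water": ["splashing"],  # illustrative assumption, not from the paper
}


def audio_search_terms(meaning, sound_terms=SOUND_TERMS):
    """Return search terms for an audio query, most descriptive first.

    Falls back to the plain noun when no descriptive terms are known,
    which is what the system could supply automatically today.
    """
    key = meaning.strip().lower()
    return list(sound_terms.get(key, [])) + [key]
```

With this table, a query for “Fire” would try “crackling” before “fire”, while a meaning without an entry would simply be searched by its noun. The table itself would still have to be authored by hand or gathered from users, which is the missing information described above.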
At any rate, more diverse audio and image content would enrich the experience by offering both breadth of content and depth of constructive possibilities for creative expression to reinforce learning.

6. CONCLUSIONS

While we have not yet conducted the long-term user learning and creativity evaluation, our interface looks promising so far based on its limited exposure to beginning students. Authoring interesting and amusing scenes is not only possible, but almost unavoidable. Furthermore, the audio and visual feedback on placement is both fun and memorable, reinforcing the meaning of the kanji without effort. The database is more complete than most online resources, and both convergent searching and divergent exploration of the kanji database are strongly afforded by the interface. As a study tool, Kanji Storyteller could benefit greatly from a quiz and progression tracking feature. Finally, the sketch recognizer is both accurate in the results it retrieves with $p and the modified $1, and proactive in fetching those results in a short amount of time with Stroke by Stroke.

7. REFERENCES

[Rap06] K. Rapeepisarn, K. W. Wong, C. C. Fung, A. Depickere, (2006) Similarities and differences between learn through play and edutainment. In Proceedings of the 3rd Australasian conference on Interactive entertainment, 28-32. Murdoch University. Retrieved from http://portal.acm.org/citation.cfm?id=1231894.1231899

[Khi08] M. Khine, (2008) Core attributes of interactive computer games and adaptive use for edutainment. Transactions on Edutainment I. Retrieved from http://www.springerlink.com/index/G10P84265847RX1K.pdf

[Ren09] Renshuu, an application to practice drawing Japanese kanji. Last updated on January 4, 2009; accessed and downloaded on January 17, 2012. http://www.leafdigital.com/software/renshuu/

[TNJ12] Tagaini Jisho, a free, open-source Japanese dictionary and kanji lookup tool. Accessed and downloaded on January 18, 2012. http://www.tagaini.net/about

[Taele09] P. Taele, T. Hammond, (2009) Hashigo: A Next-Generation Sketch Interactive System for Japanese Kanji. In Proceedings of the Innovative Applications of Artificial Intelligence 2009. Texas A&M University, Department of Computer Science. Retrieved from http://srlweb.cs.tamu.edu/srlng_media/content/objects/object-1248939731b4fd951530e57c7f1f6a70e24fcff1ce/IAAI09ptaele.pdf

[Taele10] P. Taele, (2010) Freehand Sketch Recognition for Computer-Assisted Language Learning of Written East Asian Languages. Master’s thesis, Texas A&M University.

[Paulson08] B. Paulson, T. Hammond, (2008) PaleoSketch: Accurate Primitive Sketch Recognition and Beautification. In Proceedings of the 13th International Conference on Intelligent User Interfaces, 1-10.

[Lin09] N. Lin, S. Kajita, K. Mase, (2009) Collaborative Story-Based Kanji Learning Using an Augmented Tabletop System. JALT (Japan Association for Language Teaching) CALL Journal, 21-44. Retrieved from http://www.jaltcall.org/journal/articles/5_1_Lin.pdf

[Rubine91] D. Rubine, (1991) Specifying gestures by example. In SIGGRAPH ’91: Proceedings of the 18th annual conference on Computer graphics and interactive techniques, 329-337.

[Wobbrock07] J. Wobbrock, A. Wilson, Y. Li, (2007) Gestures without libraries, toolkits, or training: a $1 recognizer for user interface prototypes. In UIST ’07: Proceedings of the 20th annual ACM symposium on User interface software and technology, 159-168.

[Vatavu12] R. Vatavu, L. Anthony, J. Wobbrock, (2012) Gestures as Point Clouds: A $P recognizer for User Interface Prototypes. In Proceedings of the 14th ACM International Conference on Multimodal Interaction (ICMI ’12). ACM, New York, NY, USA, 273-280.

[Kara05] L. Kara, T. Stahovich, (2005) An image-based, trainable symbol recognizer for hand-drawn sketches. Computers & Graphics 29(4):501-517.

[Hammond05] T. Hammond, R. Davis, (2005) LADDER, a sketching language for user interface developers. Computers & Graphics 29(4):518-532.

[Anthony10] L. Anthony, J. Wobbrock, (2010) A lightweight multistroke recognizer for user interface prototypes. In GI ’10: Proceedings of Graphics Interface 2010, 245-252.

[Field11] M. Field, S. Valentine, J. Linsey, T. Hammond, (2011) Sketch recognition algorithms for comparing complex and unpredictable shapes. In Proceedings of the Twenty-Second International Joint Conference on Artificial Intelligence, Volume Three (IJCAI ’11), Toby Walsh (Ed.). AAAI Press, 2436-2441. http://dx.doi.org/10.5591/978-1-57735-516-8/IJCAI11-406

[Dixon10] D. Dixon, M. Prasad, T. Hammond, (2010) iCanDraw: using sketch recognition and corrective feedback to assist a user in drawing human faces. In Proceedings of the SIGCHI Conference on Human Factors in Computing Systems (CHI ’10). ACM, New York, NY, USA, 897-906. http://doi.acm.org/10.1145/1753326.1753459

[Shahzad11] N. Shahzad, (2011) S.IM.PL Serialization: Type System Scopes Encapsulate Cross-Language, Multi-Format Information Binding. Master’s thesis, Texas A&M University.

[CJKWiki13] Stroke (CJKV character), Wikipedia entry. Accessed on May 4, 2013. https://en.wikipedia.org/wiki/Stroke_(CJKV_character)

[KanaWiki13] Kana, Wikipedia entry. Accessed on May 4, 2013. http://en.wikipedia.org/wiki/Kana

8. APPENDIX

Pre-Questionnaire:

1. Have you ever studied Japanese?
a. Yes, I enjoy it
b. Yes, it’s ok
c. No, but I’m interested
d. No, I’m not into that
e. Other:

2. How regularly do you study Japanese? (choose one)
• not at all
• less than an hour per week
• 1 to 2 hrs per week
• 2 to 3 hrs per week
• 3 to 7 hrs per week
• more than 7 hours per week
• other:

3. How regularly do you study Kanji in particular? (choose one)
• not at all
• less than an hour per week
• 1 to 2 hrs per week
• 2 to 3 hrs per week
• 3 to 7 hrs per week
• more than 7 hours per week
• other:

4. How do you study Kanji when you do study?

5. What difficulties do you usually have when trying to use kanji? (choose all that apply)
• Remembering how they look
• Remembering what they mean
• Remembering the right stroke order
• Remembering how to pronounce them (onyomi / kunyomi)
• Remembering the jukugo
• Other:

Experimenter Guided User Study:

1. Play with the program and try out clicking the canvas and the different buttons
2. Find a kanji you haven’t studied before and study it in whatever way you would like to study it, preferably your usual way
3. Try sketching the symbol for “Tree” (木) with the program
4. Try sketching the symbol for “Person” (人) with the program
5. Try sketching the hiragana “KA” (か) with the program
6. Make an interesting scene for yourself or to show other people
7. Find as many kanji containing the “Fire” (火) radical as you can in 2 minutes
8. Find the kanji with the most strokes
9. Write down as much info as you can remember about the kanji you studied in #2

Post-Questionnaire:

How would you use Kanji Storyteller for your own study and use? (if at all)

Would you prefer to use Kanji Storyteller or some other tool for studying Kanji? If not KS, which tool and why?

How easy is it to find the information you are looking for in the dictionary panel?
Very Easy / Easy / Kind of easy / Kind of hard / Hard / Very Hard

Would you make scenes/canvases that would help you or other students study? If so, how?

Overall, are you satisfied with Kanji Storyteller?
Not at all / Unsatisfied / Somewhat satisfied / Satisfied / Very satisfied / Extremely satisfied

Is there anything that you would add to Kanji Storyteller? If so, why?

Is there anything that you would change or take away from Kanji Storyteller?

Would adding a drill or quiz feature help you study? Why or why not?

Do you usually get the Kanji you want after drawing it as often as you would like?
Never / Usually not / Sometimes / More often than not / Usually / Always

Do you usually get the Hiragana you want after drawing it as often as you would like?
Never / Usually not / Sometimes / More often than not / Usually / Always

How likely are you to recommend our software to others?
Not at all / Unlikely / Somewhat likely / Likely / Very Likely / Definitely