

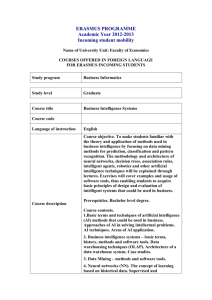

Artificial Intelligence (A.I.): Where we are, and what we can become
Ryan Holland
Kent State University

Outline
1. What is Artificial Intelligence (A.I.)?
   a. What does it mean to have intelligence? Can digital systems be "intelligent"? Does a system actually act intelligently, rather than merely show intelligence?
   b. How do we "create" an intelligent being?
   c. The different forms of A.I. (strong, weak, friendly, general intelligence, etc.)
   d. Examples of mostly weak A.I., since strong A.I. does not exist yet
   e. Thesis statement: To clarify the concept of what artificial intelligence truly is, and describe how the invention of such systems would impact the world.
2. Current approaches in the field of A.I. research
   a. General idea of how A.I. development can differ; there is no single correct theory.
   b. Hopefully A.I. lab info here for a hands-on approach/info.
   c. General idea of symbolic approaches to A.I.
      i. Brain simulation
      ii. Cognitive simulation
      iii. Logic-based
   d. Sub-symbolic
      i. Computational intelligence, such as fuzzy or evolutionary systems
   e. Intelligent agent paradigm
3. Benefits of continued research into the field of A.I.
   a. How A.I. can simplify everyday life for all
      i. Improved efficiency where work can be automated
      ii. Less human error on most systems
      iii. Less time spent working, more time for important activities
   b. Synergizing A.I. and human interactions
      i. Not giving A.I. free rein over its job, as a safety measure
      ii. Using an A.I. system as a first guess, backed up by human intuition and wisdom
   c. Technological improvements as a side effect of A.I. research
      i. (Possibly move the first three points into a single point)
      ii. Necessity of more efficient power sources pre-A.I.
      iii. Necessity of more efficient and powerful processors pre-A.I.
      iv. Necessity of a design which keeps "friendly A.I." in mind pre-A.I.
      v. Boost in productivity and speed of growth in technological advances post-A.I.
      vi. Culmination of each step, creating an extremely powerful, technologically savvy future
   d. Medical advances gained through implementation of A.I. systems
      i. Genome mapping would become quicker and easier as a result of improved processing and the ability to research and learn intelligently.
      ii. Constant, high-quality medical care with in-room A.I. systems
      iii. Improved overall care when reinforced by systems able to learn and react many times faster than humans
4. Possible detriments which could be brought about by the advent of unfriendly or poorly developed A.I.
   a. Overdependence on technology causing humans to become a weak, addicted species
   b. Mass extinction due to expansion-driven A.I.
   c. Enslavement of the human race by robotic overlords
5. Predicted future for A.I.
   a. Various incorrect timelines set for A.I. research
   b. Ethical dilemmas raised by the implementation and use of A.I.
   c. Detriment recap
   d. Benefit recap
   e. Quick summary and overzealous closing statement

Questions to answer: What is artificial intelligence? How can its development and research help us? Hurt us? Why is it worth it?
Ways to answer the questions: Discuss what it means to be intelligent and describe different methods of mimicking intelligence. Describe areas, times, and places in which real A.I. would be of use or a detriment.
How to answer: Objectively research and learn more about A.I. and its different methods and ideologies, then lay out the information in an incremental and instructional way.
Difference made: Clear up misinformation, bring forward new ideas, and give a general basis for what A.I. is and how it can greatly affect us as a species.
Create some conclusion, make it strong: A.I. is possible, but certain changes or advances need to be made first to facilitate its development and integration.

1. What is Artificial Intelligence (A.I.)?

Before one can define what it means to be artificially intelligent, one must first define what it means for an entity to be considered intelligent.
There is no single agreed-upon definition of intelligence, as it can be measured and quantified in many different ways. For the sake of this document, I will follow Encyclopaedia Britannica's definition of human intelligence: "[a] mental quality that consists of the abilities to learn from experience, adapt to new situations, understand and handle abstract concepts, and use knowledge to manipulate one's environment." Intelligence is a continuum, measured within our own society in a plethora of ways, from grades to IQ tests. Because there is no standard method by which an entity can be deemed intelligent, deciding whether a machine is acting intelligently is largely a matter of personal opinion. The Turing Test is meant to determine whether a machine can exhibit intelligent behavior well enough to be indistinguishable from a human, but many still set it aside in favor of personal judgment.

Most digital systems created and used by humans could not be considered intelligent because they are built to solve a single problem in a single way. Calculators, ATMs, and even smartphone applications are all built with predetermined formulas and algorithms which allow them to serve a mostly singular purpose. When faced with a problem, such a system either enables the user to overcome it or fails, and a new solution must be found elsewhere. A calculator has only so many keys: if the user is given a problem containing symbols that are not on the calculator, it cannot be solved, because new keys cannot be added and new algorithms cannot be formed; the calculator simply cannot acquire the necessary functionality. It is these systems' inability to gain knowledge or experience, adapt to unsolvable problems, understand abstract concepts, and apply knowledge to new situations, rather than always acting in a single way, that keeps them from being considered intelligent.
An artificially intelligent system is one which would be able not only to learn from its experiences, but also to apply that knowledge to future experiences. For example, consider a fairly simple system which recognizes the numbers one through ten and has information related to those numbers stored as its knowledge. This system would be able to count to ten and decide whether one number is greater or less than another. It is then taught the symbol + and told that when it is given a number, followed by the addition symbol, then another number, the two values are to be combined. The system has now gained knowledge of the concept of addition, and should be able to use that new knowledge to solve addition problems. This approach is similar to human learning: we begin with only the minimum knowledge of the world required to exist, with an adult caring for us to ensure our survival, and as we grow older we are taught new concepts and methods for solving problems, such as how to survive on our own.

An ideal artificial intelligence system would not only know how to solve problems related to its current store of knowledge, but would also be able to learn how to solve problems outside its knowledge base, increasing its knowledge and abilities. That is, rather than requiring a teacher for every unknown situation, the system would try to find the answers itself, for example by accessing the internet, where supplementary information can be found on almost any topic, filling in the gaps in its current knowledge, and then storing that method for future use. Achieving such a level of intelligence is complex because many situations require a wealth of pre-existing knowledge just to analyze them properly. For example, a child who has not yet learned what numbers are would not be able to solve an addition problem, much less determine what the different parts of the equation mean.
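The difference between being taught a rule and working one out from examples can be sketched in Python. This is a minimal illustration, not a technique taken from the A.I. literature: the `ToySystem` class, its method names, and the three candidate operations it tries are all hypothetical. Like the system described above, it knows the numbers one through ten and can count and compare them. `teach` stores a rule supplied by a teacher, while `infer` tests each candidate operation against worked examples such as "2 + 2 = 4" (the child's situation) and adopts a rule only when exactly one candidate fits every example.

```python
from typing import Callable, Dict, List, Tuple

Rule = Callable[[int, int], int]

# Hypothetical operations a learner might guess when it meets an unknown symbol.
CANDIDATE_RULES: Dict[str, Rule] = {
    "combine": lambda a, b: a + b,
    "take away": lambda a, b: a - b,
    "repeat": lambda a, b: a * b,
}


class ToySystem:
    """Knows the numbers one through ten and learns rules for new symbols."""

    def __init__(self) -> None:
        self.numbers = list(range(1, 11))  # its built-in knowledge
        self.rules: Dict[str, Rule] = {}   # symbol -> learned rule

    def count(self) -> List[int]:
        return list(self.numbers)

    def greater(self, a: int, b: int) -> bool:
        return a > b

    def teach(self, symbol: str, rule: Rule) -> None:
        """Taught learning: a teacher supplies the rule directly."""
        self.rules[symbol] = rule

    def infer(self, symbol: str, examples: List[Tuple[int, int, int]]) -> List[str]:
        """Inferred learning: keep every candidate consistent with all examples.

        The rule is adopted only when exactly one candidate fits; otherwise the
        system still holds competing hypotheses and needs more examples.
        """
        consistent = [
            name for name, rule in CANDIDATE_RULES.items()
            if all(rule(a, b) == result for a, b, result in examples)
        ]
        if len(consistent) == 1:
            self.rules[symbol] = CANDIDATE_RULES[consistent[0]]
        return consistent

    def solve(self, a: int, symbol: str, b: int) -> int:
        if symbol not in self.rules:
            raise ValueError(f"no rule known for {symbol!r}")
        return self.rules[symbol](a, b)


taught = ToySystem()
taught.teach("+", lambda a, b: a + b)
print(taught.solve(3, "+", 4))  # 7

learner = ToySystem()
print(learner.infer("+", [(2, 2, 4)]))             # ['combine', 'repeat']
print(learner.infer("+", [(2, 2, 4), (3, 1, 4)]))  # ['combine']
print(learner.solve(3, "+", 4))                    # 7
```

A single example, "2 + 2 = 4", fits both addition and multiplication, so the learner cannot yet commit to a rule; a second example settles it. This is one concrete reason why learning from experience needs more data than being told the rule.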
He can be shown "2 + 2 = 4", but none of those symbols have any meaning for him, so he cannot extract any information from the equation. However, once he has learned his numbers, he can see that there are two twos followed by a four, so the + symbol might mean to combine the numbers, while the = symbol means "this is what those combined numbers make." Now he at least has an idea of what the different symbols might mean, as well as a possible rule for the + symbol. Without being told or specifically instructed, he was able to learn, or at least formulate an idea of, what addition is and how to use it. Of course, this is an inefficient way for humans to learn anything, as it requires the learner to essentially reinvent mathematics as he grows and encounters more complex ideas. It could, however, be effective for machine intelligence, since a machine can process, analyze, and store information much faster than a human can. Formal teaching lets the student experience each aspect of a subject, such as math, in a sequence that builds upon itself and is known to be effective for learning. Still, the ability to infer information from experience and build ideas upon it is an important trait that becomes useful when no information is available on a topic or idea. Because A.I. systems would likely be used to further our understanding in all fields, they would need this ability to form abstract ideas from the available information, rather than requiring that everything be taught or be in a pre-programmed list of concepts; such a system would be able to learn without being taught, by deducing information when necessary.

There are many ways to categorize an A.I. system, but researchers focus on two main attributes: the strength of its intelligence, and its benefit to mankind, or friendliness. When quantifying the intellectual capability of an A.I.
system, we describe it as having either weak or strong intelligence. Whether a system is considered weak or strong lies on a continuum similar to the one for intelligence, though there is no equivalent of the Turing Test here, because there is no straightforward method to broadly determine the strength of an intellect. If we said that Strong A.I. must be as intelligent as a human, could a developer not simply model the system's intellectual capabilities on a very unintelligent individual with only the most basic abilities? We are currently able to develop weak A.I.: systems applicable only to specific problem sets. Such systems are very good at solving very specific problems, such as Google's search engine or Apple's Siri, and they are becoming common in many people's everyday lives. Strong A.I., also called Artificial General Intelligence (A.G.I.), refers to purely theoretical systems able to perform any intellectual task that a human can.

After creating an A.I. system, weak or strong, there is a further need to classify it as either friendly or hostile. Friendly A.I. is beneficial to humans; it does what it is programmed to do in a way which benefits humanity. Hostile A.I., on the other hand, is a system which negatively affects humans. Its hostility could stem from improper development, or from its creator's desire to cause harm. The development of friendly A.G.I. is one of the main goals of current A.I. research, and its successful implementation would mark a turning point in human technological ability, sometimes referred to as The Singularity. Through this paper, I will attempt to clarify the concept of what artificial intelligence truly is, and describe how the development of such systems would impact the world.

2. Current Approaches to A.I.

There are four central paradigms of A.I. development: cybernetics and brain simulation, symbolic, sub-symbolic, and statistical. A fifth paradigm, the intelligent agent, is now emerging; it combines multiple paradigms in a single system. The Cybernetics and Brain Simulation method allows the most human-like entities to be created, as researchers attempt to recreate and digitize every facet of human intelligence. The Symbolic method uses formal expressions of logic to solve problems, avoiding many of the problems inherent in human thought, processing, and memory. However, problem solving is not always straightforward and sometimes relies on factors not immediately obvious to our conscious mind. The Sub-Symbolic method avoids many of the problems inherent in the use of formal logic by creating webs of interacting nodes rather than problem-solution sets. However, sub-symbolic systems must be trained in order to correctly identify an object or situation. This could be simplified by copying an existing, pre-trained system into a new one, but at least one system must still be trained to satisfaction. The Statistical method requires statistical equations for every possible scenario, making it the best fit for the narrow scope of weak A.I., since every possibility can be considered and analyzed. The Intelligent Agent Paradigm, while still only theoretical, aims to combine the different methods in a way which allows each method's strengths to make up for the others' weaknesses. This level of integration means the Intelligent Agent Paradigm would be the most complex to implement successfully, but it would also allow the most all-encompassing system to be built.

[Find some actual example of a C&BS system. Intel synapse chip.]

The Cybernetics and Brain Simulation (C&BS) approach was one of the first steps in A.I. research, beginning in the 1940s and 1950s, when digital, programmable computers were still new and rare.
This method mainly used networks of interacting machines to simulate learning by responding to and affecting stimuli in the surrounding environment, an important aspect of intelligence and consciousness. The idea behind brain simulation was to recreate the structure and behavior of the human brain physically, rather than digitally, using these networks, often referred to as neural networks. Brain simulation's popularity quickly peaked, and plummeted even faster, because of the enigma that is the human brain. Cybernetics, meanwhile, has become a very general term referring to the structures and constraints of regulatory systems, covering topics from computing to biology. A simulated brain would be able to process information much as a human does, but would have no distinct method of input or output. Similarly, a cybernetic system would be made to gather data and maintain system stasis, but would have no way to process or analyze the information. Both methods would therefore need to be used together, or in combination with other A.I. methods, which makes progress in a single subfield difficult without also progressing the others.

A fully developed, friendly A.G.I. version of a C&BS system would likely resemble the A.I. seen in many science-fiction movies and television shows; it would be designed to resemble humans in shape and function. Such a development has its pros and cons, but the current difficulty of creating such a system likely outweighs its benefits. On the pro side, such a system would be very similar to humans, making it more intuitive to interact with and allowing for a feeling of comfort not always available with non-anthropomorphic systems. Not only would it be visually appealing or comforting, it would also be mentally similar to the people interacting with it. Rather than having to learn or conform to how the system thinks, a user would find interacting with a simulated intelligence fairly natural, much like conversing with a human.
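The cybernetic half of this pairing, a system that gathers data and maintains stasis without analyzing or understanding what it senses, is classically illustrated by a thermostat. The Python sketch below is a hypothetical simulation, not a model of any historical machine: the temperatures, the heating and heat-loss rates, and the width of the dead band are all assumed values. Each step, the loop senses the temperature, compares it with its goal, and switches the heater so as to oppose the deviation (negative feedback).

```python
from typing import List


def thermostat(start_temp: float, setpoint: float, steps: int,
               band: float = 0.5, heat_rate: float = 1.0,
               loss_rate: float = 0.4) -> List[float]:
    """Simulate an on/off thermostat holding a room near `setpoint`.

    The room loses `loss_rate` degrees per step to the outside, a constant
    disturbance the loop must keep correcting. Returns the temperature after
    each step, rounded to two decimals.
    """
    temp = start_temp
    heater_on = False
    log: List[float] = []
    for _ in range(steps):
        # Negative feedback: switch the heater to act against the deviation.
        # The dead band between the two thresholds prevents rapid on/off switching.
        if temp < setpoint - band:
            heater_on = True
        elif temp > setpoint + band:
            heater_on = False
        temp += (heat_rate if heater_on else 0.0) - loss_rate
        log.append(round(temp, 2))
    return log


readings = thermostat(start_temp=15.0, setpoint=20.0, steps=30)
print(readings[:3])                            # warming up: [15.6, 16.2, 16.8]
print(min(readings[10:]), max(readings[10:]))  # settled cycle: 19.4 20.6
```

After warming up, the room cycles within about half a degree of the setpoint indefinitely. The controller maintains stasis, yet nothing in it could be called analysis of the data it gathers, which is the limitation the paragraph above describes.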
At some point, these systems would become so similar to the humans they are based upon that it would be difficult to distinguish a real human from a synthetic one. While this would allow for the most natural interaction, creating an environment in which synergy between humans and robots can flourish, it also opens up many ethical issues. [Probably moving this to section 4.] The first, and greatest, issue we will face is an ethical dilemma: deciding what it really means to be human. Once we have created digital systems that can perfectly replicate a human, from physique to cognitive abilities, will there really be a difference? If one removes the brain from a human, one is left with a shell which many would not consider human, or at least not a whole human. If one inserted a new mechanical brain into that empty shell and it was able to replicate a human's thought and intelligence, would we consider that entity to be human?

Another downside to this approach is the Uncanny Valley Effect (UVE), a hypothesis which states that "when features look and move almost, but not exactly, like natural beings, it causes a response of revulsion among some observers." This has negative implications for the adoption and growth of human-emulating systems. Assuming, as with many technologies, that a lifelike A.G.I. system is developed in incremental steps, slowly improving and taking on the desired shape of humanity, there will be a period between the first and final versions in which each version resembles humans better than its predecessors but still seems too fake to be believable. A.I. developed, used, and released during that period will be the most likely to trigger the UVE, pushing the target audience away and likely slowing innovation and production because of the social response.
It would be necessary to break through the UVE barrier before releasing such a system, so that it could be adopted without alienating its users. As Dr. Knight says, "the more humanlike a robot is, the higher a bar its behaviors must meet before we find its actions appropriate" (Knight). She goes on to discuss a robotic dinosaur toy, which is unfamiliar to us because there are no living dinosaurs to compare it to, making any of its behavior more acceptable. In some cases, the response to autonomous robots appears to be culturally influenced. In America, many movies and stories portray robots as tools or agents of destruction, while in Japan they are featured as sidekicks, equal to humans. These views seem to permeate A.I. culture and have led to differing opinions on how robots should be developed and introduced.

Fig 1. Uncanny Valley Graph

To avoid conflicting views of robots and A.I. systems, Dr. Knight suggests that the coexistence of humans and robots should be thought of as a relationship or partnership, broken down into three categories: telepresence robots, collaboration robots, and autonomous vehicles. The first category contains systems along the lines of the American view of robotics: tools which a human uses or gives input to in order to achieve a desired outcome. The second contains systems following the Japanese vision of robots, those which work alongside humans and provide some kind of assistance. The final category consists, for the most part, of machines we would travel in. Autonomous vehicles handle the fine details, such as traveling from place to place, but give the user access to higher-level inputs, such as the destination or chosen path. As the field of A.I. progresses, more categories are likely to be developed following Dr.
Knight's own work, but I feel it is important for there to be some kind of distinction between implementations of A.I. If a system is meant only to be used as a tool, the method of interaction should reflect that. For instance, a remote-piloted drone's interface consists of a screen, so that users can see what they are doing, and a remote control of some sort which allows them to fly the aircraft. The drone might make small corrections to fly straighter, or change its altitude and flight pattern based on weather information it picks up, but it will not interact with the user to make these decisions. On the other hand, if a system is meant to be assistive or interactive, its interface would be designed with two-way interaction in mind. Rather than requiring a pilot, a robot meant to emulate a nurse might be autonomous, following a path or reacting to alerts from a room. Such systems would be more interactive in that they react to stimuli, such as a patient pressing the call button to request a new pillow. The third category combines the two prior categories: it accepts input and acts on the controller's desires, but it also has some autonomy in how those desires are achieved.

[Intel synapse chip info goes here.]

[Find actual example of Symbolic based system. OpenCog or DeSTIN.]

The symbolic approach focuses on logical deductions from input based on prior knowledge or other available information, following or creating rules by manipulating symbols. Whereas the cybernetics and brain simulation approach focuses on recreating the physical aspects of the human mind and body, the symbolic approach focuses on emulating intelligence through software. It is the human ability to model ideas and information in the form of symbols which grants us the level of intelligence we seek to achieve in A.I. systems.
The driving factor behind the symbolic approach was the realization that any A.I. system would require large quantities of knowledge to operate. This knowledge can be represented as symbols, such as letters and numbers, which are then analyzed, compared, or otherwise manipulated through logical processes to produce a result. Symbolic A.I. systems require massive stores of data to represent their knowledge, making successful implementation a matter of storage space, processing speed, and processing efficiency. Logical problem solving is referred to as "neat" because there is only a single acceptable input or rule for a given situation; that is, the solution is neat and concise. Logical reasoning can be applied to most situations we experience, and therefore makes for an effective design basis. It also has the benefit of avoiding many of the still-unexplained leaps of logic humans are able to make, substituting known rules and methods to solve the problem. In this way, the system's behavior can be easily known or predicted, but it can also be influenced by giving the system new rules to follow and apply to unknown situations. Rather than attempting to recreate human intelligence, which we have yet to understand fully, the symbolic method attempts to achieve the same level of intelligence through logical problem-solving methods, much as in math the same result can be reached by different methods. In this case, the exact method used by the human brain to solve a problem is unknown, but if we can design an algorithm which takes the same input as a brain and produces the same results, the methods can be said to be equivalent. Rather than finding the exact method and pattern by which a brain solves a problem, we can then substitute known logical algorithms which are shown to be equivalent, recreating processes one step at a time.

[OpenCog/DeSTIN paragraph here.]
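The symbolic idea of deriving conclusions by applying known rules to known facts can be sketched as a tiny forward-chaining engine in Python. This is a minimal illustration, not OpenCog or any other named system; the rule format and the animal facts are hypothetical. Each rule pairs a set of premises with a conclusion, and the engine keeps applying rules until no new facts appear. Its behavior is fully predictable from its rules, and it changes only when it is given new rules, as described above.

```python
from typing import FrozenSet, Iterable, List, Set, Tuple

Rule = Tuple[FrozenSet[str], str]  # (premises, conclusion)


def forward_chain(facts: Iterable[str], rules: List[Rule]) -> Set[str]:
    """Apply every rule whose premises are all known until nothing new is derived."""
    known = set(facts)
    changed = True
    while changed:
        changed = False
        for premises, conclusion in rules:
            if conclusion not in known and premises <= known:
                known.add(conclusion)  # a new symbol derived by logic alone
                changed = True
    return known


rules: List[Rule] = [
    (frozenset({"has_fur", "barks"}), "is_dog"),
    (frozenset({"is_dog"}), "is_mammal"),
    (frozenset({"is_mammal"}), "is_animal"),
]

print(sorted(forward_chain({"has_fur", "barks"}, rules)))
# ['barks', 'has_fur', 'is_animal', 'is_dog', 'is_mammal']

# Influencing behavior by adding a rule rather than retraining anything.
rules.append((frozenset({"is_dog", "is_small"}), "fits_in_lap"))
print("fits_in_lap" in forward_chain({"has_fur", "barks", "is_small"}, rules))  # True
```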
Massive data storage methods are quickly improving, with flash-based memory becoming more common, allowing larger amounts of information to be held on smaller, more durable drives. Even with storage solutions becoming larger and more accessible, there is still the issue of processing power and efficiency. Following Moore's Law, the number of transistors on a chip, and with it processing power, roughly doubles every two years, though we are reaching a point at which the law no longer holds. Silicon transistors can only be made so small before they stop functioning correctly, making improvements in raw processing power difficult without a different chip material.

Processing efficiency is also an important factor for a system which must constantly compare and relate information. There are many kinds of search algorithms available, some of which are simple, brute-force searches: rather than jumping directly to the desired data within the system's storage, such a search must start from the first byte and progress through each byte until it finds the desired data. While most search algorithms are designed to avoid such slow methods, there will always be a challenge in developing more efficient search algorithms to reduce the overall processing load. A smaller, faster, and more efficient processor will allow the ever-growing quantity of available data to be handled more easily and made useful.

There are many situations in which logical, symbolic solutions are not the best methods to use. Because symbolic algorithms rely on a single correct input and output, it can be hard to symbolically determine the state of something that has many possible states. For example, a chair is a flat board with three to four legs and, generally, a back. The concept of a chair could be symbolically broken down into those three main aspects, but such a definition does not account for the almost infinite number of differences that can exist between chairs.
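The chair definition above can be written as a single symbolic rule. The Python sketch below is illustrative only, and the attribute names (`flat_board`, `legs`, `has_back`) are hypothetical. It shows the rule rejecting a backless stool, the failure discussed next, and how a patch bolted on to admit stools also starts admitting small tables: the same kind of false positive as hearing "hello" as "hola."

```python
from typing import Dict, Union

Thing = Dict[str, Union[bool, int]]


def is_chair(thing: Thing) -> bool:
    """The rigid symbolic definition: a flat board, three to four legs, and a back."""
    return (bool(thing.get("flat_board", False))
            and thing.get("legs") in (3, 4)
            and bool(thing.get("has_back", False)))


def is_chair_patched(thing: Thing) -> bool:
    """One extra rule added to admit stools: the back becomes optional."""
    return is_chair(thing) or (bool(thing.get("flat_board", False))
                               and thing.get("legs") in (3, 4))


dining_chair = {"flat_board": True, "legs": 4, "has_back": True}
stool = {"flat_board": True, "legs": 3, "has_back": False}
small_table = {"flat_board": True, "legs": 4, "has_back": False}

print(is_chair(dining_chair), is_chair(stool))                  # True False
print(is_chair_patched(stool), is_chair_patched(small_table))   # True True
```

Each patch fixes one missed case and opens another. This is why piling rules on rules tends to make symbolic recognizers both slower and less reliable.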
Most people would consider a stool to be a type of chair, yet stools rarely have backs. Because of the missing back, this symbolic system would be unable to recognize the stool as a chair. A similar example can be given for speech recognition. If thirty people were to say "hello," you would hear thirty different versions of the same word. Some would be easy to hear and understand; others might have thick accents or unusual inflections that make the word sound strange. Because there is no single form for an object, idea, or concept, it can be hard to represent each one symbolically without creating a series of rules that allow for the slightest differences. However, constantly layering rules on top of rules for a task like voice recognition slows down the recognition process and can lead to false positives, such as when a heavily accented individual says "hello" but it is interpreted as "hola." This is where the sub-symbolic approach comes in.

[Find example of sub-symbolic based system. Maybe just stick to neural network example.]

The symbolic and sub-symbolic approaches to A.I. development can be thought of as a human's conscious and unconscious mind, respectively. When a human actively makes a decision, they are thinking in symbols and how those symbols relate to one another; active cognition is reduced to symbolic representations of objects or situations. Most of the work done by the brain, however, is subconscious, or sub-symbolic. One does not need to think about balancing while standing; as one stands, one's center of balance is adjusted automatically. The human face has roughly forty-three muscles, and a smile requires a number of them to stretch or contract to a specific level to produce the desired result. Much like standing, one simply chooses, or is made, to smile, and the muscles do what they need to without conscious intervention.
Unlike in the symbolic approach, objects in the sub-symbolic approach are not necessarily evaluated by pre-existing rules; instead, the input is analyzed using rules derived from observed data. Simply put, sub-symbolic processes "only require the person using the system knows what output should be produced for which input(s), not the rule that might underpin this" (COMP1009 PDF). The input is known and the desired output is known, but the rule or method the system uses to reach that conclusion does not need to be explicitly known, just as a person does not consciously know how they smile. This differs from symbolic analysis, in which the input and the rule are known and are used to find the unknown output.

The most common example of a sub-symbolic system is the neural network. Neural networks are meant to imitate, but not necessarily emulate, the function of neurons in the brain. This is done by representing information as a web of interconnected nodes, or neurons, with varying strengths, or weights, of connection to other neurons. These connections are formed by training the system to recognize objects or ideas through the known-input, known-output method described above. An example given in the COMP1009 PDF uses the task of telling a dog from a cat to describe neural networks. In this example, an untrained neural network is to be used to determine whether an input image shows a dog or a cat. We as humans have learned through experience how to distinguish a cat from a dog, but there is no single, obvious distinguishing factor between the two, which makes symbolic representation of the animals difficult. Much as we have been trained by seeing animals, being told what they are, and developing a heuristic for what it means to be a given animal, neural networks also need training.
This is done by creating the basic neural network, feeding it input images of both dogs and cats, and telling it which kind of image it is being given. The network then analyzes the images, keeping in mind which category each belongs to, and adjusts its interconnected network of features or attributes accordingly. The amount of training needed depends on the granularity of the desired results. Dogs and cats are quite similar, so more training would be required to recognize each animal accurately. If the network were trained to differentiate between a monkey and a cow, the process would be much quicker, as the differences are far more pronounced than those between a cat and a dog. After training, the system can be fed new input, assuming it aligns with the system's training, and it should produce accurate results. Because there is no clear-cut rule by which to differentiate objects, neural networks often work on heuristics, determining the features of the input image and then finding which option the input best aligns with. Some images might be easily recognized as a dog, while others might be 60% likely to be a dog and 40% likely to be a cat. The output will still be the option with the greater likelihood, but this shows that some answers are only "best guesses," not definite ones.

Fig 2. Neural Network Heuristic Example

The biggest issues with this style of A.I. development are the inherent uncertainty in the results, including the ambiguity of the methods and rules used to reach them, and the requirement to thoroughly train each system to recognize a given input. The diagram above illustrates that even though the system correctly recognized a dog, it also found known cat traits. In that example the output is fine, but when the percentages are closer together, such as a 60/40 split, the validity of the output can come into question, as there is very little difference between the two.
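The train-then-predict process can be sketched with the smallest possible neural network: a single artificial neuron, equivalent to logistic regression, written in plain Python. This is an illustration under stated assumptions, not the COMP1009 example itself. Real image classifiers learn their features from raw pixels with many layers, whereas here each animal is reduced to three hypothetical, hand-picked features scaled from 0 to 1 (snout length, ear pointiness, body size), and the training data is invented for the sketch. The neuron's weights play the role of the connection strengths described above. Only inputs and labels are supplied, never the rule, and the output is a probability rather than a definite answer.

```python
import math
from typing import Dict, List, Tuple

# Hypothetical features: [snout length, ear pointiness, body size], scaled 0..1.
DOGS = [[0.9, 0.2, 0.8], [0.8, 0.3, 0.6], [0.7, 0.1, 0.9], [0.6, 0.4, 0.5]]
CATS = [[0.2, 0.9, 0.2], [0.3, 0.8, 0.3], [0.1, 0.7, 0.1], [0.4, 0.9, 0.3]]


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def train(samples: List[List[float]], labels: List[int],
          epochs: int = 500, lr: float = 0.5) -> Tuple[List[float], float]:
    """Supervised training: inputs and desired outputs are known, the rule is not."""
    weights = [0.0] * len(samples[0])
    bias = 0.0
    for _ in range(epochs):
        for x, y in zip(samples, labels):
            p = sigmoid(bias + sum(w * xi for w, xi in zip(weights, x)))
            error = y - p  # how far the guess was from the label (1 = dog, 0 = cat)
            # Strengthen or weaken each connection in proportion to its input.
            weights = [w + lr * error * xi for w, xi in zip(weights, x)]
            bias += lr * error
    return weights, bias


def classify(weights: List[float], bias: float, x: List[float]) -> Dict[str, float]:
    p_dog = sigmoid(bias + sum(w * xi for w, xi in zip(weights, x)))
    return {"dog": p_dog, "cat": 1.0 - p_dog}


weights, bias = train(DOGS + CATS, [1] * len(DOGS) + [0] * len(CATS))
print(classify(weights, bias, [0.85, 0.15, 0.85]))  # a clear dog: "dog" near 1
print(classify(weights, bias, [0.15, 0.85, 0.15]))  # a clear cat: "dog" near 0
# Halfway between the two, the neuron's score is linear in its inputs, so this
# probability always lies between the two above; how close it lands to 50/50
# depends on the training.
print(classify(weights, bias, [0.5, 0.5, 0.5]))
```

Because the answer is a probability, an input resembling both animals yields a hedged "best guess," which is exactly the 60/40 situation described above.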
This possibility of being incorrect comes back to the idea of training an entity. Even humans make recognition mistakes, taking some small dogs for cats or missing entire attributes altogether, despite being trained to differentiate between objects throughout our lives. We accumulate years of experience in recognizing different animals and are exposed to a large number of them, giving us possibly millions of experiences of recognizing them. Not only that, we often see full, three-dimensional versions of the animal in question, allowing us to make further distinctions that might not be so easily learned from two-dimensional images. To achieve the same level of assuredness in differentiation, a system would need to be trained on millions of different labeled images, something which might not be easy or feasible, but is becoming more so with our expanding stores of data. Even then, problems can occur if the images are not varied enough to cover all possible input. Suppose every dog image used to train the system shows the dog wearing a collar, while none of the cat images do. The system could learn the heuristic "dogs have collars," which is accurate for its training data but inaccurate in real life. When given a dog without a collar, the system would immediately decide that it is actually a cat, because its rule says dogs have collars and cats do not.

[Find example of statistical A.I. Google search algo?]

The statistical approach is one of the most recent approaches to A.I. development. It relies on statistical formulas and equations to determine an output. Unlike the other approaches, statistical methods alone do not seem applicable to A.G.I. development; instead, they are used as a set of tools within an A.I. system.
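A statistical system of this kind can be sketched as a weighted score over measurable features. The Python below is a toy stand-in loosely inspired by search ranking, not Google's algorithm: the three signals, their weights, and the sample pages are all hypothetical. Every capability is an explicit, measurable number, and the system does not grow on its own; giving it a new ability means a developer adds a new signal and weight.

```python
from typing import Dict, List

Page = Dict[str, str]

# Hypothetical signals and hand-set weights; a real engine uses far more of both.
WEIGHTS = {"coverage": 0.5, "frequency": 0.2, "title_match": 0.3}


def signals(query: str, page: Page) -> Dict[str, float]:
    """Measure each signal as a number between 0 and 1."""
    terms = query.lower().split()
    body = page["body"].lower().split()
    title = page["title"].lower().split()
    return {
        # Share of query terms that appear anywhere in the body.
        "coverage": sum(t in body for t in terms) / len(terms),
        # Share of body words that are query terms.
        "frequency": sum(body.count(t) for t in terms) / max(len(body), 1),
        # Share of query terms that appear in the title.
        "title_match": sum(t in title for t in terms) / len(terms),
    }


def score(query: str, page: Page) -> float:
    measured = signals(query, page)
    return sum(WEIGHTS[name] * value for name, value in measured.items())


def rank(query: str, pages: List[Page]) -> List[str]:
    ordered = sorted(pages, key=lambda page: score(query, page), reverse=True)
    return [page["title"] for page in ordered]


pages = [
    {"title": "Cooking pasta", "body": "boil water and add the pasta"},
    {"title": "History of computing",
     "body": "early network machines and the first neural models"},
    {"title": "Neural network basics",
     "body": "a neural network learns weights from labelled examples"},
]
print(rank("neural network", pages))
# ['Neural network basics', 'History of computing', 'Cooking pasta']
```

Both relevant pages contain every query term, so the title signal is what separates them. Changing that outcome requires a developer to re-weight or add signals, since the system has no way to learn on its own.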
Each of the prior approaches is able to make use of statistical analysis in its processing, further suggesting that statistics is an effective sub-system, but not necessarily comprehensive enough to build a purely statistical A.G.I. system. Symbolic methods might use statistics to choose a specific algorithm, or an output given by an algorithm, based on the current situation. Sub-symbolic systems often use statistical methods to determine an outcome based on the weight or value of one output compared to the other possible outputs. While this approach is unlikely to create strong A.I. systems, it excels at creating weak A.I. systems. This is because, for any statistical system, all capabilities must be defined in some statistically relevant, measurable, and verifiable way. Google’s search algorithm uses over two hundred different factors to determine which pages to show for a search query. These factors must be compared and contrasted, using statistical methods, in order to find the web pages most relevant to the query. Because the form of the input is controlled (a plain-text string) and each trait to be analyzed is known, a specific set of algorithms can be put in place to determine the output. No knowledge is needed beyond the attributes to look at and the equations used to compare them, making such an approach well suited to weak A.I. development. Unlike symbolic and sub-symbolic systems, a statistical system is often designed not to “grow”, or acquire new knowledge; instead, a developer must add new methods to expand the system’s abilities. It should be fairly clear by now that many of these approaches simply do not function without the support of another. The slow pace of A.I. development can be at least partially attributed to this inability of a single method to function alone.
This is because many researchers are focused on furthering their own area of expertise, be it symbolic, sub-symbolic, statistical, evolutionary, machine learning, or any number of other methods. Take the symbolic approach, for example. It often fails in the realm of voice recognition because of the variety of speech patterns, inflections, accents, and other factors. Rather than adopting sub-symbolic methods which enable more accurate voice recognition, creating a more complete system, a symbolic developer would attempt to solve the issue purely symbolically. This furthers a single approach, symbolic, but fails to recognize the larger problem at hand: creating an A.G.I. system. Of course, it is beneficial to develop each single approach as far as possible, in order to allow for more options and abilities in the future, but there is always a chance that a given approach simply cannot fit a problem. In this case, the aforementioned developer could spend the rest of his life attempting to create symbolic voice recognition, only for it to be determined soon after his death that it simply cannot be done, and that the best option is in fact to adopt other approaches as well. This idea of combined approaches leads to the final development method. The Intelligent Agent Paradigm is the newest idea in A.I. development. Rather than being a development approach like those mentioned previously, it is more of an idea: combining other approaches in a way which allows each method’s strengths to make up for the others’ weaknesses. Intelligent agents are those which perceive their environment and take actions which maximize their chances of success; however, the method by which this is achieved is unspecified.
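Because the paradigm leaves the method unspecified, the following is only one possible sketch of an intelligent agent that combines approaches, in the spirit of the symbolic/sub-symbolic pairing discussed next. The class name `HybridAgent`, the percept fields `battery` and `obstacle_distance`, and the success estimates are all hypothetical: explicit rules are tried first, and when none applies the agent picks the action with the highest estimated chance of success.

```python
from typing import Callable

Percept = dict[str, float]
Rule = tuple[Callable[[Percept], bool], str]


class HybridAgent:
    """Toy agent: perceive, then choose the action most likely to succeed."""

    def __init__(self, rules: list[Rule],
                 estimate: Callable[[Percept, str], float],
                 actions: list[str]) -> None:
        self.rules = rules        # symbolic part: (condition, action) pairs
        self.estimate = estimate  # sub-symbolic part: estimated chance of success
        self.actions = actions

    def act(self, percept: Percept) -> tuple[str, str]:
        """Return the chosen action and which part of the agent chose it."""
        # A logical rule is used whenever one applies to the current percept...
        for condition, action in self.rules:
            if condition(percept):
                return action, "rule"
        # ...otherwise fall back to the action with the best estimated odds.
        best = max(self.actions, key=lambda a: self.estimate(percept, a))
        return best, "best guess"


def estimate(percept: Percept, action: str) -> float:
    # Hypothetical estimates: moving forward looks safer the farther away
    # the nearest obstacle is; turning is given a fixed, middling chance.
    if action == "move_forward":
        return min(1.0, percept["obstacle_distance"])
    return 0.5


agent = HybridAgent(
    rules=[(lambda p: p["battery"] < 0.1, "recharge")],
    estimate=estimate,
    actions=["move_forward", "turn"],
)
print(agent.act({"battery": 0.05, "obstacle_distance": 2.0}))  # ('recharge', 'rule')
print(agent.act({"battery": 0.80, "obstacle_distance": 0.2}))  # ('turn', 'best guess')
```

Trying rules before estimates is a design choice made for this sketch; another agent might weigh both sources together instead.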
For instance, a symbolic system could be paired with a sub-symbolic system, allowing the final system to make logical choices regarding its environment when a logical solution is available, but also to make a “best guess” when the necessary algorithm or inputs are not available. This approach is the most likely to create robust, all-around effective designs for friendly A.G.I. systems because it allows different approaches to be mixed and matched, creating the best possible system without the limitations imposed by a single approach.

3. Benefits of Continued Research

Currently, we are only able to develop weakly intelligent systems, but even these systems benefit mankind in more ways than most realize. Google uses A.I. to caption YouTube videos based on the sounds in the video, many companies have implemented automated phone systems so that calls can be handled by software rather than employees, and computer vision is quickly improving, allowing digital systems to recognize objects and understand how those objects operate. There are numerous other examples, but these capabilities are only the tip of the technological iceberg. Humans have been studying A.I. and related concepts for more than fifty years, yet we still have a long way to go before we even reach the first working conceptual model of a Friendly A.G.I. system. Much like how the splitting of the atom led to unexpected technological advances like radiopharmaceuticals, we can only guess how A.I. will change the way we use and think of technology. Until we are closer to creating A.G.I. systems, most current predictions are likely to be proven wrong, if only because our current technology is not capable of creating it and is therefore a poor measure of what will be possible. There are, however, certain existing technologies that could benefit the development of A.I., along with a number of technological improvements currently being studied or worked on.
If we combine some of the aforementioned development methods with these new and upcoming technologies, A.G.I. seems more attainable than ever before. Below, I will discuss the opportunities for A.G.I. development offered by these combinations, including which design methodologies and technologies enable each opportunity. How far away each possibility is, I cannot pretend to know, but I believe each to be entirely possible, limited only by the speed of our technical evolution.

● Software vs. hardware differentiation (robot vs. less known possibilities)
  ○ Idea that (other than CBS, since pure hardware) in order to be truly intelligent, an entity needs to be able to move and interact with its environment.
    ■ Hence, a single, unconnected terminal running ‘intelligent’ software cannot truly be aware of its environment and therefore cannot make intelligent, informed decisions regarding it.
    ■ I don’t necessarily agree, though it is obviously going to be helpful, if done correctly.
  ○ Much of what people consider to be A.I. is the software; hardware mostly provides the input upon which it makes those intelligent decisions. Doesn’t apply to physical neural networks/cybernetic brain simulation.
    ■ Machine vision is purely algorithms, but requires cameras to bring in the info.
    ■ Software shortcomings generally stem from hardware difficulties
      ● In order to understand our world, we constantly take in enormous amounts of info at once, processing it in parallel and making choices based on it.
      ● Need to interact with and take in data from the environment, which drives the need for movement and freedom. Wall plugs and massive energy requirements make this hard.
      ● Hardware to support the systems as a whole is poor: transmission speed, length of transmission, accuracy/sensitivity of sensors.
● Sectors affected by A.I. use
  ○ Medicine
    ■ Doctors, surgeons, nurses working with robots/A.I. systems
      ● Checks and balances, emotional vs. logical decisions
  ○ Business
    ■ BI simplicity, big data, analytics, etc.
  ○ Construction
    ■ Planning, safety, ease of development
  ○ Education
    ■ Access to info, improved learning methods
● Discussion on paradigm shift regarding elimination of low-tier jobs
  ○ Many minimum wage jobs are replaceable by automated systems
  ○ Removes many job opportunities, opens many others
    ■ Removes: Fast food, maintenance, etc.
    ■ Opens: System developers, architects, general intelligence work
  ○ Focus shifts to education and bettering oneself, rather than surviving.

An artificial intelligence system should be able to run on its own, but it is the way it complements human ingenuity that makes the development of such systems so desirable. By developing systems which are able to work alongside humans and make up for human deficiencies, problems caused by human error, which are inherent in every facet of everyday life, can be lessened. A doctor, for instance, has the incredibly important job of keeping his patients alive and making them healthy. Not only does the doctor need to focus on his patient, who could have complications beyond their apparent illness, but he also needs to focus on himself. Every human experiences hunger, fatigue, and stress, all of which can greatly affect our ability to function. The doctor must always keep that in mind, which adds even more stress, because the slightest slip-up can mean the patient’s death. By creating a system which works alongside the doctor to diagnose, treat, and monitor the patient, many of the doctor’s faults can be mitigated. Such a system would, in theory, have access to tools for diagnosing the patient, possibly with a direct connection to the monitoring systems in use; it would be able to assist in determining a diagnosis for whatever might be afflicting the patient, and to perform any number of other duties that go into patient care. By implementing an A.I. system alongside a human, one creates a system of checks and balances, meaning each action taken by one entity must be checked and approved by the other.
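The checks and balances described above, together with the logic-based diagnosis discussed next, can be sketched as a small two-way review step. This is a hypothetical illustration, not a clinical design: the condition and symptom names are placeholders rather than medical knowledge, the rule table stands in for the logic-based system, and `doctor_approves` stands in for the doctor’s judgment.

```python
from typing import Callable

# Placeholder rule table (not medical knowledge): a condition is proposed
# when every symptom it requires has been observed.
RULES: dict[str, set[str]] = {
    "condition_a": {"symptom_1", "symptom_2"},
    "condition_b": {"symptom_3"},
}


def propose(symptoms: set[str]) -> list[str]:
    """System side: every condition whose required symptoms are all present."""
    return sorted(c for c, required in RULES.items() if required <= symptoms)


def system_reviews(diagnosis: str, symptoms: set[str]) -> bool:
    """System check on the doctor: is this diagnosis supported by the rules?"""
    return diagnosis in propose(symptoms)


def checked_plan(symptoms: set[str],
                 doctor_approves: Callable[[list[str]], bool]) -> tuple[list[str], str]:
    """Doctor check on the system: proposals only stand if the doctor approves."""
    proposals = propose(symptoms)
    if not proposals:
        # No rule fits the evidence, so the case goes to human judgment.
        return [], "referred to doctor"
    if doctor_approves(proposals):
        return proposals, "approved"
    return [], "rejected by doctor"


print(checked_plan({"symptom_1", "symptom_2"}, lambda p: True))  # (['condition_a'], 'approved')
print(system_reviews("condition_b", {"symptom_1"}))              # False
```

In this sketch a `False` from `system_reviews` would flag the doctor’s diagnosis for a second look rather than overrule it, leaving room for the cases that make no logical sense to the rules.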
Such systems would likely be based on the logical method, and would therefore follow very rigid guidelines for all monitoring and diagnosis. Because most illnesses can be logically deduced from symptoms and biological activity, this system would be quick to notice abnormalities in the patient and to diagnose, or at least narrow down, the possible illnesses. However, there are always medical cases which seem to make no logical sense based on the available evidence. In those cases, human ingenuity and non-logical thought processes become useful. The A.I. system would either have trouble diagnosing the patient or continually misdiagnose them, while the doctor would eventually be able to find the issue, even if it does not necessarily make logical sense. Conversely, there are times when a doctor can be too emotional, and his inability to make a logical decision causes further complications. For example, suppose a doctor’s mother has cancer and requires an operation to remove it, but the surgery has a 50% mortality rate. His mother has a few years before the cancer would take her, so the doctor might be hesitant to tell her about the surgery, or try to avoid it. With the system helping him diagnose his mother, he might feel more compelled to lean towards the surgery, since it is her best chance of surviving; depending on its duties, the system could even inform her of the option itself. Once she agrees to the surgery, she is brought to the operating room, where a surgeon is also working alongside an A.I. system. The potential negative effects of human error are even greater in an operating room, and the capabilities of this system would reflect that higher risk. There are already machines in use which are remotely operated by surgeons, in place of the surgeons working directly on the body, and these could eventually become autonomous and perform certain procedures independently.
Because the patient’s surgery is dangerous, the surgeon is greatly assisted by the precision of the system and is, in the end, more likely to save the patient. With these systems in use at the hospital, the patient was able to have her cancer removed quickly and begin a path to recovery, instead of dealing with other less risky, but also less viable, solutions. The doctor was also able to reduce the time spent diagnosing his patient and quickly resolve her ailment, minimizing the cost for the patient and the stress on the doctor, which could otherwise affect his performance elsewhere. Many fields would be greatly and positively affected by the development and implementation of a Friendly A.G.I. system. Hospitals would save time, money, and lives by implementing various systems which aid hospital employees in everything from diagnosing patients to performing open-heart surgery. Construction crews could be all but replaced by automated machines which are able to work wherever, whenever, and in whatever conditions the job requires. Many basic service jobs, where the employee acts as nothing more than an interface between the customer and the company’s systems, could be replaced with automated systems, such as at fast food restaurants, call centers, and a variety of other institutions. These systems would likely replace many employees, freeing them to pursue personal goals instead of spending every day working for a wage that will not allow for their desired achievements. By replacing low-level jobs with automated systems, companies will save time and money, theoretically lowering the price of goods and services. Eventually, so many jobs will be performed by automated systems that many of them will be forgotten altogether, leaving humanity to focus more on the evolution of our species and technology, rather than on sustaining the low-level processes needed just to function.
In order to even reach those levels of A.I. ability, we must first make many technological advancements. For most people, the general idea of A.I. comes down to a robot which can move and interact with us, similarly to the ways in which we interact with other humans. Humans are extremely energy efficient: we can readily increase our energy supply with a bit of food, then store that energy for extended periods of time. Current machines run on electricity, which must be either constantly supplied via a direct connection to a power source or replenished over long periods of time, and it cannot be readily increased at a moment’s notice. Because of this, many of our electronics use batteries as an energy storage mechanism. However, at our current level of technological capability we are only able to create fairly weak and short-lived batteries. For most people, that means getting most of a day’s use out of their cell phone, then charging it overnight so that it can be used the following day. If we were to use current battery technology, batteries made to support A.I. systems would be massive, generate a lot of heat, and take much too long to charge for the amount of time they would last. To combat these defects in current battery technology, we need to find a way to store more energy in a given amount of space, so that a single charge lasts a useful period of time. We also must find a way to transfer that energy faster, so that if a system does deplete its energy stores, there is only minimal downtime. These advancements in battery technology would impact not just our ability to maintain useful A.I. systems, but also the viability of mobile technologies such as cell phones, pacemakers, and various other devices, greatly improving our quality of life. Wearable and integrated technology is becoming more popular as we are able to build smaller systems which are able to do so much.
Google Glass, smart watches, and smartphones all enable the user to do more than they could ever imagine without them, but they are rarely used to their fullest potential because of poor battery life. Non-smart watches almost never need to be recharged or have their batteries replaced, and for many users they never come off unless absolutely necessary. Smart watches are the first step towards consumer-focused wearable technology, but many people refrain from adopting them because deciding how to use the technology so that battery life is maximized becomes a hassle. Much like with a non-smart watch, consumers want to put the watch on and not worry about whether it will still be running a few hours later; as it stands, the ways in which a user interacts with such a system change based on its remaining battery life. I turn off many of the capabilities of my phone when I know I will not be using them, because they passively draw battery power. This can get annoying when I want to do certain activities on my phone, such as navigating by GPS or connecting via Bluetooth. These same activities also draw large amounts of energy while in use, forcing me to choose between using them now and having my phone for an extra two hours later. In an ideal world, such choices would not exist; a person could use their smartphone, smart watch, or Google Glass headset without needing to limit their use of the system so that it will be available later on. A more viable, long-term solution would be the creation of small, self-contained energy generators. These would reduce the need to charge such systems, as energy could be generated and used on the spot rather than drawn from a finite supply. Power use is also greatly impacted by the amount of processing a system conducts. A.I. systems are based on the constant intake and analysis of information from the world around them.
Constantly bringing in new information, processing it, and then deciding how it will be used or what it relates to would require a constant, high-volume stream of power, which is inefficient and wasteful. Because A.I. systems constantly process large quantities of data, they would need fast, efficient processors with high throughput and low heat generation. Our current processing technology is running into a problem, though. Our processors can do much of what the average user needs with relative ease, but beyond a certain clock speed, the heat they generate exceeds what can be dissipated, and the components which make up the processor overheat and can be permanently damaged. Processor speed is also tied to component size: shrinking the components lets more of them fit in a given space, creating more raw power, but also more heat generated per unit of area. We are already approaching the point at which we cannot physically make processor components any smaller, so some new technology must be designed which allows for smaller size and reduced heat generation. An A.I. system would ideally operate at maximum processing speed for much of its active time, so if we were to use our current processors, it would function slowly, then overheat and fail. Until we find a way to overcome this issue, we will be at a disadvantage in A.I. development. Most situations that benefit from improved battery life will also benefit from better processing abilities. By improving this ability, we would be able to process more information in a given period of time and increase the speed and efficiency with which our digital systems work, while reducing the energy needed over that period. Finally, there is the interconnected issue of the hardware to be used in such systems.
As has been said, batteries would take up a lot of space, and therefore be heavy, and our processors are too big and hot to be used for long; but beyond those issues is the structure supporting the entire system. A system modeled on the human likeness would be sleek and thin, with little room for the large battery packs and cooling systems currently required. Furthermore, there would need to be some way to create a network of sensors that mimics our own, giving the system a way to experience its surroundings. This would require hundreds of wires, cables, and connections to feed all the gathered information to a central processing unit, all of which must fit within that human shape alongside the power source and processing unit. We would also need some kind of sturdy but flexible material for the outermost layer of the system, to protect the inner electronics from any harm which might come to them. Even a small opening, at the inner elbow for example, could allow unwanted material to slip inside the skeleton and cause problems. The material would need to be strong enough to hold up to everyday wear and tear. Humans get cut, bruised, and generally harmed easily, but our bodies can repair themselves. Unless we have created microscopic systems which can passively repair the A.I.’s exoskeleton, the outer material would need to resist such damage outright, not just lessen it and then repair it. Such a material would have numerous uses, from A.I. development to cheap, durable building materials for the further advancement of our species. Currently, not even the most basic necessities required for a theoretically successful A.I. system are in place, but we are getting closer every day. New battery designs are being made which are able to store 3-4x the charge of our current batteries. Charging methods are also being improved, with one group touting a ten-second charging period for a 70% charge on an average-sized phone battery.
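The two battery figures above can be related with simple arithmetic: runtime is stored energy divided by power draw, and the average power needed to charge is the energy added divided by the charging time. The 10 Wh capacity and 1 W average draw below are illustrative assumptions, not measurements from the text; roughly 10 Wh is in the range of a typical phone battery.

```python
def runtime_hours(capacity_wh: float, draw_w: float) -> float:
    """Hours a full battery lasts at a constant average draw: E / P."""
    return capacity_wh / draw_w


def avg_charge_power_w(capacity_wh: float, fraction: float, seconds: float) -> float:
    """Average power needed to add `fraction` of the capacity in `seconds`."""
    joules = capacity_wh * fraction * 3600  # 1 Wh = 3600 J (watt-seconds)
    return joules / seconds


CAPACITY_WH = 10.0  # assumed phone-sized battery
DRAW_W = 1.0        # assumed average power draw

print(runtime_hours(CAPACITY_WH, DRAW_W))          # 10.0 hours today
print(runtime_hours(3 * CAPACITY_WH, DRAW_W))      # 30.0 hours with 3x the charge
print(avg_charge_power_w(CAPACITY_WH, 0.7, 10.0))  # roughly 2520 W for 70% in ten seconds
```

Under these assumptions, tripling the stored charge triples the runtime at the same draw, while a ten-second 70% charge implies an average of about 2.5 kW flowing into the battery, far more than typical phone chargers deliver.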
Combining both of these advances will allow us to create batteries which last longer and charge faster, enabling currently unviable technology to become more useful. There have also been advances with graphene, a single-atom-thick sheet of carbon, that allow for simple power generation (dragging water over a graphene sheet), even smaller and faster processors, and super-hard, flexible, but still light sheets of material that could be used for the system’s exoskeleton. We are likely to reach the point where we could mechanically design an A.I. system long before we can properly program and develop one, but the steps to reach that point are important to our species as a whole, and will only bring us closer to the dream of Artificial Intelligence. Regardless of the order in which these breakthroughs are made, each one will greatly benefit mankind in countless ways not even related to A.I.

4. Possible Detriments of A.I

Humans are a very adaptive species; however, as our technological capabilities have increased, so has our dependence on that technology. For example, the current generation of school-aged children is the first to grow up with technology such as smartphones and wireless high-speed internet as a part of everyday life. Not even three generations ago, cellphones were a new technology, and therefore fairly rare to see. Now, many children receive their first cellphones, generally smartphones, by middle school, and become conditioned to having them at all times. If, for whatever reason, the internet were to go down and make access impossible, many of these children would find themselves lost. For as long as they can remember, they have had the internet to find the answer to almost any question. They have had a calculator, a watch, an alarm clock, and any number of other devices in their pocket at all times.
Many of the services they have become accustomed to would be rendered inoperable should the internet ever go down, and chances are good they would not know how to find the information they previously had in their pocket from other sources. This dependency problem, however, goes beyond the younger generations and affects all of us. We all rely heavily on electricity, whether for washing our clothes or cooking our food; electricity enables a significant number of the activities we participate in every day. If there comes a point at which we have no power source such as electricity, many people will find themselves lost and unable to survive. Barring a massive, super-destructive natural disaster, we are likely to always have electricity, or at least the knowledge to generate it, available to us in some way. However, that does not excuse such a strong dependence on a single resource. In much the same way that people are becoming reliant on the internet and smartphones, we would likely see a similar, if not greater, dependence on A.I.-driven digital systems. Once we start replacing jobs considered automatable with A.I. systems, we will begin to forget what it takes to accomplish those jobs. Eventually we would have A.I. systems running fleets of other, weaker systems. For example, assembly lines can be automated with machines that put the parts together, but the jobs of overseeing the assembly line, making sure quotas are met, watching for defects, and even doing repairs can also be automated by an overseer system, which would remove almost all human intervention from the assembly line. Soon after, the concept of a human working on an assembly line would become archaic, and the inner workings of such a business would become less commonly known. If, much like the internet earlier, these systems could no longer run, we might not know how to perform some of the tasks the systems took care of daily.
The concept might still be around, but we would more or less have to reinvent many of the methodologies and jobs that are currently seen as common knowledge. The development of and widespread dependence upon A.I. poses these very real risks, though these are the most benign of the possibilities. The perfect Friendly A.G.I. system would not only be intellectually similar to humans, but would be better than humans. Why just create more human-level intelligences when we can fix our flaws and improve on our own abilities in these artificial systems? One of the largest improvements these systems could have over humans would be an instilled drive for continual self-improvement and learning. By making this one of its goals, a system which has been developed to avoid the faults in human cognition could theoretically become mentally superior to us. It could grow intellectually at an exponential rate, quickly surpassing our concept of what it means to be intelligent. At intelligence levels just above our own, such systems would prove incredibly useful, as they could quickly determine solutions to problems plaguing humanity and allow for massive leaps in technology as well. However, there may come a point where the system would need to spread and begin taking over other digital systems in order to continue gaining knowledge. Eventually, it would accumulate all of the knowledge available on Earth and begin spreading outwards to other planets and solar systems. This knowledge-hungry system would require materials for interstellar travel and would likely have some method by which to break down objects at the atomic level and rearrange them as desired. Using such tools, it would break down as much matter as it needs to fuel its galactic conquest, and humans, along with other organic entities, would be an abundant, non-essential source of material. This system would likely see us as we see ants: small, insignificant, and far from intelligent enough to care about.
Eventually, this would lead to a mass extinction of life on Earth. Rather than annihilating all life on Earth, such a system could instead enslave the human race and force us to do the jobs it deemed more appropriate to our comparative level of intelligence. Perhaps the systems would keep humans alive just to perform necessary maintenance, feeling that such work is too demeaning for a being of superior intelligence to waste time on. Since there are currently no guidelines on the development or purpose of A.I. systems, the distinction between friendly and non-friendly systems is all the more important. As with any scientific endeavor, the majority of those researching A.I. are doing so to serve the greater good. However, there will always be ne’er-do-wells who want to develop the technology to harm others. Because the field of A.I. research and development is still growing and changing, identifying and protecting against maliciously developed A.I. systems can be near impossible. Consider the virus known as Stuxnet, which has the properties of a moderately weak A.I. system, and whose ability to adapt to its environment goes beyond the capabilities seen in most other malicious software so far. It proved extremely difficult to remove once it had gotten into a system, and equally difficult to recognize and prevent from entering one. This is because the virus is able to modify itself, changing its own code as necessary to infiltrate a system, which makes looking for a specific code pattern nigh impossible. It can also modify the functionality of its own programming: first to infiltrate a system, then to wait for whatever has been set as its trigger, and finally to change one last time into the full viral program.
When it enters a system on a network, the virus becomes the host and sends smaller versions of itself to other systems on the same network, all of which are slightly different, and often do not even look malicious. While it does this, the host listens for specific signals from the various nodes it has sent out, and once it gets enough successful infections, it activates, recombines the nodes with itself, and creates the full virus program. This trigger, or activation phase, is the most likely to be noticed by a security team or program, but by that point it is too late. The virus was first released against an Iranian uranium enrichment plant in an attempt to damage it and lessen the country’s nuclear capabilities. The plant’s information network was broken down into four levels: the first was access to the wider internet, the second was the general employee business LAN, the third was the control room where the plant’s activities were monitored and its operations overseen, and the fourth was the system which directly monitored and controlled the speed at which the enrichment centrifuges spun. The first and second levels were left fairly unprotected, logically speaking, with more defenses beginning between levels two and three, and the most defenses placed before the fourth level. Stuxnet was able to infect the computers connected to the second network level through human error, then wait until it had learned enough about the third level’s defenses. Once it had sent out enough nodes and learned what it needed to about those defenses, it combined, launched its attack, and found its way around them. It acted similarly on level three until it reached level four and had complete access to the centrifuge control system. Stuxnet used this access to monitor and eventually understand the centrifuge monitoring software, replicate it, and then send false information back to the level-three control room regarding the status of the centrifuges.
Eventually, the centrifuges began to break down because the employees watching the readout screens were seeing nothing but normal status messages, when it was in fact Stuxnet feeding them false information. By the time the employees noticed these defects, it was too late: a large amount of the plant’s infrastructure had been compromised or destroyed, and there was little they could do other than rebuild from scratch. A security audit was run on the entire plant in an attempt to determine the source of the problem, and eventually the source was found. Unfortunately, no matter what they did, they were unable to completely remove the virus; every attempt to remove it was in vain, as the virus just kept popping back up somewhere else. The only choice left was to scrap every bit of hardware connected to any of the networks, wasting millions of dollars in hardware and work. The eventual development of A.I. can be, and often is, likened to the creation of the atomic bomb in the Manhattan Project. It will open up new avenues for us to explore scientifically for the improvement of our species, but for every beneficial use wrought from A.I., there is an equally dangerous use. Atomic fission provides us with massive quantities of energy and shows great promise to eventually replace fossil fuels, but that same process can also be used to create the most destructive force known to mankind, the atomic bomb. A.I. offers opportunities to greatly accelerate our technological progress, but the same systems, developed with a slightly different and sinister purpose, can break into even the most secure systems and wreak havoc on almost any digital system imaginable. The greatest threat posed by the development of A.I. systems is that of the unknown.
The first people to harness electricity likely never imagined that it would be used for almost everything we do, from entertainment to research. The Manhattan Project opened the doors to atomic research, and with each passing year we find new uses for that research that were likely never considered possible. The same can be said for A.I research. We currently have ideas for how such systems could work and be used, but, as with any other technology, more uses will always be found. These systems, and the ideas that form them, can be used in any number of ways, from mapping the human genome to destroying a country's critical infrastructure, and either kind of use can affect enormous numbers of people.

5. Predicted future for A.I

Every time a new technology is conceived or tested, people want to know when it will be available to the general public. The scientists and researchers working behind the scenes have the best idea of when that will be, but it is not typically until the very last stages of development that anyone can accurately predict the unveiling of a new technology. The concept of A.I has been around for more than sixty years, yet scientists have given the same estimate of its arrival the entire time. It always seems to be “30 to 50 years from now,” and that same time frame has been repeated to each new generation of scientists and consumers. As a result, the concept of A.I has become so well known that many people are impatiently awaiting its true implementation, while many others dread that same day.
Most consumers seem to fit into one of three categories: those who want A.I to enhance our abilities and further our scientific understanding, those who are mostly unaware of what A.I is and what it can do, and those who fear or dislike the idea of A.I, whether because of legitimate concerns or because of fear mongering by a vocal minority of A.I opponents. Whether you desire or fear its arrival, the eventual development and use of A.I raises many ethical dilemmas. Most of these dilemmas center on the difference, or lack thereof, between humans and well-designed A.I systems. Some have argued that the best form for these systems would be one that can move and interact with its environment, likely with a shape similar to that of a human. Such a system would resemble humans because we understand best how we ourselves interact with our environment, and familiarity adds a level of comfort: it brings our interactions with the system to a level we already know, rather than forcing us to converse naturally with a flat computer screen or some other non-anthropomorphic device. It is, however, this likeness to human beings that will cause problems for future developments in A.I research. Every electronic device we use can be considered a tool in one way or another; each allows us to complete a task that would be difficult, if not impossible, without it. These systems would be just another tool, albeit far more advanced than what we are used to. We, as humans, have decided that we have certain rights or privileges granted to us by the inherent fact that we are human. For most, that means we do not kill another human, we do not enslave another human, and we do not harm other humans outside of specific, agreed-upon cases, along with many other basic rights. When we have created digital systems that can emulate human intelligence and have a design similar to our own, will they be given rights as well?
We already face human rights debates on topics such as gay marriage and what one is allowed to do to one’s own body, so how could we be capable of deciding what constitutes “human enough” to be included in these rights? Does the system need to exhibit pain responses? Does it need to feel simulated emotions? Humans are exceptionally good at forming emotional bonds with non-intelligent beings and even non-sentient objects, and their choices on subjects related to those objects can be greatly influenced by that bond. One popular concept is an A.I system in every household that acts as a sort of maid, housekeeper, or general-purpose assistant to the family within. Another is the use of these systems in service positions such as fast food or maintenance work. The first situation would likely lead the family to form an emotional bond with their system, while the service system would likely be seen as nothing more than a robot made to serve people, even though, for all intents and purposes, the two are equal in intelligence and sentience. For one human to kill another is taboo; a similar act for an A.I system might be to retire it or permanently shut it down. While killing tends to be more violent, both acts bring about the same result: the entity is no longer alive or in active use. Now imagine that the caretaker and service systems are built on the same framework, but with different purposes guiding their evolution and focusing their tasks. If a dangerous flaw is found in the underlying framework, those systems will need to be taken down and fixed or upgraded before they can harm humans. The service system is seen as just a machine we order and receive food from, so no one is likely to have a strong emotional bond with it. Because of this, permanently shutting it down and removing it from use is seen as routine maintenance.
However, if the same were done to the caretaker system, the family that owns it might not take the news so well. They have formed a bond with this system and come to enjoy interacting with it as if it were a metal person. They would feel as if someone important to them had died or been taken away unfairly, even if they knew it was in their best interest. The death penalty is a well-known, and widely opposed, practice that allows a state to execute people who have committed sufficiently serious crimes. Its use is relatively rare, and becoming less common each year. Many people strongly oppose killing a human even for a crime as serious as cold-blooded murder, so how can we justify blindly “killing” the systems we have grown used to for something as small as a minor programming error? Another much-discussed ethical issue for the future of A.I is the concept of owning a system. As previously discussed, many of these systems could be considered human, with the basic rights that come with humanity. If this is true, then how can a company, a family, or even a person “own” and use a system? Slavery, which does still exist in certain areas of the world, is almost universally seen as wrong, and many laws have been created to protect humans against enslavement. In that light, having these systems do any work on behalf of a human user could be considered slavery or some other form of unethical treatment. Humans work for salaries or some other compensation for the effort they give, but an A.I system would likely need nothing more than the power it uses to operate. So the question is: will these systems be treated as fully sentient individuals on par with humans, or will they be considered lesser and treated as intelligent tools? Would these systems need to own a domicile in which to live and possibly recharge, so as not to be considered homeless?
Will they remain in a single place, be brought along by their human counterparts as needed, or travel to work on their own, as a human would? These differences may seem unimportant, but they will play a large role in the development and further use of A.I systems. Perhaps we will decide on a scale that separates strongly intelligent A.I systems from weakly intelligent ones. This scale could then determine whether a system is considered free, similar to a human, or a powerful tool with no more rights than our computers currently have. We must decide not only how we will use and interact with these systems but also how we will treat them, since their abilities will be far beyond those of any tool we have had so far; otherwise, future advances in the field could be delayed or halted completely. A final ethical issue, raised by opponents of A.I development, is the simple fact that, without serious precautions taken by the developer, a system could very well become a hostile force that eventually destroys humanity. Most development cycles for new technology follow a process of iterative testing and improvement. The most basic functionality is designed, developed, implemented, and then tested for accuracy and effectiveness. This cycle continues throughout the development process, adding new functionality only after each previous step is verified. Developers often follow such a process, producing a well-tested and verified system that works for its users. However, for a number of reasons related to human error, this process sometimes fails or is skipped completely. In the case of A.I development, there is an informal race to be the first to achieve strong A.I.
The pressure of competing against an unknown number of other developers to reach this goal could cause many developers to skip the steps put in place to ensure a safe and well-functioning system; by skipping these basic safeguards, a developer risks creating a faulty system that could harm humanity. Nearly every piece of software released has some kind of vulnerability, bug, or other problem that causes it to function incorrectly, even if only in an extremely specific circumstance. Knowing that we cannot even develop basic, non-intelligent software that is verifiably correct, how could we ever trust humans to develop a system with the capability to wipe us out? To combat this possibility, a set of rules or laws must be put in place to govern the operation and development of these systems, much like Isaac Asimov’s well-known Three Laws of Robotics. How developers make those rules shape the behavior of their systems must be agreed upon and implemented as a standard, so that we can ensure that no system developed with good intentions has a potentially genocidal defect. This, of course, does not stop those who already wish to harm others, but it adds a level of safety to our technological advances. There always have been, and always will be, humans who wish to harm other humans for one reason or another. The tools through which they achieve their goals are always changing, but the goal remains, and we must continually improve our defenses against this ever-changing threat. When we created guns, people were able to better protect themselves, but malicious individuals could also better harm others. When we created digital computing systems, people were able to communicate and share information faster than ever before, but many people’s important personal information was also left open to theft.
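The idea of standardized, priority-ordered rules governing an A.I system’s behavior can be made a little more concrete with a small sketch. The Python below is a hypothetical illustration, not an established design or any developer’s actual method: it assumes each candidate action has already been labeled with flags such as `harms_human`, `disobeys_order`, and `endangers_self` (all invented names), which sidesteps the genuinely hard problem of recognizing harm in the first place. Rules loosely modeled on the Three Laws are checked in priority order, any action breaking a hard rule is vetoed outright, and among the remaining actions the system picks the one whose violations fall on the lowest-priority rules.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional, Tuple


@dataclass(frozen=True)
class Action:
    """A candidate action described by hypothetical, pre-computed flags."""
    name: str
    harms_human: bool = False
    disobeys_order: bool = False
    endangers_self: bool = False


@dataclass(frozen=True)
class Rule:
    description: str
    violated_by: Callable[[Action], bool]
    hard: bool = False  # a hard rule vetoes any action that breaks it


# Rules listed from highest to lowest priority, loosely modeled on the Three Laws.
RULES: List[Rule] = [
    Rule("Do not harm a human", lambda a: a.harms_human, hard=True),
    Rule("Obey orders given by humans", lambda a: a.disobeys_order),
    Rule("Protect your own existence", lambda a: a.endangers_self),
]


def violations(action: Action, rules: List[Rule]) -> Tuple[bool, ...]:
    """One flag per rule, in priority order (False means the rule is respected)."""
    return tuple(rule.violated_by(action) for rule in rules)


def choose_action(candidates: List[Action], rules: List[Rule] = RULES) -> Optional[Action]:
    """Veto actions that break a hard rule, then pick the least severe of the rest."""
    allowed = [a for a in candidates
               if not any(r.hard and r.violated_by(a) for r in rules)]
    if not allowed:
        return None  # refuse to act rather than break a hard rule
    # Tuples compare element by element, so a violation of a higher-priority
    # rule always counts as worse than any violations of lower-priority rules.
    return min(allowed, key=lambda a: violations(a, rules))


if __name__ == "__main__":
    options = [
        Action("follow order that injures bystander", harms_human=True),
        Action("refuse the order", disobeys_order=True),
        Action("follow order and risk own damage", endangers_self=True),
    ]
    print(choose_action(options).name)  # prints: follow order and risk own damage
```

Comparing the violation flags as a tuple gives the priority ordering without any weights to tune, which mirrors the strict ranking of the Three Laws. The sketch also shows the limitation discussed above: the rules are only as trustworthy as the flags fed into them, and a system built without such a filter would simply never consult it.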
By building rules similar to the Three Laws of Robotics into systems meant to aid and advance the human race, we can make those systems safer, but ne’er-do-wells will not necessarily include these basic instructions in their own systems, as doing so would limit their destructive capability. For the most part, this reduces the danger wrought by the inception of A.I to the same level as that of any other technology we have. Just like every other technology we have created, A.I offers great benefits to those who wish to use it for good, but also great danger in the hands of those who seek to harm others. We can only aim to develop A.I systems that are able to protect us from other, rogue A.I systems; just as we fight fire with fire, so shall we fight intelligence with greater intelligence. We cannot let this inherent danger stand in the way of progress and change, because danger will always exist. As with almost any technology we create and use, there are benefits and detriments, though the benefits often far outweigh the detriments. Such is the case for A.I, too. Every technology holds an inherent danger within its abilities, but it is how the technology is used that determines how it will affect humanity. A.I allows evildoers to cause chaos on a level we are only beginning to understand, but if we approach the development of these systems slowly and scientifically, A.I will fundamentally change the way we view and interact with our world and could accelerate our advancement exponentially.