

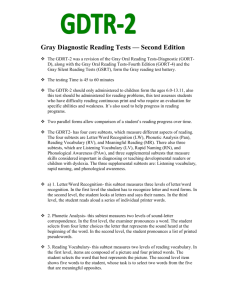

An Analysis of a Set of Three Tests Measuring Reading Comprehension Through the Awareness and Understanding of Some English Vocabulary

Gloria Bello
EDU 570 Assignment 1
Professor Ken Beatty
July 2nd, 2015

1. Introduction

The purpose of this paper is to analyze the effectiveness of three subtests of a progress test, defined as a test given "as part of an ongoing assessment procedure during the course of instruction" (Bailey, 1998, p. 39), designed to assess students' vocabulary awareness and comprehension as well as their reading comprehension. This analysis allows me to reflect on the skills, processes and knowledge that I want to assess, and to identify the most pertinent design (C-tests, cloze passages, fill-in-the-blanks, multiple choice) and scoring method for measuring each language construct. Knowing how to design appropriate tests that respond to students' learning and context needs supports the improvement of teaching and learning practices. It also encourages classroom research on performance assessment; on the validity and reliability of the tests usually applied to score students; on calculating item facility and item discriminability; and on the different impact of direct and indirect tests of language skills. Finally, the analysis of the test construction rests on the principles that guide communicative language testing (Swain, 1984).
Knowing these principles may teach me to build tests from existing theory and practice instead of starting from nowhere; to choose content that promotes communicative activities, motivation, substantiality, integrity and interactivity; and to do everything possible to elicit the test takers' best performance, for example through clear instructions on what to do and how to do it, realistic time expectations for completing the test, and any reference material the test requires. Finally, this work may help me to identify the effect a test has on the school's teaching practices. Teachers can move beyond knowing how to create better English tests to assessing their own classroom practice as well as that of other teachers. In this way, this work can also help other teachers to improve their test construction.

2. Subjects

The subjects for this test are sixth-grade students whose language abilities do not yet reach the A1 level of the Common European Framework of Reference (CEFR); only a small percentage of these learners can understand, spell and read short sentences. The group is made up of boys and girls between 10 and 14 years old. They study in the afternoon shift and, according to a survey conducted last year, most of them belong to socioeconomic strata 1 and 2. They are dedicated to their school studies; only a small percentage work during the other shift of the day. Most of them live with their own families, but 16% of the learners live in ICBF institutions, which are State facilities where children are protected if their parents do not take care of them. They all live in Bogotá, but not all of them are from this city. Some students come from other parts of Colombia, such as Medellín, Valledupar or Magdalena, and moved to Bogotá because of family issues or Colombian political problems such as displacement by the "guerrilla".
Regarding their learning, the sixth-grade students are drawn to art and sports; reading and writing are not their main focus, and neither is studying English. In classrooms of around 25 students, only 1 or 2 really like English and act accordingly.

3. Constructs

The constructs measured in these tests are vocabulary awareness, reading comprehension and vocabulary comprehension.

3.1 The First Construct: Vocabulary Awareness

Vocabulary awareness refers to the use of vocabulary acquisition strategies, especially the so-called "planning strategy" (Nation, 2001). According to Flores (2008), in this strategy "the learners choose what to focus on and when to focus on it". This construct fits the test in that students must apply a strategy (picture exploitation) in order to become aware of the vocabulary they need to write the sentences.

3.2 The Second Construct: Reading Comprehension

Reading comprehension has many definitions, but for the purpose of this test I take it to be "the process of constructing meaning by coordinating a number of complex processes that include word reading, word and world knowledge, and fluency" (Vaughn and Boardman, 2007, p. 2). Snow (2002) and Grellet (1981) also describe reading comprehension as a process of extracting and constructing meaning through a continuous interaction between the written language and the reader (Ajideh, 2003). Nunan (1999) highlights that this interaction involves linguistic (bottom-up) and background (top-down) knowledge, and Mikulecky (2008) explains that background knowledge is activated when readers compare the information in the text with their own prior experience and knowledge, "including knowledge of the world, cultural knowledge and knowledge of the generic structure" (Gibbons, 2002, p. 93).

This theory is observable in the reading comprehension subtest, since students need to construct meaning by interacting with the text. This includes reading a mutilated paragraph, which lets them identify which words the text contains and which are missing so that they can reconstruct the message. This process of word reading and word recognition activates both bottom-up and top-down reading processes: students draw on their content schemata and their paradigmatic competence (Savignon and Berns, 1984), as well as on the prior experience and formal knowledge they have gained from classroom instruction and activities on colors, body parts and clothes.

3.3 The Third Construct: Vocabulary Comprehension

Vocabulary in a language is more than the use of single words; it also involves understanding and using lexical chunks or units. For instance, "good afternoon" and "nice to meet you" contain more than one word but function as vocabulary with a formulaic use. In other words, "...vocabulary can be defined as the words of a language, including single items and phrases or chunks of several words which convey a particular meaning, the way individual words do. Vocabulary addresses single lexical items—words with specific meaning(s)—but it also includes lexical phrases or chunks" (Lessard-Clouston, 2013). The Lexical Approach holds that language learning should not be seen as a progression from grammar to vocabulary but as going beyond both: "Language consists not of a traditional grammar and vocabulary but often of multi-word prefabricated chunks" (qtd. in Thornbury, 1998, p. 4). This construct fits the test in that vocabulary is treated as part of the formulaic language used for communication: students use the vocabulary to express a message about a situational content. In this way, the lexical approach is observable in the test.

4. Purpose of the Tests

The entire test seeks to find out how much students have achieved in English during the second academic term (colors, clothing and body parts) after continuous classroom instruction. At the beginning of this academic year, a Cambridge EFL test (Cambridge English: Key for Schools, 2006) was applied to examine the students' current English knowledge. In the reading and writing sections, it was evident that students could neither recognize nor spell and write words, phrases and short sentences from a text; the results showed a very basic level of vocabulary comprehension and sentence writing. It was therefore considered necessary to encourage students to develop vocabulary awareness, reading comprehension and vocabulary comprehension, which became the three constructs of this test development project. The specific purposes for assessing these three constructs are:

4.1. To determine students' awareness of the vocabulary learned in class (colors, body parts and some garments) by having them write sentences about Cesar's appearance.
4.2. To evaluate the grammatical structure students use when writing those sentences: person + verb + complement.
4.3. To check students' understanding of the vocabulary by having them use it in a specific context: My Best Friend Carla.

5. The Three Tests

The following is the first draft of the three tests. Their aim is to measure sixth graders' awareness and comprehension of basic vocabulary such as colors, body parts and clothes, and to identify its use in specific written contexts. The complete test is available at the link below, although it is also shown and explained in this section of the paper.

https://docs.google.com/forms/d/1FUvmF8fsthLQUqxXEb572j46BG69Do04AWMB5VyXJo/viewform?usp=send_form

5.1. Subtest 1.
Writing About Cesar's Appearance: a subjectively scored test with 10 items and no answer choices (Appendix A). The goal of this part of the test is to identify the students' level of awareness of the vocabulary studied in class for describing physical appearance, in the third person and the simple present tense, using an image of a person as the input material.

5.2. Subtest 2.
Reading About My Best Friend Carla: an objectively scored fill-in-the-blanks test with 10 items (Appendix B). The goal of this part of the test is to have students read and comprehend a mutilated passage in which they must add vocabulary for colors and body parts to give meaning to the entire paragraph.

5.3. Subtest 3.
Identifying Tatti's Appearance: an objectively scored multiple-choice test with 10 items and four choices per item (Appendix C). The goal of this part of the test is to identify the students' level of comprehension of the vocabulary studied in class by fitting words into specific contexts. Students need to choose a word that gives meaning to each of the 10 sentences.

6. Scoring Procedures for Subtest 1

The following guide tells markers how to score each item of Subtest 1, which assesses students' vocabulary awareness through sentence writing. An analytic scoring approach is used: it evaluates "students' performances on a variety of categories" (Bailey, 1998, p. 190), namely content, organization, vocabulary, language use and mechanics. "The weights given to these components follow: Content: 13 to 30 points possible; organization: 7 to 20 points possible; vocabulary: 7 to 20 points possible; language use: 5 to 25 points possible; and mechanics: 2 to 5 points possible" (Bailey, 1998, p. 190). The maximum possible score is therefore 30 + 20 + 20 + 25 + 5 = 100 points. Here, the scale is applied to isolated sentences rather than to a composition.
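The weighting quoted above can be expressed as a small score-sheet check. The sketch below is only an illustration, assuming the five component ranges from Bailey (1998, p. 190); the names `WEIGHTS` and `analytic_total` are hypothetical and are not part of the Excel charts used for this test. It rejects any component score that falls outside its range and sums the rest into a total out of 100.

```python
# Minimal sketch of an analytic score sheet for Subtest 1, assuming the
# component ranges quoted from Bailey (1998, p. 190). Names are illustrative.
from typing import Dict, Tuple

# (minimum, maximum) points allowed for each writing component
WEIGHTS: Dict[str, Tuple[int, int]] = {
    "content": (13, 30),
    "organization": (7, 20),
    "vocabulary": (7, 20),
    "language_use": (5, 25),
    "mechanics": (2, 5),
}


def analytic_total(scores: Dict[str, int]) -> int:
    """Check each component against its range and return the total out of 100."""
    missing = set(WEIGHTS) - set(scores)
    if missing:
        raise ValueError(f"missing components: {sorted(missing)}")
    total = 0
    for component, (low, high) in WEIGHTS.items():
        value = scores[component]
        if not low <= value <= high:
            raise ValueError(f"{component}={value} is outside {low}-{high}")
        total += value
    return total


if __name__ == "__main__":
    best = {name: high for name, (_, high) in WEIGHTS.items()}
    print(analytic_total(best))  # 100
    example = {"content": 20, "organization": 12, "vocabulary": 10,
               "language_use": 10, "mechanics": 3}
    print(analytic_total(example))  # 55
```

Under these ranges the lowest possible total is 13 + 7 + 7 + 5 + 2 = 34 points.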
When the scale is applied to isolated sentences, it is essential to identify the writing components of the students' sentences, especially the components with the highest weights: content and language use. The descriptors for each category are:

CONTENT: Students are able to describe Cesar's appearance.
ORGANIZATION: Students write organized sentences following the grammatical structure person + verb + complement.
VOCABULARY: Students use the vocabulary learned in class, such as body parts, colors and clothes.
LANGUAGE USE: Students write coherent sentences in English to describe a person.
MECHANICS: Students use capital and lowercase letters and periods correctly.

These descriptors, together with the categories and score ranges, are stated more fully in the Excel charts created to evaluate each student's sentences. An example of a student's writing assessment is provided in Appendix D.

7. Pre-piloting the Three Subtests

The three tests were pre-piloted with two teachers.

Teacher 1: Yeny Franco. She studied Modern Languages (Spanish-English) and has experience in pedagogy and second language teaching. She worked as an English and Spanish teacher for 10 years in private schools and currently teaches these two subjects in a public school in the south of Bogotá. She has taught young children and, nowadays, teenagers. Teacher Franco is currently doing a Master's degree in Teaching English for Self-Directed Learning. Part of her view of teaching is that effective teaching involves the interaction of three major components of instruction (learning objectives, assessments and instructional activities) and requires adopting appropriate teaching roles to support the students' learning goals.

Teacher 2: Yesenia Moreno. She graduated from the Universidad de la Salle with a Bachelor's degree in Modern Languages.
She has taught English and Spanish in private schools to students in kindergarten, primary and high school. She has 10 years of experience as a Spanish and English teacher and is eager to improve her academic and professional practice as well as her English proficiency. For her, it is important to teach not only English grammar but also values such as respect, honesty, cooperation and integrity. She considers discipline and self-reflection vital strengths for reaching personal goals.

The teachers' comments follow. Most of them apply to all three subtests, so they are grouped as comments for Subtests 1, 2 and 3; a few comments concern only the subjective test.

7.1 Subtests 1, 2 and 3

Since the students are demonstrating their English reading performance, the instructions should be in English, for example as commands. English instructions can be complemented with examples for better understanding and positive washback. Each part should have a subtitle indicating the skill being evaluated, for example "Vocabulary section".

7.2 Subtest 1

The answer space should be structured as 10 lines on which students can write their sentences. It is necessary to explain that students should write a minimum of 10 complete sentences in English, since students tend to write in Spanish even when doing an English task. It is also important to analyze whether the presentation of Subtest 1 is appropriate for the students' English level, given that they are true-beginner sixth graders.

8. Administering the Test

The test was administered on May 28th, 2015, to 12 students from the group described in Section 2, "Subjects", at a public school in Bogotá, Colombia. Because I was on postnatal medical leave, the exam was administered online through a Google Form by the school's Technology teacher.
As a result, I received the students' responses easily and quickly. Some of the students' submitted answers are shown in Appendix E. The teacher did not report any problems with either the exam itself or the conditions in which it was taken.

9. Results from the Three Subtests

The three subtests were scored by the researcher. The subjectively scored subtest was also scored by a colleague, an experienced teacher who has worked with different populations: children, teenagers and adults. She has taught Spanish and English in private and state schools and currently teaches in a public school where a bilingual program has been set up under the National Bilingualism Policies; she and some foreign teachers have organized programs there to increase the students' English proficiency. The scoring results are as follows.

9.1 Subtest 1

Subtest 1 was evaluated with the Analytic Scoring Criteria, which assess the students' 10-sentence writing on content, organization, vocabulary, language use and mechanics. The descriptive statistics of the results (Appendix G) show a mean of 50.67 out of 100, so most students did not reach a high score. Since the median is 42.5 and the mode is 33, I would say that the students did not do well in writing. This subtest is scored out of 100 points, distributed as follows: "Content: 13 to 30 points possible; organization: 7 to 20 points possible; vocabulary: 7 to 20 points possible; language use: 5 to 25 points possible; and mechanics: 2 to 5 points possible" (Bailey, 1998, p. 190). Rater 2's results (Appendix H) are very similar: the range of the scores is 57, one point more than rater 1's range of 56.
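The inter-rater reliability figure of 0.77 reported in this section can be checked from the two raters' scores in Appendix H. This is a minimal sketch, assuming the standard Cronbach's alpha formula with each rater treated as one "item" and each student as one case; `cronbach_alpha`, `RATER_A` and `RATER_B` are hypothetical names for this illustration only. Because the same variance convention appears in the numerator and the denominator of the ratio, using sample or population variances gives the same alpha.

```python
# Minimal sketch: Cronbach's alpha for two raters, treating each rater as one
# "item" and each student as one case. Scores are transcribed from Appendix H.
import statistics
from typing import List, Sequence


def cronbach_alpha(ratings: Sequence[Sequence[float]]) -> float:
    """alpha = k / (k - 1) * (1 - sum of rater variances / variance of totals)."""
    k = len(ratings)
    if k < 2:
        raise ValueError("need at least two raters")
    n = len(ratings[0])
    if any(len(r) != n for r in ratings):
        raise ValueError("every rater must score the same students")
    # Total score per student across all raters
    totals: List[float] = [sum(r[i] for r in ratings) for i in range(n)]
    rater_variance = sum(statistics.variance(r) for r in ratings)
    return k / (k - 1) * (1 - rater_variance / statistics.variance(totals))


# Subtest 1 scores given to the same twelve students by the two raters
RATER_A = [49, 33, 60, 67, 33, 33, 90, 42, 47, 33, 33, 42]
RATER_B = [43, 42, 51, 85, 35, 37, 73, 48, 39, 33, 33, 89]

if __name__ == "__main__":
    print(round(cronbach_alpha([RATER_A, RATER_B]), 2))  # 0.77
```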
The modes of the two raters are identical (33) and their medians almost identical (42 and 42.5). To help students improve their writing, it would be important to clarify the subtest instructions and to emphasize the writing categories of the analytic scoring approach. In addition, these results appear reliable: the Cronbach's alpha obtained for the two raters' scores is 0.77, which indicates a reasonably high degree of consistency in how the two raters evaluated the same data (Appendix I). In other words, there is consistent inter-rater reliability between the two raters' scores. Finally, the frequency polygon (Table 1) shows a high number of students at the lowest scores: the higher the score, the fewer the students. It is important to find out which issues are most problematic in the students' sentence writing, taking into account the five writing components of the analytic scoring system.

9.2 Subtest 2

The frequency polygon (Table 2) of this objectively scored 10-item subtest, in which students choose a word from four options to fill in the blanks of a mutilated paragraph, shows that, as in Subtest 1, most students gave wrong answers when reading and completing the paragraph. Scores on this part of the test are even lower than on the first: the highest score was 60, while in Subtest 1 it was 89. The descriptive statistics for this subtest (Appendix J), a mean of 18.33, a mode of 20 and a median of 20, indicate that the students' knowledge of the vocabulary needed to describe physical appearance (colors, body parts and clothes) is too low for them to complete the paragraph. This may suggest that students do not understand the vocabulary that has been taught in class.
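The figures in Appendix J can be reproduced with Python's standard `statistics` module. This sketch is an illustration, not the spreadsheet actually used; `describe` is a hypothetical helper name. One detail worth knowing when comparing numbers: the variance in Appendix J matches the population formula (dividing by n), while the standard deviation matches the sample formula (dividing by n - 1), so the code computes each in that way.

```python
# Minimal sketch: recompute the descriptive statistics in Appendix J from the
# twelve students' scores, using only Python's standard library.
import statistics
from typing import Dict, List

SUBTEST_2 = [10, 0, 0, 60, 10, 20, 0, 30, 20, 30, 20, 20]
SUBTEST_3 = [20, 50, 20, 80, 20, 40, 50, 40, 30, 20, 30, 40]


def describe(scores: List[int]) -> Dict[str, float]:
    """Return the statistics reported in the appendix for one set of scores."""
    return {
        "mean": statistics.mean(scores),
        "median": statistics.median(scores),
        "mode": statistics.mode(scores),
        "range": max(scores) - min(scores),
        # Population variance (divide by n), as in the appendix
        "variance": statistics.pvariance(scores),
        # Sample standard deviation (divide by n - 1), as in the appendix
        "sd": statistics.stdev(scores),
    }


if __name__ == "__main__":
    for name, scores in (("Subtest 2", SUBTEST_2), ("Subtest 3", SUBTEST_3)):
        stats = describe(scores)
        print(name, {key: round(value, 2) for key, value in stats.items()})
```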
Better scores might be obtained by stressing the importance of observing the input material (the image of the little girl) very carefully, or by changing the input material used for this subtest. In addition, the Item Facility chart for Subtest 2 (Appendix K) shows that most items are very difficult for this group: items 11 and 18 have an I.F. of only 0.08, item 20 has an I.F. of 0, and items 12, 13, 15 and 19 sit at a doubtful 0.17. Only two items, 14 (0.33) and 16 (0.42), fall in the middle difficulty range. In conclusion, it would be better to rewrite most of the items, especially the one with an I.F. of 0, since ideal values range from 0.15 to 0.85 (Oller, 1979, cited in Bailey, 1998). For a more detailed analysis, I also examined the item discrimination (I.D.) between high and low scorers (Bailey, 1998) for this subtest (Appendix L). The data suggest that all the items would need to be rewritten for a criterion-referenced test, since the I.D. values are either 0 or negative (as low as -0.67), except for item 13 (0.33); that is, the items do not discriminate between high and low scorers. Oller (1979), cited in Bailey (1998), explains that "I.D. values range from +1 to -1, with positive 1 showing a perfect discrimination between high scorers and low scorers, and -1 showing perfectly wrong discrimination. An I.D. of 0 shows no discrimination or no variance whatsoever" (p. 135). To improve the I.D. of this subtest, the options could mix colors, body parts and clothes instead of drawing on a single category, which may confuse students and make the items more difficult. The Distractor Analysis chart (Appendix M) shows that all the options were chosen except option C for item 17 and option A for item 20.
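Item facility and item discrimination reduce to two short formulas: I.F. is the proportion of test takers who answer an item correctly, and I.D. is the number of correct answers in the high group minus the number in the low group, divided by the group size. This is a minimal sketch, assuming 12 test takers, groups of three, the correct-answer counts in Appendix K, and the high/low counts for items 13 and 14 read from Appendix N; the helper names are hypothetical.

```python
# Minimal sketch of item facility (I.F.) and item discrimination (I.D.) for
# Subtest 2. Function names are illustrative, not from any package.
from typing import Dict, List

N_STUDENTS = 12
GROUP_SIZE = 3  # top 3 and bottom 3 scorers

# Number of students who answered each item correctly (Appendix K)
CORRECT = {11: 1, 12: 2, 13: 2, 14: 4, 15: 2, 16: 5, 17: 3, 18: 1, 19: 2, 20: 0}


def item_facility(correct: int, n: int = N_STUDENTS) -> float:
    """Proportion of test takers who answered the item correctly."""
    return correct / n


def item_discrimination(high_correct: int, low_correct: int,
                        group_size: int = GROUP_SIZE) -> float:
    """(correct in high group - correct in low group) / group size, -1 to +1."""
    return (high_correct - low_correct) / group_size


def outside_range(facilities: Dict[int, float],
                  low: float = 0.15, high: float = 0.85) -> List[int]:
    """Items whose I.F. falls outside the 0.15-0.85 range attributed to Oller."""
    return [item for item, f in sorted(facilities.items()) if not low <= f <= high]


if __name__ == "__main__":
    facilities = {item: item_facility(c) for item, c in CORRECT.items()}
    for item, f in facilities.items():
        print(item, round(f, 2))
    print("Outside 0.15-0.85:", outside_range(facilities))
    # Appendix N: item 13 -> 1 high scorer and 0 low scorers correct
    print("I.D. item 13:", round(item_discrimination(1, 0), 2))
    # Appendix N: item 14 -> 0 high scorers and 2 low scorers correct
    print("I.D. item 14:", round(item_discrimination(0, 2), 2))
```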
In the logic of the multiple-choice format, options that attract no test takers, such as option C for item 17 and option A for item 20, are not working and should perhaps be changed; this is especially true of option A for item 20, which is the correct answer and yet was chosen by nobody. The Response Frequency Distribution (Appendix N) gives a better idea of how the distractors are functioning, and its results support those of the I.D. chart, in which no item discriminates at all. In these data, except for item 18, the high and low scorers never chose identical answers. However, despite these results, the low scorers seem to have chosen the correct answers more often than the high scorers, among whom only one person chose a correct answer. In sum, the high and low scorers differed substantially in the distractors they chose, so some revisions are needed to understand why only one high scorer chose a correct answer while, for several items, at least one low scorer did.

9.3 Subtest 3

In this objectively scored 10-item subtest, students complete each sentence by observing the input material and choosing, from four options, the word from the studied vocabulary that gives sense and meaning to the sentence; in other words, they need to use the studied vocabulary in context. The frequency polygon (Table 3) shows that, as in Subtests 1 and 2, only a few students gave correct answers. Although this part produced the highest score of the two objective subtests, many answers were still wrong. The statistics for this subtest (Appendix J) show a clear difference between the mean of Subtest 2 (18.33) and the mean of Subtest 3 (36.67), which suggests that the students performed better on this part of the test.
However, the mode (20) is the same low score as in Subtest 2, while the median rose from 20 to 35. All this indicates that the students' vocabulary comprehension (colors, body parts and clothes) is still too low for them to reach the goal. To help students improve these results, I consider it important to teach them picture exploitation, the strategy required by the input material of all three subtests; perhaps they did not observe the images carefully. The Item Facility data for Subtest 3 (Appendix K) show that most items have enough variance: items 22 and 30 are the easiest (I.F. = 0.50), and the rest are in the middle or acceptable difficulty range. Apart from these items, therefore, most of this subtest does not need to be rewritten or revised, since the students obtained better scores on it. The item discrimination (I.D.) values between high and low scorers (Bailey, 1998) for this subtest (Appendix L) do not show strong discrimination: only a few items are fairly solid, while items such as 21, 22, 24, 25, 27 and 30 do not discriminate and therefore need to be revised. The Distractor Analysis chart for this subtest (Appendix M) shows that all the options were chosen except option B for item 30; with this one exception, every option attracted at least one test taker. The Response Frequency Distribution (Appendix N) specifies how the distractors are functioning for the high and low scorers. In these data, every distractor was chosen by at least one low or high scorer and, unlike in Subtest 2, more students from both groups chose identical answers. However, item 30 showed only borderline variance.
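Finding options that nobody chose is a simple scan over the distractor counts. This sketch assumes the counts and answer keys transcribed from Appendix M (12 test takers per item); the data layout and the name `unused_options` are illustrative. It works on whole-group counts, so an option chosen only by middle-scoring students counts as used here even if no high or low scorer picked it in Appendix N.

```python
# Minimal sketch of a distractor check: list options that no test taker chose.
# Counts for options A-D and the answer key are transcribed from Appendix M.
from typing import Dict, List, Tuple

OPTIONS = "ABCD"

# item -> ((count A, count B, count C, count D), correct option)
DISTRACTOR_COUNTS: Dict[int, Tuple[Tuple[int, int, int, int], str]] = {
    11: ((3, 6, 2, 1), "D"), 12: ((4, 2, 4, 2), "B"), 13: ((2, 6, 2, 2), "A"),
    14: ((1, 4, 3, 4), "D"), 15: ((2, 3, 4, 3), "A"), 16: ((5, 2, 4, 1), "A"),
    17: ((3, 6, 0, 3), "A"), 18: ((2, 8, 1, 1), "D"), 19: ((2, 5, 3, 2), "A"),
    20: ((0, 8, 2, 2), "A"), 21: ((2, 4, 3, 3), "D"), 22: ((1, 6, 4, 1), "B"),
    23: ((1, 2, 5, 4), "C"), 24: ((1, 3, 4, 4), "D"), 25: ((2, 5, 2, 3), "B"),
    26: ((3, 3, 4, 2), "C"), 27: ((3, 2, 4, 3), "A"), 28: ((3, 2, 6, 1), "A"),
    29: ((3, 1, 3, 5), "D"), 30: ((1, 0, 5, 6), "D"),
}


def unused_options(
    table: Dict[int, Tuple[Tuple[int, int, int, int], str]]
) -> List[Tuple[int, str, bool]]:
    """Return (item, option, is_key) for every option that nobody chose."""
    result = []
    for item in sorted(table):
        counts, key = table[item]
        for option, count in zip(OPTIONS, counts):
            if count == 0:
                result.append((item, option, option == key))
    return result


if __name__ == "__main__":
    for item, option, is_key in unused_options(DISTRACTOR_COUNTS):
        label = "correct answer" if is_key else "distractor"
        print(f"Item {item}: option {option} ({label}) was chosen by nobody")
```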
In conclusion, the high and low scorers did not differ substantially, but some revisions are needed for the distractors that nobody chose, such as A and D for item 22, A for item 24, C for item 25, B for item 27, and A and B for item 30.

9.4 The Entire Test

The frequency polygon of the entire test (Table 4) shows that most scores fall between 0 and 100 out of 300 points, and very few students reached 50% of the total, that is, 150 points. The descriptive statistics (Table 5) corroborate what the frequency polygon shows: the mean, mode and median all suggest that most students obtained well under half of the total score. There is thus a consistent number of incorrect answers across the three subtests. This makes me think that not only the entire test but also the conditions in which it was administered should be reviewed: it was run by a different teacher, nobody knowledgeable in the subject was available to answer the students' questions about the test, and there was no extra explanation of the instructions, especially of what picture exploitation implies. Although most of the scores are concentrated in one part of the polygon, the standard deviation, or average amount of difference (Bailey, 1998), of the entire test suggests that the scores are not tightly clustered but spread across the lowest scores.

10. Conclusion

This original test development assignment was tremendously stimulating: it made me start thinking about how complex and serious the process of creating and evaluating language tests is. First, I would like to reflect on the importance of test instructions. They should be as clear and direct as possible. Since the students' English level is very low, it may be helpful to include clear examples of the expected answers in the instructions.
Another important issue observed in the test development is the stimulus material used and the corresponding tasks that students had to do. The results suggest that internal and external factors, in all the subtests, contributed to the poor results of most students (Table 4). One way to improve this is to model how to analyze the pictures of the subtests during classroom instruction, so that students know exactly what they need to look at and write. In addition, I could not administer the test in the classroom myself. This may have affected the results, since students' doubts could not be clarified by the Technology teacher who ran the test. Sometimes poor results are caused not by a lack of knowledge but by a lack of understanding of the test instructions. The results of piloting the tests also showed that a different way of evaluating the 10-sentence writing task is needed, since the analytic scoring approach was neither practical nor conducive to positive washback. The task and its scoring system could be used for an independent writing activity, since the results are very detailed and students would need to come back to receive specific feedback on them. A more direct writing task and scoring system should therefore be used in a progress test. It is also important to construct the items carefully so that the answer options do not affect face and content validity (Brown, 2005). The items could be rewritten using different kinds of categories within the four answer options, with an acceptable degree of difficulty for the students' current English level. In terms of Wesche's (1983) four components of a test, these tests used non-linguistic stimulus materials such as images. Students needed to analyze the images in order to do the tasks: writing sentences, completing a mutilated paragraph or completing sentences.
This alerts me that extraneous factors may have affected the students' results, perhaps because I was absent and did not explain the test myself: it was administered by a colleague because of my postnatal medical leave. The answer options should draw on different categories chosen according to the students' English level. The test was too difficult for the students' current English level, even though the vocabulary had been taught in class.

References

Ajideh, P. (2003). Schema theory-based pre-reading tasks: A neglected essential in the ESL reading class. The Reading Matrix, 3(1). Retrieved from http://www.readingmatrix.com/articles/ajideh/article.pdf

Alderson, J. C., & Urquhart, A. H. (1984). Reading in a foreign language. London, UK: Longman.

Bailey, K. M. (1998). Learning about language assessment: Dilemmas, decisions, and directions. Pacific Grove, CA: Heinle & Heinle.

Brown, J. D. (2005). Testing in language programs: A comprehensive guide to English language assessment (Chapter 10: Language test validity, p. 108). New York, NY: McGraw-Hill.

Cambridge English: Key for Schools. (2006). Retrieved February 27th from http://www.cambridgeenglish.org/images/165870-yle-starters-sample-papers-vol-1.pdf

Flores, M. (2008). Exploring vocabulary acquisition strategies for EFL advanced learners. Retrieved June 2nd, 2015, from http://digitalcollections.sit.edu/cgi/viewcontent.cgi?article=1192&context=ipp_collection

Gibbons, P. (2002). Scaffolding language, scaffolding learning: Teaching second language learners. Retrieved May 15th from http://www.heinemann.com/shared/onlineresources/e00366/chapter5.pdf

Grellet, F. (1981). Developing reading skills: A practical guide to reading comprehension exercises. Cambridge, UK: Cambridge University Press.

Lessard-Clouston, M. (2013). Teaching vocabulary. Retrieved April 5th, 2015, from http://www.academia.edu/2768908/Teaching_Vocabulary

Mikulecky, B. (2008). Teaching reading in a second language. Retrieved April 5th, 2015, from http://www.longmanhomeusa.com/content/FINAL-LO%20RES-Mikulecky-Reading%20Monograph%20.pdf

Nation, I. (2001). Learning vocabulary in another language. Cambridge, UK: Cambridge University Press.

Nunan, D. (1999). Second language teaching & learning. Heinle, Cengage Learning.

Snow, C. (2002). Reading for understanding: Toward a research and development program in reading comprehension. RAND Corporation.

Swain, M. (1984). Large-scale communicative language testing: A case study. In S. J. Savignon & M. S. Berns (Eds.), Initiatives in communicative language teaching (pp. 185-201). Reading, MA: Addison-Wesley.

Thornbury, S. (1998). The lexical approach: A journey without a map? Modern English Teacher. Retrieved June 6th, 2015.

Appendices

Appendix A: Subtest 1
Appendix B: Subtest 2
Appendix C: Subtest 3
Appendix D: Analytic Approach chart to evaluate the sentence writing from Subtest 1.
Appendix E: A sample of a student's Analytic Approach assessment of his 10-sentence writing from Subtest 1.
Appendix F: Some of the students' subtest answers sent through Google Docs.
Appendix G Results of Subtest 1 using the Analytic Scoring Approach and the corresponding descriptive statistics

Student                1    2    3    4    5    6    7    8    9    10   11   12
Subtest 1 score        43   42   51   85   35   37   73   48   39   33   33   89

Mean                   50.67
Median                 42.5
Mode                   33
Range                  89 - 33 = 56
Variance               373.39
Standard deviation     20.18

Appendix H Results of Subtest 1 by rater 1 and rater 2 and the corresponding descriptive statistics

Student                1    2    3    4    5    6    7    8    9    10   11   12
Rater 1                49   33   60   67   33   33   90   42   47   33   33   42
Rater 2                43   42   51   85   35   37   73   48   39   33   33   89

                       Rater 1         Rater 2
Mean                   46.83           50.67
Median                 42              42.5
Mode                   33              33
Range                  90 - 33 = 57    89 - 33 = 56
Variance               287.64          373.39
Standard deviation     17.71           20.18

Appendix I Cronbach's alpha for the Subtest 1 results of rater 1 and rater 2, used to identify the consistency (reliability) with which the two raters evaluated the same data.

Original Test Development Assignment

Appendix J Descriptive statistics of Subtests 2 and 3

Student                1    2    3    4    5    6    7    8    9    10   11   12
Subtest 2              10   0    0    60   10   20   0    30   20   30   20   20
Subtest 3              20   50   20   80   20   40   50   40   30   20   30   40

                       Subtest 2       Subtest 3
Mean                   18.33           36.67
Median                 20              35
Mode                   20              20
Range                  0 - 60          20 - 80
Variance               263.89          288.89
Standard deviation     16.97           17.75

Appendix K The item facility chart of Subtests 2 and 3

Subtest 2
Item                   11     12     13     14     15     16     17     18     19     20
Correct answers        1      2      2      4      2      5      3      1      2      0
I.F.                   0.083  0.167  0.167  0.333  0.167  0.417  0.250  0.083  0.167  0

Subtest 3
Item                   21     22     23     24     25     26     27     28     29     30
Correct answers        3      6      5      4      5      4      3      3      5      6
I.F.                   0.250  0.500  0.417  0.333  0.417  0.333  0.250  0.250  0.417  0.500

Appendix L The item discrimination chart of Subtests 2 and 3

Subtest 2 (high scorers: top 3; low scorers: bottom 3)
Item                       11     12     13     14     15     16     17     18     19     20
High scorers, correct      0      0      1      0      0      0      0      0      0      0
Low scorers, correct       0      1      0      2      1      2      0      1      1      0
I.D.                       0     -0.333  0.333 -0.667 -0.333 -0.667  0     -0.333 -0.333  0

Subtest 3 (high scorers: top 5; low scorers: bottom 5)
Item                       21     22     23     24     25     26     27     28     29     30
High scorers, correct      0      0      1      0      1      2      1      1      2      1
Low scorers, correct       1      2      0      1      1      0      1      0      1      1
I.D.                      -0.333 -0.667  0.333 -0.333  0      0.667  0      0.333  0.333  0

Appendix M The distractor analysis chart of Subtests 2 and 3 (+ marks the correct answer)

Subtest 2
Item   A     B     C     D
11     3     6     2     1+
12     4     2+    4     2
13     2+    6     2     2
14     1     4     3     4+
15     2+    3     4     3
16     5+    2     4     1
17     3+    6     0     3
18     2     8     1     1+
19     2+    5     3     2
20     0+    8     2     2

Subtest 3
Item   A     B     C     D
21     2     4     3     3+
22     1     6+    4     1
23     1     2     5+    4
24     1     3     4     4+
25     2     5+    2     3
26     3     3     4+    2
27     3+    2     4     3
28     3+    2     6     1
29     3     1     3     5+
30     1     0     5     6+

Appendix N The response frequency distribution chart of Subtests 2 and 3 (* marks the correct answer)

Response Frequency Distribution of Subtest 2
Item   Group          A     B     C     D
11     High scorers   1     2     0     0*
       Low scorers    1     1     1     0
12     High scorers   0     0*    2     1
       Low scorers    1     1     0     1
13     High scorers   1*    2     0     0
       Low scorers    0     1     1     1
14     High scorers   1     2     0     0*
       Low scorers    0     0     1     2
15     High scorers   0*    1     1     1
       Low scorers    1     1     1     0
16     High scorers   0*    0     3     0
       Low scorers    2     0     0     1
17     High scorers   0*    2     0     1
       Low scorers    0     2     0     1
18     High scorers   1     2     0     0*
       Low scorers    1     2     0     0
19     High scorers   0*    2     1     0
       Low scorers    1     2     0     0
20     High scorers   0*    2     1     0
       Low scorers    0     2     1     0

Response Frequency Distribution of Subtest 3 (items 21 to 30; high scorers and low scorers)
High Scorers Low Scorers A B C D 0 1 0 0 1 0 0 0 1 0 0 0 1* 1 1* 0 0 1 0 0 2 1 0* 2 0 1 1 1 1* 1 1 2 0 0 1 0 0 1 0 0 1 0 3 1 1* 0 2 1 0 0 2* 0 1 1 1 3 1 0 2 2 0* 1 0 0 1 2 0* 1 b 2 0 1 1 1 0 0 2* 1 1* 1

Tables
Table 1 Frequency Polygon for Subtest I Vocabulary Awareness: Describing Cesar's Appearance
Table 2 Frequency Polygon for Subtest II Reading Comprehension: Reading about my Best Friend Carla
Table 3 Frequency Polygon for Subtest III Vocabulary Comprehension: Identifying Tatti's Appearance
Table 4. Frequency Polygon for the Entire Test: What does he/she look like?
Frequency Polygon of the Entire Test (y-axis: number of students, 0 to 9; x-axis: score intervals 0-50, 51-100, 101-150, 151-200, 201-250 and 251-300)

Table 5. Summary of Descriptive Statistics for the Test and Subtests I through III

Statistics                 Entire test   I. Vocab. A.   II. Reading   III. Vocab. C.
Number of students         12            12             12            12
Total possible points      300           100            100           100
Mean (x-bar)               105.7         50.7           18.3          36.7
Mode                       83            33             20            20
Median                     90.5          42.5           20            35
Range                      73 - 225      33 - 89        0 - 60        20 - 80
Variance (s²)              1838.4        373.4          263.9         288.9
Standard deviation (s)     44.8          20.1           17            17.8

Table 5 Construct Definition Chart

Subtest 1: Writing About Cesar's Appearance
Construct: Vocabulary Awareness
Definition of the construct assessed: Vocabulary awareness calls for the use of vocabulary acquisition strategies, especially the one called the “planning strategy” (Nation, 2001): “The learners choose what to focus on and when to focus on it” (Flores, 2008).
Possible test method (item type): Subjective subtest: 10-sentence writing

Subtest 2: Reading About my Best Friend Carla
Construct: Reading Comprehension
Definitions of the construct assessed: To better understand this concept, I take into account several definitions of reading comprehension:
-“The process of constructing meaning by coordinating a number of complex processes that include word reading, word and world knowledge, and fluency” (Vaughn & Boardman, 2007, p. 2).
-“The process of simultaneously extracting and constructing meaning through interaction and involvement with written language” (Snow, 2002, p. 11).
-“Extracting the required information from it [a text] as efficiently as possible” (Grellet, 2006, p. 3).
-Nunan (1999) defines reading as an interactive process that involves both linguistic (bottom-up) and background (top-down) knowledge.
-“Reading is a conscious and unconscious thinking process. The reader applies many strategies to reconstruct the meaning that the author is assumed to have intended. The reader does this by comparing information in the text to his or her background knowledge and prior experience” (Mikulecky, 2008).
-“As a text participant, the reader connects the text with his or her own background knowledge – including knowledge of the world, cultural knowledge, and knowledge of the generic structure” (Gibbons, 2002, p. 93).
Possible test method (item type): Filling the blanks: Discrete Point Approach

Subtest 3: Identifying Tatti's Appearance
Construct: Vocabulary Comprehension
Definition of the construct assessed: Vocabulary in learning a language involves more than the use of single words; it also involves understanding and using lexical chunks or units. For instance, “good afternoon” and “nice to meet you” are expressions of more than one word that work as vocabulary with a formulaic use. In other words, “...vocabulary can be defined as the words of a language, including single items and phrases or chunks of several words which convey a particular meaning, the way individual words do. Vocabulary addresses single lexical items, words with specific meaning(s), but it also includes lexical phrases or chunks” (Lessard-Clouston, 2013). The Lexical Approach holds that language learning should not be seen as a progression from grammar to vocabulary; it goes beyond both: “Language consists not of a traditional grammar and vocabulary but often of multi-word prefabricated chunks” (qtd. in Thornbury, 1998, p. 4).
Possible test method (item type): Multiple Choice: Discrete Point Approach

Table 6 Swain's (1984) “Principles of Communicative Test Development”
Sections of the test: Section 1, Writing About Cesar's Appearance (Vocabulary Awareness); Section 2, Reading About my Best Friend Carla (Reading Comprehension); Section 3, Identifying Tatti's Appearance (Vocabulary Comprehension).

Start from Somewhere
-Section 1: The test is built on the role of vocabulary acquisition strategies in developing vocabulary awareness.
-Section 2: The test is built on an understanding of the different approaches to reading comprehension.
-Section 3: The test is built on the Lexical Approach and on what vocabulary acquisition implies in EFL learning.

Concentrate on Content
-Section 1: The content is based on the interaction between the stimulus material (an image) and the students' knowledge, so that they can write a 10-sentence description.
-Section 2: The content is based on the interaction between the reader and the text, as students choose the best option to complete a mutilated paragraph.
-Section 3: The content is based on the interaction between the stimulus material (an image) and 10 incomplete sentences.

Bias for Best
-Section 1: Colorful images to help students understand the vocabulary and the situational contexts; an example of a possible answer; adequate time to complete the task and to revise the work.
-Section 2: Colorful images to help students understand the vocabulary and the situational contexts; an example of a possible answer.
-Section 3: Colorful images to help students understand the vocabulary and the situational contexts; an example of a possible answer.

Work for Washback
-Section 1: The test highlighted the importance of exploiting pictures in teaching.
-Section 2: The test made an impact on the students' conception of English language testing using technology.
-Section 3: The test made an impact on the importance of teaching strategies to improve vocabulary, chunks and reading comprehension.

Name: ______________________________ Date: __________________________
EDU 570: CLASSROOM-BASED EVALUATION
SELF-ASSESSMENT CHECKLIST FOR THE ORIGINAL LANGUAGE TEST DEVELOPMENT PROJECT
This worksheet should serve as a checklist for you as you complete your original test development project. It should be copied and pasted into the end of your report, since Moodle will only allow you to upload one file when submitting an assignment. Please complete it as a self-assessment, using the following symbols:
+ = good to excellent work; no questions or doubts in these areas
= fair to good work; some doubts and/or some confusion here
- = poor to fair work; many doubts and/or much confusion
NA = not applicable in this case
These entries will not be “used against you.” The information will be used solely to help us improve the preparation for this assignment and to guide you in completing it. Please turn in this checklist at the very end of your completed project.
1. In developing my original language test, I have defined the construct(s) to be measured.
_____ The construct(s) to be measured is/are clearly defined and I used the professors' feedback.
_____ The test has been written with some real audience and/or purpose in mind.
_____ I have explained the purpose(s) of my test in the report.
_____ I have included the construct chart as an appendix at the end of my paper.
2. I have drafted a language test with three subtests.
_____ At least one subtest is subjectively scored.
_____ I have included a multiple-choice subtest consisting of ten items.
_____ I have included a key for the objectively scored portion(s) of the test as an appendix.
3. I have designed (or adapted) scoring procedures for the subjectively scored portion.
_____ I have written (or adapted) clear descriptors for the scoring levels.
_____ I have trained my additional rater using the descriptors.
_____ Since I used an existing rating scale, I have explained why I chose it.
_____ Since I adapted an existing rating scale, I have explained the adaptations I've made.
_____ I have cited the sources that influenced my scoring procedures.
4. I have developed a key for the objectively scored portion(s).
_____ I have taken the test myself.
_____ I have made certain that there is one and only one correct answer to the items in the objectively scored section(s).
5. I have pre-piloted my test
_____ ...with at least two native speakers or proficient speakers of the target language.
_____ I have checked their responses against my predicted answer key and obtained their feedback about the clarity of the instructions.
_____ I have revised the test as needed and duplicated it.
_____ If I designed the test for a class which I myself am not teaching, I have gotten the teacher's feedback on the draft.
6. I have administered my revised test in order to pilot test this version.
_____ I piloted my test with at least twelve learners of the target language.
_____ On the basis of the students' performance and direct feedback, I have determined the clarity of the instructions, stimulus material(s) and tasks.
7. I scored the test and analyzed the results.
_____ I have drawn frequency polygons representing the students' scores for each subtest and for the total test. They are included in an appendix at the end of the paper.
_____ I have calculated the descriptive statistics (mean, mode, median, range, variance, and standard deviation) for each subtest and for the total test, along with the total points possible for the total test and each subtest. I have included this information in a table as an appendix.
_____ I have evaluated the students' performance on the subjectively scored part of the test using at least two raters (myself and someone else).
_____ I have computed ID and IF for each item in the objectively scored subtest(s).
_____ I have computed the average ID and average IF for the objectively scored subtest(s).
_____ The ID and IF information is reported in a table in an appendix at the end of the paper.
_____ I have correctly interpreted and discussed the results of each of these analyses.
_____ I have computed inter-rater reliability for the subjectively scored portion(s) of the test.
_____ I have explained what the inter-rater reliability index tells me about my rating system.
_____ I have reported the correlations among the subtests in a correlation matrix in an appendix.
_____ I have correctly interpreted and explained the results of the statistics from the pilot testing.
8. I have written a concise, coherent and well documented report of my project.
_____ The body of my report is no more than five (5) pages long, typed, double-spaced, in twelve-point font (not counting the title page, appendices or reference list).
_____ Based on my analyses, I have discussed the test's strengths and weaknesses.
_____ I have analyzed my test in terms of the four traditional criteria (reliability, validity, practicality, and washback).
_____ I have included appropriate suggestions as to how my test could be improved in the future.
_____ My report clearly locates my work relative to the literature covered in the course and other appropriate research which I have found.
_____ The appendix has my chart about Wesche's (1983) four components of a language test.
_____ I have discussed my test in terms of Swain's (1984) four principles of communicative language testing (in a chart in an appendix).
_____ I have read and properly cited Bailey, Brown, Wesche, Swain and other authors.
_____ The construct definition chart, the rating scale, and the test itself are included in appendices.
_____ I have provided a complete and accurate reference list (using APA format).
_____ I have personally checked the reference list for completeness and accuracy.
_____ I understand that the grade is final and that I may not resubmit this paper to improve the grade.
_____ I have learned something by developing this original test and am proud of my work. =<)
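Section 7 of the checklist asks for IF and ID for each objectively scored item, plus their averages. The Python sketch below recomputes them from the counts in Appendices K and L using a common classroom definition: IF is the number of correct answers divided by the number of test takers (12), and ID is the high-scoring group's IF minus the low-scoring group's IF. The (high, low) pairs are a reading of the flattened Appendix L chart, and the group size of three is an assumption: it reproduces every I.D. value reported there, even though the Subtest 3 groups are labelled as the top and bottom five.

```python
"""Item facility (IF) and item discrimination (ID) for the objectively scored items.

A sketch under stated assumptions: counts are copied from Appendices K and L,
and the high- and low-scoring groups are assumed to hold three students each.
"""
from statistics import mean

N_STUDENTS = 12  # students who took the pilot test
GROUP_SIZE = 3   # assumed size of the high- and low-scoring groups

# Number of students answering each item correctly (Appendix K).
CORRECT = {
    11: 1, 12: 2, 13: 2, 14: 4, 15: 2, 16: 5, 17: 3, 18: 1, 19: 2, 20: 0,
    21: 3, 22: 6, 23: 5, 24: 4, 25: 5, 26: 4, 27: 3, 28: 3, 29: 5, 30: 6,
}

# (high-group correct, low-group correct) per item, as read from Appendix L.
GROUPS = {
    11: (0, 0), 12: (0, 1), 13: (1, 0), 14: (0, 2), 15: (0, 1),
    16: (0, 2), 17: (0, 0), 18: (0, 1), 19: (0, 1), 20: (0, 0),
    21: (0, 1), 22: (0, 2), 23: (1, 0), 24: (0, 1), 25: (1, 1),
    26: (2, 0), 27: (1, 1), 28: (1, 0), 29: (2, 1), 30: (1, 1),
}


def item_facility(correct, n=N_STUDENTS):
    """IF: proportion of all test takers who answered the item correctly."""
    return correct / n


def item_discrimination(high_correct, low_correct, group_size=GROUP_SIZE):
    """ID: IF in the high-scoring group minus IF in the low-scoring group."""
    return (high_correct - low_correct) / group_size


facility = {item: item_facility(c) for item, c in CORRECT.items()}
discrimination = {item: item_discrimination(*g) for item, g in GROUPS.items()}

if __name__ == "__main__":
    for label, items in [("Subtest 2", range(11, 21)), ("Subtest 3", range(21, 31))]:
        for item in items:
            print(item, f"IF={facility[item]:.3f}", f"ID={discrimination[item]:+.3f}")
        print(label, "average IF:", round(mean(facility[i] for i in items), 3),
              "average ID:", round(mean(discrimination[i] for i in items), 3))
```

Running it prints each item's IF and ID followed by the subtest averages. A negative ID marks an item that the low scorers answered correctly more often than the high scorers, which is usually a signal to revise the item or its distractors.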