A Taste of the Importance of Effect Sizes


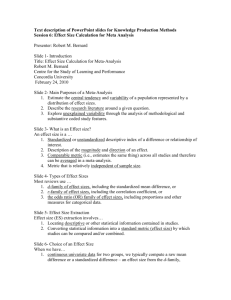

The Basics of Effect Size Extraction and Statistical Applications for Meta-Analysis

Robert M. Bernard, Philip C. Abrami

Concordia University

April 12, 2005

What is an Effect Size?

• A descriptive metric that characterizes the standardized difference (in SD units) between the mean of a control group and the mean of a treatment group (educational intervention)
• Can also be calculated from correlational data derived from pre-experimental designs or from repeated measures designs

Characteristics of Effect Sizes

• Can be positive or negative
• Interpreted as a z-score, in SD units, although individual effect sizes are not part of a z-score distribution
• Can be aggregated with other effect sizes and subjected to other statistical procedures such as ANOVA and multiple regression
• Magnitude interpretation: ≤ 0.20 is a small effect size, 0.50 is a moderate effect size and ≥ 0.80 is a large effect size (Cohen, 1992)

Zero Effect Size

[Figure: overlapping distributions of the control group and the intervention group, ES = 0.00]

Moderate Effect Size

[Figure: distributions of the control group and the treatment group, ES = 0.40]

Large Effect Size

[Figure: distributions of the control group and the intervention condition, ES = 0.85]

Percentage Interpretation of Effect Sizes

• ES = 0.00 means that the average treatment participant outperformed 50% of the control participants
• ES = 0.40 means that the average treatment participant outperformed 66% of the control participants (from the unit normal distribution)
• ES = 0.85 means that the average treatment participant outperformed 80% of the control participants

Independence of Effect Sizes

• Ideally, multiple effect sizes extracted from the same study should be independent of one another
• This means that the same participants should not appear in more
than one effect size
• In studies with one control condition and multiple treatments, the treatments can be averaged, or one may be selected at random
• Using effect sizes derived from different measures on the same participants is legitimate

Independence: Treatments & Measures

[Figure: design diagrams in R/X/O notation. One outcome: treatments X1, X2 and X3 are pooled (Xpooled) and compared with a single control group on O1. Two outcomes: a control group and a treatment group (X1) are each measured on O1 and O2, giving two effect sizes, one for O1 and one for O2.]

Effect Size Extraction

• Effect size extraction is the process of identifying relevant statistical data in a study and calculating an effect size based on those data
• All effect sizes should be extracted by two coders, working independently
• Coders’ results should be compared and a measure of inter-coder agreement calculated and recorded
• In cases of disagreement, coders should resolve the discrepancy in collaboration

ES Calculation: Descriptive Statistics

$$\Delta_{\text{Glass}} = \frac{\bar{Y}_{\text{Experimental}} - \bar{Y}_{\text{Control}}}{SD_{\text{Control}}}$$

$$d_{\text{Cohen}} = \frac{\bar{Y}_{\text{Experimental}} - \bar{Y}_{\text{Control}}}{\sqrt{(SD_E^2 + SD_C^2)/2}}$$

$$g_{\text{Hedges}} = \frac{\bar{Y}_{\text{Experimental}} - \bar{Y}_{\text{Control}}}{\sqrt{\dfrac{(N_E - 1)SD_E^2 + (N_C - 1)SD_C^2}{N_{\text{Tot}} - 2}}}\left(1 - \frac{3}{4(N_E + N_C) - 9}\right)$$

Examples from Three Studies

| Study | nE | nC | ME | MC | SDE | SDC | SDP | ΔG | dC | gH |
|---|---|---|---|---|---|---|---|---|---|---|
| S-1 | 41 | 41 | 62.5 | 59.3 | 7.0 | 5.6 | 6.3 | 0.57 | 0.51 | 0.50 |
| S-2 | 38 | 14 | 70.4 | 80.5 | 10.8 | 10.1 | 10.5 | –1.00 | –0.96 | –0.95 |
| S-3 | 19 | 22 | 62.5 | 48.6 | 14.1 | 5.6 | 12.2 | 2.48 | 1.14 | 1.11 |

• Study 1 (S-1): Equal ns and roughly equal standard deviations
• Study 2 (S-2): Different ns and roughly equal standard deviations
• Study 3 (S-3): Roughly equal ns and different standard deviations

Extracting Effect Sizes in the Absence of Descriptive Statistics

• Inferential statistics (t-test, ANOVA, ANCOVA, etc.)
when the exact statistics are provided
• Levels of significance, such as p < .05, when the exact statistics are not given (t can be set at the conservative t = 1.96; Glass, McGaw & Smith, 1981; Hedges, Shymansky & Woodworth, 1989)
• Studies not reporting sample sizes for control and experimental groups should be considered for exclusion

Other Codable Data Regarding Effect Size

• Type of statistical data used to extract effect size (e.g., descriptives, t-value)
• Type of effect size, such as posttest only, adjusted in ANCOVA, etc.
• Direction of the statistical test
• Reliability of dependent measure
• In pretest/posttest design, the correlation between pretest and posttest

Examples from CT Meta-Analysis

• Study 1: pretest/posttest, one-group design, all descriptives present
• Study 2: posttest only, two-group design, all descriptives present
• Study 3: pretest/posttest, two-group design, all descriptives present
• Coding sheet for 3 studies

Mean and Variability

[Figure: mean effect size (ES+) and variability]

Note: Results from Bernard, Abrami, Lou, et al. (2004) RER

Variability of Effect Size

• The sampling variance of each effect size (the square of its standard error) is estimated using the following equation:

$$\hat{\sigma}^2(d) = \frac{n_E + n_C}{n_E n_C} + \frac{d^2}{2(n_E + n_C)}$$

• The average effect size (d+) is tested using the following equation:

$$t = \frac{d_+}{\sqrt{\hat{\sigma}^2(d_+)}}$$

with N – 2 degrees of freedom (Hedges & Olkin, 1985).

Testing Homogeneity of Effect Size

$$Q = \sum_{i=1}^{k} \frac{(d_i - d_+)^2}{\hat{\sigma}^2(d_i)}$$

Note the similarity to a t-ratio. Q is tested using the sampling distribution of χ² with k – 1 degrees of freedom, where k is the number of effect sizes (Hedges & Olkin, 1985).

Homogeneity vs.
Heterogeneity of Effect Size

• If homogeneity of effect size is established, then the studies in the meta-analysis can be thought of as sharing the same effect size (i.e., the mean)
• If homogeneity of effect size is violated (heterogeneity of effect size), then no single effect size is representative of the collection of studies (i.e., the “true” average effect size remains unknown)

Example with Fictitious Data

| Study | nE | nC | YE | YC | YE – YC | SDP | d | σ̂²(d) | Q |
|---|---|---|---|---|---|---|---|---|---|
| Study 1 | 19 | 22 | 62.5 | 48.6 | 13.9 | 12.2 | 1.14 | 0.11 | 7.85 |
| Study 2 | 12 | 15 | 18.7 | 16.9 | 1.8 | 4.3 | 0.42 | 0.15 | 0.33 |
| Study 3 | 32 | 22 | 79.6 | 82.2 | –2.6 | 18.9 | –0.14 | 0.08 | 1.45 |
| Study 4 | 41 | 41 | 62.5 | 59.3 | 3.2 | 6.3 | 0.51 | 0.05 | 1.98 |
| Study 5 | 38 | 24 | 70.4 | 80.5 | –10.1 | 10.5 | –0.96 | 0.08 | 17.66 |
| Totals | 142 | 124 | | | | | d+ = 0.135* | | ∑Q = 29.28** |

*d+ is not significant, p > .05; **χ² is significant, p < .05

Graphing the Distribution of Effect Sizes

[Figure: forest plot of Studies 1 to 5 and their mean, in units of SD from –1.5 to 1.5; values below zero favor control, values above zero favor treatment]

Statistics in Comprehensive Meta-Analysis™

[Figure: Comprehensive Meta-Analysis output]

Note: Results from Bernard, Abrami, Lou, et al. (2004) RER. Comprehensive Meta-Analysis 1.0 is a trademark of BioStat®

Examining Study Features

• Purpose: to attempt to explain variability in effect size
• Any nominally coded study feature can be investigated
• In addition to mean effect size, variability should be investigated
• Study features with small ks may be unstable

Examining the Study Feature Gender

• Overall effect: d+ = +0.14, k = 60
• Males: d+ = –0.14, k = 18
• Females: d+ = +0.24, k = 32

ANOVA on Levels of Study Features

[Figure: ANOVA output for levels of study features]

Note: Results from Bernard, Abrami, Lou, et al. (2004) RER

Sensitivity Analysis

• Tests the robustness of the findings
• Asks the question: Will these results stand up when potentially distorting or deceptive elements, such as outliers, are removed?
• Particularly important to examine the robustness of the effect sizes of study features, as these are usually based on smaller numbers of outcomes

Meta-Regression

• An adaptation of multiple linear regression
• Effect sizes weighted by $1/\hat{\sigma}^2(d)$ in regression
• Used to model study features and blocks of study features with the intention of explaining variation in effect size
• Standard errors [$\hat{\sigma}^2(d)$], test statistics (z) and confidence intervals for individual predictors must be adjusted (Hedges & Olkin, 1985)

Selected References

Bernard, R. M., Abrami, P. C., Lou, Y., Borokhovski, E., Wade, A., Wozney, L., Wallet, P. A., Fiset, M., & Huang, B. (2004). How does distance education compare to classroom instruction? A meta-analysis of the empirical literature. Review of Educational Research, 74(3), 379–439.

Glass, G. V., McGaw, B., & Smith, M. L. (1981). Meta-analysis in social research. Beverly Hills, CA: Sage.

Hedges, L. V., & Olkin, I. (1985). Statistical methods for meta-analysis. Orlando, FL: Academic Press.

Hedges, L. V., Shymansky, J. A., & Woodworth, G. (1989). A practical guide to modern methods of meta-analysis. [ERIC Document Reproduction Service No. ED 309 952].
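
The three estimators on the ES Calculation slide and the percentage interpretation can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the authors' software: the function names are hypothetical, and the percentage reading assumes normally distributed scores, as the unit normal distribution reference on the slide implies.

```python
import math
from statistics import NormalDist


def glass_delta(mean_e, mean_c, sd_c):
    """Glass: mean difference expressed in control-group SD units."""
    return (mean_e - mean_c) / sd_c


def cohen_d(mean_e, mean_c, sd_e, sd_c):
    """Cohen: the divisor is the root of the unweighted mean of the two variances."""
    sd_pooled = math.sqrt((sd_e ** 2 + sd_c ** 2) / 2)
    return (mean_e - mean_c) / sd_pooled


def hedges_g(mean_e, mean_c, sd_e, sd_c, n_e, n_c):
    """Hedges: df-weighted pooled SD, then the small-sample correction factor."""
    pooled_var = ((n_e - 1) * sd_e ** 2 + (n_c - 1) * sd_c ** 2) / (n_e + n_c - 2)
    correction = 1 - 3 / (4 * (n_e + n_c) - 9)
    return (mean_e - mean_c) / math.sqrt(pooled_var) * correction


def percent_outperformed(es):
    """Percentage of control participants scoring below the average
    treatment participant, assuming unit-normal score distributions."""
    return 100 * NormalDist().cdf(es)


if __name__ == "__main__":
    # Descriptive statistics of Study 1 (S-1) from the three-study example.
    print(round(glass_delta(62.5, 59.3, 5.6), 2))
    print(round(cohen_d(62.5, 59.3, 7.0, 5.6), 2))
    print(round(hedges_g(62.5, 59.3, 7.0, 5.6, 41, 41), 2))
    print(round(percent_outperformed(0.85)))
```

Because the functions work from unrounded standard deviations, results can differ in the second decimal from values computed with a rounded pooled SD.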
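
The variance and homogeneity formulas from the Variability of Effect Size and Testing Homogeneity slides can be sketched the same way. The slides do not spell out how d+ is computed; the sketch below assumes the inverse-variance weighted mean (the same weights named on the Meta-Regression slide), and all function names are hypothetical.

```python
def variance_d(d, n_e, n_c):
    """Estimated sampling variance of an effect size d."""
    return (n_e + n_c) / (n_e * n_c) + d ** 2 / (2 * (n_e + n_c))


def weighted_mean(effects, variances):
    """Average effect size d+, assuming inverse-variance weights."""
    weights = [1 / v for v in variances]
    return sum(w * d for w, d in zip(weights, effects)) / sum(weights)


def q_statistic(effects, variances):
    """Homogeneity statistic Q: squared deviations from d+ divided by
    each effect size's variance, summed over the k effect sizes."""
    d_plus = weighted_mean(effects, variances)
    return sum((d - d_plus) ** 2 / v for d, v in zip(effects, variances))


if __name__ == "__main__":
    # d, nE and nC for the five studies in the fictitious-data example.
    studies = [(1.14, 19, 22), (0.42, 12, 15), (-0.14, 32, 22),
               (0.51, 41, 41), (-0.96, 38, 24)]
    effects = [d for d, _, _ in studies]
    variances = [variance_d(d, n_e, n_c) for d, n_e, n_c in studies]
    print(round(weighted_mean(effects, variances), 3))
    # Q is compared with the chi-square critical value for k - 1 = 4
    # degrees of freedom, which is 9.488 at the .05 level.
    print(q_statistic(effects, variances) > 9.488)
```

With the fictitious data, this weighting gives an average effect size of about 0.135 and a Q well above the critical value, which is the pattern the example describes: a non-significant mean with significant heterogeneity.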