defended - Moshe Looks


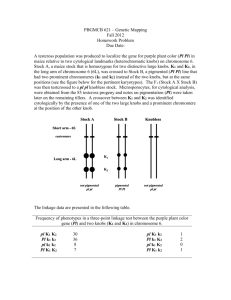

Competent Program Evolution. Dissertation defense, Moshe Looks, December 11th, 2006.

Synopsis: Competent optimization requires adaptive decomposition. This is problematic in program spaces. Thesis: we can do it by exploiting semantics. Results: it works!

General Optimization: Find a solution $s \in S$ that maximizes or minimizes $f(s)$, where $f: S \to \mathbb{R}$. To solve this faster than $O(|S|)$, we must make assumptions about $f$.

Near-Decomposability: Complete separability would be nice… (figure contrasting weaker and stronger interactions). Near-decomposability (Simon, 1969) is more realistic.

Exploiting Separability: Separability = independence assumptions. Given a prior over the solution space, represented as a probability vector:

1. Sample solutions from the model.
2. Update the model toward higher-scoring points.
3. Iterate...

This works well when interactions are weak.

Exploiting Near-Decomposability: The Bayesian optimization algorithm (BOA) represents the problem decomposition as a Bayesian network, learned greedily via a network scoring metric. Hierarchical BOA (hBOA) uses Bayesian networks with local structure, which allows smaller model-building steps and leads to more accurate models, and uses restricted tournament replacement, which promotes diversity. This solves the linkage problem. Competence: solving hard problems quickly, accurately, and reliably.

Program Learning: Solutions encode executable programs, and execution maps programs to behaviors. Find a program $p \in P$ that maximizes or minimizes $f(\mathrm{exec}(p))$, where $\mathrm{exec}: P \to B$ and $f: B \to \mathbb{R}$. To be useful, we must make assumptions about exec, $P$, and $B$.

Properties of Program Spaces: Open-endedness. Over-representation: many programs map to the same behavior. Compositional hierarchy: programs are intrinsically organized into subprograms. Chaotic execution: similar programs may have very different behaviors.

Properties of Program Spaces (continued): Simplicity prior: simpler programs are more likely. Simplicity preference: smaller programs are preferable. Behavioral decomposability: $f: B \to \mathbb{R}$ is separable / nearly decomposable. White-box execution: the execution function is known and constant.

Thesis: Program spaces are not directly decomposable. Leverage properties of program spaces as inductive bias, leading to competent program evolution.

Representation-Building: Organize programs in terms of commonalities, ignore semantically meaningless variation, and explore plausible variations. Common regions must be aligned, redundancy must be identified, and knobs must be created for plausible variations. What about changing the phase? Averaging two inputs instead of picking one? …

Statics & Dynamics: (figure: program (syntactic) space and behavior (semantic) space.) Representations span a limited subspace of programs. Conceptual steps in representation-building:

1. reduction to normal form (x, x + 0 → x);
2. neighborhood enumeration (generate knobs);
3. neighborhood reduction (get rid of some knobs).

Create demes to maintain a sample of many representations. A deme is a sample of programs living in a common representation. Intra-deme optimization uses the hBOA; inter-deme optimization is based on dominance relationships.

Meta-Optimizing Semantic Evolutionary Search (MOSES):

1. Create an initial deme based on a small set of knobs (i.e., the empty program) and random sampling in knob-space.
2. Select a deme and run hBOA on it.
3. Select programs from the final hBOA population that meet the deme-creation criterion (possibly displacing existing demes).
4. For each such program:
   1. create a new representation centered around the program;
   2. create a new random sample within this representation;
   3. add it as a deme.
5. Repeat from step 2.

Artificial Ant: (figure: the ant's starting position and the trail of food pellets on a grid.) Eat all food pellets within 600 steps. Existing evolutionary methods do not perform significantly better than random search, yet the space contains many regularities. To apply MOSES: three reduction rules for normal form (e.g., left, left, left → right); separate knobs for rotation, movement, and conditionals; no neighborhood reduction needed.

Artificial Ant (continued): computational effort by technique:

| Technique | Effort |
|---|---|
| Evolutionary Programming | 136,000 |
| Genetic Programming | 450,000 |
| MOSES | 23,000 |

How does MOSES do it? It searches a greatly reduced space and exploits key dependencies: "[t]hese symmetries lead to essentially the same solutions appearing to be the opposite of each other. E.g. either a pair of Right or pair of Left terminals at a particular location may be important." (Langdon & Poli, "Why ants are hard"). hBOA modeling learns linkage between rotation knobs. Eliminate modeling and the problem still gets solved, but with much higher variance, and computational effort rises to 36,000.

Elegant Normal Form (Holman, '90): a hierarchical normal form for Boolean formulae. The reduction process takes time linear in formula size. 99% of random 500-literal formulae were reduced by over 98%.

Syntactic vs. Behavioral Distance: Is there a correlation between syntactic and behavioral distance? For 5000 unique random formulae of arity 10 with 30 literals each, compute the set of pairwise behavioral distances (truth-table Hamming distance) and syntactic distances (tree edit distance, normalized by tree size). Then repeat the same computation on the same formulae reduced to ENF. Results for arity 5 are qualitatively similar.
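The behavioral distance used in this experiment, truth-table Hamming distance, can be sketched in a few lines. This is a minimal illustration under a stated assumption: formulae are modeled as hypothetical Python callables over a tuple of bools, which is not how MOSES represents programs. The syntactic side (normalized tree edit distance) is omitted.

```python
from itertools import product
from typing import Callable, Sequence

# Hypothetical representation: a Boolean formula of arity n is modeled as a
# Python callable over a tuple of n bools (not how MOSES stores programs).
BoolFn = Callable[[Sequence[bool]], bool]


def truth_table(f: BoolFn, arity: int) -> list[bool]:
    """Evaluate f on all 2**arity input assignments, in a fixed order."""
    return [bool(f(bits)) for bits in product((False, True), repeat=arity)]


def behavioral_distance(f: BoolFn, g: BoolFn, arity: int) -> int:
    """Truth-table Hamming distance: count of assignments where f and g disagree."""
    return sum(a != b for a, b in zip(truth_table(f, arity), truth_table(g, arity)))


# Syntactically different, behaviorally identical (absorption law):
# x1 versus and(x1 or(x1 x2)).
f = lambda x: x[0]
g = lambda x: x[0] and (x[0] or x[1])

# A one-node syntactic edit (and -> or) that flips half the truth table.
h = lambda x: x[0] and x[1]
k = lambda x: x[0] or x[1]

print(behavioral_distance(f, g, 2))  # 0
print(behavioral_distance(h, k, 2))  # 2 (of 4 rows)
```

The first pair illustrates over-representation, where many programs share one behavior. The second pair shows how a minimal syntactic change can produce a large behavioral one.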
(Figure: syntactic vs. behavioral distance, for random formulae and for the same formulae reduced to ENF.)

Neighborhoods & Knobs: What do neighborhoods look like, behaviorally? For 1000 unique random formulae of arity 5 with 100 literals each, enumerate all neighbors (edit distance < 2) and compute each neighbor's behavioral distance from the source. Results for arity 10 are qualitatively similar. Neighborhoods in MOSES are defined based on ENF: neighbors are converted to ENF and compared to the original, which is used to heuristically reduce total neighborhood size. (Figure: behavioral distance of neighbors, for random formulae and for formulae reduced to ENF.)

Hierarchical Parity-Multiplexer: Study decomposition in a Boolean domain. A multiplexer function of arity $k_1$ is computed from $k_1$ parity functions of arity $k_2$, so the total arity is $k_1 k_2$. Hypothesis: parity subfunctions will exhibit tighter linkages. Result: computational effort decreases 42% with model-building (on 2-parity-3-multiplexer), and parity subfunctions (adjacent pairs) have the tightest linkages. Hypothesis validated.

Program Growth: (figures: 5-parity, minimal program size ~ 53; 11-multiplexer, minimal program size ~ 27.)

Where do the Cycles Go?

| Problem | hBOA | Representation-Building | Program Evaluation |
|---|---|---|---|
| 5-Parity | 28% | 43% | 29% |
| 11-multiplexer | 5% | 5% | 89% |
| CFS | 80% | 10% | 11% |
| Complexity | $O(N \cdot l^2 \cdot a^2)$ | $O(N \cdot l \cdot a)$ | $O(N \cdot l \cdot c)$ |

Here $N$ is the population size, $O(n^{1.05})$; $l$ is the program size; $a$ is the arity of the space; $n$ is the representation size, $O(a \cdot \text{program size})$; and $c$ is the number of test cases.

Supervised Classification: Goals: accuracies comparable to SVM, superior accuracy vs. GP, and simpler classifiers vs. SVM and GP.

How much simpler? Consider average-sized formulae learned for the 6-multiplexer.

MOSES (21 nodes, max depth 4): `or(and(not(x1) not(x2) x3) and(or(not(x2) and(x3 x6)) x1 x4) and(not(x1) x2 x5) and(x1 x2 x6))`

GP, after reduction to ENF! (50 nodes, max depth 7): `and(or(not(x2) and(or(x1 x4) or(and(not(x1) x4) x6))) or(and(or(x1 x4) or(and(or(x5 x6) or(x2 and(x1 x5))) and(not(x1) x3))) and(or(not(x1) and(x2 x6)) or(not(x1) x3 x6) or(and(not(x1) x2) and(x2 x4) and(not(x1) x3)))))`

Datasets (taken from recent computational biology papers): Chronic fatigue syndrome (101 cases) is based on 26 SNPs, with genes either in homozygosis, in heterozygosis, or not expressed, giving 56 binary features. Lymphoma (77 cases) and aging brains (19 cases) are based on gene expression levels (continuous); the 50 most-differentiating genes were selected and preprocessed into binary features based on medians. All experiments are based on 10 independent runs of 10-fold cross-validation.

Quantitative Results: average classification test accuracy:

| Technique | CFS | Lymphoma | Aging Brain |
|---|---|---|---|
| SVM | 66.2% | 97.5% | 95.0% |
| GP | 67.3% | 77.9% | 70.0% |
| MOSES | 67.9% | 94.6% | 95.3% |

Benchmark performance: artificial ant: 6x less computational effort vs. EP, 20x less vs. GP. Parity problems: 1.33x less vs. EP and 4x less vs. GP on 5-parity; found solutions to 6-parity (none found by EP or GP). Multiplexer problems: 9x less vs. GP on 11-multiplexer.

Qualitative Results: Requirements for competent program evolution: all requirements for competent optimization, plus exploiting semantics, plus recombining programs only within bounded subspaces. Bipartite conception of problem difficulty: program-level, adapted from the optimization case; deme-level, a theory based on global properties of the space (deme-level neutrality, deceptiveness, etc.).
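The artificial-ant speedup factors in the benchmark summary follow from the computational-effort figures reported for the ant problem (EP 136,000; GP 450,000; MOSES 23,000). Writing $E$ for computational effort (notation introduced here only for this check), the ratios round as follows:

```latex
\frac{E_{\mathrm{EP}}}{E_{\mathrm{MOSES}}} = \frac{136{,}000}{23{,}000} \approx 5.9 \approx 6,
\qquad
\frac{E_{\mathrm{GP}}}{E_{\mathrm{MOSES}}} = \frac{450{,}000}{23{,}000} \approx 19.6 \approx 20
```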
Qualitative Results (continued): Representation-building for programs: parameterization based on semantics transforms program space properties to facilitate program evolution. Probabilistic modeling over sets of program transformations: models compactly represent problem structure.

Competent Program Evolution: Competent means not just good performance, but also explainability of good results and robustness. Vision: representations are important; program learning is unique; representations must be specialized based on semantics. MOSES (meta-optimizing semantic evolutionary search) does this by exploiting semantics and managing demes.

Committee: Dr. Ron Loui (WashU, chair), Dr. Guy Genin (WashU), Dr. Ben Goertzel (Virginia Tech, Novamente LLC), Dr. David E. Goldberg (UIUC), Dr. John Lockwood (WashU), Dr. Martin Pelikan (UMSL), Dr. Robert Pless (WashU), Dr. William Smart (WashU).