Tackling Lack of Determination - National Institute of Statistical


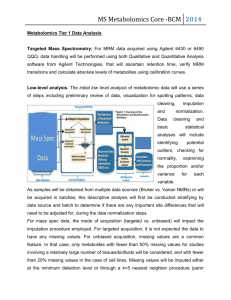

Tackling Lack of Determination
Douglas M. Hawkins, Jessica Kraker
School of Statistics, University of Minnesota
NISS Metabolomics, Jul 15, 2005

The Modeling Problem
• We have a dependent variable y, which is categorical, numeric, or binary.
• We have p ‘predictors’ or ‘features’ x.
• We seek a relationship between x and y
  – so we can predict future y values
  – to understand the ‘mechanism’ of x driving y.
• We have n ‘cases’ to fit and diagnose the model, giving an n by p+1 data array.

Classic Approaches
• Linear regression model. Apart from error, $y = \sum_j \beta_j x_j$
• Generalized additive model / neural net: $y = \sum_j g_j(x_j)$
• Generalized linear model: $y = g(\sum_j \beta_j x_j)$
• Nonlinear models, recursive partitioning

Classical Setup
• Number of features p is small.
• Number of cases n is much larger.
• Diagnosis, fitting, verification fairly easy.
• Ordinary/weighted least squares, GLM, GAM, neural net straightforward.

The Evolving Setup
• Huge numbers of features p,
• Modest sample size n, giving rise to the n<<p problem, seen in
  – molecular descriptors (QSAR)
  – microarrays
  – spectral data
  – and now metabolomics

Implications
• Detailed model checking (linearity, scedasticity) much harder.
• If even simple models (e.g. linear) are hard, more complex ones (e.g. nonlinear) are much harder.

Linear Model Paradox
• The larger p, the less you believe the linear normal regression model.
• But simple linear is surprisingly sturdy.
  – is best for linear homoscedastic
  – is OK with moderate heteroscedasticity
  – works for generalized linear model
  – ‘Street smarts’ like log transforming badly skewed features take care of much nonlinearity.

Leading You to Idea
• Using linear models is smart, even if for no more than a benchmark of other methods.
• So we concentrate on fitting the linear model $y = \sum_j \beta_j x_j = \beta^T x$ in vector/matrix form.
• Standard criterion is ordinary least squares (OLS), minimizing
  – $S = \sum_i (y_i - \beta^T x_i)^2$

Linear Models with n<<p
• Classical OLS regression fails if n<p+1 (the ‘undetermined’ setting).
• Even if n is large enough, linearly related predictors create a headache (different $\beta$ vectors give the same predictions).

Housekeeping Preliminary
• Many methods are scale-dependent. You want to treat all features alike.
• To do this, ‘autoscale’ each feature: subtract its average over all cases, and divide by the standard deviation over all cases.
• Some folks also autoscale y; some do not. Either way works.

Solutions Proposed
• Dimension reduction approaches:
  – Principal Component Regression (PCR) replaces the p features by k<<p linear combinations that it hopes capture all relevant information in the features.
  – Partial Least Squares / Projection to Latent Structures (PLS) uses k<<p linear combinations of features. Unlike PCR, these are found looking at y as well as x.

Variable Selection
• Feature selection (e.g. stepwise regression) seeks a handful of relevant features, keeps them, tosses all others.
  – Or, we can think of it as keeping all predictors, but forcing ‘dropped’ ones to have $\beta = 0$.

Evaluation
• Variable subset selection is largely discredited. Overstates value of retained predictors; eliminates potentially useful ones.
• PCR is questionable. No law of nature says its first few components capture the dimensions relevant to predicting y.
• PLS is effective; computationally fast.

Regularization
• These methods keep all predictors, retain least squares criterion, ‘tame’ fitted model by ‘imposing a charge’ on coefficients.
• Particular cases
  – ridge charges by square of the coefficient
  – lasso charges by absolute value of coefficient.

Regularization Criteria
• Ridge: Minimize $S + \lambda \sum_j \beta_j^2$
• Lasso: Minimize $S + \mu \sum_j |\beta_j|$
where $\lambda$, $\mu$ are the ‘unit prices’ charged for a unit increase in the coefficient’s square or absolute value.

Qualitative Behavior - Ridge
• Ridge, lasso both ‘shrinkage estimators’. The larger the unit price of coefficient, the smaller the coefficient vector overall.
• Ridge shrinks smoothly toward zero. Usually coefficients stay non-zero.

Qualitative Behavior - Lasso
• Lasso gives ‘soft thresholding’. As unit price increases, more and more coefficients become zero.
• For large $\mu$ all coefficients will be zero; there is no model.
• The lasso will never have more than n non-zero coefficients (so can be thought of as giving feature selection).

Correlated predictors
• Ridge, lasso very different with highly correlated predictors. If y depends on x through some ‘general factor’
  – Ridge keeps all predictors, shrinking them
  – Lasso finds one representative, drops remainder.

Example
• Little data set. A general factor involves features x1, x2, x3, x5 while x4 is uncorrelated. The dependent y involves the general factor and x4. Here are the traces of the 5 fitted coefficients as functions of $\lambda$ (ridge) and $\mu$ (lasso).

[Figure: Correlated predictors - Ridge. Coefficient traces (legend: x1, x2, x4, x5, x6) against lambda, 0 to 150; vertical axis 0.0 to 0.6.]

[Figure: Correlated predictors - Lasso. Coefficient traces (legend: x1, x2, x4, x5, x6) against mu, 0 to 200; vertical axis 0.0 to 0.6.]

Comments - Ridge
• Note that ridge is egalitarian; it spreads the predictive work pretty evenly between the 4 related features.
• Although all coefficients go to zero, they do so slowly.
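
A minimal Python sketch of the ridge and lasso criteria above, assuming NumPy is available. The synthetic data only imitate the structure of the little data set (four features driven by one general factor, plus an unrelated x4); the seed, sample size, noise level and all function names are illustrative assumptions, not the authors' data or code. Ridge uses its closed-form solution; the lasso is fitted by plain cyclic coordinate descent, which is only one of several possible algorithms.

```python
import numpy as np

def autoscale(X):
    """Subtract each column's mean and divide by its standard deviation."""
    return (X - X.mean(axis=0)) / X.std(axis=0)

def ridge(X, y, lam):
    """Minimize S + lam * sum(b_j**2) by solving (X'X + lam*I) b = X'y."""
    return np.linalg.solve(X.T @ X + lam * np.eye(X.shape[1]), X.T @ y)

def lasso(X, y, mu, n_sweeps=500):
    """Minimize S + mu * sum(|b_j|) by cyclic coordinate descent."""
    b = np.zeros(X.shape[1])
    col_ss = (X ** 2).sum(axis=0)
    for _ in range(n_sweeps):
        for j in range(X.shape[1]):
            partial = y - X @ b + X[:, j] * b[j]   # residual ignoring feature j
            rho = X[:, j] @ partial
            # Soft thresholding: the coefficient is exactly zero when |rho| <= mu/2.
            b[j] = np.sign(rho) * max(abs(rho) - mu / 2.0, 0.0) / col_ss[j]
    return b

# Synthetic stand-in for the slide's "little data set" (all values hypothetical).
rng = np.random.default_rng(0)
n = 50
factor = rng.normal(size=n)                      # the 'general factor'
x4 = rng.normal(size=n)                          # unrelated feature
related = [factor + 0.1 * rng.normal(size=n) for _ in range(4)]
# Column order: x1, x2, x3, x4, x5
X = autoscale(np.column_stack(related[:3] + [x4] + related[3:]))
y = factor + x4 + 0.1 * rng.normal(size=n)
y = y - y.mean()

for lam in (1.0, 50.0, 150.0):
    print("ridge lambda=%6.1f" % lam, np.round(ridge(X, y, lam), 3))
for mu in (3.0, 20.0, 60.0, 100.0):
    print("lasso mu    =%6.1f" % mu, np.round(lasso(X, y, mu), 3))
```

Printing the two traces should show ridge sharing weight across the related features and the lasso setting coefficients exactly to zero as mu grows; the exact values depend on the simulated data.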

Comments - Lasso
• Lasso coefficients piecewise constant, so look only at $\mu$ where coefficients change.
• Coefficients decrease overall as $\mu$ goes up; individual coefficients can increase.
• General factor term coeffs do not coalesce; x6 carries the can for them all.
• Note occasions where coeff increases when $\mu$ increases.

Elastic Net
• Combining ridge and lasso using criterion $S + \lambda \sum_j \beta_j^2 + \mu \sum_j |\beta_j|$ gives the ‘Elastic Net’.
• More flexible than either ridge or lasso; has strengths of both.
• For general idea, same example, $\lambda = 20$, here are coeffs as function of $\mu$. Note smooth near-linear decay to zero.

[Figure: Elastic; lambda=20. Coefficient traces (legend: x1, x2, x3, x4, x5) against mu, 0 to 200; vertical axis 0.00 to 0.28.]

Finding Constants
• Ridge, Lasso, Elastic Net need choices of $\lambda$, $\mu$. Commonly done with cross-validation
  – Randomly split data into 10 groups.
  – Analyze full data set.
  – Do 10 analyses in which one group is held out, and predicted from the remaining 9.
  – Pick the $\lambda$, $\mu$ minimizing prediction sum of squares.

Verifying Model
• Use a double cross-validation
  – Hold out one tenth of sample
  – Apply cross-validation to remaining nine-tenths to pick $\lambda$, $\mu$
  – Predict hold-out group
  – Repeat for all 10 holdout groups
  – Get prediction sum of squares

(If-Pigs-Could-Fly Approach)
• (If you have a huge value of n you can de novo split sample into a learning portion and a validation portion; fit the model to the learning portion, check it on the completely separate validation portion.
• This may give high comfort level, but is an inefficient use of limited sample.
• Inevitably raises suspicion you carefully picked halves that support hypothesis.)
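
The cross-validation and double cross-validation recipes above can be sketched in Python as follows, here for ridge only. This is an illustration under assumptions: NumPy is available, the data are simulated, and the function names, the grid of lambda values and the fold count are hypothetical choices rather than anything prescribed in the talk.

```python
import numpy as np

def ridge_fit(X, y, lam):
    """Return (intercept, coefficients) minimizing S + lam * sum(b_j**2)."""
    x_mean, y_mean = X.mean(axis=0), y.mean()
    Xc = X - x_mean
    b = np.linalg.solve(Xc.T @ Xc + lam * np.eye(X.shape[1]), Xc.T @ (y - y_mean))
    return y_mean - x_mean @ b, b

def make_folds(n, k, rng):
    """Randomly split case indices 0..n-1 into k groups."""
    return np.array_split(rng.permutation(n), k)

def press(X, y, lam, folds):
    """Prediction sum of squares: each group is predicted from the others."""
    total = 0.0
    for held_out in folds:
        train = np.setdiff1d(np.arange(len(y)), held_out)
        a, b = ridge_fit(X[train], y[train], lam)
        total += np.sum((y[held_out] - (a + X[held_out] @ b)) ** 2)
    return total

def pick_lambda(X, y, grid, k, rng):
    """Cross-validation: the grid value with the smallest prediction sum of squares."""
    folds = make_folds(len(y), k, rng)
    return min(grid, key=lambda lam: press(X, y, lam, folds))

def double_cv_press(X, y, grid, k, rng):
    """Outer loop holds out a group; inner CV on the remainder picks lambda."""
    total = 0.0
    for held_out in make_folds(len(y), k, rng):
        train = np.setdiff1d(np.arange(len(y)), held_out)
        lam = pick_lambda(X[train], y[train], grid, k, rng)
        a, b = ridge_fit(X[train], y[train], lam)
        total += np.sum((y[held_out] - (a + X[held_out] @ b)) ** 2)
    return total

# Simulated n << p data (hypothetical: 5 of 100 features carry signal).
rng = np.random.default_rng(1)
n, p = 40, 100
X = rng.normal(size=(n, p))
y = X[:, :5].sum(axis=1) + rng.normal(size=n)
grid = (0.1, 1.0, 10.0, 100.0, 1000.0)

lam = pick_lambda(X, y, grid, 10, rng)       # choose lambda by 10-fold CV
intercept, coef = ridge_fit(X, y, lam)       # then analyze the full data set
print("chosen lambda:", lam)
print("double-CV prediction sum of squares:", double_cv_press(X, y, grid, 10, rng))
```

The double-CV figure is the honest estimate of predictive error, because the held-out group plays no part in choosing lambda; the inner PRESS used to pick lambda tends to be optimistic.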

Diagnostics
• Regression case diagnostics involve:
  – Influence: How much do answers change if this case is left out?
  – Outliers: Is this case compatible with the model fitting the remaining cases?
• Tempting to throw up hands when n<<p, but deletion diagnostics, studentized residuals still available, still useful.

Robustification
• If outliers are a concern, potentially go to L1 norm. For robust elastic net, minimize $\sum_i |y_i - \beta^T x_i| + \lambda \sum_j \beta_j^2 + \mu \sum_j |\beta_j|$
• This protects against regression outliers on low-leverage cases; still has decent statistical efficiency.
• Unaware of publicly-available code that does this.

Imperfect Feature Data
• A final concern is feature data. Features form matrix X of order n x p.
• Potential problems are:
  – Some entries may be missing,
  – Some entries may be below detection limit,
  – Some entries may be wrong, potentially outlying.

Values Below Detection Limit
• Often, no harm replacing values below detection limit (BDL) by the detection limit.
• If features are log-transformed, this can become flaky.
• For a thorough analysis, use E-M (see rSVD below); replace BDL by the smaller of imputed value and detection limit.

Missing Values
• are a different story; do not confuse BDL with missing.
• Various imputation methods available; tend to assume some form of ‘missing at random’.

Singular Value Decomposition
• We have had good results using singular value decomposition $X = G H^T + E$ where matrix G holds ‘row markers’, H holds ‘column markers’, and E is an error matrix.
• You keep k<min(n,p) columns in G, H (but be careful to keep ‘enough’ columns; recall warning about PCR).

Robust SVD
• SVD is entirely standard, classical; the matrix G is the matrix of principal components.
• Robust SVD differs in two ways:
  – alternating fit algorithm accommodates missing values,
  – robust criterion resists outlying entries in X.

Use of rSVD
• The rSVD has several uses:
  – Gives way to get PCs (columns of G) despite missing information and/or outliers.
  – $G H^T$ gives ‘fitted values’ you can use as fill-ins for missing values in X.
  – E is matrix of residuals. A histogram can flag apparently outlier cells for diagnosis. Maybe replace outliers by fitted values or winsorize.

SVD and Spectra
• A special case is where features are a function (e.g. spectral data). So think $x_{it}$ where i is sample and t is ‘time’.
• Logic says finer resolution adds information, should give better answers.
• Experience says finer resolution dilutes signal, adds noise, raises overfitting concern.

Functional Data Analysis
• Functions are to some degree smooth. t, t-h, t+h ‘should’ give similar x.
• One approach: pre-process x (smoothing, peak-hunting etc.).
• Another approach: use modeling methods that reflect smoothness.

Example: regression
• Instead of plain OLS, use criterion like $S + \sum_t \phi\,(\beta_{t-1} - 2\beta_t + \beta_{t+1})^2$ (S is sum of squares); $\phi$ is a smoothness penalty.
• The same idea carries over to SVD, where we want our principal components to be suitably smooth functions of t.

Summary
• Linear modeling methods remain valuable tools in the analysis armory.
• Several current methods are effective, and have theoretical support.
• The least-squares-with-regularization methods are effective, even in the n<<p setting, and involve tolerable computation.

Some references
Cook, R.D., and Weisberg, S. (1999). Applied Regression Including Computing and Graphics, John Wiley & Sons Inc.: New York.
Dobson, A. J. (1990). An Introduction to Generalized Linear Models, Chapman and Hall: London.
Li, K-C.
and Duan, N. (1989). “Regression Analysis under Link Violation”, Annals of Statistics, 17, 1009-1052.
St. Laurent, R.T., and Cook, R.D. (1993). “Leverage, Local Influence, and Curvature in Nonlinear Regression”, Biometrika, 80, 99-106.
Wold, S. (1993). “Discussion: PLS in Chemical Practice”, Technometrics, 35, 136-139.
Wold, H. (1966). “Estimation of Principal Components and Related Models by Iterative Least Squares”, in Multivariate Analysis, ed. P.R. Krishnaiah, Academic Press: New York, 391-420.
Rencher, A.C., and Pun, F. (1980). “Inflation of R² in Best Subset Regression”, Technometrics, 22, 49-53.
Miller, A. J. (2002). Subset Selection in Regression, 2nd ed., Chapman and Hall: London.
Tibshirani, R. (1996). “Regression Shrinkage and Selection via the LASSO”, J. R. Statistical Soc. B, 58, 267-288.
Zou, H., and Hastie, T. (2005). “Regularization and Variable Selection via the Elastic Net”, J. R. Statistical Soc. B, 67, 301-320.
Shao, J. (1993). “Linear Model Selection by Cross-Validation”, Journal of the American Statistical Association, 88, 486-494.
Hawkins, D.M., Basak, S.C., and Mills, D. (2003). “Assessing Model Fit by Cross-Validation”, Journal of Chemical Information and Computer Sciences, 43, 579-586.
Walker, E., and Birch, J.B. (1988). “Influence Measures in Ridge Regression”, Technometrics, 30, 221-227.
Efron, B. (1994). “Missing Data, Imputation, and the Bootstrap”, Journal of the American Statistical Association, 89, 463-475.
Rubin, D.B. (1976). “Inference and Missing Data”, Biometrika, 63, 581-592.
Liu, L., Hawkins, D.M., Ghosh, S., and Young, S.S. (2003). “Robust Singular Value Decomposition Analysis of Microarray Data”, Proceedings of the National Academy of Sciences, 100, 13167-13172.
Elston, D. A., and Proe, M.F. (1995). “Smoothing Regression Coefficients in an Overspecified Regression Model with Interrelated Explanatory Variables”, Applied Statistics, 44, 395-406.
Tibshirani, R., Saunders, M., Rosset, S., Zhu, J., and Knight, K. (2005). “Sparsity and Smoothness via the Fused Lasso”, J. R. Statistical Soc. B, 67, 91-108.
Ramsay, J. O., and Silverman, B. W. (2002). Applied Functional Data Analysis: Methods and Case Studies, Springer-Verlag: Berlin; New York.
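
Supplementary sketch for the ‘Example: regression’ slide. Writing the second differences of the coefficients as D b, the smoothness-penalized criterion S + phi * sum((b[t-1] - 2 b[t] + b[t+1])^2) is minimized by solving (X'X + phi D'D) b = X'y. The Python below assumes NumPy; the simulated ‘spectra’, the smooth true coefficient curve and all function names are hypothetical and only illustrate the idea.

```python
import numpy as np

def second_difference_matrix(p):
    """(p-2) x p matrix D whose rows are (..., 1, -2, 1, ...)."""
    D = np.zeros((p - 2, p))
    for t in range(p - 2):
        D[t, t:t + 3] = (1.0, -2.0, 1.0)
    return D

def smooth_regression(X, y, phi):
    """Minimize S + phi * sum of squared second differences of b."""
    D = second_difference_matrix(X.shape[1])
    return np.linalg.solve(X.T @ X + phi * (D.T @ D), X.T @ y)

def roughness(b):
    """Sum of squared second differences of the coefficient vector."""
    return np.sum((b[:-2] - 2 * b[1:-1] + b[2:]) ** 2)

# Simulated data: fewer cases than channels, smooth true coefficients.
rng = np.random.default_rng(2)
n, p = 30, 60
t = np.arange(p)
beta_true = np.exp(-0.5 * ((t - 30) / 6.0) ** 2)   # smooth bump (hypothetical)
X = rng.normal(size=(n, p))
y = X @ beta_true + 0.1 * rng.normal(size=n)

for phi in (1.0, 100.0, 10000.0):
    b = smooth_regression(X, y, phi)
    print("phi=%8.1f  roughness=%.5f" % (phi, roughness(b)))
```

Larger phi buys a smoother coefficient curve at the cost of a larger residual sum of squares; phi could be chosen by the same cross-validation used for lambda and mu.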