Metabolomics Tier 1 Data Analysis

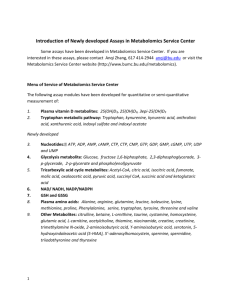

MS Metabolomics Core - BCM 2014

Targeted Mass Spectrometry: For MRM data acquired using the Agilent 6430 or 6490 QQQ, data handling will be performed using both the Qualitative and Quantitative Analysis software from Agilent Technologies, which will ascertain retention times, verify MRM transitions, and calculate absolute levels of metabolites using calibration curves.

Low-level analysis: The initial low-level analysis of metabolomic data will use a series of steps including preliminary review of the data, visualization for spotting patterns, data cleaning, imputation, and normalization. Data cleaning and basic statistical analyses will include identifying potential outliers, checking for normality, and examining the proportion and/or variance for each variable. Because samples will be obtained from multiple data sources (Bruker vs. Varian NMRs) or will be acquired in batches, this descriptive analysis will first be conducted stratified by data source and batch, to determine whether there are any important site differences that will need to be adjusted for during the data normalization steps.

For mass spec data, the mode of acquisition (targeted vs. unbiased) will affect the imputation procedure employed. For targeted acquisition, the data are not expected to have any missing values. For unbiased acquisition, missing values are a common feature. In that case, only metabolites with fewer than 50% missing values will be considered for studies involving a relatively large number of tissues/biofluids, and only metabolites with fewer than 20% missing values in the case of cell lines. Missing values will be imputed either at the minimum detection level or through a k=5 nearest neighbor procedure (pamr package in the R programming language).
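The filtering and imputation rules above can be illustrated with a minimal Python sketch. It is not the pamr implementation named in the text. It assumes that missing values are stored as `None`, that the "minimum detection level" can be approximated by the smallest observed value of each metabolite, and that neighbors are other metabolites ranked by root-mean-square distance over jointly observed samples. All function names are hypothetical.

```python
import math


def filter_by_missing(data, max_missing_fraction):
    """Keep metabolites whose fraction of missing values (None) is below the cutoff.

    `data` maps a metabolite name to a list of values, one per sample.
    Use 0.5 for tissue/biofluid studies and 0.2 for cell lines, per the text.
    """
    kept = {}
    for name, values in data.items():
        fraction = sum(v is None for v in values) / len(values)
        if fraction < max_missing_fraction:
            kept[name] = values
    return kept


def impute_minimum(data):
    """Replace each missing value with the smallest observed value of that metabolite.

    Assumption: the observed minimum stands in for the minimum detection level.
    Rows must contain at least one observed value (true after filtering).
    """
    out = {}
    for name, values in data.items():
        floor = min(v for v in values if v is not None)
        out[name] = [floor if v is None else v for v in values]
    return out


def _distance(a, b):
    """Root-mean-square difference over samples observed in both rows."""
    pairs = [(x, y) for x, y in zip(a, b) if x is not None and y is not None]
    if not pairs:
        return math.inf
    return math.sqrt(sum((x - y) ** 2 for x, y in pairs) / len(pairs))


def impute_knn(data, k=5):
    """Fill each missing value with the mean of that sample's value in the
    k nearest metabolites that were observed in the same sample.

    A value stays None if no comparable neighbor exists.
    """
    out = {}
    for name, values in data.items():
        filled = list(values)
        for j, v in enumerate(values):
            if v is not None:
                continue
            candidates = sorted(
                (_distance(values, other), other[j])
                for other_name, other in data.items()
                if other_name != name and other[j] is not None
            )
            nearest = [val for dist, val in candidates[:k] if dist < math.inf]
            if nearest:
                filled[j] = sum(nearest) / len(nearest)
        out[name] = filled
    return out
```

A typical call order would be `filter_by_missing(data, 0.5)` followed by either `impute_minimum` or `impute_knn(..., k=5)`. In practice the values would usually be log-transformed before distances are computed, which this sketch leaves to the caller.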
Depending on the study design, several different normalization approaches will be available, ranging from simple median centering, to centering and scaling based on the values of internally spiked standards, to more advanced fixed-effects analysis of variance procedures that use factors such as data platform, batch information, ionization mode, etc. For samples run on days that are far apart, batch effects occur that need to be corrected. To correct such batch effects, we either use analysis of variance techniques or, when the focus is on unsupervised techniques such as clustering and principal components analysis, the function “removeBatchEffect” available in the R package “limma”.

Standardization of Isotopic Enrichment data and Determination of Steady State: For every substrate or metabolite, a standard curve of known isotopic enrichments versus measured enrichments is first generated. A regression analysis is performed, and the raw isotopic data are corrected based on the slope and intercept of the curve when R² = 0.99. To calculate flux from the corrected data, the steady-state isotopic enrichment (plateau) has to be determined by performing a regression analysis of enrichment versus time to ascertain whether the slope differs from zero. If the slope is greater than zero, a non-linear regression is performed to estimate the asymptote (plateau).

Normalization of Biolog metabolic phenotyping data: First, the data from these metabolic phenotyping assays will be corrected for background signal, obtained from the median reading of the three empty wells on the plate. Following this, the data will be scaled by dividing each measurement on the plate by the inter-quartile range of the data. In our experience, this simple normalization has worked well even for samples that have been run on different days or belong to different conditions (control vs. treatment).
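The Biolog normalization just described amounts to two arithmetic steps, sketched below in Python. The sketch rests on several assumptions: the well names are hypothetical, the inter-quartile range is computed over the non-empty wells only, and the quartiles follow the default "exclusive" method of Python's `statistics.quantiles`. Other quartile conventions give slightly different scale factors.

```python
import statistics


def normalize_plate(readings, empty_wells):
    """Background-correct and IQR-scale one plate.

    `readings` maps a well name to its raw signal; `empty_wells` lists the
    three empty wells used to estimate background.
    """
    # Step 1: background is the median reading of the empty wells.
    background = statistics.median(readings[w] for w in empty_wells)
    corrected = {
        well: value - background
        for well, value in readings.items()
        if well not in empty_wells
    }

    # Step 2: divide every corrected measurement by the inter-quartile range.
    q1, _, q3 = statistics.quantiles(corrected.values(), n=4)
    iqr = q3 - q1
    if iqr == 0:
        raise ValueError("inter-quartile range is zero; cannot scale plate")
    return {well: value / iqr for well, value in corrected.items()}


# Hypothetical plate: three empty wells and four substrate wells.
plate = {"E1": 10, "E2": 12, "E3": 11, "B1": 21, "B2": 31, "B3": 41, "B4": 51}
normalized = normalize_plate(plate, empty_wells=["E1", "E2", "E3"])
```

Because the inter-quartile range does not change when a constant is subtracted, the scale factor is the same whether it is computed before or after background correction.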
Mid-level analysis (Figure 1): These types of analysis involve identification of differentially expressed metabolites, model building for classification or survival analysis purposes, dimension reduction for extracting broad patterns from the data, and identification of groups of samples and/or metabolites.

Specifically, differentially expressed compounds across two classes will be identified using parametric (t-tests) and non-parametric (rank-sum) tests, while for multiple classes we will employ analysis of variance models. In addition to the key treatment factors being tested, the latter models also allow for the incorporation of key covariate information, such as clinical variables (stage of the disease, indices of physiological impairment), as well as demographic characteristics and health habits of the subjects (e.g. age, race, gender, education, smoking and drinking status in the case of humans, and strain, gender and housing conditions in the case of mice). Given the large number of markers that are likely to be identified as significantly different between groups, as well as the number of conditions and differences in any given experiment, we recognize that these multiple comparisons increase the possibility of Type I error (false positives). Hence, family-wise error rate (FWER) methods and false discovery rate (FDR) methods will be employed to control the rate of false positives.

In many studies, classification and/or prognostic models will be built. Such models are important for delineating metabolomic signatures associated with clinical outcomes, including disease/normal status, recurrence times, stage progression, etc. For categorical outcomes (e.g. disease/normal status), several standard models from the machine learning literature will be employed, including logistic regression, random forests and support vector machines, whereas for outcomes capturing event times (e.g. disease recurrence, survival) Cox proportional hazards models will be used. An important aspect of this modeling will be to enforce sparsity through penalization (e.g. lasso or group lasso penalties), which leads to more parsimonious models that exhibit good theoretical properties in terms of inference and predictive ability. Especially in the case of structured penalties (e.g. the group lasso, implemented in the R package grplasso), one can impose a priori biological information, such as pathway structure. The performance of these classification and prognostic models will be assessed through K-fold cross-validated error rates and through receiver operating characteristic (ROC) curves, with the area under the curve (AUC) used as an overall measure of model fit. The significance of the AUC metric for each fitted model will be assessed through the Mann-Whitney U-statistic, and the AUC will also be used to select between competing models.

Finally, depending on project needs, the core will also provide several other standard analyses to help users understand and gain insight into their data. These include dimension reduction techniques, such as principal components analysis and its penalized (for sparsity) variants, for obtaining more robust low-dimensional representations of the samples and/or the metabolites, and clustering of samples and/or metabolites into groups using a wide range of algorithms (hierarchical, model-based, partitioning methods such as k-means and its robust variants, and graph-based methods such as normalized cuts). In addition, the core will provide enhanced visualization capabilities by mapping results onto pathways (see also the Section on pathway mapping).
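The link between the AUC and the Mann-Whitney U-statistic mentioned in this section can be made concrete with a short Python sketch. It assumes binary labels coded 1 (e.g. disease) and 0 (e.g. normal) and a model score where higher means more likely to be class 1. The p-value uses the large-sample normal approximation without a tie correction, which is a simplification and not necessarily the procedure used by the core. Function names are hypothetical.

```python
import math


def auc_mann_whitney(scores, labels):
    """AUC computed as U / (n_pos * n_neg).

    U counts the (positive, negative) pairs in which the positive sample
    has the higher score; tied pairs count as one half.
    """
    pos = [s for s, y in zip(scores, labels) if y == 1]
    neg = [s for s, y in zip(scores, labels) if y == 0]
    if not pos or not neg:
        raise ValueError("both classes must be present")
    u = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                u += 1.0
            elif p == n:
                u += 0.5
    return u / (len(pos) * len(neg))


def auc_p_value(auc, n_pos, n_neg):
    """Two-sided p-value for H0: AUC = 0.5, via the normal approximation
    to the Mann-Whitney U distribution (no tie correction)."""
    u = auc * n_pos * n_neg
    mean_u = n_pos * n_neg / 2.0
    sd_u = math.sqrt(n_pos * n_neg * (n_pos + n_neg + 1) / 12.0)
    z = (u - mean_u) / sd_u
    return math.erfc(abs(z) / math.sqrt(2.0))


# Hypothetical cross-validated scores for six samples.
scores = [0.9, 0.8, 0.7, 0.3, 0.2, 0.1]
labels = [1, 1, 0, 1, 0, 0]
auc = auc_mann_whitney(scores, labels)
p_value = auc_p_value(auc, n_pos=3, n_neg=3)
```

With very small groups, as in this toy example, the normal approximation is rough and an exact or permutation-based test would be more appropriate.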