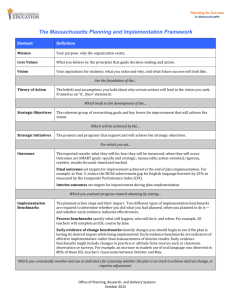

Self-Evaluation, Instructions and Definitions

advertisement

SLOA Self Assessment Rubric, Instructions and Definitions Accreditation Standards, as established by the ACCJC, require an on-going self-evaluation of all processes and procedures conducted by the college. This includes self-evaluation of the SLOA process. With the next round of Program Reviews, all programs are asked to complete a self assessment worksheet. All that is required is to enter the number of courses in your department which fall into each grade category (F to A) for each of the four evaluation categories. (Note, given that we are in the beginning/learning phase of the SLOA process, a C grade at this point would be admirable. The goal is to eventually achieve As for all categories. The four evaluations categories are as follows: 1. Assessment is Authentic Scores are based on Bloom's Taxonomy (see below) of learning, a hierarchical categorization. The six categories are: remembering, understanding, applying, analyzing, evaluating, and creating. Authentic assessments go beyond “remembering” and “understanding” to the “applying” category. Assessment methods are unknown. F The assessment methods for most of the SLOs in these courses test only the recall of simple information such as names, D dates, events, places. (Bloom's category: remembering) The assessment methods for most of the SLOs in these courses test recall of subject matter, ideas or concepts by simple memory. (Bloom's category: remembering.) The assessment methods for most of the SLOs in these courses test student understanding of the concepts by requiring B student to interpret the facts and make comparisons, contrasts or predictions. (Bloom's category: understanding.) The assessment methods for most of these courses test the application of the acquired knowledge and/or skill to "real A world" situations. Students can use their knowledge and/or skills for problem solving and critical analysis. (Bloom's category: applying.) ACCJC encourages (requires) the use of authentic assessments for SLO analysis. Definition of Authentic Assessments: Traditional assessment sometimes relies on indirect or proxy items such as multiple choice questions focusing on content or facts. In contrast, authentic assessment simulates a real world experience by evaluating the student’s ability to apply critical thinking and knowledge or to perform tasks that may approximate those found in the work place or other venues outside of the classroom setting. --This definition is from the Academic Senate of the California Community Colleges. C 2. Data is Useful and Informative, data is actionable: Do the assessment results provide data that can be used to support and inform recommendations and action plans? The more broken down (disaggregated) and the more detailed the data the more informative and useful they are. This is referring to the "raw" data collected from individual students, not the summary of results reported on the SLOA forms. No data or completely inappropriate data indicated. F No detail. Only the data required on the SLOA forms were analyzed for most of the SLOs in these courses. D Some of the data/scores analyzed were detailed but most were not. For example, summary differences between C sections were analyzed. Scores are recorded and analyzed for separate components (sub-topics or tasks) of the assessment assignment and for B each section for some of the SLOs of these courses. For example, results of an essay assignment were recorded and analyzed separately for: grammar/syntax, organization and content. Scores are recorded and analyzed for separate components (sub-topics or tasks) of the assessment assignment and for A each section for all the SLOs of these courses. For example, results of an essay assignment were recorded and analyzed separately for: grammar/syntax, organization and content. Results of embedded exam questions were grouped and analyzed topic by topic. Addition comments about data. 1. The program review SLOA forms require only limited data. Specifically they require the number of students who achieved a successful outcome and the total number of students who were assessed for each outcome of each course. 2. The data that departments or programs use for their analysis and recommendations must be more detailed and are kept by individual departments or programs. They are not included with the SLOA forms. The current evaluation refers to this more detailed data, not the data reported on the SLOA forms. 3. For examples of disaggregated data and its importance see the "SLO TIPS--Record Keeping" document on our SLO/AUO website. To find this web site follow the "Faculty and Staff" and then "Faculty and Staff Resources" links from the homepage of Contra Costa College. An Example. In this rubric, the score is 2.03, C. That number represents the aggregated results across all the 4 measures. Looking at the disaggregated results, this department is fairly strong on discussion (2.79 or B-) so-so on authenticity (2.42 or C+) but very weak on data and benchmarks (1.37 and 1.53, D). This disaggregated approach indicates where major improvement needs to be made. If you just looked at the overall average there wouldn't be any way of knowing where the strengths and weaknesses lie and without that information there couldn't be any useful, relevant or appropriate analysis or recommendations. 3. Benchmarks Are benchmarks identified? Are they appropriate, justifiable? Does analysis include reference to benchmarks? Is their evidence of progress related to benchmarks? No benchmarks are used or indicated. F Benchmarks are indicated for SLOs in these courses but there is no analysis of results in reference to benchmarks. D There is no analysis of the appropriateness of the benchmarks. Benchmarks are indicated for some SLOs in these courses with only minimal analysis of results in reference to benchmarks (no indication of any improvements). There is no analysis of the appropriateness of the benchmarks. Benchmarks are indicated for most SLOs in these courses with some analysis of results in reference to benchmarks (no B indication of any improvement). There is no analysis of the appropriateness of the benchmarks. Benchmarks are clearly established and justifiable for all SLOs in these courses. Analysis and recommendations refer to A benchmarks. Results show continuous improvement in relation to the benchmarks. A benchmark is a measure used for comparative purposes. It can be used as a goal or as a "starting point" from which to measure progress or improvement. For SLOs, a benchmark could be: 1. the original results collected from initial assessments, 2. national or local norms or levels of achievement, 3. results from comparable institutions, or 4. any logically established level or indicator of success, as for example the stated expected results or criteria for success. For accreditation purposes, ACCJC expects colleges to show CSQI--Continuous Sustainable Quality Improvement across all aspects of Integrated Planning, Program Reviews and SLOs. One way to measure CSQI is through the use of benchmarks. For example, "SLO results will improve by 2% each assessment cycle" where the "2%" reflects improvement from the original benchmark. C 4. Department Discussion: Are the SLOA results and recommendation and the assessment methods widely discussed across the department or unit? No discussion takes place regarding any of the SLO results in these courses. F Minimal, informal discussions occur by some of the faculty members about some of the SLOs in these courses but D C B A without any significant impact on analysis and recommendations Discussions occur in an informal manner on some of the SLOs in these courses and contribute to some of the analysis and recommendations. Discussions occur in a formal manner on most of the SLOs in these courses. Results of the discussions inform the analysis and recommendations. Substantial, rigorous, inclusive and directed discussions regarding results from all the SLOs in these courses occurs. Analysis and recommendations are based on these discussions and analyses. Bloom’s Taxonomy