Chapter 11 - Testing a Claim

AP Statistics

Hamilton/Mann

Confidence intervals are one of the two most common types of statistical inference.

Use confidence intervals when you want to estimate a population parameter.

The second common type of statistical inference, called significance tests, has a different goal: to assess the evidence provided by data about some claim concerning a population.

The following example will help us to understand the reasoning of statistical tests.

I claim that I make 80% of my free throws. To test my claim, you ask me to shoot 20 free throws. I only make 8 of the 20 free throws and you believe you have disproven me and say, “Someone who makes 80% of their free throws would almost never make only 8 out of 20. So I don’t believe your claim.”

Your reasoning is based on asking what would happen if my claim were true and we repeated the sample of 20 free throws many times – I would almost never make as few as 8. This event is so unlikely that it gives strong evidence that my claim is not true.

You can say how strong the evidence against my claim is by giving the probability that I would make as few as 8 out of 20 shots if I really were an 80% free throw shooter. That probability is 0.0001. (How’d we get that?) I would make 8 free throws or fewer only once in 10,000 tries in the long run if my claim to make 80% is true.
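One way to answer "How'd we get that?" is to treat the 20 shots as a binomial setting. The sketch below assumes the shots are independent and that each has the claimed 0.80 chance of going in; the helper name binomial_cdf is ours, not from the slides.

```python
from math import comb

def binomial_cdf(k, n, p):
    """P(X <= k) for X ~ Binomial(n, p)."""
    return sum(comb(n, i) * p**i * (1 - p)**(n - i) for i in range(k + 1))

# Chance of making 8 or fewer of 20 shots if the 80% claim is true
p_at_most_8 = binomial_cdf(8, 20, 0.80)
print(f"P(X <= 8) = {p_at_most_8:.4f}")  # prints P(X <= 8) = 0.0001
```

The result, roughly 1 in 10,000, is the probability quoted above.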

This small probability convinces you that I am lying.

Significance tests use an elaborate vocabulary, but the idea is simple: an outcome that would rarely happen if a claim were true is good evidence that the claim is not true.

Significance Tests: The Basics

HW: 11.3, 11.4, 11.5, 11.6, 11.7, 11.8, 11.11, 11.12, 11.14, 11.16…due Tuesday at the end of class

A significance test is a formal procedure for comparing observed data with a hypothesis whose truth we want to assess.

The hypothesis is a statement about a population parameter, like the population mean μ or population proportion p.

The results of the test are expressed in terms of a probability that measures how well the data and the hypothesis agree.

The reasoning of statistical tests, like that of confidence intervals, is based on asking what would happen if we repeated the sampling or experiment many times.

We will begin by unrealistically assuming that we know the population standard deviation σ.

Vehicle accidents can result in serious injury to drivers and passengers. When they do, people generally call 911. Emergency personnel then respond to these calls as quickly as possible. Slow response times can have serious consequences for accident victims. In case of life-threatening injuries, victims generally need medical attention within 8 minutes of the crash.

For this reason several cities have begun monitoring paramedic response times.

In one such city, the mean response time to all accidents involving life-threatening injuries last year was μ = 6.7 minutes with a standard deviation of σ = 2 minutes. The city manager shares this information with emergency personnel and encourages them to “do better” next year.

At the end of the next year, the city manager selects an SRS of 400 calls involving life-threatening injuries and examines the response times. For this sample, the mean response time was x̄ = 6.48 minutes. Do these data provide good evidence that response times have decreased since last year?

Remember, sample results vary! Maybe the response times have not improved at all and the apparent improvement is a result of sampling variability.

We are going to make a claim and ask if the data give evidence against it. We would like to conclude that the mean response time has decreased, so the claim we test is that response times have not decreased. For that claim, the mean response time of all calls involving life-threatening injuries would be μ = 6.7 minutes. We will also assume that σ = 2 minutes for this year’s calls.

If the claim that μ = 6.7 minutes is true, the sampling distribution of x̄ from 400 calls will be approximately Normal (by the CLT) with mean 6.7 minutes and standard deviation σ/√n = 2/√400 = 0.10 minutes.

We can judge whether any observed value is unusual by locating it on this distribution.

Is a value of 6.48 for x̄ unusual?

Is a value of 6.61 for x̄ unusual?

The city manager’s observed value of 6.48 is far from the distribution’s mean of 6.7 minutes. In fact, it is so far that it would rarely occur by chance if the mean were 6.7 minutes. This observed value is good evidence that the true mean is less than 6.7 minutes. In other words, it appears that the average response time did decrease this year, and the paramedics should be commended for a job well done.
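To see why 6.48 counts as unusual while 6.61 does not, we can locate both values on the sampling distribution. This is a rough sketch that assumes σ = 2 minutes, the value consistent with the P-value of 0.0139 reported later for this example; the variable names are ours.

```python
from math import sqrt
from statistics import NormalDist

mu_0 = 6.7     # mean response time under the "no decrease" claim (minutes)
sigma = 2.0    # assumed population standard deviation (minutes)
n = 400        # sample size

# Sampling distribution of the sample mean if the claim is true
sampling_dist = NormalDist(mu=mu_0, sigma=sigma / sqrt(n))

for x_bar in (6.48, 6.61):
    z = (x_bar - mu_0) / sampling_dist.stdev        # distance in standard deviations
    lower_tail = sampling_dist.cdf(x_bar)           # chance of a sample mean this low or lower
    print(f"x_bar = {x_bar}: z = {z:.2f}, P(sample mean <= x_bar) = {lower_tail:.4f}")
```

Under these assumptions a sample mean of 6.61 or lower would turn up in roughly 18% of samples, while one of 6.48 or lower would turn up in only about 1.4%.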

This outlines the reasoning of significance tests.

A statistical test starts with a careful statement of the claims that we want to compare. In our prior example, we asked if the accident response time data were likely if, in fact, there is no decrease in paramedics’ response times.

Because the reasoning of tests looks for evidence against a claim, we start with the claim we seek evidence against, such as “no decrease in response time.” This claim is our null hypothesis.

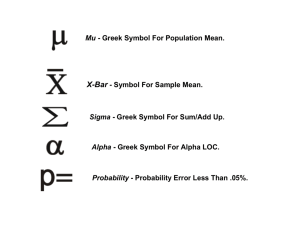

We abbreviate the null hypothesis as H0 and the alternative hypothesis as Ha. In our example, we were seeking evidence of a decrease in response time this year. For this reason, the null hypothesis would say that there was “no decrease” and the alternative hypothesis would say that “there was a decrease.” The hypotheses are

H0: μ = 6.7 minutes

Ha: μ < 6.7 minutes

Since we were only interested in whether the mean time had decreased, the alternative hypothesis is one-sided.

Hypotheses always refer to some population, not to a particular outcome. So always state H0 and Ha in terms of a population parameter.

Because Ha expresses the effect that we hope to find evidence for, it is often easier to begin by stating Ha and then setting up H0 as the statement that the hoped-for effect is not present.

Does the job satisfaction of assembly workers differ when their work is machine-paced rather than self-paced? One study chose 18 workers at random from a group of people who assembled electronic devices. Half the subjects were assigned at random to each of the two groups. Both groups did similar assembly work, but one work setup allowed workers to pace themselves, and the other featured an assembly line that moved at fixed time intervals so that the workers were paced by the machine. After two weeks, all subjects took the Job Diagnosis Survey (JDS), a test of job satisfaction.

Then they switched work setups and took the JDS again after two more weeks.

This is a matched pairs design. The response variable is the difference in the JDS scores, self-paced minus machine-paced. The parameter of interest is the mean μ of the differences in JDS scores in the population of all assembly workers.

Since we are asking “Does the job satisfaction of assembly workers differ when their work is machine-paced rather than self-paced?,” our alternative hypothesis will be that the job satisfaction does differ. If they were the same, then the mean difference would be 0.

H0: μ = 0

Ha: μ ≠ 0, since “differ” means they aren’t equal.

The alternative hypothesis should express the hopes or suspicions we have before we see the data. It is cheating to first look at the data and then frame Ha to fit what the data show.

To create a confidence interval we had to have:

an SRS from the population of interest

Normality

independent observations

These are the same three conditions for a significance test.

The details for checking the Normality condition for means and proportions are different.

For means – population is Normal or large sample size

For proportions – np ≥ 10 and n(1 - p) ≥ 10

Before conducting a significance test about the mean response time of paramedics, we should check our conditions.

SRS – We were told that the city manager took an SRS of 400 calls involving life-threatening injuries.

Normality – The population distribution of paramedic response times may not follow a Normal distribution, but our sample size (400) is large enough to ensure that the sampling distribution of x̄ is approximately Normal (by the Central Limit Theorem).

Independence – We must assume that there were at least 4000 calls that involved life-threatening injuries for the observations to be independent.

We appear to meet all three conditions.

A significance test uses data in the form of a test statistic. Here are some principles that apply to most tests:

The test is based on a statistic that compares the value of the parameter as stated in the null hypothesis with an estimate of the parameter from the sample data.

Values of the estimate far from the parameter value in the direction specified by the alternative hypothesis give evidence against H0.

To assess how far the estimate is from the parameter, standardize the estimate. In many common situations, the test statistic has the form

test statistic = (estimate - hypothesized value) / (standard deviation of the estimate)

For this example, the null hypothesis was H0: μ = 6.7 and the estimate of μ was x̄ = 6.48 minutes. Since we were assuming σ = 2 minutes for the distribution of response times, our test statistic is

z = (x̄ - μ0) / (σ/√n)

where μ0 is the value of μ specified in the null hypothesis.

The test statistic z says how far x̄ is from μ0 in standard deviation units.

So, for our example,

z = (6.48 - 6.7) / (2/√400) = -0.22/0.10 = -2.20

Because this sample result is over two standard deviations below the hypothesized mean of 6.7, it gives good evidence that the mean response time this year is not 6.7 minutes, but rather, less than 6.7 minutes.

The null hypothesis H0 states the claim we are seeking evidence against. The test statistic measures how much the sample data diverge from the null hypothesis. If the test statistic is large and is in the direction suggested by the alternative hypothesis Ha, we have data that would be unlikely if H0 were true. We make “unlikely” precise by calculating a probability, called a P-value.

Small P-values are evidence against H0 because they say that the observed result is unlikely to occur when H0 is true. Large P-values fail to give evidence against H0.

Recall that our test statistic had the value z = -2.20.

Since the alternative hypothesis was Ha: μ < 6.7, a negative z-value would favor Ha over H0.

The P-value is the probability of getting a sample result that is at least as extreme as the one we did if H0 were true. In other words, the P-value is calculated assuming that H0 is true.

So

P-value = P(Z ≤ -2.20) = 0.0139

The shaded area under the curve is the P-value of the sample results.

So if the mean response time were still 6.7 minutes, there would be only about a 1.4% chance that the city manager would select a sample of 400 calls with a mean of 6.48 minutes or less.

This small P-value provides strong evidence against H0 and in favor of the alternative Ha: μ < 6.7.

For this reason, we believe that there is evidence to suggest that the mean response time of the paramedics to accidents that produced life-threatening injuries has decreased.

Recall that our hypotheses for job satisfaction were

H0: μ = 0

Ha: μ ≠ 0

Suppose we know that the differences in job satisfaction follow a Normal distribution with a known standard deviation σ.

Data from 18 workers gave a positive sample mean difference x̄; that is, these workers preferred the self-paced environment on average.

The test statistic is

z = (x̄ - 0) / (σ/√18) = 1.20

Because the alternative hypothesis is two-sided, the P-value is the probability of getting at least as far from 0 in either direction as the observed z = 1.20. As always, calculate the P-value by taking H0 to be true. When H0 is true, μ = 0 and z has a standard Normal distribution.

So we are looking for

P-value = 2P(Z ≥ 1.20) = 2(0.1151) = 0.2302

Since values as far from 0 as z = 1.20 would occur approximately 23% of the time when the mean is μ = 0, it is not good evidence that there is a difference in job satisfaction.
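The two-sided calculation can be checked with software instead of a table. This is a minimal sketch using Python's standard library; the function name two_sided_p_value is ours.

```python
from statistics import NormalDist

def two_sided_p_value(z):
    """Probability that a standard Normal variable lands at least |z| from 0."""
    return 2 * (1 - NormalDist().cdf(abs(z)))

p_value = two_sided_p_value(1.20)
print(f"two-sided P-value for z = 1.20: {p_value:.4f}")
```

The software value is about 0.23. It can differ from a table-based answer in the fourth decimal place because the table rounds each tail area before doubling it.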

Let’s look at an applet.

We sometimes take one final step to assess the evidence against H0. We can compare the P-value with a fixed value that we regard as decisive. This amounts to announcing in advance how much evidence against H0 we will insist on. This decisive value of P is called the significance level.

We write it as α, the Greek letter alpha. If we choose α = 0.05, we are requiring that the data give evidence against H0 so strong that it would happen no more than 5% of the time when H0 is true. If we choose α = 0.01, we are insisting on stronger evidence against H0, evidence so strong that it would appear only 1% of the time if H0 is true.

Significant in the statistical sense does not mean important. It simply means “not likely to happen by chance.” The significance level α makes “not likely” more precise. Significance at level 0.01 is often expressed by the statement “The results were significant (P < 0.01).” Here the P stands for the P-value. The P-value is more informative than a statement of significance because it allows us to assess significance at any level we choose. For example, a result with P = 0.03 is significant at the α = 0.05 level, but is not significant at the α = 0.01 level.

We found that the P-value for paramedic response times was 0.0139. This result is statistically significant at the α = 0.05 level since 0.0139 < 0.05, but is not significant at the α = 0.01 level because 0.0139 > 0.01. The figure below gives a visual representation of this relationship.

In practice, the most commonly used significance level is α = 0.05.

Sometimes it may be preferable to choose α = 0.01 or α = 0.10, for reasons we will discuss later.

The final step is to draw a conclusion about the competing claims you were testing. As with confidence intervals, the conclusion should have a clear connection to your calculations and should be stated in the context of the problem. In significance testing there are two acceptable methods for drawing conclusions:

1. One based on P-values

2. One based on statistical significance

Both methods describe the strength of the evidence against the null hypothesis H0.

We have already seen an example of the P-value approach.

Our other option is to make one of two decisions about the null hypothesis H0 based on whether our result is statistically significant.

1. Reject H0 – There is sufficient evidence to believe the null hypothesis is incorrect.

2. Fail to reject H0 – There is not sufficient evidence to believe that the null hypothesis is incorrect. This does not mean that it is correct, just that we cannot show that it is incorrect.

We reject H0 if our sample result is too unlikely to have occurred by chance assuming H0 is true.

In other words, we will reject H0 if our result is statistically significant at the given α level.

If our sample result could plausibly have happened by chance assuming H0 is true, we will fail to reject H0. That is, we will fail to reject H0 if our result is not significant at the given α level.

We should always interpret the results by remembering the three C’s: conclusion, connection, and context.

The P-value for the city manager’s study of response times was P = 0.0139. If we were using an α = 0.05 significance level, we would reject H0: μ = 6.7 minutes (conclusion) since our P-value is less than our significance level α = 0.05 (connection). In other words, it appears that the mean response time to all life-threatening calls this year is less than last year’s average of 6.7 minutes (context).

The P-value for the job satisfaction study was P = 0.2302. If we were using an α = 0.05 significance level, we would fail to reject H0: μ = 0 (conclusion) since our P-value is greater than our significance level α = 0.05 (connection). In other words, it is possible that the mean difference in job satisfaction scores for workers in a self-paced versus machine-paced environment is 0 (context).
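The fixed-level decision rule is mechanical enough to write as a small function. The sketch below is illustrative only; the name decide and the strict "less than α" comparison are our choices, matching the wording used in these examples.

```python
def decide(p_value, alpha=0.05):
    """Return the fixed-level decision about H0 for a given P-value."""
    return "reject H0" if p_value < alpha else "fail to reject H0"

# P-values from the two examples, checked at two common significance levels
for label, p_value in [("response times", 0.0139), ("job satisfaction", 0.2302)]:
    for alpha in (0.05, 0.01):
        print(f"{label}: P = {p_value}, alpha = {alpha} -> {decide(p_value, alpha)}")
```

The function only supplies the conclusion. The connection (comparing P to α) and the context still have to be written out in words.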

If you are going to draw a conclusion based on statistical significance, the significance level α should be selected before the data are produced to prevent manipulating the data.

A P-value is more informative than a “reject” or “fail to reject” conclusion at a given significance level. The P-value is the smallest α level at which the data are significant.

Knowing the P-value allows us to assess significance at any level.

However, interpreting the P-value is more challenging than making a decision about H0 based on statistical significance.

Carrying Out Significance Tests

HW: 11.27, 11.28, 11.30, 11.31, 11.32, 11.33, 11.34

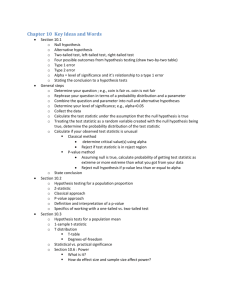

To test a claim about an unknown population parameter:

Step 1: Hypotheses – Identify the population of interest and the parameter you want to draw conclusions about. State hypotheses.

Step 2: Conditions – Choose the appropriate inference procedure and verify the conditions for using it.

Step 3: Calculations – If the conditions are met, carry out the inference procedure.

Calculate the test statistic.

Find the P-value.

Step 4: Interpretation – Interpret your results in the context of the problem.

Interpret the P-value or make a decision about H0 using statistical significance.

Don’t forget the three C’s: conclusion, connection, and context.

Once you have completed the first two steps, a calculator or computer can do Step 3. Here is the calculation step for carrying out a significance test about the population mean μ in the unrealistic setting when σ is known.
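As a rough sketch of what a calculator does in Step 3, the function below computes the z statistic and the P-value for a one-sample z test. The function name z_test and its alternative argument are our own illustration, not a standard library routine, and the usage line assumes σ = 2 for the paramedic data.

```python
from math import sqrt
from statistics import NormalDist

def z_test(x_bar, mu_0, sigma, n, alternative="two-sided"):
    """One-sample z test for a mean with known sigma; returns (z, P-value)."""
    z = (x_bar - mu_0) / (sigma / sqrt(n))
    standard_normal = NormalDist()
    if alternative == "less":          # Ha: mu < mu_0
        p_value = standard_normal.cdf(z)
    elif alternative == "greater":     # Ha: mu > mu_0
        p_value = 1 - standard_normal.cdf(z)
    elif alternative == "two-sided":   # Ha: mu != mu_0
        p_value = 2 * (1 - standard_normal.cdf(abs(z)))
    else:
        raise ValueError("alternative must be 'less', 'greater', or 'two-sided'")
    return z, p_value

# Paramedic example: x_bar = 6.48, mu_0 = 6.7, n = 400, sigma assumed to be 2
z, p_value = z_test(6.48, 6.7, 2, 400, alternative="less")
print(f"z = {z:.2f}, P-value = {p_value:.4f}")
```

Steps 1, 2, and 4 still need a person: the code will happily return a P-value for data that fail the SRS, Normality, or independence conditions.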

The medical director of a large company is concerned about the effects of stress on the company’s younger executives.

According to the National Center for Health Statistics, the mean systolic blood pressure for males 35 to 44 years of age is 128, and the standard deviation in this population is 15. The medical director examines the medical records of 72 male executives in this age group and finds that their mean systolic blood pressure is x̄ = 126.07.

Is this evidence that the mean blood pressure for all the company’s younger male executives is different from the national average?

Step 1 – The population of interest is all male executives aged 35 to 44 at this company.

The parameter of interest is the mean systolic blood pressure μ of these male executives aged 35 to 44. Since we want to check whether the mean blood pressure is different, the alternative hypothesis would be that it is different from, in other words, not equal to, the national average of 128 for men in this age group. So, the null hypothesis would be that the mean systolic blood pressure is the same as the national average.

H0: μ = 128

Ha: μ ≠ 128

Step 2 – Conditions

SRS – We are not told. If the records that are being checked are not an SRS, then our results may be questionable. For instance, if we only have records for executives who have been ill, this introduces bias because illness is usually accompanied by higher blood pressure.

Normality – The sample is large enough (72) for the Central Limit Theorem to tell us that the sampling distribution of x̄ is approximately Normal.

Independence – We must assume that there are at least 720 executives aged 35 to 44 who work for this large company. If not, independence does not hold.

Step 3 – Calculations

z = (x̄ - μ0) / (σ/√n) = (x̄ - 128) / (15/√72) = -1.09

Since the test is two-sided, the P-value is the probability that Z is less than -1.09 or greater than 1.09:

P-value = 2P(Z ≥ 1.09) = 2(0.1379) = 0.2758

Step 4 – Conclusions – Since our P-value, 0.2758, is larger than any commonly used significance level, we fail to reject the null hypothesis that μ = 128.

Therefore, there is not good evidence that the mean systolic blood pressure of the male executives aged 35 to 44 differs from the national average.

The data for our example do not establish that the mean systolic blood pressure μ for the male executives aged 35 to 44 is 128. We sought evidence that μ differed from 128 and failed to find convincing evidence. That is all we can say.

Most likely, the mean systolic blood pressure of all male executives aged 35 to 44 is not exactly equal to 128. A large enough sample would give evidence of the difference, even if it is very small.

Failing to find evidence against H0 means only that the data are consistent with H0, not that we have clear evidence that H0 is true.

The company medical director initiated a health promotion campaign to encourage employees to exercise more and eat a healthier diet. One measure of the effectiveness of such a program is a drop in blood pressure. The director chooses a random sample of 50 employees and compares their blood pressures from physical exams given before the campaign and again a year later. Call the mean change in systolic blood pressure for these 50 employees x̄. We assume that the population standard deviation σ is known. The director wants to use an α = 0.05 significance level.

Step 1 – We want to know if the health campaign reduced blood pressure on average in the population of all employees at this company. We are going to let μ be the mean change in blood pressure for all employees.

Then we are testing

H0: μ = 0

Ha: μ < 0

Step 2 – Conditions

SRS – We are told he took a “random sample.”

Normality – The sample size (50) is large enough for the sampling distribution of x̄ to be approximately Normal.

Independence – More than 500 people should work at this company.

Step 3 – Calculations

z = (x̄ - 0) / (σ/√50) = -2.12

Since we are expecting the mean change to be less than 0, the lower tail is all we have to check:

P-value = P(Z ≤ -2.12) = 0.0170

Step 4 – Conclusions

Since our P-value of 0.0170 is less than our significance level of α = 0.05, we reject the null hypothesis that μ = 0.

Therefore, we conclude that there is reason to believe that the mean systolic blood pressure of the employees at the company has decreased. Since there was no randomly assigned control group, we cannot conclude it was the campaign that caused the change.

We would like to conclude that the program causes the decline in systolic blood pressure, but there are other possible explanations.

For example, a local television station could have run a series on the risk of heart attacks and the value of better health and diet.

Another possibility is that a new YMCA just opened and gave away free memberships for a few months.

To conclude that the program made the difference, we would need to conduct a randomized comparative experiment. This would involve a control group to control for outside influences like these.

A 95% confidence interval captures the true value of μ in 95% of all samples. If we are 95% confident that the true μ lies in our interval, we are also confident that values of μ that fall outside our interval are incompatible with the data. That sounds like the conclusion of a significance test.

In fact, there is an intimate connection between 95% confidence and significance at the 5% level. The same connection holds between 99% confidence intervals and significance at the 1% level and so on.

The link between confidence intervals and two-sided significance tests is sometimes referred to as duality.

The Deely Laboratory analyzes specimens of a drug to determine the concentration of the active ingredient. Such chemical processes are not perfectly precise. Repeated measures of the same specimen will give slightly different results. The results of repeated measurements follow a Normal distribution quite closely. The analysis procedure has no bias, so the mean μ of the population of all measurements is the true concentration of the specimen. The standard deviation σ of this distribution is a property of the analysis method and is known (in grams per liter).

A client sends a specimen for which the concentration of active ingredients is supposed to be 0.86%. Deely’s three analyses give concentrations

0.8363

0.8447

Is there significant evidence at the 1% level that the true concentration is not 0.86%?

As we go through this, we will discuss the 4 steps, but not write them all down!

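We are testing

H0: μ = 0.86

Ha: μ ≠ 0.86

So

z = (x̄ - 0.86) / (σ/√3) = -4.99

Since it is two-sided, we are looking for the probability that Z is less than -4.99 or greater than 4.99.

So

P-value = 2P(Z ≥ 4.99), which is less than 0.000001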

Since our P-value is less than our 1% significance level, we reject the null hypothesis.

Let’s create a 99% confidence interval.

x̄ ± z*(σ/√n), with z* = 2.576 for 99% confidence

The hypothesized value of 0.86% falls outside of this interval. Therefore the hypothesized value is not a plausible value for the mean concentration of active ingredients in this specimen. We can therefore reject H0: μ = 0.86 at the 1% significance level.

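What if our null hypothesis was instead H0: μ = 0.85?

Then the test statistic is z = (x̄ - 0.85) / (σ/√3), and the two-sided P-value is greater than 0.01.

So we fail to reject the null hypothesis at the 1% significance level.

Since our 99% confidence interval contains 0.85, we would fail to reject.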
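The duality between a 99% confidence interval and a two-sided test at the 1% level can be checked numerically. In the sketch below, the sample mean 0.8404 and σ = 0.0068 are assumed illustrative values (chosen because they give z close to -4.99 against 0.86); they are not taken from these slides.

```python
from math import sqrt
from statistics import NormalDist

# Illustrative values only (assumed for this sketch)
x_bar, sigma, n = 0.8404, 0.0068, 3
alpha = 0.01

std_error = sigma / sqrt(n)
z_star = NormalDist().inv_cdf(1 - alpha / 2)   # about 2.576 for 99% confidence
interval = (x_bar - z_star * std_error, x_bar + z_star * std_error)

def two_sided_p_value(mu_0):
    """Two-sided P-value for H0: mu = mu_0 using the values above."""
    z = (x_bar - mu_0) / std_error
    return 2 * (1 - NormalDist().cdf(abs(z)))

for mu_0 in (0.86, 0.85):
    inside = interval[0] <= mu_0 <= interval[1]
    rejected = two_sided_p_value(mu_0) < alpha
    print(f"mu_0 = {mu_0}: inside 99% interval = {inside}, rejected at 1% = {rejected}")
```

For any hypothesized value, "inside the interval" and "rejected" come out as opposites, which is exactly the duality described above.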

Use and Abuse of Tests

HW: 11.43, 11.44, 11.45, 11.46, 11.48

Significance tests are widely used in reporting the results of research in many fields.

New drugs require significant evidence of effectiveness and safety.

Courts ask about statistical significance in hearing discrimination cases.

Marketers want to know whether a new ad campaign significantly outperforms the old one.

Medical researchers want to know whether a new therapy performs significantly better.

In all these cases, statistical significance is valued because it points to an effect that is unlikely to occur simply by chance.

Carrying out a significance test is often very simple, especially if using P-values that you find with calculators and computers.

Using tests wisely is not so simple.

This section presents points to keep in mind when using or interpreting significance tests.

The purpose of a significance test is to give a clear statement of the degree of evidence provided by a sample against the null hypothesis. The P-value does this.

How small must the P-value be to be considered convincing evidence against the null hypothesis?

How plausible is H0? If H0 represents an assumption that the people you must convince have believed for years, strong evidence (small P) will be needed to persuade them.

What are the consequences of rejecting H0 in favor of Ha? If rejecting H0 means making an expensive changeover from one type of product packaging to another, you need strong evidence that the new packaging will boost sales.

These criteria are a bit subjective. This is why giving the P-value is a good idea. It allows each person to decide individually if the evidence is sufficiently strong.

Standard levels of significance have typically been 10%, 5% and 1%. This emphasis reflects the time when tables of critical values rather than software dominated statistical practice. 5% was a particularly common level of significance.

There is no sharp border between statistically significant and statistically insignificant, only increasingly strong evidence as the P-value decreases. For example, there is no practical distinction between the P-values 0.049 and 0.051.

Therefore, it makes no sense to treat P < 0.05 as a universal rule for what is significant.

When a null hypothesis is rejected at the usual levels ( α = 0.05 or α = 0.01), there is good evidence that an effect is present. That effect may, however, be very small.

When large samples are available, even tiny deviations from the null hypothesis will be significant. (Recall that the mean of a large sample varies much less than individual observations do: its standard deviation is σ/√n. As a result, even a small gap between the sample mean and the hypothesized value can be many standard deviations wide.)
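A quick numerical sketch shows how sample size alone can turn a tiny effect into a significant one. The numbers here are made up for illustration: a sample mean that sits 0.05 standard deviations below the hypothesized mean, tested one-sided at several sample sizes.

```python
from math import sqrt
from statistics import NormalDist

sigma = 1.0          # population standard deviation (assumed)
difference = -0.05   # sample mean minus hypothesized mean: a tiny effect (assumed)

p_values = {}
for n in (25, 100, 400, 1600, 6400):
    z = difference / (sigma / sqrt(n))
    p_values[n] = NormalDist().cdf(z)   # one-sided P-value for Ha: mu < mu_0
    print(f"n = {n:5d}: z = {z:6.2f}, P-value = {p_values[n]:.4f}")
```

The observed difference never changes, yet the P-value falls below 0.05 once n is large enough. Significance tells us the effect is probably real, not that it is big.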

Suppose we are testing a new antibacterial cream on a small cut made on the inner forearm. We know from previous research that with no medication, the mean healing time (defined as the time for the scab to fall off) is 7.6 days with a standard deviation of 1.4 days.

The claim we want to test here is that the antibacterial cream speeds healing. We will use a 5% significance level.

Procedure: We make a small cut on each of 25 volunteer college students and apply the antibacterial cream to the wounds. The mean healing time for these subjects is x̄ = 7.1 days. We will assume that σ = 1.4 days.

H0: μ = 7.6 days

Ha: μ < 7.6 days

So

z = (7.1 - 7.6) / (1.4/√25) = -0.5/0.28 = -1.79

Since it is one-sided,

P-value = P(Z ≤ -1.79) = 0.0367

Since 0.0367 < 0.05, the result is statistically significant, so we reject H0 and conclude that the mean healing time is reduced by the antibacterial cream. Is the result practically important though? Is having your scab fall off half a day sooner a big deal?

Statistical significance is not the same thing as practical importance.

The remedy for attaching too much practical importance to statistical significance is to pay attention to the actual data as well as the P-value. Plot your data and examine them carefully. Are there outliers or other deviations from a consistent pattern?

A few outlying observations can produce highly significant results if you blindly apply common significance tests.

Outliers can also destroy the significance of otherwise-convincing data.

The foolish user of statistics will feed the calculator or computer data without exploratory analysis. This individual will often be embarrassed.

Is the effect you are seeking visible in your plots? If not, ask if the effect is large enough to be practically important.

Give a confidence interval for the parameter in which you are interested. A confidence interval actually estimates the size of an effect rather than simply asking if it is too large to reasonably occur by chance alone.

Confidence intervals are not used as often as they should be, while significance tests are perhaps overused.

There is a tendency to infer that there is no effect whenever a P-value fails to attain the usual 5% standard.

This is not true and will be illustrated on the next slide.

In an experiment to compare methods for reducing transmission of HIV, subjects were randomly assigned to a treatment group and a control group.

Result: the treatment group and the control group had the same rate of HIV infection. Researchers described this as an “incident rate ratio” of 1. A ratio above 1 would mean that there was a greater rate of HIV infection in the treatment group, while a ratio below 1 would indicate a greater rate of HIV infection in the control group. The 95% confidence interval for the incident rate ratio was reported as 0.63 to 1.58. This confidence interval indicates that the treatment may achieve anything from a 37% decrease to a 58% increase in HIV infection. Clearly, more data are needed to distinguish between these possibilities. However, a significance test found that there was no statistically significant difference between the two groups.

Research in some fields is rarely published unless significance at the 5% level is attained.

A survey of the four journals published by the American Psychological Association showed that of 294 articles using statistical tests, only 8 reported results that did not attain the 5% significance level.

In some areas of research, small effects that are detectable only with large sample sizes can be of great practical significance. Data accumulated from a large number of patients taking a new drug may be needed before we can conclude that there are life-threatening consequences for a small number of people.

Badly designed experiments or surveys often produce invalid results.

Formal statistical inference cannot correct flaws in design.

Each test is valid only in certain circumstances, with properly produced data being particularly important.

The z test, for instance, should bear the same warning labels that we gave to z confidence intervals (p.636).

You wonder whether background music would increase the productivity of the staff who process mail orders in your business. After discussing the idea with the workers, you add music and find a statistically significant increase. Should you be impressed?

No. Almost any change in the work environment combined with knowledge that a study is being conducted will result in a short-term increase in productivity. This is known as the Hawthorne effect.

Significance tests can inform you that an increase has occurred that is larger than what would be expected by chance alone. They do not, however, tell you what, other than chance, caused the increase. The most plausible explanation in our example is that workers change their habits when they know they are being studied. This study was uncontrolled, so the significant result cannot be interpreted. A randomized comparative experiment would isolate the actual effect of background music and make significance meaningful.

Significance tests and confidence intervals are based on the laws of probability.

Randomization in sampling or experimentation ensures that these laws apply.

We often have to analyze data that do not arise from randomized samples or experiments. To apply statistical inference to such data, we must have confidence in the use of probability to describe the data.

Always ask how the data were produced, and don’t be too impressed by P-values on a printout until you are confident that the data deserve a formal analysis.

Some people believe there is a link between cell phone usage and brain cancer. Studies disagree. In examining 20 studies, all but one found that there was no statistically significant link between cell phone usage and brain cancer. Should this one link be cause for concern?

No. Since the tests were conducted with a significance level of 5%, we would expect about 1 in 20 to produce a statistically significant result by chance alone, even if there is no link.

This is what we mean by multiple analyses.
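The arithmetic behind this warning can be sketched in a few lines. The sketch assumes the 20 studies are independent and that every null hypothesis is true (no link exists); both are simplifying assumptions for illustration, not claims about the actual studies.

```python
# Assumptions (for illustration only): 20 independent tests, each run at
# alpha = 0.05, and no real effect in any of them.
alpha = 0.05
num_studies = 20

# Expected number of "significant" results produced by chance alone.
expected_false_positives = alpha * num_studies

# Probability that at least one of the 20 tests comes out significant by chance.
prob_at_least_one = 1 - (1 - alpha) ** num_studies

print(f"Expected significant results by chance: {expected_false_positives:.1f}")
print(f"P(at least one significant result by chance): {prob_at_least_one:.3f}")
```

Under these assumptions, one significant study out of 20 is what chance alone would typically produce.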

Don’t trust the results of a single study when there are many more that contradict it.

Using Inference to Make Decisions

HW: 11.49, 11.50, 11.51, 11.52, 11.53, 11.54, 11.55, 11.56, 11.57

In Section 11.1, significance tests were presented as a method for assessing the strength of evidence against the null hypothesis. Most users of statistics think of tests this way.

We also presented another method in which a fixed level of significance α is chosen in advance and determines the outcome of the test (decision).

If the P-value is less than α, then we reject H0.

If the P-value is greater than α, then we fail to reject H0.
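The fixed-level rule is simple enough to write as a small function. This is only a sketch: the function name `decide` is hypothetical, and treating a P-value exactly equal to α as "fail to reject" is an assumption, since the rule above does not address that case.

```python
def decide(p_value: float, alpha: float = 0.05) -> str:
    """Apply the fixed-level decision rule to a P-value.

    Assumption: a P-value exactly equal to alpha is treated as
    "fail to reject H0"; the rule in the notes leaves this case open.
    """
    if not 0.0 <= p_value <= 1.0:
        raise ValueError("a P-value must be between 0 and 1")
    return "reject H0" if p_value < alpha else "fail to reject H0"


print(decide(0.01))              # P-value below alpha = 0.05
print(decide(0.20))              # P-value above alpha = 0.05
print(decide(0.03, alpha=0.01))  # same data, stricter significance level
```

Note that the same P-value can lead to different decisions depending on the α chosen in advance.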

There is a big difference between measuring

Many statisticians feel that making decisions is too grand a goal for statistics alone. They believe that decision makers should take other factors that are hard to reduce to numbers into consideration.

Sometimes we are concerned about making a decision or choosing an action based on our evaluation of the data.

A potato chip producer and a supplier of potatoes agree that each shipment of potatoes must meet certain quality standards. If less than 8% of the potatoes in the shipment have “blemishes,” the producer will accept the entire truckload. Otherwise, the truck will be sent away to get another load of potatoes from the supplier. Obviously, they don’t have time to inspect every potato. Instead, the producer inspects a sample of potatoes. On the basis of the sample results, the potato chip producer uses a significance test to decide whether to accept or reject the potatoes.

When making decisions based on a significance test, we hope that our decision will be correct. Unfortunately, sometimes it will be wrong.

There are two types of incorrect decisions.

1. Reject the null hypothesis when it is actually true.

2. Fail to reject the null hypothesis when it is actually false.

To distinguish between these two types of errors, we give them specific names.

If H0 is true, our decision is either correct (if we fail to reject H0) or is a Type I error.

If H0 is false (Ha is true), our decision is either correct (we reject H0) or is a Type II error.
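The four possible combinations of truth and decision can be laid out with a short sketch. The function name `classify_outcome` is hypothetical and exists only to make the two-by-two logic explicit. In practice we never know whether H0 is true, so this is a bookkeeping aid, not something we can compute from data.

```python
def classify_outcome(h0_is_true: bool, rejected_h0: bool) -> str:
    """Name the outcome for one combination of truth and decision."""
    if h0_is_true:
        # Rejecting a true H0 is a Type I error.
        return "Type I error" if rejected_h0 else "correct decision"
    # Failing to reject a false H0 is a Type II error.
    return "correct decision" if rejected_h0 else "Type II error"


for h0_is_true in (True, False):
    for rejected_h0 in (True, False):
        truth = "H0 true " if h0_is_true else "H0 false"
        decision = "reject H0        " if rejected_h0 else "fail to reject H0"
        print(f"{truth} | {decision} | {classify_outcome(h0_is_true, rejected_h0)}")
```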

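For the potato example, we want the truckload to have less than 8% of the potatoes with blemishes. So the hypotheses are H0: p = 0.08 and Ha: p < 0.08, where p is the proportion of potatoes in the truckload with blemishes.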

What would each type of error be?

Type I error – Reject H0 when H0 is actually true. In this case, we would conclude that the actual proportion of potatoes with blemishes is less than 8% when actually 8% or more of the potatoes have blemishes. The producer would accept the truckload and use the potatoes to make chips, so more chips would be made with bad potatoes and consumers may get upset.

Type II error – Fail to reject H0 when it is not true. In this case, we would believe that 8% or more of the potatoes in the truckload have blemishes when in fact less than 8% do. As a result, the producer would not accept the shipment, resulting in a loss of revenue for the supplier. Additionally, the producer would have to wait for another shipment of potatoes.

Recall that in Section 11.1 we tested the claim that the mean response time of paramedics to life-threatening accidents had decreased from 6.7 minutes. So we tested the hypotheses H0: μ = 6.7 minutes versus Ha: μ < 6.7 minutes.

What would each type of error be?

Type I error – Reject H0 when H0 is actually true. In this case, we would conclude that mean response times had decreased when they actually had not. As a result, more lives could be lost because response times are not fast enough.

Type II error – Fail to reject H0 when H0 is actually false. In this case, we would conclude that the mean response time is 6.7 minutes or more when it is actually less than 6.7 minutes. As a result, we will continue to try to reduce response time which may cost the city a considerable amount of money and upset the paramedics.

Which type of error is worse? There is no definitive answer because it depends on the circumstances. For example, in the perfect potato example, a Type I error could result in decreased sales for the potato chip producer. A Type II error, on the other hand, may not cause much difficulty provided the producer can get another shipment of potatoes fairly quickly. The supplier will see things differently, though. For the supplier, a Type II error would result in lost revenue from the truckload of potatoes. The supplier would prefer a Type I error, as long as the producer did not discover the mistake and seek a new

We can assess any rule for making decisions by looking at the probabilities of the two types of error. This is in keeping with the idea that statistical inference is based on asking, “What would happen if I did this many times?”

Without inspecting the entire truckload, we cannot guarantee that good shipments of potatoes will never be rejected and bad shipments will never be accepted. We can, however, use random sampling and the laws of probability to determine the probabilities of both types of error.

Suppose that the city manager decided to test using a 5% significance level.

A Type I error is to reject H0 when μ = 6.7 minutes. Since, by random chance, we would get a sample mean small enough to reject H0 in 5% of all samples when H0 is true, the probability of a Type I error is 5%, which is our significance level.
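A quick simulation can make the "5% of all samples" idea concrete. This is a sketch under stated assumptions: the population standard deviation (2 minutes) and sample size (400) are values assumed for illustration, not given in this section, and sample means are drawn directly from a Normal sampling distribution rather than from individual response times.

```python
import random
from statistics import NormalDist

MU_0 = 6.7    # hypothesized mean response time in minutes (from the notes)
SIGMA = 2.0   # ASSUMED population standard deviation, for illustration only
N = 400       # ASSUMED sample size, for illustration only
ALPHA = 0.05  # significance level chosen by the city manager


def simulate_type_i_rate(num_samples: int = 20_000, seed: int = 1) -> float:
    """Estimate how often a true H0 is rejected by the one-sided z test."""
    rng = random.Random(seed)
    standard_error = SIGMA / N ** 0.5
    z_critical = NormalDist().inv_cdf(ALPHA)  # lower-tail cutoff, about -1.645
    rejections = 0
    for _ in range(num_samples):
        # Draw a sample mean from its sampling distribution when H0 is true.
        x_bar = rng.gauss(MU_0, standard_error)
        z = (x_bar - MU_0) / standard_error
        if z < z_critical:
            rejections += 1
    return rejections / num_samples


type_i_rate = simulate_type_i_rate()
print(f"Proportion of samples that reject a true H0: {type_i_rate:.3f}")
```

The simulated rejection rate should land close to α, which is the point of the paragraph above.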

What about Type II errors? Since there are many values of μ that satisfy Ha: μ < 6.7 minutes, we will concentrate on one value. The city manager decides that she really wants the significance test to detect a decrease in the mean response time to 6.4 minutes or less. So a particular Type II error is to fail to reject H0 when μ = 6.4 minutes.

The shaded area represents the probability of failing to reject H0 when μ = 6.4 minutes. So this area is the probability of a Type II error.

The probability of a Type II error (sometimes called β) for the particular alternative μ = 6.4 minutes is the probability that the test will fail to reject H0 when μ = 6.4 minutes. This is the probability that the sample mean falls to the right of the critical value.
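The description above translates into a two-step calculation: find the critical value of the sample mean under H0, then find the chance of landing to its right when μ = 6.4. The sketch below assumes σ = 2 minutes and n = 400; those two values are illustrative assumptions, not taken from this section, and the function name is hypothetical.

```python
from statistics import NormalDist

MU_0 = 6.7     # mean under H0, in minutes (from the notes)
MU_ALT = 6.4   # particular alternative the city manager cares about
ALPHA = 0.05   # significance level
SIGMA = 2.0    # ASSUMED population standard deviation, for illustration only
N = 400        # ASSUMED sample size, for illustration only


def type_ii_probability(mu_0, mu_alt, sigma, n, alpha):
    """P(fail to reject H0) for the lower-tailed z test when mu = mu_alt."""
    standard_error = sigma / n ** 0.5
    # Step 1: sample means below this value lead to rejecting H0.
    critical_mean = mu_0 + NormalDist().inv_cdf(alpha) * standard_error
    # Step 2: probability the sample mean falls to the RIGHT of the critical
    # value when the true mean is mu_alt.
    return 1 - NormalDist(mu_alt, standard_error).cdf(critical_mean)


beta = type_ii_probability(MU_0, MU_ALT, SIGMA, N, ALPHA)
print(f"P(Type II error) when mu = {MU_ALT}: {beta:.3f}")
print(f"P(reject H0) when mu = {MU_ALT}:     {1 - beta:.3f}")
```

Changing the assumed σ or n changes β, which is why a Type II error probability is always stated for a specific alternative and a specific study design.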

Type II error probabilities are not easy to calculate. We are less concerned with calculating them than with understanding what a Type II error is.

A significance test makes a Type II error when it fails to reject a null hypothesis that really is false. A high probability of a Type II error for a particular alternative means that the test is not sensitive enough to usually detect that alternative.

Calculations of the probability of Type II errors are therefore useful even if you don’t think that a statistical test should be viewed as making a decision.

In the significance test setting, it is more common to report the probability that a test does reject H0 when an alternative is true.

This probability is called the power of the test against that specific alternative. The higher this probability is, the more sensitive the test is.

Calculations of P-values and power both say what would happen if we repeated the test many times.

Calculations of P-values say what would happen supposing the null hypothesis is true.

Calculations of power describe what would happen supposing that a particular alternative is true.

High power is desirable. Along with 95% confidence intervals and 5% significance tests, 80% power is becoming a standard. In fact, U.S. government agencies that provide research funding are requiring that the sample size be sufficient to detect important results 80% of the time using a significance test with α = 0.05.

Suppose we performed a power calculation and found that our power is too small. What can we do to increase it?

1. Increase α. A test at the 5% significance level will have a greater chance of rejecting H0 than a 1% test because the strength of evidence required for rejection is less.

2. Consider a particular alternative that is farther away from μ0. Values of μ that are in Ha but lie close to the hypothesized value μ0 are harder to detect than values farther away.

3. Increase the sample size. More data will provide more information about the population mean, so we will have a better chance of distinguishing values of μ.

4. Decrease σ. This has the same effect as increasing the sample size: more information about μ. Improving the measurement process and restricting attention to a subpopulation are two ways to decrease σ.
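The four options above can be compared numerically with a small sketch for the lower-tailed z test from the paramedics example. All baseline settings other than μ0 = 6.7 and the alternative 6.4 (that is, σ = 2, n = 100, and the changed values in each scenario) are assumptions chosen for illustration, and `power_lower_tail` is a hypothetical helper name.

```python
from statistics import NormalDist


def power_lower_tail(mu_0, mu_alt, sigma, n, alpha):
    """Power of the z test of H0: mu = mu_0 vs Ha: mu < mu_0 against mu_alt."""
    standard_error = sigma / n ** 0.5
    # Sample means below this critical value lead to rejecting H0.
    critical_mean = mu_0 + NormalDist().inv_cdf(alpha) * standard_error
    # Power is the chance of falling below it when the true mean is mu_alt.
    return NormalDist(mu_alt, standard_error).cdf(critical_mean)


# ASSUMED baseline design (sigma and n are illustrative, not from the notes).
baseline = dict(mu_0=6.7, mu_alt=6.4, sigma=2.0, n=100, alpha=0.05)

# Each scenario changes exactly one setting from the baseline.
scenarios = {
    "baseline": {},
    "1. increase alpha to 0.10": {"alpha": 0.10},
    "2. alternative farther away (6.2)": {"mu_alt": 6.2},
    "3. increase n to 400": {"n": 400},
    "4. decrease sigma to 1.0": {"sigma": 1.0},
}

powers = {
    name: power_lower_tail(**{**baseline, **change})
    for name, change in scenarios.items()
}

for name, value in powers.items():
    print(f"{name:36s} power = {value:.3f}")
```

Each of the four changes raises the power relative to the baseline, though only the sample size and measurement process are usually under the researcher's control.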