not sample

advertisement

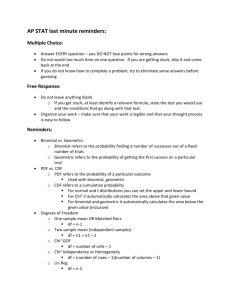

Last Time

• Q-Q plots

– Q-Q Envelope to understand variation

• Applications of Normal Distribution

– Population Modeling

– Measurement Error

• Law of Averages

– Part 1: Square root law for s.d. of average

– Part 2: Central Limit Theorem

Averages tend to normal distribution

Reading In Textbook

Approximate Reading for Today’s Material:

Pages 61-62, 66-70, 59-61, 335-346

Approximate Reading for Next Class:

Pages 322-326, 337-344, 488-498

Applications of Normal Dist’n

1. Population Modeling

Often want to make statements about:

• The population mean, μ

• The population standard deviation, σ

based on a sample from the population, which often follows a Normal distribution

Interesting Question:

How accurate are estimates?

(will develop methods for this)

Applications of Normal Dist’n

2. Measurement Error

Model a measurement as

$X = \mu + e$ (μ = true value, e = measurement error)

When additional accuracy is required, can make several measurements, and average to enhance accuracy

Interesting question: how accurate?

(depends on number of observations, …)

(will study carefully & quantitatively)

Random Sampling

Useful model in both settings 1 & 2:

Set of random variables $X_1, \dots, X_n$

Assume:

a. Independent

b. Same distribution

Say: $X_1, \dots, X_n$ are a “random sample”

Law of Averages

Law of Averages, Part 1:

Averaging increases accuracy: the s.d. of the average of n observations is smaller by a factor of $\frac{1}{\sqrt{n}}$
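The square root law can be checked by simulation. This is a minimal sketch, not from the course materials: it assumes a hypothetical N(10, 2) population, computes many averages of n draws, and compares their empirical s.d. with σ/√n.

```python
import random
import statistics

def sd_of_average(n, reps=10_000, seed=0, mu=10.0, sigma=2.0):
    """Empirical s.d. of the average of n draws from a hypothetical N(mu, sigma)."""
    rng = random.Random(seed)
    averages = [
        statistics.fmean(rng.gauss(mu, sigma) for _ in range(n))
        for _ in range(reps)
    ]
    return statistics.stdev(averages)

for n in (1, 4, 16, 64):
    # Second column: simulated s.d.; third column: square root law sigma / sqrt(n)
    print(n, round(sd_of_average(n), 3), 2.0 / n ** 0.5)
```

Each quadrupling of n should roughly halve the s.d. of the average.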

Law of Averages

Recall Case 1: $X_1, \dots, X_n \sim N(\mu, \sigma)$

CAN SHOW:

$\bar{X} \sim N\left(\mu, \frac{\sigma}{\sqrt{n}}\right)$

Law of Averages, Part 2

So can compute probabilities, etc. using:

• NORMDIST

• NORMINV
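Outside a spreadsheet, Python's standard-library class `statistics.NormalDist` offers `cdf` and `inv_cdf`, which play the roles of NORMDIST (cumulative form) and NORMINV. A minimal sketch for Case 1, with hypothetical values μ = 100, σ = 15, n = 25, so that the average is N(100, 3):

```python
from statistics import NormalDist

# Hypothetical numbers: population N(mu=100, sigma=15), sample size n=25
mu, sigma, n = 100, 15, 25
xbar_dist = NormalDist(mu, sigma / n ** 0.5)   # distribution of the average: N(100, 3)

# Analog of NORMDIST(103, 100, 3, TRUE): P{Xbar <= 103}
p_below = xbar_dist.cdf(103)
# Analog of NORMINV(0.975, 100, 3): the 97.5th percentile of Xbar
cutoff = xbar_dist.inv_cdf(0.975)
print(round(p_below, 4), round(cutoff, 2))   # 0.8413 105.88
```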

Law of Averages

Case 2: $X_1, \dots, X_n$ any random sample

CAN SHOW, for n “large”:

$\bar{X}$ is “roughly” $N\left(\mu, \frac{\sigma}{\sqrt{n}}\right)$

Consequences:

• Prob. Histogram roughly mound shaped

• Approx. probs using Normal

• Calculate probs, etc. using:

– NORMDIST

– NORMINV

Terminology: “Law of Averages, Part 2” is also called the “Central Limit Theorem” (the widely used name)

Central Limit Theorem

For $X_1, \dots, X_n$ any random sample, and for n “large”,

$\bar{X}$ is “roughly” $N\left(\mu, \frac{\sigma}{\sqrt{n}}\right)$

Some nice illustrations:

• Applet by Webster West & Todd Ogden

• Applet from Rice Univ.

• Stats Portal Applet

Central Limit Theorem

Illustration: West – Ogden Applet

http://www.amstat.org/publications/jse/v6n3/applets/CLT.html

Roll single die: histogram after 10, 20, 50, 100, 1000, 10,000, and 100,000 plays

Stabilizes at Uniform

Roll 5 dice: histogram after 1, 10, 100, 1000, and 10,000 plays

Looks mound shaped
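The dice illustration can be imitated with a short simulation. This is a sketch under assumptions, not the applet's code: it assumes the quantity plotted for 5 dice is their average in each play, and it prints crude text histograms for 10,000 plays of one die and of five dice.

```python
import random
import statistics
from collections import Counter

def dice_averages(num_dice, plays, seed=1):
    """Average of num_dice fair dice for each of `plays` plays."""
    rng = random.Random(seed)
    return [
        statistics.fmean(rng.randint(1, 6) for _ in range(num_dice))
        for _ in range(plays)
    ]

def text_histogram(values, bins=6, lo=1.0, hi=6.0, width_chars=50):
    """Print a crude histogram of values in [lo, hi] using equal-width bins."""
    bin_width = (hi - lo) / bins
    counts = Counter(min(int((v - lo) / bin_width), bins - 1) for v in values)
    for b in range(bins):
        left = lo + b * bin_width
        bar = "#" * (counts[b] * width_chars // len(values))
        print(f"{left:4.2f}-{left + bin_width:4.2f} {bar}")

one_die = dice_averages(1, 10_000)
five_dice = dice_averages(5, 10_000)
text_histogram(one_die)    # roughly flat: Uniform
print()
text_histogram(five_dice)  # mound shaped, centered near 3.5
```

The s.d. of one die is $\sqrt{35/12} \approx 1.71$, so the square root law predicts about 0.76 for the average of 5 dice.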

Central Limit Theorem

Illustration: Rice Univ. Applet

http://www.ruf.rice.edu/~lane/stat_sim/sampling_dist/index.html

Starting distribution: user input (very non-Normal)

Distribution of the average of:

• n = 2 (slightly more mound shaped?)

• n = 5 (a little more mound shaped?)

• n = 10 (much more mound shaped?)

• n = 25 (seems very mound shaped?)

Central Limit Theorem

Illustration: StatsPortal Applet

http://courses.bfwpub.com/ips6e

Density for the average of n = 1, 2, 4, 10, 30, and 100 draws from the Exponential dist’n, each shown with the best fit Normal density

• Looks “pretty good” for n = 30

• Very strong convergence for n = 100
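The shrinking skew of the Exponential averages can be put in numbers. A minimal sketch, assuming an Exponential distribution with mean 1 and using sample skewness as an illustrative (not applet-provided) measure of departure from Normal: for the average of n Exponentials, theory gives skewness $2/\sqrt{n}$, while a Normal has skewness 0.

```python
import random
import statistics

def skewness(xs):
    """Sample skewness: mean cubed deviation divided by the cubed (population) s.d."""
    m = statistics.fmean(xs)
    s = statistics.pstdev(xs, m)
    return statistics.fmean((x - m) ** 3 for x in xs) / s ** 3

def exponential_averages(n, reps=10_000, seed=2):
    """Averages of n draws from an Exponential distribution with mean 1."""
    rng = random.Random(seed)
    return [
        statistics.fmean(rng.expovariate(1.0) for _ in range(n))
        for _ in range(reps)
    ]

skews = {n: skewness(exponential_averages(n)) for n in (1, 2, 4, 10, 30, 100)}
for n, sk in skews.items():
    # Theory for the average of n Exponentials: skewness 2 / sqrt(n)
    print(n, round(sk, 2), round(2 / n ** 0.5, 2))
```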

Central Limit Theorem

How large n is needed?

• Depends completely on the setup

• If individual observations are close to Normal, a small n is OK

• But a large n may be needed in extreme cases

• Many people “often feel good” when n ≥ 30

Review earlier examples:

• Rice Univ. Applet, n = 25: seems very mound shaped (starting distribution very non-Normal)

• StatsPortal Applet, n = 30: looks “pretty good”

Extreme Case of CLT

I.e. of: averages ~ Normal, when individuals are not

$$X_i \sim \begin{cases} 1 & \text{w.p. } p \\ 0 & \text{w.p. } 1 - p \end{cases}$$

• I.e. toss a coin: 1 if Head, 0 if Tail

• Called “Bernoulli Distribution”

• Individuals far from normal

• Consider sample: $X_1, \dots, X_n$

Extreme Case of CLT

Bernoulli sample: $X_1, \dots, X_n$

(Recall: independent, with same distribution)

Note: $X_i \sim$ Binomial(1,p) (count # H’s in 1 trial)

So:

• $EX_i = p$ (recall $np$, with $n = 1$)

• $\text{var}(X_i) = p(1-p)$ (recall $np(1-p)$, with $n = 1$)

• $\text{sd}(X_i) = \sqrt{p(1-p)}$

So Law of Averages (a.k.a. Central Limit Theorem) gives:

$\bar{X}$ roughly $N\left(p, \sqrt{\frac{p(1-p)}{n}}\right)$
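The Bernoulli mean and variance can be checked directly from the two-point distribution. A small sketch using exact fractions; the function name `bernoulli_moments` is illustrative:

```python
from fractions import Fraction

def bernoulli_moments(p):
    """Mean and variance of X with P{X=1} = p, P{X=0} = 1 - p, computed from the pmf."""
    pmf = {1: p, 0: 1 - p}
    mean = sum(x * prob for x, prob in pmf.items())
    var = sum((x - mean) ** 2 * prob for x, prob in pmf.items())
    return mean, var

p = Fraction(3, 10)
mean, var = bernoulli_moments(p)
print(mean, var, p * (1 - p))   # 3/10 21/100 21/100
```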

Looks familiar?

Recall: for $X \sim$ Binomial(n,p) (counts), the sample proportion

$\hat{p} = \frac{X}{n}$

has: $E\hat{p} = E\left(\frac{X}{n}\right) = p$ & $\text{sd}(\hat{p}) = \sqrt{\frac{p(1-p)}{n}}$

Finish Connection:

• $\sum_{i=1}^n X_i$ = # of 1’s among $X_1, \dots, X_n$, i.e. counts up H’s in n trials

• So $\sum_{i=1}^n X_i \sim$ Binomial(n,p)

• And thus: $\bar{X} = \frac{1}{n}\sum_{i=1}^n X_i = \hat{p}$

Extreme Case of CLT

Consequences:

$\hat{p}$ roughly $N\left(p, \sqrt{\frac{p(1-p)}{n}}\right)$

$X$ roughly $N\left(np, \sqrt{np(1-p)}\right)$

(using $\hat{p} = \frac{X}{n}$ and multiply through by n)

Terminology: Called

The Normal Approximation to the Binomial

(and the sample proportion case)
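As an illustration of the two forms, consider a hypothetical poll with n = 100 and p = 0.5 (numbers chosen for the example, not from the course). Using `statistics.NormalDist` as a stand-in for NORMDIST, the $\hat{p}$ form and the count form give the same approximate probability, since $\{\hat{p} > 0.6\}$ and $\{X > 60\}$ are the same event:

```python
from math import sqrt
from statistics import NormalDist

# Hypothetical poll: n = 100 voters, true proportion p = 0.5
n, p = 100, 0.5
phat_approx = NormalDist(p, sqrt(p * (1 - p) / n))       # p-hat roughly N(p, sqrt(p(1-p)/n))
count_approx = NormalDist(n * p, sqrt(n * p * (1 - p)))  # X roughly N(np, sqrt(np(1-p)))

# P{p-hat > 0.6} and P{X > 60} describe the same event
prob_phat = 1 - phat_approx.cdf(0.6)
prob_count = 1 - count_approx.cdf(60)
print(round(prob_phat, 4), round(prob_count, 4))   # 0.0228 0.0228
```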

Normal Approx. to Binomial

Example: from StatsPortal

http://courses.bfwpub.com/ips6e.php

For Bi(n,p):

• Control n

• Control p

• See Prob. Histo.

• Compare to fit (by mean & sd) Normal dist’n

For Bi(n,p) with n = 20:

• p = 0.5: expect 20*0.5 = 10 (most likely); reasonable Normal fit

• p = 0.2: expect 20*0.2 = 4 (most likely); reasonable fit? Not so good at edge?

• p = 0.1: expect 20*0.1 = 2 (most likely); poor Normal fit, especially at edge?

• p = 0.05: expect 20*0.05 = 1 (most likely); Normal approx. is very poor

Normal Approx. to Binomial

Similar behavior for p ≈ 1:

For Bi(n,p) with n = 20:

• p = 0.5

• p = 0.8, (1-p) = 0.2

• p = 0.9, (1-p) = 0.1

• p = 0.95, (1-p) = 0.05

Mirror image of above

Normal Approx. to Binomial

Now fix p, and let n vary:

For Bi(n,p) with p = 0.3: n = 1, 3, 10, 30, 100

Normal Approx. Improves
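The visual comparisons above can be summarized by one number per case. As an assumed measure of fit (the applet itself is visual), the sketch below uses the largest vertical gap between the exact Binomial c.d.f. and the fitted Normal c.d.f. (mean np, sd $\sqrt{np(1-p)}$). The gap should grow as p moves toward 0 or 1, be the same for p and 1 - p (mirror image), and shrink as n grows.

```python
from math import comb, sqrt
from statistics import NormalDist

def binom_cdf_values(n, p):
    """Exact Binomial(n, p) c.d.f. values F(0), F(1), ..., F(n)."""
    total, values = 0.0, []
    for k in range(n + 1):
        total += comb(n, k) * p**k * (1 - p) ** (n - k)
        values.append(total)
    return values

def max_cdf_gap(n, p):
    """Largest vertical gap between the Binomial c.d.f. and the fitted Normal c.d.f.

    The Normal is fitted by mean np and sd sqrt(np(1-p)). The Binomial c.d.f.
    jumps at each integer k, so the gap is checked on both sides of each jump.
    """
    normal = NormalDist(n * p, sqrt(n * p * (1 - p)))
    F = binom_cdf_values(n, p)
    gap = 0.0
    for k in range(n + 1):
        below = F[k - 1] if k > 0 else 0.0   # c.d.f. just before the jump at k
        z = normal.cdf(k)
        gap = max(gap, abs(F[k] - z), abs(below - z))
    return gap

for p in (0.5, 0.2, 0.1, 0.05, 0.95):
    print(f"n = 20, p = {p}: max gap {max_cdf_gap(20, p):.3f}")
for n in (1, 3, 10, 30, 100):
    print(f"n = {n}, p = 0.3: max gap {max_cdf_gap(n, 0.3):.3f}")
```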

Normal Approx. to Binomial

HW: C20

For X ~ Bi(n,0.25), find:

a. P{X < (n/4)+(sqrt(n)/4)}, by BINOMDIST

b. P{X ≤ (n/4)+(sqrt(n)/4)}, by BINOMDIST

c. P{X ≤ (n/4)+(sqrt(n)/4)}, using the Normal Approxim’n to the Binomial (NORMDIST),

For n = 16, 64, 256, 1024, 4096.

Normal Approx. to Binomial

HW: C20

Numerical answers:

| n | 16 | 64 | 256 | 1024 | 4096 |
|---|---|---|---|---|---|
| (a) | 0.630 | 0.674 | 0.696 | 0.707 | 0.713 |
| (b) | 0.810 | 0.768 | 0.744 | 0.731 | 0.725 |
| (c) | 0.718 | 0.718 | 0.718 | 0.718 | 0.718 |

Notes:

• Values stabilize over n (since cutoff = mean + Z sd, with the same Z = 1/√3 ≈ 0.577 for every n)

• Normal approx. between others

• Everything close for larger n
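The C20 table can be reproduced outside a spreadsheet. A sketch in Python, assuming that BINOMDIST(k, n, p, TRUE) and NORMDIST(x, mean, sd, TRUE) are the spreadsheet calls the HW intends; the Binomial c.d.f. is summed in log space (via `math.lgamma`) so that n = 4096 does not overflow. Because the cutoff is an integer for these n, P{X < cutoff} = P{X ≤ cutoff - 1}.

```python
from math import exp, lgamma, log, sqrt
from statistics import NormalDist

def binom_cdf(x, n, p):
    """P{X <= x} for X ~ Binomial(n, p).

    Each pmf term is computed in log space (via lgamma), which avoids
    overflow/underflow in the binomial coefficients for large n such as 4096.
    """
    log_p, log_q = log(p), log(1 - p)
    total = 0.0
    for k in range(int(x) + 1):
        log_pmf = (lgamma(n + 1) - lgamma(k + 1) - lgamma(n - k + 1)
                   + k * log_p + (n - k) * log_q)
        total += exp(log_pmf)
    return total

p = 0.25
rows = {}
for n in (16, 64, 256, 1024, 4096):
    cutoff = n / 4 + sqrt(n) / 4           # an integer for each of these n
    sd = sqrt(n * p * (1 - p))
    a = binom_cdf(cutoff - 1, n, p)        # P{X < cutoff}, like BINOMDIST(cutoff-1, n, p, TRUE)
    b = binom_cdf(cutoff, n, p)            # P{X <= cutoff}, like BINOMDIST(cutoff, n, p, TRUE)
    c = NormalDist(n * p, sd).cdf(cutoff)  # Normal approx., like NORMDIST(cutoff, np, sd, TRUE)
    rows[n] = (a, b, c)
    print(n, round(a, 3), round(b, 3), round(c, 3))
```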

Normal Approx. to Binomial

How large n?

• Bigger is better

• Could use “n ≥ 30” rule from above (Law of Averages)

• But clearly depends on p

– Worse for p ≈ 0

– And for p ≈ 1, i.e. (1 – p) ≈ 0

• Textbook Rule: OK when {np ≥ 10 & n(1-p) ≥ 10}

Normal Approx. to Binomial

HW: 5.18 (a. population too small, b. np = 2 < 10)

C21: Which binomial distributions admit a “good” normal approximation?

a. Bi(30, 0.3)

b. Bi(40, 0.4)

c. Bi(20, 0.5)

d. Bi(30, 0.7)

(no, yes, yes, no)
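The textbook rule is easy to apply mechanically. A minimal sketch (the function name and `threshold` parameter are illustrative) that reproduces the C21 answers:

```python
def normal_approx_ok(n, p, threshold=10):
    """Textbook rule of thumb: OK when np >= threshold and n(1-p) >= threshold."""
    return n * p >= threshold and n * (1 - p) >= threshold

for n, p in [(30, 0.3), (40, 0.4), (20, 0.5), (30, 0.7)]:
    print(f"Bi({n}, {p}): np = {n * p:.1f}, n(1-p) = {n * (1 - p):.1f}, "
          f"ok = {normal_approx_ok(n, p)}")
```

Bi(30, 0.3) fails because np = 9, and Bi(30, 0.7) fails because n(1-p) = 9; Bi(20, 0.5) sits exactly at the boundary.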

And now for something completely different….

A statistics professor was describing sampling theory to his class, explaining how a sample can be studied and used to generalize to a population. One of the students in the back of the room kept shaking his head.

"What's the matter?" asked the professor.

"I don't believe it," said the student, "why not study the whole population in the first place?"

The professor continued explaining the ideas of random and representative samples. The student still shook his head.

The professor launched into the mechanics of proportional stratified samples, randomized cluster sampling, the standard error of the mean, and the central limit theorem. The student remained unconvinced saying, "Too much theory, too risky, I couldn't trust just a few numbers in place of ALL of them."

Attempting a more practical example, the professor then explained the scientific rigor and meticulous sample selection of the Nielsen television ratings which are used to determine how multiple millions of advertising dollars are spent. The student remained unimpressed saying, "You mean that just a sample of a few thousand can tell us exactly what over 250 MILLION people are doing?"

Finally, the professor, somewhat disgruntled with the skepticism, replied, "Well, the next time you go to the campus clinic and they want to do a blood test...tell them that's not good enough ... tell them to TAKE IT ALL!!"

From: GARY C. RAMSEYER, http://www.ilstu.edu/~gcramsey/Gallery.html

Central Limit Theorem

Further Consequences of Law of Averages:

1. N(μ,σ) distribution is a useful model for populations

e.g. SAT scores are averages of scores from many questions

e.g. heights are influenced by many small factors; your height is the sum of these.

2. N(μ,σ) distribution is useful for modeling measurement error

(sum of many small components)

Now have powerful probability tools for:

a. Political Polls

b. Populations – Measurement Error

Course Big Picture

Next: deal systematically with unknown p & μ

Subject called “Statistical Inference”

Statistical Inference

Idea: Develop formal framework for handling unknowns p & μ

(major work for this is already done)

(now will just formalize, and refine)

(do simultaneously for major models)

e.g. 1: Political Polls

(estimate p = proportion for A, in population, based on sample)

e.g. 2a: Population Modeling

(e.g. heights, SAT scores …)

e.g. 2b: Measurement Error

Statistical Inference

A parameter is a numerical feature of the population, not the sample

(so far parameters have been indices of probability distributions)

(this is an additional role for that term)

E.g. 1, Political Polls

• Population is all voters

• Parameter is proportion of population for A, often denoted by p

(same as p before, just new framework)

E.g. 2a, Population Modeling

• Parameters are μ & σ (population mean & sd)

E.g. 2b, Measurement Error

• Population is set of all possible measurements (from thought experiment viewpoint)

• Parameters are:

– μ = true value

– σ = s.d. of measurements

Statistical Inference

An estimate of a parameter is some function of data (hopefully close to parameter)

E.g. 1: Political Polls

Estimate population proportion, p, by sample proportion: $\hat{p} = \frac{X}{n}$ (same as before)

E.g. 2a,b: Estimate population:

• mean μ, by sample mean: $\hat{\mu} = \bar{X}$

• s.d. σ, by sample sd: $\hat{\sigma} = s$

(μ and σ are parameters; $\hat{\mu}$ and $\hat{\sigma}$ are estimates)
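Computing these estimates from data takes one line each. A sketch with hypothetical data (a 0/1 list of poll responses and a short list of measurements, both invented for illustration); `statistics.stdev` uses the n - 1 divisor of the sample sd s:

```python
import statistics

# Hypothetical poll: 1 = supports A, 0 = does not
votes = [1, 0, 1, 1, 0, 1, 0, 1, 1, 1]
p_hat = sum(votes) / len(votes)              # sample proportion X / n

# Hypothetical repeated measurements of one quantity
measurements = [2, 4, 4, 4, 5, 5, 7, 9]
mu_hat = statistics.fmean(measurements)      # sample mean, estimates mu
sigma_hat = statistics.stdev(measurements)   # sample sd s (n - 1 divisor), estimates sigma
print(p_hat, mu_hat, round(sigma_hat, 3))    # 0.7 5.0 2.138
```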

Statistical Inference

How well does an estimate work?

Unbiasedness: Good estimate should be centered at right value (i.e. on average get right answer)

E.g. 1: $E\hat{p} = E\left(\frac{X}{n}\right) = \frac{np}{n} = p$

(conclude sample proportion is unbiased, i.e. centered correctly)

E.g. 2a,b: $E\hat{\mu} = E\bar{X} = \mu$

(conclude sample mean is unbiased, i.e. centered correctly)
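Unbiasedness can also be seen by simulation: averaging $\hat{p}$ over many hypothetical polls lands close to p. A sketch, assuming p = 0.3 and polls of size n = 50 (illustrative values):

```python
import random
import statistics

def average_of_estimates(p, n, reps=20_000, seed=3):
    """Average of p-hat over many simulated polls of size n with true proportion p."""
    rng = random.Random(seed)
    # Each poll: n Bernoulli(p) responses; p-hat is the fraction of 1's
    p_hats = [sum(rng.random() < p for _ in range(n)) / n for _ in range(reps)]
    return statistics.fmean(p_hats)

avg = average_of_estimates(0.3, 50)
print(avg)   # close to 0.3
```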

Statistical Inference

How well does an estimate work?

Standard Error: for an unbiased estimator, the standard error is its standard deviation

E.g. 1: SE of $\hat{p}$ is $\text{sd}(\hat{p}) = \sqrt{\frac{p(1-p)}{n}}$

E.g. 2a,b: SE of $\hat{\mu}$ is $\text{sd}(\hat{\mu}) = \text{sd}(\bar{X}) = \frac{\sigma}{\sqrt{n}}$

Same ideas as above:

• Gets better for bigger n

• By factor of $\sqrt{n}$

• Only new terminology

Statistical Inference

Nice graphic on bias and variability:

Figure 3.14

From text

Statistical Inference

HW:

C22: Estimate the standard error of:

a. The estimate of the population proportion, p, when the sample proportion is 0.9, based on a sample of size 100. (0.03)

b. The estimate of the population mean, μ, when the sample standard deviation is s=15, based on a sample of size 25 (3)
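The C22 answers follow from plugging the sample values into the SE formulas (using $\hat{p}$ in place of the unknown p, and s in place of σ). A sketch; the function names are illustrative:

```python
from math import sqrt

def se_proportion(p_hat, n):
    """Estimated SE of p-hat: sqrt(p_hat (1 - p_hat) / n)."""
    return sqrt(p_hat * (1 - p_hat) / n)

def se_mean(s, n):
    """Estimated SE of the sample mean: s / sqrt(n)."""
    return s / sqrt(n)

print(round(se_proportion(0.9, 100), 4), se_mean(15, 25))   # 0.03 3.0
```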