CyberSEES_Proposal_2015Final

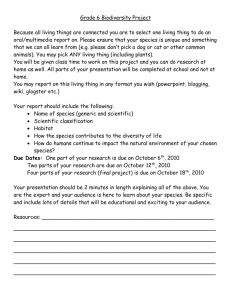

advertisement

1. VISION A paradigm shift is needed in ecosystem biodiversity research. Despite having been recognized as system-level science, the way specialists and non-specialists think about ecosystems must move from reducing the problem spaces into specialized and finer partitions, to a significantly more integrative and predictive one. In other words, the move must be from microscope to cyberscope. At the heart of such a shift is the integration of diverse and heterogeneous data, computational workflow (machine learning, etc.) combined with information and knowledge resources. However, there is more. The ability to trace, verify and trust the original data and model sources that comprise key indicators of ecosystem health and diversity has become a key requirement for technical (and societal) implementations. Building on ~15 years of demonstrated success in distributed data discovery and access, we consider that next-generation virtual observatories are now possible. We propose both a well-documented and data-enhanced biodiversity indicator pipeline and an embedded extensive computational set of analytic models – from regression, classification, and clustering to relation rule extraction (using parametric and non-parametric methods) – that are connected to incoming data streams. The result is intended to be: validated, uncertainty quantified, predictive models of marine ecosystems that will inform future indicators that cover both current state and future scenarios. These capabilities frame what we term here a virtual laboratory (VL) that will pave the way to a robust future information environment upon which the very best biodiversity science can be advanced. 2. BACKGROUND AND SIGNIFICANCE Marine ecosystems are complex and highly variable networks of interacting organisms that support roughly half of global productivity and drive major impacts on global processes, ranging from biogeochemical cycling to regulation of climate. The oceans are a common resource and the need to protect this natural resource should be a goal shared between nations to secure sustainable fisheries and other ecosystem services while promoting "blue growth" (Horizon 2020 2014). Direct economic value is associated with relatively few species that are harvested for food or other natural resources, but the sustainability of marine ecosystems and their many services depend critically on a much wider diversity of life forms that support stability, resilience, and ultimately productivity. Despite the fact that the US, Europe, and other nations have focused fisheries management on the resource itself, the vast majority of biomass and metabolic activity helping to sustain these resources is associated with microscopic plankton. These lower trophic levels include essential primary producers at the base of the food web, as well as heterotrophic bacteria, archaea, and a variety of small grazers that turn over rapidly and efficiently recycle carbon and nutrients and provide links that transfer energy to higher trophic levels, such as fish, seabirds, and marine mammals thus helping to maintain healthy and productive ecosystems. As such this proposal addresses the goals of U.S. Ocean Policy and complements the goals of the Horizon 2020 mission to build competitive and environmentally friendly fisheries and aquaculture; enhanced marine innovation through biotechnology; and crosscutting marine and maritime research. These important planktonic organisms are challenging to study and characterize because of their small size and the highly variable nature of their fluid marine habitat. Furthermore, though microscopic, they are exceedingly abundant and diverse, spanning all three domains of life, many orders of magnitude in size, and extremes of metabolism and life style. As a result there has been a long-standing tendency for biological oceanographers to specialize in relatively narrow ranges of organism types (e.g., one taxonomic group or metabolic strategy) and to develop dedicated approaches for their observation and study. On the one hand, this stove piping has been very fruitful and led to an explosion of new technologies and data streams that make this a very exciting time to be studying marine microbes and their links to higher trophic levels (e.g., Wiebe and Benfield 2003; Benfield et al. 2007; Moore et al. 2009; Sosik et al. 2010; Caron 2013). At the same time, it has led us to a current situation where large quantities of very heterogeneous data types are being produced to characterize different and interacting parts of the same system. This heterogeneity encompasses spatial and temporal scales that include a few discrete samples collected on “snap-shot” research cruises, much more synoptic regional-to-basin scales routinely accessible from sensors on earth-orbiting satellites, and highly resolved data records from 1 sensors at fixed-location ocean observatories (minutes to hours, over years to decades) and on autonomous mobile platforms (centimeters to meters, over kilometers to basins). It is also reflected in the observations produced, which can include not only traditional biological, chemical, and physical measurements (e.g., temperature, nutrients, pigments, microscope counts), but also extremely large quantities of molecular data, high-resolution images of microscopic organisms, and multi-frequency optical and acoustic images of communities and habitats. The combined quantity, breadth, and detail reflected in these datasets overwhelm the capabilities of individual or small teams of researchers to carry out conventional integration and analyses, and thus they are not yet being exploited to their full potential. The fusion of domain science, application science and informatics built on computational Cyberinfrastructure (CI) that we propose, here, is driven by not only the pressing need for more comprehensive and on-going assessments of marine biodiversity but also to know about near-future change. This need has received a great deal of recent scientific attention (e.g., Tittensor et al. 2010; Halpern et al. 2012; Duffy et al. 2013; Koslow and Couture 2013). Even national policy attention has been placed on the value of protecting and sustaining natural biodiversity as exemplified in the U.S. Interagency Ocean Policy Task Force’s final recommendations to the President in 2010, which declares that “the policy of the United States to protect, maintain, and restore the health and biological diversity of ocean, coastal, and Great Lakes ecosystems and resources” (White House 2010). Meeting these policy goals demands not only effective observation systems, but also novel and responsive modeling information systems. In ecology, the opportunity for progress in modeling via data mining and statistical machine learning for space-time series, classification and clustering has been around for a while (De'ath and Fabricius 2000; Leathwick et al. 2006; Olden et al. 2008; Kelling et al. 2009; and see the review of Elith and Leathwick 2009). Much attention has been given to species distribution models (SDMs) though scale disparities – between geographic and environmental space and their relation to sought after patterns, still present a challenge for SDMs. The conclusion of the decade-long International Census of Marine Life program (Amaral-Zettler et al. 2010; Snelgrove 2010) came with discovery of many new species, but it also unveiled large knowledge gaps (Webb et al. 2010), and highlighted the limitations of a one-time exploratory effort when many research problems and management applications demand more detailed and sustained information. The broad spectrum of societal needs led Duffy et al. (2013) to call for an enhanced and coordinated observation system that addresses requirements for spatial, temporal, and taxonomic resolution and coverage in marine systems. This call for action led directly to sponsorship via the interagency National Ocean Partnership Program of pilot projects to launch and coordinate a Marine Biodiversity Observation Network (MBON; NOPP 2013), with motivation as captured by Duffy et al. (2013): “In the same way that long-term financial health is stabilized by a diversified portfolio, ecosystem health and resilience are often enhanced by biodiversity (Schindler et al. 2010). These benefits suggest that managing systems to maintain marine biodiversity may provide a way to resolve otherwise conflicting objectives resulting from piece-meal management (Palumbi et al. 2009; Foley et al. 2010). Therefore, in addition to the direct and indirect benefits that it provides, biodiversity can be seen as a master variable for practically evaluating both the health of ecosystems and the success of management efforts. Yet, our knowledge of marine biological diversity remains fragmented, uneven in coverage, and poorly coordinated.” As pilot MBON projects in U.S. waters and anticipated coordinated actions in the North Atlantic by Canadian and European colleagues begin, there is clear opportunity to put in place a documented indicator pipeline that not only addresses the challenges associated with access to and integration of heterogeneous data, but also develops a modeling framework and capability for knowledge synthesis. 2 Figure 1. Development and implementation of ecosystem indicators involves an iterative process between scientists and stakeholders. Here, we indicate entities and activities (yellow) that will be addressed directly in the proposed Research Plan. Diagram modified from the Biodiversity Indicators Partnership (http://www.bipindicators.net). Characterizing Marine Ecosystems: Biodiversity Indicators “Tracking biodiversity change is increasingly important in sustaining ecosystems and ultimately human well-being” (Scholes et al. 2008). Ecosystem indicators are Big Data, with challenges to address source data heterogeneity, appropriate integration, and exposed provenance for derived data products. The Biodiversity Indicators Partnership (BIP: http://www.bipindicators.net) has clarified how an indicator is “based on verifiable data [and] conveys information about more than itself.” Figure 1 is a modified indicator diagram from BIP where the yellow boxes/arrows are part of the innovations proposed here and developed into the MBVL. In the BIP representation, “calculate indicators” may include data transformation, aggregation, weighting, thresholding, and modeling. This “data funnel” produces indicators; however, the meaning of a derived data product (the indicator) does not necessarily have any recognizable relationship to the meaning of the source datasets that poured into the funnel. Our collaboration will bring cyber-innovation to this process for efficiency, transparency, understanding, and discovery, link it to the modeling component (yellow box in center of Fig. 1) and develop reporting and monitoring systems (yellow box near top right) that include predictive capabilities (space and time) of structural indicators that relate to certain functions of marine ecosystems. Pre-Existing Data Science and Computational Innovations A number of factors set the stage for transformative progress in developing data integration strategies and indicators for marine biodiversity and ecosystem sustainability. An NSF-INTEROP funded project, Employing Cyber Infrastructure Data Technologies to Facilitate Integrated Ecosystem Assessment for Climate Impacts in NE & CA LME's (ECO-OP) was conceived as an interoperability initiative, strongly motivated by a national directive to develop a policy framework for a comprehensive, ecosystem-based approach to ocean resource management that addresses conservation, economic activity, user conflict, and sustainable use (also see Results of Prior). Conceived as a close collaboration among RPI, WHOI and NOAA National Marine Fisheries Service, ECO-OP's objective was to enable routine ecosystem status reports to aid forecasts and integrated ecosystem assessments. This includes impacts related to climate change and the capacity to address vulnerability, risks, and resiliency, and to develop an outcome-based process that results in informed tradeoffs and priority setting. ECO-OP has brought ocean informatics to the forefront as an essential tool for implementing the new national policy framework and advancing the capacity for science in support of ecosystem-based management for and integrated ecosystem assessments. Using IPython Notebooks, as noted below, a working report and assessment capability was developed based on the virtual observatory paradigm to integrate heterogeneous Web-based data and information collections. Semantic technologies were used to annotate datasets and the code used to generate data products for ecosystem assessment, for discovery, understanding, and re-use. 3 As part of the on-going ECO-OP project, members of our interdisciplinary team (Fox, Beaulieu) have established the technological framework for producing ecosystem assessment products while providing detailed, standard provenance information. They extended earlier foundational work (by team member Futrelle) on the Open Provenance Model (OPM), now a W3C recommendation (PROV-O), by deeply integrating it into a leading open-source Web-based scientific computing platform (IPython Notebook). The explosive popularity and shallow learning curve of the IPython Notebook presents a significant advantage over traditional scientific workflow systems in which processing steps are handled in a more opaque and rigid manner via “wrapping” and visual programming languages. In WHOI’s Ocean Imaging Informatics (OII) project, members of our interdisciplinary team (Sosik, Futrelle) have been addressing challenges associated with large, continuously updating image datasets from coastal observatories and other imaging systems. This multi-year, multi-laboratory project, including collaboration with PI Fox and several WHOI biologists, has emphasized formal interdisciplinary design and evaluation methodology and software best practices. The Imaging FlowCytobot (IFCB, see Results of Prior) Data Dashboard represents an innovative technological approach similar to NASA Jet Propulsion Lab’s “webification” effort (http://podaac-w10n.jpl.nasa.gov/). IFCB datasets, subsets, and images are made available in user-requested formats on-demand in near-real time via simple, URL-based web services (Sosik and Futrelle 2012). This approach skips the manual preparation, curation, interactive navigation and download stages often necessary with centralized, repository-based approaches in favor of direct, URL-based access to raw data, data products, and subsets. Because data is managed automatically, it is available immediately after acquisition, no extra capacity is required for copies of data, and it can be syndicated and used as part of a larger near-real-time observing system. The use of Linked Open Data allows for harvesting of datasets via traversal of metadata that semantically characterizes the relationship between data and products, as well as between data and data subsets, enabling immediate use of these semantics without prior large-scale system integration efforts involving data producers and consumers. Virtual Observatories (VOs), both as a concept (i.e. an observatory that appears to be in one “place” or has one “theme” with many instruments but is actually distributed in space, time and mode) and as implemented as a technical science infrastructure, have changed the ways that researchers and students do science today. In many fields where the concept of an observatory is familiar, they are the eScience (Hey and Trefethen 2005; Bell et al. 2009) data framework of choice. To date, there have been a variety of approaches to developing VOs starting with the original efforts in astronomy (Szalay 2001), to the substantial growth in geosciences (Fox et al. 2006; Fox et al. 2009) to genomic observatories (Davies et al. 2014). VOs and other distributed data systems offer opportunities to bring the large amounts of diverse data, both real-time and historical, to bear on biodiversity modeling and prediction via appropriate analytical tools and procedures with the results rapidly visualized and understood. Most importantly is the identification and embedding of the required application and integration tools into VO data frameworks and to the extent possible the dissemination of these capabilities into the larger marine biodiversity informatics communities (“out-of-the-box”). This leads us to propose herein the Marine Biodiversity Virtual Laboratory (MBVL) – computational capabilities built upon a virtual observatory/ or network (MBON) that we elaborate upon in the next section and Section 3. Proposed Data Science and Computational Innovations The MBVL will provide a near-realtime dashboard enabling access to raw data as it is acquired as well as data and visualizations of a variety of biodiversity indicator model runs as they are automatically applied to incoming data. Inspired by the comprehensive cross-model study of Elith et al. (2006) – see their Table 4 for implemented models in that study – we see the opportunity to include recent advancements toward hierarchical linear models, as part of an overall move from generalized linear models to generalized additive models. These would include regression and classification via k Nearest Neighbors (knn; possibly weighted) and follow-ons (facilitated by the availability of libraries of open-source implementations of parametric and non-parametric techniques); support vector machines (SVM), etc.; clustering via kmeans and entropy-based approaches (in use in the Visualization and Analysis of 4 Microbial Population Structures (VAMPS), described below); and the Genetic Algorithm for Rule-Set Prediction (GARP) approach. The investigators have strong familiarity with both the Python (numpy, scipy) and Gnu-R library suites and will bring this experience to bear in this project. Notably Our 'empirical model' use case would address Elith and Leathwick’s (2009) challenge "improvement of methods for modeling presence-only data"; and Our 'mechanistic model' use case would address "linkages between SDM practice and ecological theory are often weak". Thus in addition to innovations surrounding a documented and enhanced biodiversity indicator pipeline (see above), we will survey relevant analytic models to provide state descriptions and predictive capability. We will successively implement each of these models inclusive of the current the computational framework used for the IFCB and proposed indicators pipeline. Each time new data becomes available (see above: all the way from near-real time to weekly, monthly, etc.) we will trigger new model runs automatically. Then, in the spirit of VAMPS and the IFCB dashboard we will push the description models to the MBVL dashboard and alert researchers in the project as well as selected community network (see collaboration and network convening plan) members. The detail of such capabilities will be fully detailed in use cases developed during the project, as provide the basis for evaluation (see Section 4) and increasing the value of the models developed (Fig. 2). Since validation is one means of model evaluation we are able to include both science validation via research review, via the dashboard but will also provide both the standard statistical approaches (e.g. kfold cross validation), and explore and conceive of hybrid forms, i.e. science-based and statistical validation with the goal of quantifying means for model selection. Remaining on our horizon, but outside the scope for this project, is uncertainty quantification of data, predictors and models via Bayesian methods – we do not elaborate further here. Figure 2. General motivation of the value chain for analytics (from Stein 2012; http://steinvox.com/blog/wpcontent/uploads/2012/10/Analy ticsValueChain3.png) Advancing Science Understanding and Predictability of Biodiversity in Marine Ecosystems We are poised to substantially improve our ability to characterize, assess and understand marine biodiversity using leading edge cyberinfrastructure and informatics-based innovation. The assembled team has demonstrated collaborative experience and success in each of the key and intersecting areas needed for success in this project. The proposed MBVL is directly aimed at advancing understanding and prediction of biodiversity in marine ecosystems for all stakeholders. 3. RESEARCH PLAN Our research plan develops the informatics solutions to enable the generation and documentation of biodiversity indicators, providing the direct link between data and information to be used for management and policy decisions for sustainable ecosystems. We expect that our cyber-innovation will be of interest to international groups including the Convention on Biological Diversity (CBD), the Group on Earth Observations Biodiversity Observation Network (GEO BON), and the Global Ocean Observing System (GOOS). Our research plan includes developments in the domain sciences of biological oceanography and computer & information sciences and a strategy for end user application. We will use a strategy that 5 achieves specific scientific and management outcomes in a context of solutions that promote broader impacts. Objectives described below include: 1) advancing solutions that overcome accessibility issues for heterogeneous, distributed data types; 2) promoting a network effect through Web-based methods and open-source workflow tools for product generation; 3) Implementing specific use cases to test model development; 4) Traceable Product workflows, 5) Development of Knowledge Base for Indicators; and 6) additional broader impacts, including education and workforce development. Figure 3. The key components of Rensselaer’s unique iterative development methodology. To meet these objectives, we will utilize an informatics methodology to build the MBVL capabilities by leveraging existing technical infrastructure using the iterative technology development approach created by Rensselaer’s Tetherless World Constellation (see Fig. 3; Fox and McGuinness 2008 - http://tw.rpi.edu/web/doc/TWC_SemanticWebMethodology). Importantly, this method was used for both the ECO-OP and OII projects introduced above – indicating that Fox, Beaulieu, Sosik and Futrelle have a highly collaborative relationship over the last 3-4 years. This also means that 4 of the 5 main investigators on this project have used the method successfully together. The proposed project thus will also be a highly collaborative activity. At the heart of this method is the identification and articulation of use cases. A use case is a methodological means used in system analysis to identify, clarify, and organize system requirements. The use case is made up of a set of possible sequences of interactions between systems and users in a particular environment and related to a particular goal. Science use cases have science goals, and science terminology (see below). The use case should contain all system activities that have significance to an intended user, in this case biologists and computer scientists. Use cases can be employed during several stages of informatics application development, such as planning requirements, validating design, and testing software. A use case (or set of use cases) has the following characteristics: Organizes functional requirements Models the goals of system/actor (user) interactions Records paths (called scenarios) from trigger events to goals Describes one main flow of events (also called a basic course of action), and possibly other ones, called exceptional flows of events (also called alternate courses of action) Is multi-level, so that one use case can use the functionality of another one Identifies vocabulary relevant to the end user, which then defines the required semantic representation Through success criteria, defines metrics upon which the value of the use case outcome is assessed 3.1 Objective 1) The Virtual Laboratory: Data Access and computational infrastructure Accessing observational data that are in a variety of formats, with a variety of protocols, is the first challenge in producing indicators from heterogeneous, distributed data types. Although referring mainly to terrestrial data, Scholes et al. (2008) recognized, “The problem lies in the diversity of the data and the fact that it is physically dispersed and unorganized.” In this project we target data that are already online, in various states of accessibility, including from web-based user interfaces or automated via web services. We will access biodiversity data for the NES LME, with initial focus on a smaller region near the 6 Martha’s Vineyard Coastal Observatory (MVCO), at the intersection of the Georges Bank and MidAtlantic Bight Ecological Production Units. Thus MVCO becomes an integral component of the MBVL. To construct lower trophic level indicators, we will access data for a variety of planktonic functional groups, including bacteria, phytoplankton, and other eukaryotic microbes. Our target data types are a) genetic sequence data, b) image data for phytoplankton, c) environmental data including water temperature from the MVCO. We chose these data types to hit the “3 V’s of Big Data” - Variety, Velocity and Volume (see Pinal 2013). On-going research in the region has demonstrated the power of new approaches for biodiversity assessment that include enhanced automation and exploit genetic, optical, and other approaches. Here we consider three very different means by which organisms are classified so that information can be determined for biodiversity as expressed by richness (i.e., the number of species or species equivalents such as Operational Taxonomic Units (OTUs) of classified organisms) and evenness (i.e., the relative proportion per sample of counts in each category). These data traditionally are counts of individuals assigned to species. 3.1.a) Next-Generation microbial DNA sequence data We will access large volumes of highly-contextualized, metadata-rich DNA marker gene sequence data available from the Visualization and Analysis of Microbial Population Structures database (VAMPS, vamps.mbl.edu; Huse et al. 2014) developed and maintained by co-PI Mark Welch. VAMPS hosts nearly 1000 projects encompassing more than 25,000 datasets and over 400 million sequence tags. Extensive metadata compliant with current genomics community standards (MIxS: Yilmaz et al. 2011) are available for many samples. The MIxS standard adds a checklist for uncultured diversity marker gene surveys with sets of measurements describing particular habitats, termed ‘environmental packages’. This provides a rich environmental context for interpreting microbial diversity data. For this project we will leverage the eukaryotic, bacterial, and archaeal data collected through the International Census of Marine Microbes (ICoMM) and the Microbial Inventory Research Across Diverse Aquatic-Long Term Ecological Research (MIRADA-LTERS). The Sloan-funded ICoMM project coordinated a massively parallel tag sequencing survey of bacterial, archaeal, and eukaryotic rRNA sequence tags from more than 700 samples from around the world collected as part of 40 different projects. The NSF-funded MIRADA-LTERS collected similar data from the eight marine LTER sites. In addition, a three year time series (~monthly sampling) of prokaryotic rRNA sequence tags from MVCO is already included in VAMPS, and the Sosik lab will extend that time series and contribute a complementary multi-year dataset for eukaroyotic sequence tags (sampling and sequencing separately funded by on-going projects). VAMPS is an NSF supported database-driven website that allows researchers using data from massivelyparallel sequencing projects to analyze the diversity of eukaryotic, bacterial, and archaeal microbial communities and the relationships between communities; to explore these analyses in an intuitive visual context; and to download analyses and images for publication. Sequence data is quality filtered and assigned to both taxonomic structures and taxonomic-independent clusters, which can then be linked to metadata and compared using a wide variety of analytical and visualization tools. Each result is extensively hyperlinked to other analysis and visualization options, promoting data exploration and leading to a greater understanding of data relationships. Analysis tools include all major alpha diversity estimators and beta diversity metrics, Principal Component Analyses linking sequence and metadata, and integrated QIIME tools such as Unifrac. In the work proposed here, we will augment these tools with Hill's Diversities, recently argued to be independent of sampling effort and robust to the presence of rare species (Haegeman et al. 2013), and new statistical estimators of alpha and beta diversity developed by VAMPS collaborators (Bunge 2013; Willis 2014). Additionally we will calculate bacterial indicator taxa for subsets of our data that coincide with different biomes. Indicator Species Analysis (ISA; Peck 2010) as implemented in the R package indicspecies (R Development Core Team 2008) can be used to test hypotheses that different OTUs define different "healthy" states of marine environments based on constancy and abundance in a given group. We will explore two approaches for calculating this indicator, Dufrêne and Legendre (1997) and Tichý and Chytrý (2006). 7 While VAMPS provides sophisticated algorithms for taxonomic assignment (Huse 2008) and for clustering sequences based on percent similarity (Huse 2010) both approaches often lack the sensitivity needed to discern significant patterns of community variation. Therefore VAMPS is incorporating oligotyping and minimum entropy decomposition, two new approaches that use Shannon entropy to discriminate between information-rich nucleotide positions in a dataset and stochastic variation due to sequencing error and other sources of noise (Eren 2013, 2014). Oligotyping with single-nucleotide resolution was recently used to detect temporal phase transitions of a microbial community, discriminating taxa that likely interact synergistically or occupy similar habitats from those that likely interact antagonistically or prefer distinct habitats (Mark Welch 2014). These patterns were not apparent in an earlier study based on taxonomy and clustering (Caporaso 2011). Oligotyping has also been used to study the association of bacterial communities with haptophyte blooms (Delmont 2014) and to distinguish members of Vibrio communities across marine habitats (Schmidt 2014). The information theory-guided minimal entropy decomposition algorithm enables sensitive discrimination of closely related organisms without relying on extensive computational heuristics and user supervision, but its efficacy has yet to be fully established. 3.1.b) Image data for phytoplankton As the primary producers at the base of the food web in the ocean, phytoplankton are already recognized as an Essential Climate Variable (ECV; UNESCO 2012b), and their designation as an Essential Ocean Variable (EOV) is presently being considered by GOOS. Biodiversity data for phytoplankton have been specifically requested for inclusion in the US Integrated Ocean Observing System (IOOS); for instance, “phytoplankton species and abundance” is included in some long term regional plans (IOOS 2011). Phytoplankton are microscopic algae whose diversity is traditionally observed with labor intensive and time consuming microscopy. To meet modern observatory needs for spatial, temporal, Figure 4. Snap shot of the web-based IFCB Data Dashboard (http://ifcband taxonomic resolution, co-PI Sosik data.whoi.edu/) showing the time series navigation tool (top) and a mosaic of images (phytoplankton, microzooplankton, and detritus) from a single time and her colleagues have developed an series sample selected by the user. From Sosik and Futrelle (2012). underwater microscope and imaging system specifically optimized for analysis of phytoplankton (Olson and Sosik 2007; Sosik et al. 2010). The Imaging FlowCytobot (IFCB) is now commercially available (from industry partner McLane Research Laboratories, Inc.) and has been deployed at a number of observatory locations for months to years (e.g., Sosik et al. 2010; Campbell et al. 2013). The resolution of images collected by IFCB is high enough (~1 m) that most microphytoplankton can be identified to genus or species level (Sosik and Olson 2007). Since 2006, large volumes of image data (>500 million images) have been acquired at MVCO in a nearly continuous time series. The number of images produced demands automated image analysis and taxonomic classification (Sosik and Olson 2007; Moberg and Sosik 2012) and presents access and analysis challenges for researchers. To address this team member Futrelle (in collaboration with co-PI Sosik) has developed an open-source Web-based data service providing both visualization of near8 realtime IFCB data and machine-readable services for accessing raw images, metadata, and derived products in a variety of standard formats and protocols including Linked Open Data (Sosik and Futrelle 2012) (Fig. 4). In addition, the production of key products (image segmentation, extraction of image features) is automated and runs in near-realtime as images are acquired from the environment. We expect that this existing work, particularly the work on Linked Open Data formats, will ease the integration of this important high-volume dataset into the proposed MBVL. The IFCB Dashboard (Fig. 4) provides links to URLs accessing results ranging from each individual full resolution image (including metadata), to all images in a sample bin (zip compressed), or metadata for an entire sample bin (in various formats, e.g., HTML, XML, RDF). This specific sample can be viewed in the dashboard at http://ifcb-data.whoi.edu/mvco/IFCB5_2012_028_223654.html. 3.2 Objective 2) Data products The derived data products will include time-series of presence/absence data and abundance data (when possible) for bacterial taxa, as distinguished by OTUs and oligotyping, and eukaryotic taxa including phytoplankton species. Our data products will conform with GEO BON Essential Biodiversity Variable (EBV) Class “Community Composition,” and specifically, subclass “Taxonomic diversity” (see GEO BON 2013). We will distinguish community composition over time as the bacterial community, the eukaryotic phytoplankton community, and the combined microbial community. Some of these taxa may be indicator species (e.g., toxic or harmful algal species). Our computational infrastructure will allow for the construction of composite biodiversity indicators for the bacterial and eukaryotic taxa, separately and combined, such as species richness and other diversity indices recommended by the U.S. IOOS (IOOS 2011) and the international Biodiversity Indicators Partnership. 3.3 Objective 3) Implementing specific use cases to test model development For the model development, we will conduct a formalized iterative process of interaction between informatics experts, observational scientists, and end users as described by Fox and McGuinness (2008). This approach emphasizes small interdisciplinary teams containing information technologists, scientists, and other end users, working together in a highly structured process to design, prototype, and evaluate use cases and candidate technical implementations. The approach allows the team to iteratively converge on solutions that meet high-priority end user goals, while leveraging as much existing technology and technical efficiency as possible. Several of the co-PIs have worked extensively together to utilize this approach successfully in other informatics efforts (i.e., Fox and Beaulieu for ECO-OP project described in Results of Prior below, and Sosik and Futrelle in developing the IFCB Data Dashboard). We will identify a small but diverse set of targeted biodiversity indicators that will demonstrate the generality and scalability of the approach (e.g., across a range of taxa and frequencies of observations). We will evaluate two use cases, involving two very different types of modeling, to develop our computational infrastructure in 3.1 and data products in 3.2. We will examine an empirical model (3.3.1), based on statistical analyses of the observed data for temporal patterns and correlations, and a mechanistic model (3.3.2) based on mathematical relationships hypothesized for a subset of the observed data. 3.3.1 Empirical modeling use case. Our goal in the empirical modeling use case is to develop a computational infrastructure to enable modeling of changes in community composition with time. An ultimate goal would be to develop a platform that would enable analysis and modeling of our ocean microbial time series in an approach similar to how such changes are modeled for gut microbiomes (e.g., Stein et al. 2013). In the most general case, which is true for our data, the intra- and inter-specific interactions are not known. This use case will utilize high-throughput sequence data and phytoplankton imagery data to produce a composite time series of microbial community composition to explore for patterns / correlations in the context of environmental parameter time series. A strength of this approach is that it will enable a wide range of exploratory investigations; to be sure that use case development is appropriate focused, however, we will initially target the specific objective of elucidating patterns of occurrence among diatom taxa and bacterial oligotypes in the MVCO time series. Evidence is growing 9 concerning the potential ecological and biogeochemical implications of interactions between diatoms and bacteria, but to date most inferences come from laboratory studies that may have limited ecological relevance (e.g., Grossart et al. 2005; Gärdes et al. 2011). To set the stage for refined hypotheses, there is an immediate need for quantitative analysis of complex co-occurrence patterns in nature. 3.3.2 Mechanistic modeling use case. Our goal in the mechanistic modeling use case is to develop a platform that will enable the testing of hypotheses on links between community structure, trophic transfer, and functioning of the ecosystem. The platform will be flexible enough to explore a variety of hypotheses and models. To focus initial use case development, we will target an example model system that involved the interplay between a phytoplankton species host, a parasite, and environmental conditions (e.g., water temperature) at MVCO. This system has already been described in sufficient detail (Peacock et al. 2014) to construct a semi-analytical model to hindcast abundance patterns of the interacting species, as well as set the stage to quantify implications for trophic transfer and to forecast impacts under climate change scenarios. This use case will utilize a subset of high-throughput sequence data (i.e., parasite abundance) and a subset of phytoplankton imagery data (i.e., host abundance), and selected environmental parameters (i.e., temperature, light), and a hypothesis for parasite-host-temperature interactions in a model (tuned with existing data) and predict species abundance (based on predictions for environmental parameter). 3.4 Objective 4) Traceable Product workflows Objective 2 includes the transformation of observational data (accessed in Objective 1) into separate data products for bacteria, phytoplankton, and other eukaryotic microbes, fulfilling a key concept of the GOOS Framework for Ocean Observing – transforming observational data into EOVs (UNESCO 2012a). Objective 2 also includes the calculation of a composite indicator for lower trophic level, pelagic biodiversity in the NES LME. These products will be generated with automated, flexible, open-source, documented, and reproducible workflows. Our system will enable the pulling of data from multiple access points into an open-source, extensible platform enabling the generation, analysis, and modeling of biodiversity products, with tools and techniques that enable the following: use of existing analysis code in a variety of widely-used languages (e.g., MATLAB, R, Python); end-to-end documentation of procedures; reproducibility via Web-based sharing of workflows; and interoperability of these workflows with standard data access methods. We will leverage the approach successfully developed in current informatics work in collaboration with the Ecosystem Assessment Program at NOAA’s NEFSC (ECO-OP; Beaulieu et al. 2013). In particular, we will build on new methods to automatically produce components of the NEFSC’s regularly updated Ecosystem Status Report for the NES LME (Di Stefano et al. 2012). In this approach, the open-source IPython Notebook system (Pérez and Granger 2007) is used to develop, document, execute, and publish workflows based on researcher-contributed code (which need not be written in Python but can be written in MATLAB, R, and a variety of other languages via IPython’s extensible support for multiple languages and external code). In contrast with traditional workflow systems (either ad hoc or highly structured, e.g., Kepler), these “notebook” workflows provide a more transparent approach where each snippet of code involved in product generation is visible to the researcher during development and after publication. Notebooks can be downloaded, shared, modified, and re-executed via a simple Web-based interface. In addition, visualization of intermediate and final products is deeply integrated into notebooks, greatly facilitating evaluation and iterative development. This approach has been demonstrated in the ECO-OP project to document provenance for data products and enable code-sharing, even for users unfamiliar with developing complex, automated workflows. The approach provides a level of reproducibility that exceeds NOAA’s Information Quality Guidelines. While relatively new, the IPython Notebook is experiencing rapid adoption in the scientific community and is likely to become a standard researcher tool offering a no-cost alternative to large, proprietary systems such as MATLAB, while providing numerous interoperability mechanisms to support use of existing analytic codes and procedures (Pérez 2013). IFCB imagery has already been successfully integrated into IPython Notebook, enabling similar workflow outcomes as currently supported by technologies that are much more challenging to configure 10 and maintain. For example, we have already demonstrated a prototype version of IFCB’s image segmentation workflow in an IPython Notebook [http://nbviewer.ipython.org/gist/joefutrelle/9898646]. We propose to combine this existing work with the integration of standard provenance information into the IPython Notebook, as protoyped in the ECO-OP project, to enable semantic interoperability between IFCB images, derived products, and the use of these products in indicators. The separate bacteria and phytoplankton biodiversity products can retain the temporal resolution and duration of their respective underlying observational data. However, the composite indicator can only be calculated for the overlapping time interval, and some aggregation will be necessary to match the time series with coarsest resolution. 3.5 Objective 5) Development of Knowledge Base for Indicators/ Implementation of Linked data standards for workflow (machine-readable); RPI will lead the implementation of the Linked Data framework for production of ecosystem indicators, including provenance capture, utilizing resulting experience from the Global Change Information System (http://tw.rpi.edu/web/project/GCIS-IMAP (PI Fox) and ECO-OP (Fox, with co-PI Beaulieu and NOAA partners Fogarty and Hare) ontologies. In this case, Linked Data represents an open knowledge base of facts/ assertions about the world – e.g. indicators and the underlying data, assumptions and limitations. Further, an inference engine can reason about those facts and use rules and other forms of logic to deduce new facts or highlight inconsistencies (Leadbetter et al. 2013). Essentially, in this Objective, we fulfill a key concept of the GOOS Framework for Ocean Observing: transforming “EOVs into information… to serve a wide range of science and societal needs and enable effective management of the human relationship with the ocean” (UNESCO 2012a). Candidate use cases include those put forward by researchers who want to compare patterns of change in space and time for biodiversity (from genetic to functional) across many taxonomic levels, where scales of interest include seasonal, inter-annual, and multi-year (ultimately to decades), and local to regional (ultimately basins to global). The use cases will provide exemplar successes for the provenance, understanding, and re-usability of data shared by marine scientists to aid decisions on management of marine ecosystems. We will also identify end-users of the indicators in the regional IOOS and NOAA communities and international ICES working groups, engaging them in the evaluation of each iteration. 3.6 Objective 6) Broader Impacts The cyber-innovation we propose will be designed for sustainability, emphasizing demonstration with capability for extension, scale up, and diverse adoption by specialists and non-specialists alike. End results will be applicable far beyond the target data types identified for development and evaluation. We will use a strategy that achieves specific scientific and management outcomes in a context of solutions that promote broader impact. The outcome will be a "win-win" for science and policy. Policy makers want to make informed decisions, and scientists want to know how their data are being used. In the following sections, we describe more fully additional broader impacts of this project with respect to a) Education and workforce development; and b) National; c) International; and d) Industry partners. 3.6.a) Education and workforce Rensselaer’s Data Science and Informatics curriculum offerings (now in their 7th year) are currently embedded in four degree programs in the School of Science (Geology, Environmental Science, Computer Science and IT and Web Science (ITWS)). Several students are completing degrees in the MultiDisciplinary Sciences program and RPI has budgeted support for one of these students on this project. PI Fox teaches in all of these programs and is director of the ITWS program. Fox teaches Data Science, Informatics and Data Analytics. The RPI-WHOI memorandum specifically includes education and personnel exchanges and adjunct arrangements, which we will continue to leverage in this project. Additional links between this project and undergraduate and graduate education include incorporation of some of the tools into the Marine Biodiversity and Conservation SEA Semester 11 (http://www.sea.edu/voyages/caribbean_latespring_studyabroadprogram ) at the Sea Education Association in Woods Hole, the MBL Semester in Environmental Science (co-PI Mark Welch), and into the MIT/WHOI Joint Program course in Biological Oceanography and topics courses (co-PIs Sosik and Beaulieu). This curriculum has been delivered over many years and provides opportunities for participation in workshops, as well as student mentoring to take advantage of the tools we will be developing. Models and workflows developed by the project will be presented by Sosik and/or Beaulieu in the MBL summer course Strategies and Techniques for Analysis of Microbial Population Structures (co-directed by Mark Welch), which trains ~60 graduate students, postdocs, and independent investigators every year. WHOI promotes recruitment, including focus on ethnic minorities, into science and engineering at the undergraduate level, through its Minority Fellowship, Summer Student Fellowship and Partnership in Education Program. These long-standing and successful programs attract many highly qualified applicants each year for summer research internships. The PIs have a track record of teaching and sponsoring and advising students in these programs, often resulting in outcomes such as authorship on peer-reviewed publications and transition into competitive graduate programs in oceanography. During this project, we will actively recruit undergraduates from these programs to participate in the MBVL development and evaluation. The experience gained from these kinds of interactions cannot be replaced by other forms of training and will contribute to a new generation of scientists capable of working with coupled state-of-the-art ocean observation systems and virtual laboratory technologies. 3.6.b) National agency partnership Marine resource management is iterative and uses the best available science. The outcome of this proposal will improve the provision of biodiversity information for use in marine resource management. The focus here in building the capacity to provide biodiversity information is fundamental to using this type of information in management decisions. As an example, the IEA process is iterative, repeating on a cycle to provide updated information on the status of an ecosystem and the drivers, pressures, and states. The proposed work will build the informatics infrastructure that would also support an IEA in the U.S. Northeast large marine ecosystem, as well as support numerous other tools that are under development under the auspices of Ecosystem-Based Management. There is an accompanying letter of support from NOAA. Kane (2011) identified links between the diversity of phytoplankton and zooplankton in the NES LME. However, links between the diversity of lower and higher trophic levels (i.e., fishes, mammals, seabirds) have not been examined explicitly. As part of the 2014-2016 plan for the NES LME Integrated Ecosystem Assessment (IEA), the NEFSC will be developing a regional Ocean Health Index (OHI; see Letter from Fogarty and Hare). Biodiversity is one of the ten goals for which indicators are included in the composite OHI (Halpern et al. 2012). The OHI’s biodiversity goal includes a species sub-goal, which presently only includes data for species on the IUCN’s (International Union for the Conservation of Nature) Red List of Threatened Species. Marine species are also included in the OHI’s “Sense of Place” goal in the sub-goal “Iconic Species,” and invasive species are a component of the “species pollution” pressure (Halpern et al. 2012). For pelagic biodiversity, the main taxonomic groups included in the OHI are at higher trophic levels (i.e., fishes, reptiles, mammals) (Halpern et al. 2012). 3.6.c) International Our cyber-innovation will be of interest to international groups including the Group on Earth Observations Biodiversity Observation Network (GEO-BON) (Scholes et al. 2008), the Convention on Biological Diversity (CBD), and the Global Ocean Observing System (GOOS). In operational settings, the Global Environmental Forum is paying close attention to the characterization and management of LMEs at the ecosystem level and our outcomes will bear directly on the factors they consider in managing international waters. GOOS recognizes the importance of biodiversity and has called for phytoplankton and zooplankton EOVs (UNESCO 2012a) in support of several international agreements including the 12 CBD and the United Nations Framework Convention on Climate Change (UNFCCC). We note that EOVs (Gunn 2012) overlap with Essential Biodiversity Variables (EBVs) and ECVs (UNESCO 2012b). 3.6.d) Industry – See section 3.1.b, Management and Collaboration Plan, and Facilities for details. 4. EVALUATION PLAN Our accumulated experience and numerous formal assessments (early projects - McGuinness et al. 2007 as well as for ECO-OP and OII) have convinced us that evaluation studies are essential for a robust approach to evolve capabilities of a combined knowledge and software system for this project. To provide a more formal basis, we draw on the work of Twidale et al. (1994) to define evaluation studies consisting of several components with an orientation toward software. The baselines for such assessments will be captured via qualitative and quantitatively metrics when the use cases are detailed. Key attributes of the assessment in general terms are: overall effectiveness compared to current implementations, cost-benefit analysis of adoption, fulfillment of a specification and/ or purpose, superiority to an alternative, generalizable results, acceptance and continued use by other researchers, failure modes, relative importance of inadequacies. Each of these attributes will be made specific for each objective in this proposal (see Section 3). The array of techniques that can be used (according to a number of orthogonal dimensions) adds to the complexity of the evaluation task. The relevant dimensions are: summative ↔ formative quantitative ↔ qualitative controlled experiments ↔ ethnographic observations formal and rigorous ↔ informal and opportunistic Since we will utilize the Informatics Development methodology (Fig. 2; Benedict 2007; Fox et al. 2009; Fox and McGuinness 2014; Ma et al. 2014) in this work, we propose to conduct a series of formative evaluations throughout the first half of the development cycle at team meetings. These evaluations will provide direct feedback for development iterations. This has proven to be very effective in numerous recent projects. For example the IFCB Data Dashboard has gone through several stages of evaluation using this methodology, resulting in major improvements in usability that have made it a tool that is used daily in multiple laboratories and is becoming a key component of the IFCB data platform. Following formative evaluations and prototyping work, we will then formulate a set of evaluations that are summative, mostly quantitative, and formal but not in controlled situations since virtual laboratories and data and modeling capabilities proposed are considered to operate in the ’wild’, or in real, often unplanned use; so contrived studies are not realistic. The evaluation reports, with recommendations, support continuous project improvements. A final summative report will document progress toward program goals, highlighting effective strategies, and will provide recommendations for sustaining, improving, and replicating practices. We plan to leverage our collaborative relationship with Prof. Carole Palmer (University of Washington, and formerly of the Graduate School of Library and Information Sciences at UIUC’s Center for Informatics Research in Science and Scholarship). Dr. Palmer has extensive evaluation experience and a strong background in Science, Technology, Engineering, and Mathematics programs (e.g. her recent sitebased study of collaborations among geochemists in Yellowstone National Park). The evaluation design for this project involves both qualitative and quantitative methods, with dual goals of yielding 1) evidence of the impact of the project and 2) formative evaluation information to highlight successes and areas for improvement and to document variables associated with the greatest impact. Outcomes will be measured with a combination of data gathering processes, including surveys, interviews, focus groups, document analysis, and observations that will yield both qualitative and quantitative results. Evaluation questions (with metrics) will determine to what extent MBVL activities: 13 1. Enhance end-user access to and use of data to advance science and application questions (measured in terms of surveys and qualitative and sustained improvement for the majority of users)? 2. Enable the development of biodiversity indicators that are sufficiently robust and traceable that they can be used in science ecosystem assessments (quantitatively assessed internally in the MBVL)? 3. Contribute to the development and support of community resources including predictive models (with functional relevance) of biodiversity for specific marine ecosystems to other researchers. 4. Incorporate researcher experiences in the redesign and development cycles of MBVL (explicit in the informatics methodology; formative and summative, directly guiding iterations and improvements)? 5. Lead to implications for available data sources and their sampling approaches, and increased institutional collaboration activities (surveys / rates of adoption at the end of 3 years)? 6. Overcome factors that impede or facilitate progress toward the MBVL vision and objectives (interviews and observation)? Makes progress toward what a sustainable and scaled up MBVL might be. 14