A Unified Approach to

Deterministic Encryption:

New Constructions

and

a Connection to

Computational Entropy

Benjamin Fuller Adam O’Neill Leonid Reyzin

Boston University

& MIT Lincoln Lab

Boston University

August 16, 2012

Work from Theory of Cryptography Conference (TCC) 2012

1

Public Key Encryption (PKE)

m

Enc

$

c

PK

Need randomness to achieve semantic security

2

Public Key Encryption (PKE)

What can be achieved without randomness?

m

$

Enc

c

PK

3

Why deterministic PKE?

• The question of deterministic stateful symmetric key

prg – pseudorandom generator

encryption is well understood:

Key:

Messages:

Encryption:

Each bit appears random to

k

bounded distinguisher

m1, …, mn

pad1 || … || padn = prg(k)

ci = padi ⊕ mi

• Deterministic PKE is more difficult but has important applications:

– Supporting devices with limited/no randomness

– Enabling encrypted search

– E.g. Spam filtering by keyword on encrypted email
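The stateful symmetric scheme on this slide (stretch the key with a prg, then XOR each pad block into the matching message) can be sketched as follows. This is a minimal illustration, not the construction from any cited paper: the function names are hypothetical, and SHAKE-256 is used only as a stand-in for the prg.

```python
import hashlib

def prg(key: bytes, out_len: int) -> bytes:
    """Stand-in PRG: expand `key` to `out_len` bytes with SHAKE-256 (an assumption)."""
    return hashlib.shake_256(key).digest(out_len)

def encrypt_all(key: bytes, messages: list) -> list:
    """Stateful, deterministic encryption: c_i = pad_i XOR m_i."""
    pad = prg(key, sum(len(m) for m in messages))
    ciphertexts, offset = [], 0
    for m in messages:
        pad_i = pad[offset:offset + len(m)]      # each pad byte is used exactly once
        ciphertexts.append(bytes(a ^ b for a, b in zip(pad_i, m)))
        offset += len(m)
    return ciphertexts

def decrypt_all(key: bytes, ciphertexts: list) -> list:
    """XOR is its own inverse, so decryption re-applies the same pads."""
    return encrypt_all(key, ciphertexts)
```

No fresh randomness is consumed per message; the state (the offset into the pad) plays that role, which is exactly what a stateless public-key scheme lacks.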

4

Deterministic PKE

• PKE scheme where encryption is deterministic

– Introduced by [BellareBoldyrevaO’Neill07]

• Need source of randomness

messages are only hope

• Security defined w.r.t. high min-entropy message distribution M

– H∞(M)≥ μ

for all m, Pr[M=m] ≤ (1/2)^μ

• Even most likely message is hard to guess:

Network packet w/ fixed header

All Strings of Even Parity

U conditioned on a high probability event

– Message distribution must be independent of public key

• An approach: fake coins to chosen plaintext-secure (CPA) scheme

[BellareBoldyrevaO’Neill07, BellareFischlinO’NeillRistenpart08]
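To make the min-entropy condition concrete, the sketch below computes H∞ for two of the example distributions named on this slide. The distributions are small toy instances chosen here for illustration (8-bit strings, a 4-bit header); they are assumptions, not parameters from the talk.

```python
import math
from itertools import product

def min_entropy(dist: dict) -> float:
    """H_inf(M) = -log2 max_m Pr[M = m]."""
    return -math.log2(max(dist.values()))

n = 8
# Uniform over all n-bit strings of even parity: 2^(n-1) equally likely messages.
even_parity = [bits for bits in product((0, 1), repeat=n) if sum(bits) % 2 == 0]
M_parity = {bits: 1 / len(even_parity) for bits in even_parity}

# "Packet with fixed header": first 4 bits fixed, remaining 4 bits uniform.
header = (1, 0, 1, 1)
M_packet = {header + tail: 1 / 16 for tail in product((0, 1), repeat=4)}

print(min_entropy(M_parity))  # 7.0
print(min_entropy(M_packet))  # 4.0
```

Both sources are far from uniform over all 8-bit strings, yet even their most likely message is hard to guess, which is all the definition asks for.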

5

Results

• Deterministic PKE:

– General: from arbitrary TDF with enough hardcore bits

– Efficient: uses only a single application of TDF

• Framework yields constructions from RSA, Paillier, & Niederreiter

– These TDFs have many hardcore bits under

non-decisional (search) assumptions

• Tools of independent interest:

– Improved Equivalence between Indistinguishability & Semantic Security

– Conditional Computational Entropy

• First deterministic PKE for q arbitrarily correlated messages

– Extension of LHL to correlated sources using 2q-wise indep. hash

– Extension of crooked LHL to improve parameters

6

Results

• Deterministic PKE:

– General: from arbitrary TDF with enough hardcore bits

– Efficient: uses only a single application of TDF

Focus of

the talk

• Framework yields constructions from RSA, Paillier, & Niederreiter

– These TDFs have many hardcore bits under

non-decisional (search) assumptions

• Tools of independent interest:

– Improved Equivalence between Indistinguishability & Semantic Security

– Conditional Computational Entropy

• First deterministic PKE for q arbitrarily correlated messages

– Extension of LHL to correlated sources using 2q-wise indep. hash

– Extension of crooked LHL to improve parameters

7

Our Scheme: Encrypt with hardcore Enc hc

Enc

m

$

PK

8

Our Scheme−Enc hc

TDF: Easy to compute, hard to invert without key

hc: Pseudorandom given output of TDF

Ext: Converts high entropy distributions to uniform

TDF – Trapdoor function

hc – Hardcore function

Ext – Randomness extractor

Enc – Randomized Encrypt Alg.

TDF

m

Enc

TDF(m)

hc

hc(m)

Ext

Ext(hc(m))

PK
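Reading the diagram as c = Enc(PK, TDF(m); coins = Ext(hc(m))), the data flow can be sketched in a few lines. Everything concrete here is an assumption made for illustration: the tiny RSA moduli, the low-bits `hc`, the SHA-256-based `ext`, the masked-RSA `enc`, and all function names. None of it is secure, and none of it is the instantiation from the paper.

```python
import hashlib

# Toy parameters only: tiny RSA moduli, NOT secure.
def rsa_keygen(p, q, e):
    n, phi = p * q, (p - 1) * (q - 1)
    return (n, e), (n, pow(e, -1, phi))      # (public key, trapdoor)

TDF_PK, TDF_TD = rsa_keygen(61, 53, 17)      # trapdoor function x -> x^e mod n
ENC_PK, ENC_SK = rsa_keygen(89, 97, 5)       # key pair for the randomized scheme Enc
EXT_SEED = b"public-extractor-seed"          # published as part of PK in this sketch

def tdf(m):
    n, e = TDF_PK
    return pow(m, e, n)

def hc(m):
    # Placeholder hardcore function: low 8 bits of m (assumed, not proven here).
    return (m & 0xFF).to_bytes(1, "big")

def ext(seed, x):
    # Heuristic stand-in for a seeded randomness extractor.
    return hashlib.sha256(seed + x).digest()

def _mask(r):
    return int.from_bytes(hashlib.sha256(r.to_bytes(2, "big")).digest()[:2], "big")

def enc(pk, y, coins):
    """Toy randomized encryption of y; all of its randomness comes from `coins`."""
    n, e = pk
    r = int.from_bytes(coins, "big") % n
    return pow(r, e, n), y ^ _mask(r)

def dec(sk, c):
    n, d = sk
    c1, c2 = c
    return c2 ^ _mask(pow(c1, d, n))

def det_enc(m):
    """c = Enc(PK, TDF(m); coins = Ext(hc(m))): no fresh randomness is used."""
    return enc(ENC_PK, tdf(m), ext(EXT_SEED, hc(m)))

def det_dec(c):
    n, d = TDF_TD
    return pow(dec(ENC_SK, c), d, n)         # decrypt, then invert the TDF
```

Calling `det_enc(m)` twice on the same `m` returns the same ciphertext, and `det_dec` recovers `m` for every `m` below the toy TDF modulus.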

9

Our Scheme−Enc hc

Question: Why is this semantically secure?

TDF – Trapdoor function

hc – Hardcore function

Ext – Randomness extractor

Enc – Randomized Encrypt Alg.

TDF

m

Enc

TDF(m)

hc

hc(m)

Ext

Ext(hc(m))

PK

10

Indistinguishability

Semantic Security

Definitional Equivalence

[BellareFischlin

O’NeillRistenpart08]

Distributions

of entropy μ-2

Distributions

of entropy μ

This Work

Indistinguishability on

all pairs of msg. distributions

of entropy μ-2

Indistinguishability of all pairs on

conditional msg. distributions,

M|e, of entropy μ-2

Semantic security

on

all msg. distributions

of entropy μ

Semantic security on

a msg. distribution M

(of entropy μ)

M

11

Indistinguishability

Semantic Security

Definitional Equivalence

M|e, Pr[e]≥1/4

[BellareFischlin

O’NeillRistenpart08]

Distributions

of entropy μ-2

Distributions

of entropy μ

This Work

Indistinguishability on

all pairs of msg. distributions

of entropy μ-2

Indistinguishability of all pairs on

conditional msg. distributions,

M|e, of entropy μ-2

or equivalently

M|e, where Pr[e] ≥ 1/4

Semantic security

on

all msg. distributions

of entropy μ

Semantic security on

a msg. distribution M

(of entropy μ)

M

12

Outline of Security Proof

m

TDF(m)

TDF

c

hc hc(m)

PK

Enc

Ext

Ext(hc(m))

General Definitional Equivalence

Indistinguishability:

For all conditional pairs M|e0 , M|e1

e0, e1 are events s.t. Pr[e0], Pr[e1] ≥ 1/4

Semantic Security:

For a message distribution M

13

Our Scheme−Enc hc

TDF – Trapdoor function

hc – Hardcore function

Ext – Randomness extractor

Enc – Randomized Encrypt Alg.

Question: Why is this secure?

TDF

m

Enc

TDF(m)

hc

hc(m)

Ext

Ext(hc(m))

PK

14

Our Scheme−Enc hc

Question: Why is this indistinguishable?

To gain intuition we will try removing the extractor.

TDF – Trapdoor function

hc – Hardcore function

Ext – Randomness extractor

Enc – Randomized Encrypt Alg.

TDF

m

Enc

TDF(m)

hc

hc(m)

Ext

Ext(hc(m))

PK

15

Toy Scheme

Question: Is this scheme indistinguishable?

NO: hc can reveal the first bit of m.

TDF

m

TDF(m)

hc

Enc

hc(m)

PK

16

Toy Scheme

Question: Is this scheme indistinguishable?

NO: hc can reveal the first bit of m.

Enc can reveal its first coin.

TDF

m

TDF(m)

hc

Enc

m0 ||...

m0 ||...

PK

17

Outline of Security Proof

m

TDF(m)

TDF

hc hc(m)

Enc

c

PK

Indistinguishability:

For all conditional pairs M|e0 , M|e1

e0, e1 are events s.t. Pr[e0], Pr[e1] ≥ 1/4

Semantic Security:

For a message distribution M

18

Outline of Security Proof

m

TDF(m)

TDF

Enc

hc hc(m)

c

PK

Robust hardcore function:

hc is hardcore on M|e

for all e, Pr[e] ≥ 1/4

Indistinguishability:

For all conditional pairs M|e0 , M|e1

e0, e1 are events s.t. Pr[e0], Pr[e1] ≥ 1/4

Semantic Security:

For a message distribution M

19

Outline of Security Proof

m

TDF(m)

TDF

hc hc(m)

Enc

c

PK

Robust hardcore function:

hc(M|e) is pseudorandom given TDF(M|e)

for all e, Pr[e] ≥ 1/4

Indistinguishability:

For all conditional pairs M|e0 , M|e1

e0, e1 are events s.t. Pr[e0], Pr[e1] ≥ 1/4

Semantic Security:

For a message distribution M

20

Robustness: Implicit in Prior Work

TDF

Robust hc function

[Bellare

Fischlin

O’Neill

Ristenpart08]

Iterated trapdoor

permutation

[GL89] hc bit at each

iteration ([BM84] prg)

[Boldyreva

Fehr

O’Neill 08]

Lossy trapdoor

function

Pairwise independent

hash function

Single

iteration

of

This work

arbitrary trapdoor

function

Any function with

enough hc bits +

extractor Ext

21

Outline of Security Proof

m

TDF(m)

TDF

Enc

hc hc(m)

c

PK

Hardcore function:

Robust hardcore function:

hc(M) is pseudorandom

given TDF(M)

Ext( hc(M|e)) is pseudorandom given TDF(M|e)

for all e, Pr[e] ≥ 1/4

Indistinguishability:

For all conditional pairs M|e0 , M|e1

e0, e1 are events s.t. Pr[e0], Pr[e1] ≥ 1/4

Semantic Security:

For a message distribution M

22

Outline of Security Proof

m

TDF(m)

TDF

c

hc hc(m)

PK

Hardcore function:

Robust hardcore function:

Enc

Ext

Ext(hc(m))

hc(M) is pseudorandom

given TDF(M)

Rest of

the talk

Ext( hc(M|e)) is pseudorandom given TDF(M|e)

for all e, Pr[e] ≥ 1/4

Indistinguishability:

For all conditional pairs M|e0 , M|e1

e0, e1 are events s.t. Pr[e0], Pr[e1] ≥ 1/4

Semantic Security:

For a message distribution M

23

Key Theorem

• Know: hc produces pseudorandom bits on M

• Want: hc produces pseudorandom bits on M|e

M

hc

hc(M)≈U

24

Key Theorem

• Know: hc produces pseudorandom bits on M

• Want: hc produces pseudorandom bits on M|e

M|e

hc

hc(M)≈U

(hc(M|e))≈U

Problem: hc(M|e) cannot be pseudorandom

For example, event e can fix the first bit of hc(M)

Solution: Use HILL entropy!
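The problem on this slide has an exact information-theoretic analogue that can be checked by hand or by machine. In the sketch below, M is uniform over 8-bit messages, `hc` is a toy 4-bit function, and the event e fixes one output bit of hc; all three are assumptions chosen for illustration.

```python
from collections import Counter
from fractions import Fraction

def push(dist, f):
    """Distribution of f(X) when X ~ dist."""
    out = Counter()
    for x, p in dist.items():
        out[f(x)] += p
    return dict(out)

def condition(dist, event):
    """Distribution of X given that event(X) holds, together with Pr[event]."""
    pr_e = sum(p for x, p in dist.items() if event(x))
    return {x: p / pr_e for x, p in dist.items() if event(x)}, pr_e

def stat_dist_from_uniform(dist, size):
    u = Fraction(1, size)
    seen = sum(abs(p - u) for p in dist.values())
    unseen = (size - len(dist)) * u          # outputs that never occur
    return (seen + unseen) / 2

M = {m: Fraction(1, 256) for m in range(256)}   # uniform 8-bit messages
hc = lambda m: m & 0xF                           # toy 4-bit "hc"
e = lambda m: (hc(m) >> 3) == 0                  # event: top bit of hc(m) is 0

M_e, pr_e = condition(M, e)
print(stat_dist_from_uniform(push(M, hc), 16))    # 0
print(stat_dist_from_uniform(push(M_e, hc), 16))  # 1/2
print(pr_e)                                       # 1/2
```

hc(M|e) is far from uniform, yet its most likely value has probability 1/8, so it still carries 3 = 4 − log(1/Pr[e]) bits of min-entropy. Entropy degrades gracefully under conditioning even though uniformity does not.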

25

Key Theorem

• Know: hc produces pseudorandom bits on M

• Want: HHILL( M | E ) is high

M|e

hc

26

Key Theorem

• Know: hc produces pseudorandom bits on M

• Want: HHILL( hc(M|e) ) is high

HHILL(X) ≥ μ if ∃Y, H∞(Y) ≥ μ, X ≈ Y

M|e

hc

How is HHILL( hc(M|e) ) related to HHILL( hc(M) )?

General question:

How is HHILL( X|e ) related to HHILL( X )?

Key Theorem:

$H^{\mathrm{HILL}}_{\varepsilon',s'}(X \mid e) \ge H^{\mathrm{HILL}}_{\varepsilon,s}(X) - \log(1/\Pr[e])$

27

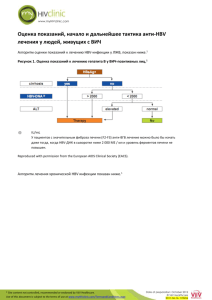

Tangent: Avg Case Cond. Entropy

Info-Theoretic Case [Dodis Ostrovsky Reyzin Smith 04]:

Distribution not a single event!

H∞(X | E) ≥ H∞(X) − log |E|

Our Lemma:

Hε/|E|,s (X | E) ≥ Hε,s (X) − log |E|

• We can apply the lemma multiple times to measure H(M | E1, E2)

• Cannot measure entropy when original distribution is conditional

• Average case conditioning useful for leakage resilience
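The information-theoretic bound of [Dodis Ostrovsky Reyzin Smith 04] can be checked numerically. The sketch below uses their definition of average min-entropy; the joint distribution is random toy data and the variable names are hypothetical.

```python
import math
import random

def min_entropy(px):
    return -math.log2(max(px.values()))

def avg_min_entropy(joint):
    """H~_inf(X | E) = -log2 sum_e max_x Pr[X = x, E = e]."""
    best = {}
    for (x, e), p in joint.items():
        best[e] = max(best.get(e, 0.0), p)
    return -math.log2(sum(best.values()))

rng = random.Random(0)
xs, es = range(16), range(4)                 # |E| = 4 possible leakage values
weights = {(x, e): rng.random() for x in xs for e in es}
total = sum(weights.values())
joint = {k: w / total for k, w in weights.items()}
px = {x: sum(joint[(x, e)] for e in es) for x in xs}

lhs = avg_min_entropy(joint)
rhs = min_entropy(px) - math.log2(len(es))
print(lhs >= rhs)  # the bound guarantees True
```

The inequality holds because, for each value e, max_x Pr[X = x, E = e] is at most max_x Pr[X = x]; summing over the |E| values gives the log |E| loss.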

28

Related Work

• Unconditional: HHILL( X|E=e ) related to HHILL( X )?

– [DziembowskiPietrzak08]

• Entropy drop depended on resulting quality (ε’)

– [ReingoldTrevisanTulsianiVadhan08]:

• Improved parameters, entropy drop only depends on e

(we use similar techniques, improve on repeated conditioning)

• Conditional: HHILL( X|E1,E2 ) related to HHILL( X|E1 )?

– [ChungKalaiLiuRaz11]:

• Asymptotic formulation, worst-case cond. min-entropy,

Conditions E1 that have efficient sampling algorithm for X,

|E2|≤ log |sec param.|

– [GentryWichs11]:

• Both X, E1 are replaced by X’, E’1: produces incomparable result

29

Key Theorem

HHILL( hc(M|e) ) ≥ HHILL( hc(M) ) − log 1/Pr[e]

HHILL( hc(M) ) ≥ n

Pr[e] ≥ 1/4

M|e

HHILL( hc(M|e) ) ≥ n − 2

hc

HILL

entropy

30

Key Theorem

HHILL( hc(M|e) ) ≥ HHILL( hc(M) ) − log 1/Pr[e]

HHILL( hc(M) ) ≥ n

Pr[e] ≥ 1/4

M|e

HHILL( hc(M|e) ) ≥ n − 2

hc

HILL

entropy

Ext

pseudorandom

Extractors convert distributions

w/ min-entropy to uniform

w/ HHILL to pseudorandom
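The min-entropy half of this statement is the leftover hash lemma, which can be verified exhaustively at toy scale. The sketch below uses random GF(2) matrices as a universal hash family; the sizes n, m, k and the randomly chosen source are assumptions for illustration, and the computational (HILL) half is not something a small script can check.

```python
import math
import random
from itertools import product

n, m, k = 6, 2, 4          # input bits, output bits, source min-entropy

def h(rows, x):
    """Universal hash: output bit j is the GF(2) inner product <rows[j], x>."""
    return tuple(bin(r & x).count("1") % 2 for r in rows)

rng = random.Random(0)
support = rng.sample(range(2 ** n), 2 ** k)      # X uniform on 2^k points: H_inf(X) = k

total_sd = 0.0
seeds = list(product(range(2 ** n), repeat=m))   # every m x n binary matrix
for rows in seeds:
    counts = {}
    for x in support:
        y = h(rows, x)
        counts[y] = counts.get(y, 0) + 1
    probs = [counts.get(y, 0) / len(support) for y in product((0, 1), repeat=m)]
    total_sd += 0.5 * sum(abs(p - 2 ** -m) for p in probs)

avg_sd = total_sd / len(seeds)                   # distance of (seed, Ext(X)) from (seed, U)
lhl_bound = 0.5 * math.sqrt(2 ** m / 2 ** k)     # leftover hash lemma
print(avg_sd, "<=", lhl_bound)
```

Averaging over all seeds is the same as measuring the distance of the pair (seed, output) from (seed, uniform), which is the quantity the lemma bounds.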

31

Outline of Security Proof

m

TDF(m)

TDF

c

hc hc(m)

PK

Hardcore function:

Robust hardcore function:

Enc

Ext

Ext(hc(m))

hc(M) is pseudorandom

given TDF(M)

Rest of

the talk

Ext( hc(M|e)) is pseudorandom given TDF(M|e)

for all e, Pr[e] ≥ 1/4

Indistinguishability:

For all conditional pairs M|e0 , M|e1

e0, e1 are events s.t. Pr[e0], Pr[e1] ≥ 1/4

Semantic Security:

For a message distribution M

32

Results

• Enc hc , deterministic PKE:

– General: from arbitrary TDF with enough hardcore bits

– Efficient: uses only a single application of TDF

• Framework yields constructions from RSA, Paillier, & Niederreiter

– These TDFs have many hardcore bits under

non-decisional (search) assumptions

• Tools of independent interest:

– Improved Definitional Equivalence

– Conditional Computational Entropy

• Allows encryption of messages from block sources

– Each message has entropy conditioned on previous msgs:

H∞(Mi | M1,…, Mi-1) is high

33

Results

• Enc hc , deterministic PKE:

– General: from arbitrary TDF with enough hardcore bits

– Efficient: uses only a single application of TDF

• Framework yields constructions from RSA, Paillier, & Niederreiter

– These TDFs have many hardcore bits under

non-decisional (search) assumptions

• Tools of independent interest:

– Improved Definitional Equivalence

– Conditional Computational Entropy

Briefly

• First deterministic PKE for q arbitrarily correlated messages

– Extension of LHL to correlated sources using 2q-wise indep. hash

– Extension of crooked LHL to improve parameters

34

Extending to multiple messages

• We need an extractor that “decorrelates” messages:

• Use a 2q-wise independent hash function

35

Extending to multiple messages

• We need an extractor that “decorrelates” messages:

• Use a 2q-wise independent hash function

m

TDF

hc hc(m)

TDF(m)

Enc

c

Ext

Ext(hc(m))

PK

36

Extending to multiple messages

• We need an extractor that “decorrelates” messages:

• Use a 2q-wise independent hash function

• First scheme for q-arbitrarily correlated messages
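One standard way to obtain a 2q-wise independent hash family is a random polynomial of degree at most 2q−1 over a finite field. The sketch below checks that property exhaustively over GF(5); the field size and function names are assumptions for illustration, and the talk does not say which family the authors instantiate.

```python
from itertools import combinations, product

P = 5  # tiny prime field so that exhaustive checking is feasible

def poly_hash(key, x):
    """Evaluate the polynomial with coefficients `key` at x over GF(P) (Horner's rule)."""
    acc = 0
    for a in reversed(key):
        acc = (acc * x + a) % P
    return acc

def is_t_wise_independent(t):
    """Check: for any t distinct inputs, the t outputs are uniform over a random key."""
    keys = list(product(range(P), repeat=t))     # all polynomials of degree <= t-1
    for xs in combinations(range(P), t):
        seen = {}
        for key in keys:
            ys = tuple(poly_hash(key, x) for x in xs)
            seen[ys] = seen.get(ys, 0) + 1
        # Each of the P^t output tuples must be hit by exactly one key.
        if len(seen) != P ** t or set(seen.values()) != {1}:
            return False
    return True

q = 2
print(is_t_wise_independent(2 * q))   # True
```

The check succeeds because a polynomial of degree at most t−1 is determined uniquely by its values at t distinct points, so keys and output tuples are in bijection.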

m

TDF

hc hc(m)

TDF(m)

Enc

c

Hash Hash(hc(m))

PK

37

Results

• Enc hc , deterministic PKE:

– General: from arbitrary TDF with enough hardcore bits

– Efficient: using single application of TDF

• Framework yields constructions from RSA, Paillier, & Niederreiter

– These TDFs have many hardcore bits under

non-decisional (search) assumptions

• Tools of independent interest:

– Improved Definitional Equivalence

– Conditional Computational Entropy

• First deterministic PKE for q arbitrarily correlated messages

– Extension of LHL to correlated sources using 2q-wise indep. hash

– Extension of crooked LHL to improve parameters

38

Thank you!

BACKUPS

40

Extending to multiple messages

Lemma (Extension of LHL):

Let M1 ,…, Mq be high entropy,

arbitrarily correlated random variables (Mi ≠ Mj ),

Hash family of 2q-wise indep. hash functions (keyed by K)

1

| range(Hash) |q (q 2 2 H¥ (Mi ) + 3 / 4)

2

(K, Hash(K, M1), …, Hash(K, Mq)) ≈ (K, U1, …, Uq)

41

Semantic Security for Deterministic PKE

Adversary

Challenger

M – message distribution

f – test function

A

b

M

DetEnc(mb), pk

m0, m1 ←$ M

DetEnc

mb

g

Adv(A) = Pr[g = f (m1 ) | b =1]

Compute f from ciphertext

42

Semantic Security for Deterministic PKE

Adversary

Challenger

M – message distribution

f – test function

A

b

M

DetEnc(mb), pk

m0, m1 ←$ M

DetEnc

mb

g

Adv(A) = Pr[g = f(m1) | b = 1] − Pr[g = f(m1) | b = 0]

Compute f from ciphertext

Compute f from random ciphertext

43

Indistinguishability for Deterministic PKE

Adversary

Challenger

M0 – message distribution

M1 – message distribution

A

M 0, M1

b

m ←$ Mb

DetEnc

DetEnc(m), pk

b'

Adv(A) = Pr[b' = b] − 1/2
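The game above also shows why the definition restricts to high min-entropy distributions. In the sketch below the two message distributions are single points (zero entropy), and the adversary wins every time by re-encrypting its candidate. Textbook RSA with a tiny modulus stands in for DetEnc, and all names are hypothetical.

```python
import random

N, E = 3233, 17            # toy textbook-RSA public key (insecure, illustration only)

def det_enc(m):
    return pow(m, E, N)    # deterministic: the same m always gives the same ciphertext

def ind_game(sample0, sample1, adversary, trials, rng):
    wins = 0
    for _ in range(trials):
        b = rng.randrange(2)                 # challenger's hidden bit
        m = (sample0, sample1)[b](rng)       # m <- M_b
        wins += adversary(det_enc(m)) == b
    return wins / trials - 0.5               # Adv(A) = Pr[b' = b] - 1/2

# Zero-entropy distributions: the adversary simply re-encrypts the known candidate.
point0 = lambda rng: 7
point1 = lambda rng: 8
reencrypt = lambda c: 0 if c == det_enc(7) else 1
print(ind_game(point0, point1, reencrypt, 1000, random.Random(0)))   # 0.5
```

An advantage of 1/2 is the maximum possible, so no deterministic scheme can be indistinguishable on low-entropy message distributions.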

44

Outline of Security Proof

m

TDF(m)

TDF

c

hc hc(m)

PK

Enc

Ext

Ext(hc(m))

General Definitional Equivalence

Indistinguishability:

Semantic Security:

For a message distribution M

45

Outline of Security Proof

m

TDF(m)

TDF

hc hc(m)

Enc

c

PK

Q: Is any hc robust? A: NO! Define event e: fix first bit (previous example!)

Robust hardcore function:

hc(M|e) is pseudorandom given TDF(M|e)

for all e, Pr[e] ≥ 1/4

Indistinguishability:

For all pairs M|e0 , M|e1

e0, e1 are events s.t. Pr[e0],Pr[e1]≥1/4

Semantic Security:

For a message distribution M

46

Outline of Security Proof

m

TDF

hc

TDF(m)

Enc

m0 ||...

m0 ||...

PK

Q: Is any hc robust? A: NO! Define event e: fix first bit (previous example!)

Robust hardcore function:

hc(M|e) is pseudorandom given TDF(M|e)

for all e, Pr[e] ≥ 1/4

Indistinguishability:

For all pairs M|e0 , M|e1

e0, e1 are events s.t. Pr[e0],Pr[e1]≥1/4

Semantic Security:

For a message distribution M

47

Outline of Security Proof

m

TDF

TDF(m)

c

hc hc(m)

PK

Enc

Ext

Ext(hc(m))

Hardcore function

Robust hardcore function

Indistinguishability

Semantic Security

48

Outline of Security Proof

m

TDF(m)

TDF

c

hc hc(m)

Ext

PK

Enc

Ext(hc(m))

Hardcore function

1.

Hardcore function:

hc(M) is pseudorandom

given TDF(M)

Robust hardcore function

2.

Comp. Entropy:

hc(M|e) high computational

entropy

3.

Uniform Ext Output:

Ext( hc(M|e) )

pseudorandom

4.

Robust hc function:

Ext( hc(M|e) ) | TDF( M|e )

pseudorandom

49

Indistinguishability

Semantic Security

(1) Hc function

(2) Comp. Entropy

• Know: hc produces pseudorandom bits on M

• Want: HHILL( hc(M|e) ) is high

HHILL(X) ≥ μ if ∃Y, H∞(Y) ≥ μ, X ≈ε,s Y

Distinguisher

Advantage

M|e

hc

Distinguisher

Size

50

Conditional Computational Entropy

Info-Theoretic Case:

H∞(X | E = e) ≥ H∞(X) − log 1/Pr[e]

Our Lemma:

Hε/Pr[e],s (X | E = e) ≥ Hε,s (X) − log 1/Pr[e]

Warning: this is not HHILL!

• Different Y (that has true entropy) for each distinguisher (“metric*”)

• Notion used in [Barak Shaltiel Wigderson03] [DziembowskiPietrzak08]
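The information-theoretic case on this slide is easy to test on random data, which also makes the 2-bit loss for Pr[e] ≥ 1/4 explicit. The distribution and events below are randomly generated toy inputs; the computational lemma itself cannot be checked this way.

```python
import math
import random

def min_entropy(dist):
    return -math.log2(max(dist.values()))

rng = random.Random(1)
weights = [rng.random() for _ in range(64)]
total = sum(weights)
X = {x: w / total for x, w in enumerate(weights)}

for _ in range(1000):
    event = {x for x in X if rng.random() < 0.5}    # a random event e
    pr_e = sum(X[x] for x in event)
    if pr_e == 0:
        continue
    X_given_e = {x: X[x] / pr_e for x in event}
    # max_x Pr[x | e] = max_{x in e} Pr[x] / Pr[e] <= max_x Pr[x] / Pr[e]
    assert min_entropy(X_given_e) >= min_entropy(X) - math.log2(1 / pr_e) - 1e-9

# With Pr[e] >= 1/4 the loss is at most log2(4) = 2 bits.
print(math.log2(1 / 0.25))  # 2.0
```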

51

Conditional Computational Entropy

Info-Theoretic Case:

H∞(X | E = e) ≥ H∞(X) − log 1/Pr[e]

Our Lemma:

Hε/Pr[e],s (X | E = e) ≥ Hε,s (X) − log 1/Pr[e]

Warning: this is not HHILL!

• Can be converted to HILL entropy with a loss in circuit size [BSW03, ReingoldTrevisanTulsianiVadhan08]

Our Theorem:

$H^{\mathrm{HILL}}_{\varepsilon',s'}(X \mid E = e) \ge H^{\mathrm{HILL}}_{\varepsilon,s}(X) - \log(1/\Pr[e])$, where $\varepsilon' = \varepsilon/\Pr[e] + \sqrt[3]{\log|M|/s}$ and $s' = \sqrt[3]{s/\log|M|}$

52

Tangent: Avg Case Cond. Entropy

Info-Theoretic Case [Dodis Ostrovsky Reyzin Smith 04]:

Distribution not a single event!

H∞(X | E) ≥ H∞(X) − log |E|

Our Lemma:

Hε/|E|,s (X | E) ≥ Hε,s (X) − log |E|

• We can apply the lemma multiple times to measure H(M | E1, E2)

• Cannot measure entropy when original distribution is conditional

• Average case conditioning useful for leakage resilience

Works on conditional computational entropy:

[ReingoldTrevisanTulsianiVadhan08], [DziembowskiPietrzak08],

[ChungKalaiLiuRaz11],[GentryWichs10]

53

Outline of Security Proof

m

TDF(m)

TDF

c

hc hc(m)

Ext

PK

Enc

Ext(hc(m))

Hardcore function

1.

Hardcore function:

hc(M) is pseudorandom

given TDF(M)

Robust hardcore function

2.

Cond. Comp Entropy:

hc(M|e) high computational

entropy for e, Pr[e]≥1/4

3.

Uniform Ext Output:

Ext( hc(M|e) )

pseudorandom for e, Pr[e]≥1/4

4.

Robust hc function:

Ext( hc(M|e) ) | TDF(M|e)

pseudorandom

Indistinguishability

Semantic Security

54

(3) Unif. Ext Output

(4) Robust hc function

• Know: hc(M) | TDF(M) is pseudorandom (hc is hardcore)

M

TDF

hc

pseudorandom

56

(3) Unif. Ext Output

(4) Robust hc function

• Know: hc(M) | TDF(M) is pseudorandom (hc is hardcore)

• Know: Ext( hc(M|e) ) is pseudorandom ((1) (3))

M

TDF

hc

pseudorandom

57

(3) Unif. Ext Output

(4) Robust hc function

• Know: hc(M) | TDF(M) is pseudorandom (hc is hardcore)

• Know: Ext( hc(M|e) ) is pseudorandom ((1) (3))

M|e

TDF

hc

pseudorandom

58

(3) Unif. Ext Output

(4) Robust hc function

• Know: hc(M) | TDF(M) is pseudorandom (hc is hardcore)

• Know: Ext( hc(M|e) ) is pseudorandom ((1) (3))

M|e

TDF

hc

HILL

entropy

59

(3) Unif. Ext Output

(4) Robust hc function

• Know: hc(M) | TDF(M) is pseudorandom (hc is hardcore)

• Know: Ext( hc(M|e) ) is pseudorandom ((1) (3))

• Want: (Ext( hc(M|e) ) | TDF(M|e) ) is pseudorandom

M|e

TDF

hc

HILL

entropy

Ext

pseudorandom

60

(3) Unif. Ext Output

(4) Robust hc function

• Know: hc(M) | TDF(M) is pseudorandom (hc is hardcore)

• Know: Ext( hc(M|e) ) is pseudorandom ((1) (3))

• Want: (Ext( hc(M|e) ) | TDF(M|e) ) is pseudorandom

M|e

TDF

hc

Unfortunately our entropy theorem does not work

if the starting point is conditional

Solution: Consider the joint distribution ( hc(M), TDF(M) )

Condition on e to measure entropy of ( hc(M|e), TDF(M|e) )

HILL

entropy

Ext

pseudorandom

61

(3) Unif. Ext Output

(4) Robust hc function

• Know: hc(M) | TDF(M) is pseudorandom (hc is hardcore)

• Know: Ext( hc(M|e) ) is pseudorandom ((1) (3))

• Lemma: (Ext( hc(M|e) ) | TDF(M|e) ) is pseudorandom

M|e

TDF

hc

Unfortunately our entropy theorem does not work

if the starting point is conditional

Solution: Consider the joint distribution ( hc(M), TDF(M) )

Condition on e to measure entropy of ( hc(M|e), TDF(M|e) )

HILL

entropy

Ext

pseudorandom

62

Outline of Security Proof

m

TDF(m)

TDF

c

hc hc(m)

Ext

PK

Enc

Ext(hc(m))

Hardcore function

1.

Hardcore function:

hc(M) is pseudorandom

given TDF(M)

Robust hardcore function

2.

Cond. Comp Entropy:

hc(M|e) high computational

entropy for e, Pr[e]≥1/4

3.

Uniform Ext Output:

Ext( hc(M|e) )

pseudorandom for e, Pr[e]≥1/4

4.

Robust hc function:

Ext( hc(M|e) ) | TDF(M|e)

pseudorandom

Indistinguishability

Semantic Security

63

Our Scheme−E hc

F

m

F(m)

E

hc

PK

65

Previous Schemes

• Random Oracle [Bellare Boldyreva O’Neill 07]

• Iterated TDP w/ GL Hardcore bit [Bellare Fischlin O’Neill Ristenpart 08]

• Lossy TDF, 2-wise independent hash [Boldyreva Fehr O’Neill 08]

• Correlated Product TDFs [Hemenway Lu Ostrovsky 10]

Schemes all use trapdoor functions

Can we find a simple and efficient construction

for arbitrary trapdoor and hardcore function pair?

66

(5) Indistinguishability

(6) Security

Theorem [BFOR08]:

Fix message distribution M

E ( M1 ) ≈ E ( M2 ) for all distributions with a little less entropy

H ( M1 ), H( M2 ) ≥ H ( M ) − 2

E is secure on M

It is difficult to consider security for all M1, M2

We will consider only conditional distributions

68

(5) Indistinguishability

(6) Security

Proof:

Fix adversary A with function f

If g ≠ tb, then ⟨g, r⟩ ≠ ⟨tb, r⟩

with probability 1/2 over a random r

f

∃B, 2·Adv(B) ≥ Adv(A)

B outputs a 1-bit test vector

We can consider adversaries with binary f

69

(5) Indistinguishability

(6) Security

Proof:

Fix adversary B with binary function f

We can balance f by combining it with a

random coin at the cost of advantage

f

$\left(\frac{2}{1/4} + 1\right)^{2} \mathrm{Adv}(C) = 81\,\mathrm{Adv}(C) \ge \mathrm{Adv}(B)$

$\Pr[1 \leftarrow C],\ \Pr[0 \leftarrow C] \ge 1/4$

We can consider adversaries with balanced binary f

70

(5) Indistinguishability

(6) Security

Proof:

Fix adversary C with balanced binary function f

f induces two high entropy distributions

M | f ( M )=1, M | f ( M )=0

f

C distinguishes M | f ( M )=1, M | f ( M )=0

If E (M | E) ≈ E (M | E’), then E is secure on M

71

Outline

• Prior work:

Turns out their “coins” hide partial information

• Generalization:

We show hiding partial information

is sufficient in general

• Construction:

We show how to augment any hardcore

function so it hides all partial information

72

E hc Security Notion

Necessary for security: hc hides partial information about m

F

m

F(m)

hc

E

hc(m)

PK

73

Deterministic PKE with RO

[Bellare Boldyreva O’Neill 07]

m

E

$

PK

74

Deterministic PKE with RO [BBO07]

Want construction in standard model

m

E

RO

RO

(m || PK)

PK

75

Deterministic PKE w/o RO

[Bellare Fischlin O’Neill Ristenpart 08]

F

m

E

m

F 0 (m)

GL

PK

76

Deterministic PKE w/o RO [BFOR08]

F

m

F 0 (m)

GL

F1 (m)

E

0

bF0 (m), s

PK

77

Deterministic PKE w/o RO [BFOR08]

F

m

F1 (m)

E

b0

GL

b1

PK

78

Deterministic PKE w/o RO [BFOR08]

F

m

F n-1 (m)

E

b0

GL

b1

…

PK

bn-1

79

Deterministic PKE w/o RO [BFOR08]

F

m

GL

PK

F n (m)

E

b0

…

bn-1
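The iteration pictured on these [BFOR08] slides (apply F repeatedly, take one Goldreich-Levin bit per step, then hand F^n(m) and the collected bits to E) can be sketched as follows. The toy RSA permutation, the public string `S`, and the function names are assumptions for illustration; the final call to E is omitted.

```python
N, E_PUB = 3233, 17        # toy RSA permutation (insecure, illustration only)
S = 0b101101110011         # public Goldreich-Levin string s (12 bits, arbitrary choice)

def F(x):
    return pow(x, E_PUB, N)

def gl_bit(x, s):
    """Goldreich-Levin predicate: inner product of x and s over GF(2)."""
    return bin(x & s).count("1") % 2

def iterate_with_gl(m, n_bits):
    """Return (F^n(m), [b_0, ..., b_{n-1}]) with b_i = <F^i(m), s>."""
    x, bits = m, []
    for _ in range(n_bits):
        bits.append(gl_bit(x, S))   # bit taken before applying F, so b_0 uses m itself
        x = F(x)
    return x, bits

y, coins = iterate_with_gl(1234, 16)
# In the slides, E then encrypts F^n(m) using b_0 ... b_{n-1} as its coins.
```

Each coin costs one application of F here, which is the inefficiency that the single-application scheme in this talk is meant to avoid.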

80

Security Proof of [BFOR08]

Construction hides partial information about m in M

E ( M1 ) ≈ E ( M2 ) for all distributions with a little less entropy

H ( M1 ), H( M2 ) ≥ H ( M ) − 2

[BM84] generator with GL is pseudorandom on M1, M2

81

Security Proof

E hc hides partial information about m in M

E ( M1 ) ≈ E ( M2 ) for all distributions with a little less entropy

H ( M1 ), H( M2 ) ≥ H ( M ) − 2

[BM84] generator with GL is pseudorandom on M1, M2

82

Security Proof

E hc hides partial information about m in M

Improve definitional

equivalences of

[BFOR08,BFO08]

E hc (M | E) ≈ E hc (M | E’) for events E, E’

[BM84] generator with GL is pseudorandom on M1, M2

83

Security Proof

E hc hides partial information about m in M

Improve definitional

equivalences of

[BFOR08,BFO08]

E hc (M | E) ≈ E hc (M | E’) for events E, E’

Proof shows it is sufficient to consider

high probability E, Pr[E] ≥ 1/4

hc ( M | E ) ≈ hc ( M | E’ ) for events E, E’

84

Security Proof

E hc hides partial information about m in M

Improve definitional

equivalences of

[BFOR08,BFO08]

E hc (M | E) ≈ E hc (M | E’) for events E, E’

Proof shows it is sufficient to consider

high probability E, Pr[E] ≥ 1/4

hc ( M | E ) ≈ hc ( M | E’ ) where Pr[E] ≥ 1/4, Pr[E’] ≥ 1/4

85

Security Proof

E hc hides partial information about m in M

Improve definitional

equivalences of

[BFOR08,BFO08]

E hc (M | E) ≈ E hc (M | E’) for events E, E’

Proof shows it is sufficient to consider

high probability E, Pr[E] ≥ 1/4

hc is robust on message distribution M

hc is hardcore on all M|E, Pr[E]≥1/4

86

Security Proof

E hc hides partial information about m in M

Improve definitional

equivalences of

[BFOR08,BFO08]

E hc (M | E) ≈ E hc (M | E’) for events E, E’

hc is robust on message distribution M

hc is hardcore on all M|E, Pr[E]≥1/4

87

Security Proof

E hc hides partial information about m in M

Improve definitional

equivalences of

[BFOR08,BFO08]

E hc (M | E) ≈ E hc (M | E’) for events E, E’

Can we find or construct robust hc functions?

Shown using standard techniques

hc is robust on message distribution M

hc is hardcore on all M|E, Pr[E]≥1/4

88

Our Scheme−E hc

• Problem: coins shouldn’t reveal info about m

• Achieved: coins are pseudorandom given F(m)

Coins can still reveal part of the message m

F

m

F(m)

hc

E

hc(m)

PK

89

Our Scheme−E hc

• Problem: hc and E can both reveal the first bit of m.

F

m

F(m)

hc

E

hc(m)

PK

90

Our Scheme−E hc

• Problem: hc and E can both reveal the first bit of m.

• Necessary for security: hc hides “everything” about m.

F

m

F(m)

hc

E

m0 ||...

m0 ||...

PK

91

Ext ( hc() ) is hardcore on M | E

Proof:

$(hc(M), f(M)) \approx_{\varepsilon,s} (U, f(M))$

$H^{\mathrm{HILL}}_{\varepsilon,s}(hc(M), f(M)) \ge hc + H_\infty(M)$

By computational entropy theorem

$H^{\mathrm{HILL}}_{\varepsilon',s'}(hc(M), f(M) \mid E) \ge hc + H_\infty(M) - 2$

92

Ext ( hc() ) is hardcore on M | E

Proof:

H∞((A, B)) ≥ hc + H∞(M) − 2

(hc(M), f(M)) | E

≈ε’,s’

(A, B)

93

Ext ( hc() ) is hardcore on M | E

Proof:

H∞((A, B)) ≥ hc + H∞(M) − 2

(hc(M), f(M), U|seed|) | E

≈ε’,s’

(A, B, U|seed|)

Add independent uniform

random variable

94

Ext ( hc() ) is hardcore on M | E

Proof:

H∞((A, B)) ≥ hc + H∞(M) − 2

(Ext(hc(M), U|seed|), f(M), U|seed|) | E

≈ε’,s’−sext

(Ext(A, U|seed|), B, U|seed|)

Deterministic function can

only help distinguisher

by its size

95

Ext ( hc() ) is hardcore on M | E

Proof:

H∞((A, B)) ≥ hc + H∞(M) − 2

(Ext(hc(M), U|seed|), f(M), U|seed|) | E

≈ε’,s’−sext

(Ext(A, U|seed|), B, U|seed|)

≈εExt

(U, B, U|seed|)

96

Ext ( hc() ) is hardcore on M | E

Proof:

H∞((A, B)) ≥ hc + H∞(M) − 2

(Ext(hc(M), U|seed|), f(M), U|seed|) | E

≈ε’,s’−sext

(Ext(A, U|seed|), B, U|seed|)

≈εExt

(U, B, U|seed|)

Extractor input has

enough entropy

97

Ext ( hc() ) is hardcore on M | E

Proof:

H∞((A, B)) ≥ hc + H∞(M) − 2

(Ext(hc(M), U|seed|), f(M), U|seed|) | E

≈ε’,s’−sext

(Ext(A, U|seed|), B, U|seed|)

≈εExt

(U, B, U|seed|)

≈ε’,t’

(U, f(X | E), U|seed|)

98

Ext ( hc() ) is hardcore on M | E

Proof:

H∞((A, B)) ≥ hc + H∞(M) − 2

(Ext(hc(M), U|seed|), f(M), U|seed|) | E

≈ε’,s’−sext

(Ext(A, U|seed|), B, U|seed|)

≈εExt

(U, B, U|seed|)

≈ε’,t’

(U, f(X | E), U|seed|)

hc( M ), f( M ) | E

≈ε’,s’

(A , B)

implies

f(M)

≈ε’,s’

B

99

Ext ( hc() ) is hardcore on M | E

Theorem:

(hc(M), f(M)) ≈t,ε (U, f(M))

(Ext(hc(M), U|seed|), f(M), U|seed|) | E

≈2ε’+2εExt, s’−sext

(U, f(M), U|seed|) | E

100

Indistinguishability

Security

Theorem:

If E (M | E) ≈ E (M | E’),

E (m)

0001001110001

0 00

M|E

101

Indistinguishability

Theorem:

If E (M | E) ≈ E (M | E’),

M | E’

E (m)

M|E

001001110001

0

001001110001

00

102

Indistinguishability

Security

Theorem:

If E (M | E) ≈ E (M | E’),

Encryption scheme E secure on M

E (m)

M

f

f(m)=???

103

Outline of Security Proof

hc is robust on message distribution M

Pr[E] ≥ 1/4, Pr[E’] ≥ 1/4, hc(M) | E ≈ hc(M) | E’

Standard argument

E hc (M | E) ≈ E hc (M | E’)

Improve definitional

equivalences of

[BFOR08,BFO08]

E hc is secure for distribution M

Can we find or construct robust hc functions?

104

Outline of Security Proof

hc is robust on message distribution M

Pr[E] ≥ 1/4, Pr[E’] ≥ 1/4, hc(M) | E ≈ hc(M) | E’

EncwHC(M | E) ≈ EncwHC(M | E’)

EncwHC is secure for distribution M

105

Outline of Security Proof

hc is robust on message distribution M

Pr[E] ≥ 1/4, Pr[E’] ≥ 1/4, hc(M) | E ≈ hc(M) | E’

Standard techniques

EncwHC(M | E) ≈ EncwHC(M | E’)

Improve definitional

equivalences of

[BFOR08,BFO08]

EncwHC is secure for distribution M

106

hc functions that hide partial information

Necessary for security: hc hides partial information about m

Necessary for security: hc(M | E) ≈ hc(M | E’)

• These are also sufficient conditions

• It is also sufficient to consider only

high probability E, Pr[E] ≥ ¼

107

hc functions that hide partial information

Sufficient for security: hc hides partial information about m

Sufficient for security: hc(M | E) ≈ hc(M | E’)

Pr[E] ≥ ¼, Pr[E’] ≥ ¼

• These are also sufficient conditions

• It is also sufficient to consider only

high probability E, Pr[E] ≥ ¼

108

Outline of Security Proof

Pr[E] ≥ 1/4, Pr[E’] ≥ 1/4, hc(M) | E ≈ hc(M) | E’

109

Making HC functions robust

• Know: hc produces pseudorandom bits on M

• Want: HHILL(M|PI=p) is high

M|PI=p

hc

Theorem:

Hε/Pr[p],s (M | PI = p) ≥ Hε,s (M) − log 1/Pr[p]

Warning: this is not HHILL!

• Can be converted to HILL entropy with a loss in circuit size [BSW03, RTTV08]

110

Making HC functions robust

• Know: hc produces pseudorandom bits on M

• Want: HHILL(M|PI=p) is high

M|PI=p

hc

Theorem:

Hε’/Pr[p],s’ (M | PI = p) ≥ Hε’,s’ (M) − log 1/Pr[p]

Warning: this is not HHILL!

• Can be converted to HILL entropy with a loss in circuit size [BSW03, RTTV08]

111

Making HC functions robust

• Know: hc produces pseudorandom bits on M

• Want: HHILL(M|PI=p) is high

M|PI=p

hc

Theorem:

Hε/Pr[p],s (M | PI = p) ≥ Hε,s (M) − log 1/Pr[p]

Warning: this is not HHILL!

• Universal, deterministic distinguisher for all Z (“metric*”)

• Notion used in [BSW03] and [DP08]

112

EncwHC Lemma

If hc is hardcore on M1 and M2

EncwHC(M1)≈EncwHC(M2)

m

F(m)

F

hc

E

DE

hc(m)

PK

113

Security of EncwHC

Improve definitional

equivalences of

[BFOR08,BFO08]

EncwHC is secure for distribution X

All distributions X|E=e are indistinguishable

hc is hardcore for all X|E=e

***Pr[E=e]≥¼

114

Security of EncwHC

EncwHC is secure for distribution X

All distributions X|E=e are indistinguishable

hc is hardcore for all X|E=e

Can we find or construct robust hc functions?

Can we find or construct hc functions secure on X|E=e?

***Pr[E=e]≥¼

115

Making HC functions robust

• Randomness Extractors produce pseudorandom output

if input has conditional computational entropy

• Theorem:

If hc is hardcore

hc

Ext

ext(hc(X)) is robust

Proof requires measuring conditional entropy

116

Conditional computational entropy

H∞(M | E = e) ≥ H∞(M) − log 1/Pr[e]

Extract from conditional entropy to get uniform distribution

HHILLε,s (hc(M)) = n

HHILLε,s (hc(M) | E = e) = ???

E.g. HHILL(hc(m) | m0 = 1) = ????

Theorem:

Hε/Pr[e],s (M | E = e) ≥ Hε,s (M) − log 1/Pr[e]

Warning: this is not HHILL!

• Universal, deterministic distinguisher for all Z (“metric*”)

• Notion used in [BSW03] and [DP08]

• Cannot extract directly

117

Conditional computational entropy

Theorem:

Hε/Pr[e],s (M | E = e) ≥ Hε,s (M) − log 1/Pr[e]

Warning: this is not HHILL!

Can be converted to HHILL with a loss in circuit size

[BSW03, RTTV08]

Theorem:

$H^{\mathrm{HILL}}_{\varepsilon',s'}(M \mid E = e) \ge H^{\mathrm{HILL}}_{\varepsilon,s}(M) - \log(1/\Pr[E = e])$

Related work on conditional computational entropy:

[RTTV08, DP08, GW10, CKLR11]

118

EncwHC

• Problem: need independent and statistically random coins

DE

m

F(m)

F

hc

E

hc(m)

PK

119

EncwHC

• Problem: need independent and statistically random coins

• While hc(m) is hard to predict from F(m), it doesn’t need to hide m

DE

m

F(m)

F

hc

E

hc(m)

PK

120

EncwHC

EncwHC, single message deterministic PKE scheme, from

F, family of trapdoor functions,

hc, sufficiently long hardcore function

Π=(K, E, D), CPA-secure PKE scheme

DE

m

F(m)

F

hc

E

hc(m)

PK

121

Security of Det PKE [BBO07]

K

x0

pk

A1

DE

t0

122

Security of Det PKE [BBO07]-PRIV

[Diagram: K generates pk; A1 outputs (x0, t0) and (x1, t1); a bit b=$ is chosen at random; DE outputs E(xb, pk), which goes to A2; A2 outputs t’.]

Adv^priv_{Π,A}(k) = Pr[t1 = t’ | b = 1] - Pr[t1 = t’ | b = 0]

123

Security of Det PKE [BFOR08]

PRIV

• Adversary cannot communicate using ciphertext (semantic security)

IND

• Adversary cannot distinguish between two high entropy distributions

IND-security for all pairs of message distributions, X1, X2, where H∞(X1)≥ μ-ε and H∞(X2)≥ μ-ε

implies

PRIV-security for all message distributions, X, of H∞(X)≥μ

124

EncwHC PRIV-Lemma

• Call hc robust for distribution X if hc is hardcore for all

X|E=e where Pr[E=e]≥¼.

• Intuitively, hc hides partial information about X.

Can we find or construct robust hc functions?

Theorem:

Suppose Π=(K, E, D ) is CPA secure,

F is a TDF family,

hc is robust on distribution M,

then,

EncwHC is PRIV-secure on M.

125

Whirlwind Entropy Review

• Min-entropy (guessing probability), for X over {0, 1}^n:

H∞(X) = -log max_{x∈X} Pr[x] = -log guessing prob(X)
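As a small worked example of the min-entropy definition, the sketch below computes H∞ for a uniform distribution on 3-bit strings and for the same distribution conditioned on an event. The helper names are hypothetical, and the example distribution is chosen only for illustration. It also checks the standard fact that conditioning on an event E costs at most log 1/Pr[E] bits.

```python
import math
from fractions import Fraction


def min_entropy(dist):
    """H_inf(X) = -log2 of the largest point probability."""
    return -math.log2(max(dist.values()))


def condition(dist, event):
    """Return (Pr[E], distribution of X given E) for a predicate `event`."""
    pr_e = sum(p for x, p in dist.items() if event(x))
    cond = {x: p / pr_e for x, p in dist.items() if event(x)}
    return pr_e, cond


# Uniform distribution on 3-bit strings.
uniform = {format(i, "03b"): Fraction(1, 8) for i in range(8)}

# Event E: the first bit equals 1, so Pr[E] = 1/2.
pr_e, given_e = condition(uniform, lambda x: x[0] == "1")

h = min_entropy(uniform)        # 3 bits
h_cond = min_entropy(given_e)   # 2 bits
loss = math.log2(1 / pr_e)      # log 1/Pr[E] = 1 bit
```

Here the bound is met with equality: 2 = 3 - 1.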

• [HILL99] generalized pseudorandomness to distributions with entropy:

H^HILL_{ε,s}(X) ≥ k if ∃ Z such that H∞(Z) = k and X ≈ Z

Two more parameters relating to what ≈ means

• maximum size s of distinguishing circuit D

• maximum advantage ε with which D will distinguish

126

Outline

• Previous deterministic PKE schemes

• Hardcore functions that hide partial information

• Making hardcore functions tolerate correlation

– Pseudorandomness from conditional computational entropy

127

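Security of Det PKE [BFOR08]-IND

[Diagram: a bit b=$ is chosen at random; A1 outputs xb; K generates pk; DE outputs E(xb, pk), which goes to A2; A2 outputs a guess d.]

Adv^ind_{Π,A}(k) = Pr[d = b] - 1/2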
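The sketch below plays the IND game against a deterministic scheme when the two message distributions have very low min-entropy. It is meant only to illustrate why the definitions restrict attention to high-entropy messages: anyone holding the public key can re-encrypt candidate messages and compare. The scheme (textbook RSA with toy parameters), the supports, and all names are assumptions made for this example.

```python
import random

# ASSUMPTION: toy deterministic scheme = textbook RSA, tiny insecure key.
N, E_EXP = 3233, 17


def det_enc(x):
    """Deterministic encryption: no coins are used."""
    return pow(x, E_EXP, N)


SUPPORT_0 = [10, 11, 12, 13]    # X0: uniform here, only 2 bits of min-entropy
SUPPORT_1 = [20, 21, 22, 23]    # X1: uniform here, only 2 bits of min-entropy


def adversary_a2(ciphertext):
    """Re-encrypt every candidate from X0 and compare with the challenge."""
    return 0 if ciphertext in {det_enc(x) for x in SUPPORT_0} else 1


def ind_game(rng):
    """One run of the game; returns True when the guess d equals b."""
    b = rng.randrange(2)
    x_b = rng.choice(SUPPORT_1 if b else SUPPORT_0)
    d = adversary_a2(det_enc(x_b))
    return d == b


rng = random.Random(0)
trials = 1000
wins = sum(ind_game(rng) for _ in range(trials))
advantage = wins / trials - 0.5
```

Because RSA is a permutation and the two supports are disjoint, the adversary is never wrong, so the measured advantage is the maximum possible value of 1/2.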

128

EncwHC IND-Lemma

If hc is hardcore on X1 and X2, then EncwHC is IND-secure for X1, X2

Issues

How to show hc is hardcore for the input distribution?

Want to work with PRIV (semantic security)

This means showing hc is hardcore for X with H∞(X)≥μ

129

Lemma

• For any family of trapdoor functions, F, with sufficiently

many hardcore bits, hc, and a CPA-secure PKE

scheme Π=(K, E, D), EncwHC is IND-secure

[Diagram: inside DE, m is fed to F and to hc; E, under PK, encrypts F(m) using hc(m) as its coins and outputs c.]

130


IND-Security

PRIV-Security

• The equivalence of [BFOR08] showed

IND-security of all message distributions,

X’, where H∞(X’)≥ μ-ε,

implies

PRIV-security for all message distributions,

X, of H∞(X)≥μ.

• This is a hard condition to satisfy; we would like to argue about a single message distribution X.

• Scheme “should” be PRIV-secure for X if it hides all partial information about X.

132

Conditional computational entropy

Theorem:

H_{ε/Pr[y],s}(X | Y = y) ≥ H_{ε,s}(X) - log 1/Pr[y]

Warning: this is not H^HILL!

But it can be converted to H^HILL with a loss in circuit size

[BSW03, RTTV08]

Theorem:

H^HILL_{ε',s'}(X | Y = y) ≥ H^HILL_{ε,s}(X) - log 1/Pr[Y = y]

where ε' = ε/Pr[Y = y] + (log|X| / s)^{1/3}, s' = Ω((s / log|X|)^{1/3})

133

Comparison with Dense Model Thm

Dense Model Theorem:

Let R be a distribution and let D be a δ-dense distribution.

Suppose that for every h

|E h(R) – E h(U) | < ε’

Then there exists a δ-dense distribution M of U such that for all f:

|E f(D) – E f(M) | < ε

Our theorem:

Let R be a distribution and let Pr[D=d]=δ.

Suppose that

H_{ε,s}(R) > k

Then

H_{ε/δ,s}(R | D=d) > k – log 1/δ

134

Average Case Conditional

[DORS08]: H∞(X | Y) ≥ H∞(X) - log |Y|

E.g. H∞(X | wt(X)) ≥ H∞(X) - log (length of X)

Our Theorems:

H_{ε|Y|,s}(X | Y) ≥ H_{ε,s}(X) - log |Y|

H_{ε|Y2|,s}(X | Y1, Y2) ≥ H_{ε,s}(X | Y1) - log |Y2| *

*Requires the original entropy to be “decomposable”

Related work on conditional computational entropy:

[RTTV08, DP08, GW10, CKLR11]
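The information-theoretic [DORS08] bound can be checked numerically on the Hamming-weight example. The sketch below assumes the usual average-case definition, -log of the sum over y of max over x of Pr[X = x, Y = y], and takes |Y| to be the number of values wt(X) can take, which is n + 1 for n-bit strings. The function and variable names are hypothetical.

```python
import math
from collections import defaultdict
from fractions import Fraction
from itertools import product


def avg_min_entropy(joint):
    """-log2 of the sum over y of max over x of Pr[X = x, Y = y]."""
    best = defaultdict(Fraction)          # best[y] starts at 0
    for (x, y), p in joint.items():
        best[y] = max(best[y], p)
    return -math.log2(sum(best.values()))


n = 4
xs = ["".join(bits) for bits in product("01", repeat=n)]

# X is uniform on n-bit strings and Y = wt(X) is its Hamming weight.
joint = {(x, x.count("1")): Fraction(1, 2 ** n) for x in xs}

h_x = float(n)                            # H_inf(X) for uniform X
num_y = len({y for _, y in joint})        # wt(X) takes n + 1 values
h_avg = avg_min_entropy(joint)
bound = h_x - math.log2(num_y)
```

For uniform X the bound is tight: both sides equal 4 - log 5, roughly 1.68 bits.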

135

Main Result

• We can extract from a distribution with

conditional computational entropy.

• This allows us to make any hc robust

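[Diagram: inside DE, m is fed to F and to hc; ext is applied to hc(m) with seed s; E, under PK, encrypts F(m) using ext(hc(m), s) as its coins.]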

136

Main Result

• EncwHCExt, from

any family of TDFs, F, with

sufficiently many hardcore bits, hc,

CPA-secure PKE (K, E, D),

and strong average-case extractor ext.

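[Diagram: inside DE, m is fed to F and to hc; ext is applied to hc(m) with seed s; E, under PK, encrypts F(m) using ext(hc(m), s) as its coins.]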
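One possible instantiation of ext is a universal hash given by a random binary matrix, which is a strong extractor by the leftover hash lemma. The slides do not say which extractor is used, so this choice is an assumption, as are the function names and the idea that the seed s would be published alongside the public key.

```python
import secrets


def parity(v):
    """Parity of the set bits of v, i.e. a sum over GF(2)."""
    return bin(v).count("1") & 1


def sample_seed(n_in, n_out):
    """Seed s: n_out random rows, each an n_in-bit integer."""
    return [secrets.randbits(n_in) for _ in range(n_out)]


def ext(x, seed):
    """Matrix-vector product over GF(2): output bit i = <row_i, x>."""
    out = 0
    for row in seed:
        out = (out << 1) | parity(row & x)
    return out


# Example: compress a 16-bit value (standing in for hc(m)) to 4 bits.
seed = sample_seed(16, 4)
coins = ext(0xBEEF, seed)
```

In the scheme above, ext(hc(m), s) would replace hc(m) as the coins passed to E. The output length has to be chosen well below the entropy of the input for the extracted bits to be close to uniform.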

137

Additional Results/Directions

• Multi-message security from block source

• Bounded multi-message security using extension to

Crooked Leftover Hash Lemma

• Future Directions:

– General multi-message security w/o RO

– Full chain rule for computational entropy

138