Univariate Statistics

advertisement

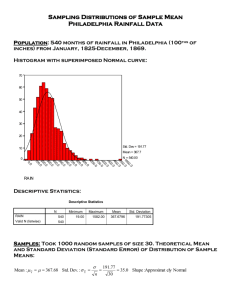

Inference, Representation, Replication & Programmatic Research
• Populations & Samples -- Parameters & Statistics
• Descriptive vs. Inferential Statistics
• How we evaluate and support our inferences
• Identifying & evaluating replications
• Extracting replications from larger studies

Just a bit of review …
• The group that we are hoping our data will represent is the … population
• The list of folks in that group that we have is the … sampling frame
• The folks we select from that list with the intent of obtaining data from each are the … selected sample
• The group from which we actually collect data is the … data sample

Some new language …
Parameter -- summary of a population characteristic
Statistic -- summary of a sample characteristic

Just two more …
Descriptive Statistic -- calculated from sample data to describe the sample
Inferential Statistic -- calculated from sample data to infer about a specific population parameter

So, let's put it together -- first a simple one …
The purpose of the study was to determine the mean number of science credits taken by all students previously enrolled in Psyc350. We got the list of everybody enrolled last fall and from it selected 20 students to ask about their science coursework. It turned out that 3 had dropped, one was out of town and another didn't remember whether they had taken one chem course or two.

Sampling -- Identify each of the following...
• Target population: Psyc350 enrollees
• Sampling frame: last fall's enrollment list
• Selected sample: the 20 selected from last fall's list
• Data sample: the 15 who provided data (20 selected, minus 3 who dropped, 1 out of town and 1 who couldn't remember)

Identify each of the following…
• Mean # science credits from all students previously enrolled in Psyc350 -- population parameter
• Mean # science credits from the 15 students enrolled in Psyc350 during the fall semester who were in the data sample -- descriptive statistic
• Mean # science credits from all students previously enrolled in Psyc350, estimated from the 15 students in the data sample -- inferential statistic

Reviewing descriptive and inferential statistics …
Remember that the major difference between descriptive and inferential statistics is intent -- what information you intend to get from the statistic.

Descriptive statistics
• obtained from the sample
• used to describe characteristics of the sample
• used to determine if the sample represents the target population by comparing sample statistics and population parameters

Inferential statistics
• obtained from the sample
• used to describe, infer, estimate, approximate characteristics of the target population

Parameters -- description of population characteristics
• usually aren't obtained from the population (we can't measure everybody)
• ideally they are from repeated large samplings that produce consistent results, giving us confidence to use them as parameters

Let's look at an example of the interplay of these three…

Each year we used to interview everybody, from senior managers to part-time janitorial staff, to get a feel for “how things were going.” Generally we found we had 70% female and 30% male employees, divided about 10% management, 70% clerical and 20% service/janitorial, with an average age of 32.5 (std = 5.0) years and 7.4 (std = 5.0) years seniority. From these folks we usually got an overall satisfaction rating of about 5.2 (std = 1.1) on a 7-point scale. With the current cost increases we can no longer interview everybody.
So, this year we had a company that conducts surveys complete the interview using a sample of 120 employees who volunteered in response to a notice in the weekly company newsletter. We were very disappointed to find that the overall satisfaction rating had dropped to 3.1 (std = 1.0). At a meeting to discuss how to improve worker satisfaction, one of the younger managers asked to see the rest of the report and asked us to look carefully at one of the tables…

Table 3 -- Sample statistics (from 100 completed interviews)
gender: 50 males (50%), 50 females (50%)
job: 34 management (34%), 30 clerical (30%), 36 service/janitorial (36%)
age: mean = 21.3, std = 10
seniority: mean = 2.1, std = 6

First… Sampling -- Identify each of the following...
• Target population: our company
• Sampling frame: company newsletter
• Selected sample: 120 volunteers
• Data sample: 100 who completed the survey

And now … Kinds of “values” -- identify all of each type …
• parameter
• descriptive statistic
• inferential statistic

Of course, the real question is whether the “3.1 rating” is cause for concern… Should we interpret the mean rating of 3.1 as indicating that the folks who work here are much less satisfied than they used to be? Why or why not?

Looks bad, doesn't it? Well -- it depends upon whether the sample is representative of the population. Any way to check that?
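One way to put numbers on that check is to line up each Table 3 statistic against the value the all-employee interviews usually produced. The sketch below is an illustration, not part of the original example: it treats the usual values as known population parameters, uses a chi-square goodness-of-fit statistic for gender and job category, and a z statistic (with the population std) for age and seniority, taking the 100 completed interviews as n. The dictionary and function names are hypothetical.

```python
import math

# Usual all-employee interview values, treated here as known population parameters.
POP_PROPORTIONS = {
    "gender": {"male": 0.30, "female": 0.70},
    "job": {"management": 0.10, "clerical": 0.70, "service/janitorial": 0.20},
}
POP_MEANS = {"age": (32.5, 5.0), "seniority": (7.4, 5.0)}  # (mean, std) in years

# Table 3: descriptive statistics from the 100 completed interviews.
SAMPLE_COUNTS = {
    "gender": {"male": 50, "female": 50},
    "job": {"management": 34, "clerical": 30, "service/janitorial": 36},
}
SAMPLE_MEANS = {"age": 21.3, "seniority": 2.1}
N = 100


def chi_square_gof(observed, expected_props):
    """Goodness of fit: sum over categories of (O - E)^2 / E, with E = n * p."""
    n = sum(observed.values())
    return sum((observed[k] - n * p) ** 2 / (n * p) for k, p in expected_props.items())


def z_for_mean(sample_mean, pop_mean, pop_std, n):
    """How many standard errors the sample mean sits from the population mean."""
    return (sample_mean - pop_mean) / (pop_std / math.sqrt(n))


for var, props in POP_PROPORTIONS.items():
    chi2 = chi_square_gof(SAMPLE_COUNTS[var], props)
    print(f"{var}: chi-square({len(props) - 1}) = {chi2:.2f}")

for var, (mu, sigma) in POP_MEANS.items():
    print(f"{var}: z = {z_for_mean(SAMPLE_MEANS[var], mu, sigma, N):.1f}")
```

With these numbers the gender statistic is about 19.05 and the age z is about -22.4, both far beyond the usual .05 cutoffs (3.84 for one df, 5.99 for two df, 1.96 for |z|).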
We can compare sample descriptive statistics & population parameters
• this sample is not representative of the population
• compared to the population parameters, the sample is “too male,” “too managerial & janitorial,” “too young,” and “too short-tenured”

Here's the point…
Our theories, hypotheses and implications are about populations, but (because we can never, ever collect data from the entire population) our data come from samples!!! We infer that the data and analysis results we obtain from our sample tell us about the relationships between those variables in the population!

How do we know our inferences are correct?
• we can never “know” -- there will never be “proof” (only evidence)
• we check the selection/sampling procedures we used
• we check that our sample statistics match known population parameters (when we know those parameters)
• we check whether our results agree with previous results from “the lit”
• we perform replication and converging operations research

Here's another version of this same idea!!!
“Critical Experiment” vs. “Converging Operations”
You might be asking yourself, “How can we be sure we ‘got the study right’?” How can we be sure that we...
• … have a sample that represents the target population?
• … have the best research design?
• … have good measures, tasks and a good setting?
• … did the right analyses and made the correct interpretations?
Said differently -- how can we be sure we're running the right study in the right way???

This question assumes the “critical experiment” approach to empirical research -- that there is “one correct way to run the one correct study” and the answer to that study will be “proof”.
For both philosophical and pragmatic reasons (that will become apparent as we go along), scientific psychologists have abandoned this approach and adopted “converging operations” -- the process of running multiple comparable versions of each study and looking for consistency (or determining sources of inconsistencies). This is also called the “Research Loop”:
• Library Research -- learning “what is known” about the target behavior
• Hypothesis Formation -- based on library research, proposing some “new knowledge” (a novel RH:, a replication, or a convergence)
• Research Design -- determining how to obtain the data to test the RH:
• Data Collection -- carrying out the research design and getting the data
• Data Analysis -- data collation and statistical analysis
• Hypothesis Testing -- based on design properties and statistical results
• Draw Conclusions -- deciding how your “new knowledge” changes “what is known” about the target behavior

“Comparable” studies -- replication
• The more similar the studies, the more direct the test of replication and the more meaningful a “failure to replicate” will be
• The more differences between the studies, the more “reasons” the results might not agree, and so the less meaningful a “failure to replicate” will be
• Ground rules…
  • Same or similar IV (qual vs. quant not important)
  • Same or similar DV (qual vs. quant not important)
  • Similar population, setting & task/stimulus
  • Note whether the design is similar (e.g., experiment or nonexperiment)

“Comparing” studies -- replication
If the studies are comparable, then the comparison is based on effect size (r) and direction/pattern.
• Direction/pattern
  • Be sure to take DV “direction” into account (e.g., measuring % correct vs. % error, or “depression” vs. “happiness”)
• Effect size
  • Don't get too fussy about effect size comparability …
  • Remember .1 = small, .3 = medium, .5 = large
  • Smaller but in the same direction is still pretty similar

What about differences in “significance”???
If the effect sizes are similar, these are usually just “power” or “sample size” differences -- far less important than effect size/direction!

Replication -- some classic “conundrums” #1
Imagine there are four previous studies, all procedurally similar, all looking at the relationship between social skills and helping behavior. Here are the results…
r(36) = .28, p > .05
r(65) = .27, p < .05
r(72) = .27, p < .05
r(31) = .28, p > .05
Do these studies replicate one another? Yes!!!
• the studies all found similar effects (size & direction)
• the differences in significance are due to power & sample size differences

Replication -- some classic “conundrums” #2
Imagine there are four previous studies, all procedurally similar, all looking at the relationship between amount of therapy and decrease in depressive symptoms. Here are the results…
r(36) = .25, p > .05
r(42) = .27, p > .05
r(51) = .27, p > .05
r(31) = .25, p > .05
Given these results, what is your “best guess” of the population correlation between amount of therapy and decrease in depressive symptoms?
• r = .00, since none of the results were significant?
• r ≈ .25 - .27, since this is the consistent answer?
I'd go with .25 - .27, but encourage someone to do an a priori power analysis before the next study!!!

Remember this one …
Researcher #1 acquired 20 computers of each type, had research assistants (working in shifts & following a prescribed protocol) keep each machine working continually for 24 hours & count the number of times each machine failed and was re-booted.
Mean failures PC = 5.7, std = 2.1
Mean failures Mac = 3.6, std = 2.1
F(1,38) = 10.26, p = .003
$r = \sqrt{F / (F + df)} = \sqrt{10.26 / (10.26 + 38)} = .46$

Researcher #2 acquired 20 computers of each type, had research assistants (working in shifts & following a prescribed protocol) keep each machine working continually for 24 hours or until it failed.
          Failed   Not
PC          15      5
Mac          6     14
χ²(1) = 8.12, p = .004
$r = \sqrt{\chi^2 / N} = \sqrt{8.12 / 40} = .45$

So, by computing effect sizes and effect direction/pattern, we can compare these similar studies (same IV -- conceptually similar DV) and see that the results replicate!

Try this one …
Researcher #1 asked each of 60 students whether or not they had completed the 20-problem online exam preparation and noted their scores on the exam (%). She used BG ANOVA to compare the mean % of the two groups.
Completed Exam Prep = 83%
No Exam Prep = 76%
F(1,58) = 8.32, p < .01
$r = \sqrt{F / (F + df)} = \sqrt{8.32 / (8.32 + 58)} = .35$

Researcher #2 asked each of 304 students how many of the online exam questions they had completed and noted their scores on the exam (%). She used correlation to test for a relationship between these two quantitative variables.
r(301) = .12, p = .042

Comparing the two studies, we see that while the effects are in the same direction (better performance is associated with “more” online exam practice), the sizes of the effects in the two studies are very different (.35 vs. .12). Also, the significance of the second effect is due to the huge sample size!

And this one …
Researcher #1 interviewed 85 patients from a local clinic and recorded the number of weeks of therapy they had attended and the change in their wellness scores. She used correlation to examine the relationship between these two variables.
r(83) = .30, p = .005

Researcher #2 assigned each of 120 patients to receive group therapy or not and noted whether or not they had improved after 24 weeks. She used χ² to examine the relationship between these variables.
            Improved   Not
Therapy        45       15
Control        25       35
χ²(1) = 13.71, p < .001
$r = \sqrt{\chi^2 / N} = \sqrt{13.71 / 120} = .34$

So, by computing effect sizes and effect direction/pattern, we can compare these similar studies (conceptually similar IV & DV) and see that the results show a strong replication!
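Both “conundrums” earlier turn on the same fact: with r held near .27, significance flips with df alone. A minimal sketch, assuming the Fisher r-to-z approximation (z = atanh(r) * sqrt(n - 3), with n = df + 2) rather than the exact t test, reproduces the significance pattern in conundrum #1 and gives a rough a priori sample size for the r ≈ .25 - .27 suggested in conundrum #2. The two-tailed α = .05 and power = .80 are assumed conventions, not values from the studies, and the function names are hypothetical.

```python
import math
from statistics import NormalDist

STD_NORMAL = NormalDist()  # standard normal: mean 0, std 1


def approx_p_for_r(r, df):
    """Two-tailed p for Pearson r via Fisher's z approximation (n = df + 2)."""
    n = df + 2
    z = math.atanh(r) * math.sqrt(n - 3)
    return 2 * (1 - STD_NORMAL.cdf(abs(z)))


def n_for_power(r, alpha=0.05, power=0.80):
    """Approximate total N needed to detect population r with a two-tailed test."""
    z_alpha = STD_NORMAL.inv_cdf(1 - alpha / 2)
    z_power = STD_NORMAL.inv_cdf(power)
    return math.ceil(((z_alpha + z_power) / math.atanh(r)) ** 2 + 3)


conundrum_1 = [(36, 0.28), (65, 0.27), (72, 0.27), (31, 0.28)]  # (df, r)
conundrum_2 = [(36, 0.25), (42, 0.27), (51, 0.27), (31, 0.25)]

for label, studies in (("#1", conundrum_1), ("#2", conundrum_2)):
    for df, r in studies:
        p = approx_p_for_r(r, df)
        print(f"{label} r({df}) = {r:.2f}, approx p = {p:.3f}")

for r in (0.25, 0.27):
    print(f"N for 80% power at r = {r:.2f}: {n_for_power(r)}")
```

Under these assumptions the N for r = .25 comes out a little over 120, more than twice the largest study in conundrum #2 (df = 51, so N = 53), which helps explain why none of the four reached significance.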
Replication & Generalization in k-group Designs -- ANOVA
• Most k-group designs are an “expansion” or an extension of an earlier, simpler design
• When comparing with a 2-group design, be sure to use the correct conditions

Study #1
Mean failures PC = 5.7, std = 2.1
Mean failures Mac = 3.6, std = 2.1
F(1,38) = 10.26, p = .003
$r = \sqrt{F / (F + df)} = .46$

Study #2
Mean failures IBM = 5.9, std = 2.1
Mean failures Dell = 3.8, std = 2.1
Mean failures Mac = 3.6, std = 2.1
F(2,57) = 10.26, p = .003, MSe =

We need to know what “PC” means in the first study! What if “PCs” were IBM? What if PC were Dell? What if PC were something else?

Replication & Generalization in k-group Designs -- χ²
• Most k-group designs are an “expansion” or an extension of an earlier, simpler design

Study #1
          Failed   Not
PC          15      5
Mac          6     14
χ²(1) = 8.12, p = .004
$r = \sqrt{\chi^2 / N} = .45$

Study #2
          Failed   Not
IBM         16      4
Dell         5     15
Mac          7     13
χ²(2) = 13.79, p = .001

We need to know what “PC” means in the first study! What if “PCs” were IBM? What if PC were Dell? What if PC were something else?
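A minimal sketch of extracting the two-group replications from Study #2: it treats each pair (IBM vs. Mac, Dell vs. Mac) as its own two-group comparison with 20 machines per group (consistent with the reported df), rebuilds F from the rounded means and the common std of 2.1, and uses a signed phi coefficient for the tables so that direction is kept (for a 2 × 2 table, |phi| = √(χ²/N)). Pooling the error term over all three groups (df = 57) would be an equally reasonable choice, and the function names are hypothetical. Rebuilding F from the rounded Study #1 summaries gives 10.0 rather than the reported 10.26, but r still rounds to .46.

```python
import math

N = 20      # machines per group (consistent with F(1,38) and F(2,57))
STD = 2.1   # every group reported std = 2.1


def r_from_f(f, df_error):
    """Effect size r = sqrt(F / (F + df_error)) for a 1-df comparison."""
    return math.sqrt(f / (f + df_error))


def r_from_means(m1, m2, sd=STD, n=N):
    """Signed r for two equal-n groups; positive when group 1 fails more."""
    t = (m1 - m2) / (sd * math.sqrt(2 / n))  # equal stds, so pooled sd = sd
    return math.copysign(r_from_f(t ** 2, 2 * n - 2), t)  # F(1, df) = t^2


def phi_2x2(a, b, c, d):
    """Signed phi for rows [[a, b], [c, d]] = [failed, not]; positive when row 1 fails more."""
    return (a * d - b * c) / math.sqrt((a + b) * (c + d) * (a + c) * (b + d))


# Study #1: PC vs. Mac.
print("Study 1 PC vs Mac, reported F: r =", round(r_from_f(10.26, 38), 2))
print("Study 1 PC vs Mac, table:      r =", round(phi_2x2(15, 5, 6, 14), 2))

# Study #2: extract each two-group comparison against Mac.
print("Study 2 IBM vs Mac, means:  r =", round(r_from_means(5.9, 3.6), 2))
print("Study 2 Dell vs Mac, means: r =", round(r_from_means(3.8, 3.6), 2))
print("Study 2 IBM vs Mac, table:  r =", round(phi_2x2(16, 4, 7, 13), 2))
print("Study 2 Dell vs Mac, table: r =", round(phi_2x2(5, 15, 7, 13), 2))
```

If “PC” in Study #1 meant IBM, the extracted IBM vs. Mac comparisons (r ≈ .46 to .49) replicate it; if it meant Dell, the extracted effect is near zero (|r| ≈ .05 to .11), and Study #2 would not replicate Study #1 for that comparison.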