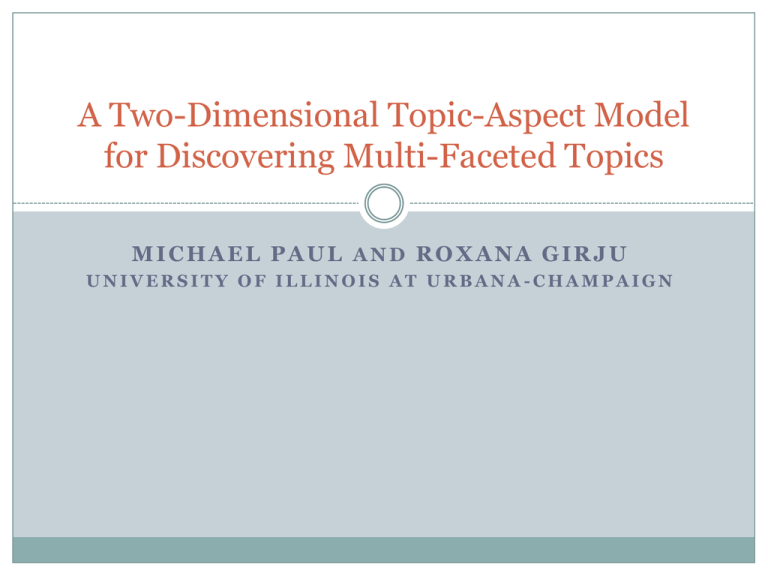

A Two-Dimensional Topic-Aspect Model for Discovering Multi

advertisement

A Two-Dimensional Topic-Aspect Model for Discovering Multi-Faceted Topics MICHAEL PAUL AND ROXANA GIRJU UNIVERSITY OF ILLINOIS AT URBANA-CHAMPAIGN Probabilistic Topic Models Each word token associated with hidden “topic” variable Probabilistic approach to dimensionality reduction Useful for uncovering latent structures in text Basic formulation: P(w|d) = P(w|topic) P(topic|d) Probabilistic Topic Models “Topics” are latent distributions over words A topic can be interpreted of as a cluster of words Topic models often cluster words by what people would consider topicality There are often other dimensions in which words could be clustered Sentiment/perspective/theme What if we want to model both? Previous Work Topic-Sentiment Mixture Model (Mei et al., 2007) Words come from either topic distribution or sentiment distribution Topic+Perspective Model (Lin et al., 2008) Words are weighted as topical vs. ideological Previous Work Cross-Collection LDA (Paul and Girju, 2009) Each document belongs to a collection Each topic has a word distribution shared among collections plus distributions unique to each collection What if the “collection” was a hidden variable? -> Topic-Aspect Model (TAM) Topic-Aspect Model Each document has a multinomial topic mixture a multinomial aspect mixture Words may depend on both! Topic-Aspect Model Topic and aspect mixtures are drawn independently of one another This differs from hierarchical topic models where one depends on the other Can be thought of as two separate clustering dimensions Topic-Aspect Model Each word token also has 2 binary variables: the “level” (background or topical) denotes if the word depends on the topic or not the “route” (neutral or aspectual) denotes if the word depends on the aspect or not A word may depend on a topic, an aspect, both, or neither Topic-Aspect Model “Computational” Aspect Route / Level Neutral Aspectual Background Topical paper, new, present speech, recognition algorithm, model markov, hmm, error A word may depend on a topic, an aspect, both, or neither Topic-Aspect Model “Linguistic” Aspect Route / Level Background Topical Neutral paper, new, present speech, recognition Aspectual language, linguistic prosody, intonation, tone A word may depend on a topic, an aspect, both, or neither Topic-Aspect Model “Linguistic” Aspect Route / Level Background Topical Neutral paper, new, present communication, interaction Aspectual language, linguistic conversation, social A word may depend on a topic, an aspect, both, or neither Topic-Aspect Model “Computational” Aspect Route / Level Neutral Aspectual Background Topical paper, new, present communication, interaction algorithm, model dialogue, system, user A word may depend on a topic, an aspect, both, or neither Topic-Aspect Model Generative process for a document d: Sample a topic z from P(z|d) Sample an aspect y from P(y|d) Sample a level l from P(l|d) Sample a route x from P(x|l,z) Sample a word w from either: P(w|l=0,x=0), P(w|z,l=1,x=0), P(w|y,l=0,x=1), P(w|z,y,l=1,x=1) Topic-Aspect Model Distributions have Dirichlet/Beta priors Latent Dirchlet Allocation framework Number of aspects and topics are user-supplied parameters Straightforward inference with Gibbs sampling Topic-Aspect Model Semi-supervised TAM when aspect label is known Two options: Fix P(y|d)=1 for the correct aspect label and 0 otherwise Behaves like ccLDA (Paul and Girju, 2009) Define a prior for P(y|d) to bias it toward the true label Experiments Three Datasets: 4,247 abstracts from the ACL Anthology 2,173 abstracts from linguistics journals 594 articles from the Bitterlemons corpus (Lin et al., 2006) a collection of editorials on the Israeli/Palestinian conflict Experiments Example: Computational Linguistics Experiments Example: Israeli/Palestinian Conflict Unsupervised Prior for P(aspect|d) for true label Evaluation Cluster coherence “word intrusion” method (Chang et al., 2009) 5 human annotators Compare against ccLDA and LDA TAM clusters are as coherent as other established models Evaluation Document classification Classify Bitterlemons perspectives (Israeli vs Palestinian) Use TAM (2 aspects + 12 topics) output as input to SVM Use aspect mixtures and topic mixtures as features Compare against LDA Evaluation Document classification 2 aspects from TAM much more strongly associated with true perspectives than 2 topics from LDA Suggests that TAM is clustering along a different dimension than LDA by separating out another “topical” dimension (with 12 components) Summary: Topic-Aspect Model Can cluster along two independent dimensions Words may be generated by both dimensions, thus clusters can be inter-related Cluster definitions are arbitrary and their structure will depend on the data and the model parameterization (especially # of aspects/topics) Modeling with 2 aspects and many topics is shown to produce aspect clusters corresponding to document perspectives on certain corpora References Chang, J.; Boyd-Graber, J.; Gerrish, S.; Wang, C.; and Blei, D. 2009. Reading tea leaves: How humans interpret topic models. In Neural Information Processing Systems. Lin, W.; Wilson, T.; Wiebe, J.; and Hauptmann, A. 2006. Which side are you on? identifying perspectives at the document and sentence levels. In Proceedings of Tenth Conference on Natural Language Learning (CoNLL). Lin, W.; Xing, E.; and Hauptmann, A. 2008. A joint topic and perspective model for ideological discourse. In ECML PKDD ’08: Proceedings of the European conference on Machine Learning and Knowledge Discovery in Databases -Part II, 17–32. Berlin, Heidelberg: Springer-Verlag. Mei, Q.; Ling, X.; Wondra, M.; Su, H.; and Zhai, C. 2007. Topic sentiment mixture: modeling facets and opinions in weblogs. In WWW ’07: Proceedings of the 16th international conference on World Wide Web, 171–180. Paul, M., and Girju, R. 2009. Cross-cultural analysis of blogs and forums with mixed-collection topic models. In Proceedings of the 2009 Conference on Empirical Methods in Natural Language Processing, 1408–1417.