Problem Solving by Searching


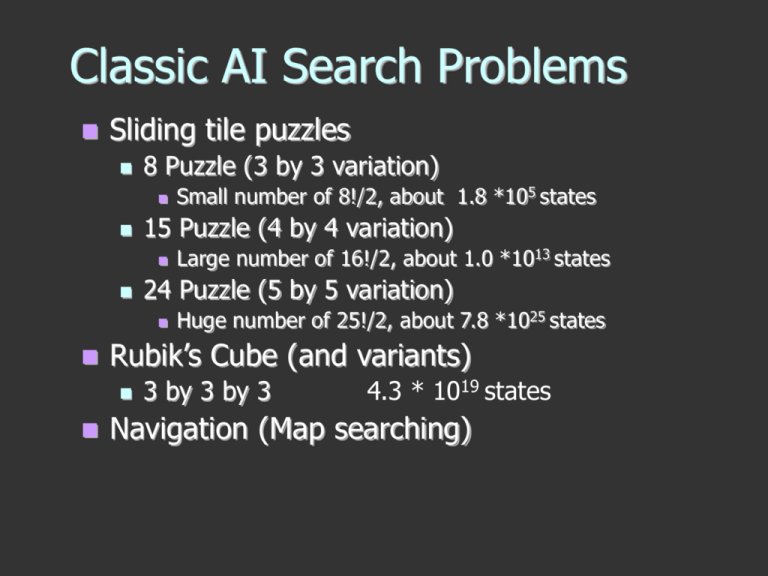

Classic AI Search Problems
- Sliding tile puzzles
  - 8 Puzzle (3 by 3 variation): small number of states, 9!/2, about 1.8 * 10^5
  - 15 Puzzle (4 by 4 variation): large number of states, 16!/2, about 1.0 * 10^13
  - 24 Puzzle (5 by 5 variation): huge number of states, 25!/2, about 7.8 * 10^24
- Rubik’s Cube (and variants)
  - 3 by 3 by 3: 4.3 * 10^19 states
- Navigation (Map searching)

Classic AI Search Problems: 15 Puzzle
(Figure: example sliding tile board)
- Invented by Sam Loyd in 1878
- 16!/2, about 10^13 states
- Average of 53 moves to solve
- Known diameter (maximum length of optimal path) of 87
- Branching factor of 2.13

3*3*3 Rubik’s Cube
- Invented by Rubik in 1974
- 4.3 * 10^19 states
- Average of 18 moves to solve
- Conjectured diameter of 20
- Branching factor of 13.35

Navigation
- Arad (start) to Bucharest (end)

Representing Search
(Figure: search tree rooted at Arad, with Zerind, Sibiu and Timisoara below it, then Arad, Oradea, Fagaras and Rimnicu Vilcea below Sibiu, and Bucharest below Fagaras)

General (Generic) Search Algorithm

    function general-search(problem, QUEUEING-FUNCTION)
        nodes = MAKE-QUEUE(MAKE-NODE(problem.INITIAL-STATE))
        loop do
            if EMPTY(nodes) then return "failure"
            node = REMOVE-FRONT(nodes)
            if problem.GOAL-TEST(node.STATE) succeeds then return node
            nodes = QUEUEING-FUNCTION(nodes, EXPAND(node, problem.OPERATORS))
        end

A nice fact about this search algorithm is that we can use a single algorithm to do many kinds of search. The only difference is in how the nodes are placed in the queue.

Search Terminology
- Completeness: a solution will be found, if it exists
- Time complexity: number of nodes expanded
- Space complexity: number of nodes in memory
- Optimality: the least cost solution will be found

Uninformed (blind) Search
- Breadth first
- Uniform-cost
- Depth-first
- Depth-limited
- Iterative deepening
- Bidirectional

Breadth first
- QUEUING-FN: successors added to end of queue (FIFO)
(Figure: breadth-first expansion from Arad, level by level, through Zerind, Sibiu and Timisoara, then Arad, Oradea, Fagaras, Rimnicu Vilcea and Lugoj)

Properties of Breadth first
- Time: 1 + b + b^2 + b^3 + … + b^d = O(b^d), so exponential
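The generic search loop in the pseudocode above can be sketched in Python. This is a minimal sketch under assumptions that are not in the slides: a node is represented simply as the list of cities on the path so far, `ROADS` is a simplified fragment of the Romania map containing only the cities named in the figures (the onward roads from Rimnicu Vilcea and Lugoj are left out), and the names `expand`, `enqueue_at_end` and `general_search` are illustrative. Passing a FIFO queueing function gives breadth-first search.

```python
from collections import deque

# Simplified fragment of the Romania map (assumption: only cities named
# in the slide figures; some real roads are omitted).
ROADS = {
    "Arad": ["Zerind", "Sibiu", "Timisoara"],
    "Zerind": ["Arad", "Oradea"],
    "Oradea": ["Zerind", "Sibiu"],
    "Sibiu": ["Arad", "Oradea", "Fagaras", "Rimnicu Vilcea"],
    "Timisoara": ["Arad", "Lugoj"],
    "Lugoj": ["Timisoara"],
    "Fagaras": ["Sibiu", "Bucharest"],
    "Rimnicu Vilcea": ["Sibiu"],
    "Bucharest": ["Fagaras"],
}


def expand(path):
    """Return one child path per neighbour of the last city on the path."""
    return [path + [city] for city in ROADS[path[-1]]]


def enqueue_at_end(nodes, successors):
    """Breadth-first queueing function: successors go to the back (FIFO)."""
    nodes.extend(successors)
    return nodes


def general_search(start, goal, queueing_fn):
    """Generic tree search; the queueing function decides the strategy."""
    nodes = deque([[start]])              # MAKE-QUEUE(MAKE-NODE(initial state))
    while nodes:                          # an empty queue means failure
        path = nodes.popleft()            # REMOVE-FRONT
        if path[-1] == goal:              # GOAL-TEST
            return path
        nodes = queueing_fn(nodes, expand(path))
    return None


route = general_search("Arad", "Bucharest", enqueue_at_end)
print(route)  # ['Arad', 'Sibiu', 'Fagaras', 'Bucharest']
```

Swapping `enqueue_at_end` for a function that places successors at the front of the queue would turn the same loop into depth-first search. On this map that variant can cycle forever (Arad, Zerind, Arad, ...), because the tree search keeps no record of visited cities.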
Properties of Breadth first cont.
- Complete: Yes, if branching factor (b) is finite
- Space: O(b^d), all nodes are in memory
- Optimal: Yes (if cost = 1 per step), not in general
- Assuming b = 10, 1 node per ms and 100 bytes per node

Uniform-cost
- QUEUING-FN: insert in order of increasing path cost
(Figure: search tree from Arad with step costs: Arad to Zerind 75, Arad to Sibiu 140, Arad to Timisoara 118, Zerind to Oradea 71, Sibiu to Oradea 151, Sibiu to Fagaras 99, Sibiu to Rimnicu Vilcea 80, Timisoara to Lugoj 111)

Properties of Uniform-cost
- Complete: Yes, if step cost >= epsilon
- Time: number of nodes with cost <= cost of optimal solution
- Space: number of nodes with cost <= cost of optimal solution
- Optimal: Yes

Depth-first
- QUEUING-FN: insert successors at front of queue (LIFO)
(Figure: depth-first expansion from Arad through Zerind, Sibiu, Oradea and Timisoara)

Properties of Depth-first
- Complete: No. Fails in infinite-depth spaces and spaces with loops; complete in finite spaces
- Time: O(b^m), bad if m is larger than d
- Space: O(bm), linear in space
- Optimal: No

Depth-limited
- Choose a limit to depth first strategy, e.g. 19 for the cities
- Works well if we know what the depth of the solution is
- Otherwise use Iterative deepening search (IDS)

Properties of depth limited
- Complete: Yes, if limit l >= depth of solution d
- Time: O(b^l)
- Space: O(bl)
- Optimal: No

Iterative deepening search (IDS)

    function ITERATIVE-DEEPENING-SEARCH():
        for depth = 0 to infinity do
            if DEPTH-LIMITED-SEARCH(depth) succeeds then return its result
        end
        return failure

Properties of IDS
- Complete: Yes
- Time: (d + 1)b^0 + d b^1 + (d - 1)b^2 + … + b^d = O(b^d)
- Space: O(bd)
- Optimal:
Yes, if step cost = 1

Comparisons

Summary
- Various uninformed search strategies
- Iterative deepening is linear in space, with not much more time than the others
- Use Bi-directional Iterative deepening where possible

Island Search
- Suppose that you happen to know that the optimal solution goes through Rimnicu Vilcea…

A* Search
- Uses evaluation function f = g + h
- g is a cost function
  - Total cost incurred so far from initial state
  - Used by uniform cost search
- h is an admissible heuristic
  - Guess of the remaining cost to goal state
  - Used by greedy search
  - Never overestimating makes h admissible

A*: Our Heuristic

A*
- QUEUING-FN: insert in order of f(n) = g(n) + h(n)
- Expanding Arad:
  - Zerind: g(Zerind) = 75, h(Zerind) = 374, f(Zerind) = 75 + 374
  - Sibiu: g(Sibiu) = 140, h(Sibiu) = 253, f(Sibiu) = …
  - Timisoara: g(Timisoara) = 118, h(Timisoara) = 329

Properties of A*
- Optimal and complete
- Admissibility guarantees optimality of A*
- Becomes uniform cost search if h = 0
- Reduces time bound from O(b^d) to O(b^(d - e))
  - b is asymptotic branching factor of tree
  - d is average value of depth of search
  - e is expected value of the heuristic h
- Exponential memory usage of O(b^d)
  - Same as BFS and uniform cost.
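The A* queueing rule, ordering the queue by f(n) = g(n) + h(n), can be sketched with a priority queue. This is a hedged sketch, not the slides' implementation. The step costs and heuristic values for Zerind, Sibiu and Timisoara come from the slides; the remaining road costs and straight-line distances to Bucharest are assumptions taken from the commonly used textbook version of the Romania map, and only part of the map is included. The names `EDGES`, `H` and `a_star` are illustrative.

```python
import heapq

# Road costs. Roads not shown in the slides are assumed from the
# standard textbook Romania map; this is only a fragment of it.
EDGES = [
    ("Arad", "Zerind", 75), ("Arad", "Sibiu", 140), ("Arad", "Timisoara", 118),
    ("Zerind", "Oradea", 71), ("Oradea", "Sibiu", 151),
    ("Sibiu", "Fagaras", 99), ("Sibiu", "Rimnicu Vilcea", 80),
    ("Timisoara", "Lugoj", 111), ("Fagaras", "Bucharest", 211),
    ("Rimnicu Vilcea", "Pitesti", 97), ("Rimnicu Vilcea", "Craiova", 146),
    ("Pitesti", "Craiova", 138), ("Pitesti", "Bucharest", 101),
]
ROADS = {}
for a, b, cost in EDGES:                  # roads run in both directions
    ROADS.setdefault(a, {})[b] = cost
    ROADS.setdefault(b, {})[a] = cost

# h(n): assumed straight-line distance to Bucharest (never overestimates).
H = {
    "Arad": 366, "Zerind": 374, "Oradea": 380, "Sibiu": 253,
    "Timisoara": 329, "Lugoj": 244, "Fagaras": 176, "Rimnicu Vilcea": 193,
    "Pitesti": 100, "Craiova": 160, "Bucharest": 0,
}


def a_star(start, goal):
    """Return (cost, path) of a cheapest route, or None if there is none."""
    frontier = [(H[start], 0, [start])]   # entries are (f, g, path)
    best_g = {start: 0}                   # cheapest g found so far per city
    while frontier:
        f, g, path = heapq.heappop(frontier)   # lowest f = g + h first
        city = path[-1]
        if city == goal:
            return g, path
        for neighbour, step in ROADS[city].items():
            g2 = g + step
            if g2 < best_g.get(neighbour, float("inf")):
                best_g[neighbour] = g2
                heapq.heappush(frontier, (g2 + H[neighbour], g2, path + [neighbour]))
    return None


cost, path = a_star("Arad", "Bucharest")
print(cost, path)
# 418 ['Arad', 'Sibiu', 'Rimnicu Vilcea', 'Pitesti', 'Bucharest']
```

With `H` set to 0 for every city the same function orders the queue by g alone, which is uniform cost search. Under the assumed costs the cheapest route passes through Rimnicu Vilcea, as in the Island Search slide.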
An iterative deepening version of A* is possible: IDA*

IDA*
- Solves problem of A* memory usage
  - Reduces usage from O(b^d) to O(bd)
  - Many more problems now possible
- Easier to implement than A*
  - Don’t need to store previously visited nodes
- AI Search problem transformed
  - Now problem of developing admissible heuristic
  - Like The Price is Right, the closer a heuristic comes without going over, the better it is
  - Heuristics with just slightly higher expected values can result in significant performance gains

A* “trick”
- Suppose you have two admissible heuristics…
  - But h1(n) > h2(n)
  - You may as well forget h2(n)
- Suppose you have two admissible heuristics…
  - Sometimes h1(n) > h2(n) and sometimes h1(n) < h2(n)
  - We can now define a better heuristic, h3
  - h3(n) = max( h1(n) , h2(n) )

What difference does the heuristic make?
- Suppose you have three admissible heuristics…
  - h1(n) is h(n) = 0 (same as uniform cost)
  - h2(n) is misplaced tiles
  - h3(n) is Manhattan distance

           Search Cost                          Effective Branching Factor
    Depth  A*(h1)        A*(h2)  A*(h3)         A*(h1)  A*(h2)  A*(h3)
    2      10            6       6              2.45    1.79    1.79
    4      112           13      12             2.87    1.48    1.45
    6      680           20      18             2.73    1.34    1.30
    8      6384          39      25             2.80    1.33    1.24
    10     47127         93      39             2.79    1.38    1.22
    12     364404        227     73             2.78    1.42    1.24
    14     3473941       539     113            2.83    1.44    1.23
    16     Big number    1301    211            -       1.45    1.25
    18     Real big Num  3056    363            -       1.46    1.26

Game Search (Adversarial Search)
- The study of games is called game theory
  - A branch of economics
- We’ll consider special kinds of games
  - Deterministic
  - Two-player
  - Zero-sum
  - Perfect information

Games
- A zero-sum game means that the utility values at the end of the game total to 0
  - e.g. +1 for winning, -1 for losing, 0 for tie
- Some kinds of games
  - Chess, checkers, tic-tac-toe, etc.

Problem Formulation
- Initial state
  - Initial board position, player to move
- Operators
  - Returns list of (move, state) pairs, one per legal move
- Terminal test
  - Determines when the game is over
- Utility function
  - Numeric value for states
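The four parts of the game problem formulation can be illustrated with tic-tac-toe, one of the games named above. This is a sketch under assumptions that are not in the slides: a state is a pair (board, player to move), the board is a tuple of nine cells holding "X", "O" or " ", X plays the role of Max, and the function names are illustrative.

```python
# The eight winning lines of a 3 by 3 board, as cell indices 0..8.
LINES = [(0, 1, 2), (3, 4, 5), (6, 7, 8),
         (0, 3, 6), (1, 4, 7), (2, 5, 8),
         (0, 4, 8), (2, 4, 6)]


def initial_state():
    """Initial board position and the player to move."""
    return (" ",) * 9, "X"


def operators(state):
    """Return a list of (move, state) pairs, one per legal move."""
    board, player = state
    other = "O" if player == "X" else "X"
    pairs = []
    for move, cell in enumerate(board):
        if cell == " ":
            new_board = board[:move] + (player,) + board[move + 1:]
            pairs.append((move, (new_board, other)))
    return pairs


def winner(board):
    """Return "X" or "O" if that player has three in a row, else None."""
    for a, b, c in LINES:
        if board[a] != " " and board[a] == board[b] == board[c]:
            return board[a]
    return None


def terminal_test(state):
    """The game is over when someone has won or the board is full."""
    board, _ = state
    return winner(board) is not None or " " not in board


def utility(state):
    """Numeric value of a terminal state: +1 X wins, -1 O wins, 0 tie."""
    return {"X": 1, "O": -1, None: 0}[winner(state[0])]


start = initial_state()
print(len(operators(start)))  # 9 legal opening moves
```

Because the utility is stated from X's point of view, the value for O is simply its negation, which is what makes the game zero-sum.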
Example utility values in chess: +1, -1, 0

Game Tree
(Figure: game tree)

Game Trees
- Each level labeled with player to move
  - Max if player wants to maximize utility
  - Min if player wants to minimize utility
- Each level represents a ply
  - Half a turn

Optimal Decisions
- MAX wants to maximize utility, but knows MIN is trying to prevent that
  - MAX wants a strategy for maximizing utility assuming MIN will do its best to minimize MAX’s utility
- Consider minimax value of each node
  - Utility of node assuming players play optimally

Minimax Algorithm
- Calculate minimax value of each node recursively
- Depth-first exploration of tree
- Game tree (aka minimax tree)
(Figure: game tree with Max nodes and Min nodes marked)

Example
(Figure: minimax tree with a Max root of value 5, a Min level below it, and utility values at the leaves)

Minimax Algorithm
- Time complexity: O(b^m)
- Space complexity: O(bm) or O(m)
- Is this practical?
  - Chess, b = 35, m = 100 (50 moves per player)
  - 35^100 ≈ 10^154 nodes to visit

Alpha-Beta Pruning
- Improvement on minimax algorithm
- Effectively cut exponent in half
- Prune or cut out large parts of the tree
- Basic idea
  - Once you know that a subtree is worse than another option, don’t waste time figuring out exactly how much worse

Alpha-Beta Pruning Example
(Figure: example game tree with two alpha-pruned branches and one beta-pruned branch)
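The minimax recursion and the alpha-beta cut-off can be sketched on a small explicit tree. This is an illustrative sketch only: the tree `TREE` is a made-up example (not the one in the slide figures), inner nodes are Python lists, leaves are utility numbers, and the `visited` list is a hypothetical addition used to show which leaves get evaluated.

```python
import math


def minimax(node, maximizing):
    """Plain minimax value of a node, exploring the tree depth-first."""
    if not isinstance(node, list):        # leaf: its utility
        return node
    values = [minimax(child, not maximizing) for child in node]
    return max(values) if maximizing else min(values)


def alphabeta(node, maximizing, alpha=-math.inf, beta=math.inf, visited=None):
    """Minimax value with alpha-beta pruning; records evaluated leaves."""
    if not isinstance(node, list):
        if visited is not None:
            visited.append(node)
        return node
    if maximizing:
        value = -math.inf
        for child in node:
            value = max(value, alphabeta(child, False, alpha, beta, visited))
            alpha = max(alpha, value)
            if alpha >= beta:             # Min above will never allow this
                break
        return value
    value = math.inf
    for child in node:
        value = min(value, alphabeta(child, True, alpha, beta, visited))
        beta = min(beta, value)
        if alpha >= beta:                 # Max above already has better
            break
    return value


# Hypothetical tree: a Max root over three Min nodes with three leaves each.
TREE = [[3, 12, 8], [2, 4, 6], [14, 5, 2]]

seen = []
print(minimax(TREE, True))                    # 3
print(alphabeta(TREE, True, visited=seen))    # 3
print(seen)                                   # [3, 12, 8, 2, 14, 5, 2]
```

In the second Min node the first leaf (2) is already worse for Max than the 3 available from the first node, so the leaves 4 and 6 are never evaluated. The result is the same as plain minimax with fewer leaves visited.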