AR Processes

advertisement

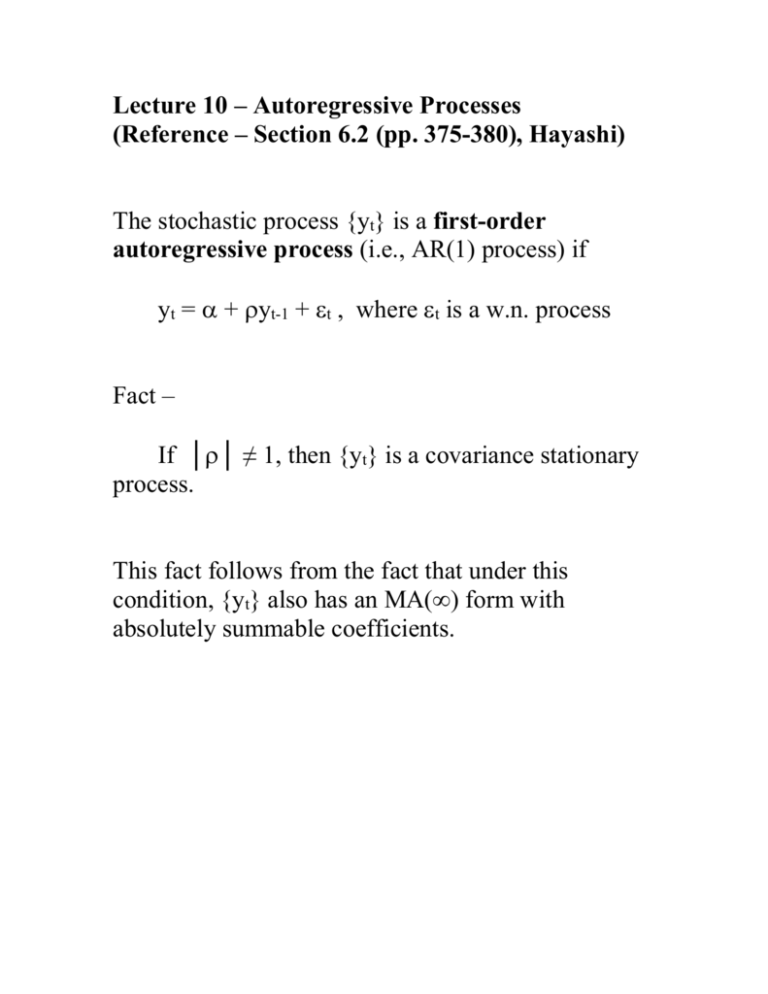

Lecture 10 – Autoregressive Processes

(Reference – Section 6.2 (pp. 375-380), Hayashi)

The stochastic process $\{y_t\}$ is a first-order autoregressive process (i.e., an AR(1) process) if

$y_t = c + \phi y_{t-1} + \varepsilon_t$, where $\varepsilon_t$ is a white noise (w.n.) process.

Fact –

If $|\phi| \neq 1$, then $\{y_t\}$ is a covariance stationary process.

This fact follows from the fact that under this

condition, {yt} also has an MA(∞) form with

absolutely summable coefficients.

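1. Suppose $|\phi| < 1$.

Recall –

$$y_t = \mu + \sum_{s=0}^{\infty} \phi^s \varepsilon_{t-s} = \mu + (1 - \phi L)^{-1} \varepsilon_t$$

and so,

$$(1 - \phi L) y_t = (1 - \phi L)\mu + \varepsilon_t$$

or,

$$y_t = c + \phi y_{t-1} + \varepsilon_t,$$

where $c = (1-\phi)\mu$ (since the lag operator leaves a constant unchanged, $L\mu = \mu$).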

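2. Now suppose $|\phi| > 1$, which means that $|1/\phi| < 1$.

Consider the process $\{y_t\}$ defined according to

$$y_t = \mu - \sum_{s=1}^{\infty} \phi^{-s} \varepsilon_{t+s} = \mu - \Big(\sum_{s=1}^{\infty} \phi^{-s} L^{-s}\Big) \varepsilon_t,$$

which is a one-sided MA(∞) in terms of future $\varepsilon$'s.

This is a covariance stationary process because the sequence of coefficients $\{\phi^{-s}\}$ is absolutely summable. Next, note that

$$-\sum_{s=1}^{\infty} \phi^{-s} L^{-s} = (1 - \phi L)^{-1}$$

since $(1 - \phi L)(-\phi^{-1}L^{-1} - \phi^{-2}L^{-2} - \dots) = 1$.

So, in this case,

$$y_t = \mu + (1 - \phi L)^{-1}\varepsilon_t, \quad \text{where } (1 - \phi L)^{-1} = -\sum_{s=1}^{\infty} \phi^{-s} L^{-s},$$

and, as above, if we multiply both sides by $(1 - \phi L)$ and rearrange, we obtain:

$y_t = c + \phi y_{t-1} + \varepsilon_t$, where $c = (1-\phi)\mu$.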
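As a numerical sanity check on the forward-looking case, the sketch below builds $y_t = \mu - \sum_{s \ge 1} \phi^{-s}\varepsilon_{t+s}$ from a finite shock sequence and confirms that it satisfies the AR(1) recursion. The function name `forward_solution`, the parameter values, and the convention that shocks beyond the end of the sample are zero are all assumptions made for illustration.

```python
import numpy as np

def forward_solution(eps, phi, mu):
    """y_t = mu - sum_{s>=1} phi**(-s) * eps[t+s].

    Shocks past the end of `eps` are treated as zero (an illustrative
    assumption so that the infinite sum becomes a finite one).
    """
    T = len(eps)
    y = np.empty(T)
    for t in range(T):
        future = eps[t + 1:]  # eps_{t+1}, eps_{t+2}, ...
        weights = phi ** -np.arange(1, len(future) + 1, dtype=float)
        y[t] = mu - np.dot(weights, future)
    return y

rng = np.random.default_rng(0)
phi, mu = 1.5, 2.0            # |phi| > 1: the forward-looking case
c = (1 - phi) * mu
eps = rng.standard_normal(50)

y = forward_solution(eps, phi, mu)

# Check y_t = c + phi*y_{t-1} + eps_t for t = 1, ..., T-1
residual = y[1:] - (c + phi * y[:-1] + eps[1:])
max_abs_residual = np.max(np.abs(residual))
print(max_abs_residual)  # should be at the level of floating-point rounding
```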

So, an AR(1) process $y_t$ is a covariance stationary process in terms of current and past values of the white noise process $\varepsilon_t$ if $|\phi| < 1$. It is a covariance stationary process in terms of future values of the white noise process $\varepsilon_t$ if $|\phi| > 1$. In economics, we are generally only interested in AR(1) processes that evolve forward from the past, so we usually rule out backward-evolving AR(1) processes with $|\phi| > 1$.

We refer to the condition that $|\phi| < 1$ as the stationarity condition.

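3. What if $\phi = 1$ (or $-1$)?

In this special case, which is called the unit root case, $y_t$ is a nonstationary process – it does not have a moving average form with absolutely summable coefficients. For $\phi = 1$:

$$\begin{aligned} y_t &= c + y_{t-1} + \varepsilon_t \\ &= c + (c + y_{t-2} + \varepsilon_{t-1}) + \varepsilon_t \\ &\;\;\vdots \\ &= jc + y_{t-j} + (\varepsilon_t + \varepsilon_{t-1} + \dots + \varepsilon_{t-j+1}) \end{aligned}$$

and so on. The effects of past $\varepsilon$'s on current $y$ do not decrease; $dy_t/d\varepsilon_{t-s} = 1$ for all $s$!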
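To make the contrast with the stationary case concrete, the following sketch tabulates the effect of a unit shock $s$ periods ago on the current value, which is $\phi^s$ under the AR(1) recursion. The helper name `impulse_response` and the example values of $\phi$ are assumptions chosen for illustration.

```python
import numpy as np

def impulse_response(phi, horizon):
    """Effect of a unit shock s periods ago on current y, for s = 0..horizon,
    in the AR(1) recursion y_t = c + phi*y_{t-1} + eps_t (this is phi**s)."""
    return phi ** np.arange(horizon + 1, dtype=float)

stationary = impulse_response(0.5, 10)  # decays geometrically toward zero
unit_root = impulse_response(1.0, 10)   # stays at 1 at every horizon

print(stationary)
print(unit_root)
```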

The unit root case is very important in applied and

theoretical time series econometrics. For example,

when we first difference a time series, like log(real

GDP), to make it stationary, we are assuming that

the time series is a unit root process. (More on this in

Econ 674)

Consider an AR(1) that satisfies the stationarity condition. It is covariance-stationary and its mean, variance, and autocovariance function can be determined by its MA(∞) form, $y_t = \mu + \sum_{s=0}^{\infty} \phi^s \varepsilon_{t-s}$:

$E(y_t) = \mu$, and $\mathrm{Var}(y_t) = \sigma^2/(1-\phi^2)$ where $\sigma^2 = \mathrm{var}(\varepsilon_t)$, and so on.

Another approach –

$y_t = c + \phi y_{t-1} + \varepsilon_t$

$\Rightarrow$

$E(y_t) = c + \phi E(y_{t-1})$, since $E(c) = c$ and $E(\varepsilon_t) = 0$

$E(y_t) = c + \phi E(y_t)$, since $y_t$ is stationary

$E(y_t) = c/(1-\phi) = \mu$

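WLOG, assume $c = 0$ so that $E(y_t) = 0$. (This won’t affect the solutions for the variances or autocovariances and it simplifies their derivations.)

$$\begin{aligned} \gamma_0 = E(y_t^2) &= E[(\phi y_{t-1} + \varepsilon_t)^2] \\ &= \phi^2 E(y_{t-1}^2) + E(\varepsilon_t^2) + 2\phi E(y_{t-1}\varepsilon_t) \\ &= \phi^2 E(y_t^2) + \sigma^2 + 0 \end{aligned}$$

Since $E(y_t^2) = \gamma_0$, solving for $\gamma_0$ gives $\gamma_0 = \sigma^2/(1-\phi^2)$.

$$\gamma_1 = E(y_t y_{t-1}) = E[(\phi y_{t-1} + \varepsilon_t) y_{t-1}] = \phi\gamma_0$$

$$\gamma_2 = E(y_t y_{t-2}) = E[(\phi y_{t-1} + \varepsilon_t) y_{t-2}] = \phi\gamma_1 = \phi^2\gamma_0$$

and so on …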

So, for the stationary AR(1) process

$\gamma_0 = \sigma^2/(1-\phi^2)$ and $\gamma_j = \phi^j\gamma_0$, $j = 0,1,2,\dots$

$r_j = \gamma_j/\gamma_0 = \phi^j$, $j = 0,1,2,\dots$
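The closed-form autocovariances can be cross-checked against the MA(∞) form, where the autocovariance at lag $j$ is $\sigma^2 \sum_s \phi^s \phi^{s+j}$. The sketch below does this with a truncated sum; the function names, the truncation length `n_terms`, and the example parameter values are assumptions for illustration only.

```python
import numpy as np

def ar1_autocov_closed_form(phi, sigma2, max_lag):
    """gamma_j = phi**j * sigma2 / (1 - phi**2) for j = 0..max_lag."""
    gamma0 = sigma2 / (1 - phi ** 2)
    return gamma0 * phi ** np.arange(max_lag + 1, dtype=float)

def ar1_autocov_from_ma(phi, sigma2, max_lag, n_terms=2000):
    """Same quantities from the MA(infinity) weights phi**s, truncated at n_terms."""
    psi = phi ** np.arange(n_terms + max_lag, dtype=float)
    return np.array([
        sigma2 * np.dot(psi[:n_terms], psi[j:j + n_terms])
        for j in range(max_lag + 1)
    ])

phi, sigma2 = 0.8, 1.0
gamma = ar1_autocov_closed_form(phi, sigma2, 5)
gamma_ma = ar1_autocov_from_ma(phi, sigma2, 5)
r = gamma / gamma[0]  # autocorrelations r_j

print(gamma)
print(r)
```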

What is the shape of the autocorrelogram for the

AR(1)? How does it differ from the shape of, say, the

MA(1) or MA(2)?

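Forecasting with the AR(1) process –

Suppose $y_t = c + \phi y_{t-1} + \varepsilon_t$. Then

$$E(y_t \mid y_{t-1}, y_{t-2}, \dots, \varepsilon_{t-1}, \varepsilon_{t-2}, \dots) = E(y_t \mid \varepsilon_{t-1}, \varepsilon_{t-2}, \dots) = E(y_t \mid y_{t-1}, y_{t-2}, \dots) = c + \phi y_{t-1}$$

Note that $\varepsilon_t = y_t - E(y_t \mid y_{t-1}, y_{t-2}, \dots)$ and so is called the innovation in $y_t$.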

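Similarly,

$$E(y_{t+1} \mid y_{t-1}, y_{t-2}, \dots, \varepsilon_{t-1}, \varepsilon_{t-2}, \dots) = E(y_{t+1} \mid \varepsilon_{t-1}, \varepsilon_{t-2}, \dots) = E(y_{t+1} \mid y_{t-1}, y_{t-2}, \dots) = (1+\phi)c + \phi^2 y_{t-1}$$

To see this, note that $y_{t+1} = c + \phi y_t + \varepsilon_{t+1}$, so

$$\begin{aligned} E(y_{t+1} \mid y_{t-1}, y_{t-2}, \dots) &= c + \phi E(y_t \mid y_{t-1}, y_{t-2}, \dots) + E(\varepsilon_{t+1} \mid y_{t-1}, y_{t-2}, \dots) \\ &= c + \phi(c + \phi y_{t-1}) + 0 \end{aligned}$$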

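More generally –

$$E(y_{t+s} \mid y_t, y_{t-1}, \dots) = \begin{cases} y_{t+s} & \text{for } s \le 0 \\ (1 + \phi + \dots + \phi^{s-1})c + \phi^s y_t & \text{for } s > 0 \end{cases}$$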
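The $s$-step forecast formula can be checked by iterating the one-step rule $c + \phi \cdot (\text{previous forecast})$ and comparing with the closed form. This is a minimal sketch; the function names and the numbers in the example are hypothetical.

```python
def ar1_forecast_recursive(c, phi, y_t, s):
    """Iterate the one-step forecast rule s times, starting from the observed y_t."""
    forecast = y_t
    for _ in range(s):
        forecast = c + phi * forecast
    return forecast

def ar1_forecast_closed_form(c, phi, y_t, s):
    """(1 + phi + ... + phi**(s-1)) * c + phi**s * y_t, for s > 0."""
    return c * sum(phi ** k for k in range(s)) + phi ** s * y_t

c, phi, y_t = 1.0, 0.5, 4.0
three_step_recursive = ar1_forecast_recursive(c, phi, y_t, 3)
three_step_closed = ar1_forecast_closed_form(c, phi, y_t, 3)
print(three_step_recursive, three_step_closed)  # both 2.25
```

With $|\phi| < 1$, the forecast moves toward the unconditional mean $c/(1-\phi)$ (here 2) as the horizon $s$ grows.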

The AR(p) Model –

The stochastic process $\{y_t\}$ is a pth-order autoregressive process (i.e., an AR(p) process) if

$y_t = c + \phi_1 y_{t-1} + \dots + \phi_p y_{t-p} + \varepsilon_t$,

where $\varepsilon_t$ is a w.n. process

or, writing it in terms of the lag operator,

$\phi(L) y_t = c + \varepsilon_t$, where $\phi(L) = 1 - \phi_1 L - \dots - \phi_p L^p$

The stationarity condition for the AR(p) model is that the roots of the polynomial $\phi(z)$ all lie outside of the unit circle (or equivalently, the roots of $z^p - \phi_1 z^{p-1} - \dots - \phi_p$ all lie inside the unit circle).
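One way to check the stationarity condition numerically is to compute the roots of $z^p - \phi_1 z^{p-1} - \dots - \phi_p$ and test whether they all lie inside the unit circle. The sketch below uses `numpy.roots`; the function name `is_stationary` and the example coefficients are assumptions for illustration.

```python
import numpy as np

def is_stationary(phis):
    """phis = [phi_1, ..., phi_p].

    Returns True if every root of z^p - phi_1 z^(p-1) - ... - phi_p
    lies strictly inside the unit circle.
    """
    # numpy.roots expects coefficients ordered from the highest power down
    coeffs = np.concatenate(([1.0], -np.asarray(phis, dtype=float)))
    roots = np.roots(coeffs)
    return bool(np.all(np.abs(roots) < 1.0))

print(is_stationary([0.5]))       # AR(1) with |phi| < 1
print(is_stationary([1.0]))       # unit root
print(is_stationary([0.5, 0.3]))  # an AR(2) example
print(is_stationary([0.5, 0.6]))  # an AR(2) example with a root outside
```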

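If the stationarity condition holds, then $\{y_t\}$ is a covariance stationary process with a one-sided MA(∞) representation –

$$y_t = \mu + \sum_{s=0}^{\infty} \psi_s \varepsilon_{t-s},$$

where

$$\mu = \phi(1)^{-1}c = c/(1 - \phi_1 - \dots - \phi_p), \qquad \psi(L) = \phi(L)^{-1},$$

and the coefficients of $\psi(L)$ are absolutely summable.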

Note that the mean of the stationary AR(p) process is

μ.

The variance of the process is

$$\gamma_0 = \sigma^2 \sum_{s=0}^{\infty} \psi_s^2.$$
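The MA(∞) weights can be obtained numerically by matching coefficients in $\phi(L)\psi(L) = 1$, which gives $\psi_0 = 1$ and $\psi_j = \phi_1\psi_{j-1} + \dots + \phi_p\psi_{j-p}$ (with $\psi_k = 0$ for $k < 0$). The sketch below uses that recursion and then approximates the variance with a truncated sum of squared weights; the function name, the truncation length, and the parameter values are assumptions for illustration.

```python
import numpy as np

def ma_coefficients(phis, n_terms):
    """First n_terms MA(infinity) weights psi_0, psi_1, ... of a stationary AR(p)."""
    phis = np.asarray(phis, dtype=float)
    p = len(phis)
    psi = np.zeros(n_terms)
    psi[0] = 1.0
    for j in range(1, n_terms):
        # psi_j = sum over i = 1..min(j, p) of phi_i * psi_{j-i}
        for i in range(1, min(j, p) + 1):
            psi[j] += phis[i - 1] * psi[j - i]
    return psi

sigma2 = 1.0

psi_ar1 = ma_coefficients([0.8], 500)
var_ar1 = sigma2 * np.sum(psi_ar1 ** 2)  # truncated approximation to the variance

psi_ar2 = ma_coefficients([0.5, 0.3], 4)
print(var_ar1)
print(psi_ar2)
```

For the AR(1) case the weights reduce to $\phi^s$, so the truncated sum should be close to $\sigma^2/(1-\phi^2)$.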

The autocovariance function (and, therefore, the autocorrelation function) can be derived from the Yule-Walker equations (which are described for the AR(1) case in Exercise 5, pp. 433-444, Hayashi). The details of computing and characterizing the autocovariance function for the general AR(p) model are not of great importance for our purposes. We note, however, that

- the autocovariance function can be calculated from the AR coefficients (via, e.g., the Yule-Walker equations)
- the autocovariance function for an AR(p) is an infinite sequence $\{\gamma_j\}$
- the sequence of autocovariances is absolutely summable
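As an illustration of the first point, the sketch below computes autocorrelations from the AR coefficients using the standard Yule-Walker relations $r_j = \phi_1 r_{j-1} + \dots + \phi_p r_{j-p}$ for $j \ge 1$, with $r_0 = 1$ and $r_{-k} = r_k$. It solves the first $p$ equations as a linear system and then iterates the recursion. The function name and example coefficients are hypothetical, and this is one possible arrangement of the equations, not necessarily the one used in Hayashi's exercise.

```python
import numpy as np

def yule_walker_autocorr(phis, max_lag):
    """Autocorrelations r_0..r_max_lag of a stationary AR(p) with coefficients phis."""
    phis = np.asarray(phis, dtype=float)
    p = len(phis)

    # Equations for j = 1..p: r_j - sum_i phi_i * r_|j-i| = 0, with r_0 = 1 known.
    A = np.eye(p)
    b = np.zeros(p)
    for j in range(1, p + 1):
        for i in range(1, p + 1):
            k = abs(j - i)
            if k == 0:
                b[j - 1] += phis[i - 1]         # phi_i * r_0 moves to the right-hand side
            else:
                A[j - 1, k - 1] -= phis[i - 1]  # coefficient on the unknown r_k

    rho = np.empty(max(max_lag, p) + 1)
    rho[0] = 1.0
    rho[1:p + 1] = np.linalg.solve(A, b)

    # Higher lags follow from the recursion r_j = phi_1 r_{j-1} + ... + phi_p r_{j-p}
    for j in range(p + 1, len(rho)):
        rho[j] = np.dot(phis, rho[j - 1:j - p - 1:-1])
    return rho[:max_lag + 1]

r_ar1 = yule_walker_autocorr([0.8], 4)
r_ar2 = yule_walker_autocorr([0.5, 0.3], 3)
print(r_ar1)
print(r_ar2)
```

Multiplying the autocorrelations by the variance $\gamma_0$ gives the autocovariances.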

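Forecasting using the AR(p) model –

Suppose that $y_t$ is an AR(p) process,

$y_t = c + \phi_1 y_{t-1} + \dots + \phi_p y_{t-p} + \varepsilon_t$

Then

$$\hat{y}_{t|t-1} = E(y_t \mid y_{t-1}, y_{t-2}, \dots, \varepsilon_{t-1}, \varepsilon_{t-2}, \dots) = E(y_t \mid \varepsilon_{t-1}, \varepsilon_{t-2}, \dots) = E(y_t \mid y_{t-1}, y_{t-2}, \dots) = c + \phi_1 y_{t-1} + \dots + \phi_p y_{t-p}$$

Thus, $\varepsilon_t = y_t - \hat{y}_{t|t-1}$ = the innovation in $y_t$.

Similarly –

$$\hat{y}_{t+1|t-1} = c + \phi_1 \hat{y}_{t|t-1} + \phi_2 y_{t-1} + \dots + \phi_p y_{t-p+1}$$

$$\hat{y}_{t+2|t-1} = c + \phi_1 \hat{y}_{t+1|t-1} + \phi_2 \hat{y}_{t|t-1} + \dots + \phi_p y_{t-p+2}$$

and so on.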
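The forecasting recursion above amounts to applying the AR(p) equation repeatedly, replacing each not-yet-observed value with its own forecast. A minimal sketch follows; the function name `arp_forecasts`, the argument layout (most recent observation last), and the example numbers are assumptions for illustration.

```python
def arp_forecasts(c, phis, history, steps):
    """Forecasts of y_t, y_{t+1}, ..., y_{t+steps-1} given observations up to y_{t-1}.

    phis    = [phi_1, ..., phi_p]
    history = [..., y_{t-2}, y_{t-1}], most recent value last; needs at least p entries.
    """
    p = len(phis)
    values = list(history[-p:])  # the p most recent values, oldest first
    forecasts = []
    for _ in range(steps):
        # reversed(values) yields the most recent value first, pairing it with phi_1
        y_hat = c + sum(phi * v for phi, v in zip(phis, reversed(values)))
        forecasts.append(y_hat)
        values = values[1:] + [y_hat]  # the forecast stands in for the unobserved value
    return forecasts

ar1_path = arp_forecasts(1.0, [0.5], [4.0], 3)
ar2_path = arp_forecasts(0.0, [0.5, 0.3], [1.0, 2.0], 3)
print(ar1_path)
print(ar2_path)
```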

In econometrics, if we have a time series {yt} that we

believe is a stationary time series, we are typically

willing to assume that it has an AR(p) form, for

sufficiently large p (and, therefore, it also has an

MA(∞)).

From the point of view of the econometric theorist, it

turns out that the MA(∞) form of the model is often

the more useful representation of the process.

However, from the point of view of the applied

econometrician, the AR(p) form is generally more

useful, because it is essentially just a linear regression

model with serially uncorrelated and homoskedastic

disturbances.
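To illustrate the linear-regression view, the sketch below simulates an AR(2) and regresses $y_t$ on a constant and its own first two lags by ordinary least squares. Everything here is an assumption made for illustration: the function names, the Gaussian shocks, the burn-in length, the sample size, and the parameter values. It is not a statement of how estimation is developed later in the course.

```python
import numpy as np

def simulate_ar(c, phis, n, rng, burn_in=200):
    """Simulate n observations of an AR(p) with standard normal shocks.

    The path starts from zeros and the first burn_in values are discarded.
    """
    p = len(phis)
    total = n + burn_in + p
    y = np.zeros(total)
    eps = rng.standard_normal(total)
    for t in range(p, total):
        lags = y[t - p:t][::-1]  # y_{t-1}, ..., y_{t-p}
        y[t] = c + np.dot(phis, lags) + eps[t]
    return y[-n:]

def fit_ar_ols(y, p):
    """OLS of y_t on a constant and y_{t-1}, ..., y_{t-p}.

    Returns [c_hat, phi_1_hat, ..., phi_p_hat].
    """
    n = len(y)
    columns = [np.ones(n - p)] + [y[p - k:n - k] for k in range(1, p + 1)]
    X = np.column_stack(columns)
    coef, *_ = np.linalg.lstsq(X, y[p:], rcond=None)
    return coef

rng = np.random.default_rng(0)
y = simulate_ar(1.0, [0.5, 0.3], 5000, rng)
coef = fit_ar_ols(y, 2)
print(coef)  # estimates of c, phi_1, phi_2
```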

The practical (and related) problems are 1) how to select p, 2) for given p, how to estimate the parameters of the AR(p) model, and 3) how to test restrictions on the model’s coefficients.

We’ll come back to these issues shortly.

A Note on ARMA(p,q) Processes

In addition to the pure AR and pure MA processes,

we can define the ARMA(p,q) process according to –

$y_t = c + \phi_1 y_{t-1} + \dots + \phi_p y_{t-p} + \theta_0\varepsilon_t + \theta_1\varepsilon_{t-1} + \dots + \theta_q\varepsilon_{t-q}$

Sometimes in practice it turns out that neither a low

order AR(p) model nor a low order MA(q) can

account for all of the serial correlation in yt. In these

cases, however, an ARMA(p,q), with small p+q, may

work well and will have the advantage of explaining

the serial correlation pattern in terms of a relatively

small number of unknown parameters.
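As a small illustration of the ARMA(p,q) recursion, the sketch below generates a path from a given shock sequence. The function name `arma_path`, the convention that all pre-sample values of $y$ and $\varepsilon$ are zero, and the example numbers are assumptions for illustration.

```python
def arma_path(c, phis, thetas, eps):
    """Generate y_t = c + sum_i phi_i*y_{t-i} + sum_k theta_k*eps_{t-k}.

    phis   = [phi_1, ..., phi_p]
    thetas = [theta_0, theta_1, ..., theta_q]
    Pre-sample values of y and eps are set to zero (an illustrative assumption).
    """
    y = []
    for t in range(len(eps)):
        ar_part = sum(phi * y[t - i]
                      for i, phi in enumerate(phis, start=1) if t - i >= 0)
        ma_part = sum(theta * eps[t - k]
                      for k, theta in enumerate(thetas) if t - k >= 0)
        y.append(c + ar_part + ma_part)
    return y

# Response of an ARMA(1,1) to a single unit shock at t = 0
path = arma_path(0.0, [0.5], [1.0, 0.4], [1.0, 0.0, 0.0])
print(path)
```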