A Digression on Vector Autoregressions (VARs)

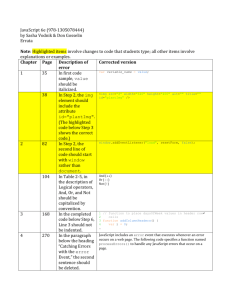

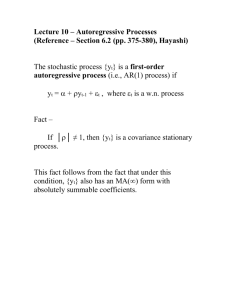

Lecture 11 - Vector Autoregressions (VARs) (Reference: Section 6.3, Hayashi)

The AR(p) model for the univariate time series $y_t$ provides a simple and useful model for forecasting the future values of $y$ from its own past history. However, it seems overly constraining to use only its own past history. Wouldn't we generate better forecasts if we could also use the past history of other variables that help determine $y_t$? The VAR(p) model is a natural generalization of the AR(p) model that provides an equally simple forecasting model, one that uses the past history of a vector of time series (including $y$ itself) to forecast the future values of $y$.

Let $Y_t = [y_{1t}, \ldots, y_{nt}]'$, $t = \ldots, -1, 0, 1, \ldots$, be an n-dimensional vector time series. Assume that it has the following p-th order vector autoregressive (VAR(p)) representation:

$$Y_t = A_0 + A_1 Y_{t-1} + \cdots + A_p Y_{t-p} + \varepsilon_t$$

where $A_0$ is an $n \times 1$ constant vector; $A_1, \ldots, A_p$ are $n \times n$ constant matrices; and $\varepsilon_t$ is an $n \times 1$ vector white noise process (i.e., $E(\varepsilon_t) = 0$, $E(\varepsilon_t \varepsilon_t') = \Sigma$, and $E(\varepsilon_t \varepsilon_s') = 0$ if $t \neq s$). So each component of $Y_t$, say $y_{it}$, depends on p lagged values of itself and of the other n-1 variables in the system.

The stationarity condition for the VAR(p) model, which assures the existence of a well-defined one-sided VMA(∞) representation, is that the roots of the determinantal equation

$$\det(I_n - A_1 z - \cdots - A_p z^p) = 0$$

all lie outside the unit circle or, equivalently, that the roots of the determinantal equation

$$\det(I_n z^p - A_1 z^{p-1} - \cdots - A_p) = 0$$

all lie inside the unit circle.

The vector version of the Wold Decomposition Theorem says that if $Y_t$ is a zero mean (linearly indeterministic) covariance stationary process, then it has a one-sided VMA(∞) representation whose coefficients are absolutely summable. (See Hayashi, p. 387, for the definition of the absolute summability condition in the vector case.) If, in addition, $Y_t$ has a VAR(p) representation, then the VAR(p)'s coefficients will satisfy the stationarity condition.
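As a rough numerical illustration of the stationarity condition, the sketch below stacks the lag matrices into the companion matrix and checks that all of its eigenvalues lie inside the unit circle. The eigenvalues of the companion matrix are the roots of the second determinantal equation above. The function names `companion_matrix` and `is_stationary`, the tolerance, and the example coefficient matrices are illustrative assumptions, not part of the lecture; NumPy is assumed to be available.

```python
import numpy as np


def companion_matrix(A_list):
    """Stack the VAR(p) lag matrices A_1..A_p into the (n*p) x (n*p) companion matrix."""
    p = len(A_list)
    n = A_list[0].shape[0]
    F = np.zeros((n * p, n * p))
    F[:n, :] = np.hstack(A_list)          # top block row: [A_1 A_2 ... A_p]
    if p > 1:
        F[n:, :-n] = np.eye(n * (p - 1))  # identity blocks below the top block row
    return F


def is_stationary(A_list, tol=1e-10):
    """True if every eigenvalue of the companion matrix is strictly inside the unit circle."""
    eigvals = np.linalg.eigvals(companion_matrix(A_list))
    return bool(np.max(np.abs(eigvals)) < 1.0 - tol)


# Hypothetical coefficient matrices, chosen only for illustration.
A1_stable = np.array([[0.5, 0.1],
                      [0.2, 0.3]])
A1_random_walk = np.eye(2)  # Y_t = Y_{t-1} + eps_t has a VAR form but is not stationary

print(is_stationary([A1_stable]))
print(is_stationary([A1_random_walk]))
print(is_stationary([0.5 * np.eye(2), 0.3 * np.eye(2)]))  # a VAR(2) example
```

Working with the companion matrix avoids solving the determinantal polynomial directly, which becomes awkward as n and p grow.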
Note that

i) a covariance stationary process will have a VMA form, but may or may not have a VAR form. (If the VMA can be inverted to imply a VAR whose coefficients meet the stationarity condition, then the VMA is said to be invertible.)

ii) a stochastic process can have a VAR form without being stationary. (E.g., $Y_t = Y_{t-1} + \varepsilon_t$.)

Suppose that $Y_t$ has a VAR(p) form (which may or may not meet the stationarity condition) with known coefficients. Consider the problem of forecasting $y_{i,t+s}$ given $Y_t, Y_{t-1}, \ldots$

For now, let's assume p = 1 (i.e., a first-order VAR):

$$Y_{t+1} = A_0 + A_1 Y_t + \varepsilon_{t+1}$$

$$E(Y_{t+1} \mid Y_t, Y_{t-1}, \ldots) = A_0 + A_1 Y_t$$

$$Y_{t+2} = A_0 + A_1 Y_{t+1} + \varepsilon_{t+2}$$

$$E(Y_{t+2} \mid Y_t, Y_{t-1}, \ldots) = A_0 + A_1 E(Y_{t+1} \mid Y_t, Y_{t-1}, \ldots) = A_0 + A_1 (A_0 + A_1 Y_t) = (I + A_1) A_0 + A_1^2 Y_t$$

and, in general,

$$E(Y_{t+s} \mid Y_t, Y_{t-1}, \ldots) = (I + A_1 + \cdots + A_1^{s-1}) A_0 + A_1^s Y_t$$

and

$$E(y_{i,t+s} \mid Y_t, Y_{t-1}, \ldots) = \left[ (I + A_1 + \cdots + A_1^{s-1}) A_0 + A_1^s Y_t \right]_i$$

For p > 1:

$$Y_{t+1} = A_0 + A_1 Y_t + \cdots + A_p Y_{t-p+1} + \varepsilon_{t+1}$$

$$\hat{Y}_{t+1|t} = E(Y_{t+1} \mid Y_t, Y_{t-1}, \ldots) = A_0 + A_1 Y_t + \cdots + A_p Y_{t-p+1}$$

$$\hat{Y}_{t+2|t} = E(Y_{t+2} \mid Y_t, Y_{t-1}, \ldots) = A_0 + A_1 \hat{Y}_{t+1|t} + A_2 Y_t + \cdots + A_p Y_{t-p+2}$$

and, in general,

$$\hat{Y}_{t+s|t} = E(Y_{t+s} \mid Y_t, Y_{t-1}, \ldots) = A_0 + A_1 \hat{Y}_{t+s-1|t} + A_2 \hat{Y}_{t+s-2|t} + \cdots + A_p \hat{Y}_{t+s-p|t}$$

where $\hat{Y}_{t+s-j|t} = Y_{t+s-j}$ if $t+s-j \le t$. Then,

$$\hat{y}_{i,t+s|t} = [\hat{Y}_{t+s|t}]_i$$

Practical considerations include:

- selection of variables to include in the VAR
- selection of the lag length, p
- estimation of the VAR (Note that OLS equation by equation = SURE, since every equation has the same right-hand-side variables.)

The VAR's value in macroeconomics and financial economics:

- summarizes dynamic interrelationships in the data that can guide the development of theoretical models. (What are the facts that theory needs to explain?)
- basis for structural economic analysis, i.e., "story-telling". (The "unrestricted" VAR is a reduced-form model. The trick is coming up with a way to "identify" the underlying structural relationships.)

In contrast to traditional simultaneous equation modeling, this approach does not rely on exogeneity restrictions or exclusion restrictions for identification. That is, it uses a different (and more plausible?) style of identification.
(More on this in Econ 674).
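
The iterated forecasting recursion described earlier can be sketched in a few lines. This is a minimal illustration that assumes known coefficients, as in the text. The function name `var_forecast`, its argument layout, and the numerical values are hypothetical, and NumPy is assumed to be available. For p = 1 the iterated forecast should agree with the closed-form expression $(I + A_1 + \cdots + A_1^{s-1}) A_0 + A_1^s Y_t$, which the example checks.

```python
import numpy as np


def var_forecast(A0, A_list, history, s):
    """Iterate the VAR(p) forecast recursion s steps ahead.

    history: sequence of observed vectors ordered oldest to newest,
             ending with Y_t, and containing at least p observations.
    Returns the list [Yhat_{t+1|t}, ..., Yhat_{t+s|t}].
    """
    p = len(A_list)
    # path holds the p most recent values; forecasts are appended as they are formed,
    # so observed values are used where available and forecasts otherwise.
    path = [np.asarray(y, dtype=float) for y in history[-p:]]
    forecasts = []
    for _ in range(s):
        # Yhat = A_0 + A_1 * (latest value) + ... + A_p * (p-th latest value)
        y_hat = A0 + sum(A @ path[-j] for j, A in enumerate(A_list, start=1))
        path.append(y_hat)
        forecasts.append(y_hat)
    return forecasts


# Hypothetical first-order example, for illustration only.
A0 = np.array([1.0, 0.5])
A1 = np.array([[0.5, 0.1],
               [0.2, 0.3]])
Y_t = np.array([2.0, 1.0])
s = 3

iterated = var_forecast(A0, [A1], [Y_t], s)[-1]
closed_form = (
    sum(np.linalg.matrix_power(A1, k) for k in range(s)) @ A0
    + np.linalg.matrix_power(A1, s) @ Y_t
)
print(np.allclose(iterated, closed_form))
```

The forecast of an individual component $y_{i,t+s}$ is simply element i of the returned vector.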